A New Framework of Human Interaction Recognition Based on Multiple Stage Probability Fusion

Abstract

1. Introduction

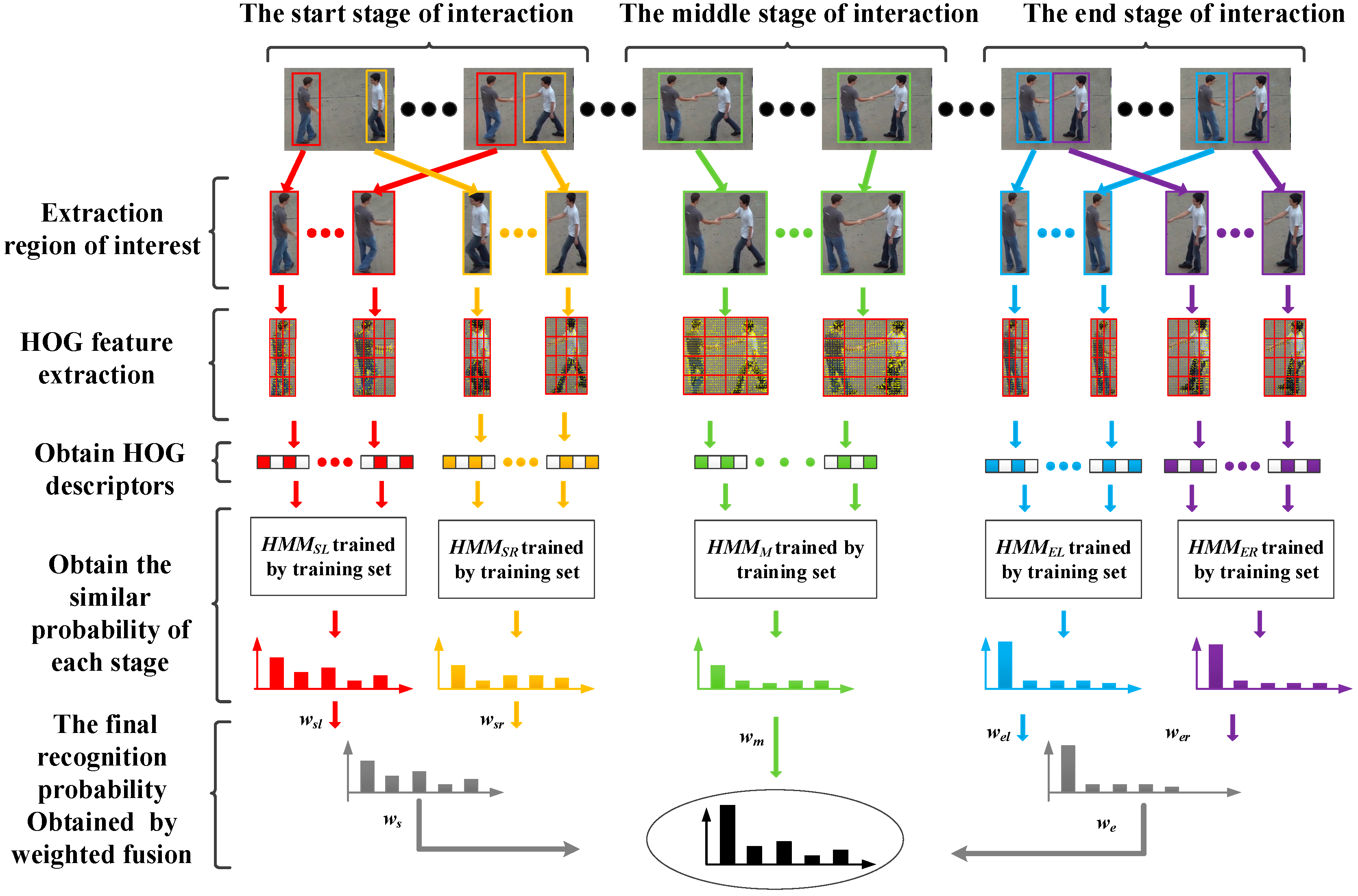

- (1) Video segmentation in the time domain. The interactive behavior is divided into three stages, i.e., the start stage, the middle stage, and the end stage, according to the distance between the two persons.

- (2)

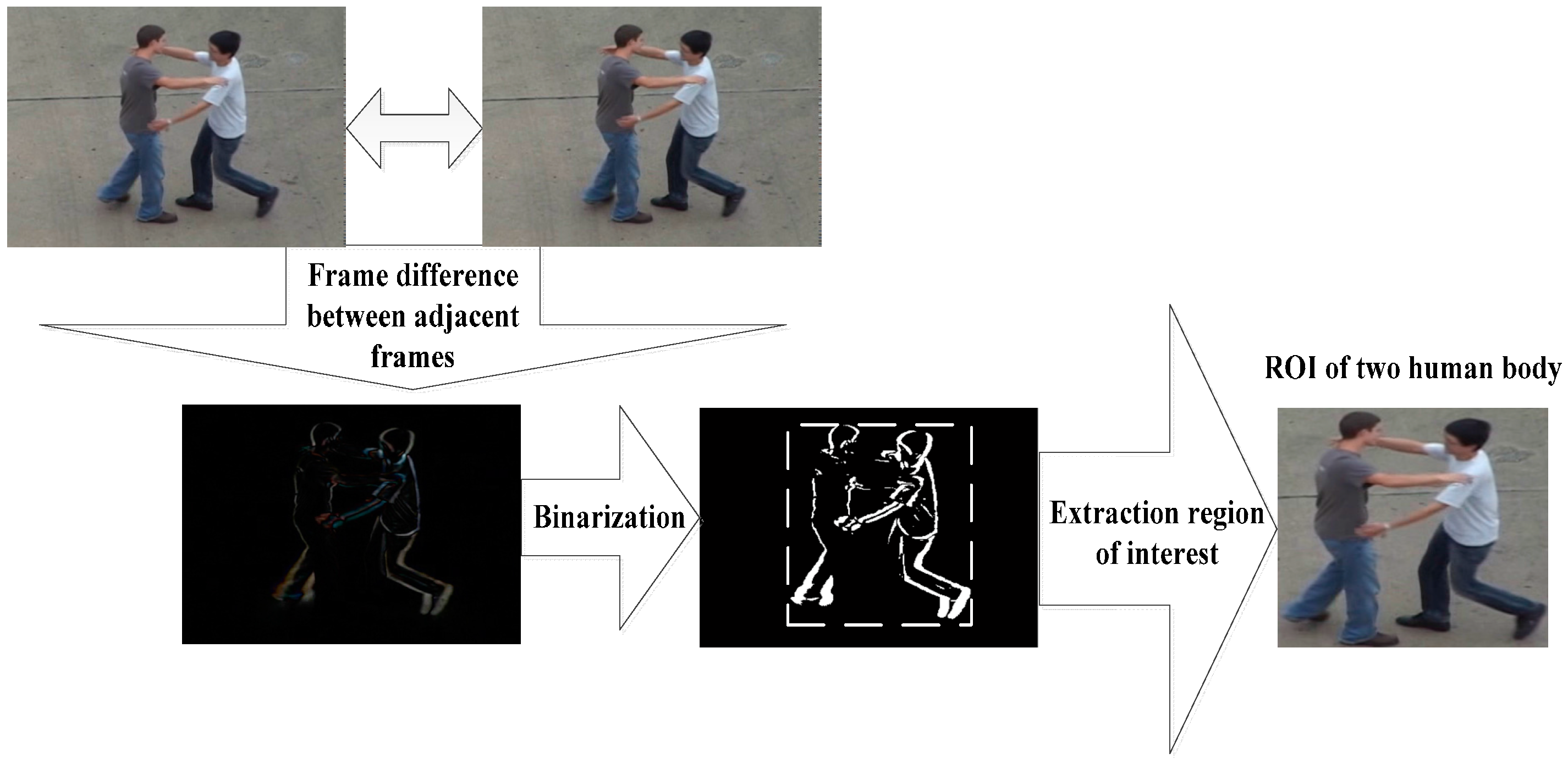

- Region of interest extraction. The interaction areas are divided into two independent regions at the start and end stages, when the distance between two persons is extensive. The interaction area is extracted as a whole in the middle stage, when the distance between two persons is smaller and the participants are close to each other or in contact.

- (3) Feature extraction. The HOG (Histogram of Oriented Gradients) descriptor is used to represent the region of interest (ROI) in each frame. At the start and end stages, HOG descriptors are extracted separately from the two ROIs; in the middle stage, a single HOG descriptor is extracted from the global ROI.

- (4)

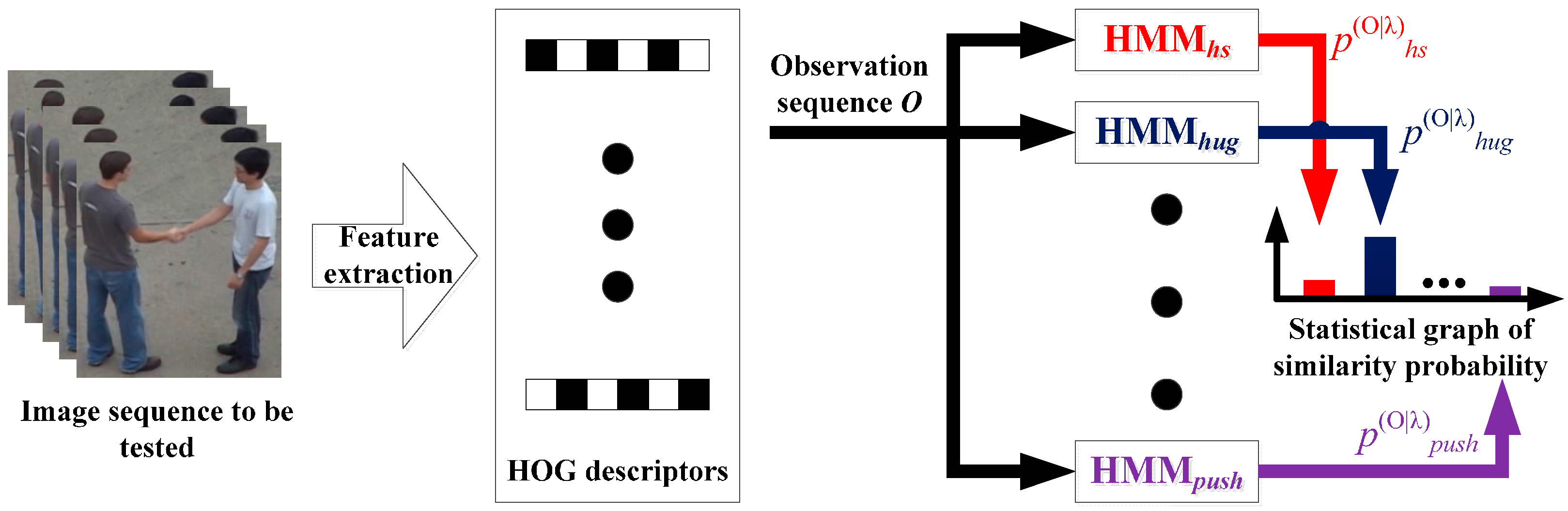

- Interaction model training. By using HOG features which have been extracted from each interaction stage. The Hidden Markov model (HMM) is chosen as our action model, because it captures the dynamics of the human action and has robustness in relation to noise.

- (5) Interaction recognition. When an unknown interaction is to be recognized, video segmentation and feature extraction are performed first. Then the likelihoods of the test sequence under the sub-HMMs are computed. Finally, the recognition result is obtained by a weighted fusion of the likelihoods of the three stages.
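Step (1) can be illustrated with a minimal Python sketch. The paper only states that the stages are split according to the inter-person distance, so the rule below is an assumption: frames whose distance falls below a hypothetical `threshold` form the middle stage, and the frames before and after that span form the start and end stages. The function name `segment_stages` and the per-frame `distances` input (for example, the distance between the centers of the two persons' bounding boxes) are illustrative and not taken from the paper.

```python
import numpy as np


def segment_stages(distances, threshold):
    """Split a clip into start / middle / end frame indices.

    Assumed rule (hypothetical): the middle stage spans from the first to the
    last frame whose inter-person distance is below `threshold`; frames before
    it form the start stage and frames after it form the end stage.
    """
    d = np.asarray(distances, dtype=float)
    close = np.flatnonzero(d < threshold)
    if close.size == 0:
        # The persons never come closer than the threshold: fall back to the
        # single frame of minimum distance as the middle stage.
        first = last = int(np.argmin(d))
    else:
        first, last = int(close[0]), int(close[-1])
    frames = np.arange(d.size)
    return frames[:first], frames[first:last + 1], frames[last + 1:]


# Toy per-frame distances (arbitrary units): approach, contact, separation.
start, middle, end = segment_stages([3.0, 2.5, 1.2, 0.8, 1.1, 2.4, 3.1], threshold=1.5)
print(start, middle, end)  # [0 1] [2 3 4] [5 6]
```

A single global threshold keeps the sketch simple; a real system would likely need smoothing of the distance signal so that brief fluctuations do not split the middle stage.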

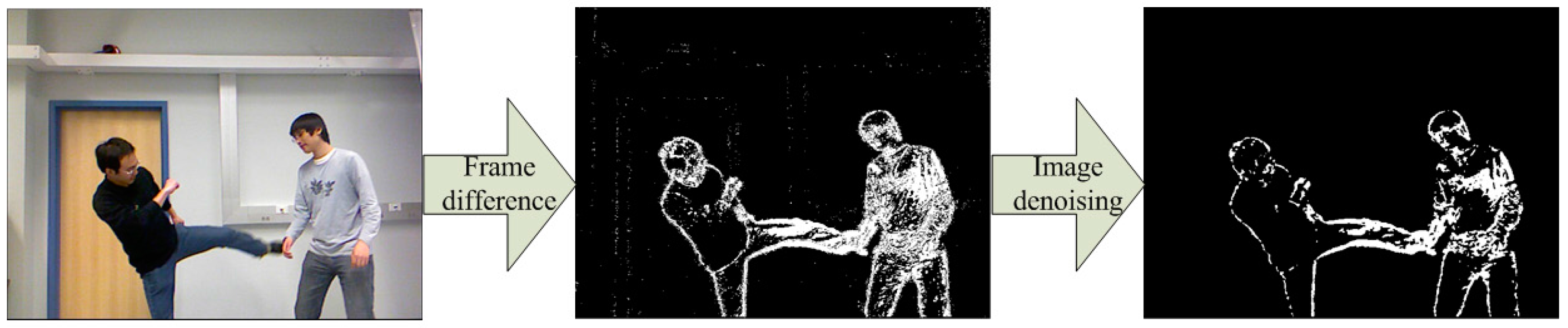
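Steps (4) and (5) of the framework can be sketched as follows, under assumptions that this section does not state: the HOG features of each stage are quantized into discrete symbols (for example with a codebook), each class has one discrete HMM per stage given as `(pi, A, B)`, and the weighted fusion is applied to log-likelihoods. Each stage log-likelihood is computed with the scaled forward algorithm described by Rabiner [23]. The names `hmm_log_likelihood` and `fuse_and_classify`, the toy models, and the stage weights are hypothetical.

```python
import numpy as np


def hmm_log_likelihood(obs, pi, A, B):
    """Scaled forward algorithm: returns log P(obs | HMM).

    obs: sequence of discrete symbol indices (assumed quantized HOG features)
    pi:  (N,) initial state probabilities
    A:   (N, N) transitions, A[i, j] = P(state j at t+1 | state i at t)
    B:   (N, M) emissions,   B[i, k] = P(symbol k | state i)
    """
    alpha = pi * B[:, obs[0]]
    scale = alpha.sum()
    alpha = alpha / scale
    log_lik = np.log(scale)
    for o in obs[1:]:
        # Propagate one step, absorb the next observation, then rescale so
        # alpha sums to 1; the log of each scale factor is accumulated.
        alpha = (alpha @ A) * B[:, o]
        scale = alpha.sum()
        alpha = alpha / scale
        log_lik += np.log(scale)
    return log_lik


def fuse_and_classify(stage_models, stage_obs, weights):
    """Weighted fusion of per-stage log-likelihoods (hypothetical helper).

    stage_models: {class label: [start_hmm, middle_hmm, end_hmm]},
                  each HMM given as a (pi, A, B) tuple
    stage_obs:    [start_obs, middle_obs, end_obs] for the test video
    weights:      three stage weights (assumed, e.g. tuned on validation data)
    """
    scores = {
        label: sum(
            w * hmm_log_likelihood(obs, *hmm)
            for w, obs, hmm in zip(weights, stage_obs, models)
        )
        for label, models in stage_models.items()
    }
    return max(scores, key=scores.get), scores


# Toy usage: two classes whose HMMs differ only in which symbol they favour.
pi = np.array([0.5, 0.5])
A = np.array([[0.9, 0.1], [0.1, 0.9]])
B_zero = np.array([[0.9, 0.1], [0.8, 0.2]])  # favours symbol 0
B_one = B_zero[:, ::-1]                       # favours symbol 1
models = {"hug": [(pi, A, B_zero)] * 3, "push": [(pi, A, B_one)] * 3}
test_obs = [[0, 0, 1], [0, 0, 0, 0], [0, 1, 0]]
label, scores = fuse_and_classify(models, test_obs, weights=[0.3, 0.4, 0.3])
print(label)  # hug
```

Fusing log-likelihoods rather than raw probabilities avoids numerical underflow on long sequences; whether the original method fuses probabilities or log-probabilities is not specified here.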

2. Piecewise Segmentation of Interactive Behavior

3. The Global Feature Extraction and Representation

- (1) Calculation of the pixel gradients:

  $$G(x,y)=\sqrt{G_x(x,y)^2+G_y(x,y)^2} \tag{1}$$

  $$\alpha(x,y)=\tan^{-1}\frac{G_y(x,y)}{G_x(x,y)} \tag{2}$$

  where $G(x,y)$ represents the amplitude of the gradient, $\alpha(x,y)$ represents its direction, and $G_x(x,y)$ and $G_y(x,y)$ denote the horizontal and vertical gradients, respectively.

- (2) Counting the gradient histograms: the distribution of pixel gradients is shown in Figure 5b,e. The gradient map is then divided into $n_s \times n_s$ cells. Finally, the gradients of all pixels within each cell are projected onto $m$ orientations to form an $m$-dimensional feature vector $v$. The vector $v$ is normalized as in Equation (3), where a small constant is included to avoid division by zero.
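A simplified HOG computation following steps (1) and (2) is sketched below with NumPy. Several details are assumptions rather than the paper's settings: centered-difference gradients, unsigned orientations over [0°, 180°), magnitude-weighted hard binning into `m` bins, and L2 normalization of each cell vector with a small constant `eps`; the overlapping-block normalization of Dalal and Triggs [21] is omitted. The function name `hog_descriptor` and the defaults `n_s=4`, `m=9` are hypothetical.

```python
import numpy as np


def hog_descriptor(image, n_s=4, m=9, eps=1e-3):
    """Simplified HOG of a grayscale ROI split into n_s x n_s cells."""
    H = np.asarray(image, dtype=float)
    gx = np.zeros_like(H)
    gy = np.zeros_like(H)
    gx[:, 1:-1] = H[:, 2:] - H[:, :-2]  # horizontal gradient G_x
    gy[1:-1, :] = H[2:, :] - H[:-2, :]  # vertical gradient G_y
    magnitude = np.hypot(gx, gy)                       # Equation (1)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0     # unsigned direction
    # Map each angle to one of m equal-width orientation bins.
    bins = np.minimum((angle / (180.0 / m)).astype(int), m - 1)

    rows = np.array_split(np.arange(H.shape[0]), n_s)
    cols = np.array_split(np.arange(H.shape[1]), n_s)
    features = []
    for r in rows:
        for c in cols:
            cell = np.ix_(r, c)
            # Magnitude-weighted orientation histogram of this cell.
            v = np.bincount(bins[cell].ravel(), weights=magnitude[cell].ravel(), minlength=m)
            v = v / np.sqrt(np.sum(v ** 2) + eps ** 2)  # assumed L2 normalization
            features.append(v)
    return np.concatenate(features)


# Horizontal intensity ramp: every gradient points along +x (orientation 0°).
roi = np.tile(np.arange(16.0), (16, 1))
desc = hog_descriptor(roi, n_s=4, m=9)
print(desc.shape)  # (144,)
```

The descriptor length is $n_s \times n_s \times m$; in the framework above, one such vector would be computed per ROI per frame.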

4. Piecewise Fusion Recognition Algorithm

4.1. Recognition Based on Hidden Markov Model

4.2. Weighted Fusion of Three Stages of Probability Similarity

5. Algorithm Verification and Results Analysis

5.1. Algorithm Tested on the UT-Interaction Dataset

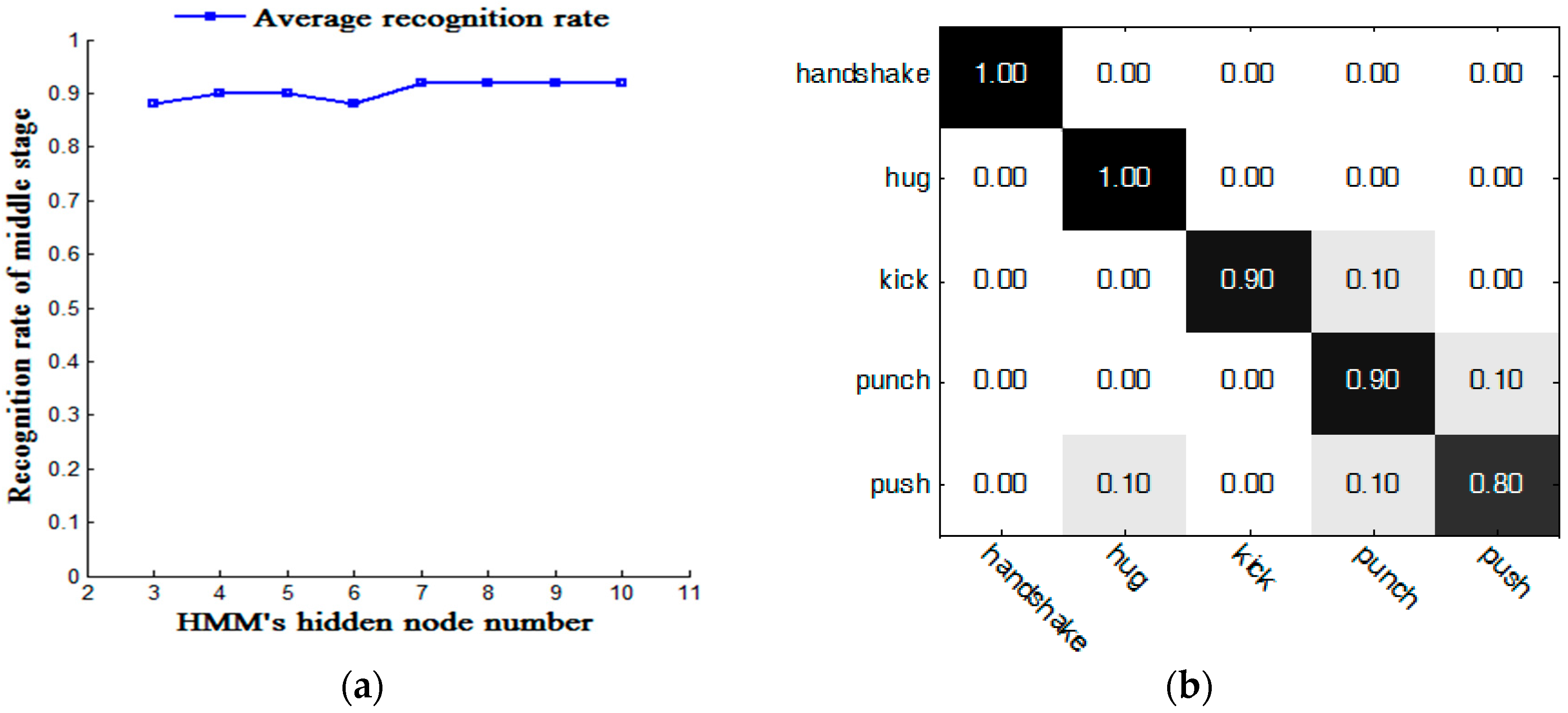

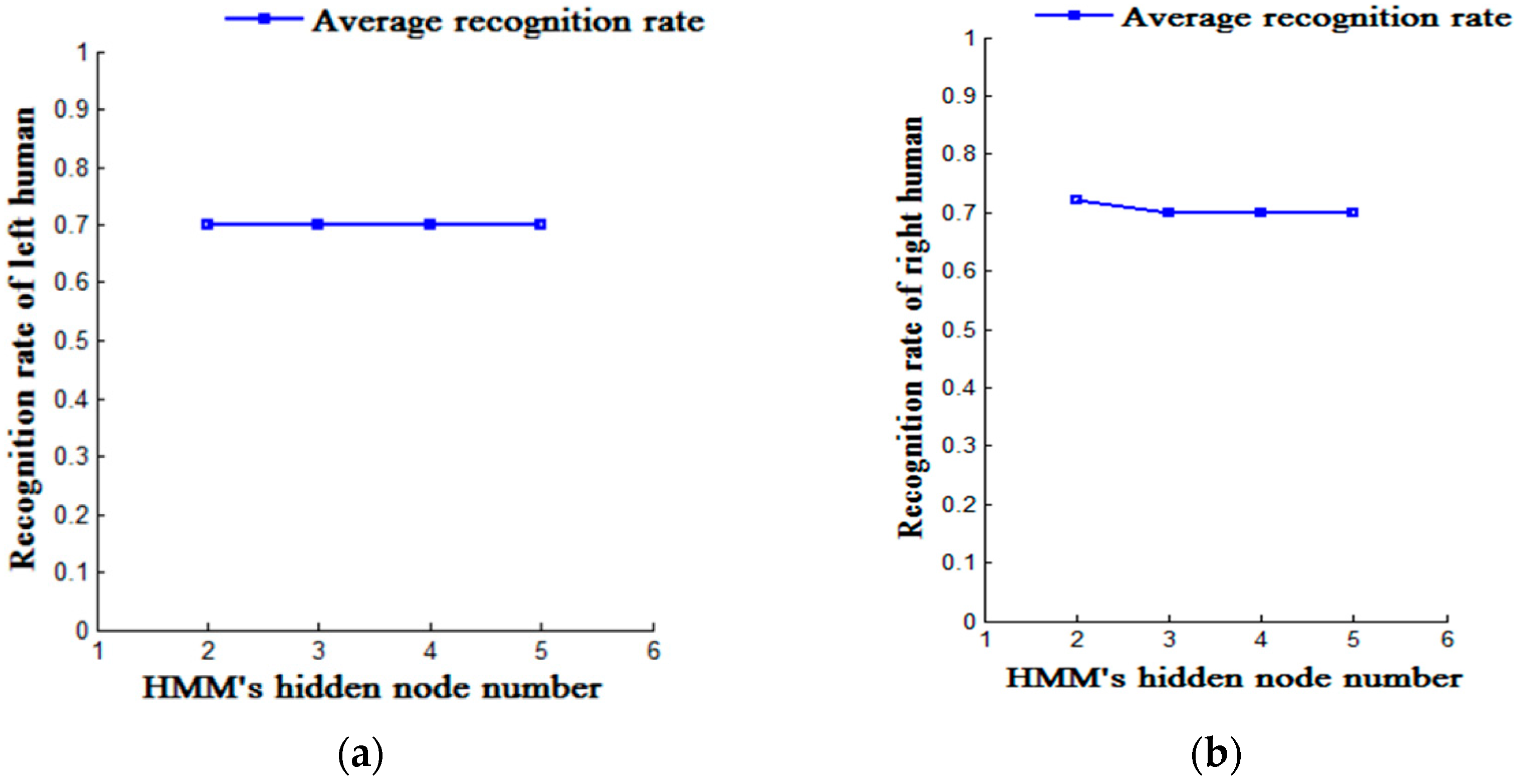

5.1.1. Test of the Number of HMM Hidden Nodes in Different Stages

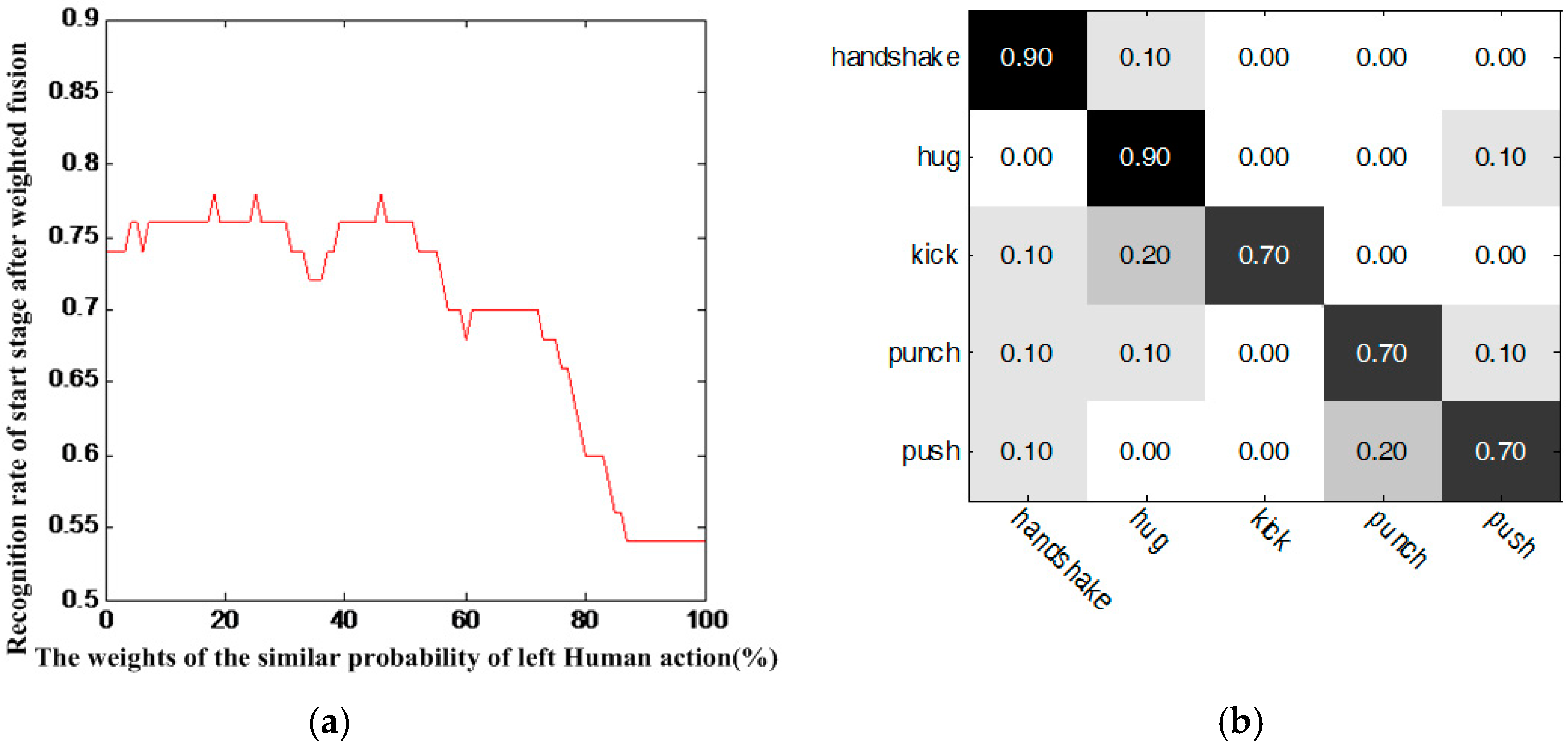

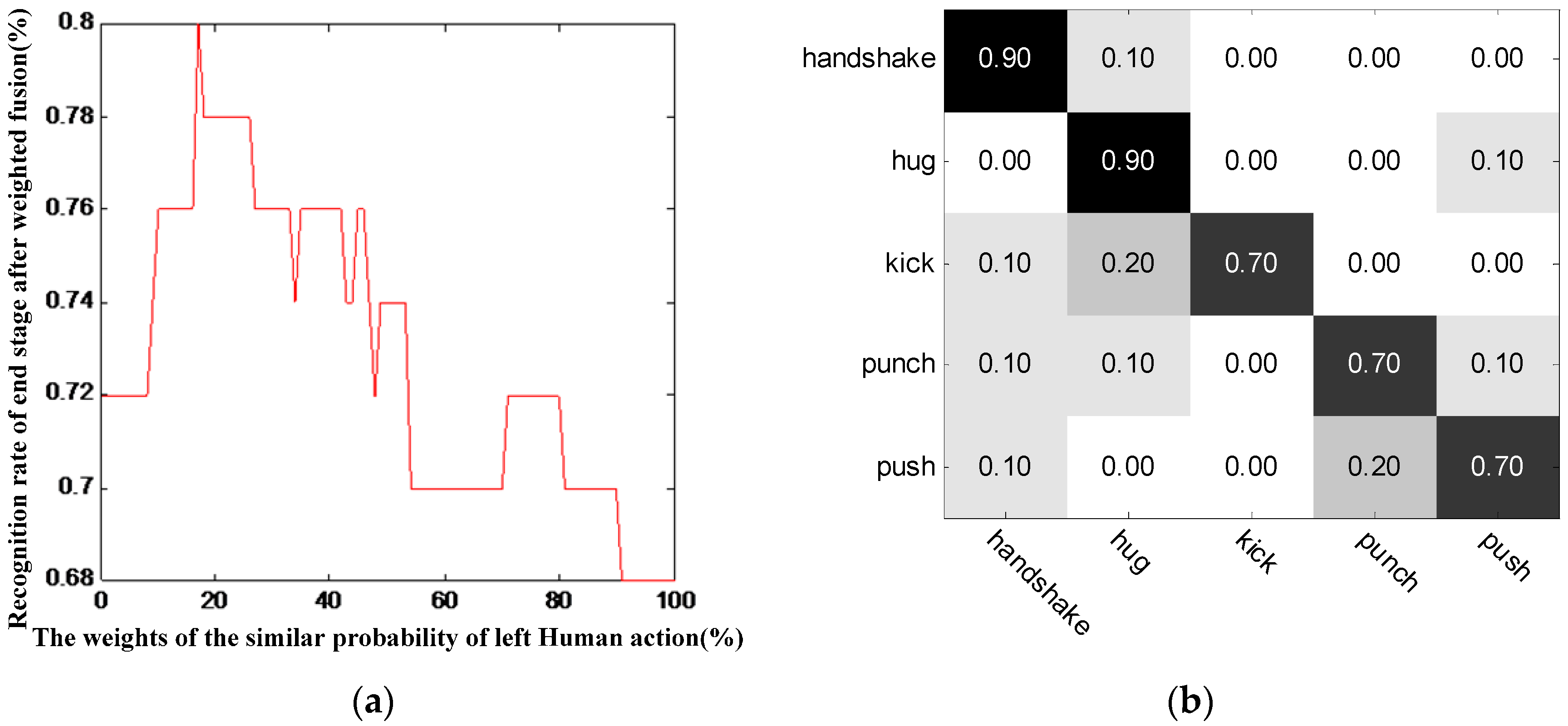

5.1.2. Weighted Fusion Test

5.1.3. Performance Comparison

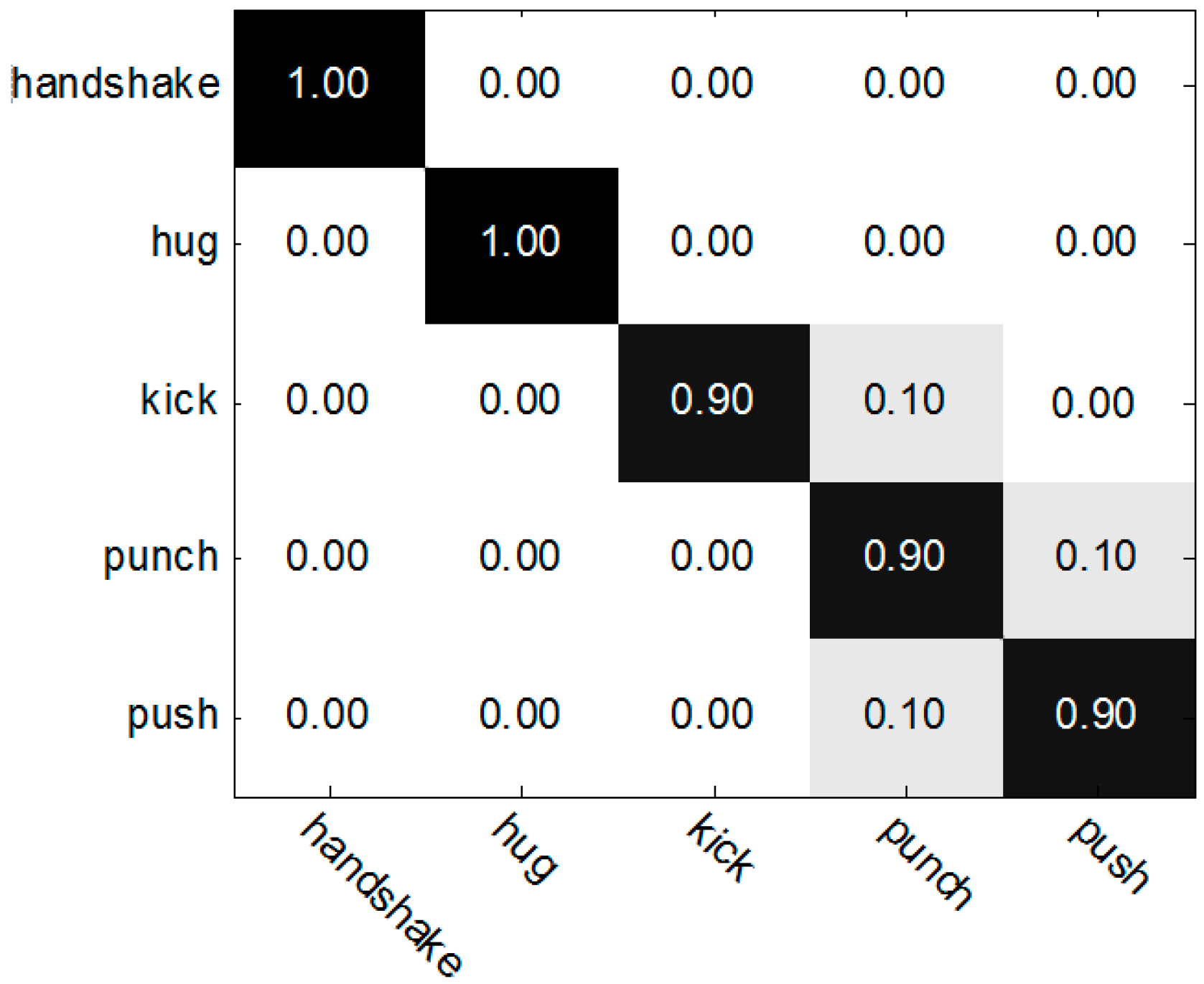

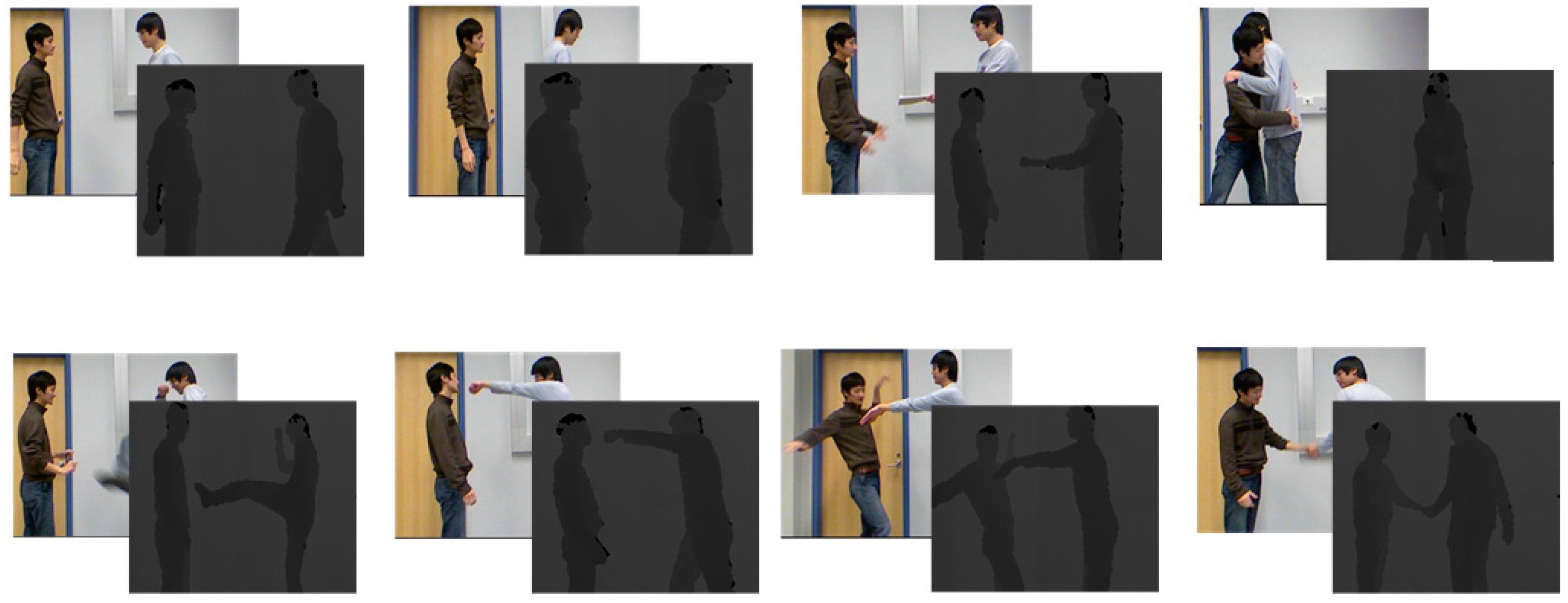

5.2. Algorithm Tested on the SBU-Interaction Dataset

5.2.1. Experimental Results and Analysis

5.2.2. Performance Comparison

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Weinland, D.; Ronfard, R.; Boyer, E. A survey of vision-based methods for action representation, segmentation and recognition. Comput. Vis. Image Underst. 2011, 115, 224–241.

2. Ji, X.; Liu, H. Advances in View-Invariant Human Motion Analysis: A Review. IEEE Trans. Syst. Man Cybern. C 2010, 40, 13–24.

3. Jalal, A.; Kim, Y.H.; Kim, Y.J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2016, 61, 295–308.

4. Jalal, A.; Kamal, S.; Kim, D. Depth Silhouettes Context: A New Robust Feature for Human Tracking and Activity Recognition Based on Embedded HMMs. In Proceedings of the International Conference on Ubiquitous Robots and Ambient Intelligence, Goyang, Korea, 28–30 October 2015; pp. 1–7.

5. Farooq, A.; Jalal, A.; Kamal, S. Dense RGB-D map-based human tracking and activity recognition using skin joints features and self-organizing map. KSII Trans. Internet Inf. Syst. 2015, 9, 1856–1867.

6. Jalal, A.; Kamal, S.; Kim, D. Human depth sensors-based activity recognition using spatiotemporal features and hidden Markov model for smart environments. J. Comput. Netw. Commun. 2016, 2016, 8087545.

7. Gaur, U.; Zhu, Y.; Song, B. A “String of Feature Graphs” Model for Recognition of Complex Activities in Natural Videos. In Proceedings of the International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2595–2602.

8. Yu, T.; Kim, T.; Cipolla, R. Real-time Action Recognition by Spatio-Temporal Semantic and Structural Forests. In Proceedings of the British Machine Vision Conference, Aberystwyth, UK, 31 August–3 September 2010; pp. 1–12.

9. Burghouts, G.J.; Schutte, K. Spatio-temporal layout of human actions for improved bag-of-words action detection. Pattern Recognit. Lett. 2013, 34, 1861–1869.

10. Peng, X.; Peng, Q.; Qiao, Y. Exploring Dense Trajectory Feature and Encoding Methods for Human Interaction Recognition. In Proceedings of the International Conference on Internet Multimedia Computing and Service, Huangshan, China, 17–19 August 2013; pp. 23–27.

11. Li, N.; Cheng, X.; Guo, H.; Wu, Z. A Hybrid Method for Human Interaction Recognition Using Spatio-Temporal Interest Points. In Proceedings of the 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 2513–2518.

12. Ni, B.; Wang, G.; Moulin, P. RGBD-HuDaAct: A Color-Depth Video Database for Human Daily Activity Recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–11 November 2011; pp. 1147–1153.

13. Yun, K.; Honorio, J.; Chattopadhyay, D.; Berg, T.L.; Samaras, D. Two-Person Interaction Detection Using Body-Pose Features and Multiple Instance Learning. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Providence, RI, USA, 16–21 June 2012; pp. 1–8.

14. Patron-Perez, A.; Marszalek, M.; Reid, I.; Zisserman, A. Structured learning of human interactions in TV shows. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2441–2453.

15. Raptis, M.; Sigal, L. Poselet Key-framing: A Model for Human Activity Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2650–2657.

16. Kong, Y.; Jia, Y.; Fu, Y. Interactive Phrases: Semantic descriptions for human interaction recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1775–1788.

17. Slimani, K.; Benezeth, Y.; Souami, F. Human Interaction Recognition Based on the Co-Occurrence of Visual Words. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 461–466.

18. Dong, Z.; Kong, Y.; Liu, C.; Li, H.; Jia, Y. Recognizing Human Interaction by Multiple Features. In Proceedings of the First Asian Conference on Pattern Recognition, Beijing, China, 28 November 2011; pp. 77–81.

19. Kong, Y.; Liang, W.; Dong, Z.; Jia, Y. Recognising human interaction from videos by a discriminative model. IET Comput. Vis. 2014, 8, 277–286.

20. Barnich, O.; Van Droogenbroeck, M. ViBe: A universal background subtraction algorithm for video sequences. IEEE Trans. Image Process. 2011, 20, 1709–1724.

21. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

22. Ji, X.; Zhou, L.; Li, Y. Human Action Recognition Based on AdaBoost Algorithm for Feature Extraction. In Proceedings of the IEEE Conference on Computer and Information Technology, Xi’an, China, 22–23 December 2014; pp. 801–805.

23. Rabiner, L.R. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE 1989, 77, 267–296.

24. Jalal, A.; Uddin, M.; Kim, T. Depth video-based human activity recognition system using translation and scaling invariant features for life logging at smart home. IEEE Trans. Consum. Electron. 2012, 58, 863–871.

25. Jalal, A.; Kamal, S.; Kim, D. A Depth Video Sensor-Based Life-Logging Human Activity Recognition System for Elderly Care in Smart Indoor Environments. Sensors 2014, 14, 11735–11759.

26. Ryoo, M.S.; Aggarwal, J.K. Spatio-Temporal Relationship Match: Video Structure Comparison for Recognition of Complex Human Activities. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1593–1600.

27. Mukherjee, S.; Biswas, S.K.; Mukherjee, D.P. Recognizing Interaction between Human Performers Using ‘Key Pose Doublet’. In Proceedings of the 19th ACM International Conference on Multimedia, Scottsdale, AZ, USA, 28 November–1 December 2011; pp. 1329–1332.

28. Brendel, W.; Todorovic, S. Learning Spatiotemporal Graphs of Human Activities. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 778–785.

29. Liang, J.; Xu, C.; Feng, Z.; Ma, X. Affective interaction recognition using spatio-temporal features and context. Comput. Vis. Image Underst. 2016, 144, 155–165.

30. Ji, Y.; Cheng, H.; Zheng, Y.; Li, H. Learning contrastive feature distribution model for interaction recognition. J. Vis. Commun. Image Represent. 2015, 33, 340–349.

| Action Type | Hand Shake | Hug | Kick | Punch | Push | Avg. (%) |
|---|---|---|---|---|---|---|
| Left | 80 | 40 | 50 | 50 | 50 | 54 |
| Right | 90 | 90 | 60 | 60 | 70 | 74 |
| Final | 90 | 90 | 70 | 70 | 70 | 78 |

| Action Type | Hand Shake | Hug | Kick | Punch | Push | Avg. (%) |
|---|---|---|---|---|---|---|
| Left | 100 | 80 | 40 | 50 | 70 | 68 |
| Right | 70 | 70 | 90 | 50 | 80 | 72 |
| Final | 90 | 70 | 90 | 70 | 80 | 80 |

| Source | Year | Method | Acc. (%) |
|---|---|---|---|
| Our approach | 2017 | Stage model + HOG + HMM | 94 |
| Kong et al. [16] | 2014 | Global template + local 3D feature + discriminative model | 85 |
| Mukherjee et al. [27] | 2011 | Bipartite graph + key pose doublets | 79.17 |
| Brendel et al. [28] | 2011 | Tubes + spatio-temporal relationship graph model | 78.9 |
| Liang et al. [29] | 2016 | Spatio-temporal features + context | 92.3 |

| Methods | Without Piecewise Fusion | With Piecewise Fusion |
|---|---|---|
| RGB image | 74.86% | 80.00% |
| Depth image | 78.38% | 86.67% |
| Weighted fusion | 85.88% | 91.70% |

| Comparative Literature | Features and Recognition Methods | Recognition Rate (%) |
|---|---|---|
| Yun et al. [13] | Joint distance + SVM | 87.6 |
| Yun et al. [13] | Joint distance + MILBoost | 91.1 |
| Yanli Ji et al. [30] | BOW | 82.5 |
| Yanli Ji et al. [30] | CFDM | 89.4 |
| Our method | HOG (RGB) + HOG (depth), without piecewise weighted fusion | 85.9 |
| Our method | HOG (RGB) + HOG (depth) + piecewise weighted fusion | 91.7 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ji, X.; Wang, C.; Ju, Z. A New Framework of Human Interaction Recognition Based on Multiple Stage Probability Fusion. Appl. Sci. 2017, 7, 567. https://doi.org/10.3390/app7060567
