Perception-Driven Obstacle-Aided Locomotion for Snake Robots: The State of the Art, Challenges and Possibilities †

Abstract

1. Introduction

- necessary conditions for lateral undulation locomotion in the presence of obstacles;

- lateral undulation is highly dependent on the actuator torque output and environmental friction;

- knowledge about the environment and its properties, in addition to its geometric representation, can be successfully exploited for improving locomotion performance for obstacle-aided locomotion.
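Lateral undulation is commonly commanded by making each joint track a phase-shifted sinusoid, the gait pattern widely used in the snake robot literature (see, e.g., [22]): φ_i(t) = α sin(ωt + (i − 1)δ) + φ0. The following is a minimal sketch, assuming a planar robot with N revolute joints; the function name and the default values for amplitude `alpha`, angular frequency `omega`, phase offset `delta` and offset `phi0` are hypothetical, chosen only for illustration and not taken from this article.

```python
import math
from typing import List


def lateral_undulation_refs(t: float, n_joints: int,
                            alpha: float = math.radians(30.0),
                            omega: float = math.radians(70.0),
                            delta: float = math.radians(40.0),
                            phi0: float = 0.0) -> List[float]:
    """Joint angle references phi_i(t) = alpha*sin(omega*t + (i-1)*delta) + phi0.

    Index i runs from 1 to n_joints; in Python the (i-1) term is simply the
    0-based loop index. A nonzero phi0 biases the body curvature (steering).
    """
    return [alpha * math.sin(omega * t + i * delta) + phi0 for i in range(n_joints)]


if __name__ == "__main__":
    # Print the travelling wave along a hypothetical 5-joint robot (degrees).
    for t in (0.0, 0.5, 1.0):
        refs = lateral_undulation_refs(t, n_joints=5)
        print(f"t={t:.1f} s", [round(math.degrees(a), 1) for a in refs])
```

The constant phase offset between neighbouring joints is what turns the per-joint oscillation into a wave travelling along the body; how much of that wave becomes forward motion depends on the actuator torques and friction, as noted in the list above.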

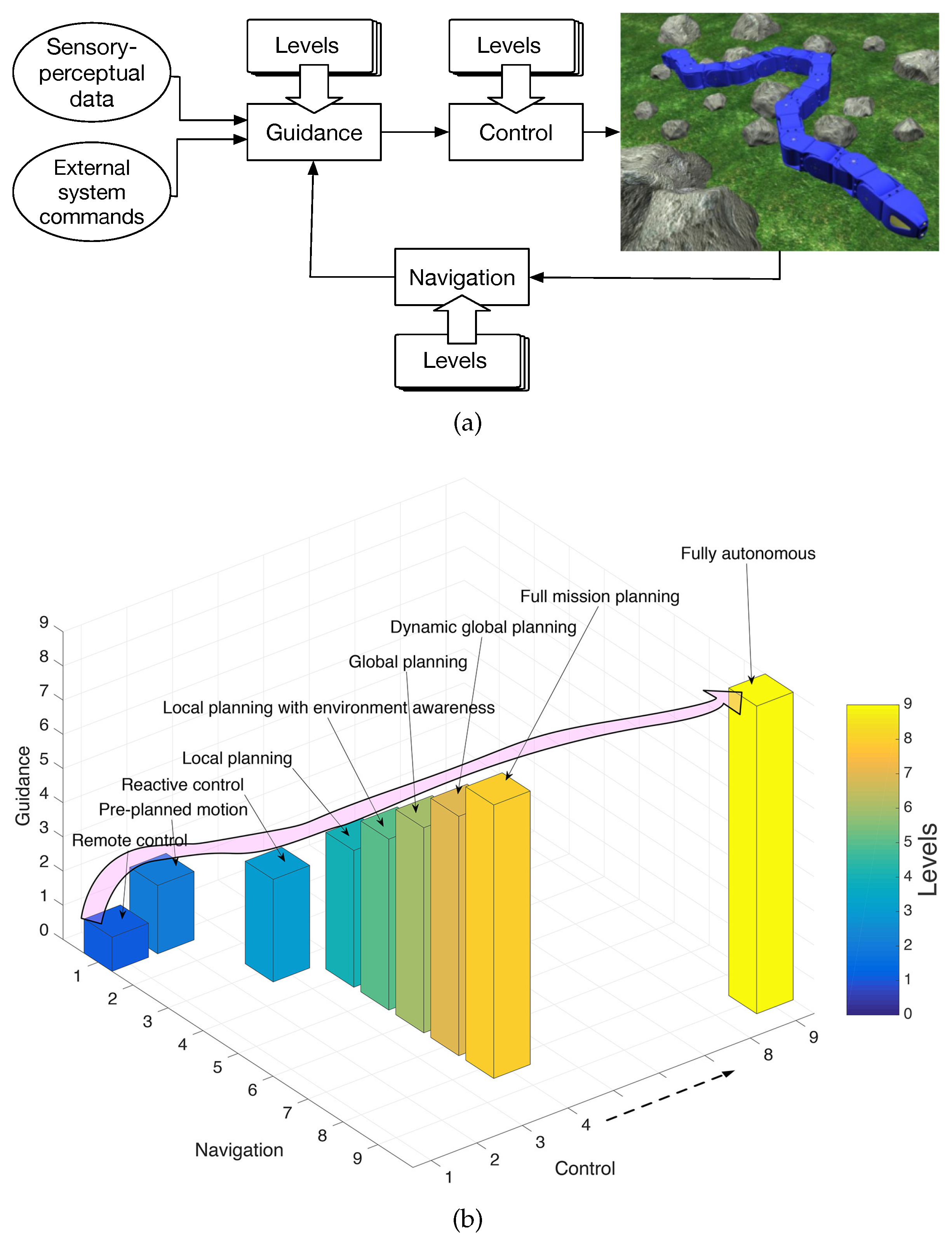

2. Challenges and Possibilities in the Context of Autonomy Levels for Unmanned Systems

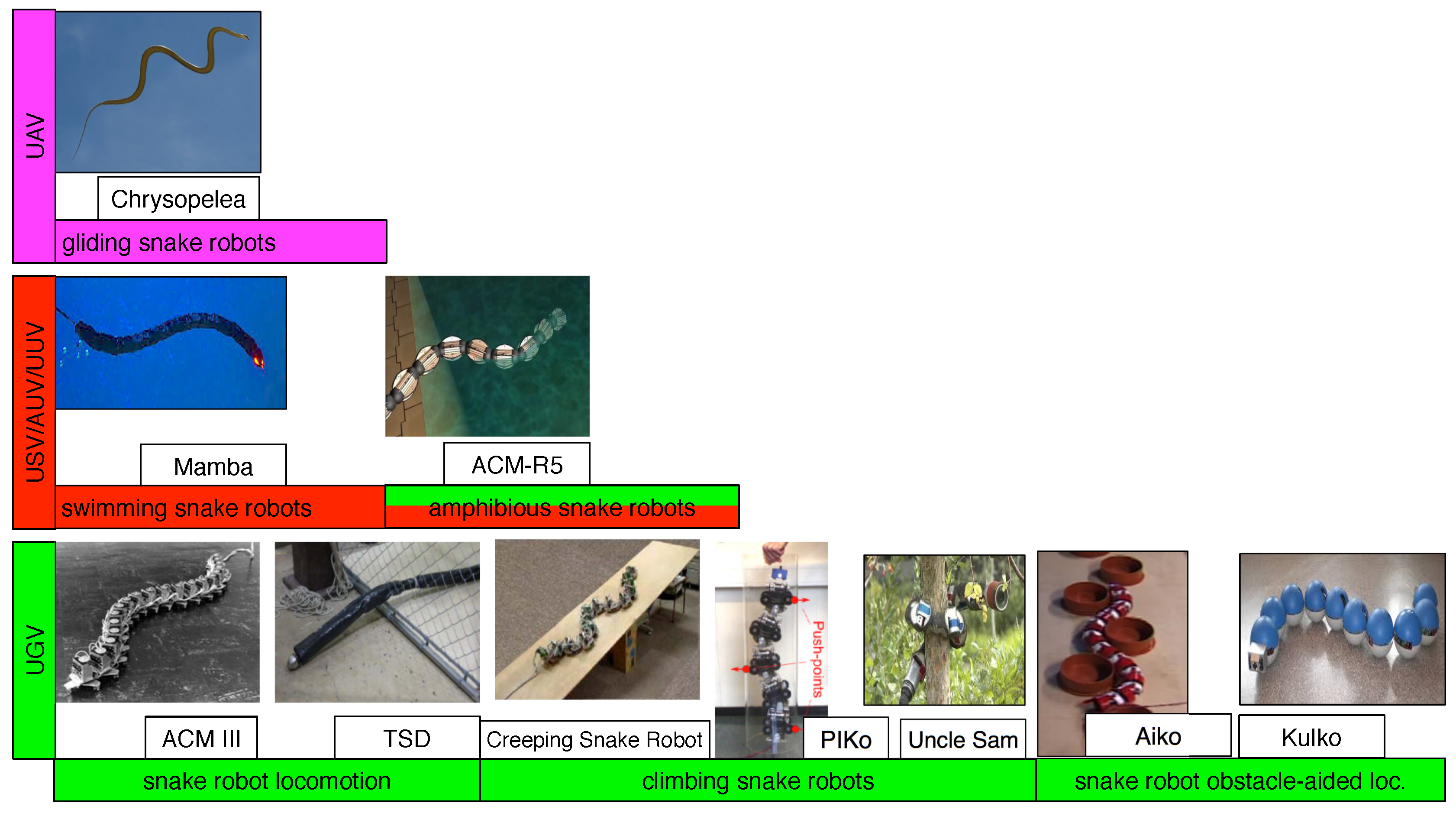

2.1. Classification of Snake Robots as Unmanned Vehicle Systems

- climbing slopes, pipes, or trees: for example, the Creeping snake Robot [12], which can adopt an environmentally adaptable body shape to climb slopes; the PIKo snake robot [13], which is equipped with a mechanism for navigating complex pipe structures; and the Uncle Sam snake robot [14], which has a strong and compact joint mechanism for climbing trees;

2.2. Similarities and Differences between Traditional Snake Robots and Snake Robots for Perception-Driven Obstacle-Aided Locomotion

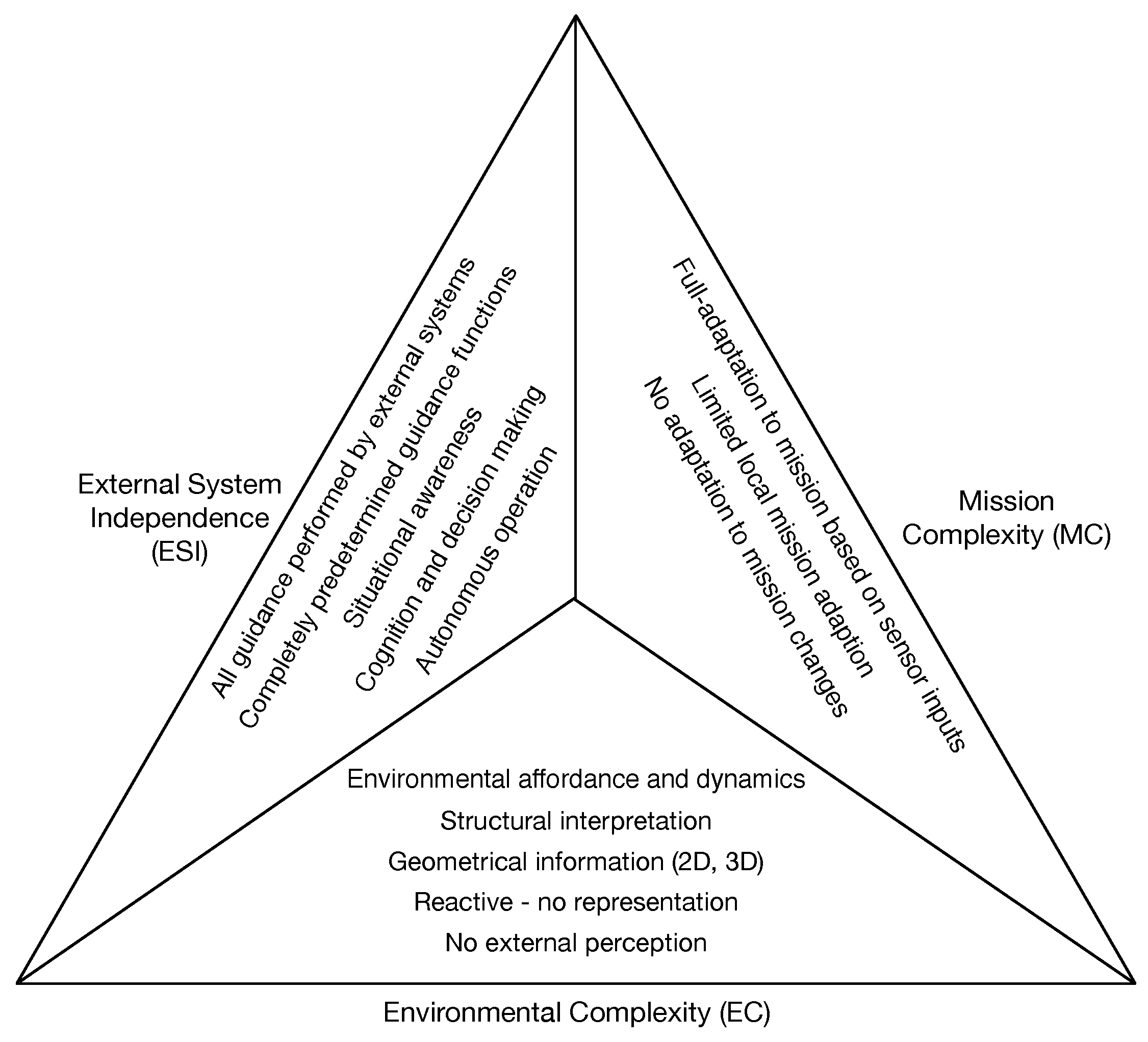

2.3. The ALFUS Framework for Snake Robot Perception-Driven Obstacle-Aided Locomotion

- All guidance performed by external systems. In order to successfully accomplish the assigned mission within a defined scope, the snake robot requires full guidance and interaction with either a human operator or other external systems;

- Completely predetermined guidance functions. All planning, guidance, and navigation actions are determined in advance on the basis of perception. The snake robot has very limited ability to adapt to environmental changes;

- Situational awareness [21]. The snake robot has a higher level of perception and autonomy and adapts well to environmental changes. The system not only comprehends the current situation but can also project the current information forward in time to determine how it will affect future states of the operational environment;

- Cognition and decision making. The snake robot has higher levels of prehension, intrinsically safe cognition, and decision-making capacity for reacting to unknown environmental changes;

- Autonomous operation. The snake robot is fully autonomous. The system can accomplish its assigned mission without any intervention from a human operator or any other external system while adapting to different environmental conditions.

- No external perception. The snake robot executes a set of preprogrammed or planned actions in an open-loop manner;

- Reactive—no representation. The snake robot does not generate an explicit environment representation, but the motion planner is able to react to sensor input feedback;

- Geometrical information (2D, 3D). Starting from sensor data, the snake robot can generate a geometric representation of the environment which is used for planning—typically for obstacle avoidance;

- Structural interpretation. The environment representation includes structural relationships between objects in the environment;

- Environmental affordance and dynamics. Higher-level entities and properties can be derived from the environment perception, including separate treatment of static and dynamic elements; depending on the specific task being performed, different properties of the objects with which the snake robot interacts may be of interest.

- No adaptation to mission changes. The mission plan is predetermined; the snake robot cannot adapt to mission changes;

- Limited local mission adaptation. The snake robot can adapt only to small, externally commanded mission changes;

- Full adaptation to mission changes based on sensor inputs. The snake robot has high and independent adaptation capabilities.
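The three lists above can be read as three ordered axes against which a given snake robot is rated: independence from external guidance, richness of the environment representation, and adaptation to mission changes. The following is a minimal sketch, assuming the levels on each axis are totally ordered from lowest to highest. The class names (`HumanIndependence`, `EnvironmentRepresentation`, `MissionAdaptation`, `AutonomyProfile`) and the `meets`/`gaps` helpers are hypothetical; they are not defined by ALFUS [6] or by this article, and the example profiles do not describe any specific robot.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class HumanIndependence(IntEnum):
    """Guidance/autonomy levels from the first list, lowest to highest."""
    EXTERNAL_GUIDANCE = 1
    PREDETERMINED_GUIDANCE = 2
    SITUATIONAL_AWARENESS = 3
    COGNITION_AND_DECISION = 4
    AUTONOMOUS_OPERATION = 5


class EnvironmentRepresentation(IntEnum):
    """Perception/representation levels from the second list."""
    NO_EXTERNAL_PERCEPTION = 1
    REACTIVE_NO_REPRESENTATION = 2
    GEOMETRIC = 3
    STRUCTURAL = 4
    AFFORDANCE_AND_DYNAMICS = 5


class MissionAdaptation(IntEnum):
    """Mission-adaptation levels from the third list."""
    NONE = 1
    LIMITED_LOCAL = 2
    FULL_SENSOR_BASED = 3


AXES = ("human_independence", "environment", "mission")


@dataclass(frozen=True)
class AutonomyProfile:
    human_independence: HumanIndependence
    environment: EnvironmentRepresentation
    mission: MissionAdaptation

    def gaps(self, required: "AutonomyProfile") -> List[str]:
        """Names of the axes on which this profile is below the requirement."""
        return [a for a in AXES if getattr(self, a) < getattr(required, a)]

    def meets(self, required: "AutonomyProfile") -> bool:
        """True only if every axis is at or above the required level."""
        return not self.gaps(required)


if __name__ == "__main__":
    # Hypothetical robot: preprogrammed gait, reacts to contact feedback, no map.
    reactive = AutonomyProfile(HumanIndependence.PREDETERMINED_GUIDANCE,
                               EnvironmentRepresentation.REACTIVE_NO_REPRESENTATION,
                               MissionAdaptation.NONE)
    # Hypothetical target for perception-driven obstacle-aided locomotion.
    target = AutonomyProfile(HumanIndependence.SITUATIONAL_AWARENESS,
                             EnvironmentRepresentation.AFFORDANCE_AND_DYNAMICS,
                             MissionAdaptation.LIMITED_LOCAL)
    print(reactive.meets(target))  # False
    print(reactive.gaps(target))   # ['human_independence', 'environment', 'mission']
```

Keeping the axes separate, rather than collapsing them into one score, makes it explicit which capability (perception, guidance or mission handling) is the bottleneck for a given platform.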

2.4. A Framework for Autonomy and Technology Readiness Assessment

3. Control Strategies for Obstacle-Aided Locomotion

3.1. Obstacle Avoidance

3.2. Obstacle Accommodation

3.3. Obstacle-Aided Locomotion

- it occurs over irregular ground with vertical projections;

- propulsive forces are generated from the lateral interaction between the mobile body and the vertical projections of the irregular ground, called push-points;

- at least three simultaneous push-points are necessary for this type of motion to take place;

- during the motion, the mobile body slides along its contacted push-points.
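The conditions above can be turned into a simple necessary-condition check. The following is a minimal sketch, assuming frictionless point contacts in the plane, so that each push-point can only push on the body along its contact normal with a nonnegative magnitude. Under that assumption, a desired net propulsive direction is achievable only if it lies in the cone spanned by the contact normals. The function names are hypothetical, and the check ignores torque balance, friction and actuator limits, so passing it does not guarantee that the motion can actually be realised.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple

Vec = Tuple[float, float]


def _cross(a: Vec, b: Vec) -> float:
    """z-component of the 2D cross product a x b."""
    return a[0] * b[1] - a[1] * b[0]


def in_conic_hull_2d(d: Vec, normals: Sequence[Vec], eps: float = 1e-9) -> bool:
    """True if d = sum(c_i * n_i) with all c_i >= 0.

    In 2D, any conic combination can be reduced to at most two vectors
    (Caratheodory), so testing single normals and pairs is sufficient.
    """
    for n in normals:
        # d is parallel to n and points the same way.
        if abs(_cross(n, d)) <= eps and n[0] * d[0] + n[1] * d[1] > 0:
            return True
    for a, b in combinations(normals, 2):
        det = _cross(a, b)
        if abs(det) <= eps:
            continue  # a parallel pair spans only a line
        # Solve d = ca * a + cb * b with Cramer's rule.
        ca = _cross(d, b) / det
        cb = _cross(a, d) / det
        if ca >= -eps and cb >= -eps:
            return True
    return False


def push_points_feasible(normals: Sequence[Vec], direction: Vec) -> bool:
    """Necessary conditions: at least three simultaneous push-points, and the
    desired direction inside the cone of their contact normals."""
    return len(normals) >= 3 and in_conic_hull_2d(direction, normals)


if __name__ == "__main__":
    # Hypothetical obstacles behind the body, pushing it roughly "forward" (+y).
    normals = [(math.cos(math.radians(a)), math.sin(math.radians(a)))
               for a in (60.0, 90.0, 120.0)]
    print(push_points_feasible(normals, (0.0, 1.0)))      # True
    print(push_points_feasible(normals, (0.0, -1.0)))     # False: no push that way
    print(push_points_feasible(normals[:2], (0.0, 1.0)))  # False: fewer than 3
```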

4. Environment Perception, Mapping, and Representation for Locomotion

- sensing, which uses an adequate sensor or combination of sensors to capture information about the environment;

- mapping, which combines and organises the sensing output in order to create a representation that can be exploited for the specific task to be performed by the robot;

- localisation, which estimates the robot’s pose in the environment representation according to the sensor inputs.
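The mapping step is frequently implemented as a probabilistic occupancy grid updated in log-odds form (see, e.g., [51]). The following is a minimal 2D sketch, assuming that the robot pose is already known from the localisation step, that each update is a single range beam expressed in grid cells lying inside the map, and that the inverse sensor model uses the hypothetical probabilities `P_HIT = 0.7` and `P_MISS = 0.4`. The class and function names are illustrative only; a real system would couple mapping and localisation (SLAM) rather than assume a known pose.

```python
import math
from typing import List, Tuple

Cell = Tuple[int, int]

P_HIT, P_MISS = 0.7, 0.4  # hypothetical inverse sensor model


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


L_HIT, L_MISS = logit(P_HIT), logit(P_MISS)


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Grid cells on the segment from (x0, y0) to (x1, y1), inclusive."""
    cells: List[Cell] = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    def __init__(self, width: int, height: int) -> None:
        # Log-odds 0.0 corresponds to p = 0.5, i.e. unknown.
        self.log_odds = [[0.0] * width for _ in range(height)]

    def update_beam(self, robot: Cell, hit: Cell) -> None:
        """Cells the beam passes through become more likely free;
        the end cell, where the beam was reflected, more likely occupied."""
        ray = bresenham(robot[0], robot[1], hit[0], hit[1])
        for x, y in ray[:-1]:
            self.log_odds[y][x] += L_MISS
        hx, hy = ray[-1]
        self.log_odds[hy][hx] += L_HIT

    def probability(self, x: int, y: int) -> float:
        """Convert log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[y][x]))


if __name__ == "__main__":
    grid = OccupancyGrid(10, 10)
    for _ in range(2):  # two consistent readings of an obstacle 5 cells ahead
        grid.update_beam((0, 0), (5, 0))
    print(round(grid.probability(5, 0), 3), round(grid.probability(2, 0), 3))
```

Because the updates are additive in log-odds, repeated consistent readings steadily push cells towards occupied or free, while cells never observed stay at the 0.5 prior.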

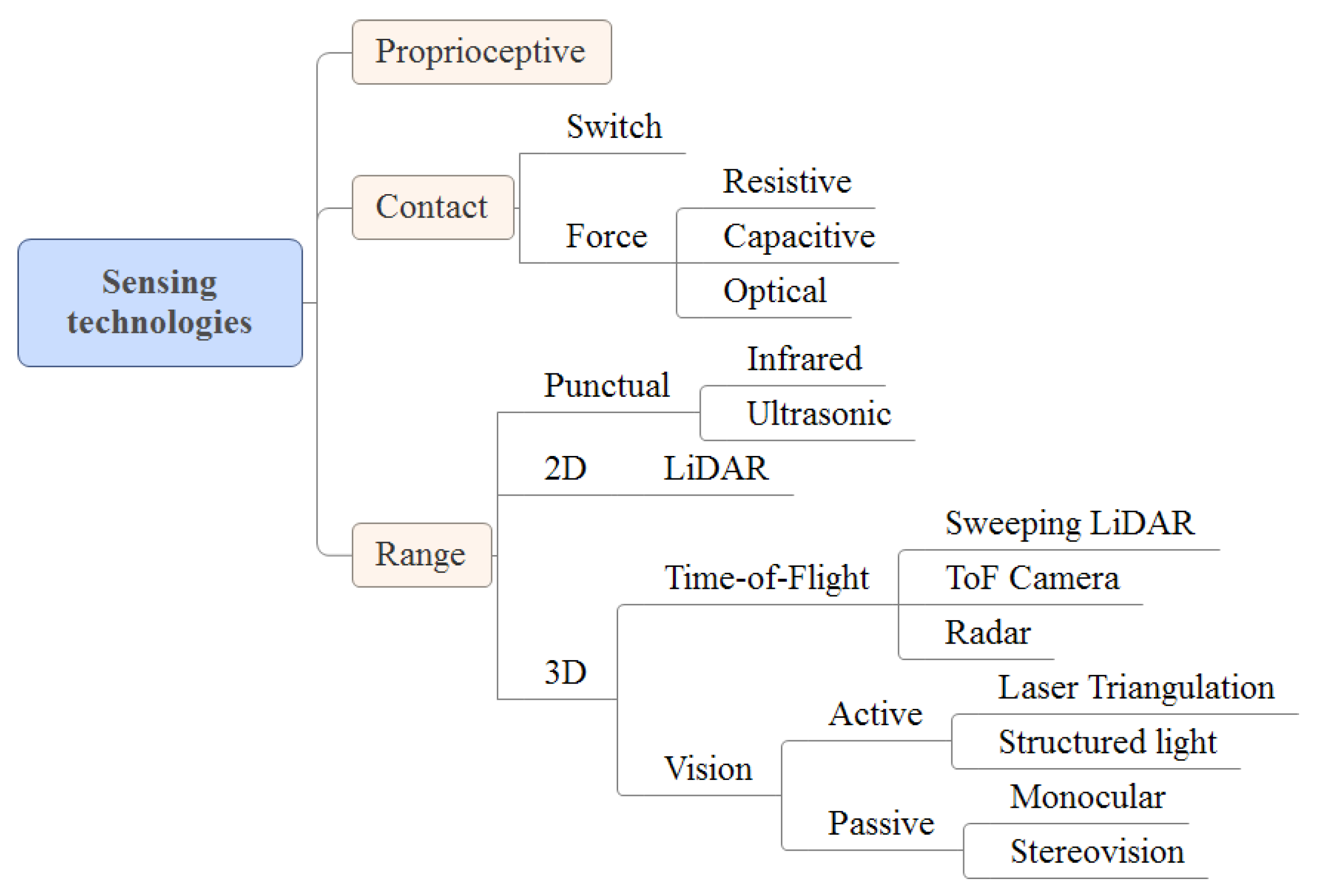

4.1. Sensor Technologies for Environment Perception for Navigation in Robotics

4.2. Survey of Environment Perception for Locomotion in Snake Robots

4.3. Other Relevant Sensor Technologies for Navigation in Non-Snake Robots

5. Concluding Remarks

Acknowledgments

Conflicts of Interest

References

- Perrow, M.R.; Davy, A.J. Handbook of Ecological Restoration: Volume 1, Principles of Restoration; Cambridge University Press: Cambridge, UK, 2008. [Google Scholar]

- Gray, J. The Mechanism of Locomotion in Snakes. J. Exp. Biol. 1946, 23, 101–120. [Google Scholar] [PubMed]

- Transeth, A.; Leine, R.; Glocker, C.; Pettersen, K.; Liljebäck, P. Snake Robot Obstacle-Aided Locomotion: Modeling, Simulations, and Experiments. IEEE Trans. Robot. 2008, 24, 88–104. [Google Scholar] [CrossRef]

- Holden, C.; Stavdahl, Ø.; Gravdahl, J.T. Optimal dynamic force mapping for obstacle-aided locomotion in 2D snake robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA, 14–18 September 2014; pp. 321–328. [Google Scholar]

- Sanfilippo, F.; Azpiazu, J.; Marafioti, G.; Transeth, A.A.; Stavdahl, Ø.; Liljebäck, P. A review on perception-driven obstacle-aided locomotion for snake robots. In Proceedings of the 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–7. [Google Scholar]

- Huang, H.M.; Pavek, K.; Albus, J.; Messina, E. Autonomy levels for unmanned systems (ALFUS) framework: An update. In Proceedings of the 2005 SPIE Defense and Security Symposium, Orlando, FL, USA, 28 March–1 April 2005; International Society for Optics and Photonics: Orlando, FL, USA, 2005; pp. 439–448. [Google Scholar]

- Kendoul, F. Towards a Unified Framework for UAS Autonomy and Technology Readiness Assessment (ATRA). In Autonomous Control Systems and Vehicles; Nonami, K., Kartidjo, M., Yoon, K.J., Budiyono, A., Eds.; Springer: Tokyo, Japan, 2013; pp. 55–71. [Google Scholar]

- Nonami, K.; Kartidjo, M.; Yoon, K.J.; Budiyono, A. Autonomous Control Systems and Vehicles: Intelligent Unmanned Systems; Springer Science & Business Media: Tokyo, Japan, 2013. [Google Scholar]

- Blasch, E.P.; Lakhotia, A.; Seetharaman, G. Unmanned vehicles come of age: The DARPA grand challenge. Computer 2006, 39, 26–29. [Google Scholar]

- Hirose, S. Biologically Inspired Robots: Snake-Like Locomotors and Manipulators; Oxford University Press: Oxford, UK, 1993. [Google Scholar]

- McKenna, J.C.; Anhalt, D.J.; Bronson, F.M.; Brown, H.B.; Schwerin, M.; Shammas, E.; Choset, H. Toroidal skin drive for snake robot locomotion. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA 2008), Pasadena, CA, USA, 19–23 May 2008; pp. 1150–1155. [Google Scholar]

- Ma, S.; Tadokoro, N. Analysis of Creeping Locomotion of a Snake-like Robot on a Slope. Auton. Robots 2006, 20, 15–23. [Google Scholar] [CrossRef]

- Fjerdingen, S.A.; Liljebäck, P.; Transeth, A.A. A snake-like robot for internal inspection of complex pipe structures (PIKo). In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2009), Saint Louis, MO, USA, 11–15 October 2009; pp. 5665–5671. [Google Scholar]

- Wright, C.; Buchan, A.; Brown, B.; Geist, J.; Schwerin, M.; Rollinson, D.; Tesch, M.; Choset, H. Design and architecture of the unified modular snake robot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 4347–4354. [Google Scholar]

- Liljebäck, P.; Pettersen, K.; Stavdahl, Ø. A snake robot with a contact force measurement system for obstacle-aided locomotion. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–8 May 2010; pp. 683–690. [Google Scholar]

- Anderson, G.T.; Yang, G. A proposed measure of environmental complexity for robotic applications. In Proceedings of the 2007 IEEE International Conference on Systems, Man and Cybernetics, Montreal, QC, Canada, 7–10 October 2007; pp. 2461–2466. [Google Scholar]

- Kelasidi, E.; Liljebäck, P.; Pettersen, K.Y.; Gravdahl, J.T. Innovation in Underwater Robots: Biologically Inspired Swimming Snake Robots. IEEE Robot. Autom. Mag. 2016, 23, 44–62. [Google Scholar] [CrossRef]

- Chigisaki, S.; Mori, M.; Yamada, H.; Hirose, S. Design and control of amphibious Snake-like Robot ACM-R5. In Proceedings of the 2005 JSME Conference on Robotics and Mechatronics, Kobe, Japan, 9–11 June 2005. [Google Scholar]

- Socha, J.J.; O’Dempsey, T.; LaBarbera, M. A 3-D kinematic analysis of gliding in a flying snake, Chrysopelea paradisi. J. Exp. Biol. 2005, 208, 1817–1833. [Google Scholar] [PubMed]

- Vagia, M.; Transeth, A.A.; Fjerdingen, S.A. A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl. Ergon. 2016, 53 Pt A, 190–202. [Google Scholar] [CrossRef] [PubMed]

- Yanco, H.A.; Drury, J. “Where am I?” Acquiring situation awareness using a remote robot platform. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics, The Hague, The Netherlands, 10–13 October 2004; Volume 3, pp. 2835–2840. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Snake Robots: Modelling, Mechatronics, and Control; Springer Science & Business Media: London, UK, 2012. [Google Scholar]

- Chirikjian, G.S.; Burdick, J.W. The kinematics of hyper-redundant robot locomotion. IEEE Trans. Robot. Autom. 1995, 11, 781–793. [Google Scholar] [CrossRef]

- Ostrowski, J.; Burdick, J. The Geometric Mechanics of Undulatory Robotic Locomotion. Int. J. Robot. Res. 1998, 17, 683–701. [Google Scholar] [CrossRef]

- Prautsch, P.; Mita, T.; Iwasaki, T. Analysis and Control of a Gait of Snake Robot. IEEJ Trans. Ind. Appl. 2000, 120, 372–381. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Controllability and Stability Analysis of Planar Snake Robot Locomotion. IEEE Trans. Autom. Control 2011, 56, 1365–1380. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. A review on modelling, implementation, and control of snake robots. Robot. Auton. Syst. 2012, 60, 29–40. [Google Scholar]

- Hu, D.L.; Nirody, J.; Scott, T.; Shelley, M.J. The mechanics of slithering locomotion. Proc. Natl. Acad. Sci. USA 2009, 106, 10081–10085. [Google Scholar] [CrossRef] [PubMed]

- Saito, M.; Fukaya, M.; Iwasaki, T. Serpentine locomotion with robotic snakes. IEEE Control Syst. 2002, 22, 64–81. [Google Scholar] [CrossRef]

- Tang, W.; Reyes, F.; Ma, S. Study on rectilinear locomotion based on a snake robot with passive anchor. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 950–955. [Google Scholar]

- Transeth, A.A.; Pettersen, K.Y.; Liljebäck, P. A survey on snake robot modeling and locomotion. Robotica 2009, 27, 999–1015. [Google Scholar] [CrossRef]

- Lee, M.C.; Park, M.G. Artificial potential field based path planning for mobile robots using a virtual obstacle concept. In Proceedings of the 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Port Island, Japan, 20–24 July 2003; Volume 2, pp. 735–740. [Google Scholar]

- Ye, C.; Hu, D.; Ma, S.; Li, H. Motion planning of a snake-like robot based on artificial potential method. In Proceedings of the 2010 IEEE International Conference on Robotics and Biomimetics (ROBIO), Tianjin, China, 14–18 December 2010; pp. 1496–1501. [Google Scholar]

- Yagnik, D.; Ren, J.; Liscano, R. Motion planning for multi-link robots using Artificial Potential Fields and modified Simulated Annealing. In Proceedings of the 2010 IEEE/ASME International Conference on Mechatronics and Embedded Systems and Applications (MESA), Qingdao, China, 5–17 July 2010; pp. 421–427. [Google Scholar]

- Nor, N.M.; Ma, S. CPG-based locomotion control of a snake-like robot for obstacle avoidance. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 347–352. [Google Scholar]

- Shan, Y.; Koren, Y. Design and motion planning of a mechanical snake. IEEE Trans. Syst. Man Cybern. 1993, 23, 1091–1100. [Google Scholar] [CrossRef]

- Shan, Y.; Koren, Y. Obstacle accommodation motion planning. IEEE Trans. Robot. Autom. 1995, 11, 36–49. [Google Scholar] [CrossRef]

- Kano, T.; Sato, T.; Kobayashi, R.; Ishiguro, A. Decentralized control of scaffold-assisted serpentine locomotion that exploits body softness. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 5129–5134. [Google Scholar]

- Kano, T.; Sato, T.; Kobayashi, R.; Ishiguro, A. Local reflexive mechanisms essential for snakes’ scaffold-based locomotion. Bioinspir. Biomim. 2012, 7, 046008. [Google Scholar] [CrossRef] [PubMed]

- Bayraktaroglu, Z.Y.; Blazevic, P. Understanding snakelike locomotion through a novel push-point approach. J. Dyn. Syst. Meas. Control 2005, 127, 146–152. [Google Scholar] [CrossRef]

- Bayraktaroglu, Z.Y.; Kilicarslan, A.; Kuzucu, A. Design and control of biologically inspired wheel-less snake-like robot. In Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob 2006), Pisa, Italy, 20–22 February 2006; pp. 1001–1006. [Google Scholar]

- Gupta, A. Lateral undulation of a snake-like robot. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2007. [Google Scholar]

- Andruska, A.M.; Peterson, K.S. Control of a Snake-Like Robot in an Elastically Deformable Channel. IEEE/ASME Trans. Mechatron. 2008, 13, 219–227. [Google Scholar] [CrossRef]

- Kamegawa, T.; Kuroki, R.; Travers, M.; Choset, H. Proposal of EARLI for the snake robot’s obstacle aided locomotion. In Proceedings of the 2012 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), College Station, TX, USA, 5–8 November 2012; pp. 1–6. [Google Scholar]

- Kamegawa, T.; Kuroki, R.; Gofuku, A. Evaluation of snake robot’s behavior using randomized EARLI in crowded obstacles. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Toyako-cho, Japan, 27–30 October 2014; pp. 1–6. [Google Scholar]

- Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. Compliant control of the body shape of snake robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 4548–4555. [Google Scholar]

- Travers, M.; Whitman, J.; Schiebel, P.; Goldman, D.; Choset, H. Shape-Based Compliance in Locomotion. In Proceedings of the Robotics: Science and Systems (RSS), Cambridge, MA, USA, 12–16 July 2016; Number 5. p. 10. [Google Scholar]

- Whitman, J.; Ruscelli, F.; Travers, M.; Choset, H. Shape-based compliant control with variable coordination centralization on a snake robot. In Proceedings of the IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 5165–5170. [Google Scholar]

- Ma, S.; Ohmameuda, Y.; Inoue, K. Dynamic analysis of 3-dimensional snake robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 1, pp. 767–772. [Google Scholar]

- Sanfilippo, F.; Stavdahl, Ø.; Marafioti, G.; Transeth, A.A.; Liljebäck, P. Virtual functional segmentation of snake robots for perception-driven obstacle-aided locomotion. In Proceedings of the IEEE Conference on Robotics and Biomimetics (ROBIO), Qingdao, China, 3–7 December 2016; pp. 1845–1851. [Google Scholar]

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Boyle, J.H.; Johnson, S.; Dehghani-Sanij, A.A. Adaptive Undulatory Locomotion of a C. elegans Inspired Robot. IEEE/ASME Trans. Mechatron. 2013, 18, 439–448. [Google Scholar] [CrossRef]

- Gong, C.; Tesch, M.; Rollinson, D.; Choset, H. Snakes on an inclined plane: Learning an adaptive sidewinding motion for changing slopes. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 1114–1119. [Google Scholar]

- Taal, S.; Yamada, H.; Hirose, S. 3 axial force sensor for a semi-autonomous snake robot. In Proceedings of the IEEE International Conference on Robotics and Automation, (ICRA ’09), Kobe, Japan, 12–17 May 2009; pp. 4057–4062. [Google Scholar]

- Bayraktaroglu, Z.Y. Snake-like locomotion: Experimentations with a biologically inspired wheel-less snake robot. Mech. Mach. Theory 2009, 44, 591–602. [Google Scholar] [CrossRef]

- Gonzalez-Gomez, J.; Gonzalez-Quijano, J.; Zhang, H.; Abderrahim, M. Toward the sense of touch in snake modular robots for search and rescue operations. In Proceedings of the ICRA 2010 Workshop on Modular Robots: State of the Art, Anchorage, AK, USA, 3–8 May 2010; pp. 63–68. [Google Scholar]

- Wu, X.; Ma, S. Development of a sensor-driven snake-like robot SR-I. In Proceedings of the 2011 IEEE International Conference on Information and Automation (ICIA), Shenzhen, China, 6–8 June 2011; pp. 157–162. [Google Scholar]

- Liljebäck, P.; Stavdahl, Ø.; Pettersen, K.; Gravdahl, J. Mamba—A waterproof snake robot with tactile sensing. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 294–301. [Google Scholar]

- Paap, K.; Christaller, T.; Kirchner, F. A robot snake to inspect broken buildings. In Proceedings of the 2000 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000), Takamatsu, Japan, 30 October–5 November 2000; Volume 3, pp. 2079–2082. [Google Scholar]

- Caglav, E.; Erkmen, A.M.; Erkmen, I. A snake-like robot for variable friction unstructured terrains, pushing aside debris in clearing passages. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), San Diego, CA, USA, 29 October–2 November 2007; pp. 3685–3690. [Google Scholar]

- Sfakiotakis, M.; Tsakiris, D.P.; Vlaikidis, A. Biomimetic Centering for Undulatory Robots. In Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob 2006), Pisa, Italy, 20–22 February 2006; pp. 744–749. [Google Scholar]

- Wu, Q.; Gao, J.; Huang, C.; Zhao, Z.; Wang, C.; Su, X.; Liu, H.; Li, X.; Liu, Y.; Xu, Z. Obstacle avoidance research of snake-like robot based on multi-sensor information fusion. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1040–1044. [Google Scholar]

- Chavan, P.; Murugan, M.; Vikas Unnikkannan, E.; Singh, A.; Phadatare, P. Modular Snake Robot with Mapping and Navigation: Urban Search and Rescue (USAR) Robot. In Proceedings of the 2015 International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 26–27 February 2015; pp. 537–541. [Google Scholar]

- Tanaka, M.; Kon, K.; Tanaka, K. Range-Sensor-Based Semiautonomous Whole-Body Collision Avoidance of a Snake Robot. IEEE Trans. Control Syst. Technol. 2015, 23, 1927–1934. [Google Scholar] [CrossRef]

- Pfotzer, L.; Staehler, M.; Hermann, A.; Roennau, A.; Dillmann, R. KAIRO 3: Moving over stairs & unknown obstacles with reconfigurable snake-like robots. In Proceedings of the 2015 European Conference on Mobile Robots (ECMR), Lincoln, UK, 2–4 September 2015; pp. 1–6. [Google Scholar]

- Tian, Y.; Gomez, V.; Ma, S. Influence of two SLAM algorithms using serpentine locomotion in a featureless environment. In Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 182–187. [Google Scholar]

- Kon, K.; Tanaka, M.; Tanaka, K. Mixed Integer Programming-Based Semiautonomous Step Climbing of a Snake Robot Considering Sensing Strategy. IEEE Trans. Control Syst. Technol. 2016, 24, 252–264. [Google Scholar] [CrossRef]

- Ponte, H.; Queenan, M.; Gong, C.; Mertz, C.; Travers, M.; Enner, F.; Hebert, M.; Choset, H. Visual sensing for developing autonomous behavior in snake robots. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2779–2784. [Google Scholar]

- Ohno, K.; Nomura, T.; Tadokoro, S. Real-Time Robot Trajectory Estimation and 3D Map Construction using 3D Camera. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5279–5285. [Google Scholar]

- Fjerdingen, S.A.; Mathiassen, J.R.; Schumann-Olsen, H.; Kyrkjebø, E. Adaptive Snake Robot Locomotion: A Benchmarking Facility for Experiments. In European Robotics Symposium 2008; Bruyninckx, H., Přeučil, L., Kulich, M., Eds.; Number 44 in Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2008; pp. 13–22. [Google Scholar]

- Mobedi, B.; Nejat, G. 3-D Active Sensing in Time-Critical Urban Search and Rescue Missions. IEEE/ASME Trans. Mechatron. 2012, 17, 1111–1119. [Google Scholar]

- Labbé, M.; Michaud, F. Online global loop closure detection for large-scale multi-session graph-based SLAM. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2661–2666. [Google Scholar]

- Agrawal, M.; Konolige, K.; Bolles, R.C. Localization and Mapping for Autonomous Navigation in Outdoor Terrains: A Stereo Vision Approach. In Proceedings of the IEEE Workshop on Applications of Computer Vision (WACV ’07), Austin, TX, USA, 21–22 February 2007. [Google Scholar]

- Weiss, S.; Achtelik, M.W.; Lynen, S.; Chli, M.; Siegwart, R. Real-time onboard visual-inertial state estimation and self-calibration of MAVs in unknown environments. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 957–964. [Google Scholar]

- Fontana, R.J.; Richley, E.A.; Marzullo, A.J.; Beard, L.C.; Mulloy, R.W.T.; Knight, E.J. An ultra wideband radar for micro air vehicle applications. In Proceedings of the 2002 IEEE Conference on Ultra Wideband Systems and Technologies, Digest of Papers, Baltimore, MD, USA, 21–23 May 2002; pp. 187–191. [Google Scholar]

- Dudek, G.; Jenkin, M. Computational Principles of Mobile Robotics; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Surmann, H.; Nüchter, A.; Hertzberg, J. An autonomous mobile robot with a 3D laser range finder for 3D exploration and digitalization of indoor environments. Robot. Auton. Syst. 2003, 45, 181–198. [Google Scholar] [CrossRef]

- Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-the-art and applications of 3D imaging sensors in industry, cultural heritage, medicine, and criminal investigation. Sensors 2009, 9, 568–601. [Google Scholar] [CrossRef] [PubMed]

- Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2012, 31, 647–663. [Google Scholar] [CrossRef]

- Foix, S.; Alenya, G.; Torras, C. Lock-in Time-of-Flight (ToF) Cameras: A Survey. IEEE Sens. J. 2011, 11, 1917–1926. [Google Scholar] [CrossRef]

- Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42. [Google Scholar] [CrossRef]

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef] [PubMed]

- Fox, C.; Evans, M.; Pearson, M.; Prescott, T. Tactile SLAM with a biomimetic whiskered robot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 4925–4930. [Google Scholar]

- Pearson, M.; Fox, C.; Sullivan, J.; Prescott, T.; Pipe, T.; Mitchinson, B. Simultaneous localisation and mapping on a multi-degree of freedom biomimetic whiskered robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 586–592. [Google Scholar]

- Xinyu, L.; Matsuno, F. Control of snake-like robot based on kinematic model with image sensor. In Proceedings of the 2003 IEEE International Conference on Robotics, Intelligent Systems and Signal Processing, Changsha, Hunan, China, 8–13 October 2003; Volume 1, pp. 347–352. [Google Scholar]

- Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Int. J. Comput. Vis. 1994, 13, 119–152. [Google Scholar] [CrossRef]

- Xiao, X.; Cappo, E.; Zhen, W.; Dai, J.; Sun, K.; Gong, C.; Travers, M.; Choset, H. Locomotive reduction for snake robots. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3735–3740. [Google Scholar]

- Yamakita, M.; Hashimoto, M.; Yamada, T. Control of locomotion and head configuration of 3D snake robot (SMA). In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA ’03), Taipei, Taiwan, 14–19 September 2003; Volume 2, pp. 2055–2060. [Google Scholar]

- Galindo, C.; Fernández-Madrigal, J.A.; González, J.; Saffiotti, A. Robot task planning using semantic maps. Robot. Auton. Syst. 2008, 56, 955–966. [Google Scholar] [CrossRef]

- Oberländer, J.; Klemm, S.; Heppner, G.; Roennau, A.; Dillmann, R. A multi-resolution 3-D environment model for autonomous planetary exploration. In Proceedings of the 2014 IEEE International Conference on Automation Science and Engineering (CASE), New Taipei, Taiwan, 18–22 August 2014; pp. 229–235. [Google Scholar]

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894. [Google Scholar] [CrossRef]

| Obstacle Avoidance | Obstacle Accommodation | Obstacle-Aided Locomotion |
|---|---|---|
| [32,33,34,35] | [36,37] | [3,4,40,41,42,43,44,45,46,47,48] |

| Sensor/Sensing Technology | Pros | Cons | References |
|---|---|---|---|
| Proprioceptive | No need for additional payload. | Depends on the accuracy of the robot's model. Low level of detail. Does not allow planning in advance. | [52,53] |
| Contact/Force | Bioinspired. Suitable for simple obstacle-aided locomotion. | Low level of detail. Reactive; does not allow planning in advance. | [10,15,54,55,56,57,58] |
| Proximity (ultrasonic and infrared) | Suitable for simple obstacle-aided locomotion. Allows some look-ahead planning. | Low level of detail. Additional payload. | [59,60,61,62] |
| LiDAR | Well-known sensor in the robotics community. Provides dense information about the environment. | Usually requires a sweeping and/or rotating movement for full 3D perception. | [62,63,64,65,66,67] |
| Laser triangulation | Provides high-accuracy measurements. | Very limited measurement range. Requires a sweeping movement. Limitations in dynamic environments. | [68] |
| ToF camera | Provides direct 3D measurements. | Low resolution, low accuracy. Not suitable for outdoor operation. | [69,70] |
| Structured light—Temporal coding | Provides direct 3D measurements. High accuracy, high resolution. | Limited measurement range. Not suitable for outdoor operation. Limitations in dynamic environments. Sensor size. | Non-snake: [71] |
| Structured light—Light coding | Provides direct 3D measurements. Small sensor size. | Noisy measurements. Not suitable for outdoor operation. | Non-snake: [72] |
| Stereovision | High accuracy, high resolution, wide range. | Measuring range limited by the available baseline. Computationally demanding. Dependent on texture. | Non-snake: [73] |
| Monocular—SfM | Small, lightweight, low-power sensor. Wide measurement range. No active lighting. | Computationally demanding. Dependent on texture. Scale ambiguity. | Non-snake: [74] |
| Radar (e.g., UWB) | Can sense through obstacles. | Mechanical or electronic sweeping required. Computationally demanding. | Non-snake: [75] |
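Two of the limitations listed in the table follow directly from the measurement principles. For a rectified stereo pair with focal length f (in pixels) and baseline B, depth is Z = fB/d for disparity d, so a fixed disparity error δd gives a first-order depth error of about Z²δd/(fB): it grows quadratically with range and shrinks with a longer baseline (see, e.g., [82]). For a continuous-wave ToF camera modulated at frequency f_mod, distance is c·Δφ/(4π f_mod) and is unambiguous only up to c/(2 f_mod) (see, e.g., [80]). The following is a minimal sketch of these relations; the function names and the example values (f = 500 px, B = 0.05 m, 0.25 px disparity error, 20 MHz modulation) are hypothetical, chosen only to show the trends.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def stereo_depth(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo: Z = f * B / d."""
    return f_px * baseline_m / disparity_px


def stereo_depth_error(f_px: float, baseline_m: float,
                       depth_m: float, disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (f_px * baseline_m)


def tof_distance(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: d = c * phase / (4 * pi * f_mod)."""
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def tof_unambiguous_range(f_mod_hz: float) -> float:
    """Phase wraps at 2*pi, so range is unambiguous only up to c / (2 * f_mod)."""
    return C / (2.0 * f_mod_hz)


if __name__ == "__main__":
    # A short baseline, as on a thin snake robot module, degrades quickly with range.
    for z in (0.5, 1.0, 2.0, 4.0):
        err = stereo_depth_error(500.0, 0.05, z, 0.25)
        print(f"Z = {z:.1f} m -> depth error ~ {100 * err:.1f} cm")
    print(f"ToF unambiguous range at 20 MHz: {tof_unambiguous_range(20e6):.2f} m")
```

The quadratic growth of the stereo error is why the achievable range is tied to the baseline, which is physically constrained by the small cross-section of a snake robot module.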

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sanfilippo, F.; Azpiazu, J.; Marafioti, G.; Transeth, A.A.; Stavdahl, Ø.; Liljebäck, P. Perception-Driven Obstacle-Aided Locomotion for Snake Robots: The State of the Art, Challenges and Possibilities †. Appl. Sci. 2017, 7, 336. https://doi.org/10.3390/app7040336
