1. Introduction

Collaborative virtual environments (CVEs) are computer-simulated settings that allow multiple people (the users) in disparate locations to work collaboratively on a task. Such a collaborative task requires each user to share information and delegate activities in order to achieve a common goal. The applications of CVEs have increased drastically owing to the improved performance of personal computers and the networks on which they operate.

Research efforts on CVEs have mainly focused on investigating whether factors such as communication, awareness, and presence enhance user experience. These factors give the users a sense of being immersed in an environment and enable them to interact socially with each other [1,2]. For the factor of communication, tools and methods were designed to transmit information through various networked media in order to facilitate collaborative tasks [3,4]. In contrast, the factor of awareness relies heavily on the users’ mental perception of their surroundings and their use of this perception to aid their communication [5,6]. Furthermore, the factor of presence allows the users to have a sense of working at the same location [7]. The users are more inclined to interact with collocated users at a high degree of presence than with remote users at a low degree of presence [8]. As for interactive strategy design, research studies have concentrated on the feasibility and efficiency of task accomplishment, such as allowing multiple users to manipulate objects simultaneously in an efficient and usable manner rather than relying on single-user manipulation [9]. Nevertheless, these research activities did not take into account the users’ level of expertise. When the users share a common ground of knowledge and are at the same level of expertise, each of them works autonomously as their interactive strategy to perform a collaborative task [10]. When the users have different levels of expertise, their interactive strategy is commonly for an expert to guide a novice using different tools [11]. Most research efforts on CVEs deploy only one interactive strategy among the users. They rarely consider the effect of interactive strategies on performing a collaborative task, especially when the users have different levels of expertise. Thus, we investigated three interactive strategies for undertaking a collaborative task between two users with different levels of expertise. The investigation is the first step towards discovering general rules for applying these strategies to facilitate collaborative tasks in different scenarios. For instance, learning tasks, surgical training, and maintenance tasks may each require a different interactive strategy in order to achieve the desired performance outcomes.

The strategies under investigation were inspired by human/robot interactions, especially by Sheridan’s work [12]. To perform a collaborative task, interactive strategies between two users with different levels of expertise could be similar to those in human/robot systems. In a human/robot system, the human operator possesses a high level of expertise and is a supervisor and/or controller of movements, whereas the robot has a low level of expertise and is the executor of the movements. Many studies exist on human/robot interactions [13,14], especially on optimizing the operator’s intervention in the robot’s movements and the division of tasks between the operator and the robot. In general, human/robot interactions fall into a spectrum bounded by two extreme types [12]. One type is tele-operation, in which the human operator directs every movement of the robot; the other is autonomous operation, in which the robot runs pre-defined routines without any intervention from the operator. Within the spectrum is the flexible type of tele-assistance, in which the operator may intervene to a certain degree in particular movements of the robot.

Accordingly, we designed a CVE for the investigation in which two users must work collaboratively to complete a task. One user was an expert corresponding to the role of the human operator; the other was a novice playing the role of the robot. The CVE and the task to be undertaken were proven solutions used in our previous study, which demonstrated that the ventral and dorsal streams play complementary roles in making a collaborative venture effective [15]. The expert and novice collaboratively undertook the task by deploying the following three interactive strategies, which on one hand keep the task within the scope of cooperation, while on the other hand granting the novice increasing degrees of autonomy, from none, to some, to more:

Tele-Operation, under which the novice had no autonomy and executed all step-by-step operations decided by the expert for completing the task.

Tele-Assistance3, under which the novice received each sub-goal of the task from the expert and possessed a degree of autonomy to devise an operation plan for achieving the sub-goal and to execute the plan. The expert could overrule the operations if necessary.

Tele-Assistance7, under which the novice had a greater degree of autonomy than under Tele-Assistance3 to decide and act upon operations for completing a sub-goal, because the expert would not interfere with the operations of the novice.

Three experiments were performed to observe the effect of interaction strategies on the task performance of an expert user and a participant working on a collaborative task. Each experiment consisted of the expert user interacting with the participant using two different interaction strategies. To keep the expert’s level of expertise consistent, we always employed the developer of the CVE as the expert to pair with each novice (a human participant). Thus, we evaluated the performance of the novice under each interactive strategy by recording and analyzing four parameters: the completion time of the task, the error in performing the task, the number of operation steps, and the overall workload.

The experimental results revealed that different interaction strategies facilitate the performance of a collaborative task in different ways. In particular, Tele-Operation is a suitable interaction strategy in situations where there are no communication delays between people, and where the expert user clearly knows what should be done at every step of the task. Tele-Operation allows the task to be completed with less time and error, as well as with less workload for the novice. Tele-Assistance7 shows the power of tele-assistance: although it incurred more time and larger error, people used fewer moves to perform the task than under Tele-Operation. Tele-Assistance3 combines the advantages of both Tele-Operation and Tele-Assistance7 to complete the task efficiently. Giving the expert user the power to veto the participant’s actions allows the task to be completed in a shorter time and with a lower overall workload perceived by the participant, as compared to Tele-Assistance7.

The outcome of this investigation could aid in choosing an optimal interactive strategy for performing a collaborative task between an expert and a novice. Many practical collaborative tasks involve such users, for example remote maintenance tasks requiring a remote expert and a field worker who is unfamiliar with the task, and surgical training tasks involving a skilled surgeon and a trainee. The observations demonstrate that different interaction strategies affect the task performance in different ways. One should identify the key performance parameters of a collaborative task when considering an interaction strategy for facilitating the task. Then, it is essential to assess the influence of the interaction strategy on these parameters.

This paper is organized as follows:

Section 2 describes the design of the CVE;

Section 3 presents the deployment of three interactive strategies;

Section 4 details the experimental methodology and results of the investigation;

Section 5 gives a general discussion about the findings and the application of these strategies.

2. CVE Design

The CVE, developed using C++ along with the toolkits Qt and OpenGL, applied visual communication to facilitate the interaction between the expert and novice. We utilized a WYSIWIS (what you see is what I see) view and WYSIWID (what you see is what I do) display widgets to create awareness between the expert and novice in the CVE. While completing a task, the expert and novice collaborated side-by-side to evoke a sense of co-presence. By varying the interactive strategy incorporated into the CVE, we observed the performance of the novice executing a collaborative task with the expert.

2.1. Devices

In this CVE, two users (the expert and novice) needed to complete a collaborative task.

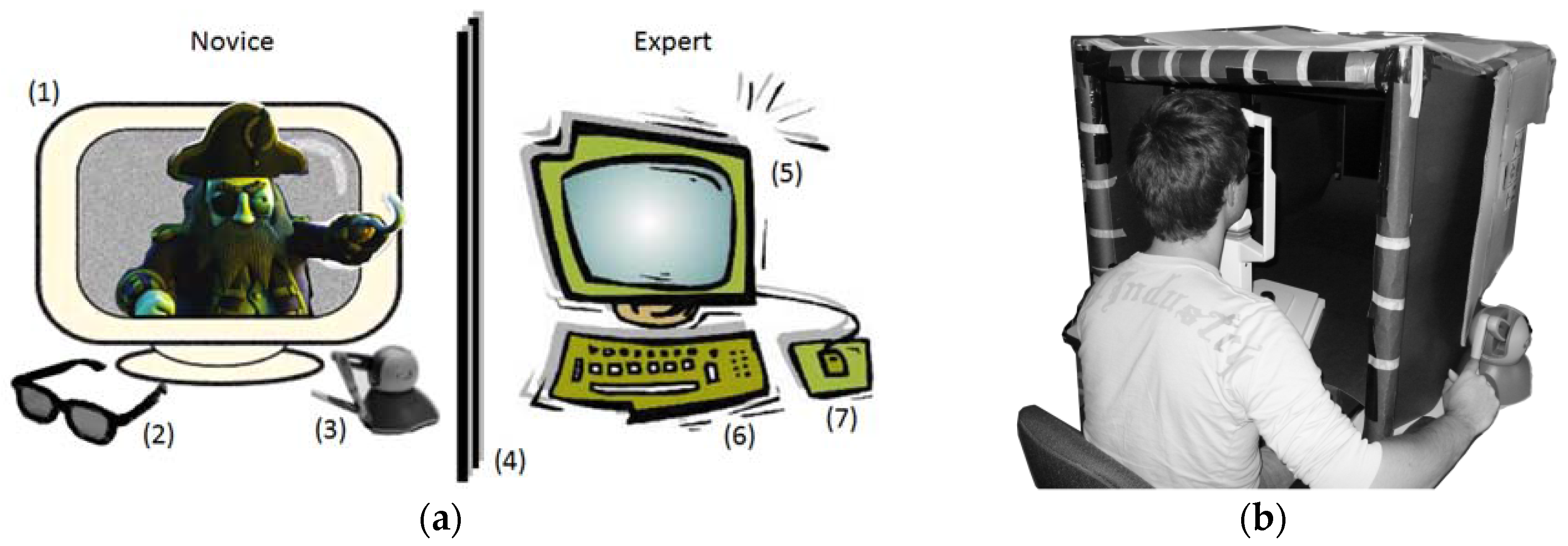

Figure 1 illustrates the devices used in the CVE and their setup. The expert saw some objects associated with the task through a non-stereoscopic LCD monitor, whereas the novice viewed some objects of the task via a three-dimensional (3D) stereoscopic LCD monitor with a pair of polarized 3D glasses. The use of a dual video output extended the display of the objects onto both monitors from the same PC (Dell Precision T5500 with Dual Intel® Xeon® Quad Core 2.53 GHz processor, 4 GB of memory, and an NVIDIA® Quadro® FX 4800 graphics card). The expert used the keyboard and mouse to operate in two-dimensional (2D) space to guide the novice executing in 3D space. The novice utilized a haptic device (PHANToM Omni® Haptic Device) as a 6 degrees-of-freedom (DOF) input device to interact with objects in 3D space. Lastly, a divider was placed between the expert and the novice to prevent them from seeing each other’s monitors, as the expert had access to some objects which were absent from the novice’s monitor.

2.2. Collaborative Task

In our collaborative task, the expert and novice manipulated two identical objects: A (a manipulator object) and B (a target object). The orientation of object A was initially different from that of object B, so the two objects appeared dissimilar. The goal of the task was to manipulate object A using a series of translational and rotational operations until it appeared to resemble object B. The translational and rotational operations were mathematical operations described using linear algebra. At any time, only one translational or rotational operation could be applied to object A or B.
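The translational and rotational operations mentioned above can be illustrated with a minimal sketch. The function names, the choice of the z-axis, and the sample control points are assumptions made for illustration only; the source does not describe how the CVE implements these operations.

```python
import math

def translate(points, offset):
    """Shift every 3D point by the same offset vector."""
    dx, dy, dz = offset
    return [(x + dx, y + dy, z + dz) for x, y, z in points]

def rotate_z(points, angle_deg):
    """Rotate every 3D point counter-clockwise about the z-axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]

# Hypothetical control points on manipulator object A.
control_points = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

# Only one operation is applied at a time: a rotation, then a translation.
rotated = rotate_z(control_points, 90.0)
moved = translate(rotated, (0.0, 0.0, 2.0))
```

Applying the operations one after another mirrors the constraint that only a single translation or rotation is active at any time.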

The expert and novice assumed different roles in the task, and each had strengths and weaknesses in undertaking it. The expert, with a full perspective of both objects A and B, oversaw the entire CVE in 2D space. By utilizing the mouse, the expert could move object B in 2D space but could not directly maneuver object A. The novice, with a 3D stereoscopic view of object A, could perform operations on object A with fine adjustments in 3D space. However, the novice could not see the target object B in the CVE, so he/she could not plan the set of operations alone. Overall, to achieve the final goal of the task, the expert and the novice had to collaborate with each other: the expert was always aware of the final goal and gave instructions to the novice; the novice followed the instructions and carried them out.

2.3. Objects

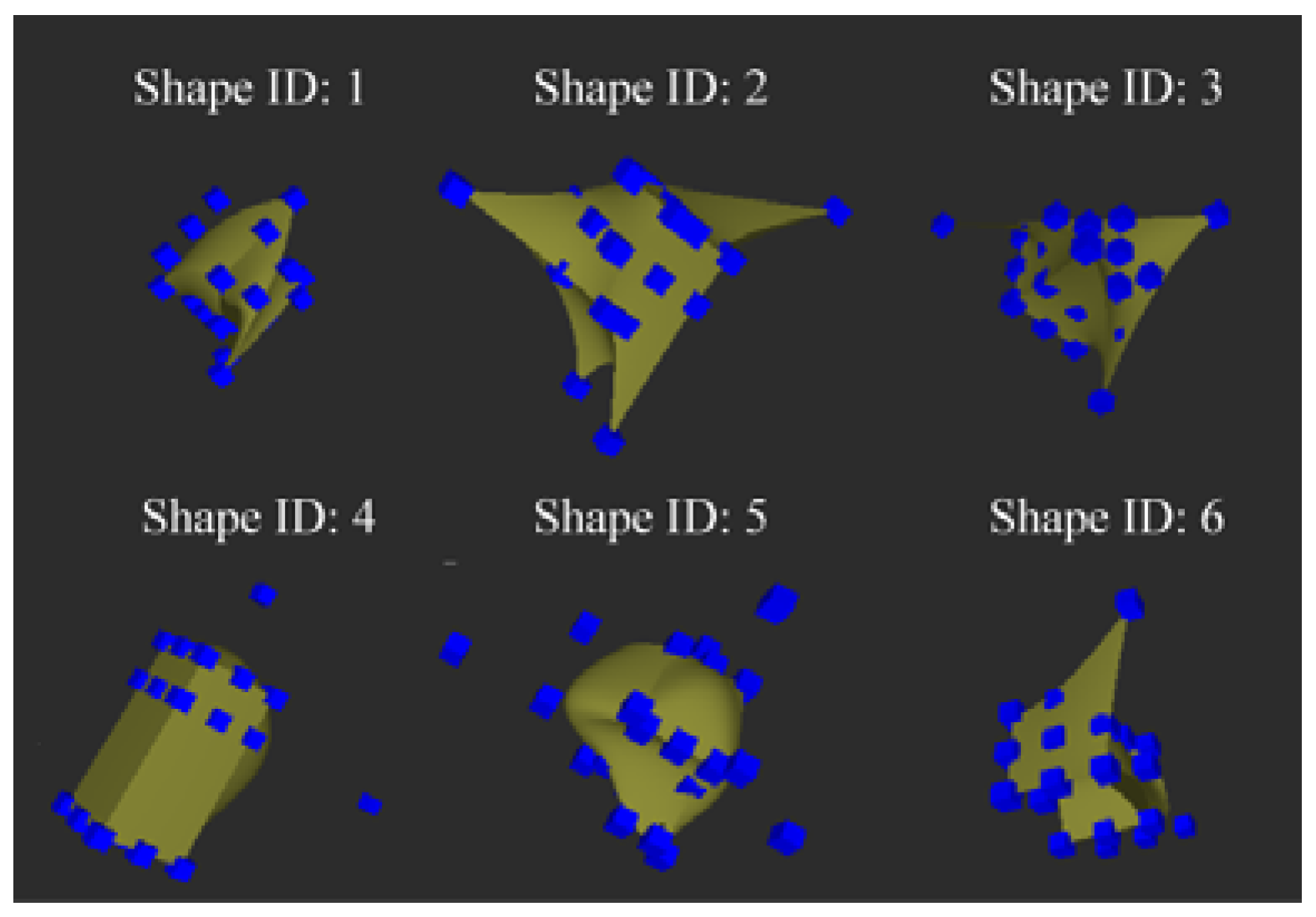

Figure 2 depicts all eight different objects presented in the CVE for the execution of the collaborative task:

- (1) Widget indicator: It consisted of two arrows and three dashed circles. Its main function was to communicate the mode of an operation (i.e., translation through one of the arrows or rotation via one of the circular dials) that should be applied to the manipulator object A. Both expert and novice had a view of the widget indicator. The expert employed it to instruct an operation, which the novice should execute on the manipulator object.

- (2) Target object: The design of the target object B combined different characteristics of geon objects [16] with certain manipulations of the object (Figure 3). Geon objects do not occur in nature and are not easily identifiable by individuals. These objects are widely used to study object recognition [17,18]. Only the expert had a view and control of the target object B.

- (3) Reference point: It appeared on the monitors of both expert and novice. However, it only appeared when the expert and novice collaborated using Tele-Assistance7 or Tele-Assistance3 as an interactive strategy. The reference point functioned to instruct the novice how far the expert expected him/her to move the manipulator object A. Only the expert was able to specify the location of the reference point anywhere in the CVE; the novice used this location as a sub-goal to be reached.

- (4) Starting point: It recorded the initial location and orientation of manipulator object A and target object B. This allowed the expert to remember the starting state of the collaborative task.

- (5) Manipulator object: It was a geon object identical to the target object B. Both expert and novice could view the manipulator object A on their display monitors. Differing from the target object that only the expert could view and control, the novice (but not the expert) could perform translations and rotations on the manipulator object.

- (6) Control points: They were small cubic points on the surface of both target and manipulator objects, as shown in Figure 2. These control points served the following three purposes: as gauges showing the novice how far the manipulator object was from a sub-goal to be reached; as handles for maneuvering the manipulator object A; and as indicators depicting whether the novice had successfully grabbed onto the manipulator object in order to move it.

- (7) Selected control point: It was one of the control points on the manipulator object, which the novice chose in order to grab and move the manipulator object. Once chosen by a virtual probe which corresponded to the interface point of a haptic device, the selected control point changed its color from blue to pink.

- (8) Go signal: Its color turned from red to green to signal the novice to initiate his/her operation on the manipulator object, following the instruction of the expert set on the widget indicator. The go signal changed its color from green to red after the novice completed his/her operation.

In the CVE, the expert had a view of all eight objects on the non-stereoscopic monitor, whereas the novice could see all objects except the target object on the 3D stereoscopic monitor. By using these objects, the expert guided the novice to maneuver the manipulator object until it had the same position and orientation with respect to the starting point as the target object. That is, the manipulator object resembled the target object. Being able to control all objects but unable to move the manipulator object, the expert instructed and guided the novice via the widget indicator or the reference point. In response to the guidance, the novice performed the following actions: selecting a control point on the manipulator object by using the haptic device and moving the manipulator object as instructed. The novice took these actions without the privilege of seeing the target object. The guidance from the expert differed under each interactive strategy.

3. Interactive Strategies

Three different interactive strategies—Tele-Operation, Tele-Assistance3, and Tele-Assistance7— were imposed on the expert and novice to perform the collaborative task. We derived these interactive strategies from Sheridan’s 10 levels of tele-assistance [

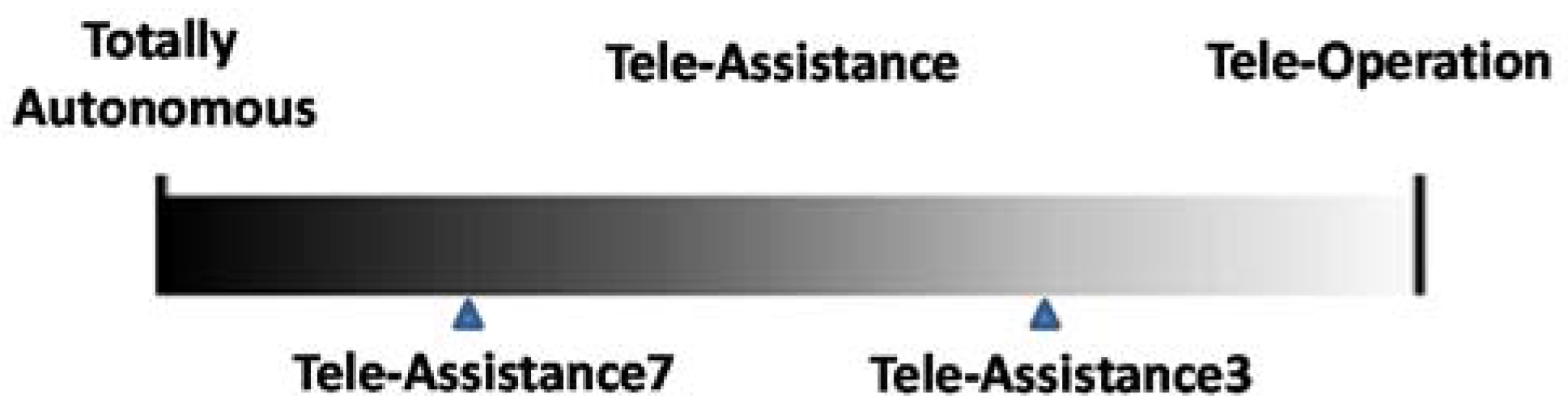

12]. The interactive strategies, as illustrated in

Figure 4, gave the novice various degrees of autonomy to collaborate with the expert on performing the task.

3.1. Tele-Operation

Under Tele-Operation, the novice had no autonomy and executed all step-by-step operations determined by the expert in order to complete the task. To instruct an operation, the expert used the keyboard/mouse to manipulate an arrow or a dial on the widget indicator. The arrow or dial changed from yellow to green to signal the novice which operation to perform. The length of the arrow conveyed the instruction of how far the manipulator object should be translated along the direction of the arrow, while the arc of a dial gave the instruction of how much the manipulator object needed to be rotated about the direction perpendicular to the dial.

The novice duplicated the instructed operation once the go signal turned from red to green. By using the haptic device, the novice moved the manipulator object with a virtual probe, shaped like a matchstick with a red sphere (corresponding to the haptic interface point of the device) attached to a blue rod. The novice used the sphere of the virtual probe to locate and select one of the control points on the manipulator object, causing the remaining control points to turn from blue to red. The novice then moved the manipulator object, as instructed by the widget indicator, until the color of the widget indicator changed from green to yellow. Note that, although the haptic device served as a 6 DOF input, the novice could only move the manipulator object along the direction indicated by the widget indicator.
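One simple way to picture the constraint that the object moves only along the instructed direction is to project the free 3D displacement of the haptic probe onto the axis shown by the widget indicator. The sketch below is an assumption about how such a constraint could be realized, not a description of the CVE’s implementation; `project_onto_axis` and the sample vectors are hypothetical.

```python
import math

def project_onto_axis(displacement, axis):
    """Keep only the component of a 3D displacement along the given axis."""
    norm = math.sqrt(sum(a * a for a in axis))
    if norm == 0.0:
        raise ValueError("axis must be a non-zero vector")
    unit = tuple(a / norm for a in axis)
    # Signed length of the displacement along the unit axis.
    along = sum(d * u for d, u in zip(displacement, unit))
    return tuple(along * u for u in unit)

# Hypothetical probe motion, constrained to the arrow pointing along +x.
constrained = project_onto_axis((0.5, 0.2, -0.1), (2.0, 0.0, 0.0))
```

Under this sketch, any off-axis component of the hand motion is simply discarded, so the object follows the arrow regardless of small deviations by the novice.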

3.2. Tele-Assistance3

Under Tele-Assistance3, the expert followed the Bernstein model of a coordinative structure to break down the final goal of the task into a set of sub-goals [19] and used these sub-goals to guide the novice. The final goal of the task under Tele-Assistance3 was the same as that under Tele-Operation. On receiving each sub-goal of the task from the expert, the novice possessed a degree of autonomy to devise an operation plan for achieving the sub-goal and to execute the plan. The expert could interfere with the operations of the novice if necessary.

To convey a sub-goal from the expert to the novice, we introduced an object, the reference point shown in Figure 2, into the CVE. The expert used the reference point along with the widget indicator to instruct the novice on a sub-goal that the novice needed to reach. Using the keyboard/mouse, the expert highlighted a control point on the manipulator object by changing it from blue to pink, placed the reference point somewhere within the CVE, and set the widget indicator to signify whether a translational or rotational operation should be performed. That is, the sub-goal conveyed by the expert was as follows: translate (or rotate) the manipulator object until the pink control point on the manipulator object was as close as possible to the reference point.

Then, the novice needed to identify the location of the reference point and use the virtual probe to move (translate or rotate) the manipulator object in the same way as under Tele-Operation, in order to bring the pink control point on the manipulator object as close to the reference point as possible. With respect to the reference point, the novice had to plan a course of operations (multiple translations or rotations) and execute them so as to minimize the distance between the pink control point of the manipulator object and the reference point. The expert had the authority to undo the operations undertaken by the novice and to use the widget indicator to steer the novice towards an intended sub-goal. This veto would be exercised when the novice had misinterpreted the intention of the sub-goal. By using the widget indicator, the expert could then communicate the intention of the sub-goal concretely. In this situation, the novice would follow the step-by-step instructions given by the expert to achieve the sub-goal (i.e., the same as under Tele-Operation). Consequently, Tele-Assistance3 gave the novice a certain degree of autonomy which Tele-Operation could not offer at all.

3.3. Tele-Assistance7

In the same way as Tele-Assistance3, Tele-Assistance7 permitted the novice to decide on an operation plan and act upon the plan in order to complete a sub-goal. However, the expert under Tele-Assistance7 had no authority to undo the operations undertaken by the novice. The novice had the freedom to devise and execute an operation plan by selecting a series of operations among many possible alternatives. The expert’s lack of veto authority increased the novice’s degree of autonomy relative to Tele-Assistance3. Thus, the degree of autonomy for the novice under Tele-Assistance7 was greater than under Tele-Operation and Tele-Assistance3, as shown in Figure 4.
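The differences among the three strategies can be summarized in a small data structure. This is an illustrative sketch only: the field names and the `autonomy_rank` ordering are assumptions that paraphrase the descriptions in this section.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Strategy:
    name: str
    expert_dictates_each_step: bool  # expert instructs every single operation
    expert_can_veto: bool            # expert may undo the novice's own operations

    @property
    def autonomy_rank(self) -> int:
        """Illustrative ordering of novice autonomy: 0 (none) to 2 (most)."""
        if self.expert_dictates_each_step:
            return 0
        return 1 if self.expert_can_veto else 2

STRATEGIES = [
    # Under Tele-Operation the novice plans nothing, so a veto does not apply.
    Strategy("Tele-Operation", expert_dictates_each_step=True, expert_can_veto=False),
    Strategy("Tele-Assistance3", expert_dictates_each_step=False, expert_can_veto=True),
    Strategy("Tele-Assistance7", expert_dictates_each_step=False, expert_can_veto=False),
]

by_autonomy = [s.name for s in sorted(STRATEGIES, key=lambda s: s.autonomy_rank)]
```

The resulting order, from least to most novice autonomy, is Tele-Operation, Tele-Assistance3, Tele-Assistance7.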

4. Experiments

Three experiments were conducted to observe the effect of the three interactive strategies on the task performance of the novice. Each experiment consisted of the expert collaborating with each novice (a human participant naïve to the purpose of the experiment) under two different interactive strategies. To keep the expert’s level of expertise consistent, we always employed the developer of the CVE as the expert in all experiments. Thus, the expert’s level of expertise did not contribute to the effect of the interactive strategies. In each experiment, we recorded four task performance parameters for every novice and compared these parameters pairwise between the two interactive strategies. These task performance parameters were as follows:

Completion time of the task: It was defined as the period of execution between the first and last operations that the novice performed to accomplish the collaborative task.

Error in performing the task: It was determined by calculating the Euclidean distance between the control points on the manipulator object and the corresponding counterparts on the target object as follows:

$$e = \sqrt{(x_m - x_t)^2 + (y_m - y_t)^2 + (z_m - z_t)^2}$$

where $(x_m, y_m, z_m)$ are the coordinates of each control point on the manipulator object, and $(x_t, y_t, z_t)$ are the coordinates of the corresponding control point on the target object.

Number of operations: It was recorded by counting the number of times that the color of the control points on the manipulator object changed from blue to red.

Overall workload: It was assessed by having each novice complete a valid NASA Task Load Index (TLX) questionnaire [20].
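The first three parameters could be computed from logged data roughly as sketched below. The log formats, the function names, and the choice to average the error over all control points are assumptions made for illustration; the source does not specify how the per-point distances were aggregated.

```python
import math

def completion_time(operation_timestamps):
    """Time between the first and last operations (same unit as the input)."""
    return operation_timestamps[-1] - operation_timestamps[0]

def mean_control_point_error(manipulator_pts, target_pts):
    """Mean Euclidean distance between corresponding control points."""
    if not manipulator_pts or len(manipulator_pts) != len(target_pts):
        raise ValueError("need two non-empty point lists of equal length")
    dists = [math.dist(m, t) for m, t in zip(manipulator_pts, target_pts)]
    return sum(dists) / len(dists)

def count_operations(color_log):
    """Count blue -> red transitions in a chronological list of colors."""
    return sum(
        1 for prev, cur in zip(color_log, color_log[1:])
        if prev == "blue" and cur == "red"
    )

# Hypothetical logged data for one testing session.
elapsed = completion_time([12.0, 30.5, 305.0])
error = mean_control_point_error(
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(0.0, 0.0, 0.03), (1.0, 0.04, 0.0)],
)
n_ops = count_operations(["blue", "red", "blue", "red", "blue"])
```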

We conducted a pilot test prior to commencing each experiment. The pilot test served the following two purposes: to ensure the proper design of the experiment and to estimate the sample size of participants (assuming the role of the novice) needed to attain sufficient statistical power for analyzing each performance parameter. To arrive at a proper experimental design, a three-period cross-over experiment was carried out first, in which each participant performed all three conditions (Tele-Operation, Tele-Assistance3, and Tele-Assistance7) in a single session. This experimental design used the participants as their own control, and every participant was given the same set of conditions, which inherently minimized any variability caused by participants. In the pilot test, one stumbling block encountered with this experimental design was the amount of time required for a participant to complete all the conditions, which surpassed the fatigue limit (i.e., visual fatigue and operation fatigue). On this account, a two-period, two-condition cross-over design was adopted. With this experimental design, a pairwise comparison between conditions was made, so that the varying effect on performance could be observed with different levels of tele-assistance. This experimental design allowed the experiment to be completed within the allotted time of 1.5 h.

The pilot study was also conducted to estimate the sample size of participants for all experiments. The sample size was computed using the equation given by Jones and Kenward [21], which depends on the following quantities: the pooled estimated variance; the z-value at a two-sided significance level of α; the z-value for the given power of the test at β; and the mean difference of the performance parameters between different interaction strategies.

The pilot study provided data for the above parameters. Moreover, for calculating the parameters, it was assumed that the measurements of the task performance parameters followed a normal distribution, and that the task performance of individuals involved in a collaborative task was affected by the interaction strategies.
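To make the role of these four quantities concrete, the sketch below uses a common textbook normal-approximation form, n = 2σ²(z_α + z_β)² / Δ², where σ² is the pooled variance, z_α and z_β are the two z-values, and Δ is the mean difference. This form, its symbols, the default values of α and power, and the sample inputs are all assumptions; the exact expression from Jones and Kenward used by the authors is not reproduced in the text and may differ.

```python
import math
from statistics import NormalDist

def sample_size(pooled_variance, mean_difference, alpha=0.05, power=0.80):
    """Normal-approximation sample size (assumed textbook form, see lead-in)."""
    if mean_difference == 0:
        raise ValueError("mean_difference must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - beta
    n = 2 * pooled_variance * (z_alpha + z_beta) ** 2 / mean_difference ** 2
    return math.ceil(n)

# Hypothetical pilot estimates: unit variance and two candidate mean differences.
n_small_effect = sample_size(pooled_variance=1.0, mean_difference=1.0)
n_large_effect = sample_size(pooled_variance=1.0, mean_difference=2.0)
```

As expected from the formula, a larger mean difference between strategies requires fewer participants for the same power.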

For all experiments, we obtained an ethics clearance following the Canadian Tri-Council guidelines.

4.1. Experiment I: Tele-Operation vs. Tele-Assistance7

This experiment compared the task performance under two interactive strategies: Tele-Operation and Tele-Assistance7.

Participants: There were a total of 22 participants (assuming the role of the novice) between the ages of 20 and 30 years, more than the minimum sample size (11) determined from the pilot test. All participants were naïve to the purpose of the experiment, possessed normal or corrected-to-normal vision with a stereo acuity of at least 40 seconds of arc (determined by using the Randot Stereotest), and had no difficulty in recognizing colors under the Ishihara color-blindness test. As ascertained by using a modified version of the Edinburgh Handedness Inventory [22], they were all strongly right-handed for holding the stylus of the haptic device while performing the task.

Procedure: To decrease the variability among the participants and to counterbalance the inter-effect between both interactive strategies, we adopted a within-participant, cross-over design for the experiment. That is, half of the participants undertook the collaborative task under the Tele-Operation format first and then under the Tele-Assistance7 format, while the other half of the participants performed the task in the reverse order.
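A counterbalanced assignment of this kind can be sketched as follows. The random split, the seed, and the function name `assign_orders` are assumptions for illustration; the source states only that half of the participants followed each order.

```python
import random

def assign_orders(participant_ids, conditions, seed=0):
    """Split participants in half at random; each half gets one condition order."""
    first, second = conditions
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # seeded so the assignment is reproducible
    half = len(ids) // 2
    orders = {}
    for pid in ids[:half]:
        orders[pid] = (first, second)
    for pid in ids[half:]:
        orders[pid] = (second, first)
    return orders

# 22 hypothetical participant IDs, as in Experiment I.
orders = assign_orders(range(1, 23), ("Tele-Operation", "Tele-Assistance7"))
n_tele_operation_first = sum(
    1 for order in orders.values() if order[0] == "Tele-Operation"
)
```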

Under each interactive strategy, every participant underwent six sessions. As a practice session, the first session allowed the participant to become familiar with the CVE and his/her role in the collaborative task. This session used the object of Shape ID: 6, as depicted in Figure 3, as both target and manipulator objects. The remaining five sessions were testing sessions that recorded raw data for computing all performance parameters. In each testing session, one of the objects from Shape ID: 1 to Shape ID: 5, as shown in Figure 3, functioned as both target and manipulator objects. The order of the testing sessions was randomized under each interactive strategy. The use of the six objects decreased the chance of the participant remembering previously viewed manipulator objects, since humans have a short-term memory capacity of approximately four items [23].

In each session, the manipulator object was randomly placed some distance away and reoriented from the starting point. Upon having finished all testing sessions under one interactive strategy, the participant completed a NASA-TLX questionnaire [20] to assess his/her perceived workload of the task. Each participant engaged in the experiment for approximately 1.5 h, including the time needed to complete the NASA-TLX questionnaire. The order of the two interactive strategies was counterbalanced for all participants.
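For readers unfamiliar with NASA-TLX scoring, the sketch below shows the standard procedure: six subscales are each rated from 0 to 100, and the overall workload is either the weighted mean (weights from 15 pairwise comparisons, summing to 15) or the unweighted mean known as raw TLX. Which variant the authors used is not stated in the source, and the sample ratings and weights are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def tlx_overall(ratings, weights=None):
    """Overall NASA-TLX workload on a 0-100 scale.

    With weights (tallies from the 15 pairwise comparisons) the weighted
    score is returned; without them, the raw (unweighted) mean is returned.
    """
    if weights is None:
        return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15

# Hypothetical responses from one participant.
ratings = {"mental": 70, "physical": 40, "temporal": 50,
           "performance": 30, "effort": 60, "frustration": 20}
weights = {"mental": 5, "physical": 1, "temporal": 3,
           "performance": 2, "effort": 3, "frustration": 1}

weighted_score = tlx_overall(ratings, weights)
raw_score = tlx_overall(ratings)
```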

Data analyses and results: Data analyses used the Wilcoxon signed-rank test to examine whether the interactive strategies had any significant effect on each performance parameter. A p-value less than 0.05 indicated statistical significance, as in most studies on human performance.
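The Z statistic of a Wilcoxon signed-rank test can be obtained with the usual normal approximation, as in the minimal sketch below. It applies no tie or continuity correction, and the paired values are hypothetical; the source does not state which software or corrections were used.

```python
import math

def wilcoxon_z(x, y):
    """Z statistic of the Wilcoxon signed-rank test (normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank the absolute differences, averaging the ranks of tied values.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w - mean_w) / sd_w

# Hypothetical completion times (min) for five participants under two strategies.
z = wilcoxon_z([4.8, 4.9, 5.0, 4.7, 4.6], [7.1, 8.0, 6.5, 9.0, 7.7])
```

Under this approximation, 22 pairs whose differences all share the same sign give Z ≈ −4.107, which is the most extreme value attainable for that sample size.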

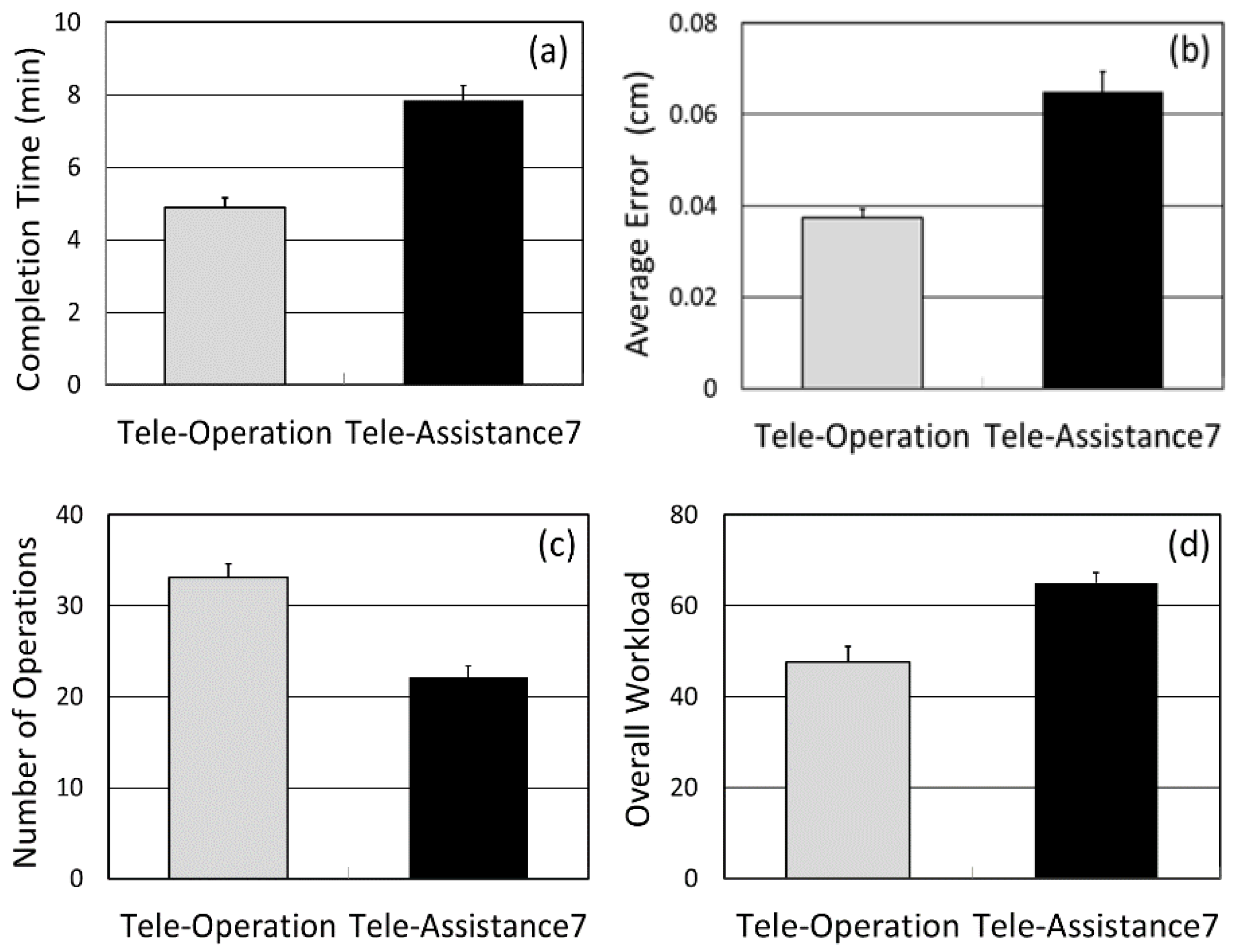

On average, the participants took less time to complete the collaborative task under Tele-Operation (4.88 ± 0.07 min) than under Tele-Assistance7 (7.85 ± 1.90 min), as shown in Figure 5a. The difference in completion time between Tele-Operation and Tele-Assistance7 was statistically significant (Z = −4.107; p < 0.05). As illustrated in Figure 5b, the participants made more accurate operations under Tele-Operation, with an error of 0.037 ± 0.002 cm, than under Tele-Assistance7, with an error of 0.065 ± 0.004 cm. A Wilcoxon signed-rank test confirmed that the participants attained a greater degree of precision under Tele-Operation than under Tele-Assistance7 (Z = −4.010; p < 0.05). On average, the participants took a greater number of operations under Tele-Operation (33.10 ± 1.51 steps) than under Tele-Assistance7 (22.15 ± 1.22 steps), as revealed in Figure 5c. This difference in the number of operations was also statistically significant (Z = −3.913; p < 0.05). In addition, the overall workload perceived by the participants differed between Tele-Operation and Tele-Assistance7. Specifically, the participants perceived a lower workload under Tele-Operation (47.67 ± 3.35) than under Tele-Assistance7 (65.02 ± 2.30), as depicted in Figure 5d. The difference between these workloads was statistically significant (Z = −3.866; p < 0.05).

Discussion: In executing the task, the mental computation of the novice could play an important role in the completion time of the task. Under Tele-Operation, the novice was to some extent required to interpret the expert’s intention for every operation but had no need to plan the course of operations. That is, under this format the expert shouldered the whole burden of the planning. Fortunately, the expert (the developer of the CVE) was familiar with the task and undertook the planning before the experiment. Thus, the completion time of the task depended heavily upon the length of each novice’s mental computation of the operations. Under Tele-Assistance7, however, the novice had to understand what needed to be done and to compute the shortest distance between the selected control point on the manipulator object and the reference point. This certainly elevated the demand on the novice’s mental computation and, in turn, resulted in a longer time required for the computation. In other words, the expert and novice shared the burden of planning the course of operations. Therefore, the completion time of the task was shorter under Tele-Operation than under Tele-Assistance7, mirroring the lower workload of the novice under Tele-Operation than under Tele-Assistance7.

Additionally, the error in performing the task was smaller under Tele-Operation than under Tele-Assistance7. This may result from the precision of the operations commanded by the expert under Tele-Operation. Despite resulting in more time and larger errors in performing the task, Tele-Assistance7 had an advantage in facilitating the collaboration. The novice was able to complete the task with fewer operations under Tele-Assistance7 than under Tele-Operation. This implies that the breakdown of the task into sub-goals, as explained in Section 3, permitted the novice a certain autonomy in finding an efficient way of completing the task. Under Tele-Operation, the number of steps decomposed by the expert was not the minimum needed to accomplish the task, whereas under Tele-Assistance7 the set of sub-goals comprised the key operations for achieving the final goal. Under Tele-Assistance7, on one hand the expert expressed his intention through a series of sub-goals and communicated these sub-goals via a high-level symbolic language that used the widget indicator, the selected control point on the manipulator object, and the reference point; on the other hand, the novice used the haptic device as a 6 DOF input device and was able to perform fine movements in 3D space. Consequently, the novice could find relatively optimal sequences amongst many alternatives for each sub-goal.

4.2. Experiment II: Tele-Operation vs. Tele-Assistance3

This experiment compared the effect of Tele-Operation on performing the collaborative task to that of Tele-Assistance3. In Experiment II, we utilized the same CVE and devices as those in Experiment I.

Participants: A total of 16 participants took part, each assuming the role of the novice, according to the sample size determined from a pilot study. One of them experienced a behavior disorder during the experiment, and the data collected from this participant could not be used. Although in the same age group as the participants in Experiment I, none of these participants had taken part in Experiment I. Similarly, all of them were naïve to the purpose of Experiment II. They underwent the same vision and handedness tests as those in Experiment I.

Procedure: The procedure of Experiment II was the same as that of Experiment I, except that the participants performed the task under Tele-Assistance3 rather than Tele-Assistance7. We recorded and computed the same four performance parameters.

Data analyses and results: We employed the same data analyses as in Experiment I. We found that the average completion time under Tele-Operation (4.40 ± 0.22 min) was shorter than under Tele-Assistance3 (6.11 ± 0.21 min), as shown in Figure 6a. The difference in completion time between Tele-Operation and Tele-Assistance3 was statistically significant (Z = −3.351; p < 0.05). As illustrated in Figure 6b, Tele-Operation yielded an average error of 0.03 ± 0.01 cm, whereas Tele-Assistance3 resulted in an average error of 0.05 ± 0.01 cm. The difference between these errors was statistically significant (Z = −3.237; p < 0.05). For the number of operations, the participants took, on average, 12.01 ± 0.42 steps under Tele-Operation and 5.11 ± 0.25 steps under Tele-Assistance3, a significant difference (Z = −3.412; p < 0.05), as seen in Figure 6c. However, the participants perceived the overall workload to be at a similar level for both Tele-Operation (57.09 ± 3.73) and Tele-Assistance3 (58.47 ± 3.00). There was no statistically significant difference in the perceived workload between the two formats (Z = −0.350; p > 0.05). As depicted in Figure 6d, the finding of the overall workload in Experiment II departed from that in Experiment I.
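The Z statistics reported above come from the analysis described for Experiment I. As a rough illustration only, and assuming that analysis is a paired Wilcoxon signed-rank test with a normal approximation (an assumption; this passage does not name the test), such a statistic could be computed as sketched below. The function name `wilcoxon_signed_rank_z` and the sample values are hypothetical and are not the study's data.

```python
import math

def wilcoxon_signed_rank_z(x, y):
    """Z statistic (normal approximation) for paired samples x and y.

    Assumed procedure for illustration; no tie or continuity correction
    is applied to the variance.
    """
    # Paired differences; zero differences are dropped, as is conventional.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    # Sum of ranks belonging to positive differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w_plus - mean) / sd

# Hypothetical completion times (min) for five participants under two strategies.
time_a = [4, 5, 4, 6, 5]
time_b = [6, 6, 7, 7, 9]
z_example = wilcoxon_signed_rank_z(time_a, time_b)
```

Under this assumed test, 15 pairs that all differ in the same direction give Z ≈ −3.41 without tie correction, which is the most extreme value the approximation can produce for that sample size.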

Discussion: Compared to Tele-Assistance3, Tele-Operation permitted the novice to complete the collaborative task in less time and with smaller errors, but with a greater number of operations. These observations were similar to those in Experiment I, which compared Tele-Operation with Tele-Assistance7. However, the expert was able to override the novice’s operations under Tele-Assistance3, an ability that was absent under Tele-Assistance7. It seemed that this ability did not affect the capabilities of either expert or novice in maneuvering the manipulator object while performing the collaborative task. Interestingly, there was no significant difference in the overall workload perceived by the novice between Tele-Operation and Tele-Assistance3. The novice followed the commands directed by the expert under Tele-Operation, whereas he/she had to do more under Tele-Assistance3: interpreting the intentions of the expert, devising his/her own plan of operations accordingly, and executing the plan. The overriding ability of the expert reduced the novice’s degree of autonomy. This might have prevented the novice from taking his/her responsibility seriously in terms of the perceived need to ensure the success of his/her operations. Consequently, the novice perceived the overall workload under Tele-Assistance3 to be the same as under Tele-Operation.

4.3. Experiment III: Tele-Assistance3 vs. Tele-Assistance7

This experiment compared the effect of Tele-Assistance7 on performing the collaborative task with that of Tele-Assistance3. We used the same CVE and devices as those for Experiment I and Experiment II.

Participants: There were a total of 18 participants (assuming the role of the novice), according to the sample size determined from a pilot study. All participants were in the same age group as those in Experiment I and were naïve to the purpose of the experiment. They underwent the same vision and handedness tests as those in Experiment I. However, these 18 participants differed from their counterparts in Experiment I and Experiment II.

Procedure: The procedure of Experiment III was the same as that of Experiment I, with the exception that it followed the format of Tele-Assistance3 rather than Tele-Operation. We recorded and computed the same four performance parameters.

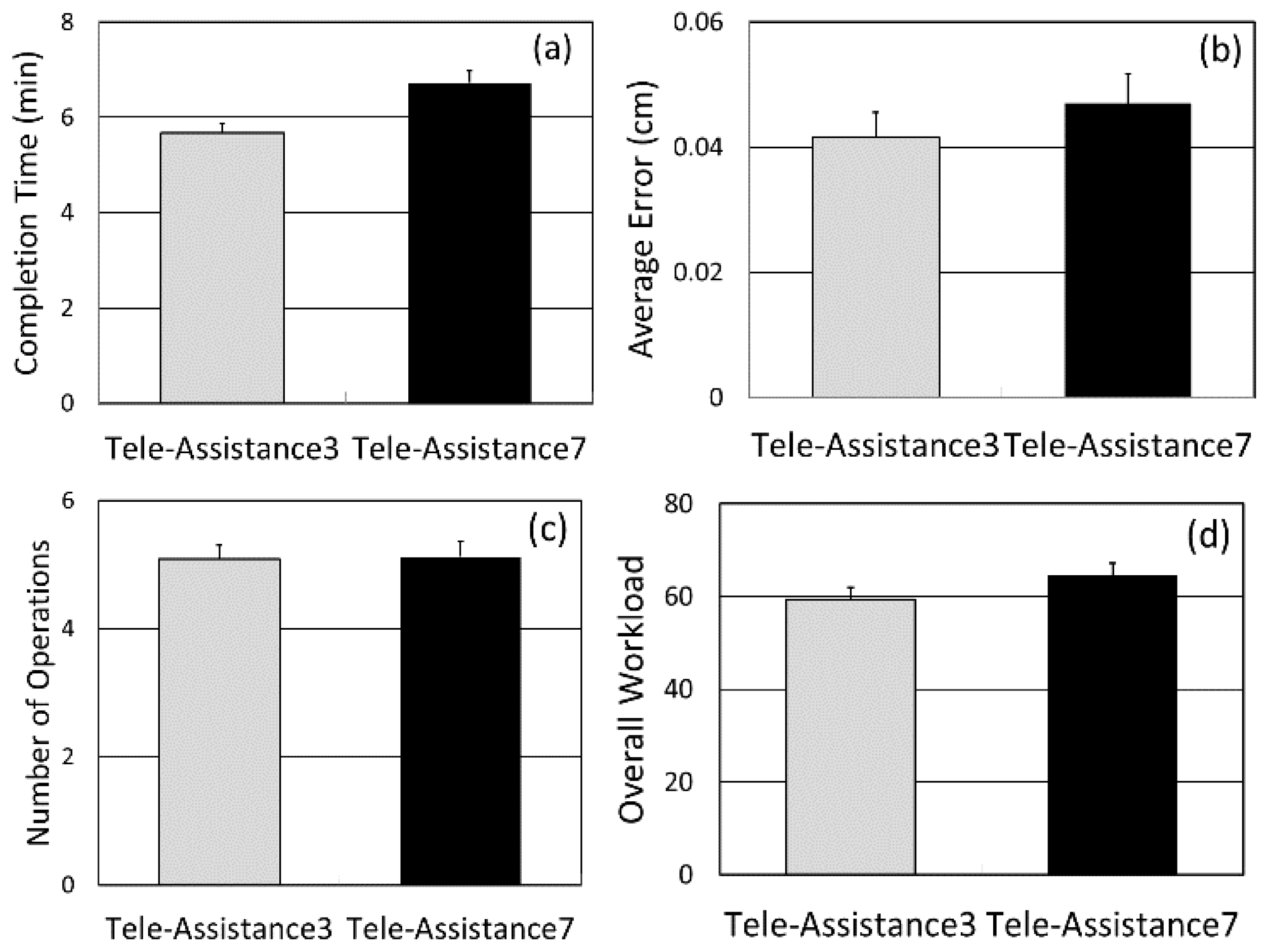

Data analyses and results: The same data analyses were implemented as in Experiment I and Experiment II. Under Tele-Assistance3, the participants took less time to complete the task than under Tele-Assistance7. As shown in Figure 7a, the participants took, on average, 5.77 ± 0.21 min to finish the task under Tele-Assistance3, compared to 6.70 ± 0.25 min under Tele-Assistance7. These interactive strategies yielded a significant difference in the completion time (Z = −2.461; p < 0.05). However, the errors in performing the task were not significantly different between the two interactive strategies (Z = −0.980; p > 0.05). As illustrated in Figure 7b, Tele-Assistance3 yielded an error of 0.045 ± 0.004 cm, whereas Tele-Assistance7 generated an error of 0.051 ± 0.004 cm. Similarly, the interactive strategies produced no significant difference in the number of operations (Z = −0.047; p > 0.05). On average, the participants executed 5.09 ± 0.22 operations under Tele-Assistance3 and 5.14 ± 0.24 operations under Tele-Assistance7, as shown in Figure 7c. Moreover, the participants perceived the overall workload to be lower under Tele-Assistance3 (59.38 ± 2.52) than under Tele-Assistance7 (64.56 ± 2.57), as revealed in Figure 7d. This difference in the overall workload was statistically significant (Z = −2.580; p < 0.05).

Discussion: This experiment examined the task performance when the novice performed the collaborative task with the expert under different degrees of autonomy. Performance under Tele-Assistance3 and Tele-Assistance7 was similar in some respects but differed distinctly in others. The shorter time needed to complete the task under Tele-Assistance3 than under Tele-Assistance7 might imply that the added overriding ability of the expert helped the novice to finish the collaborative task faster. This added ability had no significant impact on the number of operations used by the novice or on the errors made while performing the task. This finding indicates that the high-level, symbolic communication used in the CVE aided in transmitting commands and maneuvering the manipulator object efficiently. The expert’s overriding ability helped to reduce the overall workload perceived by the novice, because of his/her decreased autonomy (and responsibility). The novice might translate this decreased sense of responsibility into an ability to understand the expert’s intention and perform the task with ease.

5. General Discussion and Application

We conducted three experiments to compare the effects of three interactive strategies on the task performance.

Table 1 summarizes the four parameters used to measure the task performance under each interactive strategy. The values of these parameters were averaged from their counterparts in the three experiments. Generally, Tele-Operation allowed the novice to complete the task in the shortest time and with the smallest errors, compared to both Tele-Assistance3 and Tele-Assistance7. The novice used far fewer operations and carried more workload under Tele-Assistance7 than under Tele-Operation. Tele-Assistance3 fell between Tele-Operation and Tele-Assistance7 for these parameters, except that its number of operations was comparable to that of Tele-Assistance7. These observations suggest that interactive strategies affect the task performance of the novice in a collaborative task. This is associated with the novice’s level of autonomy in performing the task: a higher level of autonomy reflects a higher level of responsibility, resulting in a higher perceived workload, a longer completion time, and larger errors in performing the task. Nevertheless, the higher level of responsibility yields a lower number of operations, and hence greater efficiency.
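To make the averaging step concrete, the sketch below combines the Tele-Assistance3 means reported for Experiments II and III in Section 4. It assumes an unweighted mean of the two per-experiment means; the text does not state whether the averaging was weighted by the number of participants, so the results need not match Table 1 exactly. The names `ta3_reported` and `average_across_experiments` are illustrative.

```python
# Means reported in Section 4 for Tele-Assistance3 (Experiment II, Experiment III).
ta3_reported = {
    "completion_time_min": (6.11, 5.77),
    "error_cm": (0.05, 0.045),
    "operations": (5.11, 5.09),
    "workload": (58.47, 59.38),
}

def average_across_experiments(values):
    """Unweighted mean of per-experiment means (an assumed averaging rule)."""
    return sum(values) / len(values)

# One averaged value per performance parameter.
ta3_summary = {
    name: average_across_experiments(values)
    for name, values in ta3_reported.items()
}
```

Under this assumption, Tele-Assistance3 averages roughly 5.94 min, 0.0475 cm, 5.10 operations, and a workload of about 58.9.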

To summarize, these results suggest that, when choosing an interaction strategy to facilitate collaboration, one should consider the different parameters that can affect the performance of the collaborative task. The above evaluation of the effect of different interaction strategies on these parameters allows one to select the interaction strategy best suited to accomplishing a given collaborative task. If a short completion time and/or small errors are the key parameters, Tele-Operation is a suitable interactive strategy. For example, this strategy can be used in helping children with dysgraphia to develop handwriting skills. By utilizing Tele-Operation, a teacher (expert) can guide a student with dysgraphia (novice) step-by-step through various tracing tasks in order to help the student develop the fine motor movements required for handwriting. In contrast, Tele-Assistance7 shows the practicality of tele-assistance. Although Tele-Assistance7 requires more time and produces larger errors in completing the collaborative task than Tele-Operation, the novice deploys fewer operations and thus performs the task more efficiently. Thus, Tele-Assistance7 can relieve some of the burden in the communication between the expert and the novice. This strategy would be suitable for performing a maintenance task involving both a remote expert and a field worker. The reduced number of operations would decrease the maintenance cost if expensive equipment is required in such maintenance. Tele-Assistance3 could be used for a collaborative task in which the number of operations is the key parameter and the other parameters need to be balanced. This strategy could be used to train surgical trainees in proper techniques: a skilled surgeon could give instructions to a trainee to perform a surgical task, and the trainee would devise the actions of the task. By observing how the trainee undertakes the task, the surgeon could intervene in (and veto) the trainee’s actions in order to guide them directly. In short, our assessment of three interactive strategies offers an analytical base for tailoring these strategies to certain collaborative tasks in the future.
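The selection guidance above can be read as a simple decision rule. The sketch below is one possible encoding of that reading, not a procedure given by the study; the function name `recommend_strategy`, the parameter labels, and the `balance_other_parameters` flag are hypothetical.

```python
def recommend_strategy(key_parameters, balance_other_parameters=False):
    """Suggest an interactive strategy from the parameters that matter most.

    key_parameters: iterable of labels such as "completion_time", "error",
    or "operations" (hypothetical labels chosen for this sketch).
    """
    keys = set(key_parameters)
    # Short completion time and/or small errors favor Tele-Operation.
    if keys & {"completion_time", "error"}:
        return "Tele-Operation"
    # Few operations favor tele-assistance; Tele-Assistance3 when the other
    # parameters must also be balanced, otherwise Tele-Assistance7.
    if "operations" in keys:
        if balance_other_parameters:
            return "Tele-Assistance3"
        return "Tele-Assistance7"
    # No guidance in the text for other priorities.
    return None
```

For instance, a remote maintenance task that prioritizes the number of operations would map to Tele-Assistance7 under this reading, while a surgical training task that also needs the other parameters balanced would map to Tele-Assistance3.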