Case-Based FCTF Reasoning System

Abstract

1. Introduction

2. Preliminaries

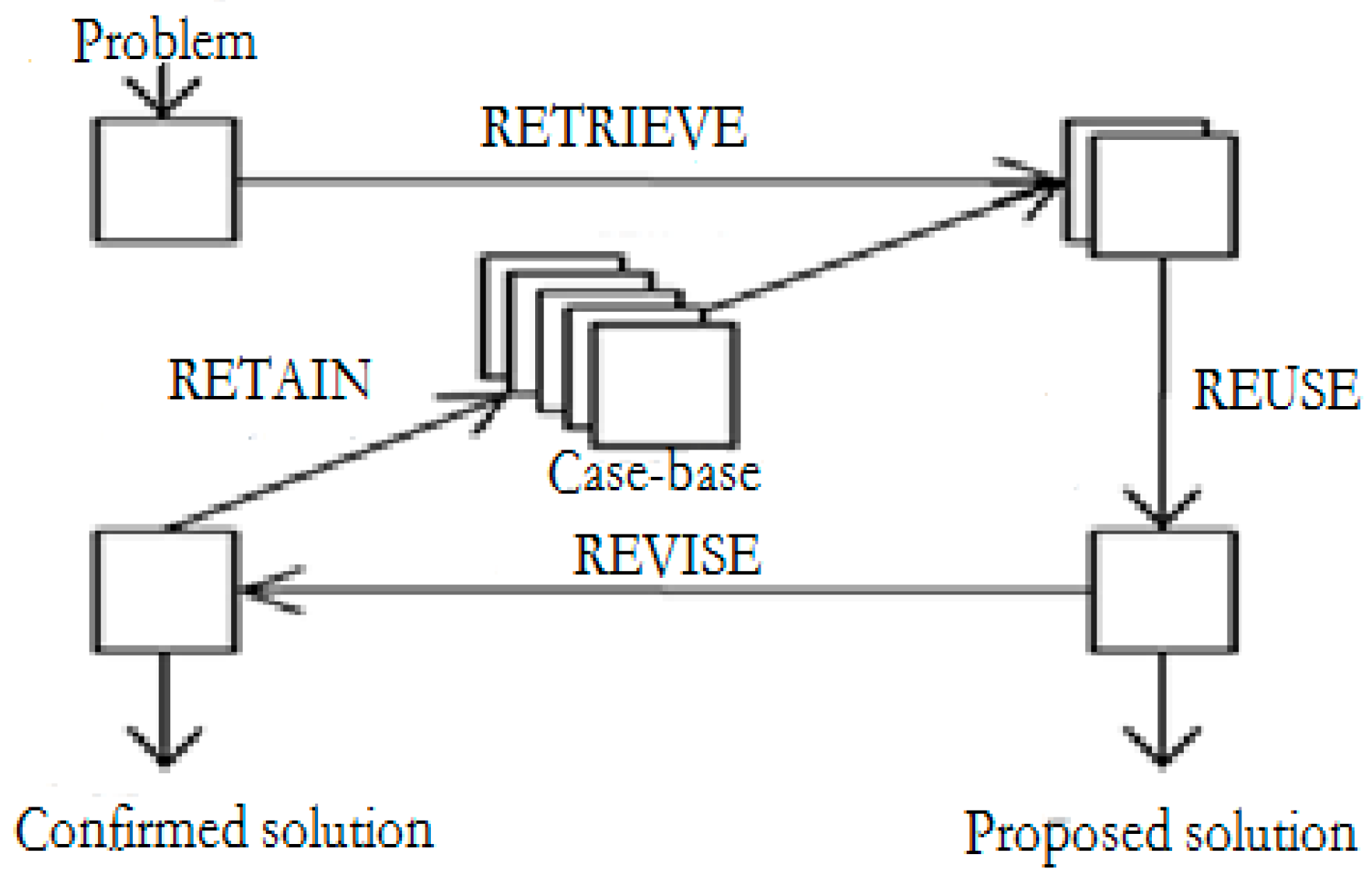

2.1. Case-Based Reasoning

| Examples/Features | X1 | X2 | X3 | X4 | X5 | Xi | Xn |
|---|---|---|---|---|---|---|---|
| e1 | e1(X1) | e1(X2) | e1(X3) | e1(X4) | e1(X5) | e1(Xi) | e1(Xn) |
| e2 | e2(X1) | e2(X2) | e2(X3) | e2(X4) | e2(X5) | e2(Xi) | e2(Xn) |
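The table above can be read as a lookup structure: each example e_i stores one value e_i(X_j) per feature X_j. The following is a minimal sketch of that representation with a generic match-counting retrieval step. It is not the FCTF procedure itself; the feature values and the names `case_base`, `count_matches`, and `retrieve` are hypothetical and chosen only for illustration.

```python
# Hypothetical case base: each example e_i maps feature names X_j to values e_i(X_j).
case_base = {
    "e1": {"X1": "a", "X2": "b", "X3": "c"},
    "e2": {"X1": "a", "X2": "d", "X3": "e"},
}


def count_matches(case, query):
    """Count the query features whose value equals the value stored in the case."""
    return sum(1 for feature, value in query.items() if case.get(feature) == value)


def retrieve(cases, query):
    """Return the name of the stored case that shares the most feature values with the query.

    Ties are resolved in favour of the case that was inserted first.
    """
    return max(cases, key=lambda name: count_matches(cases[name], query))


best = retrieve(case_base, {"X1": "a", "X2": "d"})
```

A query may specify only some of the features; unspecified features simply do not contribute to the match count.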

2.2. Occam’s Razor

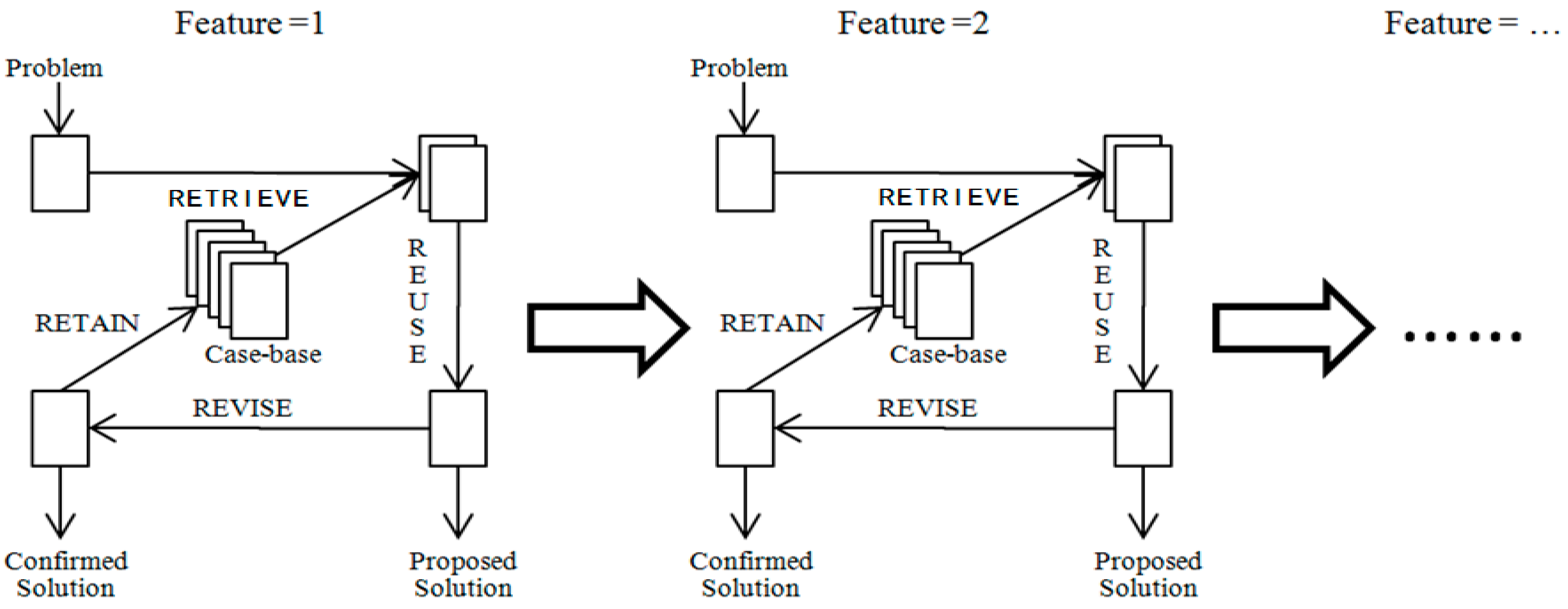

3. Case-Based “FCTF” Reasoning System

3.1. Case-Based Reasoning Problem Definition

3.2. Case-Based “FCTF” Reasoning System

4. Experiments and Analysis

4.1. Data Collection

4.2. Experimental Section

| Atmospheric Pressure | | Dry and Wet Bulb Temperature | | Relative Humidity | | Wind Speed | |
|---|---|---|---|---|---|---|---|
| Rating | Value (hPa) | Rating | Value (°C) | Rating | Value (%) | Rating | Value (MPH) |
| Moderate | >940 | Lowest | <−10 | Dry | [0,30) | Calm | (0,2) |
| Lower slightly | [930,940) | Lower | [−10,5) | Less dry | [30,50) | Light Air | [2,4) |
| | | Moderate | [5,30) | Less humid | [50,70) | Light Breeze | [4,7) |
| Lower | [920,930) | Higher | [30,45) | | | Gentle Breeze | [7,11) |
| Lowest | <920 | Highest | >45 | Humid | [70,100) | Moderate Breeze | [11,17) |
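The rating table amounts to a discretization of continuous measurements into ordered categories. A minimal sketch of that mapping follows, using only the relative humidity and wind speed columns, whose intervals are contiguous in the table. The function name `rate` and the `lower_open` flag are assumptions introduced here, not part of the original system.

```python
from bisect import bisect_right

# Interval edges and ratings copied from the table; each rating covers [lower, upper).
HUMIDITY_EDGES = [0, 30, 50, 70, 100]
HUMIDITY_RATINGS = ["Dry", "Less dry", "Less humid", "Humid"]

WIND_EDGES = [0, 2, 4, 7, 11, 17]
WIND_RATINGS = ["Calm", "Light Air", "Light Breeze", "Gentle Breeze", "Moderate Breeze"]


def rate(value, edges, ratings, lower_open=False):
    """Return the rating whose interval contains value, or None if no interval does.

    lower_open=True excludes the smallest edge itself, matching the (0,2) wind interval.
    """
    if value < edges[0] or value >= edges[-1]:
        return None
    if lower_open and value == edges[0]:
        return None
    # bisect_right counts the edges <= value, so subtracting 1 gives the interval index.
    return ratings[bisect_right(edges, value) - 1]


humidity_rating = rate(65, HUMIDITY_EDGES, HUMIDITY_RATINGS)
wind_rating = rate(2, WIND_EDGES, WIND_RATINGS, lower_open=True)
```

Values outside every listed interval, such as a relative humidity of exactly 100% or a wind speed of 0 MPH, return `None` instead of being forced into a neighbouring rating.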

| Experiment Number | Input Conditions | | | |
|---|---|---|---|---|
| | Atmospheric Pressure | Dry and Wet Bulb Temperature | Relative Humidity | Wind Speed |
| 1 | × | ○ | ○ | ○ |
| 2 | × | × | ○ | ○ |
| 3 | × | × | × | ○ |
| 4 | × | × | × | × |

| Precipitation Rating | Precipitation Value (mm) |
|---|---|
| Light rain | (0,10.0) |
| Moderate rain | [10.0,24.9) |
| Heavy rain | [24.9,49.9) |
| Rainstorm | [49.9,99.9) |
| Heavy rainstorm | [99.9,249.0) |
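Because the five precipitation ratings are ordered, a rating can be treated as a level from 1 to 5, and two ratings can be compared by their level difference. The sketch below illustrates this under two assumptions that are not stated in the table: levels are numbered 1 (light rain) to 5 (heavy rainstorm), and the signed error is taken as predicted level minus observed level. The names `precipitation_level` and `level_error` are hypothetical.

```python
from bisect import bisect_right

# Interval edges in mm, copied from the precipitation table.
PRECIP_EDGES = [0.0, 10.0, 24.9, 49.9, 99.9, 249.0]
PRECIP_RATINGS = ["Light rain", "Moderate rain", "Heavy rain", "Rainstorm", "Heavy rainstorm"]


def precipitation_level(mm):
    """Return the 1-based level of a precipitation amount, or None if the table does not rate it."""
    # The first interval (0,10.0) is open at 0 and the last one ends before 249.0.
    if mm <= PRECIP_EDGES[0] or mm >= PRECIP_EDGES[-1]:
        return None
    return bisect_right(PRECIP_EDGES, mm)


def level_error(predicted_mm, observed_mm):
    """Signed difference in levels (assumed convention: predicted minus observed)."""
    predicted = precipitation_level(predicted_mm)
    observed = precipitation_level(observed_mm)
    if predicted is None or observed is None:
        return None
    return predicted - observed


rating = PRECIP_RATINGS[precipitation_level(30.0) - 1]
error = level_error(5.0, 120.0)
```

With five levels, the signed difference can range from −4 to 4 levels.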

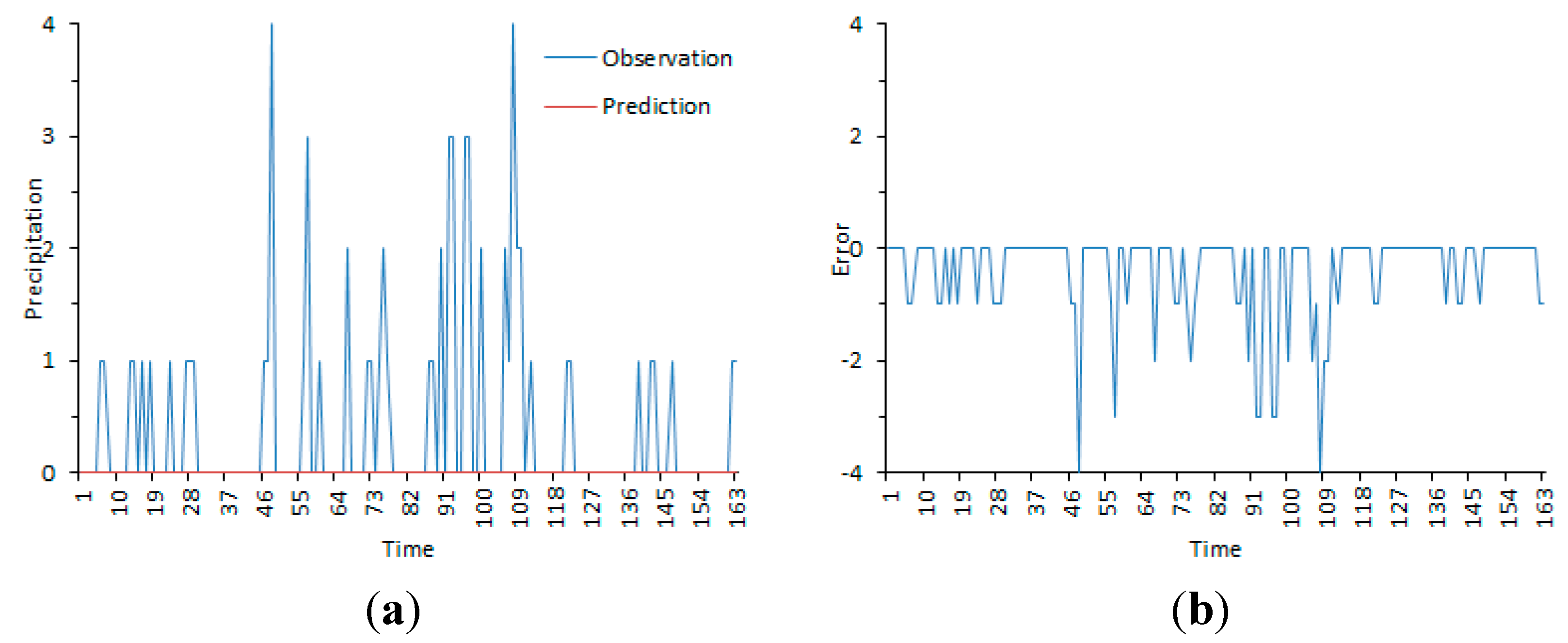

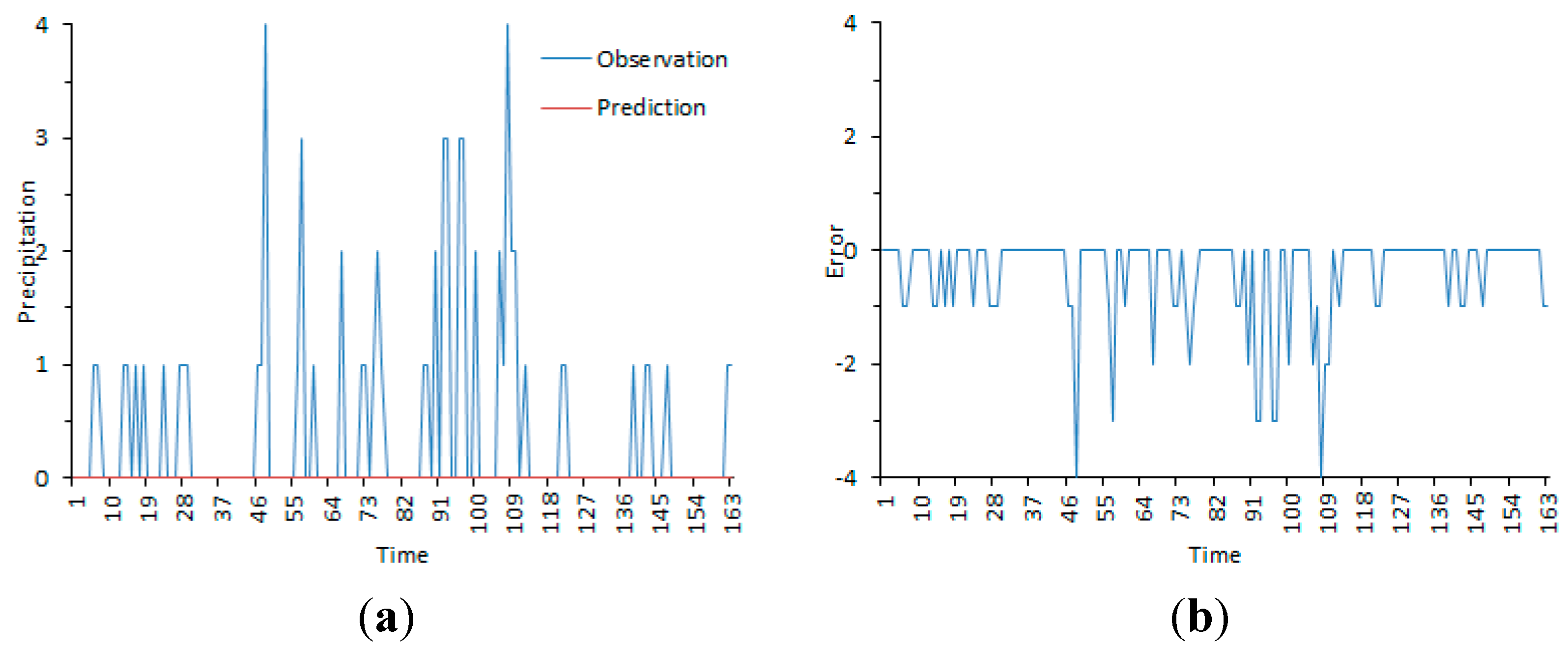

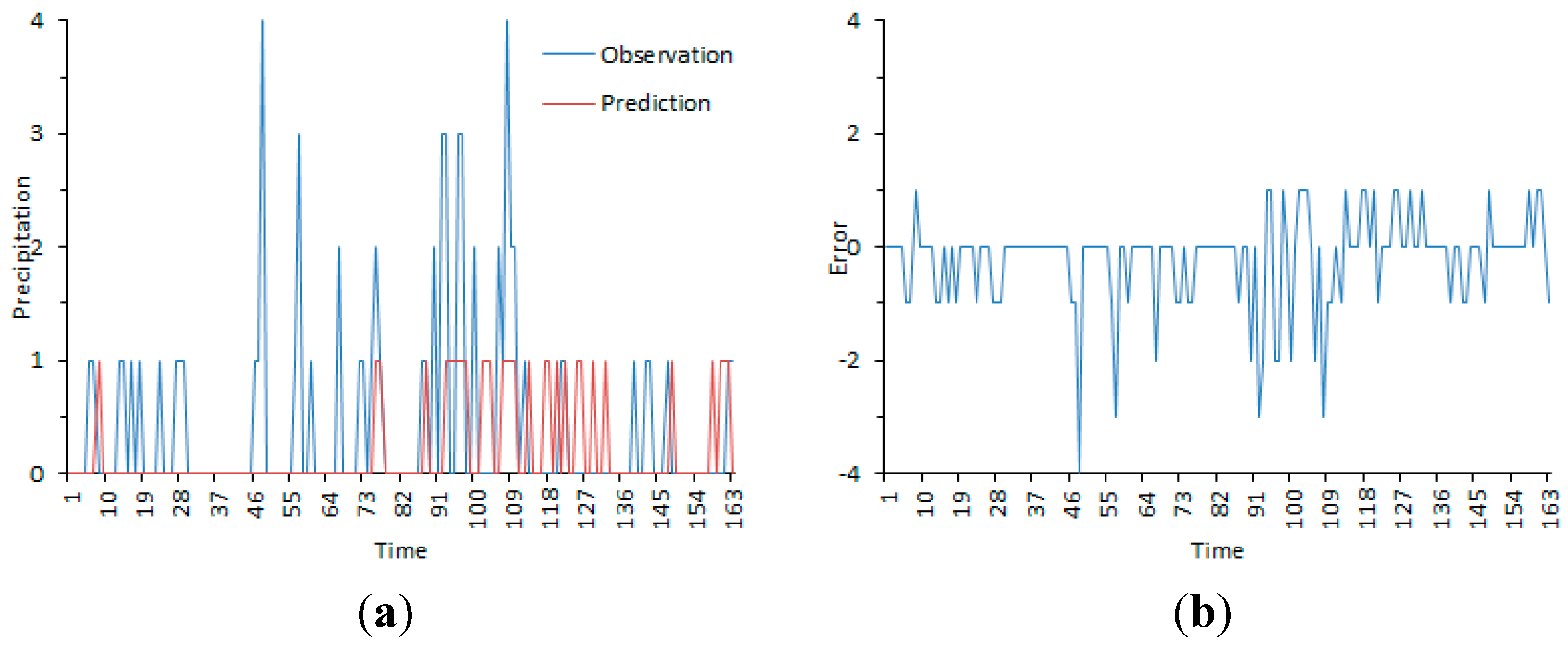

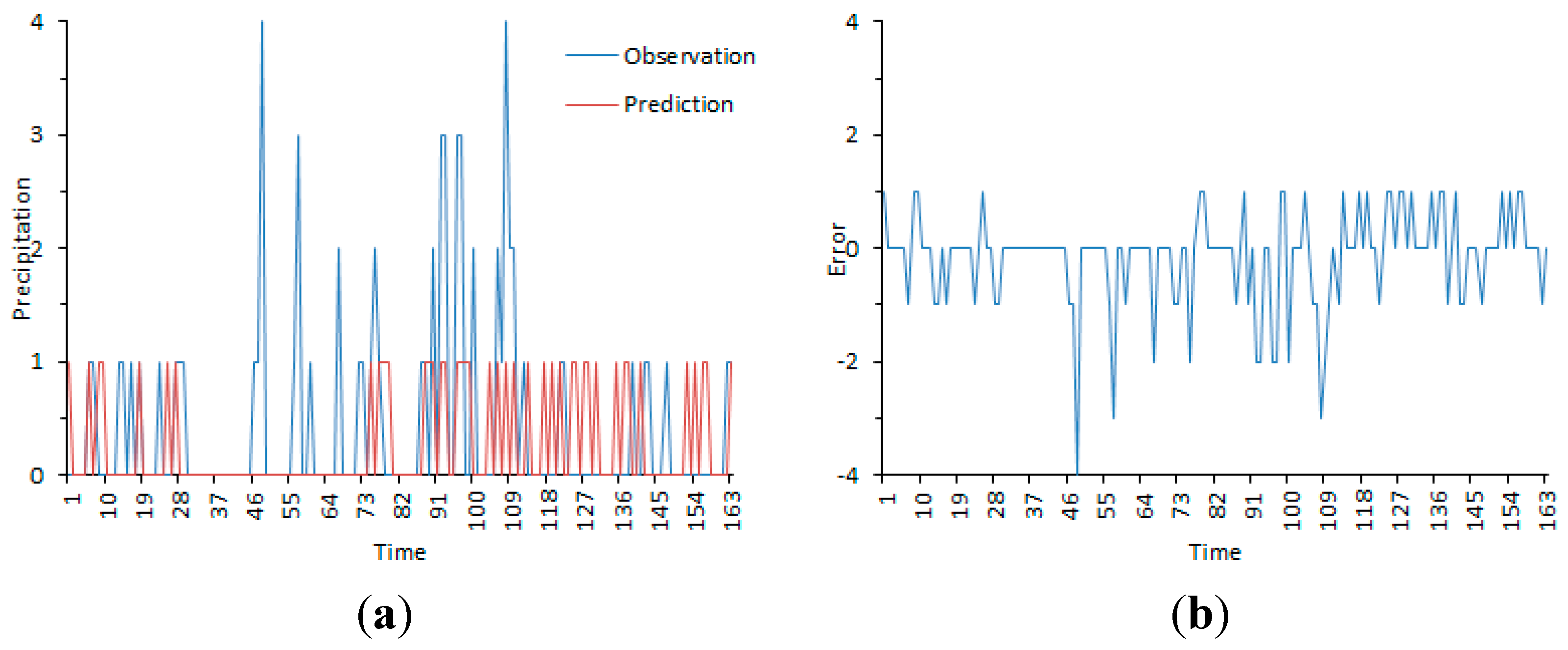

4.3. Experiment Results and Error Analysis

| Experiment Number | Number of Input Conditions | Minimum Error | Maximum Error | Minimum \|Error\| | Maximum \|Error\| | Average \|Error\| | Hit Rate |
|---|---|---|---|---|---|---|---|
| 1 | 1 | −4 levels | 0 level | 0 level | 4 levels | 1.52 levels | 0.00 |
| 2 | 2 | −4 levels | 0 level | 0 level | 4 levels | 1.52 levels | 0.00 |
| 3 | 3 | −4 levels | 1 level | 0 level | 4 levels | 1.25 levels | 0.27 |
| 4 | 4 | −4 levels | 1 level | 0 level | 4 levels | 1.16 levels | 0.36 |
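The error columns of the table are summary statistics over per-case errors measured in rating levels. As a minimal sketch, the following computes the same five statistics from a list of signed level errors. The function name `summarize_errors` and the sample data are hypothetical and are not the experimental results. The hit rate is left out because this section does not state how it is defined.

```python
def summarize_errors(errors):
    """Summarize a non-empty list of signed level errors (integers)."""
    abs_errors = [abs(e) for e in errors]
    return {
        "min_error": min(errors),
        "max_error": max(errors),
        "min_abs_error": min(abs_errors),
        "max_abs_error": max(abs_errors),
        "mean_abs_error": sum(abs_errors) / len(abs_errors),
    }


# Hypothetical signed errors for four test cases, for illustration only.
summary = summarize_errors([-4, 0, 1, -1])
```

The signed statistics show the direction of the largest deviations, while the absolute statistics show their size regardless of direction.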

4.4. Analysis of Our System

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Lu, J.; Zhang, X.; Li, P.; Zhu, Y. Case-Based FCTF Reasoning System. Appl. Sci. 2015, 5, 825-839. https://doi.org/10.3390/app5040825
