1. Introduction

The number of digital images has increased significantly with the popularization of imaging devices. Because image quality is often degraded by noise during acquisition or transmission, image-denoising technology, which aims to remove noise while preserving image detail [1], has been used in various high-level visual tasks such as object detection [2], image generation [3], and semantic segmentation [4]. In most studies [5,6,7,8,9], degradation by noise is modeled as additive Gaussian white noise (AGWN) [10]. Its expression is:

$$y = x + n$$

where y represents the corrupted image, x denotes the undistorted image, and n denotes additive noise that obeys a Gaussian distribution. Various denoising algorithms [11,12,13,14,15,16] with different levels of performance have been proposed, and they can be divided into three categories: filtering-based, model-based, and learning-based.
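The additive noise model described above (corrupted image equals clean image plus Gaussian noise) can be simulated in a few lines. The following is a minimal sketch rather than the procedure used in this study: the function name `add_gaussian_noise`, the [0, 255] intensity scale, and the constant test image are assumptions made for illustration.

```python
import numpy as np

def add_gaussian_noise(clean, sigma, seed=None):
    """Return y = x + n, where n ~ N(0, sigma^2) is drawn independently per pixel.

    `clean` is assumed to be a float array on the [0, 255] intensity scale,
    and `sigma` is the noise standard deviation on that same scale.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(loc=0.0, scale=sigma, size=clean.shape)
    return clean + noise

# Hypothetical 64x64 grayscale image (a constant mid-gray patch).
x = np.full((64, 64), 128.0)
y = add_gaussian_noise(x, sigma=25.0, seed=0)

# The residual y - x is the noise realization n.
n = y - x
print("noise mean:", n.mean(), "noise std:", n.std())
```

The result is deliberately not clipped to [0, 255], so that `y - x` recovers the noise exactly; whether to clip or quantize afterwards depends on the evaluation protocol.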

Non-local means (NLM) [17], block-matching and 3D filtering (BM3D) [18], and weighted nuclear norm minimization (WNNM) [19] represent the standard filter-based denoising algorithms, which exploit the intrinsic statistics of image patches for denoising. Model-based methods, including nonlocally centralized sparse representation (NCSR) [20] and multivariate sparse regularization (MUSR) [21], typically formulate denoising as an optimization problem using the maximum a posteriori (MAP) approach. Although these methods can significantly reduce image noise, they suffer from low computational efficiency, over-smoothing, and difficult parameter selection. Compared with conventional methods, learning-based approaches achieve better noise fitting and denoising performance by extracting shallow pixel-level features and deep semantic features during training. Based on whether clean images are needed to train the models, learning-based methods can be categorized into two groups: supervised and unsupervised.

In 2017, Zhang et al. [22] proposed a denoising convolutional neural network called DnCNN, which can be regarded as a milestone among supervised denoising models. Unlike many earlier discriminative denoising models, DnCNN utilized residual learning [23] and batch normalization [24] to expedite training and achieved superior performance with a simpler architecture. Nevertheless, the model cannot achieve the desired results when the noise level is unknown. To improve adaptability to diverse noise levels, Zhang et al. proposed a fast and flexible denoising network (FFDNet) [25]. It handles a wide range of noise levels effectively and efficiently by taking an adjustable noise level map as an input. However, the generalization ability of FFDNet under complex noise still leaves room for improvement. Guo et al. combined synthetic images with realistic noise and real images that are practically noiseless to train their convolutional blind denoising network (CBDNet) [26], which includes a noise level estimation subnetwork. This improved the generalization ability of the model significantly. However, interactive denoising and separate subnetworks degrade the model's efficiency and flexibility, and are not conducive to finding the model's optimal solution. Recently, numerous innovative network architectures have been introduced and have achieved the desired effect. For example, RIDNet [8], proposed by Anwar et al., applied feature attention to denoising and used a modular architecture to alleviate vanishing gradients while improving performance.

However, the CNN-based denoisers mentioned above frequently suffer from a limited receptive field and lack long-range pixel perception. The Transformer [27], which was originally applied in natural language processing, has since been extended to visual tasks. In 2021, Liang et al. [28] proposed a Transformer-based model called SwinIR for image restoration, which achieved local attention and cross-window interaction by introducing residual Swin Transformer blocks (RSTB) [29]. Moreover, SwinIR achieved satisfying results with fewer parameters through its attention mechanism and network design. Although the Transformer model has a global receptive field, its computational complexity grows quadratically with the image resolution. To decrease the computational overhead, Zamir et al. [30] proposed the Restormer model for multi-scale local-global representation learning. Its multi-Dconv head transposed attention (MDTA) module applies self-attention across channels rather than across spatial positions to create the attention map, significantly reducing the computation cost while maintaining the global learning ability. Meanwhile, its gated-Dconv feed-forward network (GDFN) suppresses less informative features and increases the model's denoising efficiency. The combination of MDTA and GDFN allows attention-based denoisers to work well on high-resolution images.

Although existing supervised learning models exhibit excellent denoising performance, their performance often deteriorates on images that differ from the training datasets, and their generalization ability still requires improvement. Moreover, supervised models are generally data dependent: they require a large number of noisy-clean image pairs to enhance their general denoising ability. However, acquiring clean images is challenging. In the image acquisition process, noise is usually introduced by the material characteristics of the sensor; in the transmission process, quality degradation occurs because of the transmission media and recording equipment. Furthermore, in medical image denoising, acquiring a substantial number of training images involves exposing the human body to high doses of radiation, which is unethical. In conclusion, because of this data dependence, the training data strongly affect the denoising performance of supervised models, which causes them to lack robust generalization ability and to be unable to repair fine details of specific images, thus limiting their practicability.
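The cost argument for attention across channels can be made concrete with a small NumPy sketch. It is a simplified illustration, not the Restormer implementation: it uses a single head, replaces the point-wise convolutions with plain channel-mixing matrices (`w_q`, `w_k`, `w_v`, all hypothetical), omits the depth-wise convolutions, and assumes L2-normalized queries and keys with a fixed `temperature`.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def transposed_attention(features, w_q, w_k, w_v, temperature=1.0):
    """Single-head self-attention across channels.

    features: array of shape (C, H, W).
    w_q, w_k, w_v: (C, C) channel-mixing matrices standing in for
    point-wise (1x1) convolutions; this is an assumption of the sketch.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)            # (C, HW)
    q, k, v = w_q @ flat, w_k @ flat, w_v @ flat
    # Normalize each channel's spatial vector so the dot products are bounded.
    q = q / (np.linalg.norm(q, axis=1, keepdims=True) + 1e-8)
    k = k / (np.linalg.norm(k, axis=1, keepdims=True) + 1e-8)
    # The attention map relates channels to channels: shape (C, C).
    attn = softmax(temperature * (q @ k.T), axis=-1)
    out = attn @ v                               # (C, HW)
    return out.reshape(c, h, w), attn

rng = np.random.default_rng(0)
C, H, W = 8, 32, 32
feats = rng.standard_normal((C, H, W))
w_q, w_k, w_v = (rng.standard_normal((C, C)) for _ in range(3))
out, attn = transposed_attention(feats, w_q, w_k, w_v)
print(attn.shape, out.shape)  # (8, 8) (8, 32, 32)
```

For this 32x32 feature map, spatial self-attention would need a 1024x1024 attention map, whereas the channel-wise map is only 8x8 and its size does not depend on the resolution.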

Considering the dependence of supervised models on massive training data, unsupervised methods, which use only noisy images for training, are more prevalent in some fields. For instance, Lehtinen et al. [31] introduced a point estimation procedure from a statistical perspective and proposed the Noise2Noise (N2N) denoising model, which showed that models can be trained with only pairs of corrupted images under different noise distributions. N2N significantly simplifies practical training by relaxing the requirement for clean images. However, the model still requires a large number of noisy image pairs to improve its denoising effect, and collecting noisy image pairs with a constant signal is only feasible for static scenes, which limits the practical application of N2N. Inspired by N2N, Krull et al. [32] proposed a self-supervised training strategy called Noise2Void (N2V) that further removes the dependence on noisy image pairs. It rests on two statistical assumptions: every pixel in the image is conditionally dependent on its adjacent pixels, and the noise is independent from pixel to pixel. Krull et al. proposed the blind-spot network to learn the mapping between the adjacent pixels and the center pixel in a pixel block. Taking the center pixel as the learning target while hiding it from the input, N2V prevents the model from collapsing to the identity mapping of a single pixel and relaxes the N2N restriction that training images must come from the same scene. However, limited by these assumptions, the model performs unsatisfactorily on noisy images in which the noise of adjacent pixels is correlated. Subsequently, Huang et al. [33] proposed the Neighbor2Neighbor denoising model, which introduced a random neighbor sub-sampler to produce noisy image pairs for training. Thus, Neighbor2Neighbor further reduces the difficulty of acquiring training images for unsupervised models. However, the sampling operation reduces the image resolution and degrades the denoising effect. Meanwhile, Neighbor2Neighbor is also unsuitable for spatially correlated noise and extremely dark images. Although unsupervised methods no longer depend on numerous distortion-free images, unlike the supervised approach, they still require many noisy images for training to achieve the desired denoising performance. Therefore, these methods still have significant limitations in practical application.
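The idea of a random neighbor sub-sampler, and the resolution loss it implies, can be illustrated as follows. This is a hedged sketch of the general technique under simple assumptions (a single-channel image, non-overlapping 2x2 cells, uniformly random choice among adjacent pixel pairs), not the reference implementation; the function name `neighbor_subsample` is hypothetical.

```python
import numpy as np

def neighbor_subsample(noisy, rng):
    """Split one noisy image into two half-resolution noisy images.

    The image is divided into non-overlapping 2x2 cells. In each cell, two
    adjacent pixels are picked at random: one goes to the first sub-image,
    the other to the second.
    """
    h2, w2 = noisy.shape[0] // 2, noisy.shape[1] // 2
    cropped = noisy[: 2 * h2, : 2 * w2]
    # cells[i, j] holds the four pixels of cell (i, j) in the order
    # 0: top-left, 1: top-right, 2: bottom-left, 3: bottom-right.
    cells = cropped.reshape(h2, 2, w2, 2).transpose(0, 2, 1, 3).reshape(h2, w2, 4)
    # All ordered pairs of horizontally or vertically adjacent positions.
    pairs = np.array([(0, 1), (1, 0), (2, 3), (3, 2),
                      (0, 2), (2, 0), (1, 3), (3, 1)])
    choice = rng.integers(0, len(pairs), size=(h2, w2))
    idx1 = pairs[choice, 0][..., None]
    idx2 = pairs[choice, 1][..., None]
    sub1 = np.take_along_axis(cells, idx1, axis=2)[..., 0]
    sub2 = np.take_along_axis(cells, idx2, axis=2)[..., 0]
    return sub1, sub2

rng = np.random.default_rng(0)
image = np.arange(16, dtype=float).reshape(4, 4)  # toy 4x4 "noisy" image
sub1, sub2 = neighbor_subsample(image, rng)
print(sub1.shape, sub2.shape)  # (2, 2) (2, 2)
```

Each sub-image has half the height and width of the input, which is the resolution reduction mentioned above.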

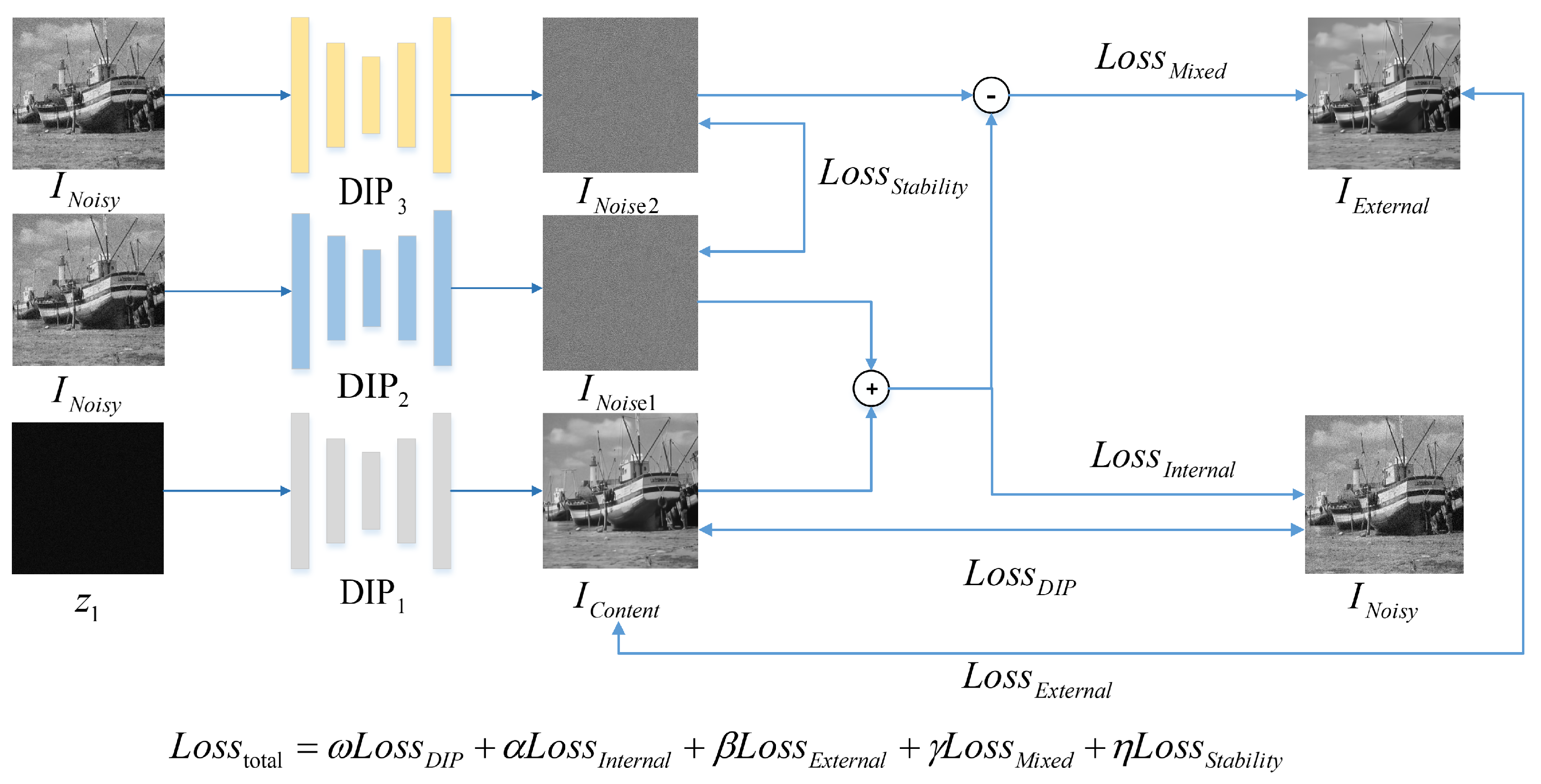

In contrast to most learning-based methods, which learn realistic image priors from large amounts of data, the deep image prior (DIP) presented by Ulyanov et al. [34] has shown that many image statistics can be implicitly captured by a suitable network architecture such as U-Net [35]. Therefore, DIP can be trained on a single degraded image with randomly initialized parameters through gradient descent, significantly decreasing the requirement for massive training data. However, there is still a gap in DIP's denoising performance. This can be attributed to its substantial reliance on internal priors, insufficient constraints, and a fixed number of iterations before stopping. First, relying only on internal priors makes the model's denoising performance depend on the quality of the target image. When confronted with images that have poor self-similarity or high noise, DIP cannot fully exploit its advantages. Second, DIP uses only the mean square error (MSE) between the network output and the noisy image to optimize the network parameters, which cannot adequately guide the denoising process. Finally, the number of DIP iterations is usually set to an empirical value obtained from extensive experiments, which cannot accurately determine when the iterative process should stop.

To address the above issues, we propose a Triple Deep Image Prior model for image denoising based on internal and external mixed priors and two-branch independent noise learning, called TripleDIP. Specifically, we first utilize the effective and efficient Restormer model to obtain a preprocessed image with rich external priors. Subsequently, we introduce one DIP branch to learn the image content and two DIP branches to learn the image noise independently. To evaluate the proposed TripleDIP, we conducted experiments on two common datasets. The experimental results demonstrate that the denoising effect of TripleDIP significantly outperforms that of the original DIP model and the unsupervised models, and exceeds that of some efficient supervised models.

The main contributions of this study are as follows:

(1) We construct a novel triple-branch network architecture to improve the denoising performance of DIP. With single-branch image content learning, random initialization and a single constraint usually result in low-quality DIP output. Therefore, we introduce noise learning to maintain the structural information of the images and provide more noise detail. We make the learned noise more stable by using two branches to generate noise independently. Thus, TripleDIP can generate rich noise components more stably and, together with content learning, produce satisfying output.

(2) Based on a supervised denoising model, we introduce high-quality preprocessed images as an external prior. The external prior limits the range of network output solutions and effectively prevents DIP from overfitting noisy images. In addition, the internal and external mixed priors compensate for the lack of perception of general image information in traditional DIP while preventing model performance from being constrained by a low-quality target image. This significantly enhances the denoising effect of DIP.

(3) We propose an automatic stopping criterion based on SSIM. We record the SSIM value between the network output and the preprocessed image at each training iteration. When the SSIM reached at a particular iteration is not exceeded within the subsequent search range, we terminate the training process and select the output of that iteration as the denoised image, which effectively prevents the model from overfitting.
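The stopping rule in contribution (3) can be sketched as a patience-style search over the recorded SSIM values. This is a minimal illustration under stated assumptions, not the exact procedure of this study: the function name `find_stop_iteration`, the interpretation of the search range as a number of consecutive non-improving iterations, and the SSIM values in the example are all hypothetical. In practice each value would be computed between the current network output and the preprocessed image.

```python
def find_stop_iteration(ssim_values, search_range):
    """Return (best_iteration, stop_iteration) for a sequence of SSIM values.

    Training stops at the first iteration for which the best SSIM so far has
    not been exceeded during the last `search_range` iterations; the output of
    `best_iteration` is the one selected as the denoised image.
    """
    if not ssim_values:
        raise ValueError("ssim_values must not be empty")
    best_iter, best_ssim = 0, float("-inf")
    for it, value in enumerate(ssim_values):
        if value > best_ssim:
            # New maximum: remember it and restart the search window.
            best_iter, best_ssim = it, value
        elif it - best_iter >= search_range:
            # No improvement within the search range: stop here.
            return best_iter, it
    # The sequence ended before the criterion fired.
    return best_iter, len(ssim_values) - 1

# Hypothetical SSIM curve that rises, plateaus, and then declines.
curve = [0.50, 0.62, 0.71, 0.70, 0.69, 0.72, 0.71, 0.70, 0.69, 0.68]
best, stop = find_stop_iteration(curve, search_range=3)
print(best, stop)  # 5 8
```

A larger search range tolerates longer plateaus at the cost of extra iterations after the best output has already been produced.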

The remaining text is organized as follows: Section 2 describes the Restormer and DIP denoising models that are relevant to our study. Section 3 describes the designed TripleDIP in detail, introducing the architecture and the compound loss function. In Section 4, we explain the experimental datasets, the experimental setup, and the experimental results compared with other state-of-the-art (SOTA) denoising algorithms. Finally, we provide a comprehensive summary of our work in Section 5.

5. Conclusions

In this study, we proposed a novel denoising model called TripleDIP using the strategies of internal and external mixed priors and two-branch independent noise learning. Unlike DIP, which uses only internal prior information, we utilized the Restormer model to generate external priors before denoising, and the introduction of internal and external mixed priors significantly improved the quality of the target image. Additionally, Restormer, a supervised model based on the Transformer, has excellent general denoising capabilities, while DIP, as an unsupervised model, can restore the local details of a particular image well. Mixed priors therefore enhance the denoising performance of the model in a complementary manner. For two-branch noise learning, the designed loss function realizes the independent generation of noise towards the same target, ensuring the stability of noise learning while strengthening the constraints on the content learning branch. The proposed automatic stopping criterion effectively prevents overfitting and improves execution efficiency. The experimental results demonstrate that the proposed TripleDIP method is far superior to the original DIP method, outperforms the mainstream unsupervised denoising models, and even surpasses recently proposed supervised models on the two synthetic datasets, thus demonstrating the efficacy of internal and external mixed priors and two-branch noise learning.

Although the denoising performance of TripleDIP is significantly improved over the original DIP model, it still suffers from long execution time and high computational load because three DIP subnetworks run simultaneously. The online training method is not suitable for some time-critical applications. There are two possible directions for future research: (1) Utilizing the model-agnostic meta-learning (MAML) method [52] to reduce execution time without losing effectiveness. The network parameters of TripleDIP produced by random initialization require several thousand iterations to obtain desirable results. MAML can be used to obtain a better initialization, which would significantly reduce the number of iterations TripleDIP requires. (2) Finding better stopping criteria. As can be seen from Figure 3, the stopping criterion not only prevents overfitting but also effectively reduces the number of iterations. We can combine it with other methods such as the early stopping (ES) method [38] to optimize execution efficiency.