Method for Training and White Boxing DL, BDT, Random Forest and Mind Maps Based on GNN

Abstract

1. Introduction

- (1) The black box problem;

- (2) The bias problem, in which the output of the DL is biased if the training dataset includes biases;

- (3) Weakness against noise.

- (1) Deep Explanation: attention heat maps, natural language explanations generated by analyzing the internal state of the deep learning model, etc.;

- (2) Interpretable Models: machine learning with models that are inherently highly interpretable (i.e., improving the accuracy of white-box machine learning);

- (3) Model Induction: creating an external, highly interpretable model that approximates the behavior of black-box machine learning.

- (1) The period from rice planting until the ear emerges;

- (2) The stage when the ear of rice has fully grown;

- (3) The stage just before harvesting.

2. Previous Works

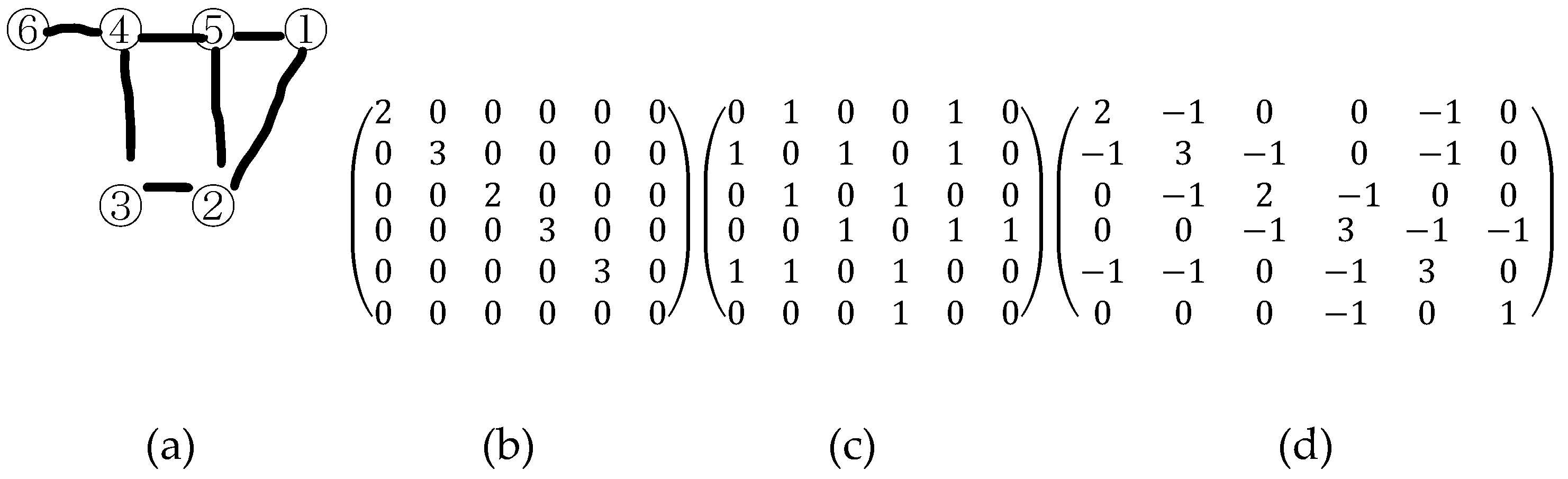

- (1) Adjacency matrix A expresses whether there is a connection relationship between nodes.

- (2) The degree matrix D represents how many edges are connected to each node.
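As an illustration of the two matrices, the following minimal sketch builds A and D for a small undirected graph from an edge list. The graph, its edges, and the helper names `adjacency_matrix` and `degree_matrix` are hypothetical; plain nested lists are used so the example needs no external library.

```python
# Build the adjacency matrix A and the degree matrix D of a small graph.
# The 4-node graph and its edge list are hypothetical examples.

def adjacency_matrix(num_nodes, edges, directed=False):
    """Return A as a list of lists: A[i][j] = 1 if node i is connected to node j."""
    a = [[0] * num_nodes for _ in range(num_nodes)]
    for i, j in edges:
        a[i][j] = 1
        if not directed:
            a[j][i] = 1  # undirected: the connection goes both ways
    return a

def degree_matrix(a):
    """Return D, a diagonal matrix whose entry D[i][i] counts the edges at node i."""
    n = len(a)
    d = [[0] * n for _ in range(n)]
    for i in range(n):
        d[i][i] = sum(a[i])  # row sum = number of neighbours (out-degree if directed)
    return d

edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
A = adjacency_matrix(4, edges)
D = degree_matrix(A)
print(A)                             # [[0, 1, 1, 0], [1, 0, 1, 0], [1, 1, 0, 1], [0, 0, 1, 0]]
print([D[i][i] for i in range(4)])   # [2, 2, 3, 1]
```

Passing `directed=True` keeps only the i to j direction, which is the form needed when a model is first drawn as a directed graph.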

- (1) Graph Recurrent Networks (recurrent networks)

- (2) Graph Convolutional Networks (convolutional network, hereinafter referred to as GCN)

- (3) Graph Attention Networks (Attention-based networks)

- (1) AGGREGATE: Aggregate information of neighboring nodes;

- (2) COMBINE: Update node features from the aggregated node information;

- (3) READOUT: Obtain a representation of the entire graph from the nodes in the graph.
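The three operations can be sketched with NumPy as below. This is a minimal illustration, not the paper's network: it assumes a sum aggregator, a linear-plus-ReLU combine step with separate self and neighbour weights, and a sum readout, with untrained random weights and hypothetical one-hot node features.

```python
import numpy as np

def aggregate(A, H):
    """AGGREGATE: node i receives the sum of its neighbours' feature vectors."""
    return A @ H

def combine(H, M, W_self, W_neigh):
    """COMBINE: mix each node's own features with its aggregated message (linear + ReLU)."""
    return np.maximum(0.0, H @ W_self + M @ W_neigh)

def readout(H):
    """READOUT: summing over all nodes gives a single vector for the whole graph."""
    return H.sum(axis=0)

# Hypothetical 4-node graph with one-hot node features
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = np.eye(4)

rng = np.random.default_rng(0)
W_self = rng.normal(size=(4, 4))   # untrained, randomly initialised weights
W_neigh = rng.normal(size=(4, 4))

for _ in range(2):  # two rounds of message passing, sharing weights across rounds
    H = combine(H, aggregate(A, H), W_self, W_neigh)

graph_vector = readout(H)
print(graph_vector.shape)  # (4,)
```

Other aggregators (mean, max) and readouts can be swapped in without changing the overall AGGREGATE, COMBINE, READOUT structure.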

3. Proposed Method

- (1) Represent the DL model, decision tree, random forest, or mind map as a directed graph;

- (2) Convert the directed graphs to matrices;

- (3) Use these matrices as the training inputs of the GNN and GCN;

- (4) Visualize the nodes in the hidden layers of the GNN and GCN, which results in white boxing of the DL and the other models.
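Steps (1) to (3) can be sketched for a decision tree as follows: the tree becomes a directed graph whose edges point from each test node to its children, and that graph is converted into an adjacency matrix and a feature matrix. The tree, its node labels, and the one-hot label encoding used for the feature matrix are hypothetical choices for illustration, not the encoding used in the paper.

```python
# Hypothetical decision tree: node id -> (label, list of child ids).
# Node ids are assumed to be 0..n-1 so they can index the matrices directly.
tree = {
    0: ("x0 <= 0.5", [1, 2]),
    1: ("leaf: class A", []),
    2: ("x1 <= 1.2", [3, 4]),
    3: ("leaf: class A", []),
    4: ("leaf: class B", []),
}

def to_adjacency(tree):
    """Directed adjacency matrix: A[parent][child] = 1, nothing in the reverse direction."""
    n = len(tree)
    a = [[0] * n for _ in range(n)]
    for parent, (_, children) in tree.items():
        for child in children:
            a[parent][child] = 1
    return a

def to_features(tree, vocabulary):
    """Feature matrix: one row per node, one-hot encoding of the node label (assumed encoding)."""
    x = []
    for node_id in sorted(tree):
        label = tree[node_id][0]
        x.append([1 if label == v else 0 for v in vocabulary])
    return x

vocab = sorted({label for label, _ in tree.values()})
A = to_adjacency(tree)    # step (2): graph -> adjacency matrix
X = to_features(tree, vocab)  # step (2): node attributes -> feature matrix
training_input = (A, X)   # step (3): one (A, X) pair per model graph
print(vocab)
print(A)
```

A random forest would yield one such (A, X) pair per tree, and a mind map can be handled the same way with its topics as nodes.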

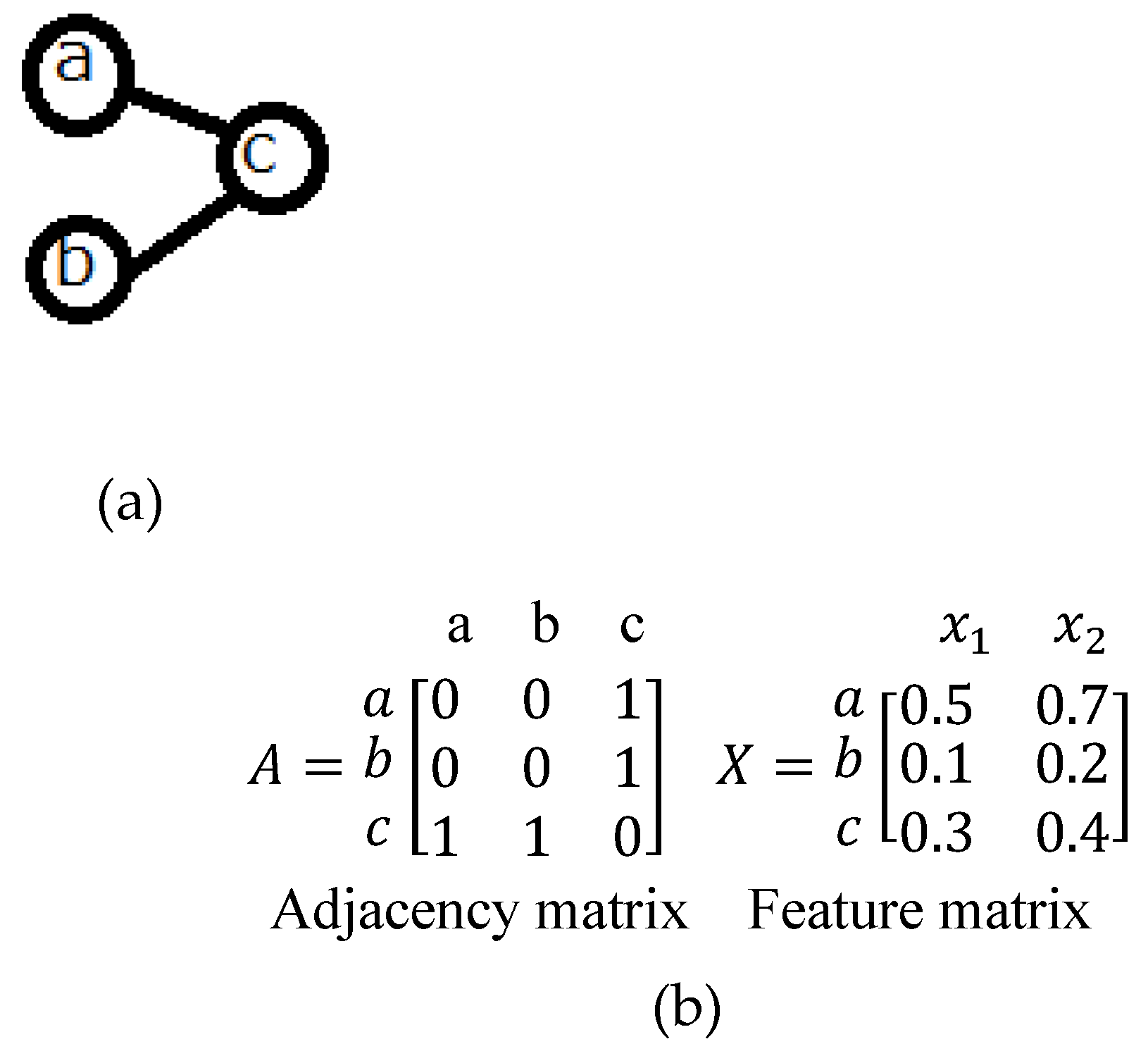

- (1) Adjacency matrix: a matrix that represents the connection relationship between nodes.

- (2) Feature Matrix: a matrix representing the feature vector of each node.

3.1. Input

- (1) Adjacency matrix:

- (2) Feature Matrix:

3.2. Output

- (1) Latent Matrix:
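One common way to turn the adjacency and feature matrices into a latent matrix is the GCN propagation rule of Kipf and Welling, H' = ReLU(D̃^(-1/2) (A + I) D̃^(-1/2) H W), where D̃ is the degree matrix of A + I. The sketch below assumes that rule, two layers, untrained random weights, and hypothetical one-hot features; it is not necessarily the formula used in this paper.

```python
import numpy as np

def normalized_adjacency(A):
    """D~^{-1/2} (A + I) D~^{-1/2}, where D~ is the degree matrix of A + I."""
    A_tilde = A + np.eye(A.shape[0])              # add a self-loop to every node
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    # scale rows by d_i^{-1/2} and columns by d_j^{-1/2}
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A_hat, H, W):
    """One GCN layer: ReLU(A_hat H W)."""
    return np.maximum(0.0, A_hat @ H @ W)

A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.eye(4)                     # hypothetical one-hot feature matrix
rng = np.random.default_rng(42)
W1 = rng.normal(size=(4, 8))      # untrained weights: 4 input features -> 8 hidden units
W2 = rng.normal(size=(8, 2))      # 8 hidden units -> 2 latent dimensions

A_hat = normalized_adjacency(A)
Z = gcn_layer(A_hat, gcn_layer(A_hat, X, W1), W2)  # latent matrix: one row per node
print(Z.shape)  # (4, 2)
```

The symmetric normalization is designed for undirected graphs; for the directed graphs of the proposed method, A is not symmetric, so one may either symmetrize it first or use a row normalization instead (both are assumptions here).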

3.3. Formula

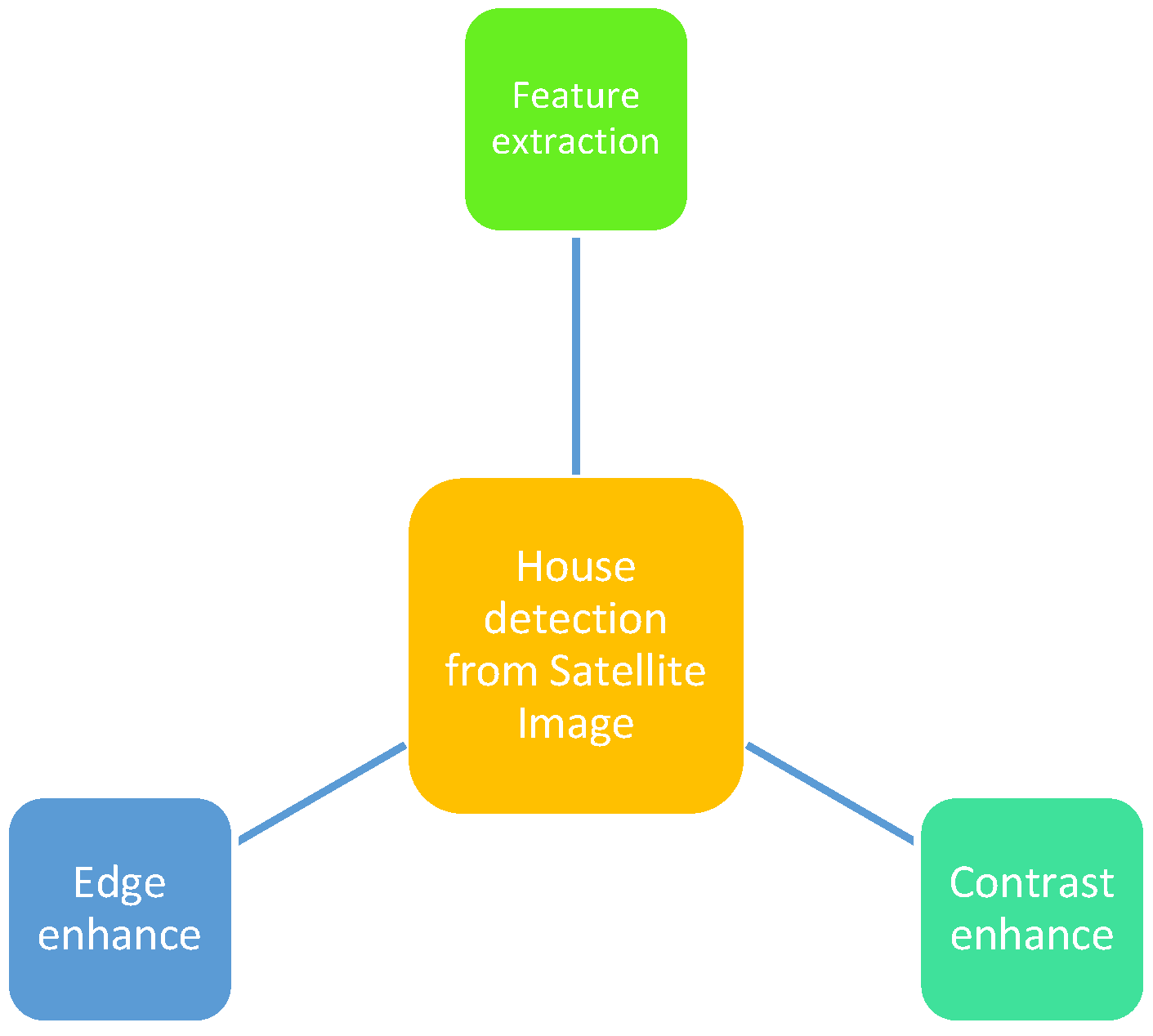

3.4. Mind Map

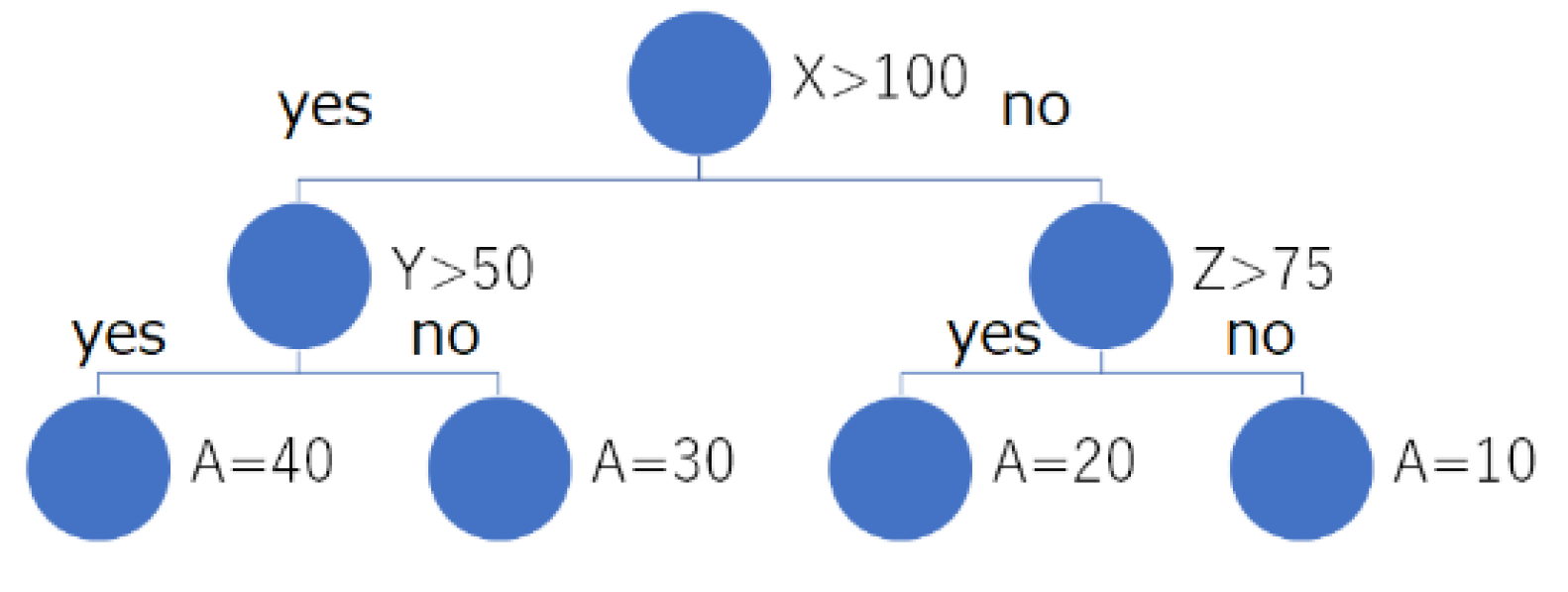

3.5. Decision Tree to Random Forest

3.6. Random Forest

- (1) Select a random sample from the given dataset;

- (2) Build a decision tree for each sample and obtain a prediction result from each decision tree;

- (3) Vote for each prediction result;

- (4) Select the prediction result with the most votes as the final prediction.
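The four steps above can be sketched in plain Python. To stay short, the sketch assumes depth-one decision trees (decision stumps) in place of full trees, and it omits the random selection of candidate features at each split that Breiman's random forests also apply. The data set and the names `fit_stump`, `fit_forest`, `n_trees`, and `seed` are hypothetical.

```python
import random
from collections import Counter

def fit_stump(samples):
    """Fit a depth-1 decision tree on (features, label) pairs by trying every
    feature/threshold pair and keeping the split with the fewest errors."""
    best = None
    for f in range(len(samples[0][0])):
        for threshold in sorted({x[f] for x, _ in samples}):
            left = [y for x, y in samples if x[f] <= threshold]
            right = [y for x, y in samples if x[f] > threshold]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    _, f, threshold, left_label, right_label = best
    return lambda x: left_label if x[f] <= threshold else right_label

def fit_forest(data, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(data) for _ in data]  # (1) bootstrap sample, drawn with replacement
        forest.append(fit_stump(sample))           # (2) one tree per sample
    return forest

def predict(forest, x):
    votes = Counter(tree(x) for tree in forest)    # (3) every tree votes
    return votes.most_common(1)[0][0]              # (4) the majority vote wins

data = [((0.1, 1.0), "A"), ((0.2, 0.8), "A"), ((0.3, 0.9), "A"),
        ((0.7, 0.2), "B"), ((0.8, 0.1), "B"), ((0.9, 0.3), "B")]
forest = fit_forest(data)
print(predict(forest, (0.05, 1.1)), predict(forest, (0.95, 0.05)))
```

Each prediction calls every tree once, which is also why prediction cost grows with the number of trees.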

- (1) In random forests, each decision tree is trained on a different random sample and therefore has different characteristics, so the ensemble can make complex decisions;

- (2) Compared with a single decision tree, random forests are much less prone to overfitting. The main reason is that averaging (or voting over) the predictions of all trees cancels out much of the variance of the individual trees;

- (3) Random forests can also handle missing values, in two ways: replacing missing values of a continuous variable with the median of that variable, and computing a proximity-weighted average of the non-missing values;

- (4) Feature importance can be obtained, which helps select the features that contribute the most to the classifier.
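The two missing-value strategies in item (3) can be sketched as follows. In Breiman's procedure the proximity of two samples is the fraction of trees in which they fall into the same leaf, and the forest is refit after an initial median fill; here the proximity matrix is simply assumed to be given, and its values and the function names are hypothetical.

```python
from statistics import median

def impute_median(column):
    """Replace missing (None) entries of a continuous column with the median of the observed values."""
    m = median(v for v in column if v is not None)
    return [m if v is None else v for v in column]

def proximity_weighted_fill(column, proximity):
    """Replace each missing entry i with the proximity-weighted average of the observed entries.
    proximity[i][j] is assumed to be the fraction of trees in which samples i and j share a leaf."""
    observed = [j for j, v in enumerate(column) if v is not None]
    filled = list(column)
    for i, v in enumerate(column):
        if v is None:
            weights = [proximity[i][j] for j in observed]
            filled[i] = sum(w * column[j] for w, j in zip(weights, observed)) / sum(weights)
    return filled

print(impute_median([2.0, None, 5.0, 4.0, None, 1.0]))  # [2.0, 3.0, 5.0, 4.0, 3.0, 1.0]

prox = [[1.0, 0.75, 0.25],   # hypothetical proximities between three samples
        [0.75, 1.0, 0.25],
        [0.25, 0.25, 1.0]]
print(proximity_weighted_fill([2.0, None, 6.0], prox))  # [2.0, 3.0, 6.0]
```

The proximity-weighted fill lets samples that the forest treats as similar contribute more to the imputed value than the plain median does.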

- (1) Random forests are slow to generate predictions because they contain multiple decision trees. For every prediction, every tree in the forest must make a prediction on the same input and then vote on it, and this entire process takes time.

- (2) Compared with decision trees, the models are harder to interpret because there are multiple trees.

3.7. Proposed Method

4. Examples

4.1. Application of the Proposed Method for Decision Tree Based Discrimination Method

4.2. Application for Mind Map Learning Method

4.3. Application for Random Forest Based Discrimination Method

4.4. Representation of CNN Architecture with Graphs

4.5. Representation of CNN Architecture with Matrices

4.6. White Boxing of Deep Learning

5. Conclusions

6. Future Research Works

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arai, K. Method for Training and White Boxing DL, BDT, Random Forest and Mind Maps Based on GNN. Appl. Sci. 2023, 13, 4743. https://doi.org/10.3390/app13084743
