On Pseudorandomness and Deep Learning: A Case Study

Abstract

1. Introduction

2. Pseudorandomness

- Pseudorandom generator. A pseudorandom generator is an efficient (deterministic) algorithm that, given short seeds, stretches them into longer output sequences, which are computationally indistinguishable from uniform ones. The term “computationally indistinguishable” means that no efficient algorithm, the distinguisher, can tell them apart [11,12,13].

- Pseudorandom function. A pseudorandom function is an efficiently computable two-input function $F$ such that, for uniform choices of the key $k$, the univariate function $F_k(\cdot) = F(k, \cdot)$ is computationally indistinguishable from a univariate function $f$, chosen uniformly at random from the set of all univariate functions of $n$-bit inputs to $n$-bit outputs.

- Pseudorandom permutation. A pseudorandom permutation is a two-input permutation $F$ such that, for uniform choices of the key $k$, the univariate permutation $F_k(\cdot) = F(k, \cdot)$ and its inverse $F_k^{-1}$ are efficiently computable, and $F_k$ is computationally indistinguishable from a univariate permutation $f$, chosen uniformly at random from the set of permutations on $n$-bit strings.
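As a worked statement of the indistinguishability requirement in the definitions above, the standard textbook formulation (e.g., [10]) can be written as follows. The symbols are chosen here for illustration: $F_k$ is the keyed function, $f$ is the uniformly random function, $D$ is a distinguisher with oracle access, and $\mathrm{negl}$ is a negligible function. For every probabilistic polynomial-time $D$:

$$
\left| \Pr_{k}\left[ D^{F_k(\cdot)}(1^n) = 1 \right] - \Pr_{f}\left[ D^{f(\cdot)}(1^n) = 1 \right] \right| \le \mathrm{negl}(n)
$$

The pseudorandom permutation case has the same shape, with $f$ drawn from the set of permutations on $n$-bit strings.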

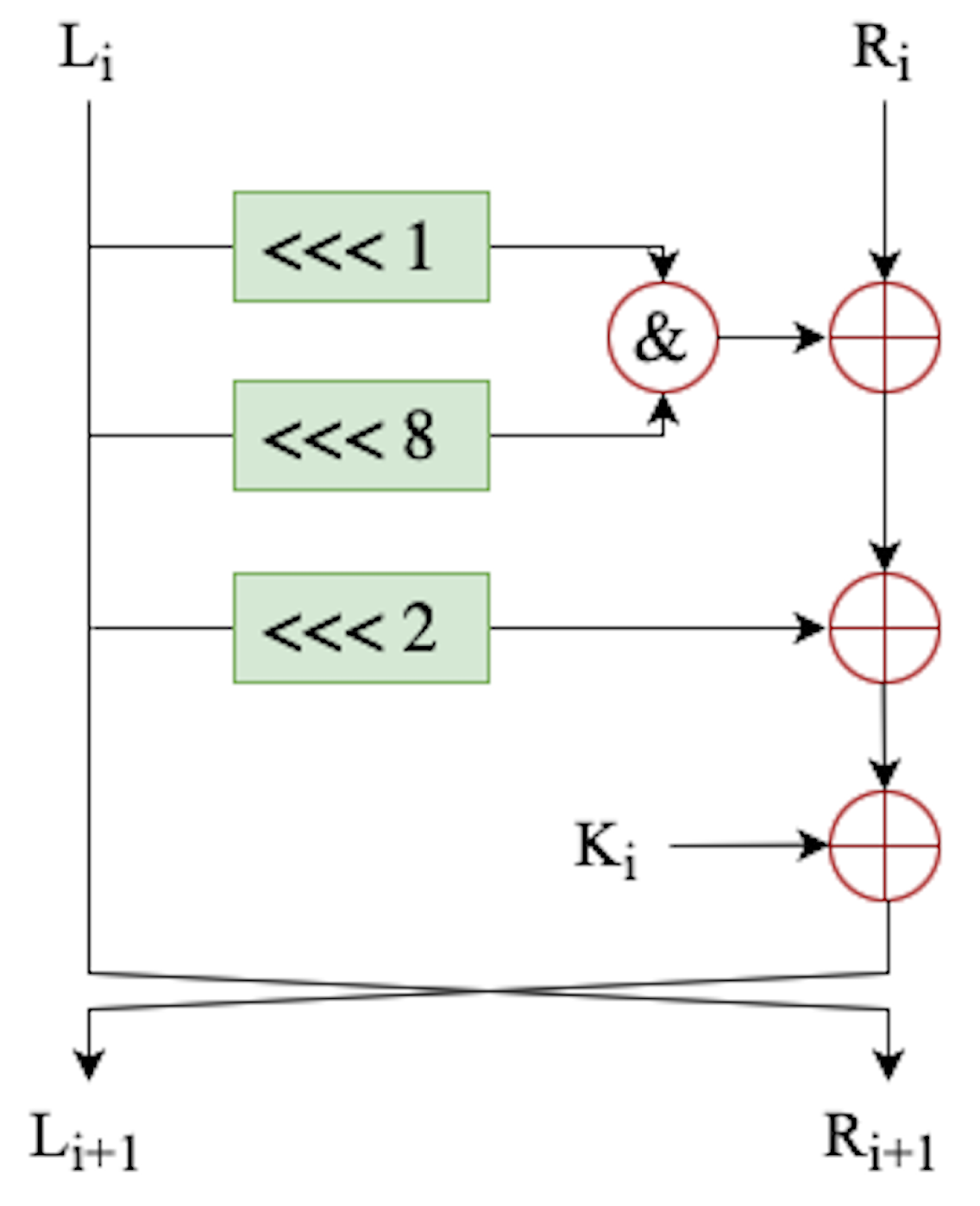

- Distinguishing experiment. Let $E$ be a block cipher (in our case, Speck32 or Simon32, with block length $n = 32$ and key size 64). Ciphertexts are $n$-bit strings. The experiment described in [9], defined for any distinguisher $D$ for the block cipher $E$, can be rephrased as follows:

1. A bit $b$ is chosen uniformly at random. Depending on the value of $b$, either a key $k$ is chosen uniformly at random and an oracle $\mathcal{O}$ is set to reply to queries using $E_k$, or a function $f$ is chosen uniformly at random from the set of all functions from 32 bits to 32 bits and $\mathcal{O}$ is set to reply to queries using $f$.

2. $D$ receives access to the oracle $\mathcal{O}$ and obtains replies to at most $t$ queries.

3. $D$ outputs a bit $b'$.

4. The output of the experiment is 1 if $b' = b$; otherwise, it is 0.
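The four steps above can be sketched in Python. This is a minimal illustration, not the authors' code: it instantiates the cipher with Speck32/64 following the public specification [38], models the random function by lazy sampling (a fresh 32-bit value per new query), and assumes the convention that `b == 1` selects the cipher. All function names (`experiment`, `guessing_distinguisher`, and so on) are hypothetical.

```python
import secrets

MASK = 0xFFFF                     # Speck32 operates on two 16-bit words
ALPHA, BETA, ROUNDS = 7, 2, 22    # Speck32/64 rotation amounts and round count


def ror(x, r):
    return ((x >> r) | (x << (16 - r))) & MASK


def rol(x, r):
    return ((x << r) | (x >> (16 - r))) & MASK


def speck_round(x, y, k):
    x = ((ror(x, ALPHA) + y) & MASK) ^ k
    y = rol(y, BETA) ^ x
    return x, y


def expand_key(key_words):
    """Expand a 64-bit key given as four 16-bit words (l2, l1, l0, k0)."""
    l = list(reversed(key_words[:-1]))      # [l0, l1, l2]
    round_keys = [key_words[-1]]
    for i in range(ROUNDS - 1):
        # The key schedule reuses the round function with the counter i as key.
        l[i % 3], nxt = speck_round(l[i % 3], round_keys[i], i)
        round_keys.append(nxt)
    return round_keys


def encrypt(block, round_keys):
    x, y = block >> 16, block & MASK
    for k in round_keys:
        x, y = speck_round(x, y, k)
    return (x << 16) | y


def experiment(distinguisher, t):
    """Run one distinguishing experiment; return 1 if the guess equals b."""
    b = secrets.randbits(1)
    if b == 1:  # assumed convention: b = 1 selects the keyed cipher
        round_keys = expand_key(tuple(secrets.randbits(16) for _ in range(4)))
        reply = lambda q: encrypt(q, round_keys)
    else:       # uniformly random function, sampled lazily on first query
        table = {}
        reply = lambda q: table.setdefault(q, secrets.randbits(32))

    remaining = t

    def oracle(q):
        nonlocal remaining
        if remaining == 0:
            raise RuntimeError("query budget exhausted")
        remaining -= 1
        return reply(q & 0xFFFFFFFF)

    return int(distinguisher(oracle) == b)


def guessing_distinguisher(oracle):
    """Baseline distinguisher: makes one query, then guesses at random."""
    oracle(0)
    return secrets.randbits(1)
```

A call such as `experiment(guessing_distinguisher, t=1)` returns 0 or 1; a distinguisher is interesting only if its success rate over many runs is noticeably above one half.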

3. Previous Works

4. DL-Based Distinguisher

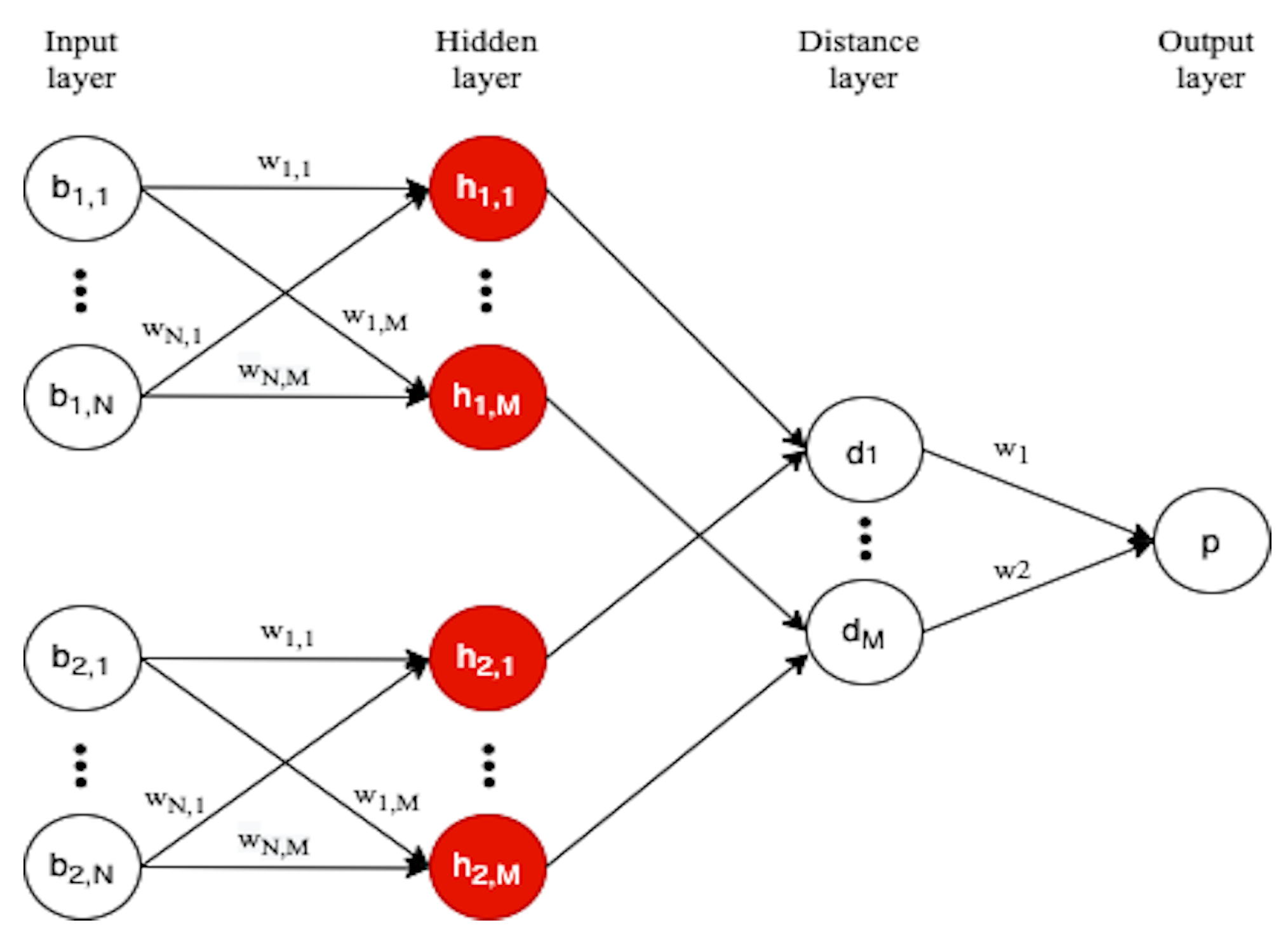

4.1. Siamese Network

- One-shot learning. Siamese networks are based on one-shot learning [8]. This learning strategy allows the network to classify objects given only one training example for each category. During the training phase, instead of receiving (object, class) pairs, the Siamese network receives triples (object1, object2, same-class/different-class) as input and learns to produce a similarity score, which estimates how likely the two input objects are to belong to the same category. Typically, the similarity score is a value between 0 and 1, where 0 denotes no similarity and 1 denotes full similarity.
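The change of input format described above, from (object, class) pairs to (object1, object2, same-class/different-class) triples, can be illustrated with a short sketch. The helper name `make_training_triples` and the encoding of the label as 1 (same class) or 0 (different class) are assumptions made for this example; the text only states that the third component marks same or different class.

```python
from itertools import combinations


def make_training_triples(samples):
    """Turn (object, class) samples into (object1, object2, label) triples.

    label is 1 when both objects share a class and 0 otherwise
    (an illustrative encoding, not taken from the paper).
    """
    return [
        (obj_a, obj_b, int(cls_a == cls_b))
        for (obj_a, cls_a), (obj_b, cls_b) in combinations(samples, 2)
    ]


samples = [("r1", "random"), ("r2", "random"), ("c1", "cipher")]
triples = make_training_triples(samples)
```

Enumerating all unordered pairs is only one possible choice; a practical pipeline would usually subsample pairs to balance the two labels.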

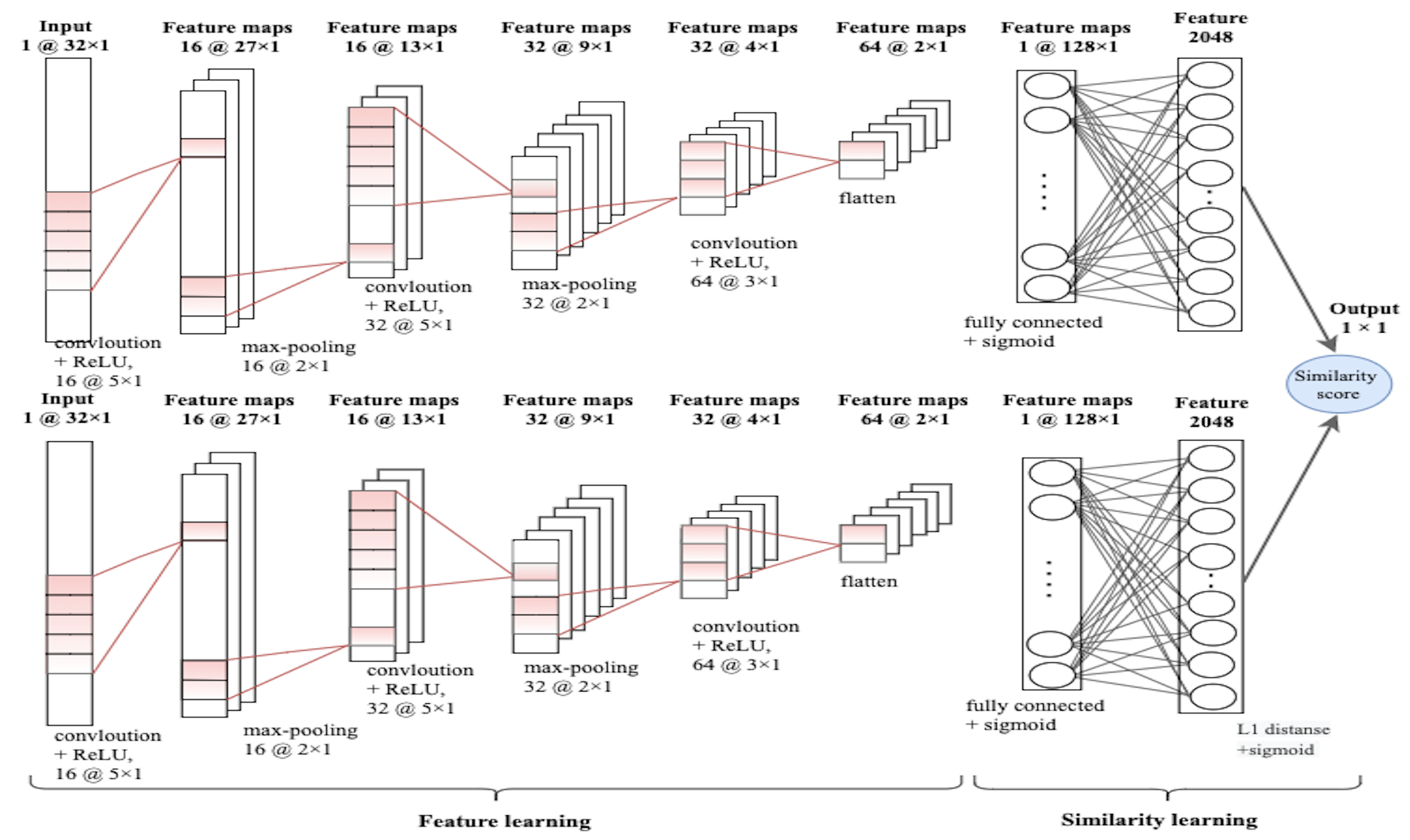

4.2. Model Definition

4.3. Loss Functions

5. Experiments

5.1. Datasets

1. A training subset, consisting of 50% of the samples, randomly chosen (i.e., 2500 samples), used as the training set generator, i.e., to build the training set for the Siamese network.

2. A validation subset, consisting of 30% of the samples, randomly chosen (i.e., 1500 samples), used as the validation set generator, i.e., to build the validation set for the Siamese network.

3. A test subset, consisting of the remaining 20% of the samples (i.e., 1000 samples), used as the test set generator, i.e., to build the test set for the Siamese network.
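The three-way split above (2500, 1500, and 1000 samples) can be sketched as follows. The sketch assumes the dataset holds 5000 samples in total, which is the sum of the three stated sizes and corresponds to a 50/30/20 split. The function name `split_dataset` and the fixed seed are hypothetical choices for reproducibility.

```python
import random


def split_dataset(samples, seed=0):
    """Shuffle and split samples into train/validation/test (50/30/20)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)   # random selection of each subset
    n = len(shuffled)
    n_train = n * 50 // 100
    n_val = n * 30 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]       # the remaining samples
    return train, val, test


train, val, test = split_dataset(range(5000))
```

The three subsets are disjoint by construction, so no sample used to generate training pairs can appear in the validation or test generators.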

- 200-: ×∪×, and × + ×, where (resp. ) consists of random (resp. cipher output) sequences randomly chosen from , with (resp. ), for . Observe that + + + = 200.

- 300-: ×∪×, and × + ×, where (resp. ) consists of random (resp. cipher output) sequences randomly chosen, with (resp. ), for . Observe that + + + + + = 300.

5.2. Experimental Setting

- The similar pair includes the test sequence and a sequence from the same category.

- The non-similar pair includes the test sequence and a sequence from the other category.
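The construction of the two pairs for a given test sequence can be sketched as below. This is an illustration under stated assumptions: there are exactly two categories, the partner sequence in each pair is drawn uniformly at random from a pool of sequences of the relevant category, and the names `build_test_pairs` and `pools` are hypothetical. How the partner sequence is actually selected is not specified in the text above.

```python
import random


def build_test_pairs(test_seq, category, pools, rng=random):
    """Return (similar_pair, non_similar_pair) for one test sequence.

    pools maps each of the two category names to a list of sequences.
    """
    (other,) = [c for c in pools if c != category]       # the other category
    similar = (test_seq, rng.choice(pools[category]))    # same category
    non_similar = (test_seq, rng.choice(pools[other]))   # other category
    return similar, non_similar


pools = {"random": ["r1", "r2"], "cipher": ["c1"]}
similar, non_similar = build_test_pairs("t", "cipher", pools, random.Random(0))
```

Both pairs can then be scored by the trained network, whose similarity output is expected to be high for the similar pair and low for the non-similar one.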

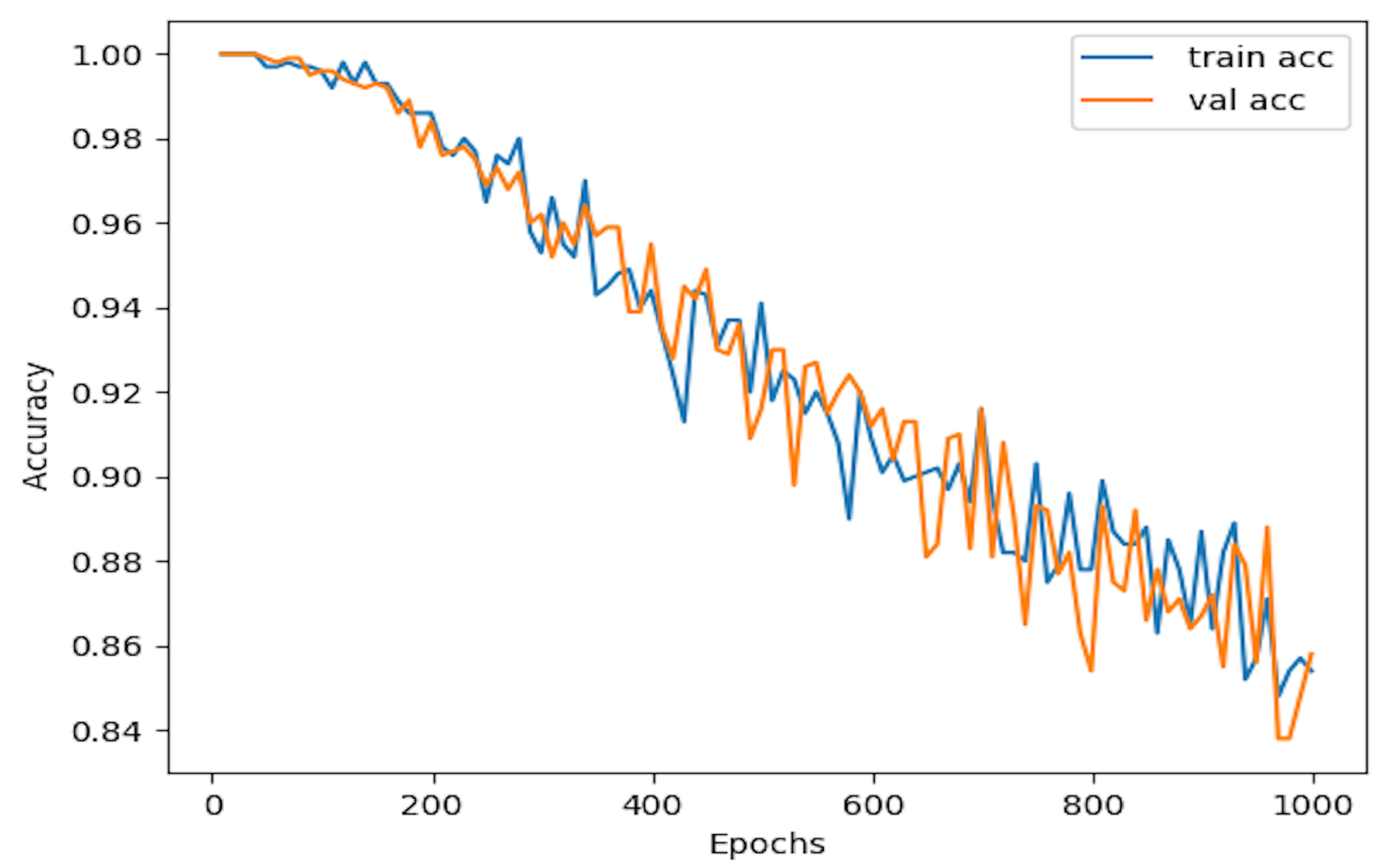

5.3. Results

Distinguisher D


6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Block Size (bits) | Key Size (bits) | Speck Rounds | Simon Rounds |
|---|---|---|---|
| 32 | 64 | 22 | 32 |
| 48 | 72, 96 | 22, 23 | 36, 36 |
| 64 | 96, 128 | 26, 27 | 42, 44 |
| 96 | 96, 144 | 28, 29 | 52, 54 |
| 128 | 128, 192, 256 | 32, 33, 34 | 68, 69, 72 |

References

1. Rukhin, A.; Soto, J.; Nechvatal, J.; Smid, M.; Barker, E. A Statistical Test Suite for Random and Pseudorandom Number Generators for Cryptographic Applications; Technical Report; Booz-Allen and Hamilton Inc.: McLean, VA, USA, 2001.

2. Alani, M.M. Testing randomness in ciphertext of block-ciphers using DieHard tests. Int. J. Comput. Sci. Netw. Secur. 2010, 10, 53–57.

3. L’Ecuyer, P.; Simard, R. TestU01: A C library for empirical testing of random number generators. ACM Trans. Math. Softw. (TOMS) 2007, 33, 22.

4. Walker, J. ENT: A Pseudorandom Number Sequence Test Program. Software and Documentation. 2008. Available online: https://www.fourmilab.ch (accessed on 15 February 2023).

5. Gohr, A. Improving attacks on round-reduced Speck32/64 using deep learning. In Proceedings of the 39th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 2019; Springer: Berlin/Heidelberg, Germany; pp. 150–179.

6. Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a “siamese” time delay neural network. Adv. Neural Inf. Process. Syst. 1993, 6, 737–744.

7. Chicco, D. Siamese neural networks: An overview. In Artificial Neural Networks; Humana: New York, NY, USA, 2021; pp. 73–94.

8. Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese neural networks for one-shot image recognition. In Proceedings of the 32nd ICML Deep Learning Workshop, Lille, France, 6–11 July 2015; Volume 2.

9. D’Arco, P.; De Prisco, R.; Ansaroudi, Z.E.; Zaccagnino, R. Gossamer: Weaknesses and performance. Int. J. Inf. Secur. 2022, 21, 669–687.

10. Katz, J.; Lindell, Y. Introduction to Modern Cryptography; CRC Press: Boca Raton, FL, USA, 2020.

11. Blum, M.; Micali, S. How to generate cryptographically strong sequences of pseudorandom bits. SIAM J. Comput. 1984, 13, 850–864.

12. Yao, A.C. Theory and application of trapdoor functions. In Proceedings of the 23rd Annual Symposium on Foundations of Computer Science (SFCS 1982), Chicago, IL, USA, 3–5 November 1982; pp. 80–91.

13. Goldreich, O. Modern Cryptography, Probabilistic Proofs and Pseudorandomness; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1998; Volume 17.

14. Rivest, R.L. Cryptography and machine learning. In Proceedings of the International Conference on the Theory and Application of Cryptology, Berlin/Heidelberg, Germany, 11–14 November 1991; Springer: Berlin/Heidelberg, Germany, 1991; pp. 427–439.

15. Kanter, I.; Kinzel, W.; Kanter, E. Secure exchange of information by synchronization of neural networks. EPL (Europhys. Lett.) 2002, 57, 141.

16. Karras, D.A.; Zorkadis, V. Strong pseudorandom bit sequence generators using neural network techniques and their evaluation for secure communications. In Proceedings of the AI 2002: Advances in Artificial Intelligence, Canberra, Australia, 2–6 December 2002; McKay, B., Slaney, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 615–626.

17. Orlandi, C.; Piva, A.; Barni, M. Oblivious neural network computing via homomorphic encryption. EURASIP J. Inf. Secur. 2007, 2007, 037343.

18. Pinkas, B. Cryptographic techniques for privacy-preserving data mining. ACM SIGKDD Explor. Newsl. 2002, 4, 12–19.

19. Syamamol, T.; Jayakrushna, S.; Manjith, B.C. A Cognitive Approach to Deep Neural Cryptosystem in Various Applications. In Proceedings of the International Conference on IoT Based Control Networks & Intelligent Systems-ICICNIS 2021, Kottayam, India, 28–29 June 2021.

20. Azraoui, M.; Bahram, M.; Bozdemir, B.; Canard, S.; Ciceri, E.; Ermis, O.; Masalha, R.; Mosconi, M.; Önen, M.; Paindavoine, M.; et al. SoK: Cryptography for neural networks. In Proceedings of the Privacy and Identity Management. Data for Better Living: AI and Privacy: 14th IFIP WG 9.2, 9.6/11.7, 11.6/SIG 9.2.2 International Summer School, Windisch, Switzerland, 19–23 August 2019; Revised Selected Papers. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 63–81.

21. Klimov, A.; Mityagin, A.; Shamir, A. Analysis of neural cryptography. In Proceedings of the Advances in Cryptology–ASIACRYPT 2002, Queenstown, New Zealand, 1–5 December 2002; Zheng, Y., Ed.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 288–298.

22. Alani, M.M. Neuro-cryptanalysis of DES and triple-DES. In Proceedings of the Neural Information Processing, Lake Tahoe, CA, USA, 3–8 December 2012; Huang, T., Zeng, Z., Li, C., Leung, C.S., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 637–646.

23. Maghrebi, H.; Portigliatti, T.; Prouff, E. Breaking cryptographic implementations using deep learning techniques. In Proceedings of the International Conference on Security, Privacy, and Applied Cryptography Engineering, Hyderabad, India, 14–18 December 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 3–26.

24. Lai, X.; Massey, J.L.; Murphy, S. Markov ciphers and differential cryptanalysis. In Proceedings of the Workshop on the Theory and Application of Cryptographic Techniques, Brighton, UK, 8–11 April 1991; Springer: Berlin/Heidelberg, Germany; pp. 17–38.

25. Zahednejad, B.; Li, J. An Improved Integral Distinguisher Scheme Based on Deep Learning; Technical Report; EasyChair: Online, 2020.

26. Baksi, A.; Breier, J.; Dong, X.; Yi, C. Machine Learning Assisted Differential Distinguishers For Lightweight Ciphers. IACR Cryptol. ePrint Arch. 2020, 2020, 571.

27. Yadav, T.; Kumar, M. Differential-ML Distinguisher: Machine Learning based Generic Extension for Differential Cryptanalysis. IACR Cryptol. ePrint Arch. 2020, 2020, 913.

28. Hou, Z.; Ren, J.; Chen, S. Cryptanalysis of Round-Reduced Simon32 Based on Deep Learning. Cryptology ePrint Archive. 2021. Available online: https://eprint.iacr.org/2021/362 (accessed on 1 January 2022).

29. Hou, Z.; Ren, J.; Chen, S. Improve Neural Distinguishers of SIMON and SPECK. Secur. Commun. Netw. 2021, 2021, 9288229.

30. Moura, L.d.; Bjørner, N. Z3: An efficient SMT solver. In Proceedings of the International Conference on Tools and Algorithms for the Construction and Analysis of Systems, Budapest, Hungary, 29 March–6 April 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 337–340.

31. Benamira, A.; Gerault, D.; Peyrin, T.; Tan, Q.Q. A deeper look at machine learning-based cryptanalysis. In Proceedings of the Annual International Conference on the Theory and Applications of Cryptographic Techniques, Zagreb, Croatia, 17–21 October 2021; Springer: Berlin/Heidelberg, Germany; pp. 805–835.

32. Chen, Y.; Yu, H. Bridging machine learning and cryptanalysis via EDLCT. Cryptology ePrint Archive. 2021. Available online: https://eprint.iacr.org/2021/705 (accessed on 1 August 2022).

33. Kimura, H.; Emura, K.; Isobe, T.; Ito, R.; Ogawa, K.; Ohigashi, T. Output Prediction Attacks on Block Ciphers using Deep Learning. In Applied Cryptography and Network Security Workshops; Springer: Cham, Switzerland, 2021.

34. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

35. Nielsen, M.A. Neural Networks and Deep Learning; Determination Press: San Francisco, CA, USA, 2015; Volume 25.

36. Ansaroudi, Z.E. Reference Datasets for the Paper of “On Pseudorandomness and Deep Learning: A Case Study”. 2023. Available online: https://github.com/ZAnsaroudi/PRFDeep (accessed on 1 March 2023).

37. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

38. Beaulieu, R.; Shors, D.; Smith, J.; Treatman-Clark, S.; Weeks, B.; Wingers, L. The SIMON and SPECK lightweight block ciphers. In Proceedings of the 52nd Annual Design Automation Conference, San Francisco, CA, USA, 8–12 June 2015; pp. 1–6.

| Cipher | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Speck | 0.81 | 0.76 | 0.82 | 0.9 | 0.87 | 0.84 | 0.8 | 0.74 | 0.87 | 0.83 | 0.82 |
| Speck | 0.93 | 0.94 | 0.91 | 0.92 | 0.97 | 0.95 | 0.97 | 0.93 | 0.97 | 0.91 | 0.94 |
| Simon | 0.92 | 0.95 | 0.92 | 0.93 | 0.95 | 0.93 | 0.96 | 0.97 | 0.98 | 0.91 | 0.942 |

| Cipher | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Speck | 1 | 0.99 | 1 | 1 | 0.99 | 0.99 | 0.99 | 0.99 | 1 | 1 | 0.995 |
| Simon | 1 | 1 | 1 | 0.99 | 1 | 1 | 0.99 | 0.99 | 0.99 | 1 | 0.996 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ebadi Ansaroudi, Z.; Zaccagnino, R.; D’Arco, P. On Pseudorandomness and Deep Learning: A Case Study. Appl. Sci. 2023, 13, 3372. https://doi.org/10.3390/app13053372