A Real-Time Nut-Type Classifier Application Using Transfer Learning

Abstract

1. Introduction

- A dataset of 1250 images of 5 different nut types is constructed by providing nut images from both online sources and manually taken pictures.

- The TL is applied to the nut-type classification problem, and four different TL architectures are consulted to train classifier models on the dataset. Moreover, a custom CNN model is trained to compare the success of TL models.

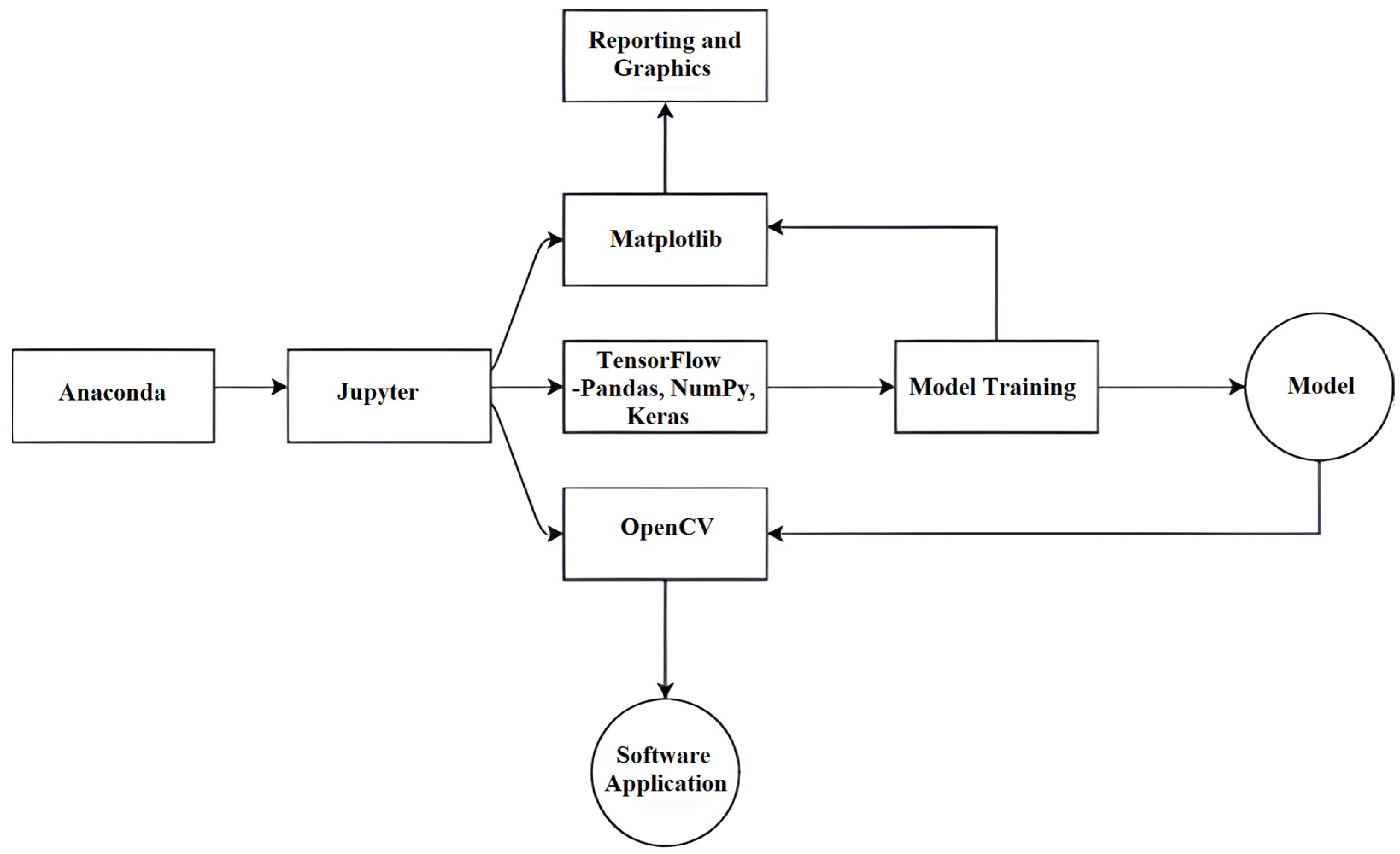

- A practical application developed using the Python language is presented to detect and recognize nuts in real time.
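The transfer learning setup named in the contributions can be sketched as follows. This is a minimal illustration, not the author's code: it assumes TensorFlow/Keras as the framework (the article only states that Python is used), a frozen ResNet-50 backbone, and a global-average-pooling plus softmax head. The function name `build_transfer_model` is hypothetical. The 300 × 300 input size, the Adam optimizer, and the sparse categorical cross-entropy loss are taken from the training configuration table.

```python
import tensorflow as tf


def build_transfer_model(num_classes=5, input_shape=(300, 300, 3), weights="imagenet"):
    """Build a classifier on top of a frozen, pre-trained ResNet-50 backbone.

    Assumption: the head (pooling + dense softmax) is illustrative; the
    article does not describe the layers added on top of the backbone.
    """
    base = tf.keras.applications.ResNet50(
        include_top=False,  # drop the original 1000-class ImageNet head
        weights=weights,
        input_shape=input_shape,
    )
    base.trainable = False  # keep the pre-trained features fixed

    inputs = tf.keras.Input(shape=input_shape)
    x = tf.keras.applications.resnet50.preprocess_input(inputs)
    x = base(x, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_transfer_model()` downloads the ImageNet weights on first use; passing `weights=None` builds the same architecture with random weights, which is enough to check shapes.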

2. Materials and Methods

2.1. Data Acquisition

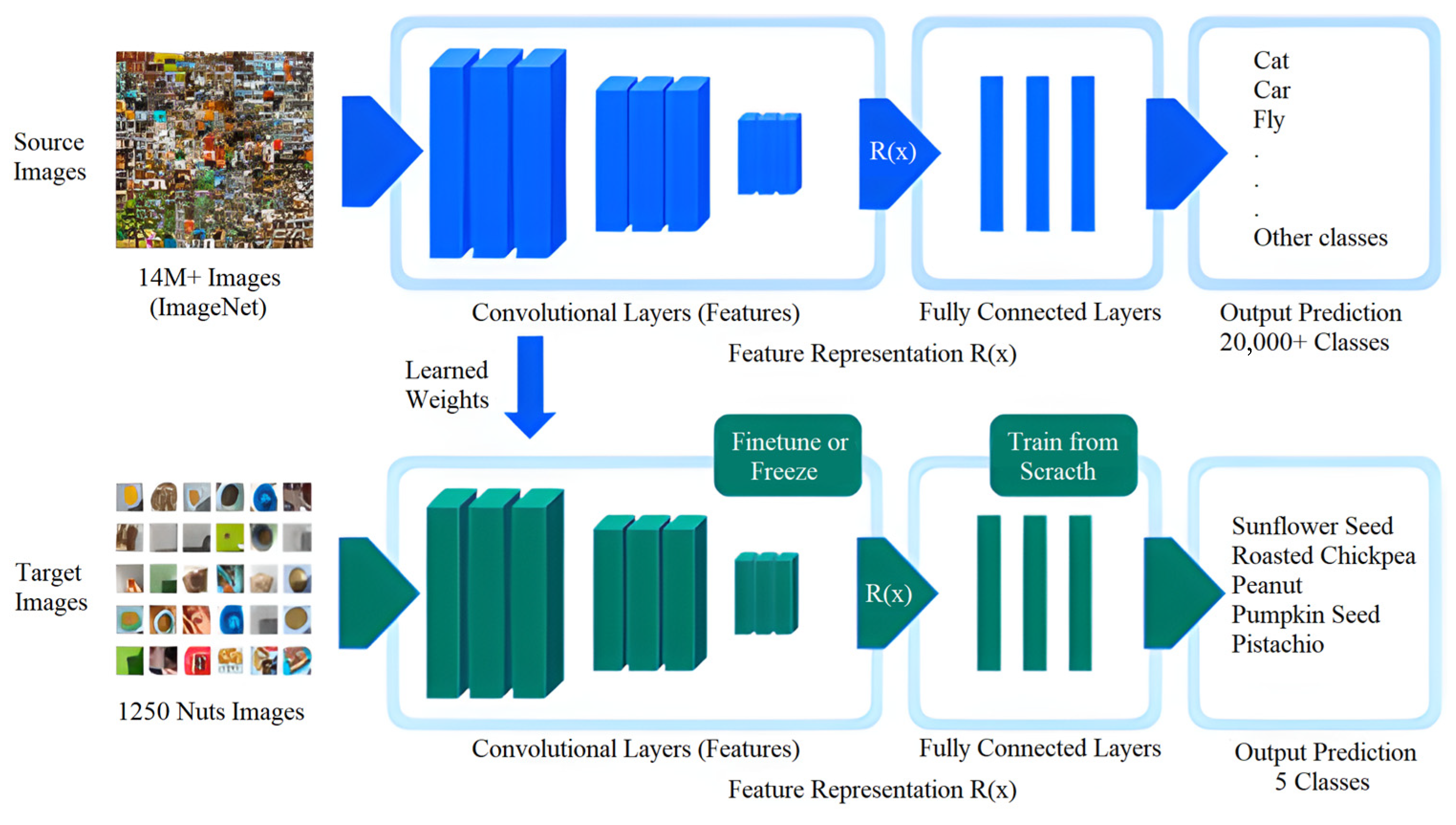

2.2. Transfer Learning

2.2.1. Inception

2.2.2. MobileNet

2.2.3. EfficientNet

2.2.4. ResNet

2.3. Performance Evaluation

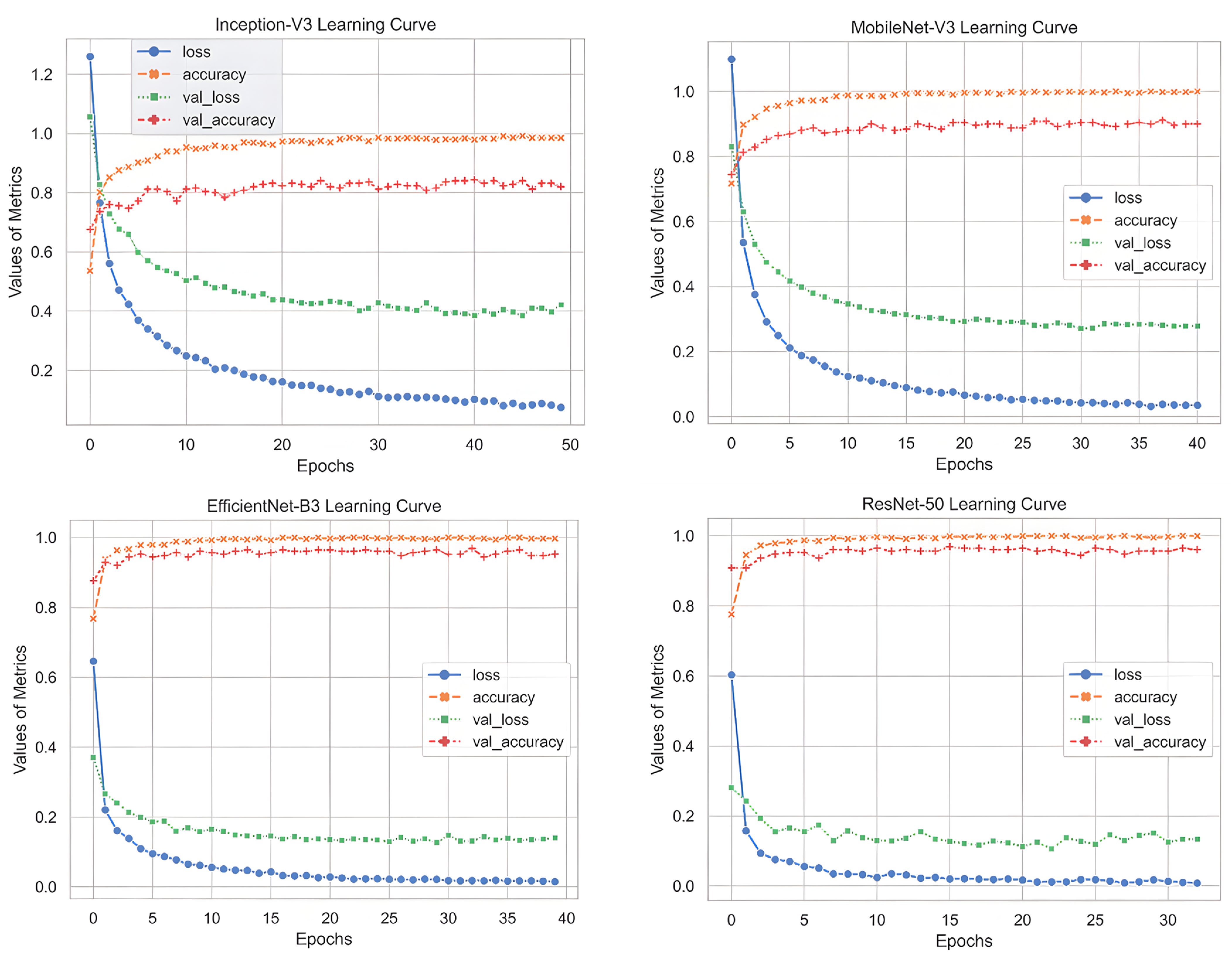

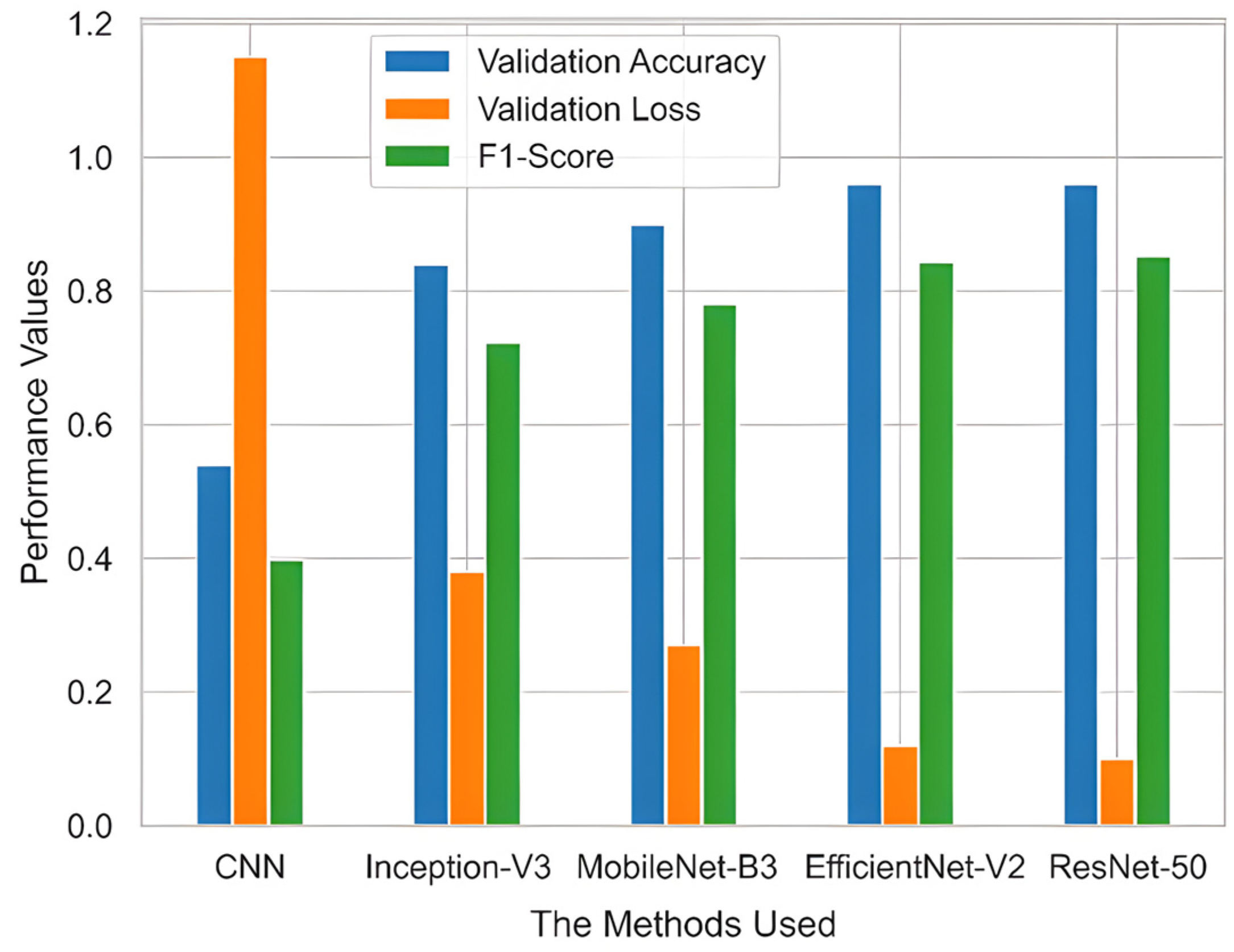

3. Results and Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Nut Type | Number of Images Captured Manually in a Nut Shop | Number of Images Obtained on the Internet | Total Number of Images |
|---|---|---|---|
| Pistachio | 193 (77.2%) | 57 (22.8%) | 250 (20%) |
| Peanut | 222 (88.8%) | 28 (11.2%) | 250 (20%) |
| Pumpkin seed | 229 (91.6%) | 21 (8.4%) | 250 (20%) |
| Sunflower seed | 201 (80.4%) | 49 (19.6%) | 250 (20%) |
| Roasted chickpea | 217 (86.8%) | 33 (13.2%) | 250 (20%) |
| Total | 1062 (84.96%) | 188 (15.04%) | 1250 (100%) |
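The percentage columns follow directly from the image counts. As a small sketch (the names `COUNTS`, `source_shares`, and `dataset_summary` are illustrative, not from the article), the per-class shares and the totals can be recomputed like this:

```python
# Image counts per class as (captured manually, obtained online),
# copied from the dataset table.
COUNTS = {
    "Pistachio": (193, 57),
    "Peanut": (222, 28),
    "Pumpkin seed": (229, 21),
    "Sunflower seed": (201, 49),
    "Roasted chickpea": (217, 33),
}


def source_shares(manual, online):
    """Return the manual and online shares of one class, in percent."""
    total = manual + online
    return round(100 * manual / total, 2), round(100 * online / total, 2)


def dataset_summary(counts):
    """Return (total manual, total online, total images) over all classes."""
    manual = sum(m for m, _ in counts.values())
    online = sum(o for _, o in counts.values())
    return manual, online, manual + online
```

For example, `source_shares(*COUNTS["Pistachio"])` gives the pistachio row's two percentages, and `dataset_summary(COUNTS)` gives the bottom-row totals.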

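| Model | Input Size | Patience Value | Number of Epochs | Optimizer Used | Loss Function Used |
|---|---|---|---|---|---|
| CNN | 300 × 300 | 7 | 50 | Adam optimizer | Sparse categorical cross entropy |
| Inception-V3 | 229 × 229 | 10 | 50 | Adam optimizer | Sparse categorical cross entropy |
| MobileNet-V3 | 224 × 224 | 10 | 50 | Adam optimizer | Sparse categorical cross entropy |
| EfficientNet-V2 | 300 × 300 | 10 | 50 | Adam optimizer | Sparse categorical cross entropy |
| ResNet-50 | 300 × 300 | 10 | 50 | Adam optimizer | Sparse categorical cross entropy |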
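The patience value in the table presumably refers to early stopping: training halts once the monitored metric has failed to improve for that many consecutive epochs, or when the epoch limit is reached. The sketch below illustrates that rule in plain Python. It assumes validation loss is the monitored quantity (the table does not say which metric is used), and the function name and any loss values passed to it are illustrative only.

```python
def train_with_early_stopping(val_losses, patience, max_epochs):
    """Simulate early stopping over a sequence of per-epoch validation losses.

    Returns (best_epoch, last_epoch), both 1-indexed. Training stops when
    `patience` consecutive epochs pass without a new best loss, or when
    `max_epochs` epochs have run.
    """
    best_loss = float("inf")
    best_epoch = 0
    last_epoch = 0
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        last_epoch = epoch
        if loss < best_loss:
            # New best: remember it and reset the patience counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # patience exhausted

    return best_epoch, last_epoch
```

With a small patience the run ends shortly after the best epoch; with a large patience the epoch limit may be reached first, in which case the last epoch equals the limit.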

| Model | Loss | Accuracy | Validation Loss | Validation Accuracy | F1-Score | Best Epoch | Last Epoch |
|---|---|---|---|---|---|---|---|
| CNN | 0.92 | 0.62 | 1.14 | 0.54 | 0.398 | 23 | 30 |
| Inception-V3 | 0.08 | 0.99 | 0.38 | 0.84 | 0.781 | 45 | 50 |
| MobileNet-V3 | 0.04 | 0.99 | 0.27 | 0.90 | 0.823 | 31 | 40 |
| EfficientNet-B3 | 0.02 | 0.99 | 0.12 | 0.96 | 0.843 | 30 | 39 |
| ResNet-50 | 0.01 | 1.00 | 0.10 | 0.96 | 0.852 | 23 | 32 |
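For reference, accuracy and F1-score can be computed from true and predicted labels as sketched below. The averaging scheme behind the reported F1-scores is not stated here, so macro averaging (the unweighted mean of per-class F1) is an assumption, and the example labels are toy data rather than the study's predictions.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)  # true positives
        fp = sum(t != c and p == c for t, p in pairs)  # false positives
        fn = sum(t == c and p != c for t, p in pairs)  # false negatives
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels for illustration only.
y_true = ["peanut", "peanut", "pistachio", "pistachio"]
y_pred = ["peanut", "pistachio", "pistachio", "pistachio"]
```

Here `accuracy(y_true, y_pred)` counts three correct predictions out of four, while `macro_f1` averages the per-class scores so that each nut type weighs equally regardless of how many samples it has.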

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Özçevik, Y. A Real-Time Nut-Type Classifier Application Using Transfer Learning. Appl. Sci. 2023, 13, 11644. https://doi.org/10.3390/app132111644

Özçevik Y. A Real-Time Nut-Type Classifier Application Using Transfer Learning. Applied Sciences. 2023; 13(21):11644. https://doi.org/10.3390/app132111644

Chicago/Turabian StyleÖzçevik, Yusuf. 2023. "A Real-Time Nut-Type Classifier Application Using Transfer Learning" Applied Sciences 13, no. 21: 11644. https://doi.org/10.3390/app132111644

APA StyleÖzçevik, Y. (2023). A Real-Time Nut-Type Classifier Application Using Transfer Learning. Applied Sciences, 13(21), 11644. https://doi.org/10.3390/app132111644