3.1. Deep Deterministic Policy Gradient Algorithm

The DDPG algorithm was proposed by the Google DeepMind team for continuous action-space control. It combines an Actor–Critic structure with the Deep Q-Network (DQN) algorithm.

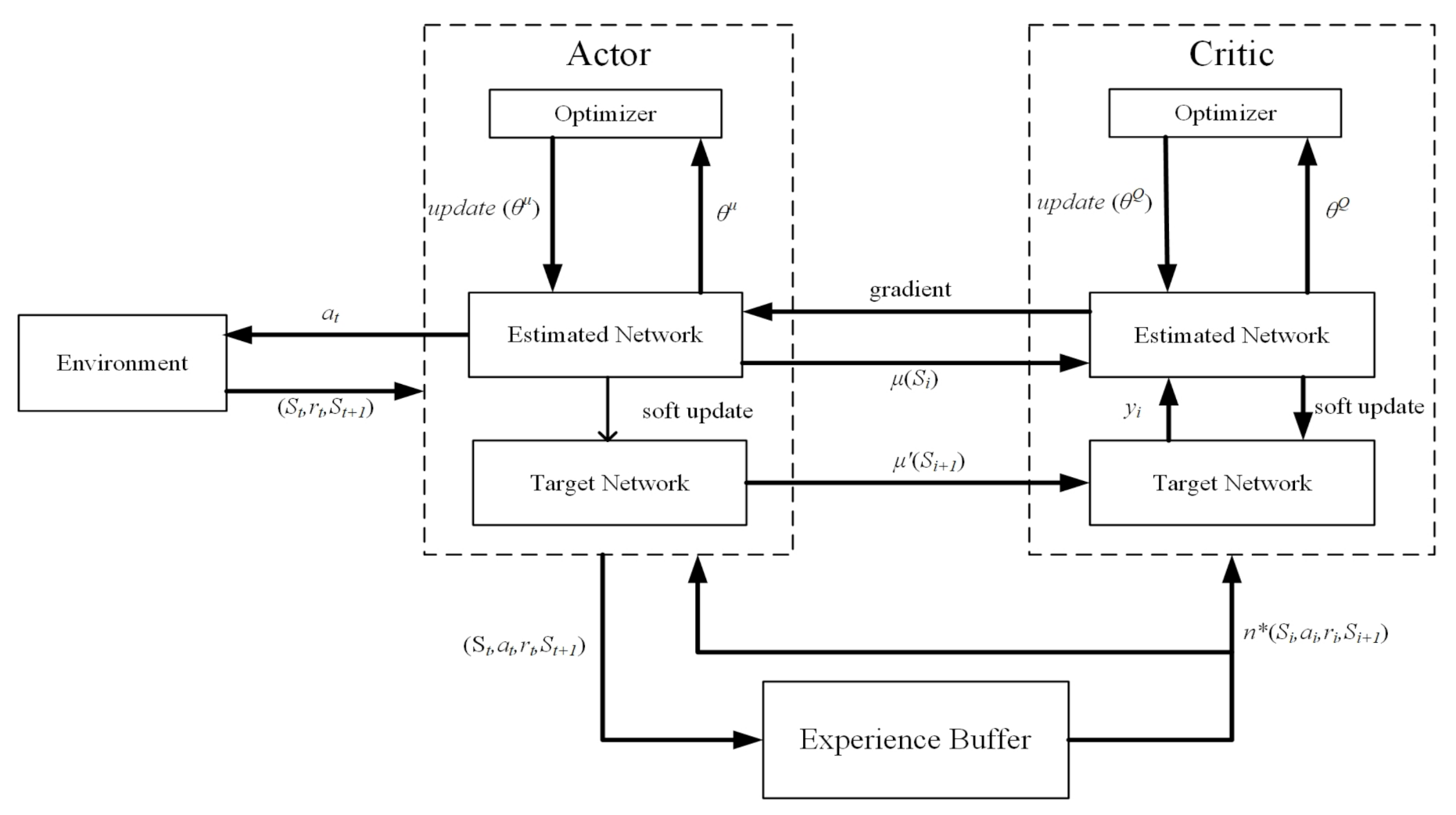

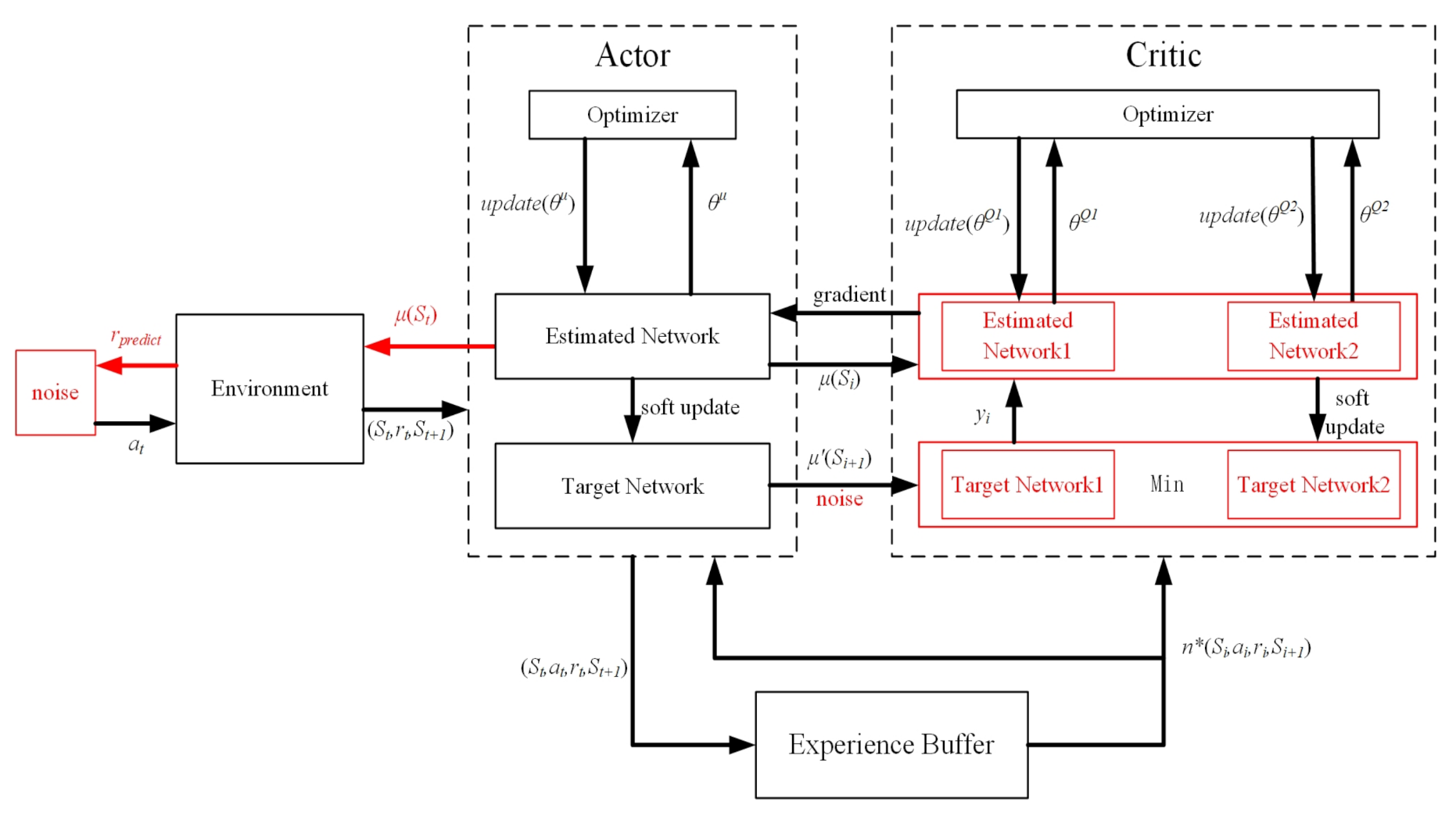

Figure 4 shows that the Actor–Critic structure consists of two parts: the actor network and the critic network. The actor is the policy network. It takes the current state as input and uses a neural network to generate the action for that state. It inherits the ability of policy-gradient methods to select actions from a continuous interval. However, a stochastic policy gradient samples actions randomly from a learned action distribution, whereas DDPG uses the output of the actor directly as a deterministic action. The critic is the value network and is updated at every single step. This avoids the low learning efficiency of policy-gradient methods that update only once per episode. Guided by the reward function, the critic learns to estimate the potential reward of the current state. It takes the state and the action output by the actor network as input and outputs an evaluation value. The critic thus evaluates the action selected by the actor and guides the update direction of the actor's network parameters, so that the updated actor chooses actions with higher value as far as possible. The evaluation value $Q(s_t, a_t)$ is the expected cumulative (discounted) reward for taking action $a_t$ in state $s_t$:

$$Q(s_t, a_t \mid \theta^{Q}) = \mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t, a_t\right]$$

where $\theta^{Q}$ denotes the parameters of the critic network and $\gamma$ is the reward decay rate discussed below.
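The quantity that $Q$ estimates can be illustrated on a single sampled trajectory. The sketch below computes the discounted cumulative reward, which is one Monte Carlo sample of $Q(s_t, a_t)$. It also checks the one-step relation that the critic is later trained to satisfy. The reward list and the value of $\gamma$ are hypothetical.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * r_{t+k} over one sampled trajectory (a Monte Carlo sample of Q)."""
    g = 0.0
    # Iterate backwards so that g_t = r_t + gamma * g_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g

rewards = [1.0, 1.0, 1.0]   # hypothetical rewards r_t, r_{t+1}, r_{t+2}
gamma = 0.9                 # hypothetical reward decay rate
q_sample = discounted_return(rewards, gamma)
# One-step relation: the return at t equals r_t plus gamma times the return from t+1
assert abs(q_sample - (rewards[0] + gamma * discounted_return(rewards[1:], gamma))) < 1e-12
print(round(q_sample, 4))  # 2.71
```

A trained critic replaces the tail of this sum with its own estimate instead of waiting for the episode to finish, which is what makes single-step updates possible.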

For each state, the deterministic policy function $\mu$ directly yields a definite action:

$$a_t = \mu(s_t \mid \theta^{\mu})$$

where $\mu$ represents the deterministic behavior policy, which is defined as a function and approximated by a neural network, and $\theta^{\mu}$ represents the parameters of the policy network that generates the deterministic action. The action $a_t$ is the rate of change of the angular velocity in state $s_t$, and the control input $u$ in (1) is adjusted by $a_t$.
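A deterministic policy network of this kind can be sketched in a few lines. The example below is a minimal NumPy forward pass, assuming a 4-dimensional state, one hidden layer of 16 units, and a scalar action bounded by a hypothetical `action_bound`. The paper's actual layer sizes and bounds are not reproduced here. The `tanh` output layer is one common way to keep a continuous action inside its range.

```python
import numpy as np

class Actor:
    """Tiny deterministic policy mu(s | theta_mu): one hidden layer, tanh-bounded output.

    Layer sizes, initialization, and the action bound are illustrative assumptions.
    """

    def __init__(self, state_dim, hidden_dim, action_bound, seed=0):
        rng = np.random.default_rng(seed)
        # theta_mu: all trainable weights of the policy network
        self.w1 = rng.normal(0.0, 0.1, size=(state_dim, hidden_dim))
        self.b1 = np.zeros(hidden_dim)
        self.w2 = rng.normal(0.0, 0.1, size=(hidden_dim, 1))
        self.b2 = np.zeros(1)
        self.action_bound = action_bound

    def __call__(self, state):
        h = np.tanh(state @ self.w1 + self.b1)
        # tanh keeps the output in [-1, 1]; scaling maps it to [-bound, bound]
        return self.action_bound * np.tanh(h @ self.w2 + self.b2)

actor = Actor(state_dim=4, hidden_dim=16, action_bound=0.5)
s_t = np.array([0.1, -0.2, 0.3, 0.0])   # hypothetical state vector
a_t = actor(s_t)                        # deterministic: same state -> same action
print(a_t.shape)  # (1,)
```

Because there is no sampling inside `__call__`, repeated calls with the same state return the same action. This property is what separates DDPG's actor from a stochastic policy.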

To increase the randomness of the DDPG algorithm and the coverage of its learning, random noise is added to the selected action so that the action value fluctuates. The action after adding noise can be expressed as:

$$a_t = \mathrm{clip}\big(\mu(s_t \mid \theta^{\mu}) + N,\ a_{\min},\ a_{\max}\big), \qquad N \sim \mathcal{N}(\mu_N, \sigma^2)$$

where $N$ denotes Gaussian noise following a normal distribution, $\mu_N$ is its expectation and $\sigma^2$ is its variance, $a_{\min}$ is the minimum value of the action, and $a_{\max}$ is the maximum value of the action.
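The noisy exploration action can be sketched as follows, assuming zero-mean noise, a hypothetical variance of 0.2, and hypothetical action bounds of $\pm 0.5$. Note that NumPy's `normal()` is parameterized by the standard deviation, so the variance must be square-rooted first.

```python
import numpy as np

def noisy_action(mu_s, noise_mean, noise_var, a_min, a_max, rng):
    """Exploration action: clip(mu(s) + N, a_min, a_max) with N ~ Normal(noise_mean, noise_var)."""
    # numpy's normal() takes the standard deviation, so convert from variance
    noise = rng.normal(noise_mean, np.sqrt(noise_var), size=np.shape(mu_s))
    return np.clip(mu_s + noise, a_min, a_max)

rng = np.random.default_rng(42)
mu_s = np.array([0.3])   # hypothetical deterministic actor output
samples = np.array([noisy_action(mu_s, 0.0, 0.2, -0.5, 0.5, rng) for _ in range(1000)])
print(samples.min() >= -0.5, samples.max() <= 0.5)  # True True
```

Clipping guarantees that the explored action stays physically admissible even when the Gaussian tail produces a large perturbation.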

DDPG is an off-policy method, which separates the behavior policy from the evaluated policy. Both the actor and the critic have an estimated network and a target network. The estimated network parameters are trained directly, whereas the target network parameters are soft-updated. Therefore, the estimated and target networks of the actor (and likewise those of the critic) share the same structure, but their parameters are updated asynchronously. The soft update formulas for the target networks of the actor and the critic are:

$$\theta^{\mu'} \leftarrow \tau\,\theta^{\mu} + (1-\tau)\,\theta^{\mu'}, \qquad \theta^{Q'} \leftarrow \tau\,\theta^{Q} + (1-\tau)\,\theta^{Q'}$$

where $\tau$ represents the soft update rate, $\theta^{\mu}$ and $\theta^{Q}$ are the estimated network parameters of the actor and the critic, and $\theta^{\mu'}$ and $\theta^{Q'}$ are the target network parameters of the actor and the critic.
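The soft update is a per-parameter exponential moving average. The sketch below applies it to parameters stored in a dictionary of NumPy arrays. The storage layout and the value $\tau = 0.1$ are hypothetical choices for illustration.

```python
import numpy as np

def soft_update(target_params, estimated_params, tau):
    """theta' <- tau * theta + (1 - tau) * theta', applied to each parameter array in place."""
    for name, theta in estimated_params.items():
        target_params[name] *= (1.0 - tau)
        target_params[name] += tau * theta

estimated = {"w": np.ones(3)}        # hypothetical estimated-network weights
target = {"w": np.zeros(3)}          # hypothetical target-network weights
for _ in range(2):
    soft_update(target, estimated, tau=0.1)
print(target["w"])  # approaches 1 geometrically: 0.1 after one step, 0.19 after two
```

A small $\tau$ makes the target network trail the estimated network slowly. This keeps the regression targets used by the critic from shifting abruptly between updates.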

The action selected by the target network of the actor and the observed environmental state are used as the input of the target network of the critic. Its output determines the update direction of the estimated critic network parameters. The critic network parameters are updated as follows:

$$y_t = r_t + \gamma\, Q'\big(s_{t+1}, \mu'(s_{t+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\big)$$

$$L = \frac{1}{n}\sum_{t}\big(y_t - Q(s_t, a_t \mid \theta^{Q})\big)^2$$

where $y_t$ represents the target evaluation value calculated by the target networks, $s_t$ indicates the environment state, $r_t$ represents the real reward, $a_t$ indicates the action selected under $s_t$, and $\mu'$ represents the deterministic policy function of the target actor. $\gamma$ denotes the reward decay rate, which controls how strongly the rewards of future steps influence the evaluation value of the current step. A larger $\gamma$ makes the critic pay more attention to future rewards, and a smaller $\gamma$ makes it pay more attention to current rewards.

$L$ denotes the loss function: the mean squared error between the target value $y_t$ and the estimated value $Q(s_t, a_t \mid \theta^{Q})$ over a mini-batch of $n$ transitions.
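The target and loss can be made concrete on a small batch. The sketch below assumes the target-critic outputs $Q'(s_{t+1}, \mu'(s_{t+1}))$ and the estimated-critic outputs $Q(s_t, a_t)$ are already available as arrays. All numbers are hypothetical. Terminal-state handling is omitted because the text does not discuss it.

```python
import numpy as np

def critic_targets(rewards, q_next_target, gamma):
    """y_t = r_t + gamma * Q'(s_{t+1}, mu'(s_{t+1})) for a mini-batch."""
    return rewards + gamma * q_next_target

def critic_loss(y, q_estimated):
    """Mean squared error between target values y and estimated Q(s_t, a_t)."""
    return np.mean((y - q_estimated) ** 2)

# Hypothetical mini-batch of n = 3 transitions
r = np.array([1.0, 0.0, -1.0])
q_next = np.array([2.0, 1.0, 0.5])     # target critic on (s_{t+1}, mu'(s_{t+1}))
q_est = np.array([2.5, 1.0, -0.5])     # estimated critic on (s_t, a_t)
y = critic_targets(r, q_next, gamma=0.9)
print(y, critic_loss(y, q_est))
```

In a full implementation, $y_t$ is treated as a constant, and only the estimated critic's parameters $\theta^{Q}$ receive gradients of this loss, typically through an automatic-differentiation framework.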

The actor network parameters are updated along the deterministic policy gradient:

$$\nabla_{\theta^{\mu}} J \approx \frac{1}{n}\sum_{t} \nabla_{a} Q(s, a \mid \theta^{Q})\big|_{s=s_t,\,a=\mu(s_t)}\, \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\big|_{s=s_t}$$

where $\nabla_{a} Q(s, a \mid \theta^{Q})$ comes from the critic and gives the update direction for the actor's network parameters, so that the updated actor chooses actions that obtain a higher evaluation value from the critic. $\nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})$ comes from the actor and indicates the update direction of the actor's parameters, so that the updated actor is more likely to select the above action.
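The chain-rule structure of this gradient, with the critic term multiplied by the actor term, can be checked on a toy problem. The sketch below uses a hypothetical linear actor $\mu(s) = w \cdot s$ and a fixed quadratic critic $Q(s, a) = -(a - c \cdot s)^2$ in place of neural networks. It compares the chain-rule gradient with finite differences and then runs gradient ascent.

```python
import numpy as np

# Toy, hypothetical setup: linear actor mu(s) = w . s and a fixed quadratic critic
# Q(s, a) = -(a - c . s)^2, whose best action for state s is a* = c . s.
rng = np.random.default_rng(0)
c = np.array([0.5, -1.0])
states = rng.normal(size=(64, 2))   # mini-batch of n = 64 states

def actor(w, s):
    return s @ w

def dq_da(s, a):
    # Gradient of the critic with respect to the action (the term from the critic)
    return -2.0 * (a - s @ c)

def policy_gradient(w, s):
    a = actor(w, s)
    # Chain rule: grad_a Q (critic) times grad_w mu (actor); here grad_w mu(s) = s
    return np.mean(dq_da(s, a)[:, None] * s, axis=0)

def objective(w, s):
    # J(w): mean critic value of the actor's actions over the batch
    return np.mean(-(actor(w, s) - s @ c) ** 2)

w = np.zeros(2)
# Check the chain-rule gradient against central finite differences of J(w)
eps = 1e-6
fd = np.array([(objective(w + eps * e, states) - objective(w - eps * e, states)) / (2 * eps)
               for e in np.eye(2)])
print(np.allclose(policy_gradient(w, states), fd, atol=1e-5))  # True

# Gradient ascent moves the actor toward the critic's preferred action a* = c . s
for _ in range(200):
    w += 0.1 * policy_gradient(w, states)
print(np.round(w, 3))
```

In DDPG the critic is itself learned, so $\nabla_a Q$ is only as reliable as the critic's current estimate. This is one reason the critic is usually updated before the actor at each step.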

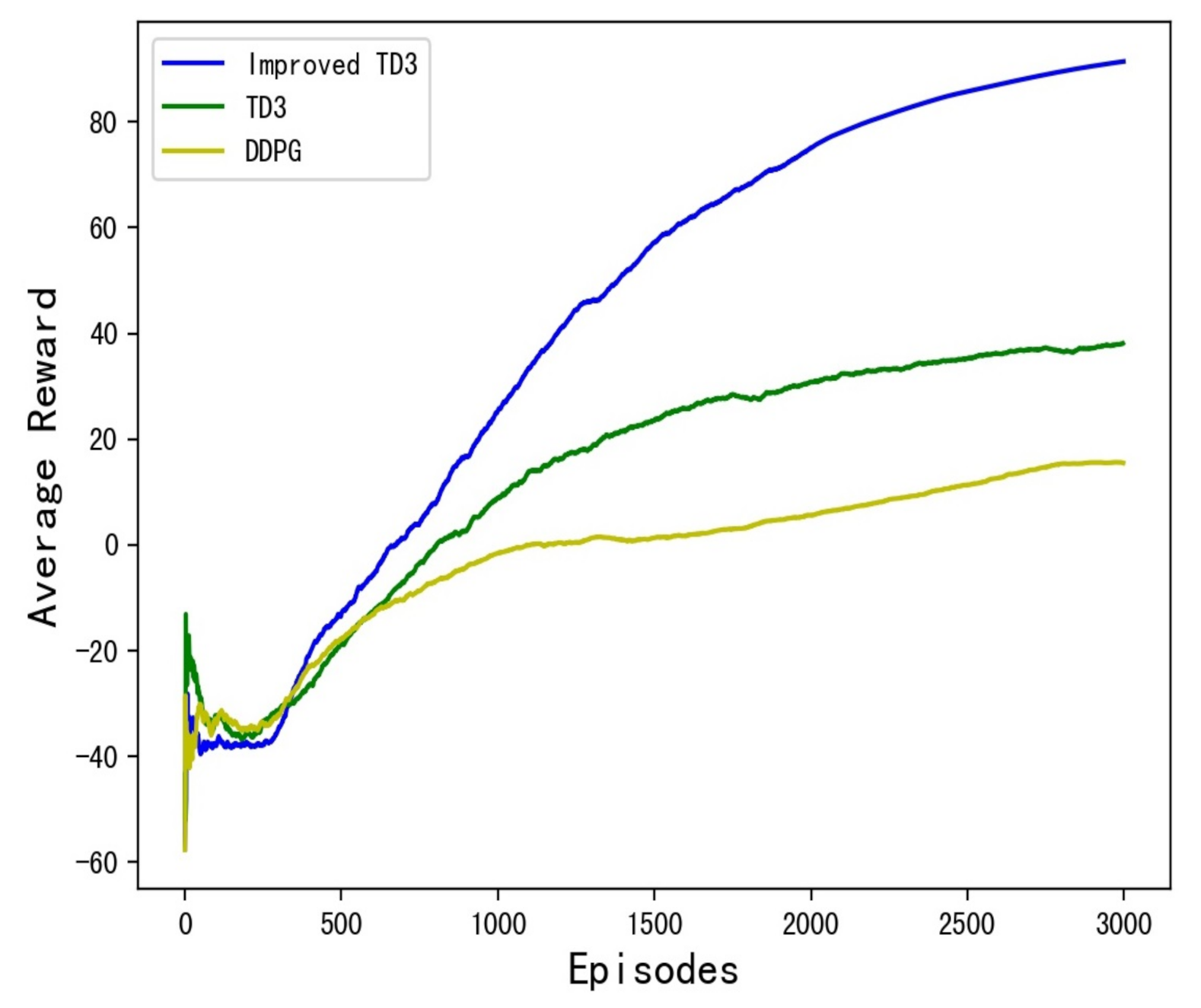

3.2. Improved Twin Delayed Deep Deterministic Policy Gradient Algorithm

Because DDPG is an off-policy method derived from DQN, its update target is built from the highest estimated value rather than from the action actually taken in the next interaction, which can lead to overestimation. In an Actor–Critic framework for continuous action control, if every step is estimated in this way, the error accumulates step by step. This can prevent the agent from finding the optimal policy and ultimately keep the algorithm from converging. The twin delayed deep deterministic policy gradient (TD3) algorithm was designed to mitigate this overestimation error of DDPG.

The actor has two networks, an estimated network and a target network, whereas the critic has two estimated networks and two target networks, as schematically illustrated in Figure 5. Thus, the critic has four networks with the same structure. The state and action are the inputs of each critic network, and its output is the value of executing that action in the current environment state. As an optimization algorithm, TD3 adopts an Actor–Critic architecture similar to that of DDPG and is likewise used to solve problems with continuous action spaces. Its improvements over DDPG are mainly reflected in the following three aspects:

The first is the double critic network structure. In TD3, the critic has two estimated networks and two target networks. The smaller of the two target-network values is selected as the update target for both Estimated Critic1 and Estimated Critic2, which alleviates overestimation. TD3 constructs the loss function in the same way as DDPG:

$$y = r + \gamma \min_{j=1,2} Q'_j\big(s', a' \mid \theta^{Q'_j}\big), \qquad L_j = \frac{1}{n}\sum\big(y - Q_j(s, a \mid \theta^{Q_j})\big)^2, \quad j = 1, 2$$

where $s$ and $s'$ are state quantities used as the input of the actor, and $a$ and $a'$ are the corresponding actions generated in the current environment, with $a'$ selected by the target actor for $s'$.
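The clipped double-Q target can be sketched on a two-sample batch, assuming the outputs of Target Critic1 and Target Critic2 on $(s', a')$ are given as arrays. All values are hypothetical. The element-wise minimum is taken per transition, not over the whole batch.

```python
import numpy as np

def td3_targets(rewards, q1_next, q2_next, gamma):
    """y = r + gamma * min(Q'_1, Q'_2): the smaller target-critic value limits overestimation."""
    return rewards + gamma * np.minimum(q1_next, q2_next)

def critic_losses(y, q1_est, q2_est):
    """Both estimated critics regress toward the same shared target y."""
    return np.mean((y - q1_est) ** 2), np.mean((y - q2_est) ** 2)

r = np.array([1.0, 0.5])             # hypothetical rewards
q1_next = np.array([5.0, 2.0])       # Target Critic1 outputs on (s', a')
q2_next = np.array([3.0, 4.0])       # Target Critic2 outputs on (s', a')
y = td3_targets(r, q1_next, q2_next, gamma=0.9)
print(y)   # uses 3.0 for the first sample and 2.0 for the second
```

Using the minimum biases the target slightly downward. This trade-off is intended to counteract the upward bias that accumulates in DDPG.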

The second is the delayed update of the actor. In TD3, the critic network is updated at every step, whereas the actor parameters are updated in a delayed manner at a lower frequency: the actor is updated once after the critic has been updated several times. Delaying the actor update reduces unnecessary repeated updates. It also reduces the errors accumulated over multiple updates.
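The delayed schedule amounts to a modulo check on the step counter. The sketch below counts how many critic and actor updates occur, assuming a hypothetical delay $d$ and step count.

```python
def training_schedule(total_steps, d):
    """Return (critic_updates, actor_updates) when the actor and targets update every d steps."""
    critic_updates, actor_updates = 0, 0
    for t in range(1, total_steps + 1):
        critic_updates += 1          # critic: updated at every step
        if t % d == 0:               # actor (and target networks): delayed update
            actor_updates += 1
    return critic_updates, actor_updates

print(training_schedule(total_steps=10, d=2))  # (10, 5)
```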

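The third is target policy smoothing regularization. Noise drawn from a normal distribution is added to the action selected by the target network, as in (11):

$$a' = \mathrm{clip}\big(\mu'(s' \mid \theta^{\mu'}) + \epsilon,\ a_{\min},\ a_{\max}\big), \qquad \epsilon \sim \mathrm{clip}\big(\mathcal{N}(0, \tilde{\sigma}^2),\ -c,\ c\big) \tag{11}$$

where $\tilde{\sigma}^2$ is the variance of the smoothing noise and $c$ bounds its magnitude. With this smoothing, the value function is updated more smoothly, and the robustness of both the network and the algorithm is improved.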
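Target policy smoothing clips twice: once on the noise itself and once on the resulting action. The sketch below shows this on a batch of identical target-actor outputs. The smoothing scale, noise bound $c$, and action bounds are hypothetical values, not the paper's settings.

```python
import numpy as np

def smoothed_target_action(mu_target_next, sigma_tilde, c, a_min, a_max, rng):
    """a' = clip(mu'(s') + clip(eps, -c, c), a_min, a_max), eps ~ Normal(0, sigma_tilde^2)."""
    # Clip the noise first so that a' stays close to the target actor's output
    eps = np.clip(rng.normal(0.0, sigma_tilde, size=np.shape(mu_target_next)), -c, c)
    # Then clip the action itself to the admissible range
    return np.clip(mu_target_next + eps, a_min, a_max)

rng = np.random.default_rng(1)
mu_next = np.full(1000, 0.2)      # hypothetical target-actor outputs for a batch of next states
a_next = smoothed_target_action(mu_next, sigma_tilde=0.2, c=0.5, a_min=-0.5, a_max=0.5, rng=rng)
print(a_next.min(), a_next.max())
```

Because the noise is bounded by $c$, the smoothed action never drifts more than $c$ from $\mu'(s')$. The critic's target therefore averages over a small neighborhood of the target action instead of a single point.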

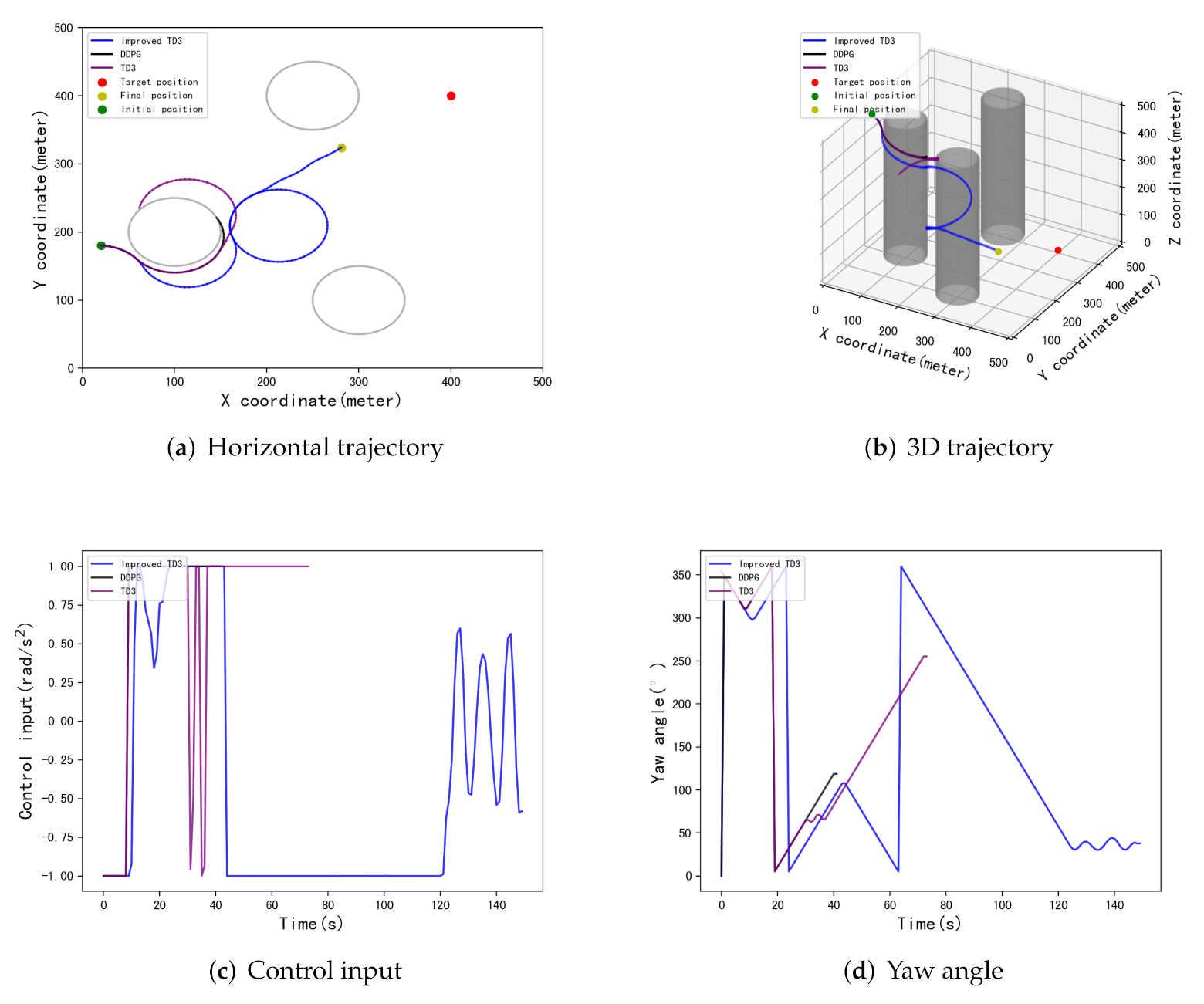

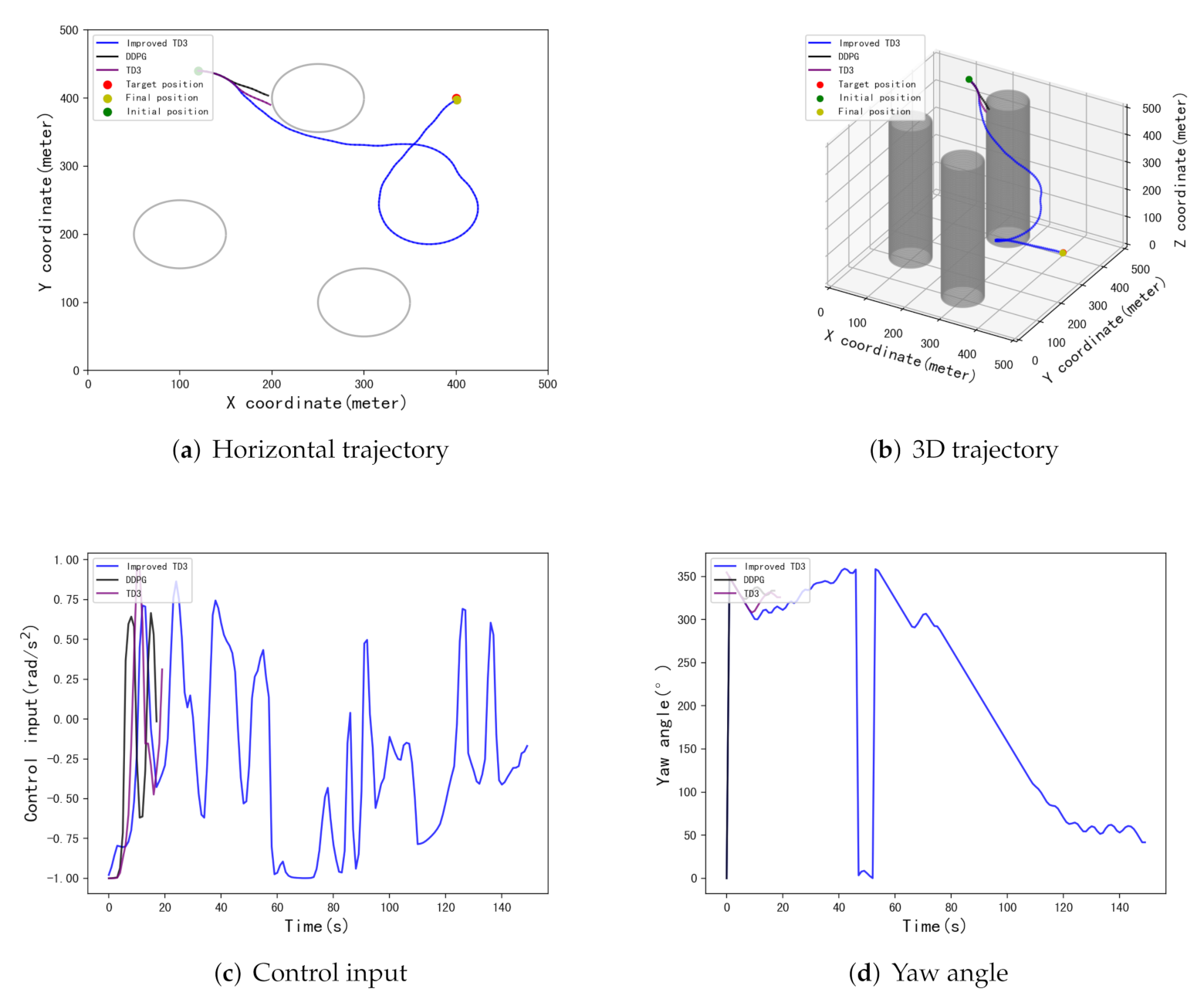

TD3 solves the overestimation problem of DDPG and facilitates the exploration of better policies, which improves the success rate and landing accuracy. However, when we applied TD3 to trajectory planning for the parafoil delivery system, our existing simulation results and experimental data showed that TD3 still has a larger landing error than traditional trajectory optimization algorithms. This is difficult to solve merely by increasing the amount of training. The parafoil does not necessarily explore a better policy each time; it may even explore a policy worse than the existing one and store it in the experience pool. The cause is the uncertainty introduced by adding noise to the action. In DDPG and TD3, noise is added to the action to make it fluctuate, which increases the randomness of the algorithm and the coverage of learning in the hope of exploring more policies. However, the noisy action is not necessarily better. It may obtain a lower reward, so that a poor experience is stored in the experience pool, which hinders the algorithm from learning a better policy.

To solve this problem, we propose an improved twin delayed deep deterministic policy gradient algorithm, which dynamically adjusts the scale of the added noise by evaluating the reward of the selected action in advance. This method can effectively reduce the negative impact of noise uncertainty on policy exploration and make full use of good policies.

The action $a_t$ selected in state $s_t$ first obtains the reward $r'_t$ fed back by the environment without any added noise, which pre-evaluates the value of the action. The purpose of this pre-evaluation is to judge whether the action is a good policy. If the action has a high value, the noise scale is reduced to preserve this policy as much as possible. If the action has a low value, the noise scale is increased to explore better policies. The variance $\sigma^2$ of the Gaussian noise is determined by $r'_t$: the higher the reward $r'_t$, the smaller the variance $\sigma^2$, and the lower the reward $r'_t$, the larger the variance $\sigma^2$. In this work, the maximum value of $\sigma^2$ is limited to 2 and the minimum value to 0.4. If the real-time reward is less than 0, the exploration intensity should be increased, so $\sigma^2$ for an action with negative reward is set to 2. When the real-time reward is greater than 0, $\sigma^2$ decreases along the positive direction of the $x$-axis (increasing reward), and its rate of change gradually decreases. However, $\sigma^2$ cannot be reduced to 0, because a small amount of noise must be retained to maintain exploration. The agent then explores using the action $\hat{a}_t$ obtained after adding noise. This makes better use of the good policies explored by the agent and, to some extent, avoids storing poorly explored steps in the experience pool. In (12), the scale of the variance $\sigma^2$ is selected according to the pre-evaluated reward value. The improved TD3 algorithm is described in Algorithm 1, where the additional step 6 reflects our proposed modification:

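Algorithm 1 Improved TD3

- 1: Initialize estimated critic network parameters $\theta^{Q_1}$, $\theta^{Q_2}$ and estimated actor network parameter $\theta^{\mu}$
- 2: Initialize target network parameters $\theta^{Q'_1} \leftarrow \theta^{Q_1}$, $\theta^{Q'_2} \leftarrow \theta^{Q_2}$, $\theta^{\mu'} \leftarrow \theta^{\mu}$
- 3: Set initial hyper-parameter values according to the task requirements: experience replay buffer $B$, mini-batch size $n$, actor network learning rate, critic network learning rate, maximum number of episodes $E$, soft update rate $\tau$
- 4: **for** $t = 1$ **to** $T$ **do**
  - 5: Select action $a_t = \mu(s_t \mid \theta^{\mu})$
  - 6: According to the pre-evaluated reward $r'_t$ of action $a_t$, select the noise variance $\sigma^2$, add noise $N$ to obtain the noisy action $\hat{a}_t$, and observe the reward $r_t$ in the current state and the new state $s_{t+1}$
  - 7: Store the transition tuple $(s_t, \hat{a}_t, r_t, s_{t+1})$ of this step in $B$
  - 8: Sample a mini-batch of $n$ transitions $(s, a, r, s')$ from $B$
  - 9: Compute target actions $a' = \mathrm{clip}\big(\mu'(s' \mid \theta^{\mu'}) + \epsilon,\ a_{\min},\ a_{\max}\big)$, $\epsilon \sim \mathrm{clip}\big(\mathcal{N}(0, \tilde{\sigma}^2), -c, c\big)$
  - 10: Compute Q-targets $y = r + \gamma \min_{j=1,2} Q'_j(s', a' \mid \theta^{Q'_j})$
  - 11: Update the estimated critic parameters by minimizing the loss $L_j = \frac{1}{n}\sum \big(y - Q_j(s, a \mid \theta^{Q_j})\big)^2$, $j = 1, 2$
  - 12: **if** $t \bmod d = 0$ **then**
    - 13: Update the actor policy using the sampled policy gradient:
    - 14: $\nabla_{\theta^{\mu}} J \approx \frac{1}{n}\sum \nabla_{a} Q_1(s, a \mid \theta^{Q_1})\big|_{a=\mu(s)}\, \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})$
    - 15: Update the parameters of the target networks of the critic and the actor:
    - 16: for $j = 1, 2$: $\theta^{Q'_j} \leftarrow \tau\,\theta^{Q_j} + (1-\tau)\,\theta^{Q'_j}$
    - 17: $\theta^{\mu'} \leftarrow \tau\,\theta^{\mu} + (1-\tau)\,\theta^{\mu'}$
  - 18: **end if**
- 19: **end for**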