Around-View-Monitoring-Based Automatic Parking System Using Parking Line Detection

Abstract

1. Introduction

2. Related Work

3. Proposed Method

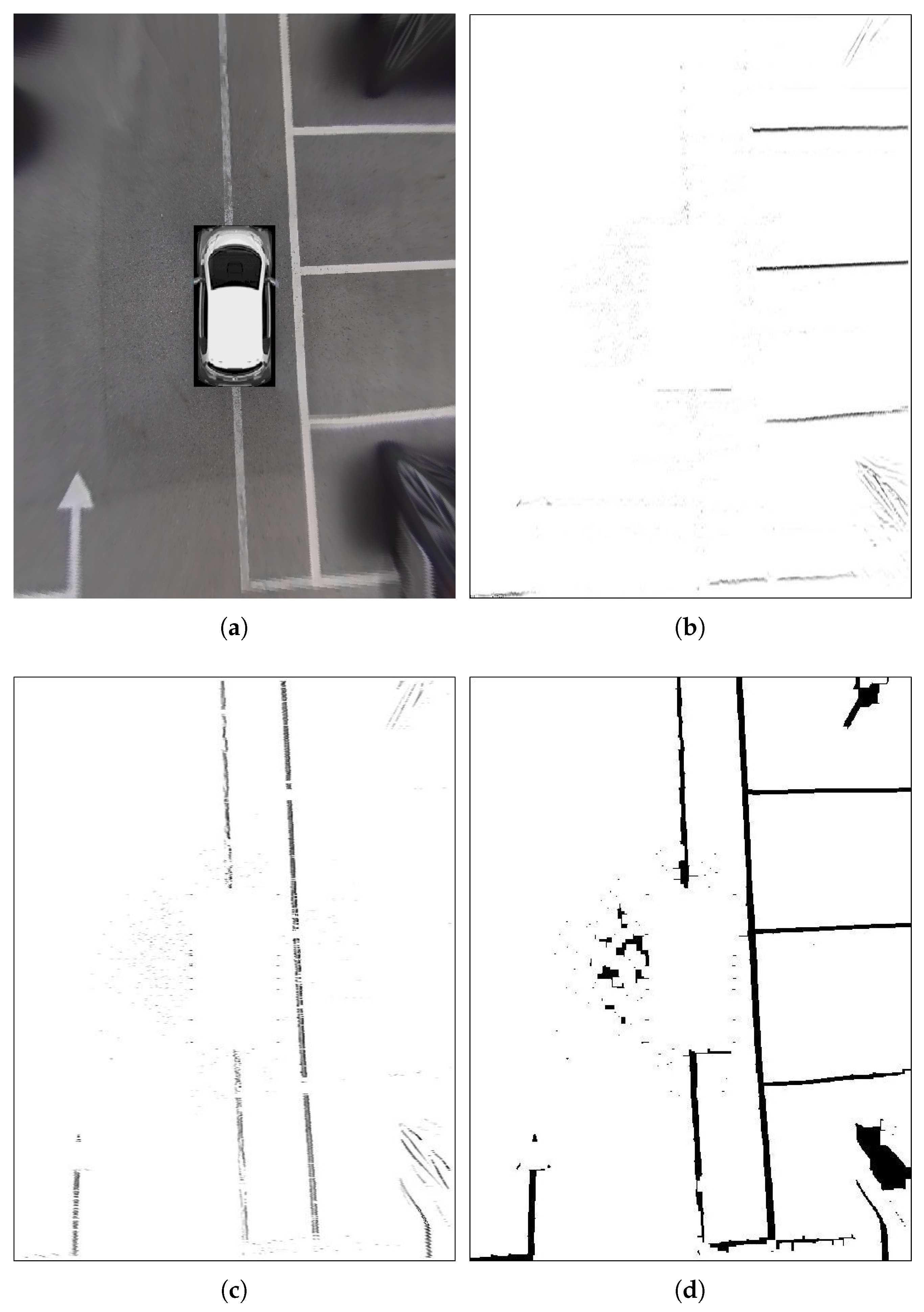

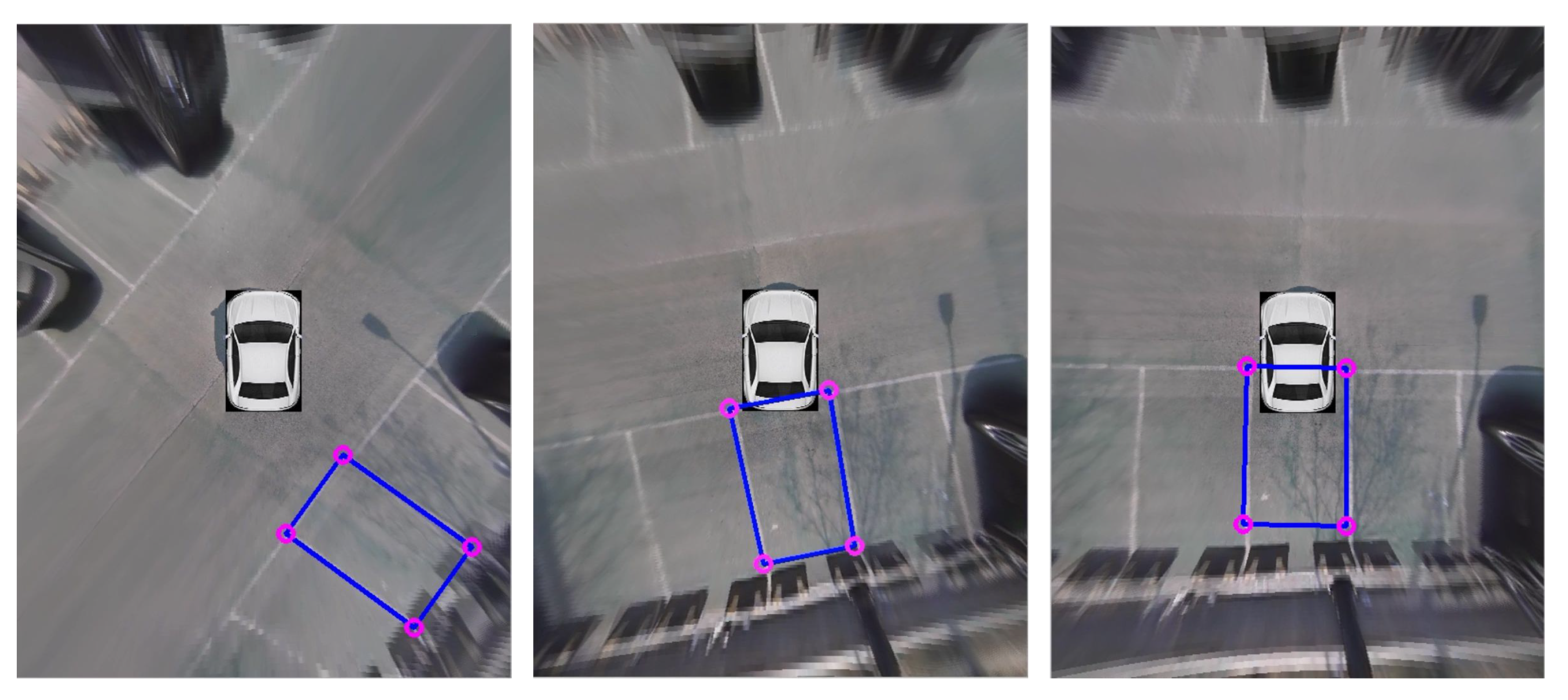

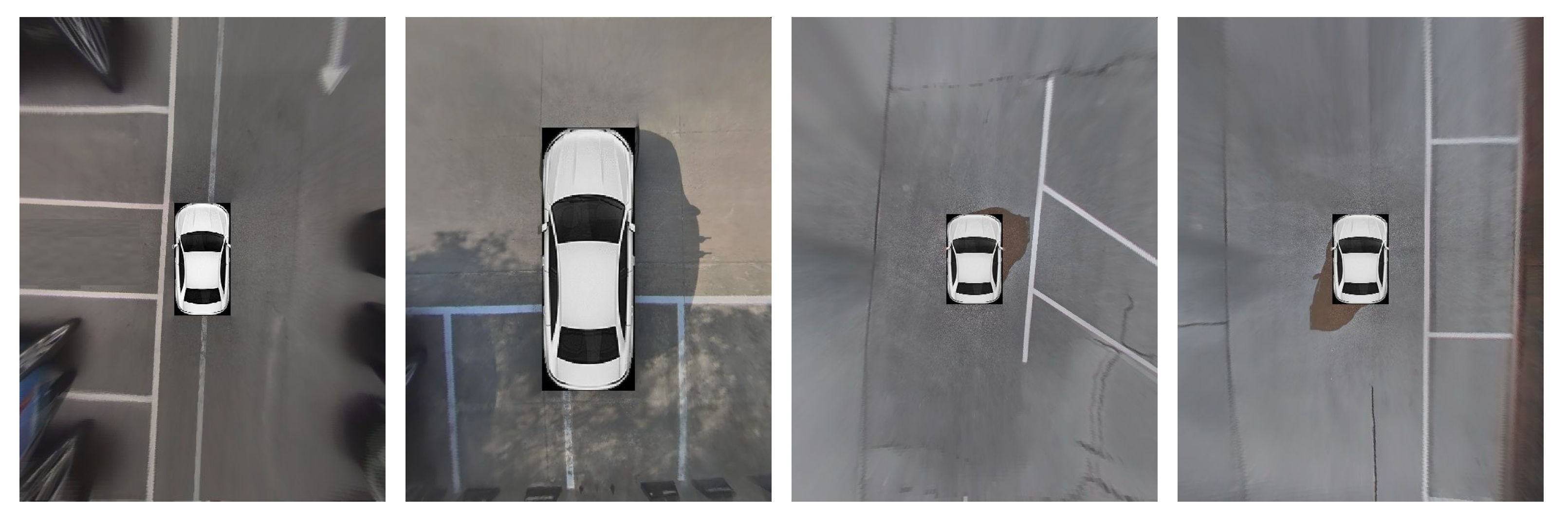

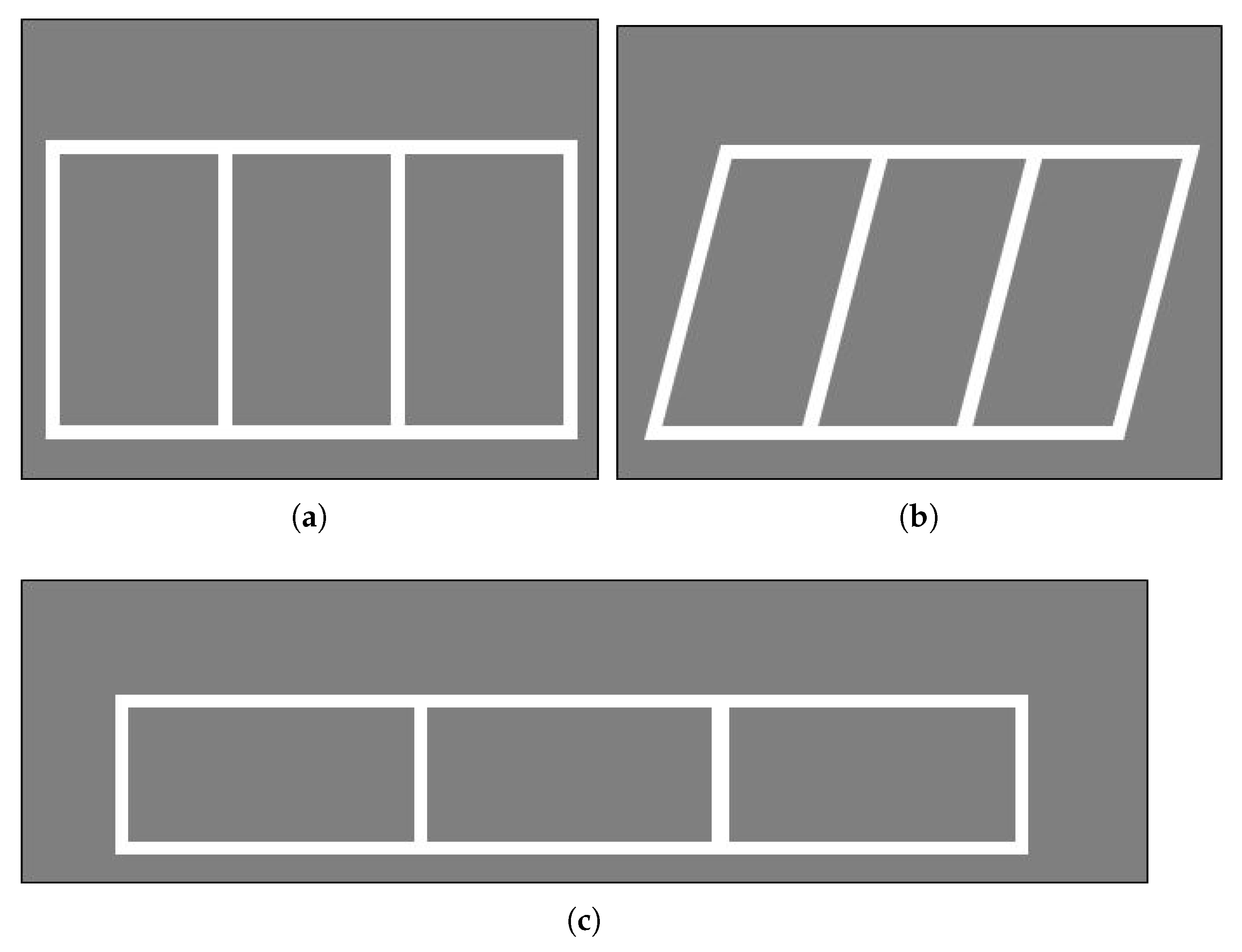

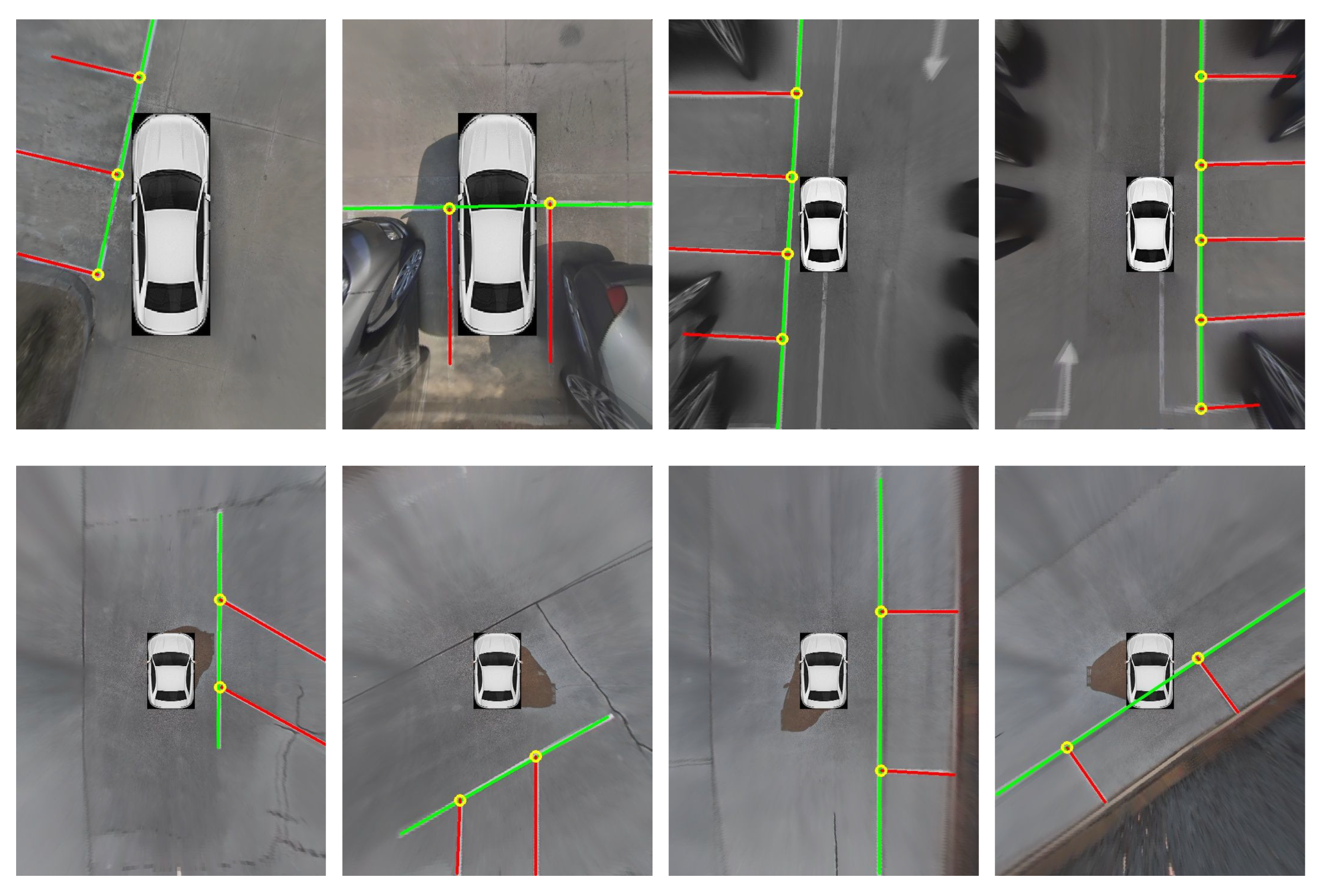

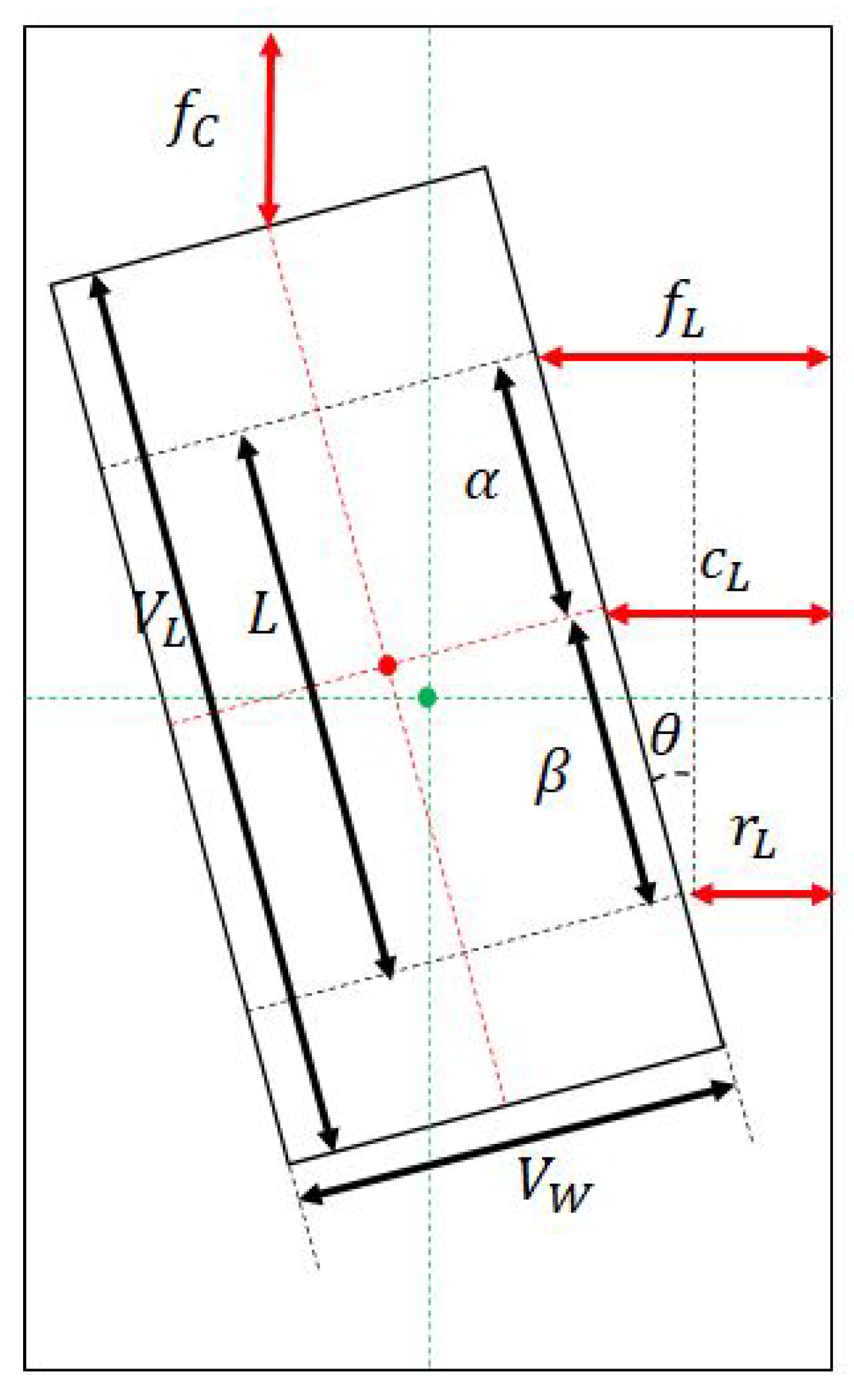

3.1. Parking Line Detection

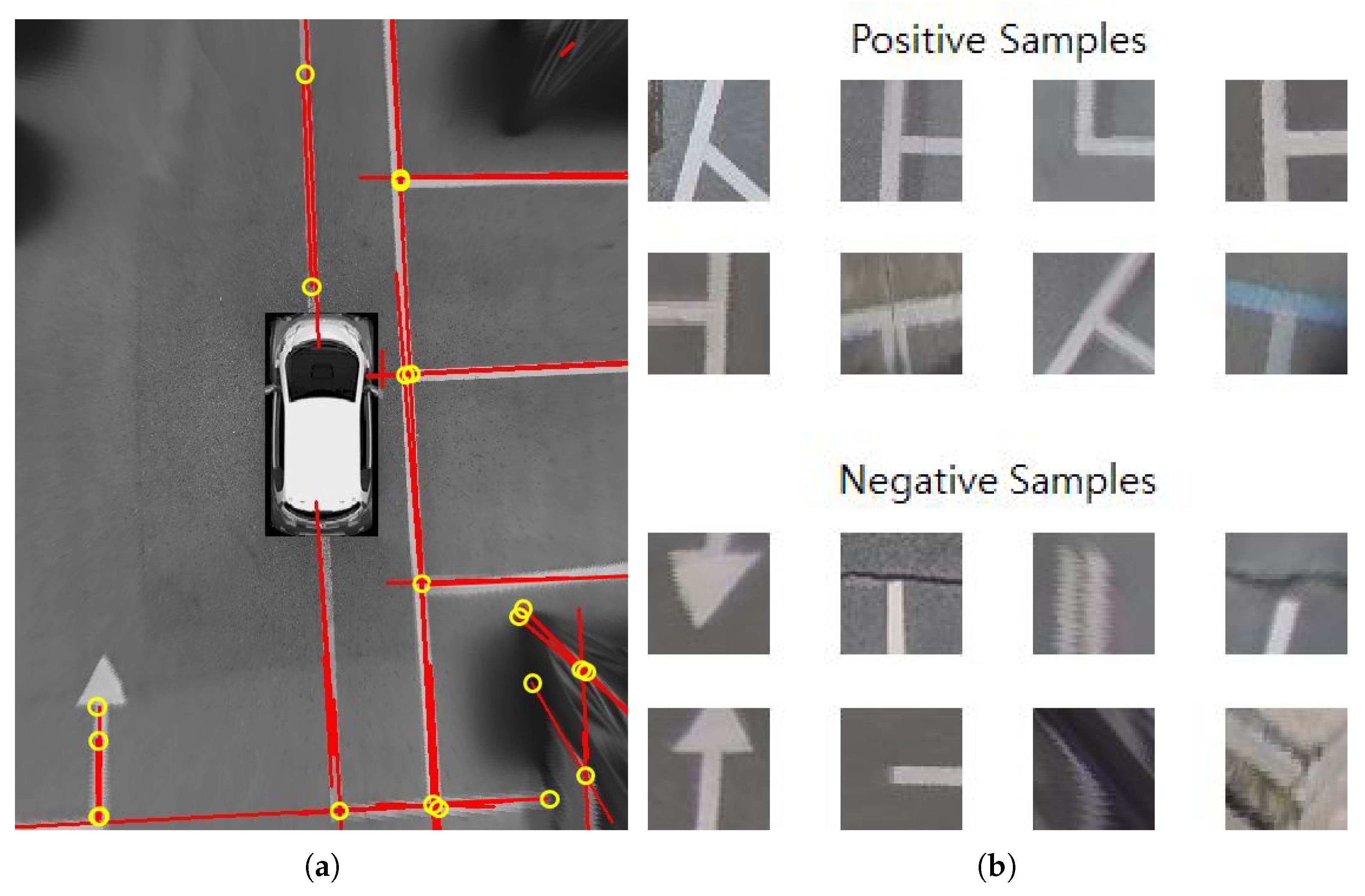

3.1.1. Parking Line Candidate Extraction Using the Hough Transform

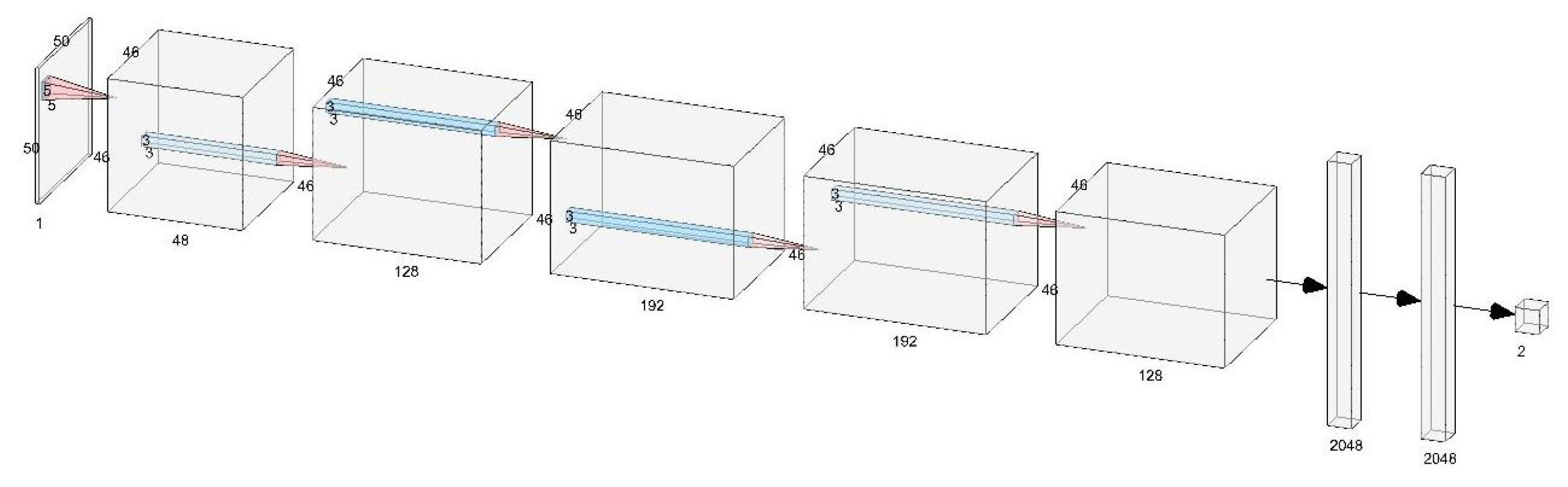
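The candidate-extraction step named in this heading relies on the Hough transform. The sketch below is a generic illustration only, not the authors' implementation. It votes edge points into a standard (ρ, θ) accumulator, where ρ = x cos θ + y sin θ, and returns the strongest peaks as line candidates. Several details are assumptions: the input format (a list of edge-pixel coordinates, e.g. from an edge detector run on the bird's-eye AVM image), the 1° angular and 1-pixel distance resolution, and the name `hough_lines`.

```python
import math
from collections import Counter


def hough_lines(points, theta_steps=180, rho_res=1.0, top_k=1):
    """Return the top_k (rho, theta_deg, votes) peaks of a (rho, theta) Hough accumulator.

    points: iterable of (x, y) edge-pixel coordinates (hypothetical input format).
    theta_steps: number of angle bins over [0, 180) degrees (assumed 1-degree bins).
    rho_res: distance bin size in pixels (assumed 1 pixel).
    """
    acc = Counter()
    for x, y in points:
        # Each edge point votes once for every angle bin.
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            acc[(round(rho / rho_res), t)] += 1
    # The most-voted (rho, theta) cells are the line candidates.
    return [
        (r * rho_res, 180.0 * t / theta_steps, votes)
        for (r, t), votes in acc.most_common(top_k)
    ]


# Usage: 20 edge points on the vertical line x = 10.
pts = [(10, y) for y in range(20)]
print(hough_lines(pts, top_k=3))
```

In practice an optimized routine such as OpenCV's `cv2.HoughLines` or `cv2.HoughLinesP` would normally replace this pure-Python loop; the version here only makes the voting explicit.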

3.1.2. Parking Line Detection Using a CNN

3.1.3. Parking Slot Tracking
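Parking slot tracking keeps a detected slot's position up to date as the vehicle moves. One common approach, assumed here rather than taken from the text, is to propagate the slot corners with vehicle odometry: integrate speed and yaw rate into an ego-motion increment, then re-express each corner in the new vehicle frame. The midpoint dead-reckoning model, the vehicle-frame convention (x forward, y left, yaw counter-clockwise positive), and the names `ego_motion` and `propagate_slot` are all hypothetical.

```python
import math


def ego_motion(speed, yaw_rate, dt):
    """One dead-reckoning step (midpoint approximation, assumed model).

    speed in m/s, yaw_rate in rad/s, dt in s.
    Returns (dx, dy, dyaw): translation expressed in the vehicle frame at the
    start of the step, and the heading change in radians.
    """
    dyaw = yaw_rate * dt
    dist = speed * dt
    return dist * math.cos(dyaw / 2.0), dist * math.sin(dyaw / 2.0), dyaw


def propagate_slot(corners, dx, dy, dyaw):
    """Re-express slot corners from the old vehicle frame in the new vehicle frame.

    Applies p_new = R(-dyaw) * (p_old - t), where t = (dx, dy).
    """
    c, s = math.cos(dyaw), math.sin(dyaw)
    moved = []
    for x, y in corners:
        px, py = x - dx, y - dy          # remove the vehicle translation
        moved.append((c * px + s * py,   # rotate by -dyaw
                      -s * px + c * py))
    return moved


# Usage: the vehicle drives straight at 2 m/s for 0.5 s,
# so a corner 5 m ahead is now 4 m ahead.
step = ego_motion(2.0, 0.0, 0.5)
print(propagate_slot([(5.0, -1.0), (5.0, -3.5)], *step))
```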

3.2. Motion Planning and Tracking

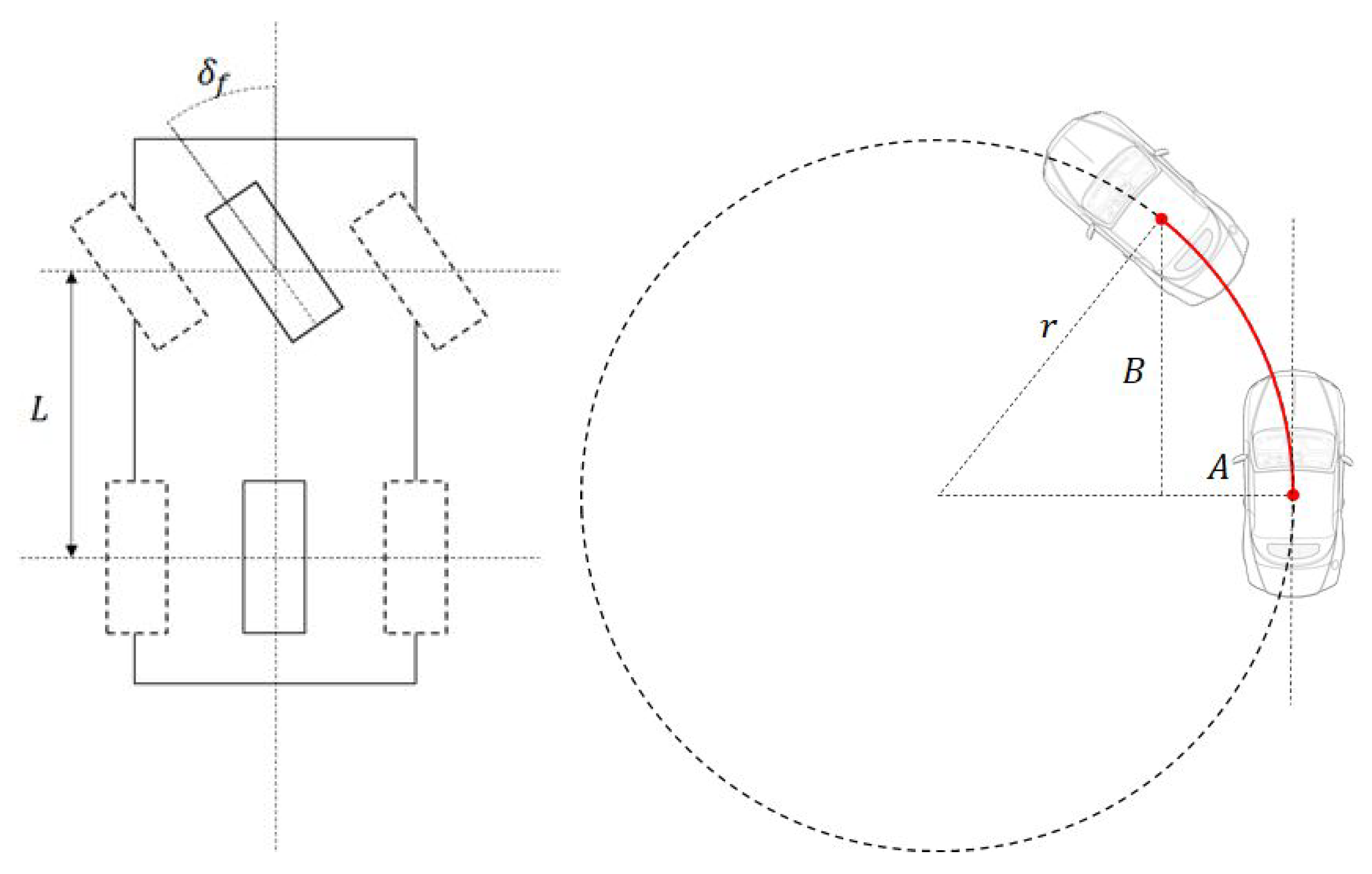

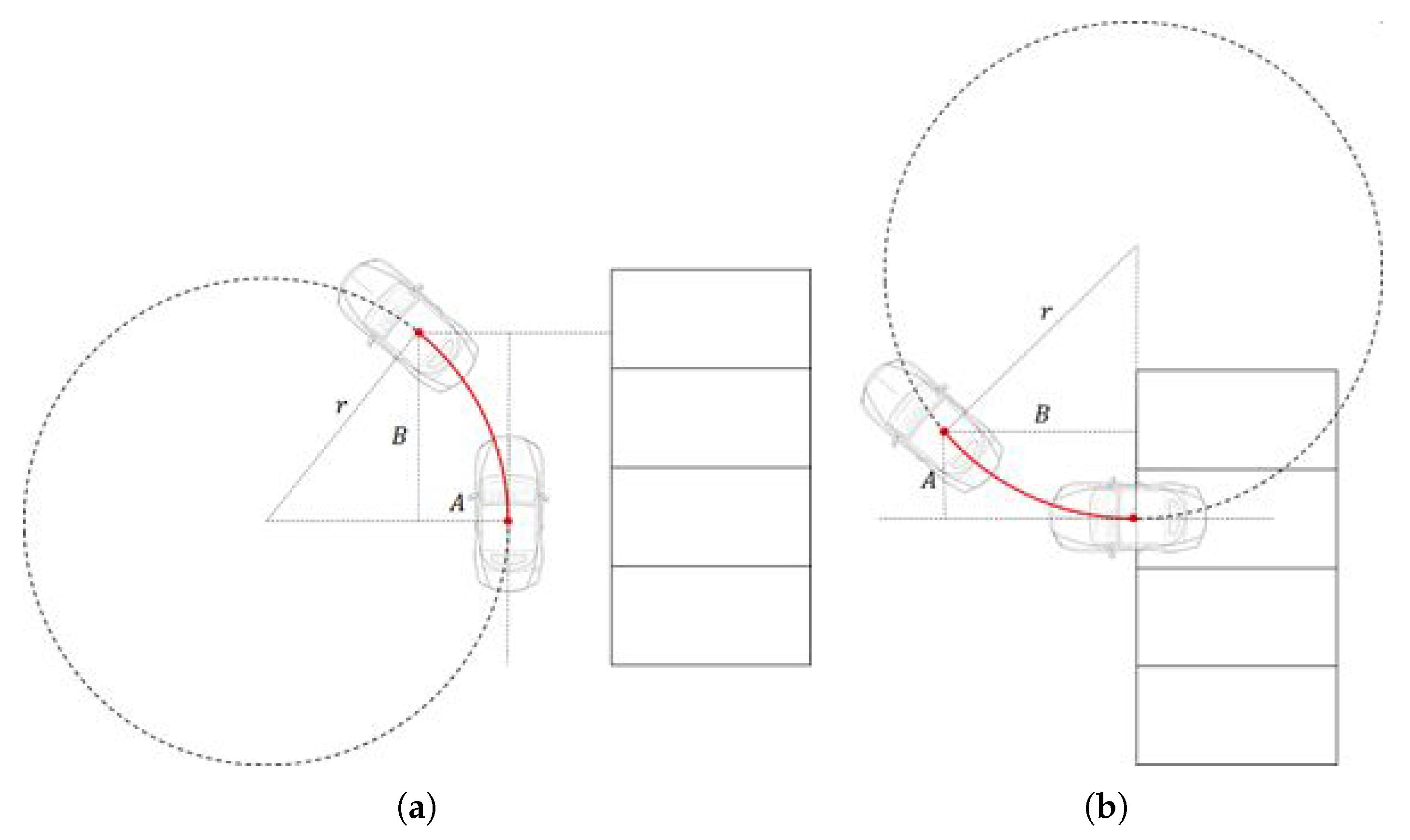

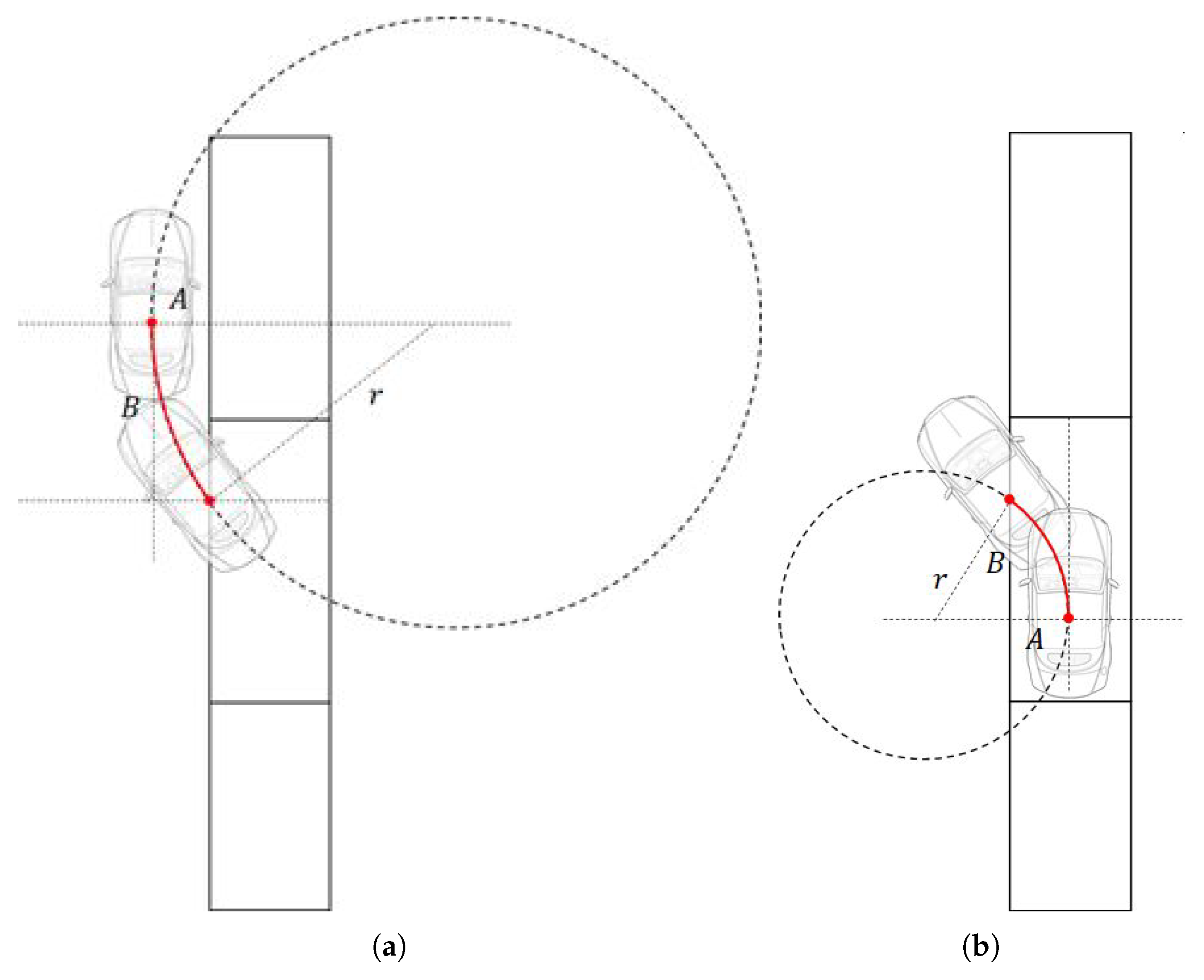

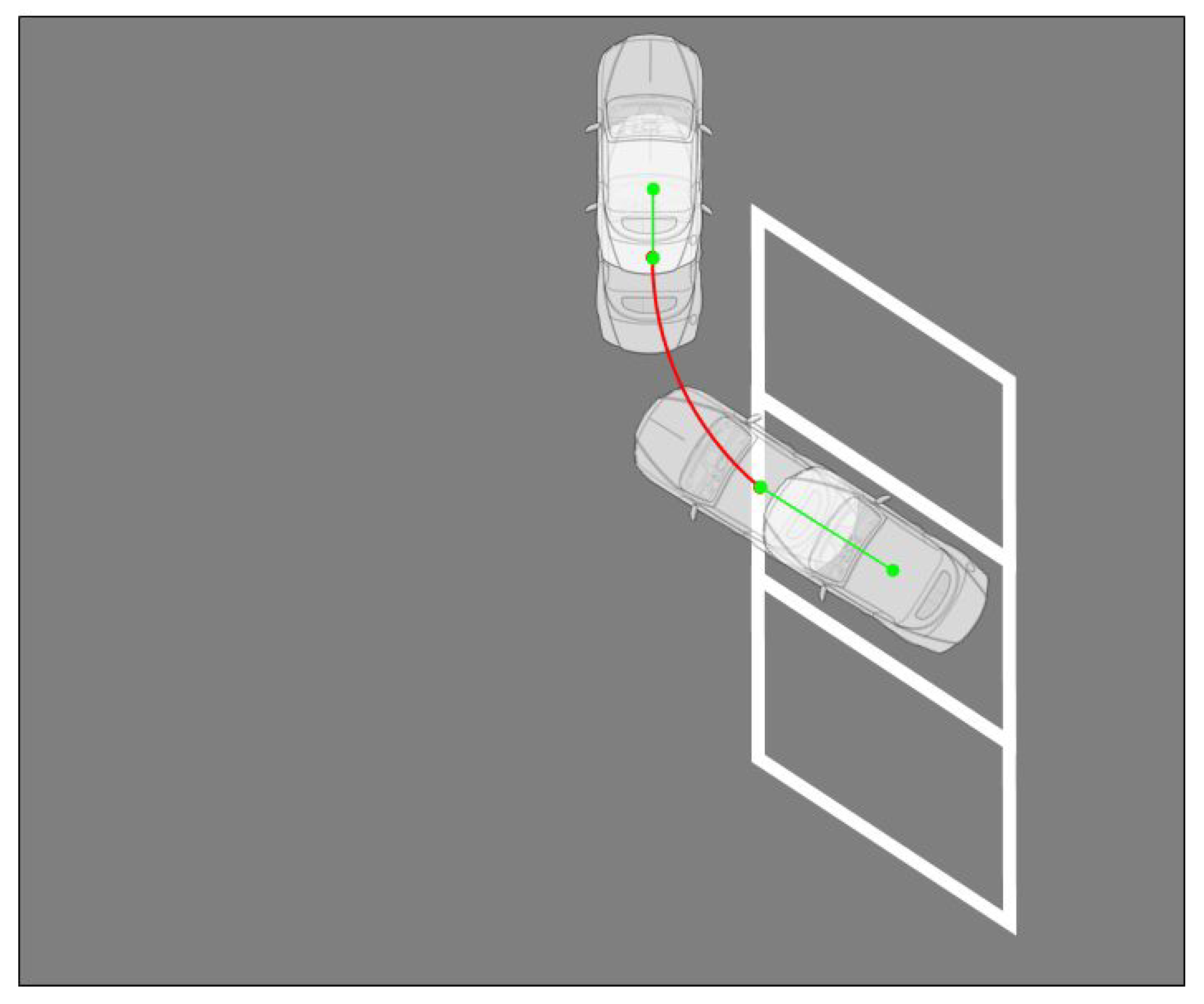

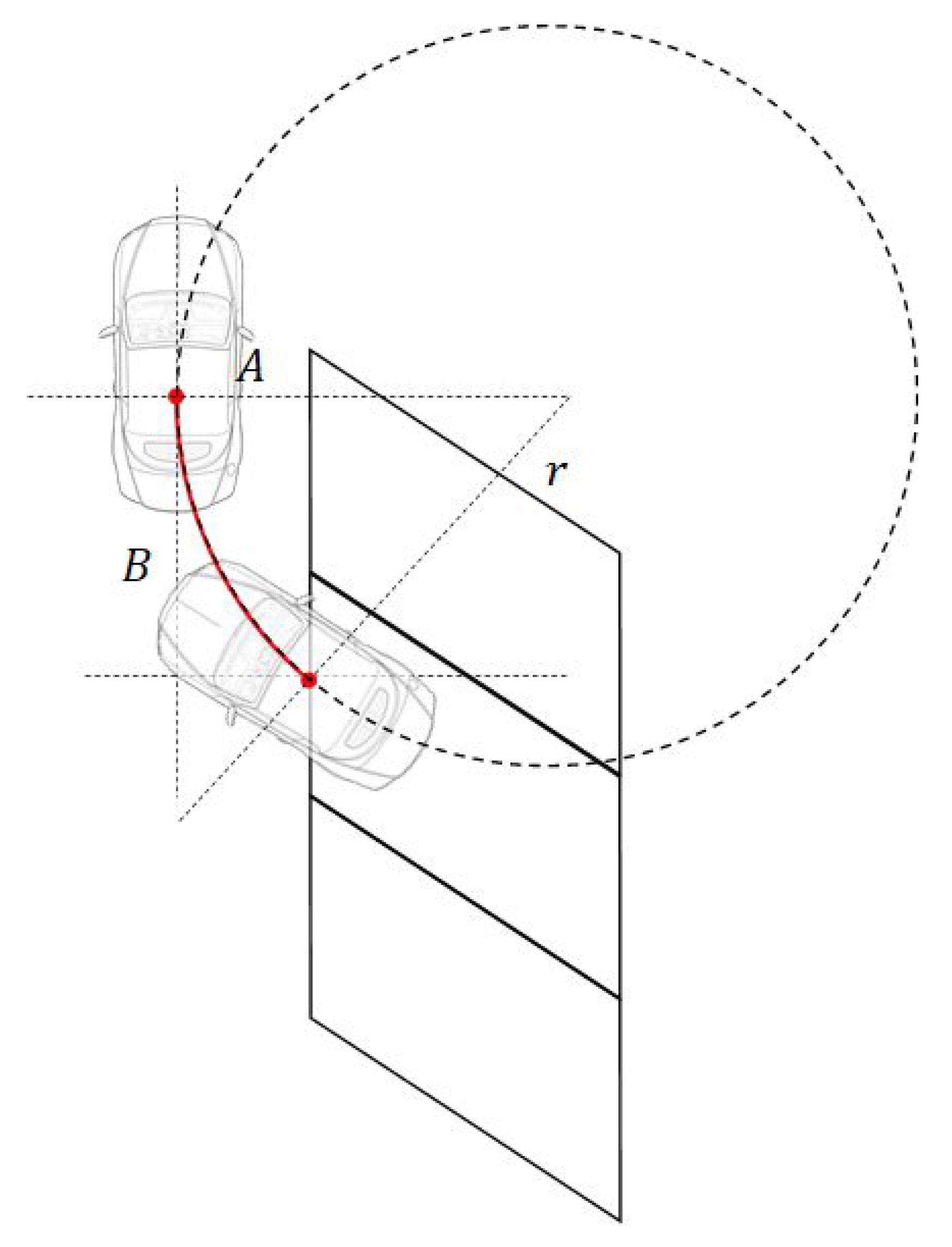

3.2.1. Motion Planning

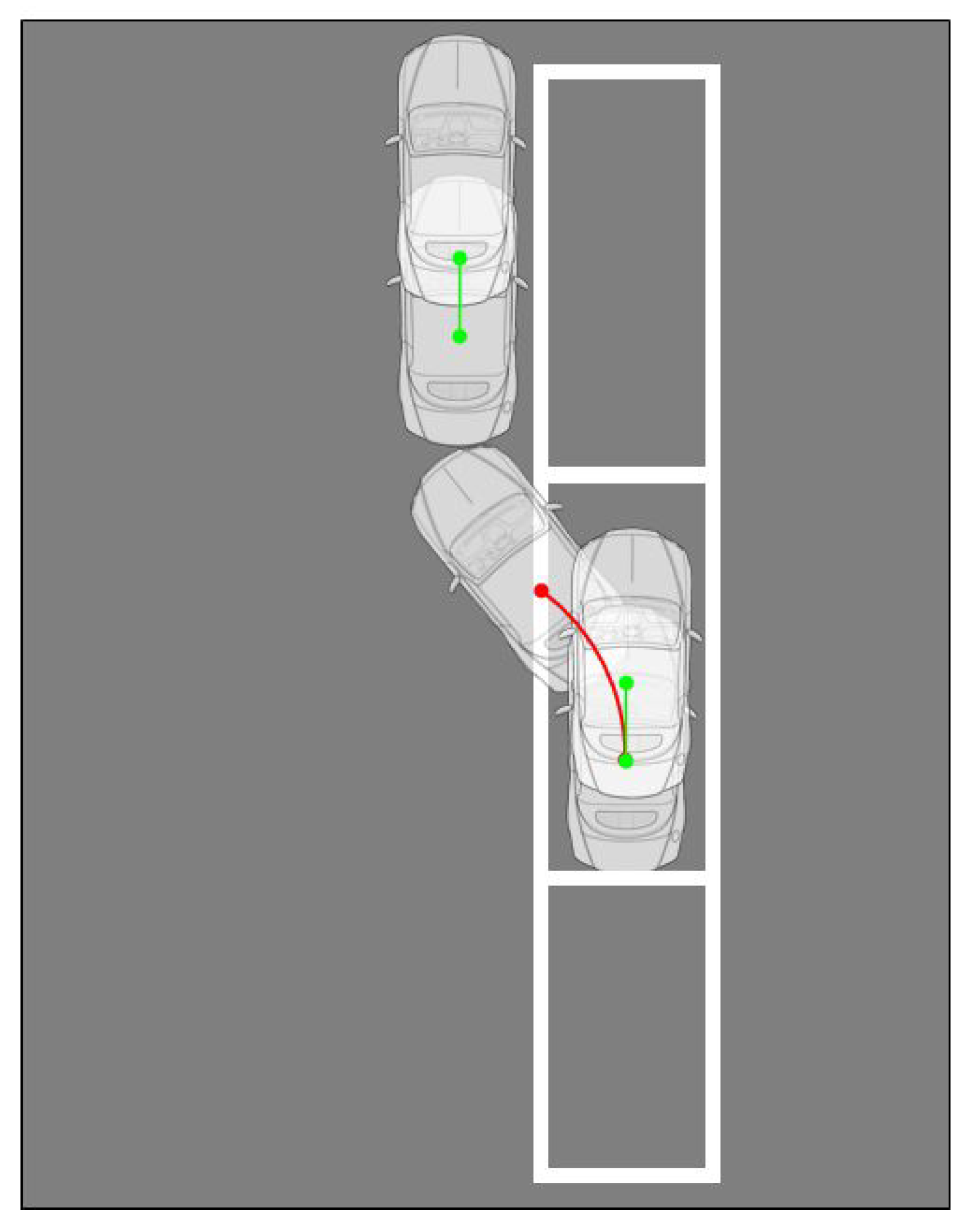

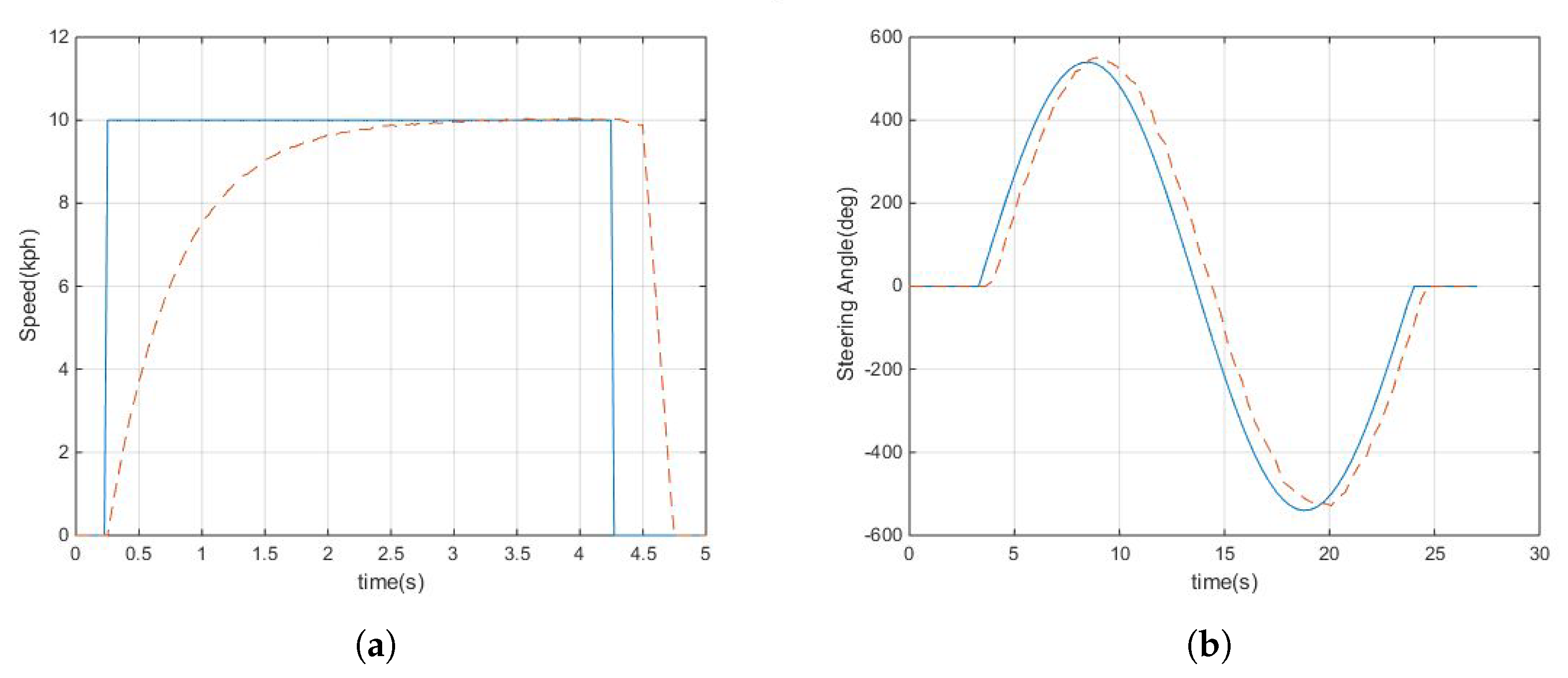

3.2.2. Motion Tracking

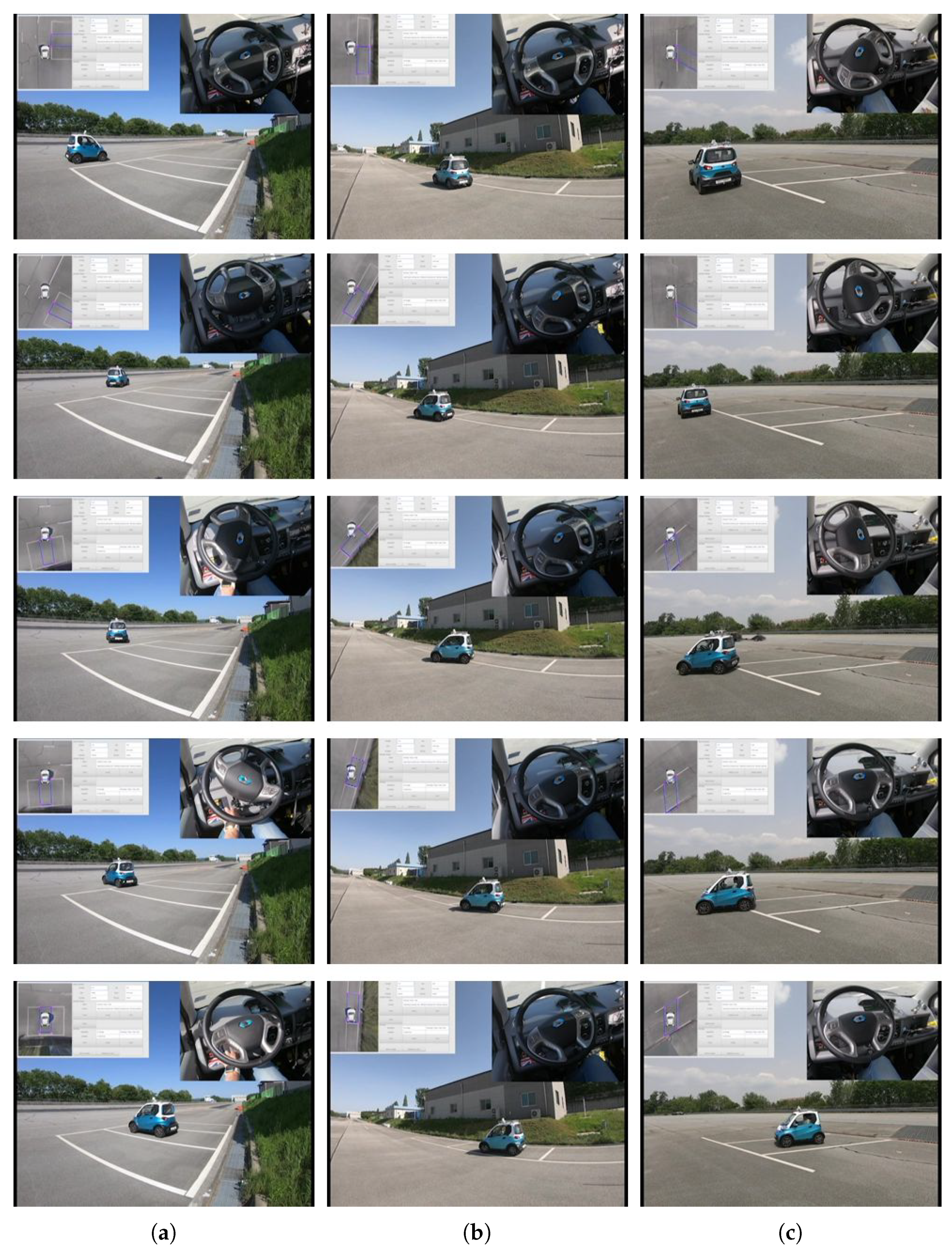

4. Experimental Results

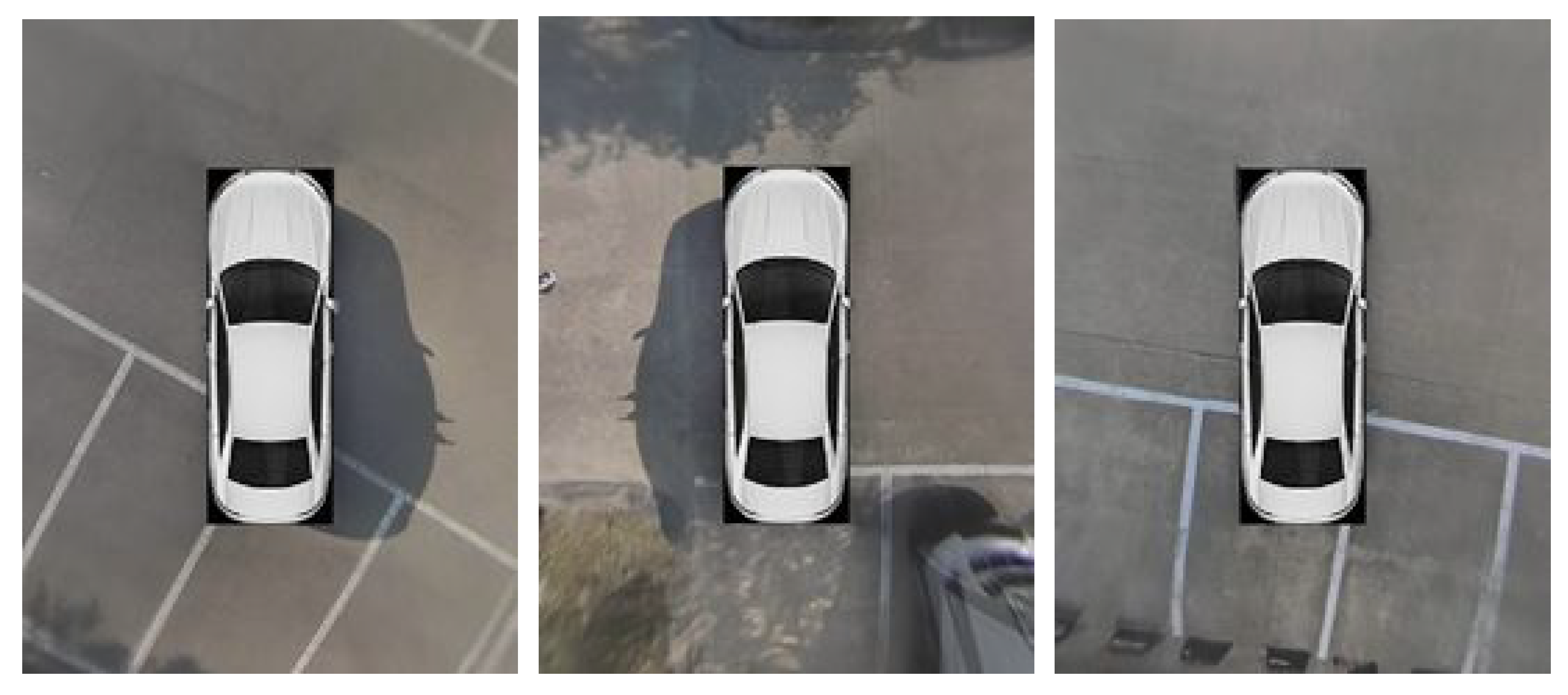

4.1. Experimental Environment

4.2. Experimental Results

5. Conclusions and Future Work

5.1. Conclusions

5.2. Future Work

Author Contributions

Funding

Conflicts of Interest


| Parking Slot Type | No. of Parking Slots | No. of Detected Slots | No. of Missed Detections | No. of Wrong Detections | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| Perpendicular | 468 | 440 | 47 | 19 | 95.68 | 89.96 |
| Angle | 259 | 243 | 27 | 11 | 95.47 | 89.57 |
| Parallel | 93 | 88 | 12 | 7 | 92.04 | 87.10 |
| Sum | 820 | 771 | 86 | 37 | 95.20 | 89.51 |

| Parking Slot Type | No. of Parking Slots | No. of Detected Slots | No. of Missed Detections | No. of Wrong Detections | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| Perpendicular | 468 | 458 | 23 | 13 | 97.16 | 95.08 |
| Angle | 259 | 253 | 14 | 8 | 96.84 | 94.59 |
| Parallel | 93 | 90 | 7 | 4 | 95.56 | 92.47 |
| Sum | 820 | 801 | 44 | 25 | 96.88 | 94.63 |
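The precision and recall columns in the detection tables are consistent with these definitions: correct detections = detected − wrong, precision = correct / detected, recall = correct / total slots, and missed detections = total − correct. A small helper (hypothetical name `detection_metrics`) reproduces these values from the raw counts:

```python
def detection_metrics(total_slots, detected, wrong):
    """Return (precision %, recall %, missed count) from slot-detection counts.

    total_slots: ground-truth parking slots
    detected:    slots reported by the detector
    wrong:       reported slots that are false detections
    """
    correct = detected - wrong                 # true positives
    precision = 100.0 * correct / detected
    recall = 100.0 * correct / total_slots
    missed = total_slots - correct             # ground-truth slots never correctly detected
    return precision, recall, missed


# Perpendicular row of the first detection table.
p, r, m = detection_metrics(468, 440, 19)
print(f"precision={p:.2f}%  recall={r:.2f}%  missed={m}")
```

For the perpendicular row of the first table, this gives approximately 95.68 % precision, 89.96 % recall, and 47 missed slots, matching the reported values.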

| Parking Type | No. | X Offset | Y Offset | Heading Angle |
|---|---|---|---|---|
| Perpendicular parking | 1 | 0.053 | 0.204 | 0.1 |
| | 2 | 0.27 | 0.211 | 0.5 |
| | 3 | 0.075 | 0.197 | 0.4 |
| | 4 | 0.11 | 0.023 | 0.2 |
| | 5 | 0.104 | 0.024 | 0.2 |
| | 6 | 0.064 | 0.01 | 0.2 |
| | 7 | 0.028 | 0.173 | 0.4 |
| | 8 | 0.072 | 0.162 | 0.3 |
| | 9 | 0.083 | 0.074 | 0.2 |
| | 10 | 0.053 | 0.204 | 0.1 |
| Angle parking | 1 | 0.069 | 0.083 | 2.4 |
| | 2 | 0.143 | 0.004 | 1.7 |
| | 3 | 0.2 | 0.016 | 1.1 |
| | 4 | 0.178 | 0.053 | 1.4 |
| | 5 | 0.112 | 0.002 | 1.3 |
| | 6 | 0.191 | 0.067 | 2.1 |
| | 7 | 0.136 | 0.048 | 2.2 |
| | 8 | 0.105 | 0.009 | 1.2 |
| | 9 | 0.254 | 0.007 | 1.0 |
| | 10 | 0.154 | 0.023 | 1.6 |
| Parallel parking | 1 | 0.073 | 0.108 | 0.8 |
| | 2 | 0.096 | 0.114 | 1.2 |
| | 3 | 0.058 | 0.099 | 0.7 |
| | 4 | 0.041 | 0.104 | 0.6 |
| | 5 | 0.109 | 0.089 | 1.1 |
| | 6 | 0.172 | 0.104 | 0.7 |
| | 7 | 0.138 | 0.098 | 1.2 |
| | 8 | 0.077 | 0.087 | 1.4 |
| | 9 | 0.048 | 0.112 | 1.0 |
| | 10 | 0.04 | 0.097 | 0.9 |
| Average | | 0.1027 | 0.0889 | 0.94 |
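The final-pose table reports an X offset, a Y offset, and a heading angle error for each parking trial. Because the reference frame and units of these quantities are not stated, the sketch below makes several assumptions. It measures offsets in the target slot's frame, with X along the slot axis and Y lateral to it. It reports absolute values and works with headings in degrees. `parking_pose_error` is a hypothetical helper, not the authors' evaluation code.

```python
import math


def parking_pose_error(target, final):
    """Offsets of the final vehicle pose from the target slot pose (assumed conventions).

    target, final: (x, y, heading_deg) in a common world frame (hypothetical inputs).
    Returns (|x offset|, |y offset|, |heading error| in degrees), with the
    offsets expressed in the target (slot) frame.
    """
    tx, ty, th = target
    fx, fy, fh = final
    c, s = math.cos(math.radians(th)), math.sin(math.radians(th))
    ddx, ddy = fx - tx, fy - ty
    x_off = c * ddx + s * ddy                 # component along the slot axis
    y_off = -s * ddx + c * ddy                # component lateral to the slot axis
    dh = (fh - th + 180.0) % 360.0 - 180.0    # wrap heading difference to [-180, 180)
    return abs(x_off), abs(y_off), abs(dh)


# Usage: slot axis pointing along world +y; the vehicle stops slightly off target.
print(parking_pose_error((0.0, 0.0, 90.0), (0.05, 0.2, 90.5)))
```

The wrap-around step keeps a heading pair such as 179° and −179° from being reported as a 358° error.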


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, Y.; Park, M. Around-View-Monitoring-Based Automatic Parking System Using Parking Line Detection. Appl. Sci. 2021, 11, 11905. https://doi.org/10.3390/app112411905
