InstaDam: Open-Source Platform for Rapid Semantic Segmentation of Structural Damage

Abstract

1. Introduction

2. InstaDam: Design and Features

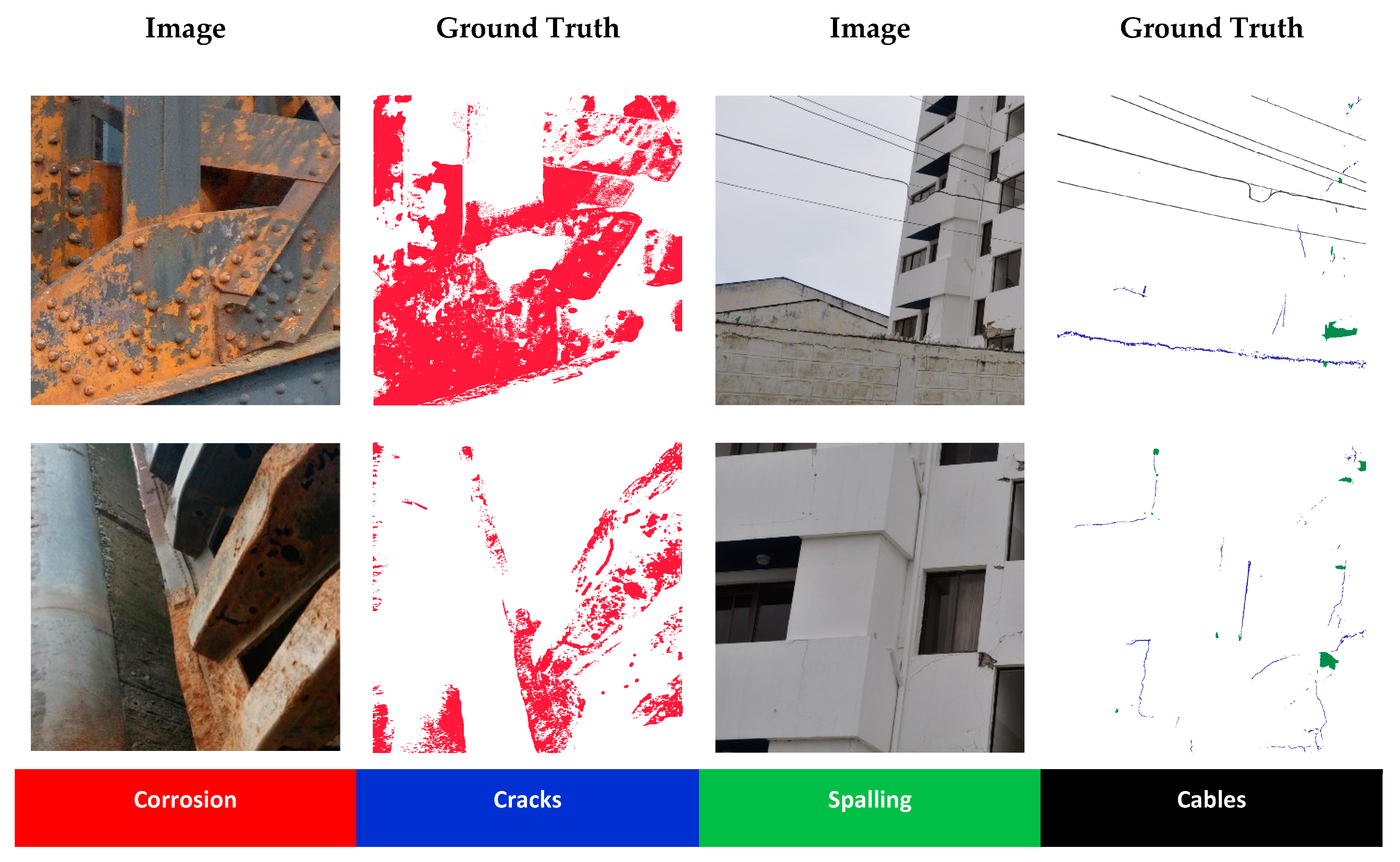

2.1. Damage Annotation for Semantic Segmentation

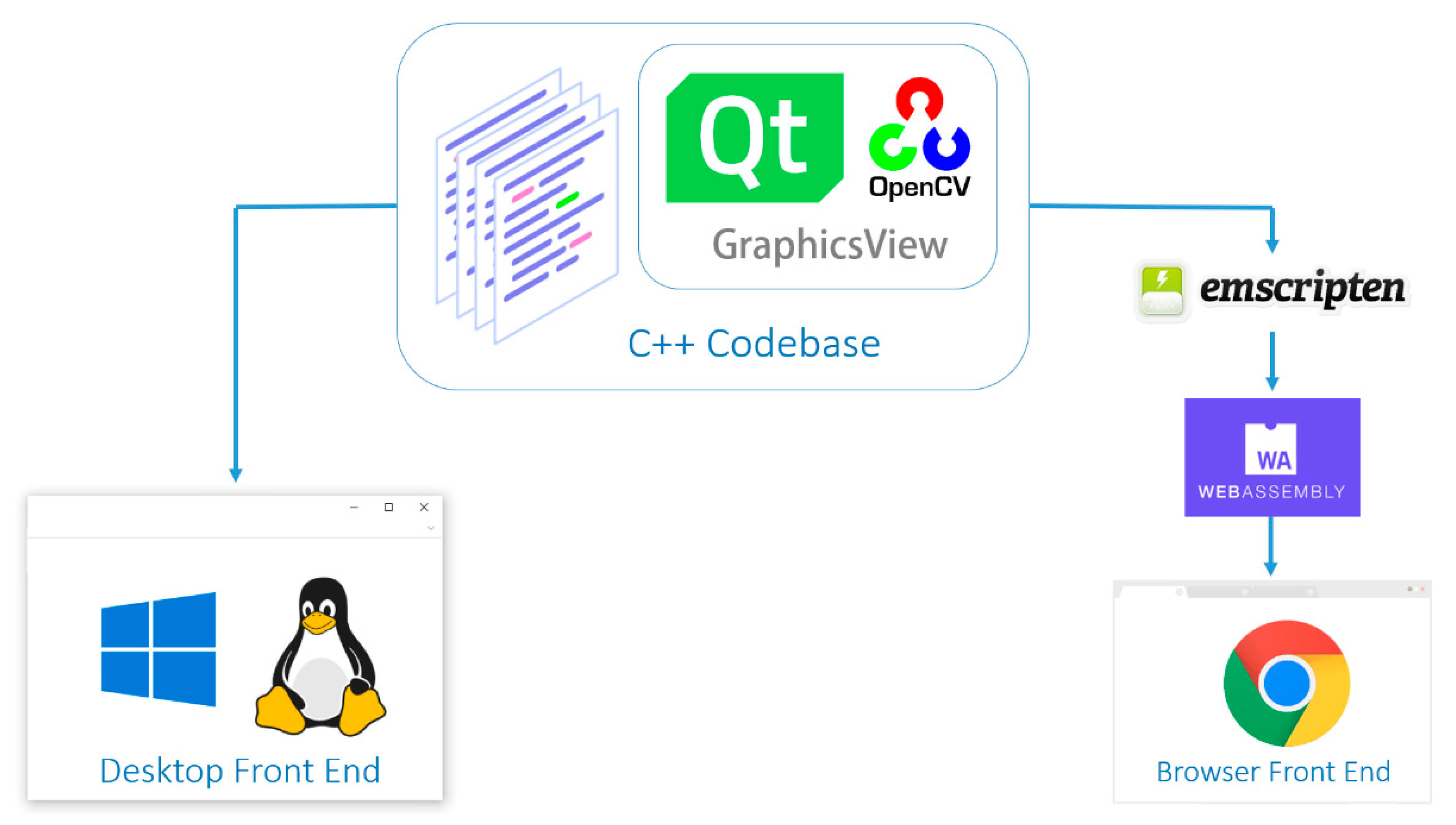

2.2. Choice of Software Frameworks

2.3. User Interface Design

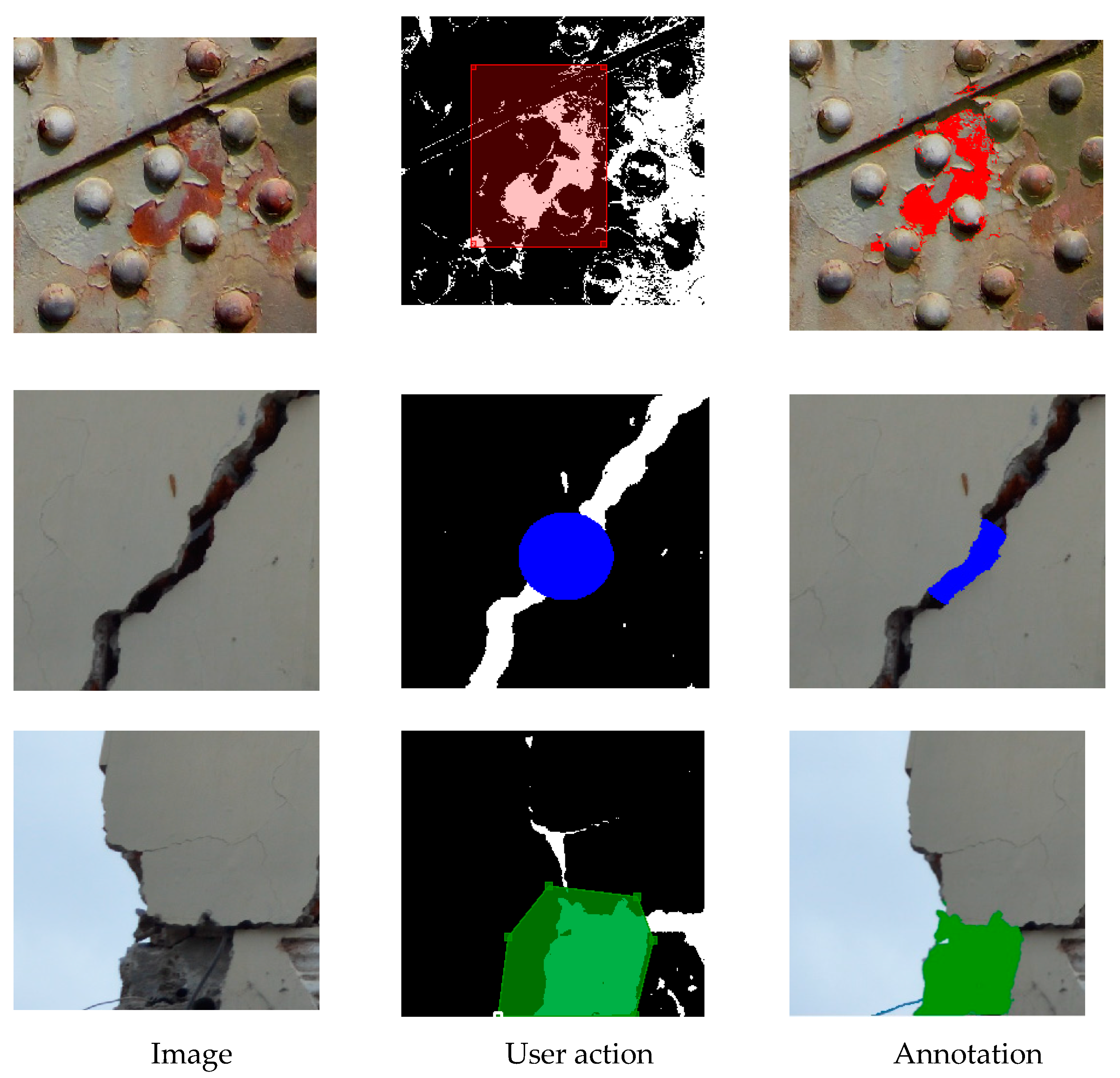

2.3.1. Annotation Tools

2.3.2. Image Processing Techniques Implemented

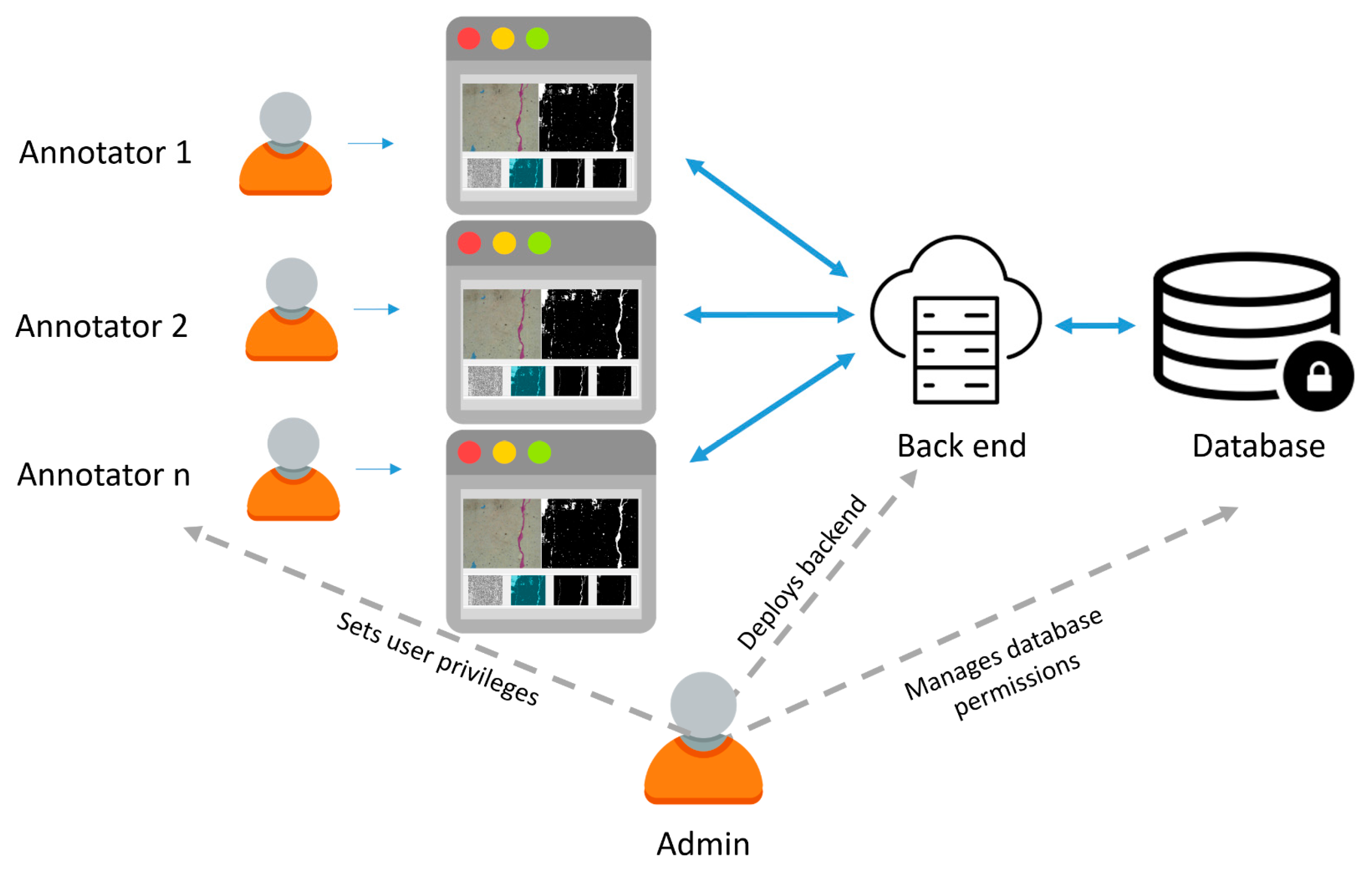

2.4. Data Management

3. Experiments and Software Analysis

3.1. User Testing with InstaDam

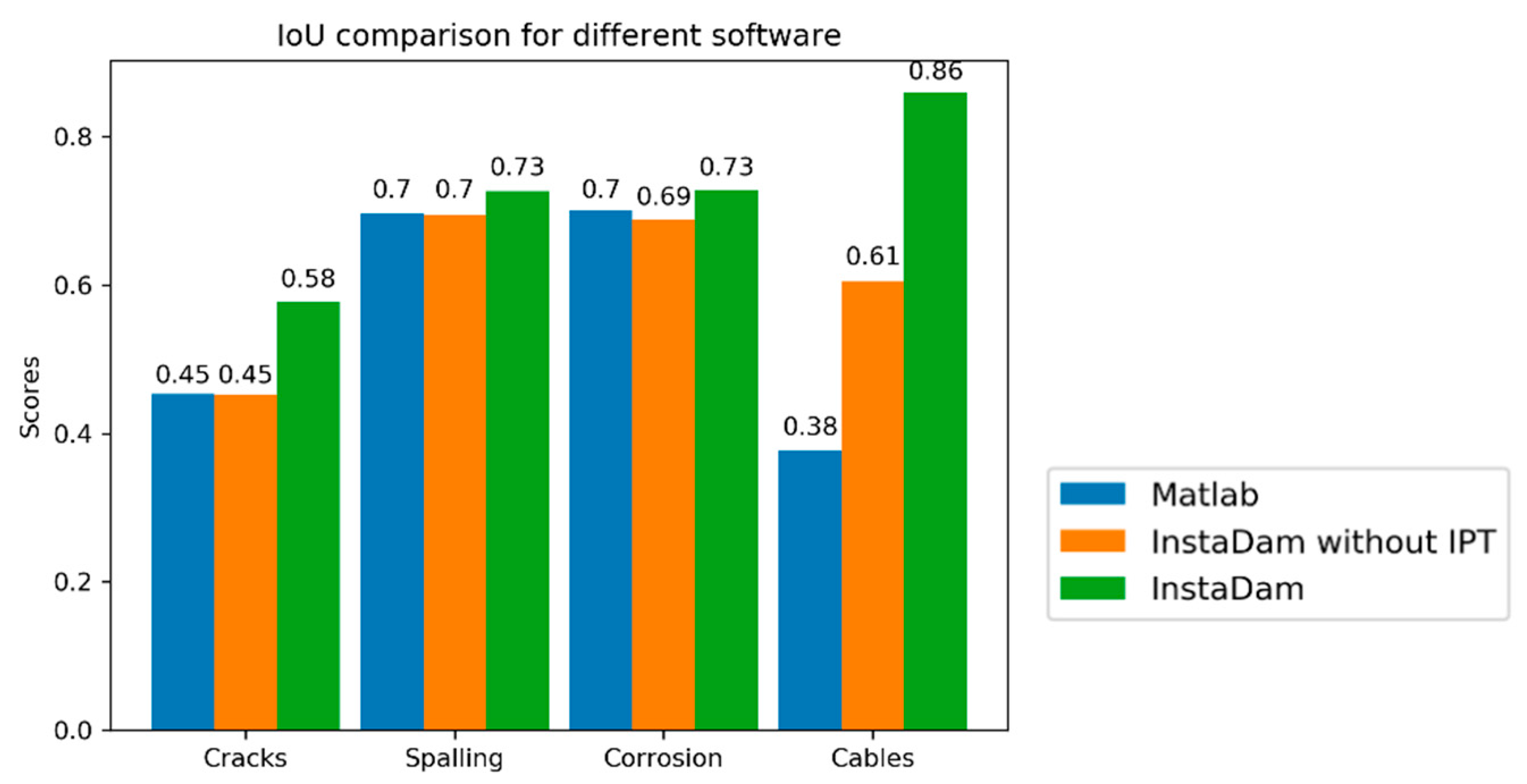

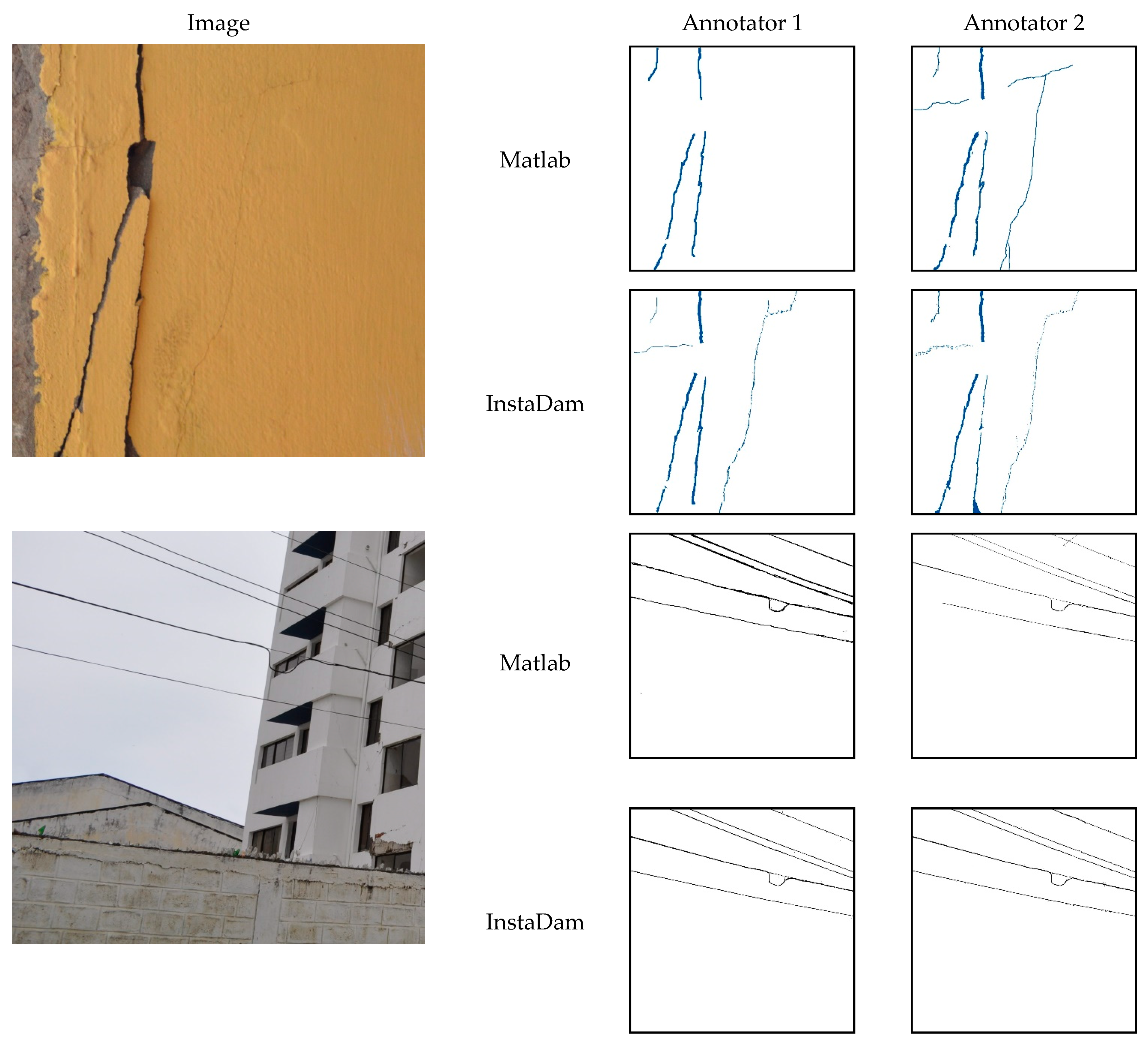

3.2. Comparison with Existing Annotation Software

3.3. Annotating Damage and Materials

4. Discussion of Results

4.1. Improvements in Efficiency

4.2. Improvements in Consistency

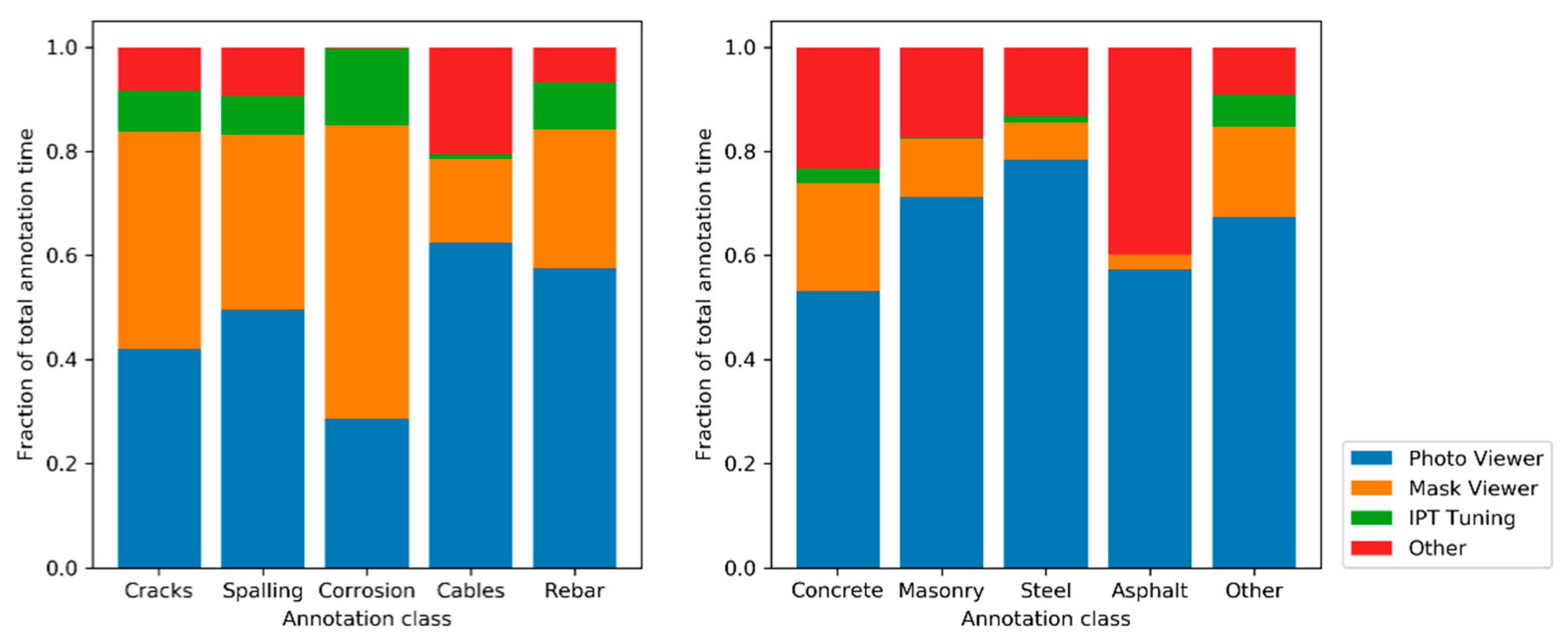

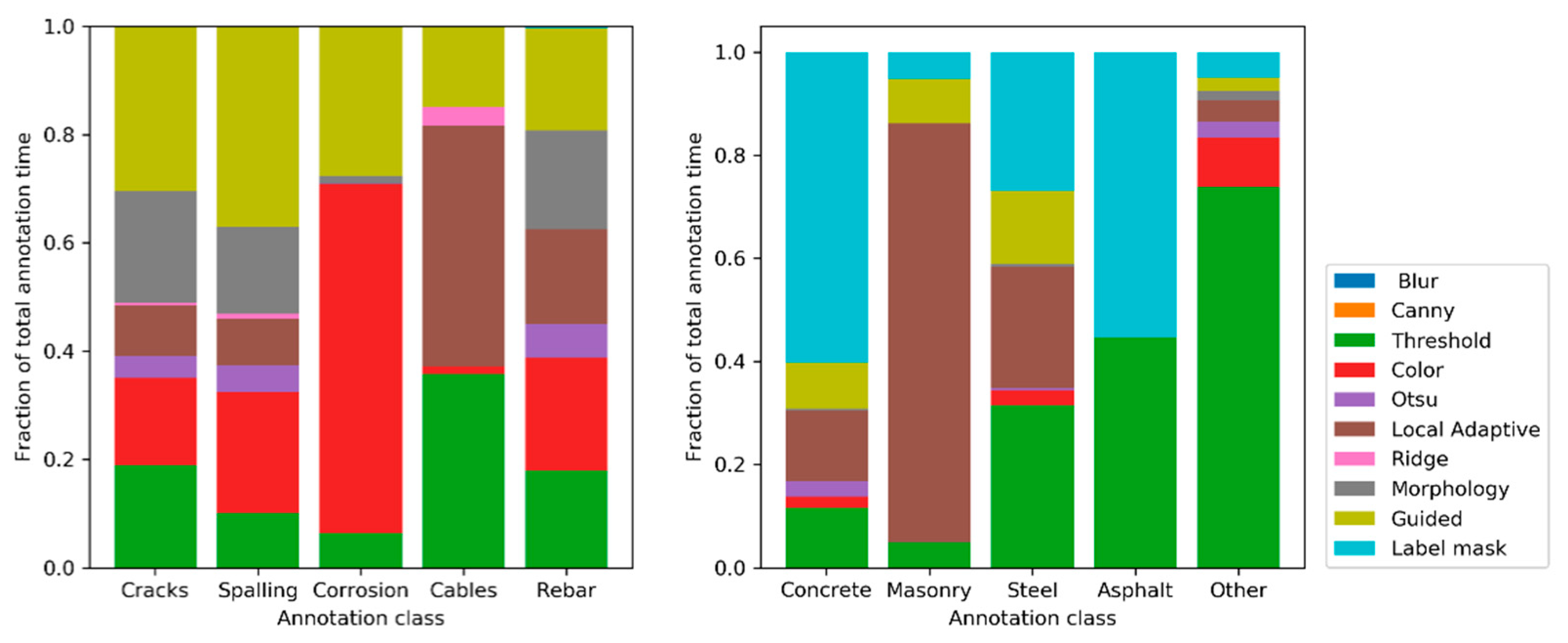

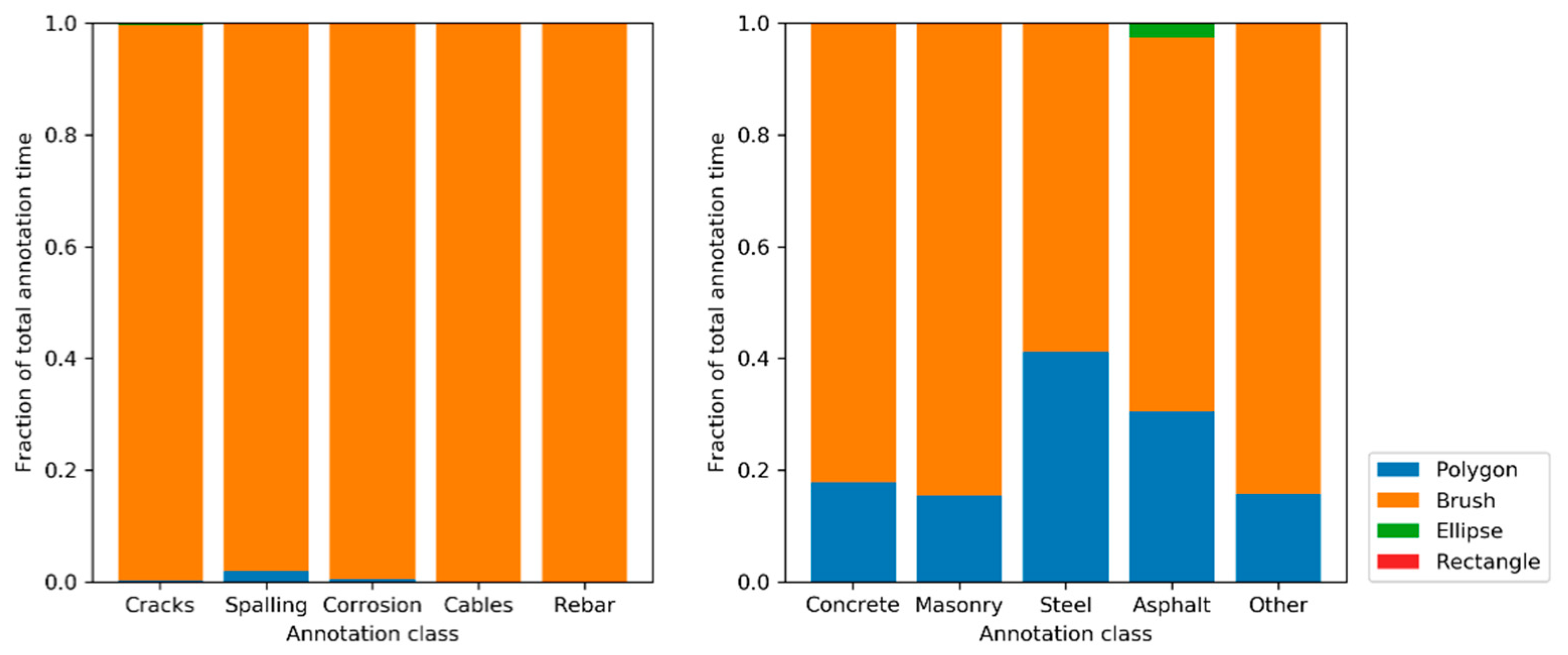

4.3. Analysis of Feature Use

4.4. Open Source Data Development Platform

5. Future Research

5.1. Machine Learning Enhanced Annotations

5.2. Web-Based Platform

5.3. Extension to Crowdsourced Annotation Platform

5.4. User Interface Testing

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image processing technique (IPT) | Processing time for 1 MP image (ms) | IPT parameters |
|---|---|---|
| Common parameters | 0.65 | invert |
| Threshold | 1.24 | threshold |
| Otsu | 1.85 | NA |
| Morphology | 2.77 | erode, dilate, open, close |
| Local adaptive threshold | 3.06 | strength, detail |
| Gaussian blur | 5.25 | kernel size, threshold |
| Canny edge | 21.32 | threshold min, threshold max, kernel size |
| Color distance | 25.26 | R, G, B, fuzziness |
| Ridge filter | 50.02 | scale |
| Guided filter | 50.44 | threshold, diameter, sigma |
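The table lists Otsu thresholding with no user parameters, a plain threshold with a single `threshold` parameter, and `invert` as a parameter common to all techniques. The following is a minimal pure-Python sketch of how those three pieces can fit together. It is not InstaDam's implementation: the function names `otsu_threshold` and `apply_threshold`, the flat list of 8-bit gray levels, and the convention that pixels strictly above the threshold become foreground are all assumptions made for illustration.

```python
from typing import List, Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the gray level t that maximizes the between-class variance.

    Pixels <= t form the background class, pixels > t the foreground class.
    (Hypothetical helper; assumes integer gray levels in [0, levels).)
    """
    # Build the gray-level histogram.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    w0 = 0    # pixel count of the background class so far
    sum0 = 0  # sum of gray levels in the background class so far
    best_t, best_var = 0, -1.0

    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # no background pixels yet
        w1 = total - w0
        if w1 == 0:
            break     # no foreground pixels left
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor of 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def apply_threshold(pixels: Sequence[int], threshold: int,
                    invert: bool = False) -> List[int]:
    """Binarize pixels: 1 where p > threshold, flipped when invert is True."""
    return [int((p > threshold) != invert) for p in pixels]


# Usage: a toy "image" with dark background and a few bright pixels.
image = [10, 12, 11, 200, 205, 198]
t = otsu_threshold(image)
mask = apply_threshold(image, t)
inverted_mask = apply_threshold(image, t, invert=True)
```

Because Otsu's method derives the threshold from the histogram itself, the user supplies no value, which is consistent with the "NA" entry in the parameters column. The `invert` flag is useful when the feature of interest, such as a dark crack on a light surface, falls below the threshold rather than above it.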

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hoskere, V.; Amer, F.; Friedel, D.; Yang, W.; Tang, Y.; Narazaki, Y.; Smith, M.D.; Golparvar-Fard, M.; Spencer, B.F., Jr. InstaDam: Open-Source Platform for Rapid Semantic Segmentation of Structural Damage. Appl. Sci. 2021, 11, 520. https://doi.org/10.3390/app11020520
