A Needs-Based Augmented Reality System

Abstract

1. Introduction

- Enhances real environments by adding virtual objects.

- Works in real time and provides interactivity.

- Provides the correct placement of virtual objects within the environment.

2. Background

2.1. Technologies in Augmented Reality

- Image acquisition device: this device, usually a camera, captures the marker or object to be recognized.

- Computing and storage device: the component responsible for processing the gathered input and producing the correct output.

- Tracking and location technology: tracks the user's position, view direction, and motion to decide which virtual object to display.

- User interaction technology: the interface that captures the user input.

- Virtual object database: a database that includes relevant virtual objects.

- Virtual-real fusion technology: combines the virtual objects and real environment to ensure correct occlusion, shadows, and illumination.

- System display technology: presents the augmented information integrated with the real environment. The display technology depends on the type of AR system (visual, auditory, haptic, etc.).

2.2. Classification of Augmented Reality

2.3. Review of Existing Platforms

2.4. Triggers

3. Theoretical Framework

3.1. Personalization in Augmented Reality

- “a purpose or goal of personalization”.

- “What is personalized”. Four aspects of information systems may be personalized: the information (content), the presentation of information (user interface), the delivery method (channel), and the action.

- “The target of personalization”. The target can be a group of individuals or a specific individual.

- Purpose: to trigger AR experiences based on user needs

- What is personalized: the display of experience according to need

- Target: the user viewing the AR experience

The following sections explain how these elements are applied in the proposed system.

3.2. Human Needs in Pervasive Environments

- Human needs can be identified by several methods: interviews, questionnaires, signal processing of brain scans, and prediction methods that rely on sentiment analysis.

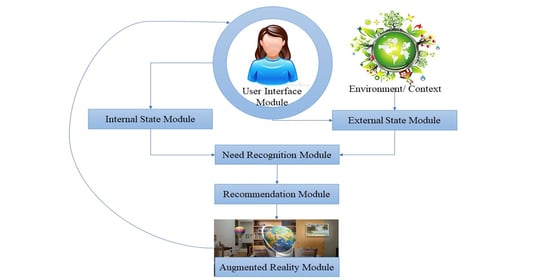

3.3. Proposed Concept

3.4. Need Analysis

- If a user has a subsistence need on a Being axis, trigger experience A.

- What elements define a need?

- How to measure a need?

- A person has a need.

- A need has one of three triggers: homeostasis imbalance, incentive, or stimulation.

- A need has a satisfier, which has an AR experience.

- A context describes the state of a person and can be an external state (environmental) or the internal state of a user.

- An external environmental state can be an incentive that triggers a need.

- An internal state can reflect a homeostasis imbalance that triggers a need.

- Homeostasis imbalance: an internal state that reflects a malfunction in the body's processes and gives rise to a need (internal).

- Incentive: an external positive or negative environmental stimulus that motivates a person (external).

- Stimulation: an activity that causes excitement or pleasure.
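The relationships listed above (a person has a need, a need has a trigger and a satisfier, and a satisfier has an AR experience) can be sketched as a small data model. This is only an illustration under stated assumptions: the class names, field names, and the `experience_for` lookup are hypothetical and are not taken from the proposed system.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Trigger(Enum):
    """The three need triggers named in the text."""
    HOMEOSTASIS_IMBALANCE = "homeostasis imbalance"  # internal
    INCENTIVE = "incentive"                          # external
    STIMULATION = "stimulation"


@dataclass(frozen=True)
class ARExperience:
    name: str


@dataclass(frozen=True)
class Satisfier:
    description: str
    experience: ARExperience  # a satisfier has an AR experience


@dataclass(frozen=True)
class Need:
    category: str        # e.g. "Subsistence"
    axis: str            # e.g. "Being"
    trigger: Trigger     # a need has one of three triggers
    satisfier: Satisfier


@dataclass
class Context:
    # Hypothetical key/value views of the internal and external state.
    internal: dict = field(default_factory=dict)
    external: dict = field(default_factory=dict)


@dataclass
class Person:
    name: str
    context: Context
    needs: List[Need] = field(default_factory=list)


def experience_for(person: Person, category: str, axis: str) -> Optional[ARExperience]:
    """Return the AR experience of the first matching need, or None if there is none."""
    for need in person.needs:
        if need.category == category and need.axis == axis:
            return need.satisfier.experience
    return None


# "If a user has a subsistence need on a Being axis, trigger experience A."
exp_a = ARExperience("experience A")
need = Need("Subsistence", "Being", Trigger.HOMEOSTASIS_IMBALANCE,
            Satisfier("hypothetical satisfier", exp_a))
user = Person("user-1", Context(internal={"hungry": True}), [need])
print(experience_for(user, "Subsistence", "Being").name)  # prints "experience A"
```

Keeping the trigger as an enumeration makes the internal/external distinction explicit without committing to any particular sensing method.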

3.5. Needs to Sensor Analysis

4. Materials and Methods

4.1. Scenario Analysis

- There is a homeostatic need for food.

- There is a regular habit of eating at a specific time.

- There is an external incentive that excites the user to have food.
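The three conditions in this scenario could be checked with a few simple rules. The sketch below is an assumption-laden illustration, not the paper's analysis mechanism: the `FoodContext` fields, the five-hour hunger threshold, and the 30-minute habit window are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from datetime import time
from typing import List, Optional


@dataclass
class FoodContext:
    hours_since_last_meal: float     # hypothetical proxy for the homeostatic need
    now: time                        # current time of day
    usual_meal_time: Optional[time]  # learned eating habit, if known
    food_cue_nearby: bool            # hypothetical external incentive (e.g. a restaurant in view)


def minutes(t: time) -> int:
    """Minutes since midnight."""
    return t.hour * 60 + t.minute


def food_triggers(ctx: FoodContext,
                  hunger_hours: float = 5.0,
                  habit_window_min: int = 30) -> List[str]:
    """Return which of the three scenario conditions currently hold.

    Thresholds are illustrative assumptions. Wrap-around at midnight
    is ignored to keep the sketch short.
    """
    found = []
    if ctx.hours_since_last_meal >= hunger_hours:
        found.append("homeostatic need")
    if ctx.usual_meal_time is not None:
        if abs(minutes(ctx.now) - minutes(ctx.usual_meal_time)) <= habit_window_min:
            found.append("habit")
    if ctx.food_cue_nearby:
        found.append("incentive")
    return found


ctx = FoodContext(hours_since_last_meal=6.0, now=time(12, 50),
                  usual_meal_time=time(13, 0), food_cue_nearby=False)
print(food_triggers(ctx))  # prints ['homeostatic need', 'habit']
```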

4.2. Application Process

4.3. A Proof of Concept Prototype

1. Decide on the need you plan to satisfy with the AR experience.

2. Determine the need-specific elements and requirements (context, personas, type).

3. Select the trigger (homeostasis imbalance, habits, or incentives).

4. Develop the AR experience with multiple options and preferences for that particular need.

5. Based on the need and trigger, select the appropriate sensors required to collect data.

6. Collect data and build a user profile and database.

7. Decide on and develop the analysis mechanism (a rule engine, predictive algorithms).

8. Apply a recommendation process to select the AR experience specific to the individual's preferences.

9. Design a feedback collection method to improve recommendations.
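The recommendation and feedback steps at the end of this process can be illustrated with a minimal sketch. It assumes a simple per-user score table as a stand-in for the analysis mechanism; the class name `NeedBasedRecommender`, its methods, and the example experience names are hypothetical and do not describe the prototype's actual implementation.

```python
from collections import defaultdict
from typing import Dict, List, Optional


class NeedBasedRecommender:
    """Pick an AR experience for a detected need and learn from feedback."""

    def __init__(self, experiences_by_need: Dict[str, List[str]]):
        # Several experience options are developed per need.
        self.experiences_by_need = experiences_by_need
        # User profile: (user, experience) -> preference score, 0.0 by default.
        self.scores = defaultdict(float)

    def recommend(self, user: str, need: str) -> Optional[str]:
        """Return the highest-scoring option; ties go to the first listed option."""
        options = self.experiences_by_need.get(need, [])
        if not options:
            return None
        return max(options, key=lambda exp: self.scores[(user, exp)])

    def feedback(self, user: str, experience: str, liked: bool) -> None:
        """Raise or lower the score so later recommendations improve."""
        self.scores[(user, experience)] += 1.0 if liked else -1.0


rec = NeedBasedRecommender({"food": ["AR menu", "AR recipe guide"]})
first = rec.recommend("u1", "food")           # "AR menu" (tie, first option)
rec.feedback("u1", "AR menu", liked=False)    # the user disliked it
second = rec.recommend("u1", "food")          # "AR recipe guide"
print(first, "->", second)
```

A score table is the simplest choice that makes the feedback loop visible; a rule engine or a predictive model, as named in the process, could replace it behind the same two methods.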

5. Results

6. Discussion

- Technology is now reaching many people in various living conditions.

- People undergo changing levels of needs daily regardless of capabilities.

- AR experiences are custom-made for specific purposes; however, these purposes are usually generic rather than user-related. Even in personalized AR, the experiences relate to a product, location, or service more than to the user. This research therefore asks: given the plethora of AR experiences expected to become available, how can AR be made more user-specific, and how can the best experience be found for a user at a given time? This study answers the question by incorporating basic human needs into the equation. The novelty lies in connecting the concepts of augmented reality, needs detection, and satisfier recommendation.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Core AR Technology | Definition |
|---|---|
| Tracking techniques | “Methods of tracking a target object/environment via cameras and sensors, and estimating viewpoint poses”. |
| Interaction techniques and user interfaces | “Techniques and interfaces for interacting with virtual content”. |
| Calibration and registration | “Geometric or photometric calibration methods, and method to align multiple coordinate frames”. |
| Display techniques | “Display hardware to present virtual content in AR, including head-worn, handheld, and projected displays”. |
| AR applications | “AR systems in application domains such as medicine, manufacturing, or military, among others”. |

| Category | Type | Brief | Example |
|---|---|---|---|
| Triggered | Marker-Based | Triggered by a marker: paper (image) or physical object | Museum displays |
| Triggered | Location-Based | Triggered by GPS location | Monocle Restaurant information |
| Triggered | Dynamic Augmentation | Responsive to object changes | Digitally trying clothes and accessories with shopping apps |
| Triggered | Complex Augmentation | A combination of the above | Dynamic view with digital information from the Internet |
| View-Based | Indirect Augmentation | Intelligent augmentation of a static view | Taking a picture and changing the wall color |
| View-Based | Non-Specific Digital Augmentation | Augmentation of a dynamic view | Augmentation in mobile games |

| Procedure | Visual AR | Haptic AR |
|---|---|---|
| Sensing a real environment | Captures real information needed for visual augmentation | Senses real information needed for haptic augmentation |
| Sensor examples | Camera, range finder, tracker | Position encoder, accelerometer, force sensor, thermometer |
| Constructing stimuli for augmentation | | |
| Displaying augmented stimuli | Uses a visual display | Uses a haptic display |
| Display examples | Head-mounted display, projector, mobile phone | Force-feedback interface, tactile display, thermal display |

| Platform | Published as Application or Web-Based | SDK | Types of Experiences | Experience Trigger, AR Type |
|---|---|---|---|---|
| AWE Media Studio https://awe.media/ (accessed on 18 August 2021). | Web | No | Image, spatial, face tracking, GPS location, 360° | Weblink, non-specific digital augmentation |
| Zap Works https://zap.works/ (accessed on 18 August 2021). | Either | Yes | Image, face tracking, 360° | Marker-based (special marker) |
| BlippAR Builder https://www.blippar.com/build-ar (accessed on 18 August 2021). | Either | Yes | Image | Marker-based (image scan) |
| Spark AR Studio https://sparkar.facebook.com/ar-studio/ (accessed on 18 August 2021). | On Facebook or Instagram | No | Face tracking, image-based | Marker-based, dynamic augmentation |
| Wikitude AR https://www.wikitude.com/ (accessed on 18 August 2021). | Application | Yes | Image, object, scene recognition, instant tracking, Geo AR | Marker-based, location-based, dynamic augmentation |
| Unity MARS https://unity.com/products/unity-mars (accessed on 18 August 2021). | Application | No | Location-aware, context-aware | Marker-based, complex augmentation |

| Needs According to Axiological Categories | Being | Having | Doing | Interacting |
|---|---|---|---|---|
| Subsistence | 1/Physical health, mental health | 2/Food, shelter, work | 3/Feed, procreate, rest, work | 4/Living environment, social setting |
| Protection | 5/Care, adaptability, autonomy | 6/Insurance systems, savings, work | 7/Prevent, plan, take care of, cure, help | 8/Living space, social environment |
| Affection | 9/Self-esteem, solidarity, respect | 10/Friendship, family, partnerships, a sense of humor | 11/Caress, express emotions | 12/Privacy, intimacy, home |
| Understanding | 13/Critical conscience, curiosity, receptiveness | 14/Literature, teachers, method, educational policies | 15/Investigate, study, experiment, educate, analyze, meditate | 16/Settings of formative interaction, schools, universities |
| Participation | 17/Adaptability, receptiveness, solidarity | 18/Rights, responsibilities, duties, privilege, work, a sense of humor | 19/Become affiliated, cooperate, propose | 20/Settings of participative interaction, parties, associations |
| Leisure | 21/Curiosity, receptiveness, imagination | 22/Games, spectacles, clubs, parties, peace of mind, a sense of humor | 23/Day-dream, brood, dream | 24/Privacy, intimacy, spaces of closeness |
| Creation | 25/Passion, determination, intuition | 26/Abilities, skills, method, work | 27/Work, invent, build, design, compose, interpret | 28/Productive and feedback settings, workshops |
| Identity | 29/Sense of belonging, consistency | 30/Symbols, language, religions, habits, customs | 31/Commit oneself, integrate oneself, confront | 32/Social rhythms, everyday settings |
| Freedom | 33/Autonomy, self-esteem, determination | 34/Equal rights | 35/Dissent, choose, be different from | 36/Temporal/spatial plasticity |
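The cell numbers in the matrix above run row by row, from 1 (Subsistence, Being) to 36 (Freedom, Interacting), so a need and an existential category map to a cell number by simple arithmetic. The following sketch shows that mapping; the function names `cell_number` and `locate` are hypothetical helpers introduced only for this illustration.

```python
from typing import Tuple

# Row and column order exactly as listed in the matrix.
NEEDS = ["Subsistence", "Protection", "Affection", "Understanding",
         "Participation", "Leisure", "Creation", "Identity", "Freedom"]
CATEGORIES = ["Being", "Having", "Doing", "Interacting"]


def cell_number(need: str, category: str) -> int:
    """Map (need, existential category) to the 1-based cell number."""
    row = NEEDS.index(need)
    col = CATEGORIES.index(category)
    return row * len(CATEGORIES) + col + 1


def locate(cell: int) -> Tuple[str, str]:
    """Inverse mapping: cell number back to (need, existential category)."""
    if not 1 <= cell <= len(NEEDS) * len(CATEGORIES):
        raise ValueError("cell number must be between 1 and 36")
    row, col = divmod(cell - 1, len(CATEGORIES))
    return NEEDS[row], CATEGORIES[col]


print(cell_number("Leisure", "Doing"))  # prints 23
print(locate(36))                       # prints ('Freedom', 'Interacting')
```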

| Fundamental Human Needs Existential Categories | Being (Qualities) | Having (Things) | Doing (Actions) | Interacting (Settings) |
|---|---|---|---|---|
| Context-Aware Categorization | User, Who (Identity) | Things | What (Activity) | Where (Location), Weather, Social, Networking |
| When (Time) | | | | |
| Sensors and Technology | Emotion sensors, body sensors | IoT systems and sensors | Activity recognition through motion sensors | Location awareness, nearby user devices (for proximity to other users) |

| Algorithm | Accuracy of All Needs Prediction | Eat/Drink Need Class Precision | Eat/Drink Need Class Recall |
|---|---|---|---|
| Decision Tree | 49.32% | 44.44% | 42.11% |
| Random Forest | 32.88% | 40.00% | 21.05% |
| Naive Bayes | 38.36% | 42.11% | 42.11% |
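For readers less familiar with the class-level metrics in the table above, precision is TP / (TP + FP) and recall is TP / (TP + FN) for the Eat/Drink class. The sketch below computes both. The counts used in the example are hypothetical: they were chosen only because they reproduce the Decision Tree percentages, and the confusion matrix itself is not given here.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted Eat/Drink cases that were correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual Eat/Drink cases that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts (an assumption, not reported data):
# 8 true positives, 10 false positives, 11 false negatives.
tp, fp, fn = 8, 10, 11
print(f"precision = {100 * precision(tp, fp):.2f}%")  # prints "precision = 44.44%"
print(f"recall    = {100 * recall(tp, fn):.2f}%")     # prints "recall    = 42.11%"
```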

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yahya, M.A.; Dahanayake, A. A Needs-Based Augmented Reality System. Appl. Sci. 2021, 11, 7978. https://doi.org/10.3390/app11177978
