MenuNER: Domain-Adapted BERT Based NER Approach for a Domain with Limited Dataset and Its Application to Food Menu Domain

Abstract

1. Introduction

- Proposing a simple yet effective NER approach based on domain adaptation with extended input features, for application domains where large annotated datasets are scarce.

- Evaluating the effectiveness of domain adaptation and transfer learning for the NER task of recognizing the wide variety of dish names in the food domain.

- Evaluating the impact of combining domain-adapted BERT embeddings with character-level and POS tag feature representations when training the NER network on limited datasets.

- Achieving better results with the proposed NER approach than with baseline models for food menu NER.

- Preparing a carefully annotated dataset of menu dishes.

2. Related Work

2.1. Neural Network Model Architecture for NER

2.2. NER Based on Contextualized Word Representations

2.3. Transfer Learning and Domain Adaptation in NLP Tasks

2.4. NER in Food Domain

3. Methodology

3.1. BERT Training

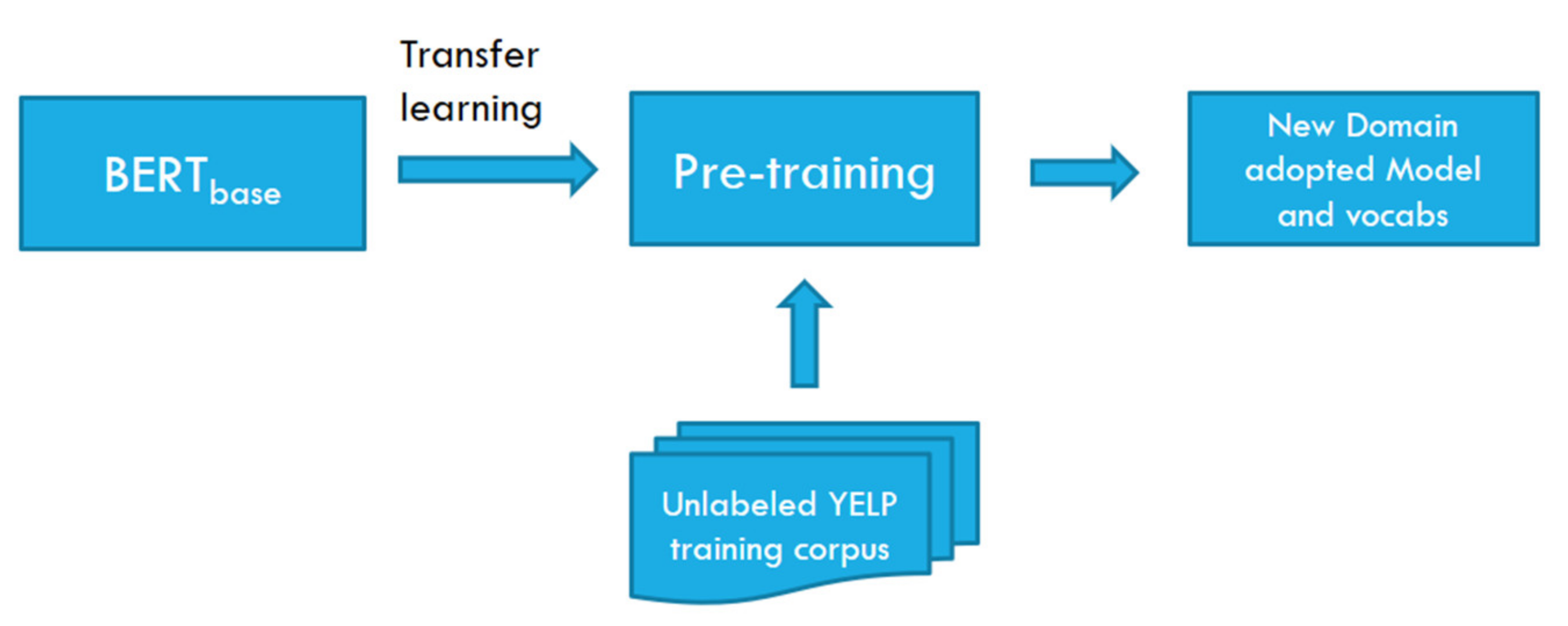

3.2. BERT-Domain; Further Training of BERT Language Model

3.3. Neural Network Architecture for NER Task Fine-Tuning

3.3.1. Embedding Layer

Word Embedding (BERT Embedder)

Character-Level Embedding (CharCNN: Character-level Convolutional Neural Network)

Part-Of-Speech (POS) Tag Feature Embedding

3.3.2. NER Task Network Architecture

Bidirectional Long Short-Term Memory (Bi-LSTM) Layer

Conditional Random Field (CRF) Layer
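
To illustrate what a CRF layer contributes at inference time, the following minimal sketch decodes the best tag sequence with the Viterbi algorithm. It is not the authors' implementation: the tag set (`O`, `B`, `I`), the score values, and the function name `viterbi_decode` are assumptions chosen for the example. In a trained model, the emission scores would come from the Bi-LSTM and the transition matrix would be learned.

```python
from typing import List


def viterbi_decode(emissions: List[List[float]],
                   transitions: List[List[float]]) -> List[int]:
    """Return the highest-scoring tag index sequence.

    emissions[t][j]   : score of tag j at token t
    transitions[i][j] : score of moving from tag i to tag j
    """
    n_tags = len(transitions)
    score = list(emissions[0])   # best score of any path ending in each tag
    backptr = []                 # backptr[t-1][j] = best previous tag for tag j at t

    for t in range(1, len(emissions)):
        new_score, ptr = [], []
        for j in range(n_tags):
            best_i = max(range(n_tags),
                         key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            ptr.append(best_i)
        score = new_score
        backptr.append(ptr)

    # Follow the back-pointers from the best final tag.
    best = max(range(n_tags), key=lambda j: score[j])
    path = [best]
    for ptr in reversed(backptr):
        best = ptr[best]
        path.append(best)
    return path[::-1]


# Hypothetical tag set and scores, for illustration only.
TAGS = ["O", "B", "I"]
EMISSIONS = [
    [2.0, 0.0, 0.0],   # token 0: clearly O
    [0.0, 1.0, 1.2],   # token 1: I scores slightly higher than B locally
    [0.0, 0.0, 2.0],   # token 2: clearly I
]
TRANSITIONS = [
    [0.0, 0.0, -1e4],  # O -> I is heavily penalized (invalid in BIO)
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]

decoded = [TAGS[i] for i in viterbi_decode(EMISSIONS, TRANSITIONS)]
print(decoded)
```

In this toy input, a per-token argmax would place `I` directly after `O`, which is not a valid BIO sequence. The transition penalty steers the decoder to a consistent sequence instead, which is the usual motivation for placing a CRF on top of a token-level encoder.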

4. Evaluation on Food Domain

4.1. Experimental Environments

4.1.1. Dataset for MenuNER and Fine-Tuning

4.1.2. Experiment Metrics
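
The result tables report precision (P), recall (R), and F1. As a minimal sketch of how such scores are commonly computed for NER, the code below counts a predicted entity as correct only when its span exactly matches a gold span (the CoNLL-style convention). Whether the paper uses exactly this matching rule is an assumption, and the tag names `B-MENU` and `I-MENU` as well as the helper names are hypothetical.

```python
from typing import List, Set, Tuple

Span = Tuple[int, int]  # (start, end) token indices, end exclusive


def bio_to_spans(tags: List[str]) -> Set[Span]:
    """Collect entity spans from a BIO tag sequence with a single entity type."""
    spans: Set[Span] = set()
    start = None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing span
        if not tag.startswith("I") and start is not None:
            spans.add((start, i))           # a B or O tag closes the open span
            start = None
        if tag.startswith("B"):
            start = i
        # An "I" tag with no open span is ignored.
    return spans


def precision_recall_f1(gold: List[List[str]],
                        pred: List[List[str]]) -> Tuple[float, float, float]:
    """Entity-level exact-match precision, recall, and F1 over a list of sentences."""
    gold_spans, pred_spans = set(), set()
    for idx, (g, p) in enumerate(zip(gold, pred)):
        gold_spans |= {(idx, s, e) for s, e in bio_to_spans(g)}
        pred_spans |= {(idx, s, e) for s, e in bio_to_spans(p)}
    tp = len(gold_spans & pred_spans)
    precision = tp / len(pred_spans) if pred_spans else 0.0
    recall = tp / len(gold_spans) if gold_spans else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy example: "We both ordered green tea" and "Rice was good".
gold = [["O", "O", "O", "B-MENU", "I-MENU"], ["B-MENU", "O", "O"]]
pred = [["O", "O", "O", "O", "B-MENU"],      ["B-MENU", "O", "O"]]
p, r, f1 = precision_recall_f1(gold, pred)
print(f"P={p:.2f} R={r:.2f} F1={f1:.2f}")
```

Under exact matching, predicting only "tea" for the gold entity "green tea" earns no credit, so partial extractions lower both precision and recall.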

4.1.3. Experiment Setting

4.2. Experimental Results

4.2.1. Ablation Test

4.2.2. Evaluation for MenuNER

- What is the effect of domain adaptation (further pre-training of BERT-base model) on a MenuNER task in terms of its performance?

- What impact does a feature-based BERT model have on the NER task when combined with character-level and POS tag features? (see Table 2)

- How do the neural network architectures compare on the MenuNER task: “BERT with a fully connected (FC) layer and a SoftMax layer” vs. “Bi-LSTM-CRF” and a variant with multi-head self-attention?

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset Features | Train | Test | Valid |
|---|---|---|---|
| No. of sentences | 5517 | 3037 | 2211 |
| Unique menu entities | 1027 | 637 | 516 |
| Total menu entities | 2626 | 1424 | 1106 |

| Embeddings | F1 Score (Test) | Training Time (s) | Test Execution Time (s) |
|---|---|---|---|
| BERT-domain | 91.70 | 2851.52 | 61.92 |
| BERT-domain + POS | 91.74 | 4156.91 | 62.40 |
| BERT-domain + CharCNN | 91.85 | 2484.65 | 62.01 |
| All (BERT-domain + CharCNN + POS) | 92.46 | 4827.43 | 64.14 |
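
The trade-off between accuracy and training cost in the table above can be made explicit with a little arithmetic. The sketch below uses only the F1 scores and training times reported there and compares each configuration against the plain BERT-domain embedding. The dictionary layout and the helper name `compare` are illustrative choices, not part of the original work.

```python
# (F1 on the test set, training time in seconds), copied from the table above.
results = {
    "BERT-domain": (91.70, 2851.52),
    "BERT-domain + POS": (91.74, 4156.91),
    "BERT-domain + CharCNN": (91.85, 2484.65),
    "All (BERT-domain + CharCNN + POS)": (92.46, 4827.43),
}

base_f1, base_time = results["BERT-domain"]


def compare(name: str):
    """Return (F1 gain in points, training-time ratio) relative to BERT-domain."""
    f1, time_s = results[name]
    return f1 - base_f1, time_s / base_time


for name in results:
    gain, ratio = compare(name)
    print(f"{name:35s} F1 gain {gain:+.2f}  training time x{ratio:.2f}")
```

Read this way, the full feature combination yields the highest F1 but also the longest training time of the four configurations, while test execution time stays nearly constant across them.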

| # | NER Network | BERT-Base P | BERT-Base R | BERT-Base F1 | BERT-Domain P | BERT-Domain R | BERT-Domain F1 |
|---|---|---|---|---|---|---|---|
| 1 | SoftMax | 88.7 | 91.7 | 90.2 | 91.7 | 92.1 | 91.9 |
| 2 | Bi-LSTM-CRF | 90.3 | 91.3 | 91.8 | 92.7 | 92.3 | 92.5 |
| 3 | Bi-LSTM-Attn-CRF | 92.2 | 90.5 | 91.4 | 91.0 | 92.1 | 91.6 |

| # | Review Text | BERT-Base | BERT-Domain |
|---|---|---|---|
| 1 | I tried their wazeri platter which is grilled chicken, beef kafta kabab and rice. | wazeri platter, chicken, beef kafta kabab, rice | wazeri platter, grilled chicken, beef kafta kabab, rice |
| 2 | I never finish the rice and always ask for a takeaway container for the naan + leftover rice. | rice, naan, leftover rice | rice, naan, rice |
| 3 | We both ordered green tea and it had a very unique taste. | tea | green tea |
| 4 | The red spicy tomato-based sauce on the side was delicious. | sauce | red spicy tomato-based sauce |
| 5 | I would say it’s like the size of 2 medium sized chicken breasts for the order. | chicken | chicken breasts |
| 6 | Also check out Firni (a sweet custard) for desert | sweet custard | Firni, sweet custard |
| 7 | Chicken is well cooked tooOmg, super delicious kebabs. | chicken, tooOmg, kebabs | Chicken, kebabs |
| 8 | It’s called Double Ka Meeta and we loved it! | Double Ka Meeta | |
| 9 | Dessert options were kesari, gajar halwa, strawberry shortcake and chocolate mousse. | kesari, gajar halwa, strawberry shortcake | kesari, gajar halwa, strawberry shortcake, chocolate mousse |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Syed, M.H.; Chung, S.-T. MenuNER: Domain-Adapted BERT Based NER Approach for a Domain with Limited Dataset and Its Application to Food Menu Domain. Appl. Sci. 2021, 11, 6007. https://doi.org/10.3390/app11136007
