Diversity of Strategies for Motivation in Learning (DSML)—A New Measure for Measuring Student Academic Motivation

Abstract

1. Introduction

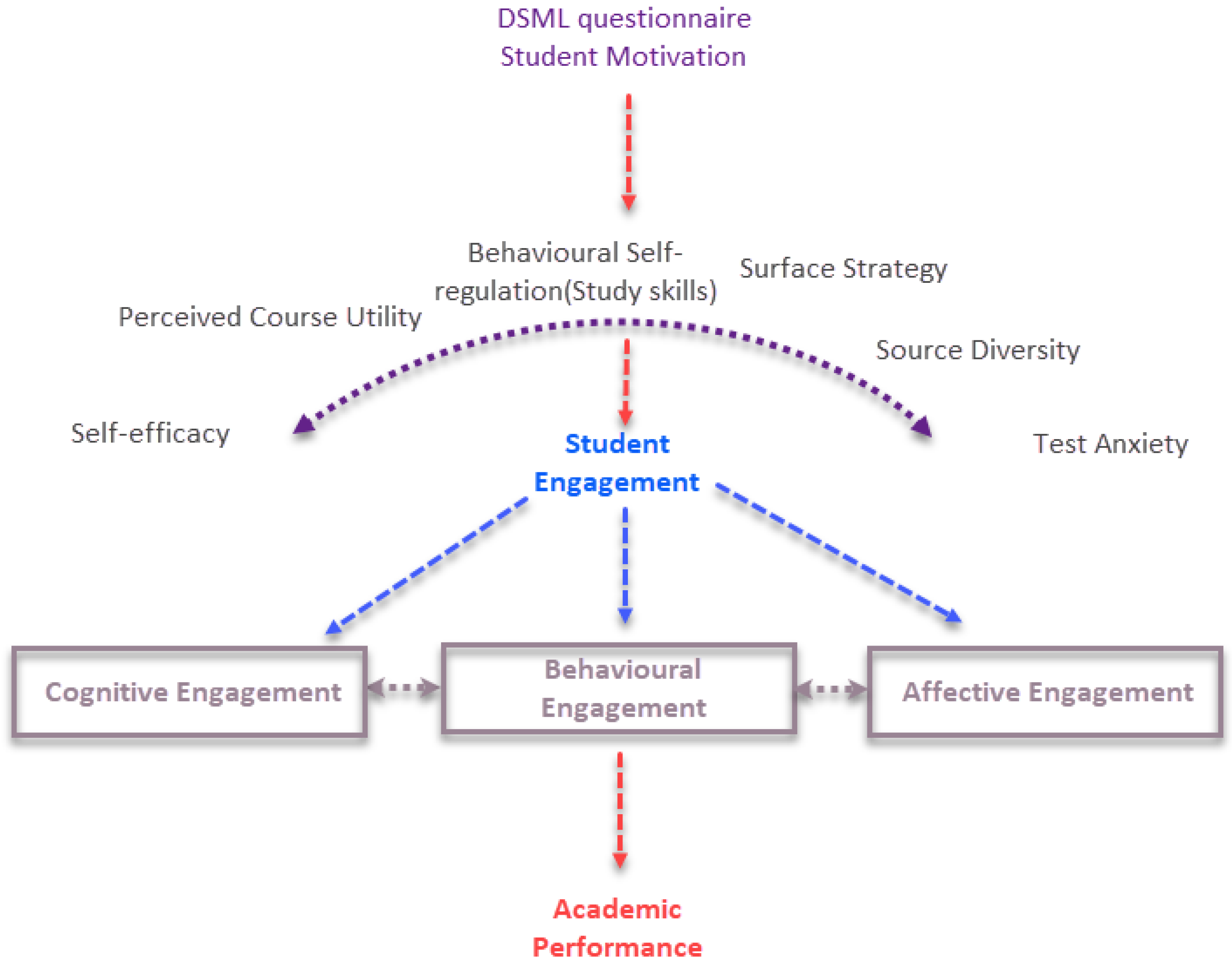

The Current Study

2. Methodology

2.1. Questionnaire Development

2.2. Participants and Procedure

3. Results

3.1. Exploratory Factor Analysis Results (N = 559)

3.2. Confirmatory Factor Analysis Results (n = 461)

3.3. CFA Results and Grade Boundaries

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Question | MSLQ | Changed? | Final DSML |
|---|---|---|---|
| I like material that really challenges me, even if it is difficult to learn. | 1 (SE) & 16 (GO) | Yes | No |
| I sometimes procrastinate to the extent that it negatively impacts my work. | No—New | N/A | DSML1 SR |
| When I take a test, I worry about my performance. | 14 (TA) | Yes | DSML2 TA |
| I think I will be able to use what I learn in this course elsewhere in life. | 4 (TV) | Yes | DSML3 CU |
| I believe I will achieve a high grade this year. | 5 (SE) | Yes | DSML4 SE |
| I should begin my coursework earlier than I do. | No—New | N/A | DSML5 |
| I put less effort into studying for classes that I don’t enjoy. | No—New | N/A | No |
| When I take a test, I worry about being unable to answer the questions. | 8 (TA) | Yes | DSML6 TA |
| I believe I am capable of getting a high mark in this subject. | 5 (SE) & 21 (SE) | Yes | DSML7 SE |
| My goal is to do just enough to pass the course. | No—New | N/A | No |
| I regularly access the virtual learning environment (VLE), e.g., Blackboard/Vital to look at course material. | No—New | N/A | No |
| I am confident that I can understand the basic concepts in this course. | 12 (SE) | Minor | DSML8 SE |
| I take course material at face value and don’t question it further. | No—New | N/A | No |
| When I take tests I think about the consequences of failing. | 14 (TA) | No | DSML9 TA |
| I am confident that I can understand the most complex/difficult concepts in this course. | 6 (SE) & 15 (SE) | Yes | DSML10 SE |
| I prefer course material that arouses my curiosity, even if it is difficult to learn. | 16 (GO) | Minor | No |
| I am personally interested in the content of this course. | 17 (TV) | Yes | DSML11 CU |
| I only access the virtual learning environment (VLE), e.g., Blackboard/Vital when I need to submit an assessment or take a test. | No-New | N/A | No |
| I have an uneasy, upset feeling when I take a test. | 19 (TA) | Minor | DSML12 TA |
| I feel that virtually any topic can be highly interesting once I get into it. | No—RSPQ 5 | No | No |
| When course work is difficult, I give up or submit work I know is not my best. | 60 (ER) | Yes | No |
| I work hard at my studies because I find the material interesting. | 74 (ER) | Yes | No |
| I think the material in this course will be useful in my studies. | 23 (TV) | Minor | DSML13 |
| I make good use of various information sources (lectures, readings, videos, websites, etc.) to help me memorize information. | 53 (EL) | Yes | DSML14 |
| I find the best way to pass examinations is to try to remember answers to likely questions. | No—RSPQ 20 | No | No |
| When studying for this class, I often repeatedly go over the same course material to make sure I understand it. | 55 (MC) & 63 (OR) | Yes | DSML15 SS |
| Sometimes I cannot motivate myself to study, even if I know I should. | No—New | N/A | DSML16 SR |
| If I use effective study techniques, then I will get a good grade. | No—New | N/A | No |
| I am not confident that I possess the skills needed to pass this course. | 31 (SE) & 29 (SE) | Yes | No |
| I am motivated to get a good grade to please other people in my life. | 30 (GO) | Yes | No |
| I am motivated to get a good grade for my own satisfaction. | 7 (GO) | Yes | No |
| I make good use of various information sources (lectures, readings, videos, websites, etc.) to help me understand. | 53 (EL) | Yes | DSML17 SD |
| During class time I often miss important points because I’m thinking of other things. | 33 (MC) | No | DSML18 SR |
| Poor grades are largely due to lack of support from my university/instructors. | 9 (COL) | Yes | No |
| If I receive a poor grade, I recognize what I could have done better. | No—New | N/A | No |
| I make up questions/quizzes to help focus my study. | No—MAI 22 | Yes | No |
| I often feel so bored when I study for this course that I quit before I finish what I planned to do. | 37 (ER) | Yes | No |
| I use the most effective learning strategies in my studies. | No—New | N/A | No |
| I go back to previously made notes and readings to refresh my understanding of them. | 80 (TaS) & 42 (OR) | Yes | DSML19 SS |
| I use the internet to find materials to help support my studies. (Wikipedia, YouTube, social media, etc.) | No—New | N/A | No |
| For this question, please select: “Not at all true of me”. | CHECK | CHECK | CHECK |
| If I get confused when studying, I take steps to clarify any misunderstandings. | 41 (MC) | Yes | No |
| When studying for this class, I often repeatedly go over the same course material to memorize it. | 59 (RE) & 72 (RE) | Yes | DSML20 SS |
| I work hard to do well in this course, even if I don’t like what we are doing. | 48 (ER) | No | No |
| I make simple charts, diagrams or tables to help me organize course material. | 49 (OR) | No | No |
| I treat the course material as a starting point and try to develop my own ideas about it. | 51 (CT) | No | No |
| I find it hard to stick to a study schedule. | 52 (TaS) | No | DSML21 SR |
| When I study for this course, I examine a range of information from different sources (websites, videos, textbooks, journals, etc.). | 53 (EL) | Yes | DSML22 SD |
| Before I study new course material thoroughly, I often skim it to see how it is organized. | 54 (SR) | No | No |
| I ask myself questions to make sure I understand the material I have been studying. | 55 (MC) | Minor | No |
| I often find that I have been studying but don’t fully understand the material. | 76 (MC) | Yes | No |
| I find I can get by in most assessments by memorizing key points rather than trying to understand the topic. | No—RSPQ 11 | Minor | No |
| I try to relate ideas in this subject to issues in the real world. | No—New | N/A | No |
| When studying, I try to relate the material to what I already know. | 64 (EL) | Minor | No |
| When I study for this course, I write summaries of the main ideas presented. | 67 (EL) | Yes | No |
| I try to understand the material in this class by making connections between the different types of information provided (lectures, readings, videos, websites etc.). | 53 (EL) | Yes | No |
| I make sure I keep up with the demands of my course. | 70 (TaS) | Yes | No |
| When presented with a theory or conclusion, I consider possible alternative explanations. | 47 (CT) & 71 (CT) | Yes | No |
| I make lists of important terms or key words for this course and memorize them. | 72 (RE) | Yes | No |
| I study the course materials regularly. | 73 (TaS) | Yes | No |
| I put less effort into studying subjects I find boring and uninteresting. | 74 (ER) | Yes | No |
| Other things in my life tend to take priority over this course. | 77 (TaS) & 33 (MC) | Yes | DSML23 SR |
| I set goals for myself in order to direct my activities in each study period. | 78 (MC) | Minor | No |
| I use an academic database to help find materials to help support my studies. | No—New | N/A | No |
| I rarely find time to review my notes or readings. | 80 (TaS) | Minor | DSML24 SR |
| My answers are a fair reflection of my true feelings. | CHECK | CHECK | CHECK |
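
The "Final DSML" column above assigns most retained items to a factor. As a minimal sketch of how subscale scores could be formed from it, the Python below averages each respondent's ratings within each factor. Several details are assumptions rather than the authors' documented procedure: the 1–7 response scale (the MSLQ convention), scoring by the item mean, the optional reverse keying, and the hypothetical names `DSML_FACTORS` and `score_respondent`. DSML5, DSML13 and DSML14 carry no factor label in the table, so the sketch leaves them out rather than guessing their factor.

```python
from statistics import mean

# Hypothetical mapping read from the "Final DSML" column of Appendix A.
# DSML5, DSML13 and DSML14 have no factor label there and are omitted.
DSML_FACTORS = {
    "Self-Regulation": [1, 16, 18, 21, 23, 24],
    "Test Anxiety": [2, 6, 9, 12],
    "Self-Efficacy": [4, 7, 8, 10],
    "Course Utility": [3, 11],
    "Source Diversity": [17, 22],
    "Study Strategies": [15, 19, 20],
}

SCALE_MIN, SCALE_MAX = 1, 7  # assumed 7-point, MSLQ-style response scale


def score_respondent(responses, reverse_keyed=frozenset()):
    """Return the mean item rating per factor for one respondent.

    responses maps DSML item number -> raw rating. Unanswered items are
    skipped; a factor with no answered items scores None. Items listed in
    reverse_keyed are flipped (1 <-> 7, 2 <-> 6, ...) before averaging.
    """
    scores = {}
    for factor, items in DSML_FACTORS.items():
        values = []
        for item in items:
            raw = responses.get(item)
            if raw is None:
                continue
            if not SCALE_MIN <= raw <= SCALE_MAX:
                raise ValueError(f"DSML{item}: rating {raw} outside {SCALE_MIN}-{SCALE_MAX}")
            values.append(SCALE_MIN + SCALE_MAX - raw if item in reverse_keyed else raw)
        scores[factor] = mean(values) if values else None
    return scores
```

The Self-Regulation items are worded negatively (for example, "I find it hard to stick to a study schedule"), so an analyst might pass `reverse_keyed=set(DSML_FACTORS["Self-Regulation"])` to make higher scores mean stronger self-regulation; the table does not say whether the authors did so.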

References

- Korhonen, V.; Mattsson, M.; Inkinen, M.; Toom, A. Understanding the multidimensional nature of student engagement during the first year of higher education. Front. Psychol. 2019, 10, 1056.
- Schellings, G.; Van Hout-Wolters, B. Measuring strategy use with self-report instruments: Theoretical and empirical considerations. Metacognition Learn. 2011, 6, 83–90.
- Berger, J.L.; Karabenick, S.A. Construct validity of self-reported metacognitive learning strategies. Educ. Assess. 2016, 21, 19–33.
- Lovelace, M.; Brickman, P. Best practices for measuring students’ attitudes toward learning science. CBE Life Sci. Educ. 2013, 12, 606–617.
- Bandura, A.; Freeman, W.H.; Lightsey, R. Self-efficacy: The exercise of control. J. Cogn. Psychother. 1997, 13, 158–166.
- Biggs, J. What do inventories of students’ learning processes really measure? A theoretical review and clarification. Br. J. Educ. Psychol. 1993, 63, 3–19.
- Zimmerman, B.J. Self-regulating academic learning and achievement: The emergence of a social cognitive perspective. Educ. Psychol. Rev. 1990, 2, 173–201.
- Supervía, U.P.; Bordás, S.C.; Robres, Q.A. The mediating role of self-efficacy in the relationship between resilience and academic performance in adolescence. Learn. Motiv. 2022, 78, 101814.
- Bakhtiarvand, F.; Ahmadian, S.; Delrooz, K.; Farahani, H.A. The moderating effect of achievement motivation on relationship of learning approaches and academic achievement. Procedia Soc. Behav. Sci. 2011, 28, 486–488.
- Xu, J.; Qiu, X. The influence of self-regulation on learner’s behavioral intention to reuse E-learning systems: A moderated mediation model. Front. Psychol. 2021, 12, 763889.
- Ning, H.K.; Downing, K. Influence of student learning experience on academic performance: The mediator and moderator effects of self-regulation and motivation. Br. Educ. Res. J. 2012, 38, 219–237.
- Biggs, J.; Kember, D.; Leung, D.Y. The revised two-factor study process questionnaire: RSPQ-2F. Br. J. Educ. Psychol. 2001, 71, 133–149.
- Schraw, G.; Dennison, R.S. Assessing metacognitive awareness. Contemp. Educ. Psychol. 1994, 19, 460–475.
- Pintrich, P.R.; de Groot, E.V. Motivational and self-regulated learning components of classroom academic performance. J. Educ. Psychol. 1990, 82, 33–40.
- Hancock, D.R.; Bray, M.; Nason, S.A. Influencing university students’ achievement and motivation in a technology course. J. Educ. Res. 2002, 95, 365–372.
- Rotgans, J.; Schmidt, H. Examination of the context-specific nature of self-regulated learning. Educ. Stud. 2009, 35, 239–253.
- Broadbent, J. Comparing online and blended learner’s self-regulated learning strategies and academic performance. Internet High. Educ. 2017, 33, 24–32.
- Google Scholar. Available online: https://scholar.google.com/scholar?cites=7176398456277230671&as_sdt=2005&sciodt=0,5&hl=en (accessed on 16 July 2022).
- Laird, T.; Shoup, R.; Kuh, G.D. Measuring Deep Approaches to Learning Using the National Survey of Student Engagement. In Proceedings of the Association for Institutional Research Annual Forum, Chicago, IL, USA, 14–18 May 2006.
- Jackson, C.R. Validating and adapting the motivated strategies for learning questionnaire (MSLQ) for STEM courses at an HBCU. AERA Open 2018, 4, 2332858418809346.
- Credé, M.; Phillips, L.A. A meta-analytic review of the Motivated Strategies for Learning Questionnaire. Learn. Individ. Differ. 2011, 21, 337–346.
- Whitebread, D.; Coltman, P.; Pasternak, D.P.; Sangster, C.; Grau, V.; Bingham, S.; Almeqdad, Q.; Demetriou, D. The development of two observational tools for assessing metacognition and self-regulated learning in young children. Metacognition Learn. 2009, 4, 63–85.
- Cho, M.H.; Summers, J. Factor validity of the motivated strategies for learning questionnaire (MSLQ) in asynchronous online learning environments (AOLE). J. Interact. Learn. Res. 2012, 23, 5–28.
- Richardson, J.T. Methodological issues in questionnaire-based research on student learning in higher education. Educ. Psychol. Rev. 2004, 16, 347–358.
- Hernandez, I.A.; Silverman, D.M.; Destin, M. From deficit to benefit: Highlighting lower-SES students’ background-specific strengths reinforces their academic persistence. J. Exp. Soc. Psychol. 2021, 92, 104080.
- Winne, P.H.; Baker, R.S. The potentials of educational data mining for researching metacognition, motivation and self-regulated learning. J. Educ. Data Min. 2013, 5, 1–8.
- Dunn, K.E.; Lo, W.J.; Mulvenon, S.W.; Sutcliffe, R. Revisiting the motivated strategies for learning questionnaire: A theoretical and statistical reevaluation of the metacognitive self-regulation and effort regulation subscales. Educ. Psychol. Meas. 2012, 72, 312–331.
- Hilpert, J.C.; Stempien, J.; van der Hoeven Kraft, K.J.; Husman, J. Evidence for the latent factor structure of the MSLQ: A new conceptualization of an established questionnaire. SAGE Open 2013, 3, 2158244013510305.
- Griese, B.; Lehmann, M.; Roesken-Winter, B. Refining questionnaire-based assessment of STEM students’ learning strategies. Int. J. STEM Educ. 2015, 2, 1–12.
- Khosim, F.; Awang, M.I. Validity and reliability of the MSLQ Malay version in measuring the level of motivation and self-regulated learning. Int. J. Sci. Technol. Res. 2020, 9, 903–905.
- Pintrich, P.R.; Smith, D.A.F.; García, T.; McKeachie, W.J. The Motivated Strategies for Learning Questionnaire (MSLQ); NCRIPTAL: Ann Arbor, MI, USA, 1991.
- Muis, K.R.; Winne, P.H.; Jamieson-Noel, D. Using a multi-trait-multimethod analysis to examine conceptual similarities of three self-regulated learning inventories. Br. J. Educ. Psychol. 2007, 77, 177–195.
- Gable, R.K. Review of the Motivated Strategies for Learning Questionnaire. In The Thirteenth Mental Measurements Yearbook; Impara, C., Plake, B.S., Eds.; Buros Institute of Mental Measurements: Lincoln, NE, USA, 1998; pp. 681–682.
- Limniou, M.; Varga-Atkins, T.; Hands, C.; Elshamaa, M. Learning, student digital capabilities and academic performance over the COVID-19 pandemic. Educ. Sci. 2021, 11, 361.
- Bayerl, K. The Student-Centered Assessment Network. Testing Change Ideas in Real Time. Available online: https://files.eric.ed.gov/fulltext/ED611292.pdf (accessed on 1 August 2022).
- Valverde-Berrocoso, J.; Garrido-Arroyo, M.D.C.; Burgos-Videla, C.; Morales-Cevallos, M.B. Trends in educational research about e-learning: A systematic literature review (2009–2018). Sustainability 2020, 12, 5153.
- Richardson, M.; Abraham, C.; Bond, R. Psychological correlates of university students’ academic performance: A systematic review and meta-analysis. Psychol. Bull. 2012, 138, 353–387.
- Sæle, R.G.; Dahl, T.I.; Sørlie, T.; Friborg, O. Relationships between learning approach, procrastination and academic achievement amongst first-year university students. High. Educ. 2017, 74, 757–774.
- Isik, U.; Wilschut, J.; Croiset, G.; Kusurkar, R.A. The role of study strategy in motivation and academic performance of ethnic minority and majority students: A structural equation model. Adv. Health Sci. Educ. 2018, 23, 921–935.
- Seipp, B. Anxiety and academic performance: A meta-analysis of findings. Anxiety Res. 1991, 4, 27–41.
- Ali, B.J.; Anwar, G. Anxiety and foreign language learning: Analysis of students’ anxiety towards foreign language learning. Int. J. Engl. Lit. Soc. Sci. 2021, 6, 234–244.
- Pekrun, R.; Hall, N.C.; Goetz, T.; Perry, R.P. Boredom and academic achievement: Testing a model of reciprocal causation. J. Educ. Psychol. 2014, 106, 696.
- Wolters, C.A. Understanding procrastination from a self-regulated learning perspective. J. Educ. Psychol. 2003, 95, 179–187.
- Yamada, M.; Goda, Y.; Matsuda, T.; Kato, H.; Miyagawa, H. The Relationship among Self-Regulated Learning, Procrastination, and Learning Behaviors in Blended Learning Environment. In Proceedings of the International Association for Development of the Information Society (IADIS) International Conference on Cognition and Exploratory Learning in the Digital Age (CELDA), Maynooth, Greater Dublin, Ireland, 24–26 October 2015.
- Goda, Y.; Matsuda, T.; Yamada, M.; Saito, Y.; Kato, H.; Miyagawa, H. Ingenious attempts to develop self-regulated learning strategies with e-Learning: Focusing on time-management skill and learning habit. In Proceedings of the World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education, Vancouver, BC, Canada, 26 October 2009.
- Kemper, C.J.; Trapp, S.; Kathmann, N.; Samuel, D.B.; Ziegler, M. Short versus long scales in clinical assessment: Exploring the trade-off between resources saved and psychometric quality lost using two measures of obsessive–compulsive symptoms. Assessment 2019, 26, 767–782.
- Credé, M.; Harms, P.; Niehorster, S.; Gaye-Valentine, A. An evaluation of the consequences of using short measures of the Big Five personality traits. J. Personal. Soc. Psychol. 2012, 102, 874–888.
- Entwistle, N.; McCune, V. The conceptual bases of study strategy inventories. Educ. Psychol. Rev. 2004, 16, 325–345.
- Levy, P. Short-form tests: A methodological review. Psychol. Bull. 1968, 69, 410–416.
- Niemi, R.G.; Carmines, E.G.; McIver, J.P. The impact of scale length on reliability and validity. Qual. Quant. 1986, 20, 371–376.
- Smith, S.M.; Chen, C. Modified MSLQ: An analysis of academic motivation, self-regulated learning strategies, and scholastic performance in information systems courses. Issues Inf. Syst. 2017, 18, 129–140.
- Comrey, A.L.; Lee, H.B. A First Course in Factor Analysis; Erlbaum: Hillsdale, NJ, USA, 1992.
- Fabrigar, L.R.; Wegener, D.T.; MacCallum, R.C.; Strahan, E.J. Evaluating the use of exploratory factor analysis in psychological research. Psychol. Methods 1999, 4, 272–299.
- Beavers, A.S.; Lounsbury, J.W.; Richards, J.K.; Huck, S.W.; Skolits, G.J.; Esquivel, S.L. Practical considerations for using exploratory factor analysis in educational research. Pract. Assess. Res. Eval. 2013, 18, 6.
- Brown, T.A.; Moore, M.T. Confirmatory factor analysis. In Handbook of Structural Equation Modeling; Hoyle, R.H., Ed.; Guilford Publications: New York, NY, USA, 2012; pp. 361–379.
- Yeşilyurt, E. Metacognitive awareness and achievement focused motivation as a predictor of the study process. Int. J. Soc. Sci. Educ. 2013, 4, 1013–1026.
- Ogasawara, H. Correlations among maximum likelihood and weighted/unweighted least squares estimators in factor analysis. Behaviormetrika 2003, 30, 63–86.
- Gorsuch, R.L. Factor Analysis, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1983.
- Preacher, K.J.; Zhang, G.; Kim, C.; Mels, G. Choosing the optimal number of factors in exploratory factor analysis: A model selection perspective. Multivar. Behav. Res. 2013, 48, 28–56.
- Hays, R.D.; Anderson, R.T.; Revicki, D. Assessing reliability and validity of measurement in clinical trials. In Quality of Life Assessment in Clinical Trials Methods and Practice; Staquet, M.J., Hays, R.D., Fayers, P.M., Eds.; Oxford University Press: Oxford, UK, 1998; pp. 169–182.
- Pett, M.A.; Lackey, N.R.; Sullivan, J.J. Making Sense of Factor Analysis: The Use of Factor Analysis for Instrument Development in Health Care Research; Sage Publications: London, UK, 2003.
- Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. A Multidiscip. J. 1999, 6, 1–55.
- Justicia, F.; Pichardo, M.C.; Cano, F.; Berbén, A.B.G.; De la Fuente, J. The revised two factor study process questionnaire (R-SPQ-2F): Exploratory and confirmatory factor analyses at item level. Eur. J. Psychol. Educ. 2008, 23, 355–372.
- Kitsantas, A.; Winsler, A.; Huie, F. Self-regulation and ability predictors of academic success during college: A predictive validity study. J. Adv. Acad. 2008, 20, 42–68.
- Zusho, A.; Pintrich, P.R.; Coppola, B. Skill and will: The role of motivation and cognition in the learning of college chemistry. Int. J. Sci. Educ. 2003, 25, 1081–1094.
- Dörrenbächer, L.; Perels, F. Self-regulated learning profiles in college students: Their relationship to achievement, personality, and the effectiveness of an intervention to foster self-regulated learning. Learn. Individ. Differ. 2016, 51, 229–241.
- Soemantri, D.; Mccoll, G.; Dodds, A. Measuring medical students’ reflection on their learning: Modification and validation of the motivated strategies for learning questionnaire (MSLQ). BMC Med. Educ. 2018, 18, 1–10.
- Duncan, T.G.; McKeachie, W.J. The making of the motivated strategies for learning questionnaire. Educ. Psychol. 2005, 40, 117–128.
- Shea, P.; Bidjerano, T. Learning presence: Towards a theory of self-efficacy, self-regulation, and the development of a communities of inquiry in online and blended learning environments. Comput. Educ. 2010, 55, 1721–1731.
- Martin, A.J. Motivation and engagement: Conceptual, operational, and empirical clarity. In Handbook of Research on Student Engagement; Christenson, S.L., Reschly, A.L., Wylie, C., Eds.; Springer: Boston, MA, USA, 2012; pp. 303–311.
- Senior, R.M.; Bartholomew, P.; Soor, A.; Shepperd, D.; Bartholomew, N.; Senior, C. “The rules of engagement”: Student engagement and motivation to improve the quality of undergraduate learning. Front. Educ. 2018, 3, 32.
- Bowden, J.L.H.; Tickle, L.; Naumann, K. The four pillars of tertiary student engagement and success: A holistic measurement approach. Stud. High. Educ. 2021, 46, 1207–1224.
- Limniou, M.; Sedghi, N.; Kumari, D.; Drousiotis, E. Student engagement, learning environments and the COVID-19 pandemic: A comparison between psychology and engineering undergraduate students in the UK. Educ. Sci. 2022, 12, 671.
- Alsharif, M.; Limniou, M. Device and social media usage in a lecture theatre in a Saudi Arabia university: Students’ views. In Proceedings of the ECSM 2020 8th European Conference on Social Media, Larnaca, Cyprus, 2–3 July 2020.
- Tourangeau, R.; Rips, L.J.; Rasinski, K. The Psychology of Survey Response; Cambridge University Press: London, UK, 2000.
- Batteson, T.J.; Tormey, R.; Ritchie, T.D. Approaches to learning, metacognition and personality; an exploratory and confirmatory factor analysis. Procedia Soc. Behav. Sci. 2014, 116, 2561–2567.
- Meijs, C.; Neroni, J.; Gijselaers, H.J.; Leontjevas, R.; Kirschner, P.A.; de Groot, R.H. Motivated strategies for learning questionnaire part B revisited: New subscales for an adult distance education setting. Internet High. Educ. 2019, 40, 1–11.
- Hadwin, A.F.; Winne, P.H.; Stockley, D.B.; Nesbit, J.C.; Woszczyna, C. Context moderates students’ self-reports about how they study. J. Educ. Psychol. 2001, 93, 477–487.
- Eaves, M. The relevance of learning styles for international pedagogy in higher education. Teach. Teach. 2011, 17, 677–691.
- Ilker, E. A Validity and Reliability Study of the Motivated Strategies for Learning Questionnaire. Educ. Sci. Theory Pract. 2014, 14, 829–833.
- Hands, C.; Limniou, M. How does student access to a virtual learning environment (VLE) change during periods of disruption? J. High. Educ. Theory Pract. 2023, 23.
- Limniou, M.; Duret, D.; Hands, C. Comparisons between three disciplines regarding device usage in a lecture theatre, academic performance and learning. High. Educ. Pedagog. 2020, 5, 132–147.
- Stowell, J.R.; Bennett, D. Effects of online testing on student exam performance and test anxiety. J. Educ. Comput. Res. 2010, 42, 161–171.
- Ewell, S.N.; Josefson, C.C.; Ballen, C.J. Why did students report lower test anxiety during the COVID-19 pandemic? J. Microbiol. Biol. Educ. 2022, 23, e00282-e21.
- Heikkilä, A.; Lonka, K. Studying in higher education: Students’ approaches to learning, self-regulation, and cognitive strategies. Stud. High. Educ. 2006, 31, 99–117.
- Veenman, M.V. Learning to self-monitor and self-regulate. In Handbook of Research on Learning and Instruction; Mayer, R., Alexander, P., Eds.; Routledge: New York, NY, USA, 2011; pp. 197–218.

| Topic/Faculty | n | Percentage (%) |
|---|---|---|
| School of Psychology * | 382 | 33.9 |
| Faculty of Health and Life Sciences | 189 | 16.8 |
| Faculty of Science and Engineering | 140 | 12.6 |
| Faculty of Humanities and Social Sciences | 312 | 27.7 |
| School of Medicine | 52 | 4.6 |
| Other | 12 | 1.1 |

| Grade | n | Percentage (%) |
|---|---|---|
| First class (70–100) | 149 | 13.4 |
| 2:1 class (60–69) | 628 | 56.5 |
| 2:2 class (50–59) | 228 | 20.5 |
| Third class (40–49) | 28 | 2.5 |
| Failing grade (below 40) | 3 | 0.3 |
| Unable to estimate | 75 | 6.8 |
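
The grade bands above follow the UK undergraduate degree classification. A minimal sketch of mapping a percentage mark onto those bands is shown below, assuming the conventional boundaries of 70, 60, 50 and 40 and assuming that marks falling between bands (such as 69.5) are not rounded up; institutions differ on rounding rules. The function name `degree_class` is hypothetical.

```python
def degree_class(mark):
    """Map a percentage mark (0-100) to a UK undergraduate degree classification.

    Assumes conventional boundaries and no rounding between bands.
    """
    if not 0 <= mark <= 100:
        raise ValueError("mark must be between 0 and 100")
    if mark >= 70:
        return "First class"
    if mark >= 60:
        return "2:1 class"
    if mark >= 50:
        return "2:2 class"
    if mark >= 40:
        return "Third class"
    return "Failing grade"
```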

| Year of Study | n | Percentage (%) |
|---|---|---|
| First-year undergraduate | 553 | 49.1 |
| Second-year undergraduate | 307 | 27.3 |
| Third-year and above undergraduate | 164 | 14.7 |
| Post-taught students (Master’s) | 73 | 6.5 |
| Postgraduate research students (PhD) | 17 | 1.5 |
| Postdoc staff | 1 | 0.1 |

| EFA Step | Initial Suggested Factors | KMO | Items Removed |
|---|---|---|---|
| 1 | 11 | 0.88 | 28 |
| 2 | 8 | 0.83 | 6 |
| 3 | 8 | 0.83 | 1 |
| 4 | 8 | 0.83 | 1 |
| 5 | 7 | 0.81 | 1 |
| 6 | 7 | 0.82 | 2 |
| 7 | 6 | 0.82 | 1 |
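
The KMO column reports the Kaiser-Meyer-Olkin measure of sampling adequacy at each EFA step. It compares squared off-diagonal correlations r_ij with squared partial (anti-image) correlations p_ij, KMO = Σ r_ij² / (Σ r_ij² + Σ p_ij²) over i ≠ j: values near 1 mean the items share variance that common factors can explain, and values above roughly 0.8 are conventionally read as good. The plain-Python sketch below computes the overall KMO from a correlation matrix, using Gauss-Jordan inversion to obtain the partial correlations. The function names are illustrative; in practice an established routine such as `KMO()` in the R package psych would normally be used.

```python
from math import sqrt


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure for a correlation matrix (list of lists)."""
    inv = invert(corr)
    n = len(corr)
    r2 = p2 = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Partial correlation of items i and j, controlling for all other items.
            partial = -inv[i][j] / sqrt(inv[i][i] * inv[j][j])
            r2 += corr[i][j] ** 2
            p2 += partial ** 2
    return r2 / (r2 + p2)
```

With only two items the partial correlation equals the ordinary correlation, so the KMO is always 0.5; the index becomes informative only as more items share common variance.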

| RMSEA | 90% CI Lower | 90% CI Upper | TLI | BIC | χ² | df | p |
|---|---|---|---|---|---|---|---|
| 0.045 | 0.038 | 0.005 | 0.916 | 620.34 | 4384.66 | 147 | <0.001 |
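
RMSEA expresses model misfit per degree of freedom, adjusted for sample size. A common point-estimate formula is RMSEA = sqrt(max(χ² − df, 0) / (df × (N − 1))); some programs use N in place of N − 1, and the 90% confidence limits require inverting the noncentral χ² distribution, which this sketch does not attempt. Hu and Bentler (1999), in the reference list, suggest values close to 0.06 or below as indicating good fit. The function name is illustrative.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from a model chi-square, its degrees of freedom and sample size n."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    # Only misfit beyond its expected value (df) counts; a negative excess is truncated to zero.
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))
```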

| Factor | Contained Items | Model Variance (%) |
|---|---|---|
| 1. Self-Regulation | 7 | 22 |
| 2. Test Anxiety | 4 | 18 |
| 3. Self-Efficacy | 4 | 16 |
| 4. Source Diversity | 3 | 16 |
| 5. Course Utility | 3 | 15 |
| 6. Study Strategies | 4 | 13 |
| Total (final model) | 25 | 45 |
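
In this table the per-factor percentages sum to 100 while the total is 45, which suggests (an inference, not stated in the source) that each factor's figure is its share of the variance explained by the model, and that the six factors together account for 45% of the total item variance. Under the simplifying assumptions of standardized items and an orthogonal solution, both quantities follow from the sums of squared loadings, as in the sketch below; with an oblique rotation the per-factor sums overlap and do not partition the variance this cleanly. The function name and the loading matrix in the test are made up for illustration.

```python
def variance_explained(loadings):
    """Variance accounted for by each factor of an orthogonal solution.

    loadings[i][k] is the loading of standardized item i on factor k.
    Returns (proportion of total item variance per factor,
             total proportion explained,
             each factor's share of the explained variance).
    """
    n_items = len(loadings)
    n_factors = len(loadings[0])
    # Sum of squared loadings (SS loadings) for each factor.
    ss = [sum(row[k] ** 2 for row in loadings) for k in range(n_factors)]
    per_factor = [s / n_items for s in ss]  # each standardized item contributes variance 1
    total = sum(per_factor)
    share = [s / sum(ss) for s in ss]
    return per_factor, total, share
```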

| Estimates | RMSEA | 90% CI Lower | 90% CI Upper | TLI | CFI | χ² | df | p |
|---|---|---|---|---|---|---|---|---|
| Standard | 0.129 | 0.124 | 0.135 | 0.905 | 0.918 | 1808.11 | 237 | <0.001 |
| Robust | 0.040 | 0.033 | 0.047 | 0.883 | 0.900 | 390.230 | 237 | <0.001 |
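
CFI and TLI compare the fitted model with a baseline (independence) model whose χ² is not reported in the table, so they cannot be recomputed from the figures shown. The sketch below gives the standard maximum-likelihood formulas; the robust row would instead rely on scaled χ² statistics with further corrections not shown here. The cutoff helper applies the Hu and Bentler (1999) guidelines (CFI and TLI close to 0.95 or above, RMSEA close to 0.06 or below) as strict thresholds, which simplifies what those authors recommend. All function names are illustrative.

```python
def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative fit index of a model (m) against the baseline model (b)."""
    d_m = max(chi2_m - df_m, 0.0)  # estimated noncentrality of the model
    d_b = max(chi2_b - df_b, d_m)  # baseline noncentrality, never below the model's
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker-Lewis index (non-normed fit index); it can fall outside [0, 1]."""
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


def meets_hu_bentler(cfi_value, tli_value, rmsea_value):
    """Check the Hu and Bentler (1999) cutoffs, treated here as hard thresholds."""
    return cfi_value >= 0.95 and tli_value >= 0.95 and rmsea_value <= 0.06
```

For instance, `meets_hu_bentler(0.900, 0.883, 0.040)` with the robust row's values returns `False`, because CFI and TLI fall below 0.95 even though RMSEA is within its cutoff.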

| Factor (n) | M (±SD) | 1. Grade | 2. Self-Efficacy | 3. Self-Regulation | 4. Study Skills | 5. Test Anxiety | 6. Source Diversity | 7. Course Utility |
|---|---|---|---|---|---|---|---|---|
| 1. Grade (945) | 3.87 (±0.70) | - | 0.445 ** (n = 931) | 0.199 ** (n = 917) | 0.730 * (n = 992) | −0.105 * (n = 888) | 0.196 ** (n = 939) | 0.140 ** (n = 934) |
| 2. Self-efficacy (1006) | 4.95 (±0.93) | | - | 0.147 ** (n = 977) | 0.208 ** (n = 992) | −0.158 ** (n = 948) | 0.273 ** (n = 998) | 0.367 ** (n = 995) |
| 3. Self-regulation (992) | 4.47 (±1.09) | | | - | 0.158 ** (n = 980) | −0.143 ** (n = 937) | 0.196 ** (n = 985) | 0.132 ** (n = 979) |
| 4. Study skills (1007) | 5.31 (±1.09) | | | | - | 0.174 ** (n = 950) | 0.428 ** (n = 1000) | 0.339 ** (n = 994) |
| 5. Test anxiety (962) | 5.09 (±1.20) | | | | | - | 0.122 * (n = 954) | 0.069 (n = 950) |
| 6. Source diversity (1023) | 5.24 (±1.13) | | | | | | - | 0.422 ** (n = 1000) |
| 7. Course utility (1008) | 5.60 (±1.01) | | | | | | | - |
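
The n reported in each cell differs from pair to pair, which suggests the correlations were computed on all cases available for each pair of variables (pairwise deletion) rather than on one complete-case sample; the source does not say so explicitly. A minimal plain-Python sketch of a pairwise-deletion Pearson correlation follows, together with the t statistic (n − 2 degrees of freedom) on which the usual significance test of r is based. Missing responses are assumed to be coded as `None`, and the function names are illustrative; `scipy.stats.pearsonr` could supply a p-value once incomplete pairs have been dropped.

```python
from math import sqrt


def pairwise_pearson(x, y):
    """Pearson r over the cases where both x and y are present (pairwise deletion).

    None marks a missing response. Returns (r, number of pairs used).
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 3:
        raise ValueError("need at least three complete pairs")
    mx = sum(a for a, _ in pairs) / n
    my = sum(b for _, b in pairs) / n
    sxy = sum((a - mx) * (b - my) for a, b in pairs)
    sxx = sum((a - mx) ** 2 for a, _ in pairs)
    syy = sum((b - my) ** 2 for _, b in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable is constant over the complete pairs")
    return sxy / sqrt(sxx * syy), n


def t_statistic(r, n):
    """t statistic with n - 2 degrees of freedom for testing H0: rho = 0."""
    if abs(r) >= 1:
        raise ValueError("t is undefined for |r| = 1")
    return r * sqrt((n - 2) / (1 - r * r))
```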

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hands, C.; Limniou, M. Diversity of Strategies for Motivation in Learning (DSML)—A New Measure for Measuring Student Academic Motivation. Behav. Sci. 2023, 13, 301. https://doi.org/10.3390/bs13040301
