Effect of Signal Design of Autonomous Vehicle Intention Presentation on Pedestrians’ Cognition

Abstract

1. Introduction

2. Literature Review

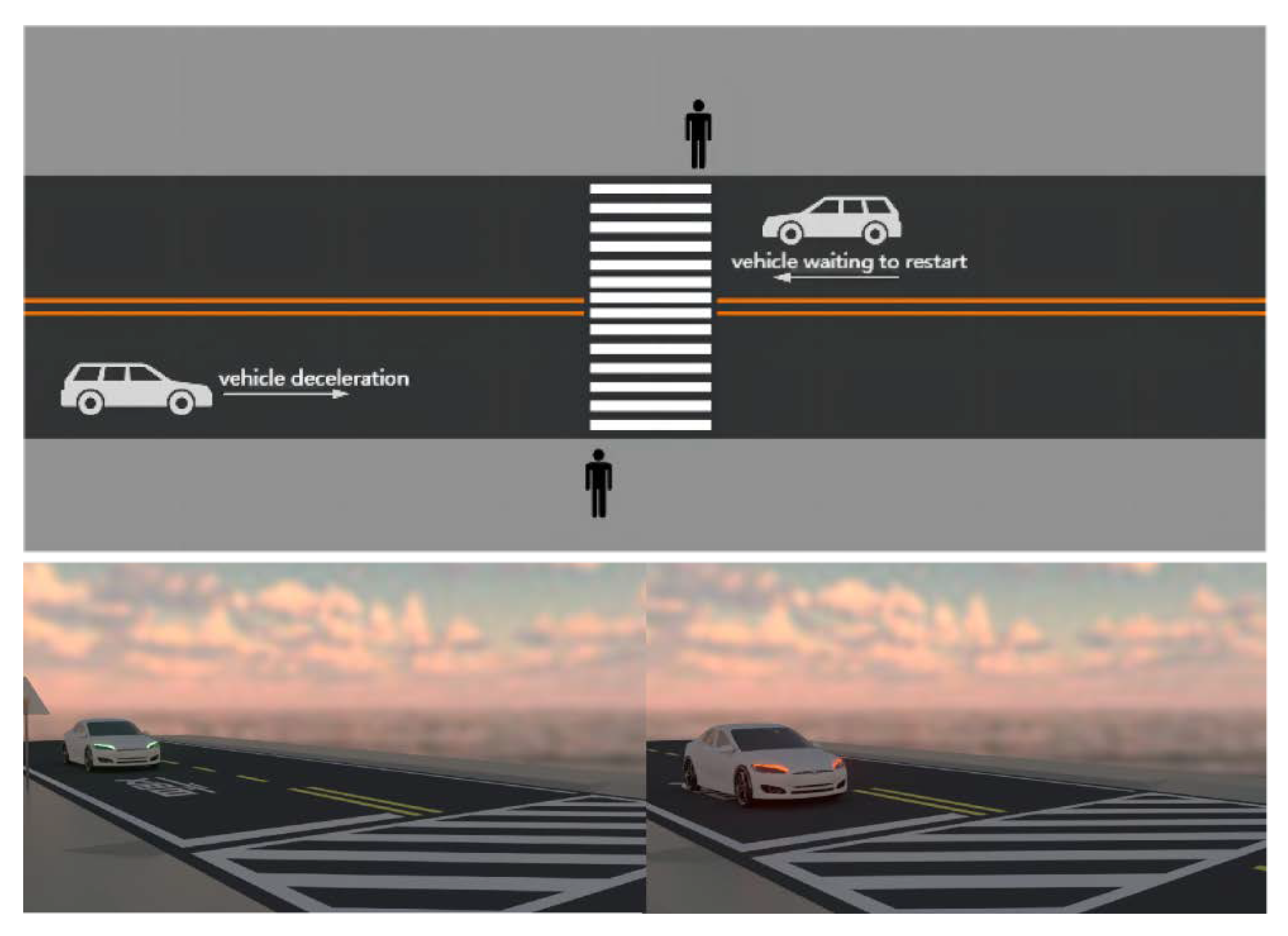

3. Experimental Design

3.1. Experimental Sample Setting

3.2. Experimental Procedure

4. Results and Discussion

4.1. Reliability Analysis of Questionnaire

4.2. Analysis Results of Vehicle Deceleration Scenario

4.3. Analysis Results of Waiting-to-Restart Scenario

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Interactive Design Elements of Autonomous Driving | Type | Parameter |
|---|---|---|
| Lighting | Color | Red |
| | | Green |
| | Status | Flash |
| | | Always on |
| Sound | Melody | Single tone |
| | | Continuous tone |
| | Rhythm | Fast: 0.5 s/time |
| | | Slow: 0.8 s/time |
| | Frequency | High frequency: 1000–3000 Hz |
| | | Low frequency: 500–880 Hz |
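
The five two-level design elements listed above can be crossed into a set of stimulus conditions. The sketch below assumes a full factorial crossing, which is consistent with the video numbers in the results tables running up to 32; the mapping from a given combination to a specific video number is not stated here, so none is assigned. The dictionary and function names are illustrative only.

```python
from itertools import product

# Two-level factors as listed in the design-element table.
FACTORS = {
    "Color": ["Red", "Green"],
    "Lighting": ["Flash", "Always on"],
    "Melody": ["Single tone", "Continuous tone"],
    "Rhythm": ["Fast (0.5 s/time)", "Slow (0.8 s/time)"],
    "Frequency": ["High (1000-3000 Hz)", "Low (500-880 Hz)"],
}


def enumerate_conditions(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


conditions = enumerate_conditions(FACTORS)
red_count = sum(1 for c in conditions if c["Color"] == "Red")

print(len(conditions))  # number of distinct signal combinations
print(red_count)        # combinations that use a red light
```

Under this assumption each level of each factor appears in half of the combinations, which matches the row totals of 16 per level in the chi-square tables.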

| Item | Option | Number of People | Percentage (%) |
|---|---|---|---|
| Gender | Male | 17 | 48.57 |
| | Female | 18 | 51.43 |
| Age | Youth | 17 | 48.57 |
| | Middle-aged and elderly | 18 | 51.43 |
| Driving experience | No driving experience | 7 | 20.00 |
| | Less than 1 year | 6 | 17.14 |
| | 1–3 years | 5 | 14.29 |
| | 4–10 years | 3 | 8.57 |
| | More than 10 years | 14 | 40.00 |
| Traffic accident | With accidents | 17 | 48.57 |
| | Without accidents | 18 | 51.43 |
| Role in the accident | Driving | 13 | 37.14 |
| | Motorcycle riders | 4 | 11.43 |
| | No | 18 | 51.43 |

| Road-User First | Color | Lighting | Rhythm | Frequency | Melody | Percentage |
|---|---|---|---|---|---|---|
| video1 | Green | Always on | Fast | Low | Single tone | 71.4% |
| video23 | Green | Flash | Slow | High | Single tone | 71.4% |
| video27 | Green | Flash | Slow | Low | Continuous tone | 71.4% |
| video31 | Green | Flash | Slow | High | Continuous tone | 71.4% |
| video21 | Green | Always on | Slow | High | Single tone | 68.6% |
| video25 | Green | Always on | Slow | Low | Continuous tone | 68.6% |

| Vehicle First | Color | Lighting | Rhythm | Frequency | Melody | Percentage |
|---|---|---|---|---|---|---|
| video26 | Red | Flash | Fast | High | Single tone | 77.1% |
| video32 | Red | Always on | Fast | Low | Single tone | 74.3% |
| video18 | Red | Flash | Fast | High | Continuous tone | 68.6% |
| video30 | Red | Flash | Fast | Low | Single tone | 68.6% |
| video24 | Red | Always on | Fast | Low | Continuous tone | 68.6% |
| video28 | Red | Always on | Fast | High | Single tone | 68.6% |
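
The percentages in these two tables can be read back as participant counts. The sketch below assumes that each percentage is the share of the 35 participants (17 male and 18 female in the demographics table) who gave that judgment for the video; this reading is an assumption, and the helper names are illustrative.

```python
PARTICIPANTS = 35  # assumed denominator: 17 male + 18 female participants


def count_from_percentage(pct, n=PARTICIPANTS):
    """Convert a reported percentage back to the nearest whole participant count."""
    return round(pct / 100 * n)


def percentage_from_count(count, n=PARTICIPANTS):
    """Express a participant count as a percentage rounded to one decimal place."""
    return round(100 * count / n, 1)


reported = [71.4, 68.6, 77.1, 74.3]
counts = [count_from_percentage(p) for p in reported]
round_trip = [percentage_from_count(c) for c in counts]

print(counts)      # participant counts implied by the reported percentages
print(round_trip)  # percentages recomputed from those counts
```

Each reported percentage round-trips through a whole number of participants, which is what one would expect if the denominator is indeed 35.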

| Item | Value | Deceleration Intention 1.0, n (%) | Deceleration Intention 2.0, n (%) | Deceleration Intention 3.0, n (%) | χ² | p |
|---|---|---|---|---|---|---|
| Color | Green | 6 (100.00) | 0 (0.00) | 10 (50.00) | 12.000 | 0.002 ** |
| | Red | 0 (0.00) | 6 (100.00) | 10 (50.00) | | |
| Lighting | Always on | 3 (50.00) | 3 (50.00) | 10 (50.00) | 0.000 | 1.000 |
| | Flash | 3 (50.00) | 3 (50.00) | 10 (50.00) | | |
| Rhythm | Fast | 1 (16.67) | 6 (100.00) | 9 (45.00) | 8.867 | 0.012 * |
| | Slow | 5 (83.33) | 0 (0.00) | 11 (55.00) | | |
| Frequency | Low | 3 (50.00) | 3 (50.00) | 10 (50.00) | 0.000 | 1.000 |
| | High | 3 (50.00) | 3 (50.00) | 10 (50.00) | | |
| Melody | Single tone | 3 (50.00) | 4 (66.67) | 9 (45.00) | 0.867 | 0.648 |
| | Dual tone | 3 (50.00) | 2 (33.33) | 11 (55.00) | | |
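
The χ² and p values in this table can be recomputed from the counts alone. The following is a minimal sketch of a Pearson chi-square test of independence on a 2 × 3 count table, not the authors' analysis script. With 2 degrees of freedom the upper-tail p-value has the closed form exp(−χ²/2), so no statistics library is needed; the function names are illustrative.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


def p_value_df2(chi2):
    """Upper-tail p-value of a chi-square distribution with exactly 2 degrees of freedom."""
    return math.exp(-chi2 / 2.0)


# Rows are the two levels of a factor; columns are intention categories 1.0, 2.0, 3.0.
color = [[6, 0, 10], [0, 6, 10]]
rhythm = [[1, 6, 9], [5, 0, 11]]

for name, table in (("Color", color), ("Rhythm", rhythm)):
    chi2, dof = chi_square_independence(table)
    print(name, round(chi2, 3), dof, round(p_value_df2(chi2), 3))
```

The recomputed statistics agree with the tabulated values for Color (12.000, p = 0.002) and Rhythm (8.867, p = 0.012).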

| Effect | Value | F | Hypothesis df | Error df | Significance | ηp² |
|---|---|---|---|---|---|---|
| Color | 0.940 | 5631.287 | 3.000 | 1086.000 | 0.000 ** | 0.940 |
| Lighting | 0.010 | 3.477 | 3.000 | 1086.000 | 0.016 ** | 0.010 |
| Melody | 0.010 | 3.487 | 3.000 | 1086.000 | 0.015 ** | 0.010 |
| Color × Rhythm | 0.008 | 2.926 | 3.000 | 1086.000 | 0.033 ** | 0.008 |
| Color × Melody | 0.022 | 8.248 | 3.000 | 1086.000 | 0.000 ** | 0.022 |
| Color × Lighting × Rhythm × Melody | 0.009 | 3.453 | 3.000 | 1086.000 | 0.016 ** | 0.009 |
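
The partial eta squared column of this table can be cross-checked against the F statistics and degrees of freedom. The sketch below uses the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error); it is offered as a consistency check under that identity rather than as the procedure the authors ran, and the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    explained = f_value * df_effect
    return explained / (explained + df_error)


# (effect, F, effect df, error df) taken from the multivariate test table.
rows = [
    ("Color", 5631.287, 3, 1086),
    ("Lighting", 3.477, 3, 1086),
    ("Color x Rhythm", 2.926, 3, 1086),
    ("Color x Melody", 8.248, 3, 1086),
]

for effect, f_value, df_effect, df_error in rows:
    print(effect, round(partial_eta_squared(f_value, df_effect, df_error), 3))
```

The recomputed values match the tabulated ones to three decimals, and they show how small every effect other than Color is despite reaching significance.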

| Dependent Variable | Source | Type 2 SS | Degree of Freedom | Mean Square | F | Significance | ηp² |
|---|---|---|---|---|---|---|---|
| Usability | Color | 9.844 | 1 | 9.844 | 10.448 | 0.001 ** | 0.010 |
| | Color × Rhythm | 22.008 | 1 | 22.008 | 23.360 | 0.000 ** | 0.021 |
| | Color × Melody | 9.108 | 1 | 9.108 | 9.668 | 0.002 ** | 0.009 |
| Usefulness | Color | 6.758 | 1 | 6.758 | 7.036 | 0.008 ** | 0.006 |
| | Lighting | 6.758 | 1 | 6.758 | 7.036 | 0.008 ** | 0.006 |
| | Color × Rhythm | 15.322 | 1 | 15.322 | 15.953 | 0.000 ** | 0.014 |
| | Color × Melody | 4.251 | 1 | 4.251 | 4.426 | 0.036 * | 0.004 |
| Satisfaction | Color | 5.432 | 1 | 5.432 | 5.609 | 0.018 * | 0.005 |
| | Lighting | 8.229 | 1 | 8.229 | 8.497 | 0.004 ** | 0.008 |
| | Melody | 6.604 | 1 | 6.604 | 6.819 | 0.009 ** | 0.006 |
| | Color × Rhythm | 7.557 | 1 | 7.557 | 7.803 | 0.005 ** | 0.007 |
| | Color × Melody | 5.157 | 1 | 5.157 | 5.325 | 0.021 * | 0.005 |

| Dependent Variable | Color | Factor | Type 2 SS | df | MS | F | p | Comparison |
|---|---|---|---|---|---|---|---|---|
| Usability | Green | Rhythm | 10.066 | 1 | 10.066 | 10.149 | 0.002 * | Fast rhythm (M = 3.30, SD = 1.032) < Slow rhythm (M = 3.57, SD = 0.958) |
| | Red | Rhythm | 10.618 | 1 | 10.618 | 11.267 | 0.001 * | Fast rhythm (M = 3.75, SD = 0.947) > Slow rhythm (M = 3.47, SD = 0.994) |
| | | Melody | 7.529 | 1 | 7.529 | 7.942 | 0.005 * | Single tone (M = 3.73, SD = 0.973) > Dual tone (M = 3.39, SD = 1.009) |
| Usefulness | Green | Rhythm | 4.596 | 1 | 4.596 | 4.802 | 0.029 * | Fast rhythm (M = 3.37, SD = 1.026) < Slow rhythm (M = 3.56, SD = 0.928) |
| | Red | Rhythm | 9.529 | 1 | 9.529 | 9.428 | 0.002 ** | Fast rhythm (M = 3.74, SD = 0.994) > Slow rhythm (M = 3.48, SD = 1.013) |
| | | Melody | 6.184 | 1 | 6.184 | 6.081 | 0.014 * | Single tone (M = 3.72, SD = 0.967) > Dual tone (M = 3.50, SD = 1.049) |
| Satisfaction | Red | Rhythm | 5.972 | 1 | 5.972 | 5.805 | 0.016 * | Fast rhythm (M = 3.58, SD = 1.017) > Slow rhythm (M = 3.37, SD = 1.011) |
| | | Melody | 11.472 | 1 | 11.472 | 11.262 | 0.001 ** | Single tone (M = 3.62, SD = 0.972) > Dual tone (M = 3.33, SD = 1.045) |

| Dependent Variable | Factor | Level | M | SD | N |
|---|---|---|---|---|---|
| Usability | Lighting | Always on | 3.47 | 1.027 | 560 |
| | | Flash | 3.58 | 0.941 | 560 |
| | Frequency | Low | 3.49 | 1.010 | 560 |
| | | High | 3.55 | 0.961 | 560 |
| Usefulness | Lighting | Always on | 3.46 | 1.044 | 560 |
| | | Flash | 3.61 | 0.928 | 560 |
| | Frequency | Low | 3.49 | 1.008 | 560 |
| | | High | 3.57 | 0.971 | 560 |
| Satisfaction | Lighting | Always on | 3.31 | 1.017 | 560 |
| | | Flash | 3.48 | 0.955 | 560 |
| | Frequency | Low | 3.37 | 0.999 | 560 |
| | | High | 3.43 | 0.980 | 560 |

| Deceleration Scenario | Color | Lighting | Rhythm | Frequency | Melody |
|---|---|---|---|---|---|
| Road-user first | Green | Flash | Slow | High | Continuous tone |
| Vehicle first | Red | Flash | Fast | High | Single tone |

| Road-User First | Color | Lighting | Rhythm | Frequency | Melody | Percentage |
|---|---|---|---|---|---|---|
| video27 | Green | Flash | Slow | Low | Continuous tone | 80.0% |
| video23 | Green | Flash | Slow | High | Single tone | 77.1% |
| video29 | Green | Always on | Slow | Low | Single tone | 74.3% |
| video17 | Green | Flash | Slow | High | Continuous tone | 74.3% |
| video19 | Green | Always on | Slow | High | Continuous tone | 68.6% |

| Vehicle First | Color | Lighting | Rhythm | Frequency | Melody | Percentage |
|---|---|---|---|---|---|---|
| video26 | Red | Flash | Fast | High | Continuous tone | 68.6% |
| video32 | Red | Flash | Fast | High | Single tone | 68.6% |
| video18 | Red | Always on | Fast | Low | Single tone | 68.6% |

| Item | Value | Intention Judgment: Road-User First, n (%) | Intention Judgment: Vehicle First, n (%) | Intention Judgment: Not Known, n (%) | χ² | p |
|---|---|---|---|---|---|---|
| Color | Green | 5 (100.00) | 0 (0.00) | 11 (45.83) | 8.167 | 0.017 * |
| | Red | 0 (0.00) | 3 (100.00) | 13 (54.17) | | |
| Lighting | Always on | 2 (40.00) | 1 (33.33) | 13 (54.17) | 0.700 | 0.705 |
| | Flash | 3 (60.00) | 2 (66.67) | 11 (45.83) | | |
| Rhythm | Fast | 0 (0.00) | 3 (100.00) | 13 (54.17) | 8.167 | 0.017 * |
| | Slow | 5 (100.00) | 0 (0.00) | 11 (45.83) | | |
| Frequency | Low | 3 (60.00) | 1 (33.33) | 12 (50.00) | 0.533 | 0.766 |
| | High | 2 (40.00) | 2 (66.67) | 12 (50.00) | | |
| Melody | Single tone | 3 (60.00) | 2 (66.67) | 11 (45.83) | 0.700 | 0.705 |
| | Continuous tone | 2 (40.00) | 1 (33.33) | 13 (54.17) | | |

| Effect | Value | F | Hypothesis df | Error df | Significance | Partial Eta Squared |
|---|---|---|---|---|---|---|
| Lighting | 0.012 | 4.571 | 3.000 | 1086.000 | 0.003 ** | 0.012 |
| Color × Rhythm | 0.017 | 6.279 | 3.000 | 1086.000 | 0.000 ** | 0.017 |

| Dependent Variable | Source | Type III Sum of Squares | Degree of Freedom | Mean Square | F | Significance | Partial Eta Squared |
|---|---|---|---|---|---|---|---|
| Usability | Lighting | 6.451 | 1 | 6.451 | 6.737 | 0.010 ** | 0.006 |
| | Color × Rhythm | 17.251 | 1 | 17.251 | 18.015 | 0.000 ** | 0.016 |
| Usefulness | Lighting | 8.575 | 1 | 8.575 | 9.368 | 0.002 ** | 0.009 |
| | Color × Rhythm | 14.629 | 1 | 14.629 | 15.981 | 0.000 ** | 0.014 |
| Satisfaction | Lighting | 13.289 | 1 | 13.289 | 12.788 | 0.000 ** | 0.012 |
| | Color × Rhythm | 11.604 | 1 | 11.604 | 11.166 | 0.001 ** | 0.010 |

| Dependent Variable | Color | Factor | SS | df | MS | F | p | Comparison |
|---|---|---|---|---|---|---|---|---|
| Usability | Green | Rhythm | 4.971 | 1 | 4.971 | 5.331 | 0.021 * | Fast rhythm (M = 3.58, SD = 1.031) < Slow rhythm (M = 3.78, SD = 0.895) |
| | Red | Rhythm | 10.618 | 1 | 10.618 | 10.473 | 0.001 ** | Fast rhythm (M = 3.72, SD = 0.988) > Slow rhythm (M = 3.44, SD = 1.016) |
| Usefulness | Red | Rhythm | 11.184 | 1 | 11.184 | 11.449 | 0.001 ** | Fast rhythm (M = 3.74, SD = 0.983) > Slow rhythm (M = 3.46, SD = 0.993) |
| Satisfaction | Red | Rhythm | 5.765 | 1 | 5.765 | 5.163 | 0.023 * | Fast rhythm (M = 3.56, SD = 1.061) > Slow rhythm (M = 3.35, SD = 1.052) |

| Dependent Variable | Factor | Level | M | SD | N |
|---|---|---|---|---|---|
| Usability | Lighting | Always on | 3.55 | 1.012 | 560 |
| | | Flash | 3.70 | 0.953 | 560 |
| | Frequency | Low | 3.67 | 0.962 | 560 |
| | | High | 3.58 | 1.007 | 560 |
| | Melody | Single tone | 3.68 | 0.959 | 560 |
| | | Dual tone | 3.58 | 1.009 | 560 |
| Usefulness | Lighting | Always on | 3.55 | 0.971 | 560 |
| | | Flash | 3.73 | 0.947 | 560 |
| | Frequency | Low | 3.68 | 0.935 | 560 |
| | | High | 3.60 | 0.989 | 560 |
| | Melody | Single tone | 3.68 | 0.920 | 560 |
| | | Dual tone | 3.60 | 1.003 | 560 |
| Satisfaction | Lighting | Always on | 3.37 | 1.040 | 560 |
| | | Flash | 3.59 | 0.993 | 560 |
| | Frequency | Low | 3.51 | 1.015 | 560 |
| | | High | 3.45 | 1.030 | 560 |
| | Melody | Single tone | 3.51 | 0.997 | 560 |
| | | Dual tone | 3.45 | 1.047 | 560 |

| Waiting-to-Restart Scenario | Color | Lighting | Rhythm | Frequency | Melody |
|---|---|---|---|---|---|
| Road-user first | Green | Flash | Slow | Low | Continuous tone |
| Vehicle first | Red | Flash | Fast | Low | Single tone |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, C.-F.; Xu, D.-D.; Lu, S.-H.; Chen, W.-C. Effect of Signal Design of Autonomous Vehicle Intention Presentation on Pedestrians’ Cognition. Behav. Sci. 2022, 12, 502. https://doi.org/10.3390/bs12120502