Research Trends in the Study of Acceptability of Digital Mental Health-Related Interventions: A Bibliometric and Network Visualisation Analysis

Abstract

1. Introduction

2. Objectives

- (a)

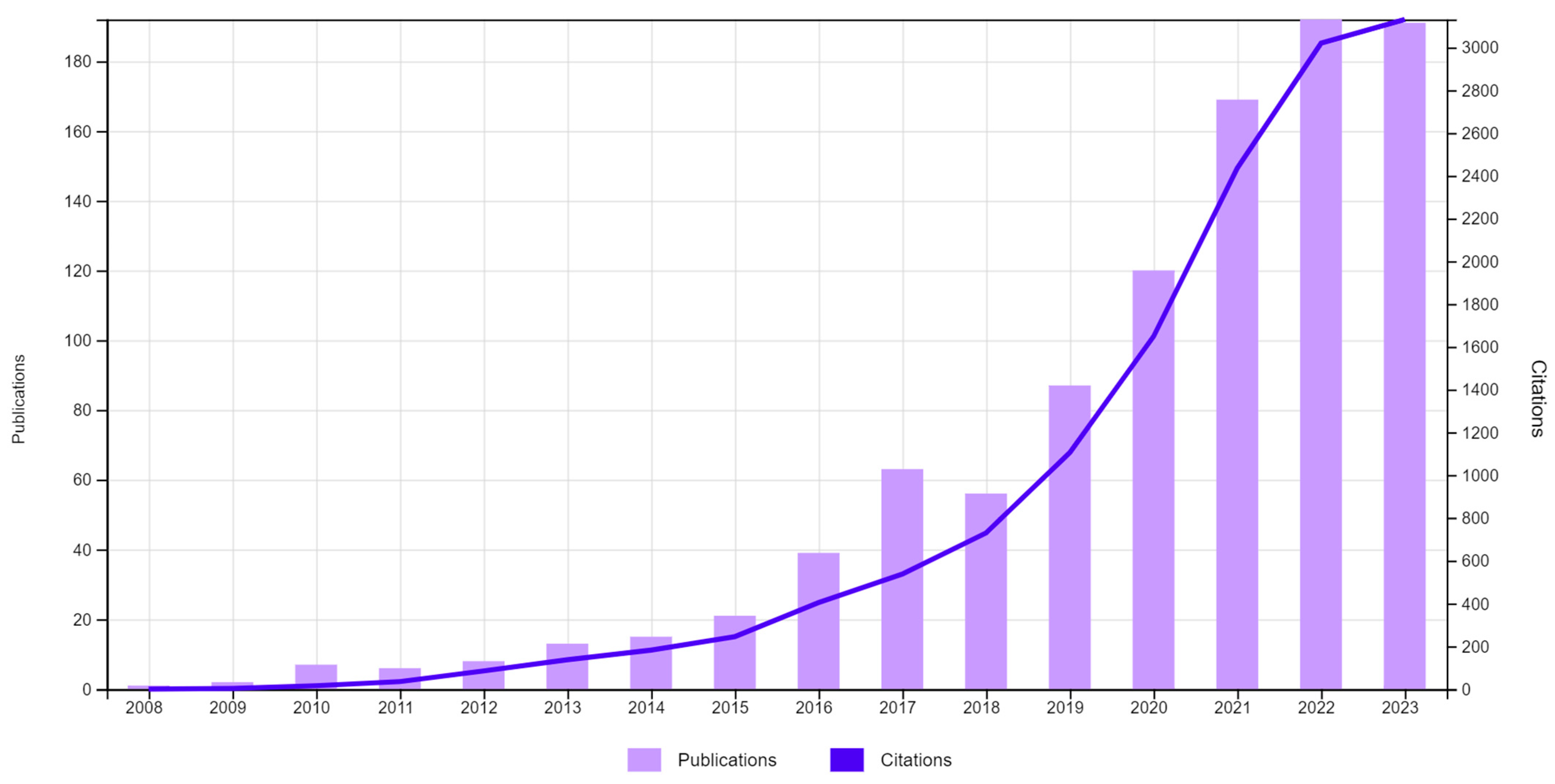

- Analyse the evolution of publications of primary studies from 2008 to 2023.

- (b)

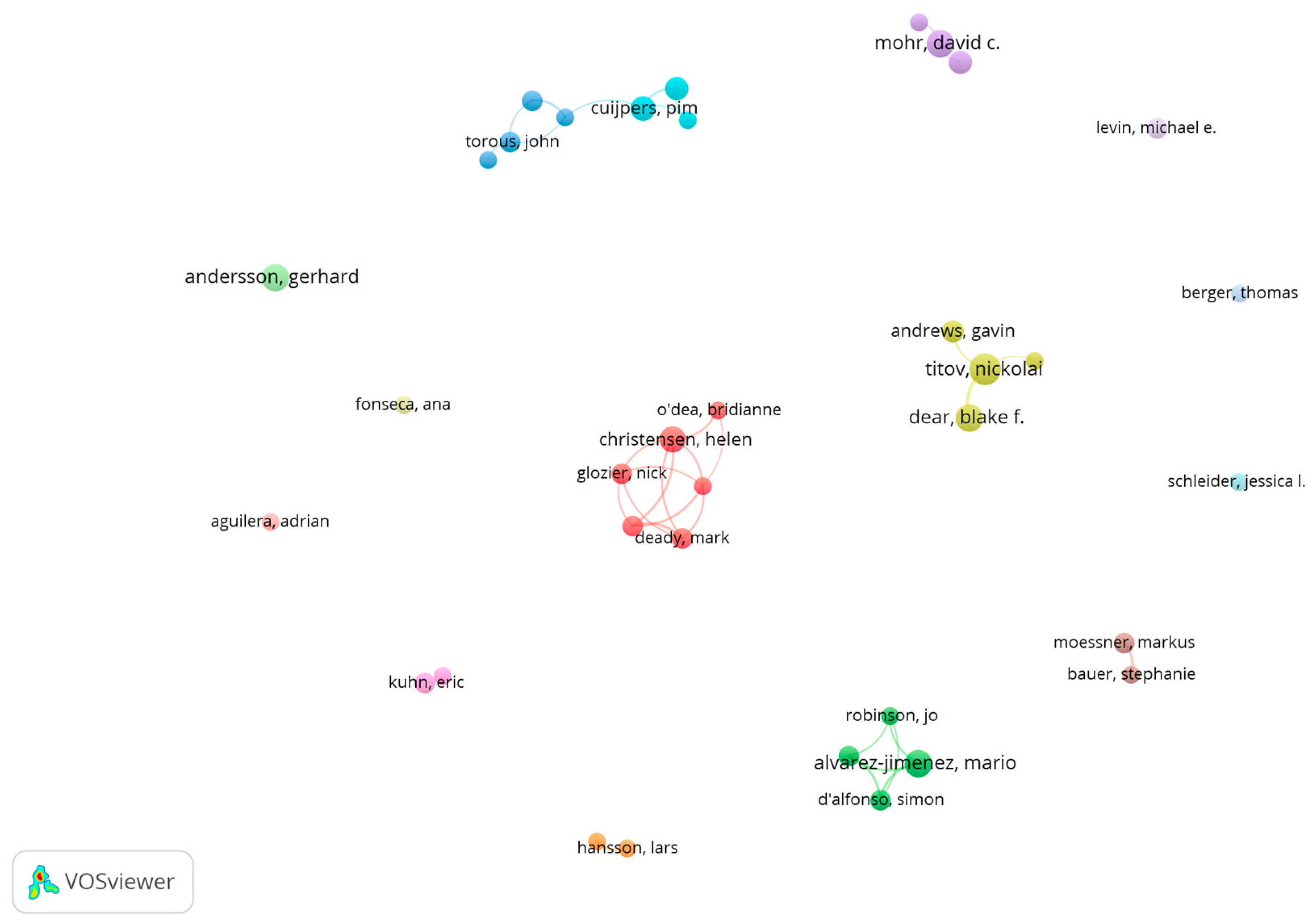

- Analyse the main contributors to the knowledge area.

- (c)

- Report on authors’ approaches to intervention acceptability based on an analysis of the documents’ bibliometric features.

3. Methods

3.1. Data Sources and Search Strategy

3.2. Eligibility Criteria

3.3. Data Selection

3.4. Analysis

4. Results

- (A) Descriptives

- (B) Analysis of knowledge production

4.1. Authors

4.2. Journals

4.3. Institutions

4.4. Countries

- (C)

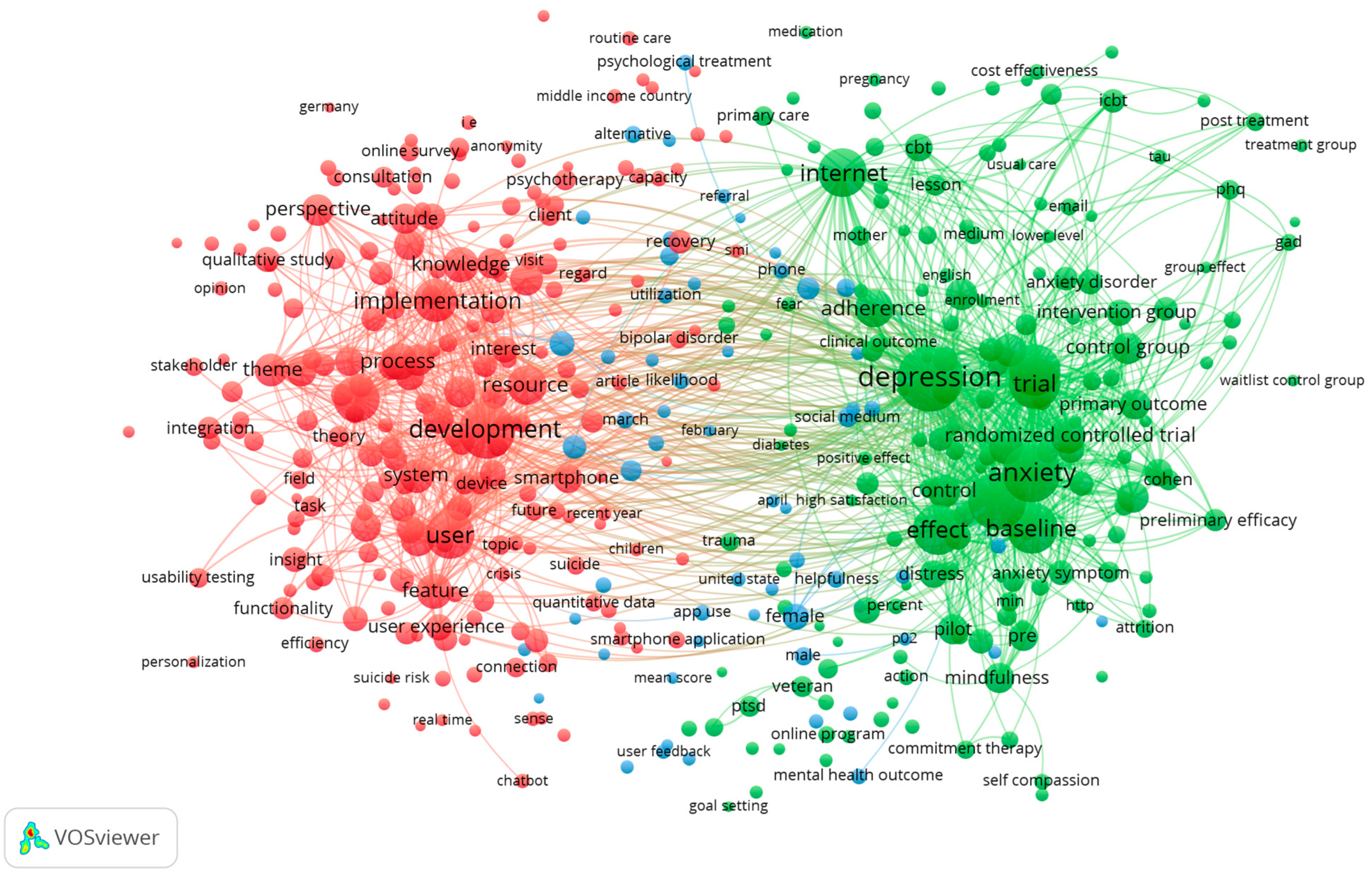

- Analysis of authors’ approaches to the acceptability of digital mental health-related interventions

4.5. Text Co-Occurrence Analysis

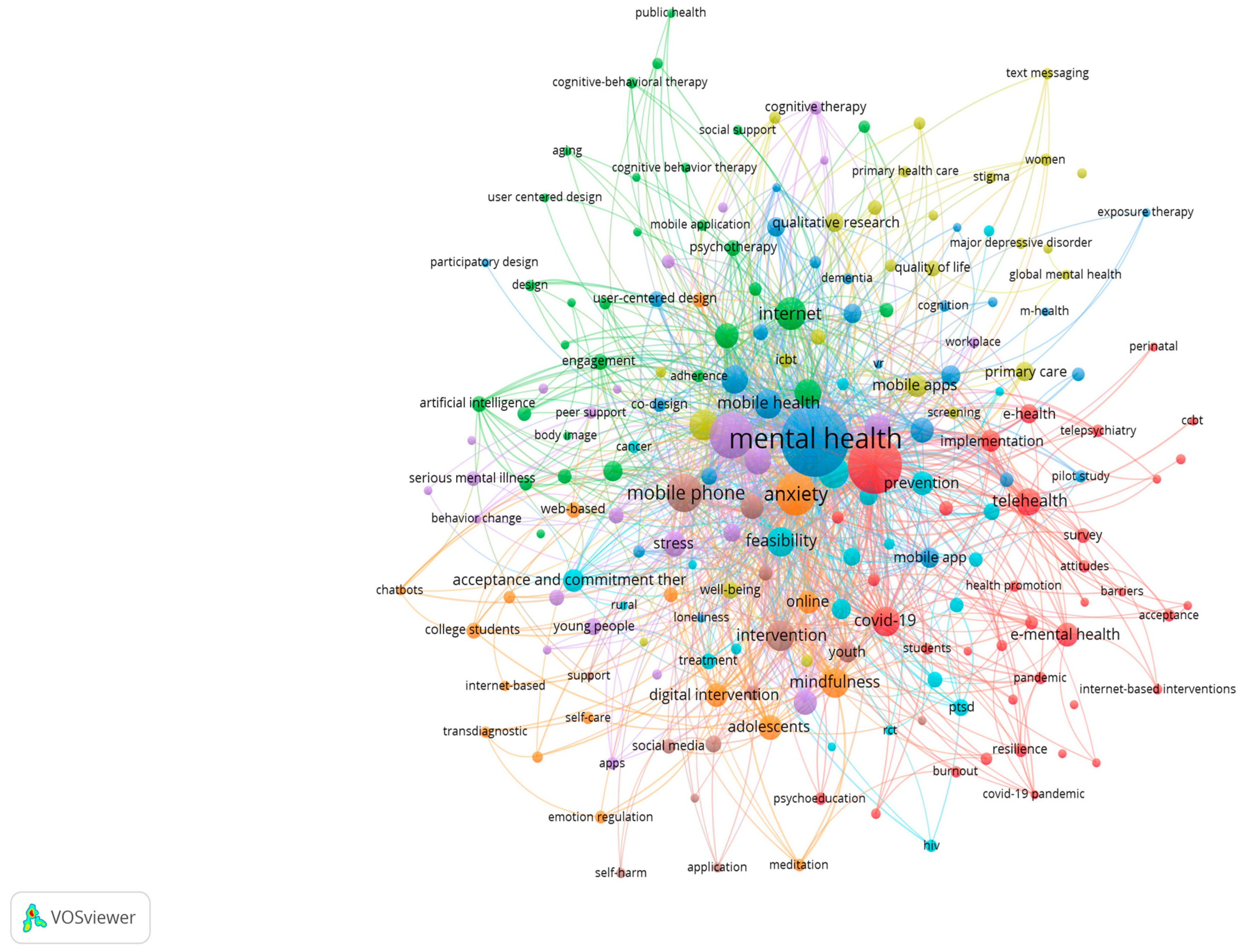

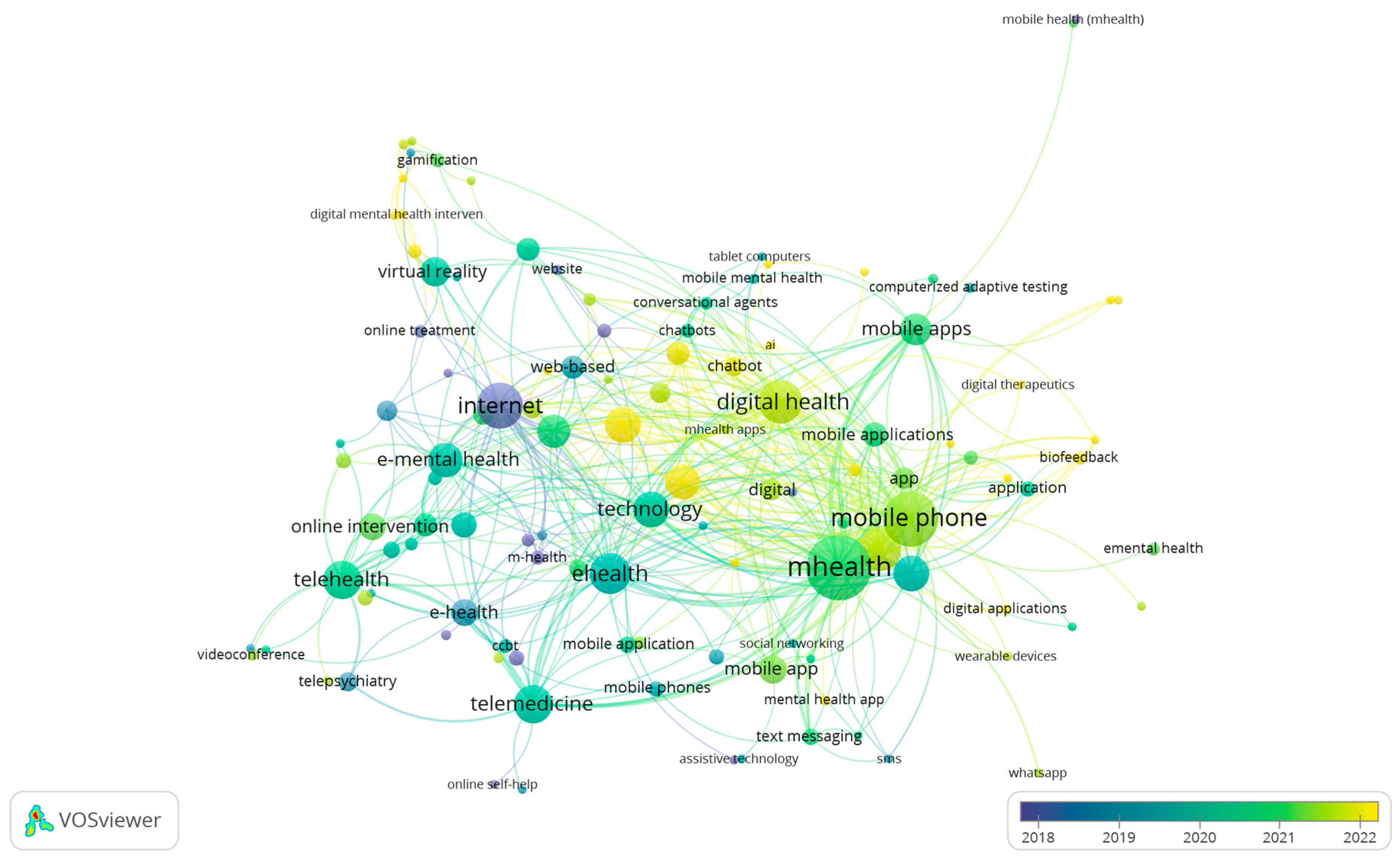
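This excerpt does not describe how terms were counted for the text co-occurrence map. As a minimal sketch, assuming terms have already been extracted from titles and abstracts, binary counting records each term at most once per document, and a minimum-occurrence threshold then selects the terms that appear in the map. The function names, the threshold value and the sample terms are hypothetical; network tools such as VOSviewer offer binary and full counting as built-in options.

```python
from collections import Counter


def binary_term_counts(docs: list[list[str]]) -> Counter:
    """Count each term at most once per document (binary counting)."""
    counts: Counter = Counter()
    for terms in docs:
        counts.update(set(terms))  # set() drops repeats within one document
    return counts


def select_terms(counts: Counter, min_occurrences: int) -> dict[str, int]:
    """Keep only terms that reach the (hypothetical) minimum-occurrence threshold."""
    return {term: n for term, n in counts.items() if n >= min_occurrences}


# Hypothetical terms extracted from the titles/abstracts of three documents
docs = [
    ["acceptability", "mobile app", "depression", "acceptability"],
    ["acceptability", "feasibility", "mobile app"],
    ["usability", "feasibility", "chatbot"],
]

counts = binary_term_counts(docs)
selected = select_terms(counts, min_occurrences=2)
print(sorted(selected.items()))
# [('acceptability', 2), ('feasibility', 2), ('mobile app', 2)]
```

Under full counting, "acceptability" would score 3 here because the first document mentions it twice; binary counting keeps it at 2.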

4.6. Authors’ Keywords Co-Occurrence Analysis
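A keyword co-occurrence map rests on links between author keywords: two keywords are linked when they appear in the same document, and the link weight is the number of documents in which they co-occur. A keyword's total link strength is the sum of the weights of all its links. The sketch below assumes each document's keywords are available as a list; the sample keywords and helper names are hypothetical and illustrate the technique, not the author's exact pipeline.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_links(docs: list[list[str]]) -> Counter:
    """Weight of link (a, b) = number of documents containing both keywords."""
    links: Counter = Counter()
    for keywords in docs:
        unique = sorted(set(keywords))  # sorted so each pair has one stable key
        for a, b in combinations(unique, 2):
            links[(a, b)] += 1
    return links


def total_link_strength(links: Counter) -> Counter:
    """Sum of the weights of all links attached to each keyword."""
    strength: Counter = Counter()
    for (a, b), weight in links.items():
        strength[a] += weight
        strength[b] += weight
    return strength


# Hypothetical author keywords for three documents
docs = [
    ["acceptability", "feasibility", "mhealth"],
    ["acceptability", "usability", "mhealth"],
    ["feasibility", "covid-19"],
]

links = cooccurrence_links(docs)
strength = total_link_strength(links)
print(links[("acceptability", "mhealth")])  # 2
print(strength["acceptability"])            # 4
```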

4.7. Digital Technology Application in the Delivery of Mental Health Intervention Studies

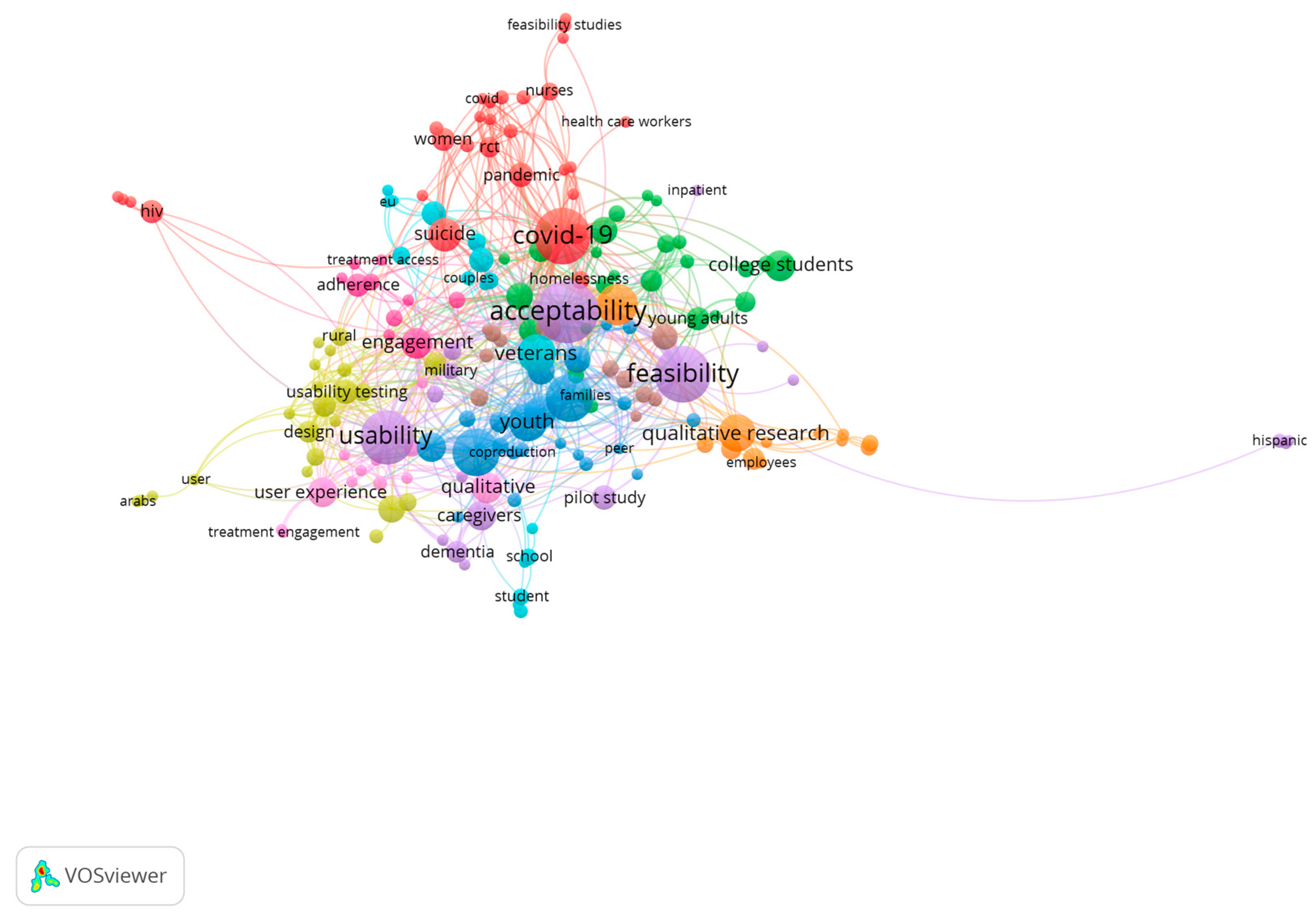

4.8. Acceptability and Usability Studies of Digital Mental Health-Related Interventions

4.9. Bibliographical Coupling

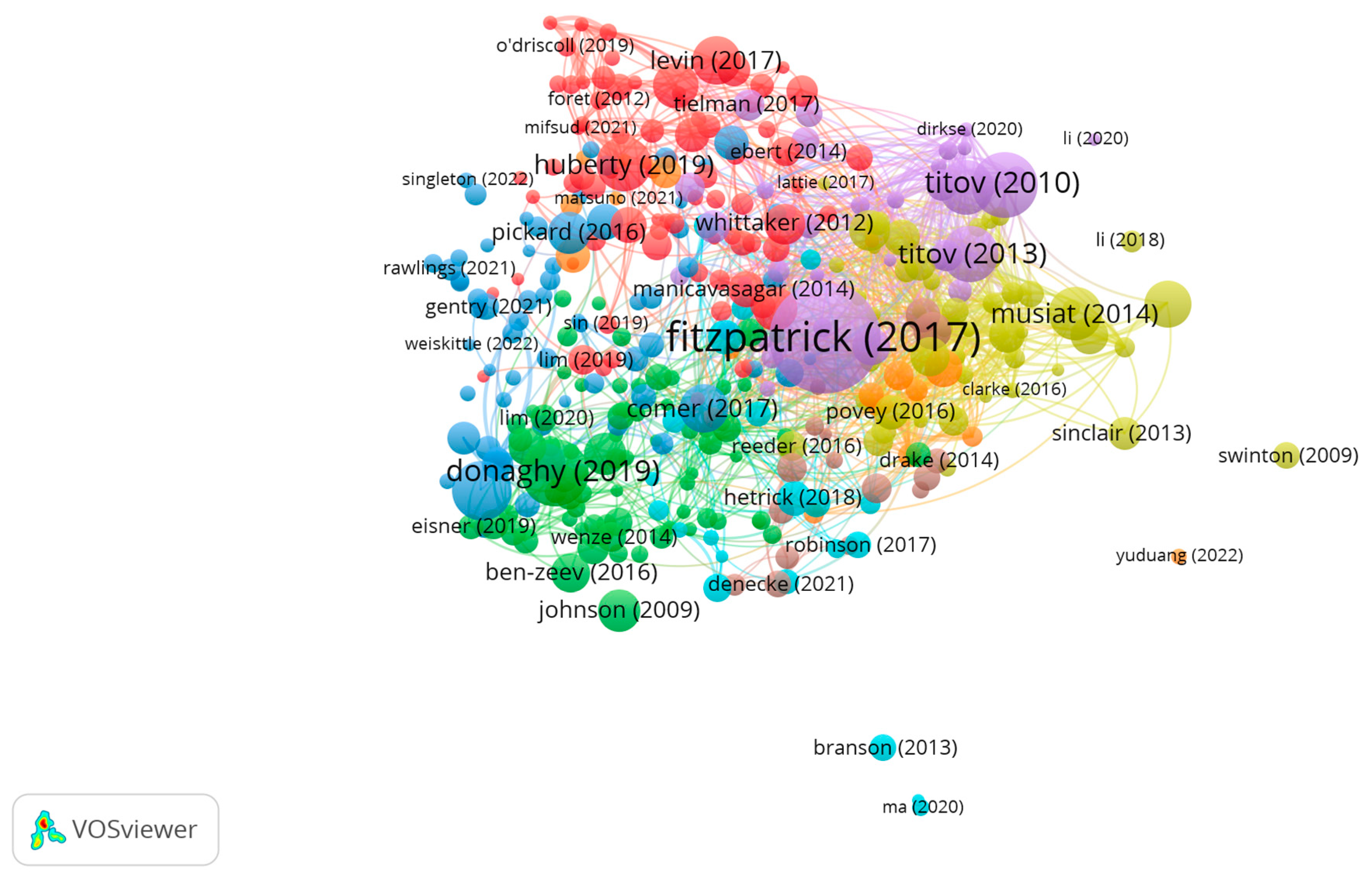
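Bibliographic coupling links two documents that cite at least one common reference, and the coupling strength is the number of references they share. A minimal sketch, assuming each document's cited references have already been normalised to comparable identifiers (the document and reference IDs below are hypothetical):

```python
from itertools import combinations


def coupling_strength(refs_a: set[str], refs_b: set[str]) -> int:
    """Number of cited references the two documents share."""
    return len(refs_a & refs_b)


def coupling_network(doc_refs: dict[str, set[str]]) -> dict[tuple[str, str], int]:
    """Return an edge for every pair of documents with at least one shared reference."""
    edges: dict[tuple[str, str], int] = {}
    for a, b in combinations(sorted(doc_refs), 2):
        shared = coupling_strength(doc_refs[a], doc_refs[b])
        if shared > 0:
            edges[(a, b)] = shared
    return edges


# Hypothetical documents and the (normalised) references each one cites
doc_refs = {
    "doc_A": {"ref1", "ref2", "ref3"},
    "doc_B": {"ref2", "ref3", "ref4"},
    "doc_C": {"ref5"},
}

print(coupling_network(doc_refs))  # {('doc_A', 'doc_B'): 2}
```

Because coupling depends only on reference lists, it can relate recent documents that have not yet been cited themselves.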

5. Discussion

5.1. Publication Trends

5.2. Visual Network Analyses: Key Findings

5.3. Acceptability Approaches

5.4. Limitations

6. Conclusions

Implications for Future Research

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Adepoju, Paul. 2020. Africa turns to telemedicine to close mental health gap. The Lancet Digital Health 2: e571–e572.

- Agarwal, Ashok, Damayanthi Durairajanayagam, Sindhuja Tatagari, Sandro C. Esteves, Avi Harlev, Ralf Henkel, Shubhadeep Roychoudhury, Sheryl Homa, Nicolás Garrido Puchalt, and Ranjith Ramasamy. 2016. Bibliometrics: Tracking research impact by selecting the appropriate metrics. Asian Journal of Andrology 18: 296.

- Alam, Mirza Mohammad Didarul, Mohammad Zahedul Alam, Syed Abidur Rahman, and Seyedeh Khadijeh Taghizadeh. 2021. Factors influencing mHealth adoption and its impact on mental well-being during COVID-19 pandemic: A SEM-ANN approach. Journal of Biomedical Informatics 116: 103722.

- Apolinário-Hagen, Jennifer, Mathias Harrer, Fanny Kählke, Lara Fritsche, Christel Salewski, and David Daniel Ebert. 2018. Public attitudes toward guided internet-based therapies: Web-based survey study. JMIR Mental Health 5: e10735.

- Apolinário-Hagen, Jennifer, Viktor Vehreschild, and Ramez M. Alkoudmani. 2017. Current views and perspectives on e-mental health: An exploratory survey study for understanding public attitudes toward internet-based psychotherapy in Germany. JMIR Mental Health 4: e6375.

- Arnold, Chelsea, John Farhall, Kristi-Ann Villagonzalo, Kriti Sharma, and Neil Thomas. 2021. Engagement with online psychosocial interventions for psychosis: A review and synthesis of relevant factors. Internet Interventions 25: 100411.

- Balcombe, Luke, and Diego De Leo. 2021. Digital mental health challenges and the horizon ahead for solutions. JMIR Mental Health 8: e26811.

- Banerjee, Debanjan, Bhavika Vajawat, and Prateek Varshney. 2021. Digital gaming interventions: A novel paradigm in mental health? Perspectives from India. International Review of Psychiatry 33: 435–41.

- Ben-Zeev, Dror, Susan M. Kaiser, Christopher J. Brenner, Mark Begale, Jennifer Duffecy, and David C. Mohr. 2013. Development and usability testing of FOCUS: A smartphone system for self-management of schizophrenia. Psychiatric Rehabilitation Journal 36: 289.

- Borghouts, Judith, Elizabeth Eikey, Gloria Mark, Cinthia De Leon, Stephen M. Schueller, Margaret Schneider, Nicole Stadnick, Kai Zheng, Dana Mukamel, and Dara H. Sorkin. 2021. Barriers to and facilitators of user engagement with digital mental health interventions: Systematic review. Journal of Medical Internet Research 23: e24387.

- Bowen, Deborah J., Matthew Kreuter, Bonnie Spring, Ludmila Cofta-Woerpel, Laura Linnan, Diane Weiner, Suzanne Bakken, Cecilia Patrick Kaplan, Linda Squiers, and Cecilia Fabrizio. 2009. How we design feasibility studies. American Journal of Preventive Medicine 36: 452–57.

- Caputo, Andrea, and Mariya Kargina. 2022. A user-friendly method to merge Scopus and Web of Science data during bibliometric analysis. Journal of Marketing Analytics 10: 82–88.

- Chan, Amy Hai Yan, and Michelle L. L. Honey. 2022. User perceptions of mobile digital apps for mental health: Acceptability and usability-An integrative review. Journal of Psychiatric and Mental Health Nursing 29: 147–68.

- Comer, Jonathan S., Jami M. Furr, Elizabeth M. Miguel, Christine E. Cooper-Vince, Aubrey L. Carpenter, R. Meredith Elkins, Caroline E. Kerns, Danielle Cornacchio, Tommy Chou, and Stefany Coxe. 2017. Remotely delivering real-time parent training to the home: An initial randomized trial of Internet-delivered parent–child interaction therapy (I-PCIT). Journal of Consulting and Clinical Psychology 85: 909.

- De Veirman, Ann E. M., Viviane Thewissen, Matthijs G. Spruijt, and Catherine A. W. Bolman. 2022. Factors Associated With Intention and Use of e–Mental Health by Mental Health Counselors in General Practices: Web-Based Survey. JMIR Formative Research 6: e34754.

- Dominguez-Rodriguez, Alejandro, Reyna Jazmín Martínez-Arriaga, Paulina Erika Herdoiza-Arroyo, Eduardo Bautista-Valerio, Anabel de la Rosa-Gómez, Rosa Olimpia Castellanos Vargas, Laura Lacomba-Trejo, Joaquín Mateu-Mollá, Miriam de Jesús Lupercio Ramírez, and Jairo Alejandro Figueroa González. 2022. E-health psychological intervention for COVID-19 healthcare workers: Protocol for its implementation and evaluation. International Journal of Environmental Research and Public Health 19: 12749.

- Donaghy, Eddie, Helen Atherton, Victoria Hammersley, Hannah McNeilly, Annemieke Bikker, Lucy Robbins, John Campbell, and Brian McKinstry. 2019. Acceptability, benefits, and challenges of video consulting: A qualitative study in primary care. British Journal of General Practice 69: e586–e594.

- Ebadi, Ashkan, and Andrea Schiffauerova. 2015. Bibliometric analysis of the impact of funding on scientific development of researchers. International Journal of Computer and Information Engineering 9: 1541–51.

- Ellis, Louise A., Isabelle Meulenbroeks, Kate Churruca, Chiara Pomare, Sarah Hatem, Reema Harrison, Yvonne Zurynski, and Jeffrey Braithwaite. 2021. The application of e-mental health in response to COVID-19: Scoping review and bibliometric analysis. JMIR Mental Health 8: e32948.

- Fitzpatrick, Kathleen Kara, Alison Darcy, and Molly Vierhile. 2017. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Mental Health 4: e7785.

- Flujas-Contreras, Juan M., Azucena García-Palacios, and Inmaculada Gómez. 2023. Technology in psychology: A bibliometric analysis of technology-based interventions in clinical and health psychology. Informatics for Health and Social Care 48: 47–67.

- Gama, Ana, Maria J. Marques, João Victor Rocha, Sofia Azeredo-Lopes, Walaa Kinaan, Ana Sá Machado, and Sónia Dias. 2022. ‘I didn’t know where to go’: A mixed-methods approach to explore migrants’ perspectives of access and use of health services during the COVID-19 pandemic. International Journal of Environmental Research and Public Health 19: 13201.

- Gan, Daniel Z. Q., Lauren McGillivray, Mark E. Larsen, Helen Christensen, and Michelle Torok. 2022. Technology-supported strategies for promoting user engagement with digital mental health interventions: A systematic review. Digital Health 8: 20552076221098268.

- Gauthier-Beaupré, Amélie, and Sylvie Grosjean. 2023. Understanding acceptability of digital health technologies among francophone-speaking communities across the world: A metaethnographic study. Frontiers in Communication 8: 1230015.

- Gega, Lina, Dina Jankovic, Pedro Saramago, David Marshall, Sarah Dawson, Sally Brabyn, Georgios F. Nikolaidis, Hollie Melton, Rachel Churchill, and Laura Bojke. 2022. Digital interventions in mental health: Evidence syntheses and economic modelling. Health Technology Assessment (Winchester, England) 26: 1.

- Goodman, Ruth, Linda Tip, and Kate Cavanagh. 2021. There’s an app for that: Context, assumptions, possibilities and potential pitfalls in the use of digital technologies to address refugee mental health. Journal of Refugee Studies 34: 2252–74.

- Gotadki, Rahul. 2024. Digital Mental Health Market Research Report Information by Component (Software and Services), by Disorder Type (Anxiety Disorder, Bipolar Disorder, Post-Traumatic Stress Disorder (PTSD), Substance Abuse Disorder, and Others), by Age Group (Children & Adolescents, Adult, and Geriatric), End User (Patients, Payers, and Providers) and by Region (North America, Europe, Asia-Pacific, and Rest of the World)—Forecast till 2032. Available online: https://www.marketresearchfuture.com/reports/digital-mental-health-market-11062 (accessed on 5 January 2024).

- Gun, Shih Ying, Nickolai Titov, and Gavin Andrews. 2011. Acceptability of Internet treatment of anxiety and depression. Australasian Psychiatry 19: 259–64.

- Gusenbauer, Michael. 2022. Search where you will find most: Comparing the disciplinary coverage of 56 bibliographic databases. Scientometrics 127: 2683–745.

- Hekler, Eric B., Predrag Klasnja, William T. Riley, Matthew P. Buman, Jennifer Huberty, Daniel E. Rivera, and Cesar A. Martin. 2016. Agile science: Creating useful products for behavior change in the real world. Translational Behavioral Medicine 6: 317–28.

- Helha, Fernandes-Nascimento Maria, and Yuan-Pang Wang. 2022. Trends in complementary and alternative medicine for the treatment of common mental disorders: A bibliometric analysis of two decades. Complementary Therapies in Clinical Practice 46: 101531.

- Hernández-Torrano, Daniel, Laura Ibrayeva, Jason Sparks, Natalya Lim, Alessandra Clementi, Ainur Almukhambetova, Yerden Nurtayev, and Ainur Muratkyzy. 2020. Mental health and well-being of university students: A bibliometric mapping of the literature. Frontiers in Psychology 11: 1226.

- Hetrick, Sarah Elisabeth, Jo Robinson, Eloise Burge, Ryan Blandon, Bianca Mobilio, Simon M. Rice, Magenta B. Simmons, Mario Alvarez-Jimenez, Simon Goodrich, and Christopher G. Davey. 2018. Youth codesign of a mobile phone app to facilitate self-monitoring and management of mood symptoms in young people with major depression, suicidal ideation, and self-harm. JMIR Mental Health 5: e9041.

- Huberty, Jennifer, Jeni Green, Christine Glissmann, Linda Larkey, Megan Puzia, and Chong Lee. 2019. Efficacy of the mindfulness meditation mobile app “calm” to reduce stress among college students: Randomized controlled trial. JMIR mHealth and uHealth 7: e14273.

- Hunter, John F., Lisa C. Walsh, Chi-Keung Chan, and Stephen M. Schueller. 2023. The good side of technology: How we can harness the positive potential of digital technology to maximize well-being. Frontiers in Psychology 14: 1304592.

- Johnson, Elizabeth I., Olivier Grondin, Marion Barrault, Malika Faytout, Sylvia Helbig, Mathilde Husky, Eric L. Granholm, Catherine Loh, Louise Nadeau, and Hans-Ulrich Wittchen. 2009. Computerized ambulatory monitoring in psychiatry: A multi-site collaborative study of acceptability, compliance, and reactivity. International Journal of Methods in Psychiatric Research 18: 48–57.

- Kaihlanen, Anu-Marja, Lotta Virtanen, Ulla Buchert, Nuriiar Safarov, Paula Valkonen, Laura Hietapakka, Iiris Hörhammer, Sari Kujala, Anne Kouvonen, and Tarja Heponiemi. 2022. Towards digital health equity-a qualitative study of the challenges experienced by vulnerable groups in using digital health services in the COVID-19 era. BMC Health Services Research 22: 188.

- Krukowski, Rebecca A., Kathryn M. Ross, Max J. Western, Rosie Cooper, Heide Busse, Cynthia Forbes, Emmanuel Kuntsche, Anila Allmeta, Anabelle Macedo Silva, and Yetunde O. John-Akinola. 2024. Digital health interventions for all? Examining inclusivity across all stages of the digital health intervention research process. Trials 25: 98.

- Lancaster, Gillian A., and Lehana Thabane. 2019. Guidelines for reporting non-randomised pilot and feasibility studies. Pilot and Feasibility Studies 5: 114.

- Levin, Michael E., Jack A. Haeger, Benjamin G. Pierce, and Michael P. Twohig. 2017. Web-based acceptance and commitment therapy for mental health problems in college students: A randomized controlled trial. Behavior Modification 41: 141–62.

- Lipschitz, Jessica M., Chelsea K. Pike, Timothy P. Hogan, Susan A. Murphy, and Katherine E. Burdick. 2023. The engagement problem: A review of engagement with digital mental health interventions and recommendations for a path forward. Current Treatment Options in Psychiatry 10: 119–35.

- Lipschitz, Jessica M., Rachel Van Boxtel, John Torous, Joseph Firth, Julia G. Lebovitz, Katherine E. Burdick, and Timothy P. Hogan. 2022. Digital mental health interventions for depression: Scoping review of user engagement. Journal of Medical Internet Research 24: e39204.

- Lu, Zheng-An, Le Shi, Jian-Yu Que, Yong-Bo Zheng, Qian-Wen Wang, Wei-Jian Liu, Yue-Tong Huang, Xiao-Xing Liu, Kai Yuan, and Wei Yan. 2022. Accessibility to digital mental health services among the general public throughout COVID-19: Trajectories, influencing factors and association with long-term mental health symptoms. International Journal of Environmental Research and Public Health 19: 3593.

- Mindsolent. n.d. Available online: https://www.solentmind.org.uk/news-events/news/phased-return-to-mental-health-face-to-face-services/ (accessed on 5 January 2024).

- Musiat, Peter, Philip Goldstone, and Nicholas Tarrier. 2014. Understanding the acceptability of e-mental health-attitudes and expectations towards computerised self-help treatments for mental health problems. BMC Psychiatry 14: 109.

- Niel, Gilles, Fabrice Boyrie, and David Virieux. 2015. Chemical bibliographic databases: The influence of term indexing policies on topic searches. New Journal of Chemistry 39: 8807–17.

- Nwosu, Adaora, Samantha Boardman, Mustafa M. Husain, and P. Murali Doraiswamy. 2022. Digital therapeutics for mental health: Is attrition the Achilles heel? Frontiers in Psychiatry 13: 900615.

- Orsmond, Gael I., and Ellen S. Cohn. 2015. The distinctive features of a feasibility study: Objectives and guiding questions. OTJR: Occupation, Participation and Health 35: 169–77.

- Park, Susanna Y., Chloe Nicksic Sigmon, Debra Boeldt, and Chloe A. Nicksic Sigmon. 2022. A Framework for the Implementation of Digital Mental Health Interventions: The Importance of Feasibility and Acceptability Research. Cureus 14: e29329.

- Perski, Olga, and Camille E. Short. 2021. Acceptability of digital health interventions: Embracing the complexity. Translational Behavioral Medicine 11: 1473–80.

- Pranckutė, Raminta. 2021. Web of Science (WoS) and Scopus: The titans of bibliographic information in today’s academic world. Publications 9: 12.

- Prochaska, Judith J., Erin A. Vogel, Amy Chieng, Matthew Kendra, Michael Baiocchi, Sarah Pajarito, and Athena Robinson. 2021. A therapeutic relational agent for reducing problematic substance use (Woebot): Development and usability study. Journal of Medical Internet Research 23: e24850.

- Renfrew, Melanie Elise, Darren Peter Morton, Jason Kyle Morton, and Geraldine Przybylko. 2021. The influence of human support on the effectiveness of digital mental health promotion interventions for the general population. Frontiers in Psychology 12: 716106.

- Riboldi, Ilaria, Daniele Cavaleri, Angela Calabrese, Chiara Alessandra Capogrosso, Susanna Piacenti, Francesco Bartoli, Cristina Crocamo, and Giuseppe Carrà. 2023. Digital mental health interventions for anxiety and depressive symptoms in university students during the COVID-19 pandemic: A systematic review of randomized controlled trials. Revista de Psiquiatria y Salud Mental 16: 47–58.

- Rice, Simon, John Gleeson, Christopher Davey, Sarah Hetrick, Alexandra Parker, Reeva Lederman, Greg Wadley, Greg Murray, Helen Herrman, and Richard Chambers. 2018. Moderated online social therapy for depression relapse prevention in young people: Pilot study of a ‘next generation’ online intervention. Early Intervention in Psychiatry 12: 613–25.

- Robinson, Emma, Nickolai Titov, Gavin Andrews, Karen McIntyre, Genevieve Schwencke, and Karen Solley. 2010. Internet treatment for generalized anxiety disorder: A randomized controlled trial comparing clinician vs. technician assistance. PLoS ONE 5: e10942.

- Roland, Jonty, Emma Lawrance, Tom Insel, and Helen Christensen. 2020. The Digital Mental Health Revolution: Transforming Care through Innovation and Scale-Up. Available online: https://www.wish.org.qa/wp-content/uploads/2021/08/044E.pdf (accessed on 5 January 2024).

- Sawrikar, Vilas, and Kellie Mote. 2022. Technology acceptance and trust: Overlooked considerations in young people’s use of digital mental health interventions. Health Policy and Technology 11: 100686.

- Sekhon, Mandeep, Martin Cartwright, and Jill J. Francis. 2017. Acceptability of healthcare interventions: An overview of reviews and development of a theoretical framework. BMC Health Services Research 17: 88.

- Semwanga, Agnes Rwashana, Hasifah Kasujja Namatovu, Swaib Kyanda, Mark Kaawaase, and Abraham Magumba. 2021. An ehealth Adoption Framework for Developing Countries: A Systematic Review. Health Informatics-An international Journal (HIJ) 10: 1–16.

- Skivington, Kathryn, Lynsay Matthews, Sharon Anne Simpson, Peter Craig, Janis Baird, Jane M. Blazeby, Kathleen Anne Boyd, Neil Craig, David P. French, and Emma McIntosh. 2021. A new framework for developing and evaluating complex interventions: Update of Medical Research Council guidance. BMJ 374: n2061.

- Skute, Igors, Kasia Zalewska-Kurek, Isabella Hatak, and Petra de Weerd-Nederhof. 2019. Mapping the field: A bibliometric analysis of the literature on university–industry collaborations. The Journal of Technology Transfer 44: 916–47.

- Suganuma, Shinichiro, Daisuke Sakamoto, and Haruhiko Shimoyama. 2018. An embodied conversational agent for unguided internet-based cognitive behavior therapy in preventative mental health: Feasibility and acceptability pilot trial. JMIR Mental Health 5: e10454.

- The Scottish Government. 2021. NHS Recovery Plan 2021–2026. Available online: https://www.gov.scot/binaries/content/documents/govscot/publications/strategy-plan/2021/08/nhs-recovery-plan/documents/nhs-recovery-plan-2021-2026/nhs-recovery-plan-2021-2026/govscot%3Adocument/nhs-recovery-plan-2021-2026.pdf (accessed on 5 January 2024).

- Titov, Nickolai, Blake F. Dear, Luke Johnston, Carolyn Lorian, Judy Zou, Bethany Wootton, Jay Spence, Peter M. McEvoy, and Ronald M. Rapee. 2013. Improving adherence and clinical outcomes in self-guided internet treatment for anxiety and depression: Randomised controlled trial. PLoS ONE 8: e62873.

- Titov, Nickolai, Gavin Andrews, Matthew Davies, Karen McIntyre, Emma Robinson, and Karen Solley. 2010. Internet treatment for depression: A randomized controlled trial comparing clinician vs. technician assistance. PLoS ONE 5: e10939.

- Torous, John, and Adam Haim. 2018. Dichotomies in the development and implementation of digital mental health tools. Psychiatric Services 69: 1204–6.

- Witteveen, A. B., S. Young, P. Cuijpers, J. L. Ayuso-Mateos, C. Barbui, F. Bertolini, M. Cabello, C. Cadorin, N. Downes, and D. Franzoi. 2022. Remote mental health care interventions during the COVID-19 pandemic: An umbrella review. Behaviour Research and Therapy 159: 104226.

- Zale, Arya, Meagan Lasecke, Katerina Baeza-Hernandez, Alanna Testerman, Shirin Aghakhani, Ricardo F. Muñoz, and Eduardo L. Bunge. 2021. Technology and psychotherapeutic interventions: Bibliometric analysis of the past four decades. Internet Interventions 25: 100425.

| Ranking | Author | Documents | Country |
|---|---|---|---|
| 1 | Nickolai Titov | 14 | Australia |
| 2 | Blake F. Dear | 11 | Australia |
| 3 | David C. Mohr | 11 | USA |
| 4 | Mario Alvarez-Jimenez | 11 | Australia |
| 5 | Gerhard Andersson | 11 | Sweden |
| 6 | Helen Christensen | 10 | Australia |
| 7 | Pim Cuijpers | 9 | Netherlands |
| 8 | Dror Ben-Zeev | 8 | USA |
| 9 | Helen Riper | 8 | Netherlands |
| 10 | Gavin Andrews | 7 | Australia |

| Ranking | Journal | Records | Citations | Affiliation | Records | Citations | Country | Records | Citations |
|---|---|---|---|---|---|---|---|---|---|
| 1 | JMIR Formative Research | 89 | 210 | University of Melbourne | 46 | 800 | USA | 391 | 5737 |
| 2 | JMIR Mental Health | 72 | 1893 | King’s College London | 41 | 638 | Australia | 193 | 3671 |
| 3 | Journal of Medical Internet Research | 59 | 1472 | University of Sydney | 34 | 492 | England | 163 | 1955 |
| 4 | Internet Interventions-The Application of Information Technology in Mental and Behavioural Health | 39 | 378 | University of Washington | 31 | 506 | Germany | 71 | 805 |
| 5 | International Journal of Environmental Research and Public Health | 28 | 240 | University of New South Wales | 29 | 950 | Canada | 70 | 475 |
| 6 | JMIR mHealth and uHealth | 24 | 617 | Stanford University | 24 | 252 | Netherlands | 67 | 880 |
| 7 | Frontiers in Psychiatry | 22 | 266 | Northwestern University | 22 | 381 | Sweden | 39 | 461 |
| 8 | Mindfulness | 14 | 116 | Monash University | 22 | 327 | Spain | 37 | 400 |
| 9 | Journal of Affective Disorders | 12 | 327 | Vrije Universiteit Amsterdam | 21 | 244 | People’s Republic of China | 26 | 206 |
| 10 | BMC Psychiatry | 12 | 317 | University of Oxford | 20 | 161 | New Zealand | 22 | 289 |
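Raw citation totals grow with the number of records, so dividing citations by records gives a rough view of average impact per document. The sketch below applies that ratio to the journal columns of the table above (the long Internet Interventions title is shortened). It is illustrative arithmetic only and ignores publication year: records published recently have had less time to accumulate citations.

```python
# Records and citations for the ten journals in the table above
journals = {
    "JMIR Formative Research": (89, 210),
    "JMIR Mental Health": (72, 1893),
    "Journal of Medical Internet Research": (59, 1472),
    "Internet Interventions": (39, 378),
    "International Journal of Environmental Research and Public Health": (28, 240),
    "JMIR mHealth and uHealth": (24, 617),
    "Frontiers in Psychiatry": (22, 266),
    "Mindfulness": (14, 116),
    "Journal of Affective Disorders": (12, 327),
    "BMC Psychiatry": (12, 317),
}


def citations_per_record(records: int, citations: int) -> float:
    """Average citations per record, rounded to two decimals."""
    return round(citations / records, 2)


# Rank journals by average citations per record, highest first
ranked = sorted(
    ((name, citations_per_record(r, c)) for name, (r, c) in journals.items()),
    key=lambda item: item[1],
    reverse=True,
)
for name, cpr in ranked:
    print(f"{cpr:6.2f}  {name}")
```

On this measure the journal with the most records, JMIR Formative Research (about 2.36 citations per record), ranks last, while JMIR Mental Health (about 26.29) sits close to the Journal of Affective Disorders (27.25).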

| Keywords | Occurrences | Keywords | Occurrences |
|---|---|---|---|
| mhealth | 107 | e-health | 19 |
| mobile phone | 76 | online intervention | 18 |
| internet | 54 | web-based intervention | 16 |
| digital health | 47 | mobile applications | 15 |
| mobile health | 45 | internet intervention | 14 |
| ehealth | 42 | internet-based intervention | 14 |
| telehealth | 38 | web-based | 14 |
| telemedicine | 37 | artificial intelligence | 13 |
| digital mental health | 33 | app | 12 |
| technology | 33 | digital | 12 |
| smartphone | 32 | conversational agent | 11 |
| digital intervention | 30 | icbt | 11 |
| e-mental health | 29 | internet interventions | 11 |
| online | 27 | chatbot | 10 |
| mobile apps | 26 | | |
| virtual reality | 22 | | |
| mobile app | 21 | | |

| Keyword | Occurrences | Keyword | Occurrences | Keyword | Occurrences |
|---|---|---|---|---|---|
| Acceptability | 56 | Social media | 11 | Workplace | 7 |
| Feasibility | 45 | Survey | 11 | Clinical trial | 6 |
| COVID-19 | 45 | Young adult | 11 | Mixed methods | 6 |
| Usability | 42 | Feasibility study | 10 | Qualitative evaluation | 6 |
| Adolescent | 32 | University students | 10 | Qualitative study | 6 |
| Adolescents | 32 | Attitudes | 9 | RCT | 6 |
| Implementation | 24 | Pandemic | 9 | Barriers | 5 |
| Veterans | 21 | Pilot study | 9 | Breast cancer | 5 |
| Youth | 21 | Pregnancy | 9 | Chronic illness | 5 |
| Qualitative research | 20 | Usability testing | 9 | COVID-19 pandemic | 5 |
| Suicide | 16 | Acceptance | 8 | Cultural adaptation | 5 |
| Young people | 15 | Adherence | 8 | Homelessness | 5 |
| College students | 14 | Children | 8 | Nurses | 5 |
| Engagement | 14 | Development | 8 | Participatory design | 5 |
| Qualitative | 14 | HIV | 8 | Perception | 5 |
| Caregivers | 13 | Women | 8 | Perinatal | 5 |
| User experience | 13 | Young adults | 8 | Postpartum period | 5 |
| User-centered design | 13 | Child | 7 | Rural | 5 |
| Co-design | 11 | Dementia | 7 | User centered design | 5 |
| Older adults | 11 | Design | 7 | Veteran | 5 |
| Parents | 11 | Students | 7 | | |
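The keyword tables list spelling and number variants as separate entries, for example "Adolescent" and "Adolescents" (32 occurrences each), "Veterans" and "Veteran", "User-centered design" and "User centered design", and "mobile app" and "mobile apps". Simply adding such counts together can overstate frequency when one document uses both forms, so a merge is safer if done per document. The sketch below assumes a hypothetical thesaurus and hypothetical sample documents; tools such as VOSviewer accept a thesaurus file for the same purpose. Merging true synonyms (for instance "mhealth" and "mobile health") is a further analytic choice and is left out here.

```python
from collections import Counter

# Hypothetical thesaurus: variant label -> preferred label (lower-case keys)
THESAURUS = {
    "adolescent": "adolescents",
    "veteran": "veterans",
    "user centered design": "user-centered design",
    "young adult": "young adults",
    "mobile app": "mobile apps",
}


def normalise(keyword: str) -> str:
    """Lower-case a keyword and map it to its preferred label if one is defined."""
    key = keyword.strip().lower()
    return THESAURUS.get(key, key)


def document_counts(docs: list[list[str]]) -> Counter:
    """Count documents per normalised keyword, so each document counts once."""
    counts: Counter = Counter()
    for keywords in docs:
        counts.update({normalise(k) for k in keywords})
    return counts


# Hypothetical author keywords for three documents
docs = [
    ["Adolescent", "Adolescents", "Acceptability"],
    ["Veteran", "Usability"],
    ["User centered design", "User-centered design", "Adolescents"],
]

counts = document_counts(docs)
print(counts["adolescents"])           # 2, not the 3 raw occurrences
print(counts["user-centered design"])  # 1
```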

| Ranking | Documents | Title | Citations |
|---|---|---|---|
| 1 | Fitzpatrick et al. (2017) | Delivering Cognitive Behavior Therapy to Young Adults With Symptoms of Depression and Anxiety Using a Fully Automated Conversational Agent (Woebot): A Randomized Controlled Trial | 655 |
| 2 | Donaghy et al. (2019) | Acceptability, benefits, and challenges of video consulting: a qualitative study in primary care | 242 |
| 3 | Titov et al. (2010) | Internet Treatment for Depression: A Randomized Controlled Trial Comparing Clinician vs. Technician Assistance | 235 |
| 4 | Ben-Zeev et al. (2013) | Development and usability testing of FOCUS: A smartphone system for self-management of schizophrenia | 189 |
| 5 | Titov et al. (2013) | Improving Adherence and Clinical Outcomes in Self-Guided Internet Treatment for Anxiety and Depression: Randomised Controlled Trial | 173 |
| 6 | Huberty et al. (2019) | Efficacy of the Mindfulness Meditation Mobile App “Calm” to Reduce Stress Among College Students: Randomized Controlled Trial | 163 |
| 7 | Robinson et al. (2010) | Internet Treatment for Generalized Anxiety Disorder: A Randomized Controlled Trial Comparing Clinician vs. Technician Assistance | 162 |
| 8 | Musiat et al. (2014) | Understanding the acceptability of e-mental health—attitudes and expectations towards computerised self-help treatments for mental health problems | 150 |
| 9 | Comer et al. (2017) | Remotely delivering real-time parent training to the home: An initial randomized trial of Internet-delivered parent–child interaction therapy (I-PCIT) | 126 |
| 10 | Levin et al. (2017) | Web-Based Acceptance and Commitment Therapy for Mental Health Problems in College Students: A Randomized Controlled Trial | 124 |


© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Armaou, M. Research Trends in the Study of Acceptability of Digital Mental Health-Related Interventions: A Bibliometric and Network Visualisation Analysis. Soc. Sci. 2024, 13, 114. https://doi.org/10.3390/socsci13020114