Patient and Clinician Experiences with Sharing Data Visualizations Integrated into Mental Health Treatment

Abstract

1. Introduction

2. Materials and Methods

2.1. Procedures

2.2. MindLAMP and Cortex

2.3. Researcher Characteristics

2.4. Semi-Structured Interviews

2.5. Data Analysis

3. Results

3.1. Patient-Focused Results

3.1.1. Quantitative Results

3.1.2. Most/Least Meaningful Visuals

3.1.3. Graph Literacy Survey

3.1.4. Thematic Analysis

3.2. Clinician-Focused Results

4. Discussion

4.1. General

4.2. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sample Characteristics | N (%) | Mean | SD |
|---|---|---|---|
| Age (years) | | 37 | 10.7 |
| Gender | | | |
| Male | 5 (50%) | | |
| Female | 5 (50%) | | |
| Race | | | |
| White | 8 (80%) | | |
| Asian | 2 (20%) | | |
| Total | 10 | | |

| Visual Type | Example |

|---|---|

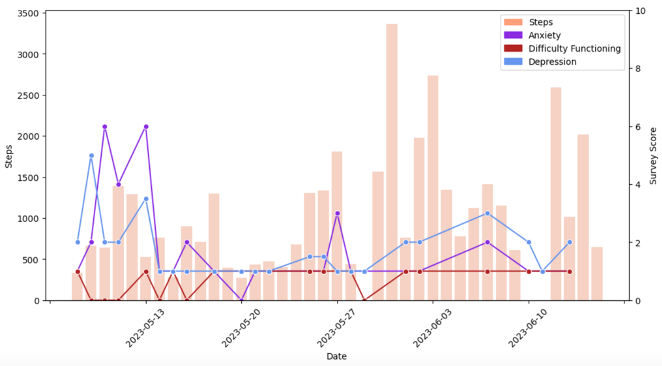

| Passive Data Bar Graphs |  |

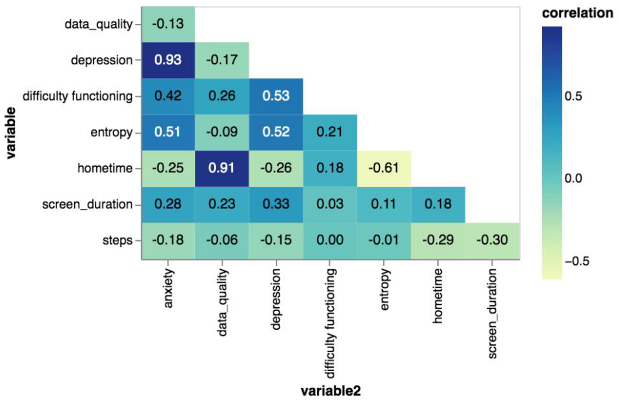

| Correlation Matrices |  |

| Calendar Charts |  |

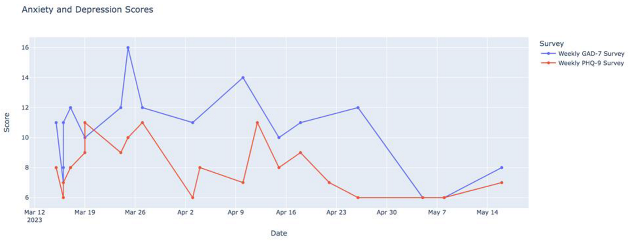

| Longitudinal Symptom Graphs |  |

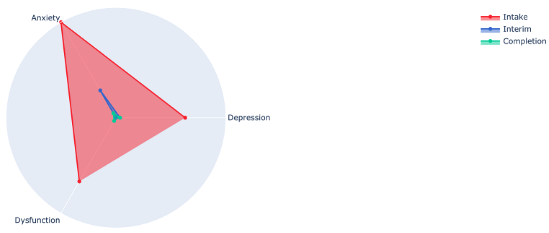

| Radar Plots |  |

| | Yes (%) | No (%) |
|---|---|---|
| Understood the visualizations | 9 (90%) | 1 (10%) |
| Found the visualizations meaningful | 9 (90%) | 1 (10%) |
| Found the data/visualizations accurate | 9 (90%) | 1 (10%) |

| Theme | Quote |
|---|---|
| Prompt reflection and action | I like that they just create a record and show trends because with depression and anxiety you can get into a little bit of a fog sometimes and they just show like I said the trends and they can also show milestones and if you’re feeling like you’re doing better and you’re actually doing better and not just in your head |
| | The various surveys were really helpful to see like the trends within those because I had a lot of personal things going on during this time that I was in this that were really stressful in different ways and being able to see how those outside events were like directly related like if I went back and looked at the timing was helpful |
| | I mean it shows on a screen something that I might not even notice is happening or so it’s good to see it like that. If it’s not good stuff I change it–my ways or whatever–to be better so it’s definitely interesting to see it |
| | Again, I guess because it just defines when you are feeling something at the moment you don’t really maybe can define it but then when you look back you can think about it a little bit more and try to understand what’s happening |
| Validation and motivation | It was just incredibly validating. It just confirmed that my response was consistent to the sort of severity of the circumstances. |
| | The graphs just kind of proved it to me that the sleep disturbances were really affecting how the depression affected me during the day. […] So that was important when I saw that sleep was definitely a big effect on the long COVID and the depression and everything. |
| Need for digital navigator | I’ve done these kinds of surveys before. Not very often, but I’ve had my data collected before because I’m quite the complicated patient. People like to study me. I think this one [study] was very nice though because I got a very good explanation of the data afterwards. And that made the difference. That it wasn’t straining to figure out what all the information meant |
| | Thank you for taking the time to go through the results. Especially the last three charts so now I feel like my life has changed, so thank you. |
| | I mean like it’s definitely helpful if someone can explain what the graph means for you and maybe can point out, maybe you have this problem sleeping at this like this particular time period |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, S.; Gray, L.; Alon, N.; Torous, J. Patient and Clinician Experiences with Sharing Data Visualizations Integrated into Mental Health Treatment. Soc. Sci. 2023, 12, 648. https://doi.org/10.3390/socsci12120648
