Intraobserver and Interobserver Agreement between Six Radiologists Describing mpMRI Features of Prostate Cancer Using a PI-RADS 2.1 Structured Reporting Scheme

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Structured Report Scheme

2.3. Radiological Assessment

- Three specialists with diagnostic experience of one to five years;

- Three specialists with more than ten years of diagnostic experience and at least five years of experience using the PI-RADS standard (since the first version of the standard).

2.4. Statistical Analysis

3. Results

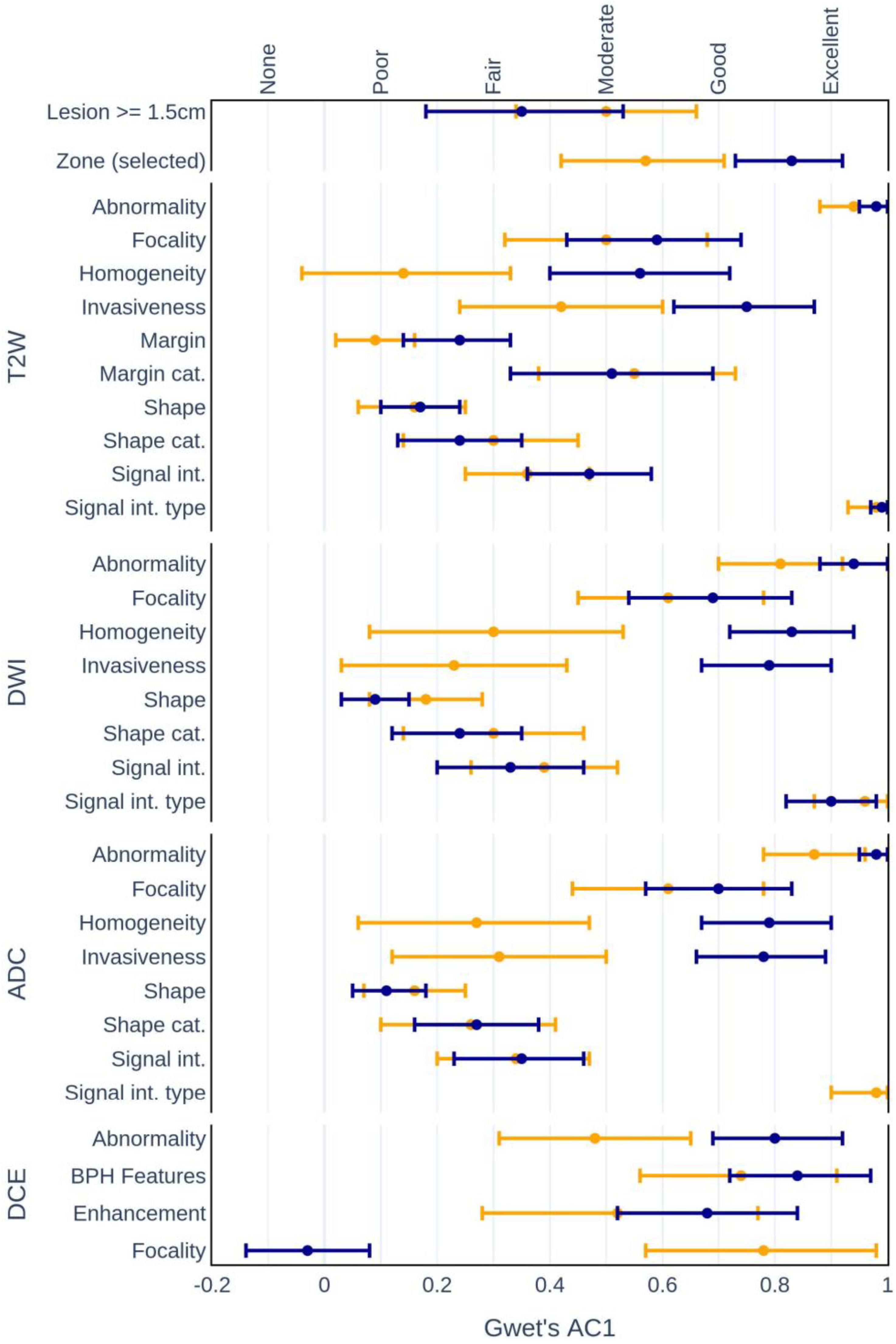

3.1. Interrater Agreement

- Zonal locations of lesions:
  - Experienced AC1 = 0.57, p < 0.001 vs. Inexperienced AC1 = 0.83, p < 0.001.
- Homogeneity on:
  - T2W (AC1 = 0.14, p = 0.13 vs. AC1 = 0.56, p < 0.001);
  - DWI (AC1 = 0.30, p < 0.01 vs. AC1 = 0.83, p < 0.001);
  - ADC (AC1 = 0.27, p < 0.05 vs. AC1 = 0.79, p < 0.001).
- Invasiveness on:
  - T2W (AC1 = 0.42, p < 0.001 vs. AC1 = 0.75, p < 0.001);
  - DWI (AC1 = 0.30, p < 0.01 vs. AC1 = 0.83, p < 0.001);
  - ADC (AC1 = 0.27, p < 0.05 vs. AC1 = 0.79, p < 0.001).
- Abnormality detection on:
  - DCE (AC1 = 0.48, p < 0.001 vs. AC1 = 0.80, p < 0.001).
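The coefficients above are Gwet's first-order agreement coefficient (AC1). For nominal data with n lesions, each labelled by r readers with one of q categories, AC1 compares the observed agreement p_a (the share of reader pairs that agree, averaged over lesions) with a chance term p_e = Σ_k π_k(1 − π_k)/(q − 1), where π_k is the average share of ratings that fall in category k; then AC1 = (p_a − p_e)/(1 − p_e). The following is a minimal sketch, assuming complete data (every reader rated every lesion) and unweighted nominal categories. The function name `gwet_ac1` and the example ratings are hypothetical; this is not the authors' code, and Gwet's irrCAC R package remains the reference implementation.

```python
from collections import Counter
from typing import Hashable, Sequence


def gwet_ac1(
    ratings: Sequence[Sequence[Hashable]], categories: Sequence[Hashable]
) -> tuple[float, float]:
    """Return (p_a, AC1) for nominal ratings from several readers.

    ratings[i] holds the labels all readers gave to lesion i (complete data
    assumed). categories lists every value the scale allows, used or not.
    """
    q = len(categories)
    if q < 2:
        raise ValueError("the scale needs at least two categories")
    allowed = set(categories)
    n = len(ratings)
    p_a = 0.0
    pi = dict.fromkeys(categories, 0.0)
    for labels in ratings:
        r = len(labels)
        if r < 2:
            raise ValueError("each lesion needs at least two ratings")
        counts = Counter(labels)
        if not set(counts) <= allowed:
            raise ValueError(f"unexpected labels: {set(counts) - allowed}")
        # share of reader pairs that gave this lesion the same label
        p_a += sum(c * (c - 1) for c in counts.values()) / (r * (r - 1))
        # this lesion's contribution to each category's average share
        for k in categories:
            pi[k] += counts[k] / r
    p_a /= n
    # chance agreement: p_e = sum_k pi_k (1 - pi_k) / (q - 1)
    p_e = sum((s / n) * (1 - s / n) for s in pi.values()) / (q - 1)
    return p_a, (p_a - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical YES/NO ratings of four lesions by three readers
    data = [
        ("YES", "YES", "YES"),
        ("YES", "YES", "NO"),
        ("NO", "NO", "NO"),
        ("NO", "NO", "YES"),
    ]
    p_a, ac1 = gwet_ac1(data, categories=["YES", "NO"])
    print(f"PA = {100 * p_a:.1f}%, AC1 = {ac1:.3f}")  # PA = 66.7%, AC1 = 0.333
```

Gwet (2008) designed AC1 to stay stable when agreement is high and the categories are unbalanced, a situation in which kappa-type statistics can drop sharply. With perfect agreement on a single label, the sketch returns AC1 = 1.0 because p_e falls to 0.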

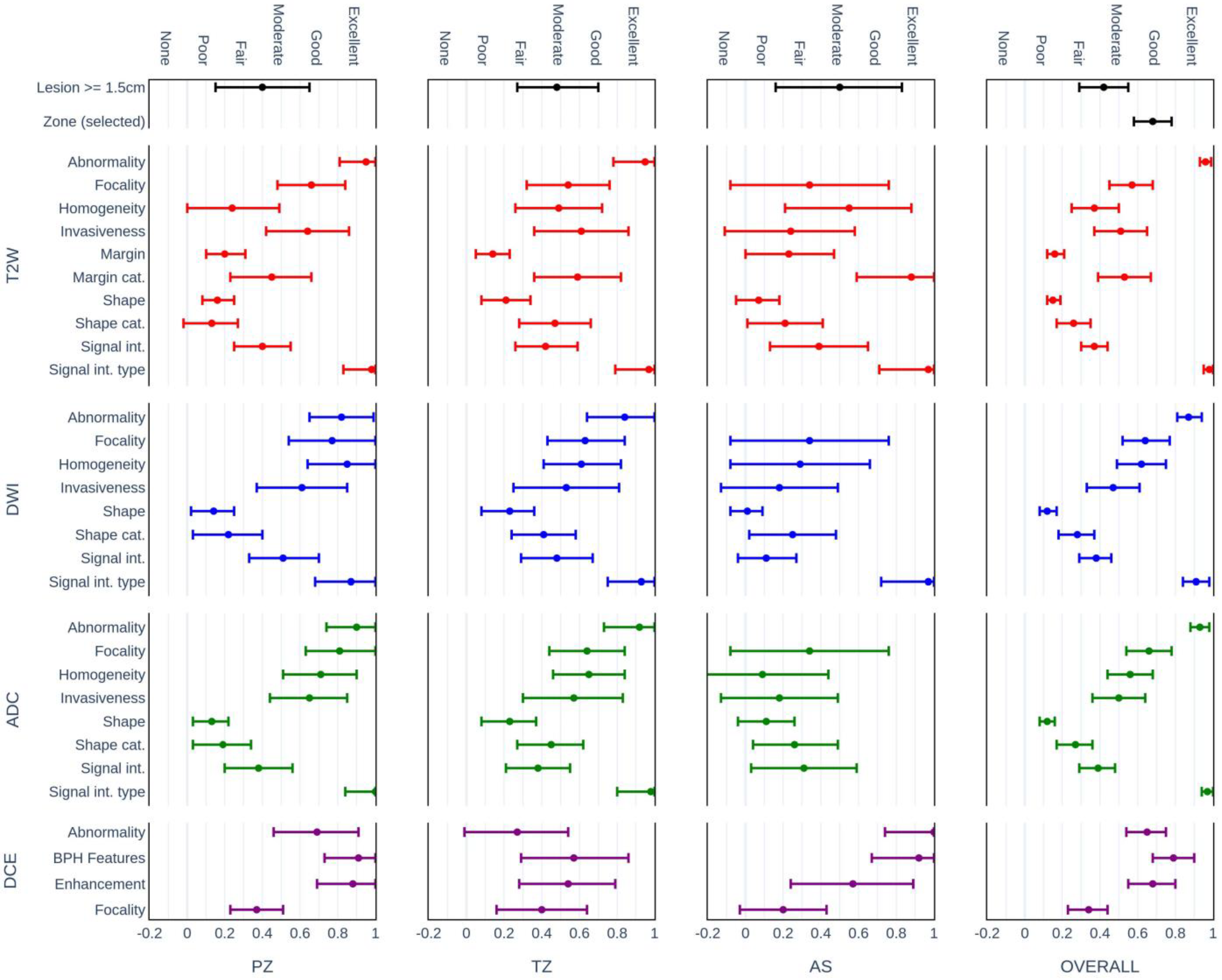

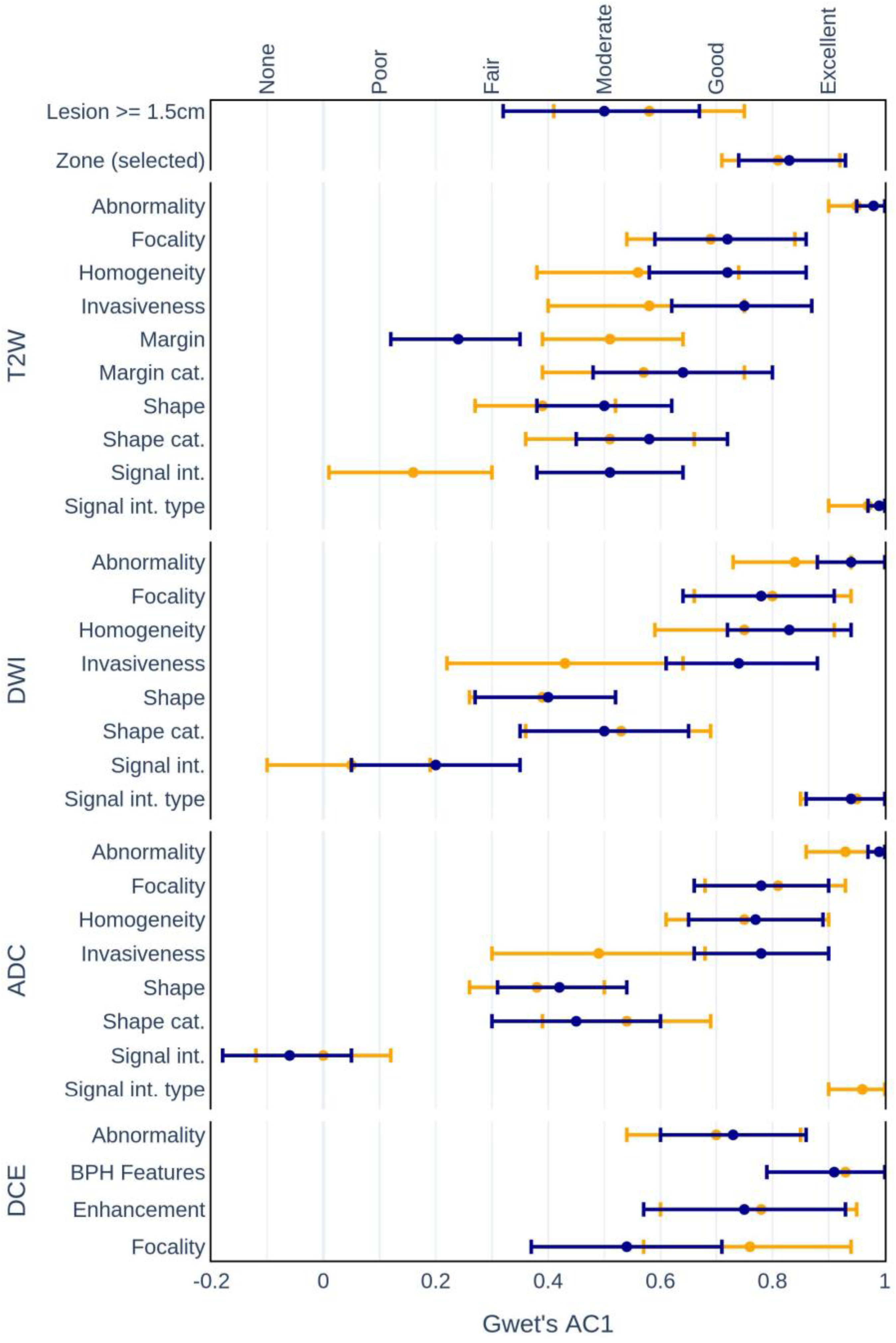

3.2. Intrarater Agreement of Defined CDEs

3.3. Agreement of the Assessment Categories

3.4. Diagnostic Accuracy Based on Category Assessment

3.5. Radiologists’ Opinions on System

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Label | Related RadLex Terms | Possible Values |
|---|---|---|---|
| lesion_dim_max | Lesion max dimension (mm) | Diameter [RID13432] | <5, ≥5, ≥15 |
| lesion_location | Zone | Zone of prostate [RID38890] | PZ, TZ, Not Available |
| t2w_present_and_adequate | T2W present and adequate | Adequate [RID39308] | YES, NO |
| t2w_abnormality | T2W lesion present | Lesion [RID38780] | YES, NO |
| t2w_invasive | T2W Invasive | Invasive [RID5680] | YES, NO |
| t2w_signal_intensity_type | T2W signal intensity type | Signal characteristic [RID6049] | Hypointense, Isointense, Hyperintense |
| t2w_signal_intensity | T2W signal intensity scale | Signal characteristic [RID6049] | Mild, Moderate, Marked |
| t2w_uniformity | T2W lesion uniformity | Uniformity descriptor [RID43293] | Homogeneous, Heterogeneous |
| t2w_focality | T2W focality | Focal [RID5702] | YES, NO |
| t2w_shape | T2W shape | Morphologic descriptor [RID5863] | Linear, Wedge, Lenticular, Water-Drop |
| t2w_shape_category | T2W shape category | Morphologic descriptor [RID5863] | Linear, Round, Irregular |
| t2w_margin | T2W margin | Margin [RID5972] | Indistinct, Obscured, Spiculated, Erased charcoal sign, Partly_Encapsulated, Encapsulated, Well_Defined |
| t2w_margin_category | T2W margin category | Margin [RID5972] | Circumscribed, Non_Circumscribed |
| adc_present_and_adequate | ADC present and adequate | Adequate [RID39308] | YES, NO |
| adc_abnormality | ADC lesion present | Lesion [RID38780] | YES, NO |
| adc_invasive | ADC invasive | Invasive [RID5680] | YES, NO |
| adc_signal_intensity_type | ADC signal intensity type | Signal characteristic [RID6049] | Hypointensitivity, Isointensitivity, Hyperintensitivity |
| adc_signal_intensity | ADC signal intensity scale | Signal characteristic [RID6049] | Mild, Moderate, Marked |
| adc_focality | ADC focality | Focal [RID5702] | YES, NO |
| adc_shape | ADC shape | Morphologic descriptor [RID5863] | Linear, Wedge, Lenticular, Water-Drop |
| adc_shape_category | ADC shape category | Morphologic descriptor [RID5863] | Linear, Round, Irregular |
| dwi_present_and_adequate | DWI present and adequate | Adequate [RID39308] | YES, NO |
| dwi_abnormality | DWI lesion present | Lesion [RID38780] | YES, NO |
| dwi_invasive | DWI invasive | Invasive [RID5680] | YES, NO |
| dwi_signal_intensity_type | DWI signal intensity type | Signal characteristic [RID6049] | Hypointense, Isointense, Hyperintense |
| dwi_signal_intensity | DWI signal intensity scale | Signal characteristic [RID6049] | Mild, Moderate, Marked |
| dwi_focality | DWI focality | Focal [RID5702] | YES, NO |
| dwi_shape | DWI shape | Morphologic descriptor [RID5863] | Linear, Wedge, Lenticular, Water-Drop |
| dwi_shape_category | DWI shape category | Morphologic descriptor [RID5863] | Linear, Round, Irregular |
| dce_present_and_adequate | Is DCE present and adequate? | Adequate [RID39308] | YES, NO |
| dce_abnormality | Does an abnormality appear on the DCE image? | Lesion [RID38780] | YES, NO |
| dce_enhancement | Enhancement pattern | Enhancement pattern [RID6058] | Positive_DCE, Negative_DCE |
| dce_corresponds_to | Corresponds to finding | MR tissue contrast attribute (MR procedure attribute) [RID10791] | T2, DWI, Not_Available |
| dce_bph_features | BPH features on T2 | Benign prostatic hyperplasia [RID3784] | YES, NO |
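Because every descriptor in the scheme is a named variable with a closed set of values, a report can be checked mechanically, and two readers' reports can be compared field by field. The sketch below is hypothetical: `CDE_SCHEME` encodes only a few rows of the table above (names and values copied from it), and `validate_report` is an illustrative helper, not part of the authors' software.

```python
# Hypothetical subset of the CDE table encoded as a closed vocabulary.
# Variable names and allowed values are copied from the table; the
# validator itself is illustrative only.
CDE_SCHEME: dict[str, frozenset[str]] = {
    "lesion_location": frozenset({"PZ", "TZ", "Not Available"}),
    "t2w_abnormality": frozenset({"YES", "NO"}),
    "t2w_uniformity": frozenset({"Homogeneous", "Heterogeneous"}),
    "t2w_shape_category": frozenset({"Linear", "Round", "Irregular"}),
    "t2w_margin_category": frozenset({"Circumscribed", "Non_Circumscribed"}),
    "dwi_signal_intensity": frozenset({"Mild", "Moderate", "Marked"}),
    "dce_enhancement": frozenset({"Positive_DCE", "Negative_DCE"}),
}


def validate_report(report: dict[str, str]) -> list[str]:
    """List every field that falls outside the scheme; empty means it conforms."""
    problems: list[str] = []
    for variable, value in report.items():
        allowed = CDE_SCHEME.get(variable)
        if allowed is None:
            problems.append(f"unknown variable: {variable}")
        elif value not in allowed:
            problems.append(f"{variable}: {value!r} not in {sorted(allowed)}")
    return problems


if __name__ == "__main__":
    report = {
        "lesion_location": "PZ",
        "t2w_uniformity": "Patchy",  # free-text value outside the scheme
        "dce_enhancement": "Positive_DCE",
    }
    for problem in validate_report(report):
        print(problem)
    # t2w_uniformity: 'Patchy' not in ['Heterogeneous', 'Homogeneous']
```

A closed vocabulary like this is what makes per-feature agreement statistics computable at all: two readers either chose the same value for a variable or they did not.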

| Modality | Feature | Session 1 PA | Session 1 AC1 (95% CI) | Session 1 p-Value | Session 2 PA | Session 2 AC1 (95% CI) | Session 2 p-Value | Overall PA | Overall AC1 (95% CI) | Overall p-Value |
|---|---|---|---|---|---|---|---|---|---|---|
| OVERALL | Lesion ≥ 1.5 cm | 72.3 | 0.45 (0.26; 0.63) | <0.001 | 69.2 | 0.40 (0.21; 0.58) | <0.001 | 70.7 | 0.42 (0.29; 0.55) | <0.001 |
| | Zone (selected) | 76.9 | 0.66 (0.51; 0.81) | <0.001 | 79.4 | 0.70 (0.56; 0.84) | <0.001 | 78.1 | 0.68 (0.58; 0.78) | <0.001 |
| T2W | Abnormality | 94.0 | 0.94 (0.87; 1.00) | <0.001 | 99.0 | 0.99 (0.97; 1.00) | <0.001 | 96.5 | 0.96 (0.93; 0.99) | <0.001 |
| | Focality | 66.6 | 0.48 (0.30; 0.65) | <0.001 | 74.4 | 0.65 (0.49; 0.80) | <0.001 | 70.5 | 0.57 (0.45; 0.68) | <0.001 |
| | Homogeneity | 63.9 | 0.38 (0.19; 0.56) | <0.001 | 62.7 | 0.37 (0.19; 0.54) | <0.001 | 63.3 | 0.37 (0.25; 0.50) | <0.001 |
| | Invasiveness | 68.1 | 0.45 (0.23; 0.66) | <0.001 | 72.7 | 0.57 (0.38; 0.77) | <0.001 | 70.4 | 0.51 (0.37; 0.65) | <0.001 |
| | Margin | 26.5 | 0.13 (0.06; 0.20) | <0.001 | 28.2 | 0.18 (0.11; 0.24) | <0.001 | 27.3 | 0.16 (0.12; 0.21) | <0.001 |
| | Margin cat. | 73.0 | 0.56 (0.36; 0.76) | <0.001 | 69.2 | 0.50 (0.29; 0.71) | <0.001 | 71.1 | 0.53 (0.39; 0.67) | <0.001 |
| | Shape | 27.4 | 0.18 (0.13; 0.23) | <0.001 | 23.0 | 0.13 (0.07; 0.18) | <0.001 | 25.2 | 0.15 (0.12; 0.19) | <0.001 |
| | Shape cat. | 46.2 | 0.22 (0.10; 0.35) | <0.01 | 50.6 | 0.31 (0.16; 0.45) | <0.001 | 48.4 | 0.26 (0.17; 0.35) | <0.001 |
| | Signal int. | 45.2 | 0.24 (0.15; 0.33) | <0.001 | 54.4 | 0.38 (0.24; 0.52) | <0.001 | 49.8 | 0.37 (0.30; 0.44) | <0.001 |
| | Signal int. type | 95.3 | 0.95 (0.90; 1.00) | <0.001 | 100.0 | | | 97.7 | 0.98 (0.95; 1.00) | <0.001 |
| DWI | Abnormality | 85.0 | 0.81 (0.69; 0.93) | <0.001 | 94.0 | 0.93 (0.87; 1.00) | <0.001 | 89.5 | 0.87 (0.81; 0.94) | <0.001 |
| | Focality | 73.0 | 0.61 (0.41; 0.80) | <0.001 | 77.2 | 0.68 (0.50; 0.86) | <0.001 | 75.1 | 0.64 (0.52; 0.77) | <0.001 |
| | Homogeneity | 70.0 | 0.54 (0.33; 0.75) | <0.001 | 77.0 | 0.69 (0.53; 0.85) | <0.001 | 73.6 | 0.62 (0.49; 0.75) | <0.001 |
| | Invasiveness | 65.1 | 0.42 (0.22; 0.62) | <0.001 | 70.0 | 0.52 (0.32; 0.72) | <0.001 | 67.6 | 0.47 (0.33; 0.61) | <0.001 |
| | Shape | 25.2 | 0.16 (0.08; 0.23) | <0.001 | 20.3 | 0.10 (0.04; 0.15) | <0.01 | 22.7 | 0.12 (0.08; 0.17) | <0.001 |
| | Shape cat. | 46.8 | 0.24 (0.10; 0.38) | <0.01 | 51.2 | 0.31 (0.17; 0.46) | <0.001 | 49.0 | 0.28 (0.18; 0.37) | <0.001 |
| | Signal int. | 50.2 | 0.26 (0.13; 0.40) | <0.001 | 55.5 | 0.34 (0.18; 0.50) | <0.001 | 52.9 | 0.38 (0.29; 0.47) | <0.001 |
| | Signal int. type | 89.0 | 0.88 (0.75; 1.00) | <0.001 | 94.6 | 0.94 (0.87; 1.00) | <0.001 | 91.9 | 0.91 (0.84; 0.98) | <0.001 |
| ADC | Abnormality | 92.3 | 0.91 (0.84; 0.99) | <0.001 | 94.4 | 0.94 (0.88; 1.00) | <0.001 | 93.3 | 0.93 (0.88; 0.98) | <0.001 |
| | Focality | 74.2 | 0.64 (0.46; 0.82) | <0.001 | 76.4 | 0.67 (0.52; 0.83) | <0.001 | 75.3 | 0.66 (0.54; 0.78) | <0.001 |
| | Homogeneity | 65.3 | 0.49 (0.33; 0.65) | <0.001 | 73.6 | 0.63 (0.46; 0.80) | <0.001 | 69.5 | 0.56 (0.44; 0.68) | <0.001 |
| | Invasiveness | 67.1 | 0.45 (0.25; 0.65) | <0.001 | 71.2 | 0.54 (0.34; 0.75) | <0.001 | 69.2 | 0.50 (0.36; 0.64) | <0.001 |
| | Shape | 23.5 | 0.13 (0.08; 0.19) | <0.001 | 21.1 | 0.11 (0.05; 0.16) | <0.001 | 22.3 | 0.12 (0.08; 0.16) | <0.001 |
| | Shape cat. | 46.5 | 0.23 (0.09; 0.37) | <0.01 | 50.5 | 0.30 (0.17; 0.44) | <0.001 | 48.5 | 0.27 (0.17; 0.36) | <0.001 |
| | Signal int. | 47.4 | 0.24 (0.08; 0.41) | <0.01 | 59.9 | 0.44 (0.27; 0.61) | <0.001 | 53.6 | 0.39 (0.29; 0.48) | <0.001 |
| | Signal int. type | 94.8 | 0.95 (0.87; 1.00) | <0.001 | 100.0 | | | 97.4 | 0.97 (0.94; 1.00) | <0.001 |
| DCE | Abnormality | 77.9 | 0.64 (0.47; 0.82) | <0.001 | 75.0 | 0.65 (0.52; 0.78) | <0.001 | 76.5 | 0.65 (0.54; 0.75) | <0.001 |
| | BPH features | 82.7 | 0.77 (0.57; 0.95) | <0.001 | 85.7 | 0.81 (0.67; 0.95) | <0.001 | 84.3 | 0.79 (0.68; 0.91) | <0.001 |
| | Enhancement | 79.3 | 0.75 (0.57; 0.93) | <0.001 | 75.7 | 0.60 (0.41; 0.79) | <0.001 | 77.3 | 0.68 (0.55; 0.80) | <0.001 |
| | Focality | 43.7 | 0.32 (0.17; 0.47) | <0.001 | 45.6 | 0.35 (0.19; 0.51) | <0.001 | 44.7 | 0.34 (0.23; 0.44) | <0.001 |
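The table reports each PA and AC1 with a 95% confidence interval. A common way to attach such an interval to an agreement statistic is a percentile bootstrap that resamples lesions with replacement; it is shown here as an illustrative alternative, not as the procedure behind the reported intervals (Gwet's AC1 also has an analytic variance estimator). The sketch assumes that PA is the share of agreeing reader pairs averaged over lesions, expressed as a percentage. `percent_agreement` and `bootstrap_ci` are hypothetical helpers; any statistic with the same signature, such as an AC1 function, can be passed in place of `percent_agreement`.

```python
import random
from collections import Counter
from typing import Callable, Hashable, Sequence

# labels that all readers gave to one lesion
Lesion = Sequence[Hashable]


def percent_agreement(ratings: Sequence[Lesion]) -> float:
    """Share of agreeing reader pairs, averaged over lesions, in percent."""
    total = 0.0
    for labels in ratings:
        r = len(labels)
        total += sum(c * (c - 1) for c in Counter(labels).values()) / (r * (r - 1))
    return 100.0 * total / len(ratings)


def bootstrap_ci(
    ratings: Sequence[Lesion],
    statistic: Callable[[Sequence[Lesion]], float],
    n_boot: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> tuple[float, float]:
    """Percentile bootstrap interval, resampling whole lesions with replacement."""
    rng = random.Random(seed)  # seeded so the interval is reproducible
    n = len(ratings)
    replicates = sorted(
        statistic([ratings[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    lower = replicates[int(alpha / 2 * n_boot)]
    upper = replicates[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical YES/NO ratings of six lesions by three readers
    data = [
        ("YES", "YES", "YES"),
        ("YES", "YES", "NO"),
        ("NO", "NO", "NO"),
        ("NO", "NO", "YES"),
        ("YES", "YES", "YES"),
        ("NO", "NO", "NO"),
    ]
    point = percent_agreement(data)
    lower, upper = bootstrap_ci(data, percent_agreement)
    print(f"PA = {point:.1f}% (95% CI {lower:.1f}; {upper:.1f})")
```

Resampling whole lesions, rather than individual ratings, keeps each lesion's set of reader labels together, which preserves the within-lesion dependence that agreement statistics measure.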

| Feature | Session 1 PA | Session 1 AC1 (95% CI) | Session 2 PA | Session 2 AC1 (95% CI) | Overall PA | Overall AC1 (95% CI) |
|---|---|---|---|---|---|---|
| T2W PI-RADS | 47.1 | 0.35 (0.26; 0.45) | 43.8 | 0.31 (0.19; 0.43) | 45.4 | 0.33 (0.26; 0.41) |
| DWI PI-RADS | 50.0 | 0.39 (0.28; 0.50) | 49.2 | 0.38 (0.29; 0.47) | 49.6 | 0.39 (0.32; 0.46) |
| DCE PI-RADS | 69.5 | 0.48 (0.28; 0.68) | 69.8 | 0.42 (0.24; 0.60) | 69.6 | 0.45 (0.31; 0.58) |
| OVERALL PI-RADS | 47.1 | 0.35 (0.25; 0.46) | 42.7 | 0.30 (0.19; 0.41) | 44.9 | 0.33 (0.26; 0.40) |
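The table separates agreement on the sequence-level scores from agreement on the overall category. Under PI-RADS v2.1, the overall category of a peripheral-zone lesion follows its DWI score, upgraded from 3 to 4 when DCE is positive; for a transition-zone lesion it follows the T2W score, upgraded from 2 to 3 when DWI scores 4 or 5, and from 3 to 4 when DWI scores 5. A minimal sketch of those rules follows, assuming adequate DWI and DCE and covering only the PZ and TZ values of `lesion_location`; the function `overall_pirads` is hypothetical and is not the authors' implementation.

```python
def overall_pirads(zone: str, t2w: int, dwi: int, dce_positive: bool) -> int:
    """Overall PI-RADS v2.1 category from sequence-level scores.

    Assumes adequate DWI and DCE; handles only the PZ and TZ zones.
    """
    for name, score in (("T2W", t2w), ("DWI", dwi)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} score must be between 1 and 5, got {score}")
    if zone == "PZ":
        # DWI is the dominant sequence; a positive DCE upgrades DWI 3 to 4
        return 4 if dwi == 3 and dce_positive else dwi
    if zone == "TZ":
        # T2W is the dominant sequence; DWI can upgrade 2 -> 3 and 3 -> 4
        if t2w == 2 and dwi >= 4:
            return 3
        if t2w == 3 and dwi == 5:
            return 4
        return t2w
    raise ValueError(f"unsupported zone: {zone!r}")


if __name__ == "__main__":
    print(overall_pirads("PZ", t2w=2, dwi=3, dce_positive=True))   # 4
    print(overall_pirads("TZ", t2w=2, dwi=4, dce_positive=False))  # 3
```

For example, in the peripheral zone one reader who scores DWI 3 with positive DCE and another who scores DWI 4 both reach an overall category of 4. Agreement on the overall category and on the individual sequences therefore need not move together.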

| Feature | Experienced PA | Experienced AC1 (95% CI) | Inexperienced PA | Inexperienced AC1 (95% CI) | Overall PA | Overall AC1 (95% CI) |
|---|---|---|---|---|---|---|
| T2W PI-RADS | 72.3 | 0.66 (0.55; 0.78) | 60.4 | 0.51 (0.19; 0.43) | 66.3 | 0.59 (0.50; 0.41) |
| DWI PI-RADS | 61.3 | 0.53 (0.40; 0.66) | 61.7 | 0.53 (0.29; 0.47) | 61.5 | 0.53 (0.44; 0.46) |
| DCE PI-RADS | 75.0 | 0.51 (0.29; 0.73) | 76.8 | 0.59 (0.24; 0.60) | 76.0 | 0.55 (0.41; 0.69) |
| OVERALL PI-RADS | 67.0 | 0.60 (0.48; 0.72) | 61.5 | 0.53 (0.19; 0.41) | 64.2 | 0.56 (0.48; 0.65) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jóźwiak, R.; Sobecki, P.; Lorenc, T. Intraobserver and Interobserver Agreement between Six Radiologists Describing mpMRI Features of Prostate Cancer Using a PI-RADS 2.1 Structured Reporting Scheme. Life 2023, 13, 580. https://doi.org/10.3390/life13020580
