Vector-Valued Shepard Processes: Approximation with Summability

Abstract

1. Introduction

2. Approximating by Vector-Valued Shepard Operators

- (i) If on , then it follows that the sequence is uniformly convergent to on ;

- (ii) If on , then the sequence is uniformly convergent to on .

- Can the approximation in Theorem 2 be preserved in “some sense” when or fails on (in the usual sense)?
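The two cases above concern the uniform convergence of Shepard-type operators. The operator definitions did not survive in this version of the text, so the following Python sketch only assumes the classical Shepard form: equispaced nodes x_k = k/n on [0, 1], inverse-distance weights |x - x_k|^(-λ) with an exponent λ > 1 (λ = 2 below), and a vector-valued f handled componentwise. The name `shepard` and the test function `f` are hypothetical illustrations, not the authors' construction.

```python
import numpy as np


def shepard(f, n, lam, x):
    """Hypothetical vector-valued Shepard operator S_{n,lam}(f; x).

    Assumptions (not taken from the paper): nodes x_k = k/n, k = 0..n, on [0, 1];
    weights |x - x_k|^(-lam) with lam > 1; f maps a scalar to a length-m array.
    """
    nodes = np.arange(n + 1) / n
    values = np.array([f(t) for t in nodes], dtype=float)  # shape (n+1, m)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    dist = np.abs(x[:, None] - nodes[None, :])  # shape (len(x), n+1)
    out = np.empty((x.size, values.shape[1]))

    # Points (numerically) on a node: the operator interpolates, S(f; x_k) = f(x_k).
    hit = dist < 1e-14
    on_node = hit.any(axis=1)
    out[on_node] = values[hit[on_node].argmax(axis=1)]

    # Remaining points: normalized inverse-distance weights, applied componentwise.
    w = dist[~on_node] ** (-lam)
    out[~on_node] = (w @ values) / w.sum(axis=1, keepdims=True)
    return out


def f(t):
    """Hypothetical test function f: [0, 1] -> R^2."""
    return np.array([np.sin(np.pi * t), t ** 2])


grid = np.linspace(0.0, 1.0, 2001)
exact = np.array([f(t) for t in grid])
for n in (20, 50, 80):
    approx = shepard(f, n, 2.0, grid)
    # Sup over the grid of the Euclidean norm of the error vector.
    print(n, np.max(np.linalg.norm(approx - exact, axis=1)))
```

The printed sup-norm errors decrease as n grows, in line with uniform convergence. Because the test function is hypothetical, these numbers are not comparable with the tabulated errors in this article.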

3. Auxiliary Results and Demonstration of Theorem 1

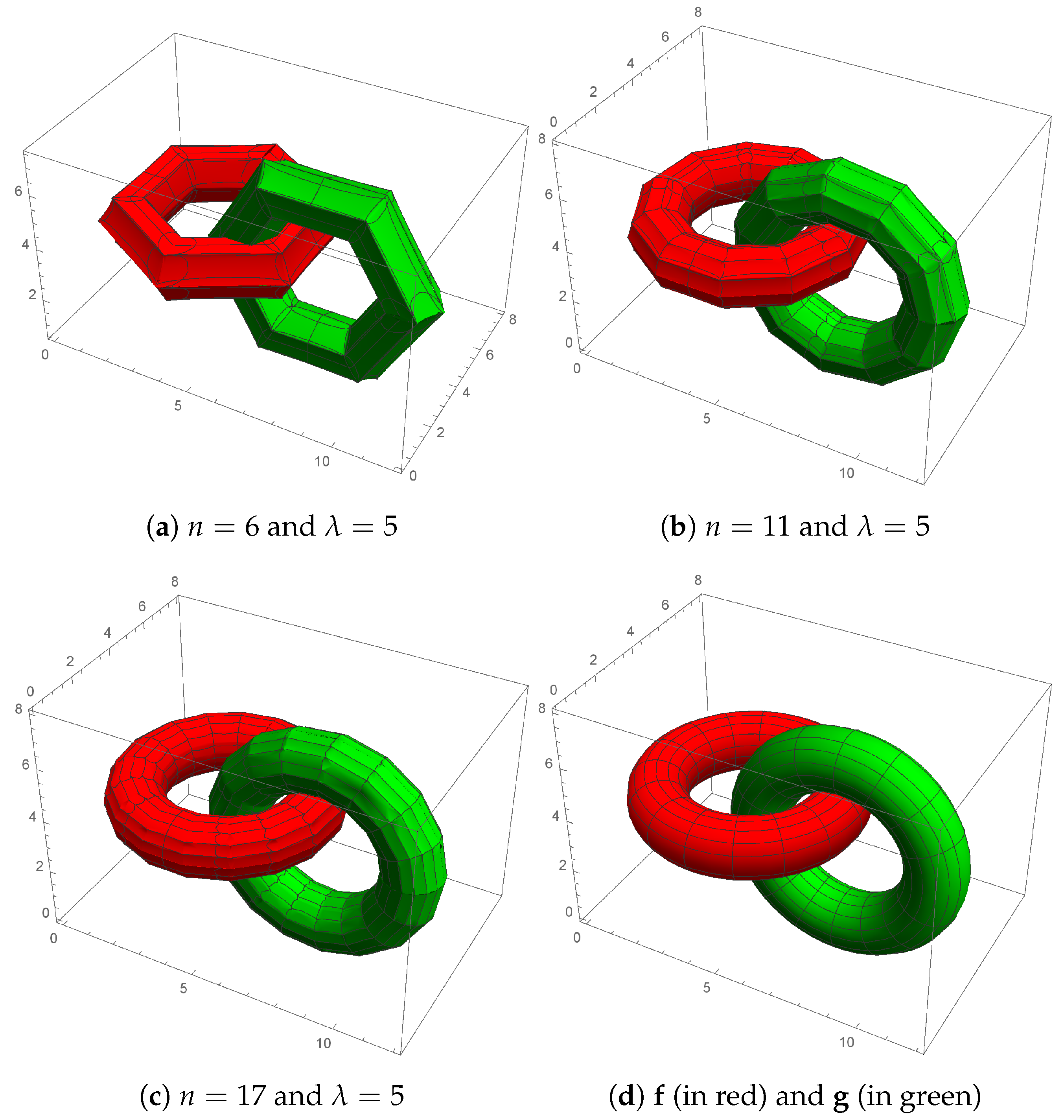

4. Applications and Special Cases

4.1. An Application of Theorem 1

4.2. Approximation Errors in Theorem 1

4.3. Effects of Regular Summability Methods
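A summability method given by an infinite matrix A = (a_jk) replaces a sequence (y_k) by its transform (Ay)_j = Σ_k a_jk y_k. By the Silverman-Toeplitz theorem, A is regular (it maps every convergent sequence to one with the same limit) exactly when sup_j Σ_k |a_jk| < ∞, Σ_k a_jk → 1 as j → ∞, and a_jk → 0 as j → ∞ for each fixed k. The Python sketch below uses the Cesàro matrix as one assumed example of a regular method; the specific methods studied in this section are not recoverable here, and the helper names `cesaro_matrix`, `toeplitz_indicators` and `summation_process` are hypothetical.

```python
import numpy as np


def cesaro_matrix(size):
    """Finite section of the Cesaro matrix: a[j, k] = 1/(j+1) for k <= j, else 0."""
    lower = np.tril(np.ones((size, size)))
    return lower / np.arange(1, size + 1)[:, None]


def toeplitz_indicators(a, fixed_cols=5):
    """Inspect the three Silverman-Toeplitz quantities on a finite section.

    A finite section cannot prove regularity; it only shows the trend of
    sup_j sum_k |a_jk|, the last row sum (should approach 1), and the largest
    |a_jk| in the last row over the first few fixed columns (should approach 0).
    """
    sup_abs_row = np.abs(a).sum(axis=1).max()
    last_row_sum = a[-1].sum()
    last_row_fixed = np.abs(a[-1, :fixed_cols]).max()
    return sup_abs_row, last_row_sum, last_row_fixed


def summation_process(a, y):
    """A-transform (A y)_j = sum_k a[j, k] y_k; y may be shaped (N,) or (N, m)."""
    return a @ y


N = 1000
a = cesaro_matrix(N)
k = np.arange(N)
y = 1.0 + (-1.0) ** k  # 2, 0, 2, 0, ...: oscillates, so it has no ordinary limit
means = summation_process(a, y)
print(toeplitz_indicators(a))
print(means[-3:])  # the Cesaro means approach 1
```

Applied to operator values, the same transform would act on a hypothetical sequence such as (1 + (-1)^k) S_k(f): for f ≠ 0 it fails to converge in the usual sense, yet its Cesàro means still tend to f whenever S_k(f) → f uniformly.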

5. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| n | | | |
|---|---|---|---|
| 20 | 0.35358 | 0.10099 | 0.13239 |
| 50 | 0.12529 | 0.04051 | 0.04970 |
| 80 | 0.08197 | 0.02478 | 0.03056 |

| n | | | |
|---|---|---|---|
| 20 | 0.24870 | 0.09654 | 0.11228 |
| 50 | 0.13200 | 0.03767 | 0.04773 |
| 80 | 0.08747 | 0.02391 | 0.03031 |

| n | | | |
|---|---|---|---|
| 20 | 0.09299 | 0.03291 | 0.04278 |
| 50 | 0.03544 | 0.01313 | 0.01598 |
| 80 | 0.02172 | 0.00799 | 0.00980 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Duman, O.; Vecchia, B.D. Vector-Valued Shepard Processes: Approximation with Summability. Axioms 2023, 12, 1124. https://doi.org/10.3390/axioms12121124
