A Survey of Methods for Symmetry Detection on 3D High Point Density Models in Biomedicine

Abstract

1. Introduction

- 2D symmetry: symmetry line

- 3D symmetry: symmetry plane

2. Symmetry Line

- Cutaneous marking-based methods

- Parallel sections-based methods

- Adaptive sections-based methods

2.1. Cutaneous Marking-Based Methods

2.2. Parallel Sections-Based Methods

2.3. Adaptive Sections-Based Methods

3. Symmetry Plane

- Extended Gaussian image (EGI)

- Mirroring and registration

3.1. EGI-Based Methods

3.2. Mirroring and Registration-Based Methods

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Furferi, R.; Governi, L.; Uccheddu, M.F.; Volpe, Y. A RGB-D based instant body-scanning solution for compact box installation. Adv. Mech. Des. Eng. Manuf. 2017, 387–396.

2. Fatuzzo, G.; Sequenzia, G.; Oliveri, S.M. Virtual anthropology and rapid prototyping: A study of Vincenzo Bellini’s death masks in relation to autopsy documentation. Digit. Appl. Archaeol. Cult. Herit. 2016, 3, 117–125.

3. Thompson, D.W. On Growth and Form; Cambridge University Press: Cambridge, UK, 1942.

4. Martinet, A.; Soler, C.; Holzschuch, N.; Sillion, F.X. Accurate detection of symmetries in 3D shapes. ACM Trans. Graph. 2006, 25, 439–464.

5. Mitra, N.J.; Pauly, M.; Wand, M.; Ceylan, D. Symmetry in 3D Geometry: Extraction and Applications. In Eurographics State-of-the-Art Report; The Eurographics Association: London, UK, 2012; pp. 1–23.

6. Jiang, W.; Xu, K.; Cheng, Z.Q.; Zhang, H. Skeleton-based intrinsic symmetry detection on point clouds. Graph. Models 2013, 75, 177–188.

7. Mitra, N.J.; Pauly, M.; Wand, M.; Ceylan, D. Symmetry in 3D geometry: Extraction and applications. Comput. Graph. Forum 2013, 32, 1–23.

8. Bokeloh, M.; Berner, A.; Wand, M.; Seidel, H.-P.; Schilling, A. Symmetry detection using feature lines. Comput. Graph. Forum 2009, 28, 697–706.

9. Ovsjanikov, M.; Sun, J.; Guibas, L. Global Intrinsic Symmetries of Shapes. Comput. Graph. Forum 2008, 27, 1341–1348.

10. Drerup, B.; Hierholzer, E. Back shape measurement using video rasterstereography and 3-dimensional reconstruction of spinal shape. Clin. Biomech. 1994, 9, 28–36.

11. Di Angelo, L.; Di Stefano, P. A computational method for bilateral symmetry recognition in asymmetrically scanned human faces. Comput. Aided Des. Appl. 2014, 11, 275–283.

12. Turner-Smith, A.R.; Harris, J.D.; Houghton, G.R.; Jefferson, R.J. A method for analysis of back shape in scoliosis. J. Biomech. 1988, 21, 497–509.

13. Sotoca, J.M.; Buendia, M.; Inesta, J.M.; Ferri, F.J. Geometric properties of the 3D spine curve. Lect. Notes Comput. Sci. 2003, 2652, 1003–1011.

14. Huysmans, T.; Haex, B.; Van Audekercke, R.; Vander Sloten, J.; Van der Perre, G. Three-dimensional mathematical reconstruction of the spinal shape based on active contours. J. Biomech. 2004, 37, 1793–1798.

15. Santiesteban, Y.; Sanchez, J.M.; Sotoca, J.M. A method for detection and modelling of the human spine based on principal curvature. Prog. Pattern Recognit. Image Anal. Appl. 2006, 168–177.

16. Di Angelo, L.; Di Stefano, P.; Vinciguerra, M.G. Experimental validation of a new method for symmetry line detection. Comput. Aided Des. Appl. 2011, 8, 71–86.

17. Di Angelo, L.; Di Stefano, P.; Spezzaneve, A. An iterative method to detect symmetry line falling far outside the sagittal plane. Int. J. Interact. Des. Manuf. 2012, 6, 233–240.

18. Di Angelo, L.; Di Stefano, P.; Spezzaneve, A. Symmetry line detection for non-erected postures. Int. J. Interact. Des. Manuf. 2013, 7, 271–276.

19. Di Angelo, L.; Di Stefano, P.; Spezzaneve, A. A method for 3D symmetry line detection in asymmetric postures. Comput. Methods Biomech. Biomed. Eng. 2013, 16, 1213–1220.

20. Sun, C.; Sherrah, J. 3D symmetry detection using the extended Gaussian image. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 164–168.

21. Pan, G.; Wang, Y.; Qi, Y.; Wu, Z. Finding symmetry plane of 3D face shape. In Proceedings of the International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; pp. 1143–1146.

22. Ikemitsu, H.; Zeze, R.; Yuasa, K.; Izumi, K. The relationship between jaw deformity and scoliosis. Oral Radiol. 2006, 22, 14–17.

23. Besl, P.J.; McKay, N.D. A method for registration of 3D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

24. Benz, M.; Laboureux, X.; Maier, T.; Nkenke, E.; Seeger, S.; Neukam, F.W.; Häusler, G. The symmetry of faces. In Proceedings of the Vision, Modeling, and Visualization Conference 2002 (VMV 2002), Erlangen, Germany, 20–22 November 2002.

25. Seeger, S.; Laboureux, X.; Häusler, G. An accelerated ICP algorithm. In Lehrstuhl für Optik; Annual Report; Friedrich-Alexander-Universität: Erlangen, Germany, 2001.

26. De Momi, E.; Chapuis, J.; Pappas, I.; Ferrigno, G.; Hallermann, W.; Schramm, A.; Caversaccio, M. Automatic extraction of the mid-facial plane for craniomaxillofacial surgery planning. Int. J. Oral Maxillofac. Surg. 2006, 35, 636–642.

27. Colbry, D.; Stockman, G. Canonical face depth map: A robust 3D representation for face verification. In Proceedings of the 2007 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’07), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

28. Pearson, K. On lines and planes of closest fit to systems of points in space. Phil. Mag. 1901, 2, 559–572.

29. Tang, X.M.; Chen, J.S.; Moon, Y.S. Accurate 3D face registration based on the symmetry plane analysis on nose regions. In Proceedings of the 16th European Signal Processing Conference (EUSIPCO), Lausanne, Switzerland, 25–29 August 2008.

30. Zhang, L.; Razdan, A.; Farin, G.; Bae, M.S.; Femiani, J. 3D face authentication and recognition based on bilateral symmetry analysis. Vis. Comput. 2006, 22, 43–55.

31. Combès, B.; Hennessy, R.; Waddington, J.; Roberts, N.; Prima, S. An algorithm to map asymmetries of bilateral objects in point clouds. In Proceedings of the International Symposium on Biomedical Imaging: From Nano to Macro, Paris, France, 14–17 May 2008.

32. Combès, B.; Hennessy, R.; Waddington, J.; Roberts, N.; Prima, S. Automatic symmetry plane estimation of bilateral objects in point clouds. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

33. Combès, B.; Prima, S. New algorithms to map asymmetries of 3D surfaces. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention, New York, NY, USA, 6–10 September 2008.

34. Black, M.J.; Rangarajan, A. On the unification of line processes, outlier rejection, and robust statistics. Int. J. Comput. Vis. 1996, 19, 57–91.

35. Spreeuwers, L. Fast and accurate 3D face recognition. Int. J. Comput. Vis. 2011, 93, 389–414.

36. Barone, S.; Paoli, A.; Razionale, A.V. A coded structured light system based on primary color stripe projection and monochrome imaging. Sensors 2013, 13, 13802–13819.

37. Di Angelo, L.; Di Stefano, P. Bilateral symmetry estimation of human face. Int. J. Interact. Des. Manuf. 2013, 7, 217–225.

38. Carfagni, M.; Furferi, R.; Governi, L.; Servi, M.; Uccheddu, F.; Volpe, Y. On the performance of the Intel SR300 depth camera: Metrological and critical characterization. IEEE Sens. J. 2017, 17, 4508–4519.

39. Weiss, H.R.; Elobeidi, N. Comparison of the kyphosis angle evaluated by video rasterstereography (VRS) with x-ray measurements. Stud. Health Technol. Inform. 2008, 140, 137–139.

| Authors | Description | Advantages | Limitations—Aspects to Be Improved | Performance | |

|---|---|---|---|---|---|

| CUTANEOUS MARKING BASED METHODS | Turner-Smith [12] | 3D position of landmarks manually acquired. Symmetry line evaluated as the broken line jointing the barycentre of each marker. | Early approach for determining the symmetry line based on the 3D data of the back. Good accuracy (estimated in 5 mm). | Need to manually identify the apophyses and apply markers. | Asymmetry correlated with Cobb angle with sample correlation coefficient r = [0.77 – 0.94] and p-value p < 0.0001. r values depending on the number of patients used for the experimentation |

| Sotoca et al. [13] | Spine curve obtained by: - Cutaneous markers positioned in correspondence of the vertebral spinous processes, from C7 to the lumbar vertebra L5; - Projecting the x-ray images on the topographic representation of the surface. Markers location approximated with a polynomial curve. | High correlation (r = 0.89) between this method and the X-ray based one. Good accuracy (estimated in 5 mm). | Asymmetry correlated with Cobb angle with sample correlation coefficient r = 0.89 and p-value p < 0.0001 | ||

| PARALLEL SECTIONS BASED METHODS | Drerup-Hierholzer [10] | Coordinate system associated to the subject’s back. Slicing of the back surface using parallel planes normal to the vertical axis. Position of the spine associated to the minimum value of the lateral asymmetry function. | First methodology based on symmetry properties of the horizontal sections of the subject’s back. | Results could be not compatible with biomechanical constraints. Thousands of instances to be explored to select the most promising set of points, with respect of the work proposed by Santiesteban et al. | Asymmetry correlated with Cobb angle with sample correlation coefficient r = 0.9 and p-value p < 0.0001 |

| Huysmans et al. [14] | Lateral asymmetry function integrated with, blending, curvatures, torsions and biomechanical constraints. | Compared to the work of Drerup—Hierholzer: - Avoids results that could be not compatible with biomechanical constraints; - Applicable to different postures; - Biomechanical constraints and information from previous measurements; - More robust and reliable location of the anatomical landmarks. | Evaluation of six coefficients required to adjust the procedure for analysing average individuals. | Mean r.m.s. error of 0.9 mm for the lateral deviation and 0.4° for the axial rotation when compared with the Drerup-Hierholzer method [10]. | |

| Santiesteban et al. [15] | Principal curvatures directions are used as local shape descriptors of the surface. The cutting plane is defined from the set of centroids and from profile directions. | Fewer instances required, with respect to the method of Drerup-Hierholzer. | Need to estimate and quantify asymmetries in the whole back. | Authors provides a method for estimating and modelling human spine from 3D data but no quantitative assessment is provided. | |

| Di Angelo et al. [16] | Symmetry index defined from normal unit vectors of horizontal sections orientation of the back surface. | With respect to the method proposed by Drerup-Hierholzer, lower errors in the lumbar and thoracic tracts and fewer instances required. | Estimation of symmetry line in the cervical tract is not trivial. | Mean error in mm (w.r.t. Drerup-Hierholzer [10]) for patients: - upright standing: 3.2 (lumbar), 3.5 (thoracic), 4.7 (cervical) - sitting: 2.8 (lumbar), 3.6 (thoracic), 5.2 (cervical) | |

| Final Considerations | Suitable to detect the symmetry line in case of erect postures. | Adequate to analyse postures producing spine configurations protruding outside from the sagittal plane. | |||

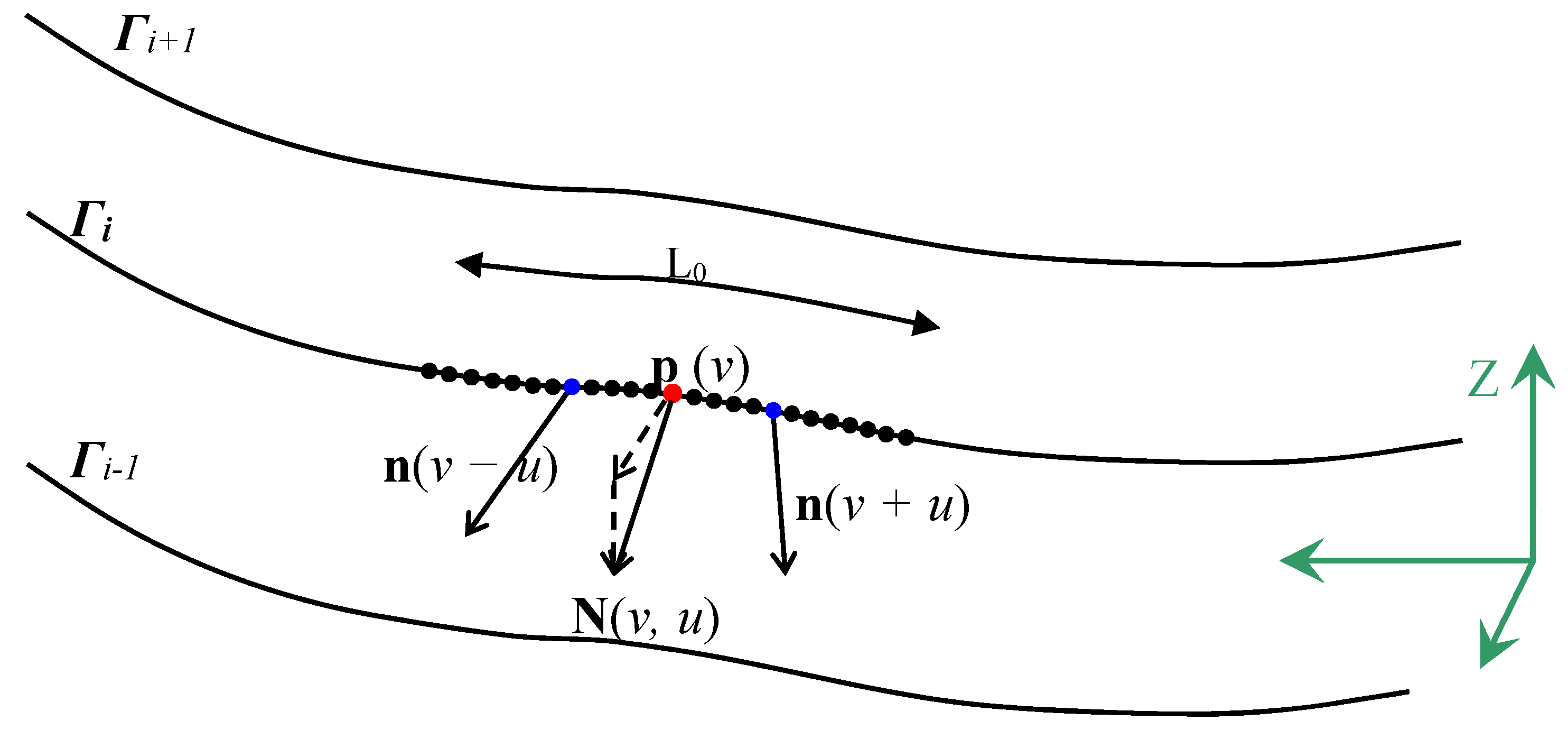

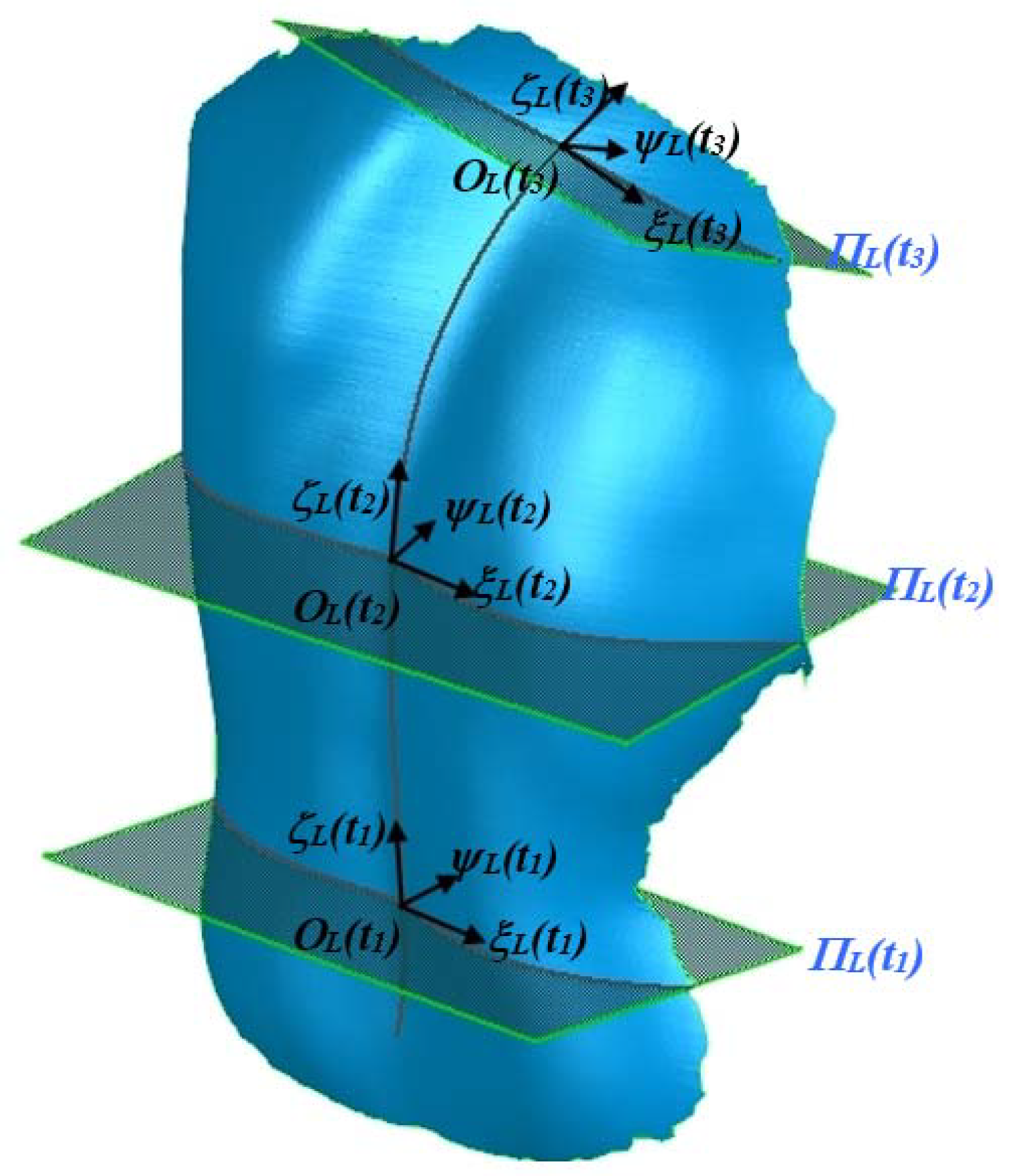

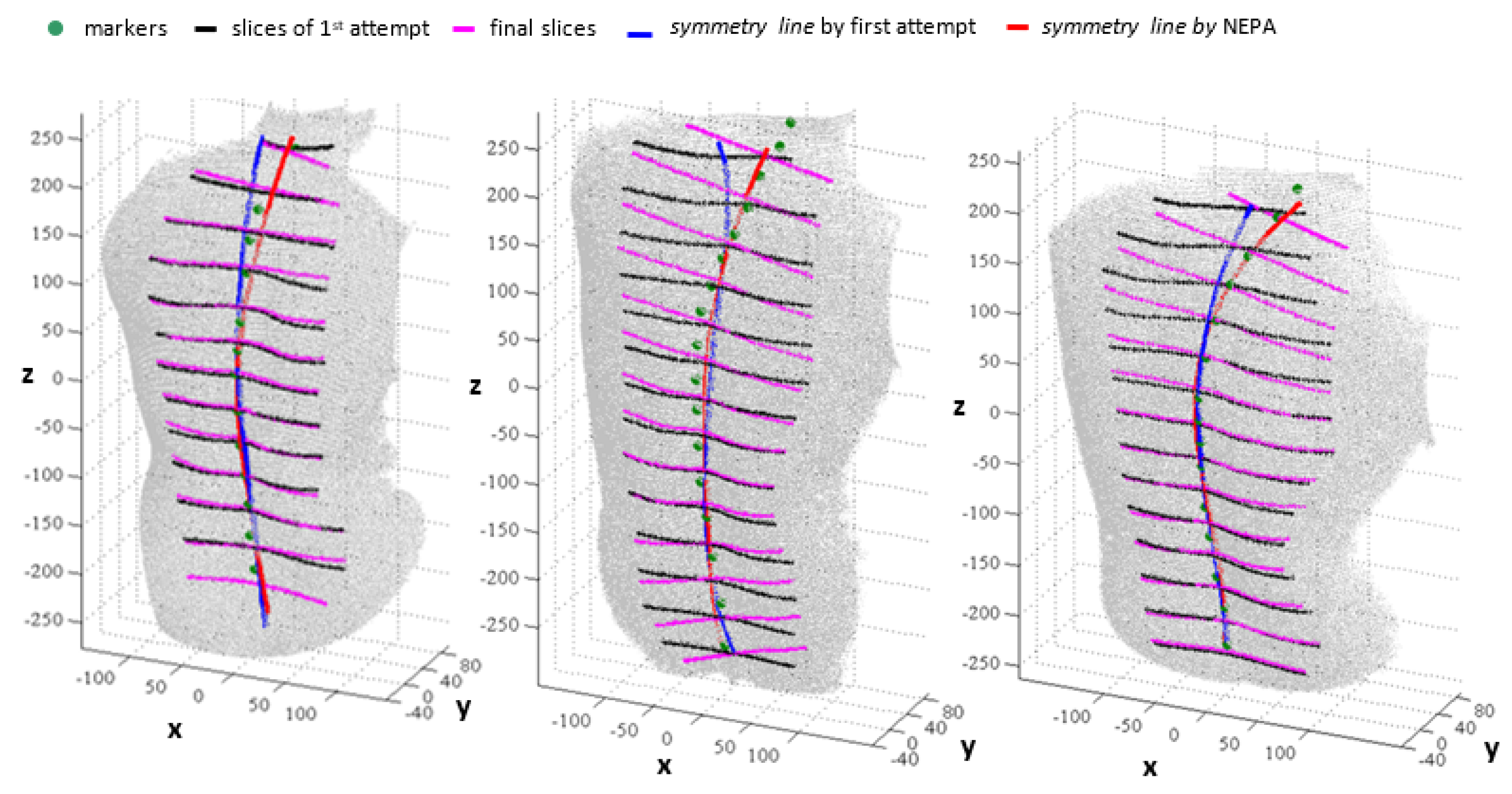

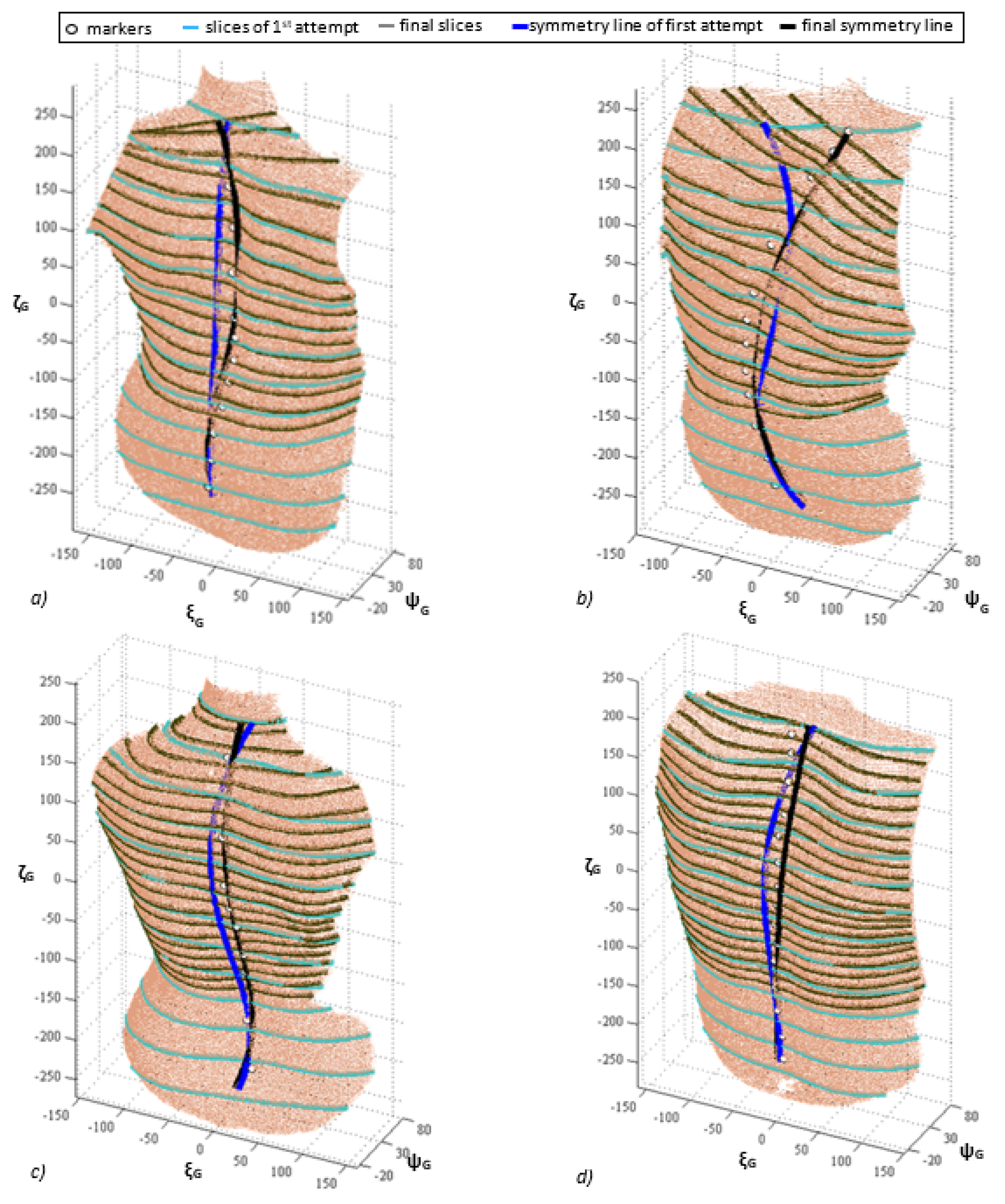

| ADAPTIVE SECTIONS BASED METHODS | Di Angelo et al. [17,18] | Starting from a first attempt symmetry line (C0), the NEPA method, iteratively, finds the set of planes Π(t) which define onto the back surface a set of profiles Γ(t) exhibiting the maximum possible symmetry according to the expression in the Equation (4). | Suitable for asymmetric postures with spine configurations lying far outside the sagittal plane. Improving the symmetry line detection of about 6–7%, with respect to the previous method of the authors. | The method finds the symmetry line under two hypothesis: (1) symmetry line spans from the “most symmetric” points of the back; (2) plane Π(t) slices the back in the “most symmetric” profiles. | Mean error reduction w.r.t. the method in [16] equal to: 1.53% (lumbar), 7.59% (thoracic) |

| Di Angelo et al. [19] | This new method analyses the profiles given by the intersection of the back surface with a set of planes Πk orthogonal to the direction identified by the previous pair of symmetry points (each point being at a given distance (“step”) from the previous and the following) | Correct evaluation of the symmetry line even for extremely asymmetric postures. | Strong influence of the body morphology of the subject, especially by those features that produce asymmetry such as gibbosities or other alterations. In those cases, the method could fail and false symmetries could be detected. | Mean error reduction w.r.t. first-attempt symmetry line equal to: 2.2% (lumbar), 21.8% (thoracic), 34.5% (cervical) | |
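The parallel sections-based idea summarised in the table (slice the back with horizontal planes, then take on each section the point where a lateral asymmetry measure is lowest) can be illustrated with a minimal sketch. It is not the implementation of any of the cited methods: the asymmetry measure below is a simplified, height-based stand-in for the lateral asymmetry function, the function names (`profile_asymmetry`, `symmetry_point`, `symmetry_line`) and the `half_width` window are hypothetical, and the data are synthetic.

```python
import numpy as np


def profile_asymmetry(x, z, x0, half_width, samples=50):
    """Mean squared mismatch between a profile and its mirror about x0."""
    offsets = np.linspace(0.0, half_width, samples)
    right = np.interp(x0 + offsets, x, z)  # heights to the right of x0
    left = np.interp(x0 - offsets, x, z)   # heights to the left of x0
    return float(np.mean((right - left) ** 2))


def symmetry_point(x, z, half_width):
    """Abscissa on one horizontal section where the asymmetry is lowest."""
    # Only test candidates whose comparison window fits inside the profile.
    inside = (x >= x[0] + half_width) & (x <= x[-1] - half_width)
    candidates = x[inside]
    scores = [profile_asymmetry(x, z, c, half_width) for c in candidates]
    return float(candidates[int(np.argmin(scores))])


def symmetry_line(x, sections, half_width):
    """One symmetry point per parallel section, ordered along the vertical axis."""
    return np.array([symmetry_point(x, z, half_width) for z in sections])


# Synthetic back (assumption): each section is a ridge whose centre drifts laterally.
x = np.arange(-100.0, 101.0)                  # lateral coordinate, mm
centres = np.array([-10.0, 0.0, 5.0, 12.0])   # hypothetical ridge centres, mm
sections = [np.exp(-((x - c) / 30.0) ** 2) for c in centres]
line = symmetry_line(x, sections, half_width=40.0)
```

On this synthetic input the recovered symmetry points coincide with the ridge centres. Real back surfaces would need a curvature-based asymmetry measure and, as the table notes for Huysmans et al. [14], additional constraints to keep the resulting line anatomically plausible.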

| Authors | Description | Advantages | Limitations—Aspects to Be Improved | Performance | |

|---|---|---|---|---|---|

| EGI | Sun-Sherrah [14] | Analysis of the recurrence histogram built from the orientation of normal unit vectors from EGI and examination of the EGI map around the principal axes of inertia. | Early EGI-based approach for determining symmetry plane of solids. Effort to reduce computational costs are made. | Not much robust when noisy data are acquired. | Reflectional symmetry evaluated in 1 min. Rotational symmetry evaluated in 1–5 min. Complex medicalimages requires 100 min. Accuracy comparable to the one obtained using the “sphere resolution” |

| Pan et al. [15] | Computation of the orientation histogram by the inverse of the Gaussian curvature. | Increasing robustness in presence of noisy data. | The proposed method should be further tested. | More than 95% of the models have good detection results. Computational time less than 1s (on a Pentium IV 2.0 GHz) | |

| Final Considerations | Results depend on the symmetry of the acquired data. | EGI-based methods are not able to analyse the object symmetry. | |||

| Mirroring and registration model | Benz et al. [24] | Mirroring and registration method applied to support surgical facial reconstruction from an aesthetical point of view. | Computation of symmetry plane using registration algorithms where the symmetry plane is retrieved even in case of asymmetric geometries. The 3D eyes position is evaluated by a provided procedure. | Need to cover a higher number of experimental tests to validate the results. | Mean deviation (mm) of the mirrored position from actual position equal to 1.3 (along x-axis), −0.75 along y-axis and −0.25 along z-axis. |

| De Momi et al. [26] | Early attempt for the estimation of the symmetry plane from manually selected areas. | Applicable to all 3D models related to any anatomical body area. | Areas manually selected. | Time required to obtain a satisfactory result (on average) equal to 10 min for each skull, including computation of the symmetry plane. Mean deviation (mm) of the mirrored position from actual position equal to 1.5 orbital, 1.4 zygomatic, 1.7 maxillary | |

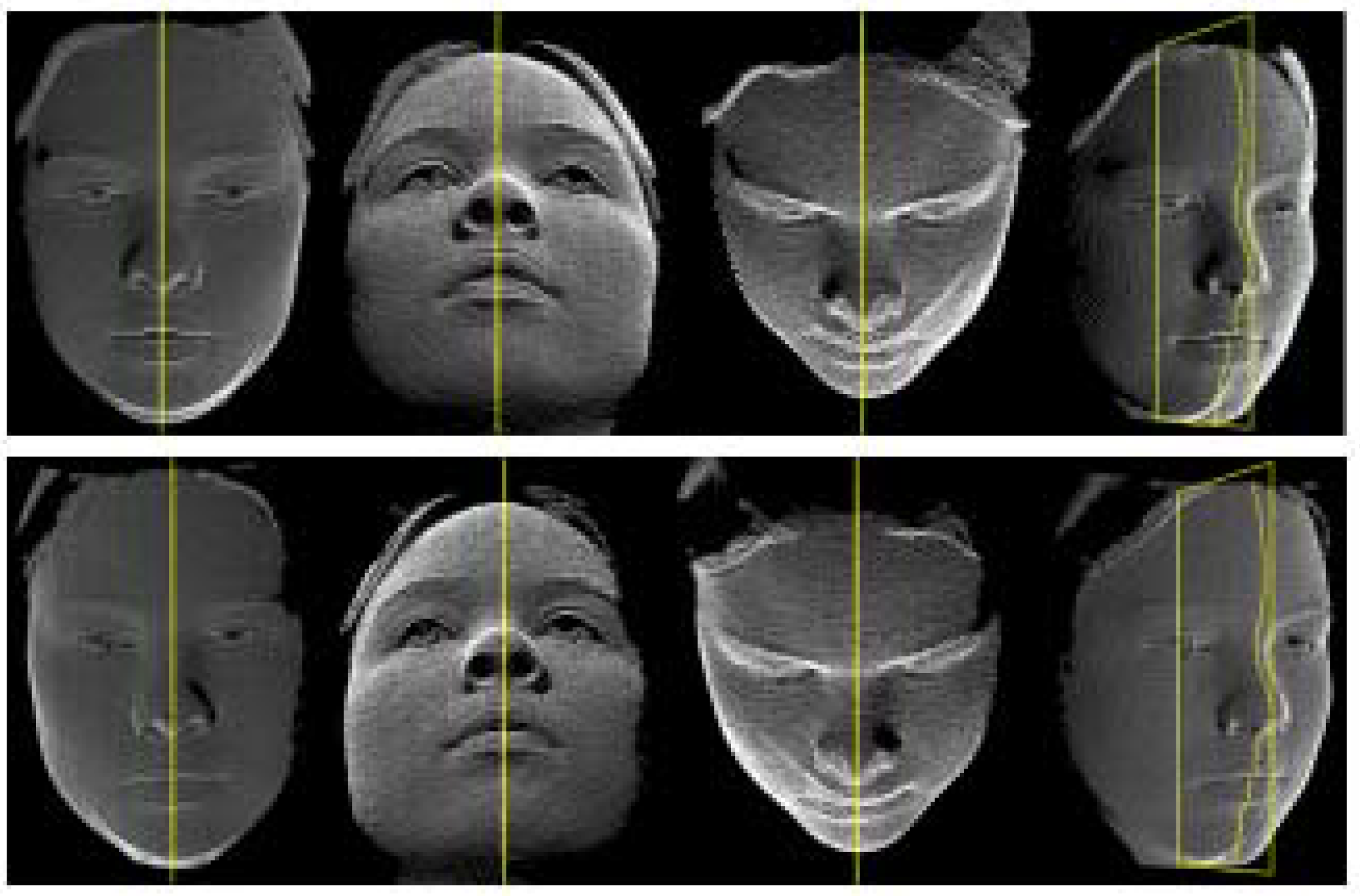

| Colbry-Stockman [27] | First-attempt symmetry plane determined by applying the PCA method. | Fully automatic method. | This approach tends to produce unreliable results when asymmetrically scanned data are used as input (Tang et al.). | Computational time equal to 4 s for 320 × 240 pixel images and to 12 s for 640 × 480 pixel images. Improvement of mid-line normalization over database roll, pitch, and yaw differences equal to, respectively, 0.01° ± 0.58° 0.01° ± 2.01° 0.00° ± 0.79° 2.90 mm ± 7.81 mm | |

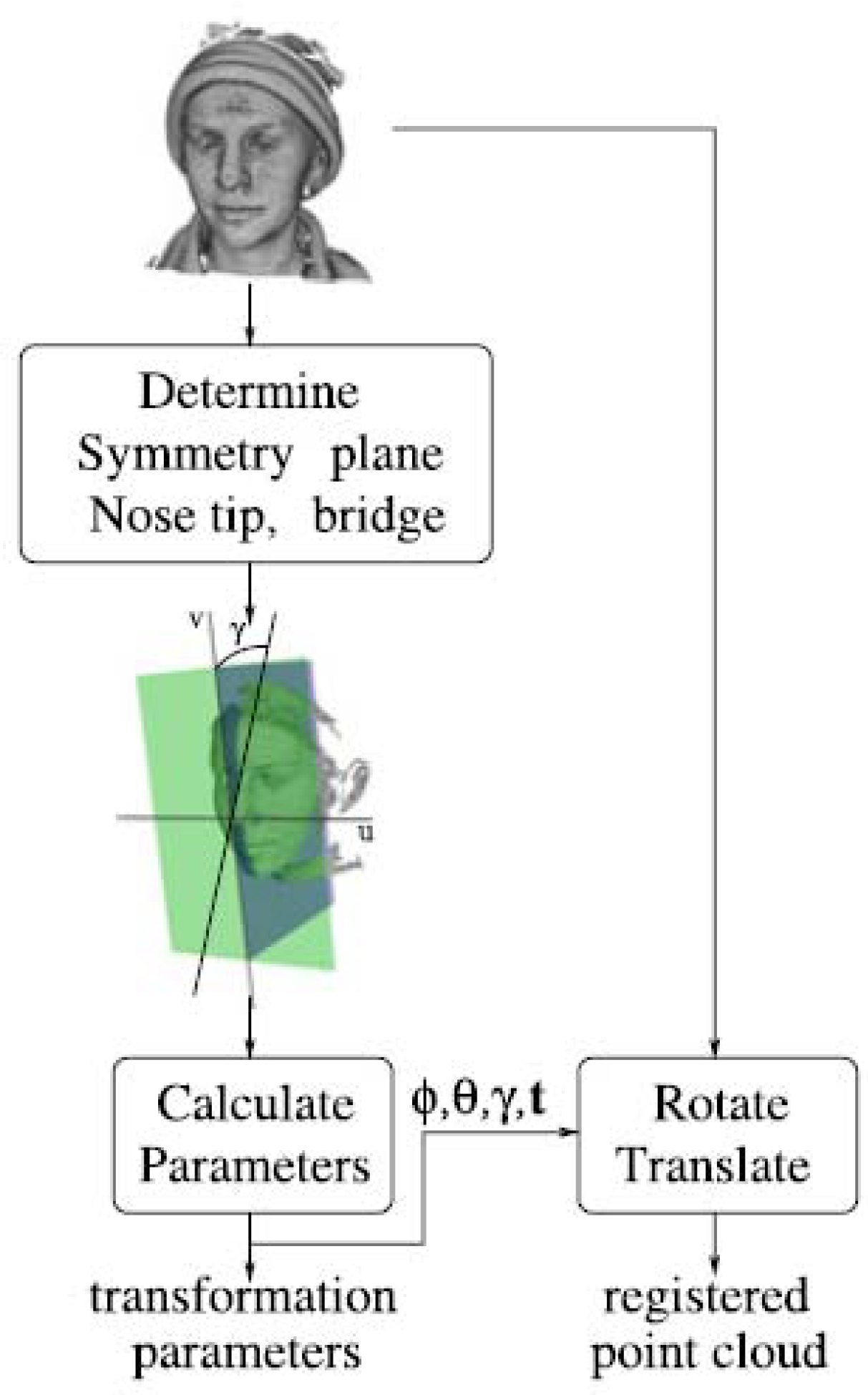

| Tang et al. [29] | - Initially guessed symmetry plane passing through the centroid; - Registration performed by analyzing a symmetric rectangular region selected around the nose. | ICP registration insensitive to asymmetrical data. | - It allows a correct estimation of the symmetry plane only in case the actual plane is aligned with the yz—plane of the 3D scanner used for acquisition; - Valid approach only in the case of undistorted noses - rise in terms of computational costs due to the ICP algorithm. | When compared with the FSP method, the improvements scores from 7.1% to 5.5% for the symmetry curve and from 12.0% to 8.9% for the cheek curve | |

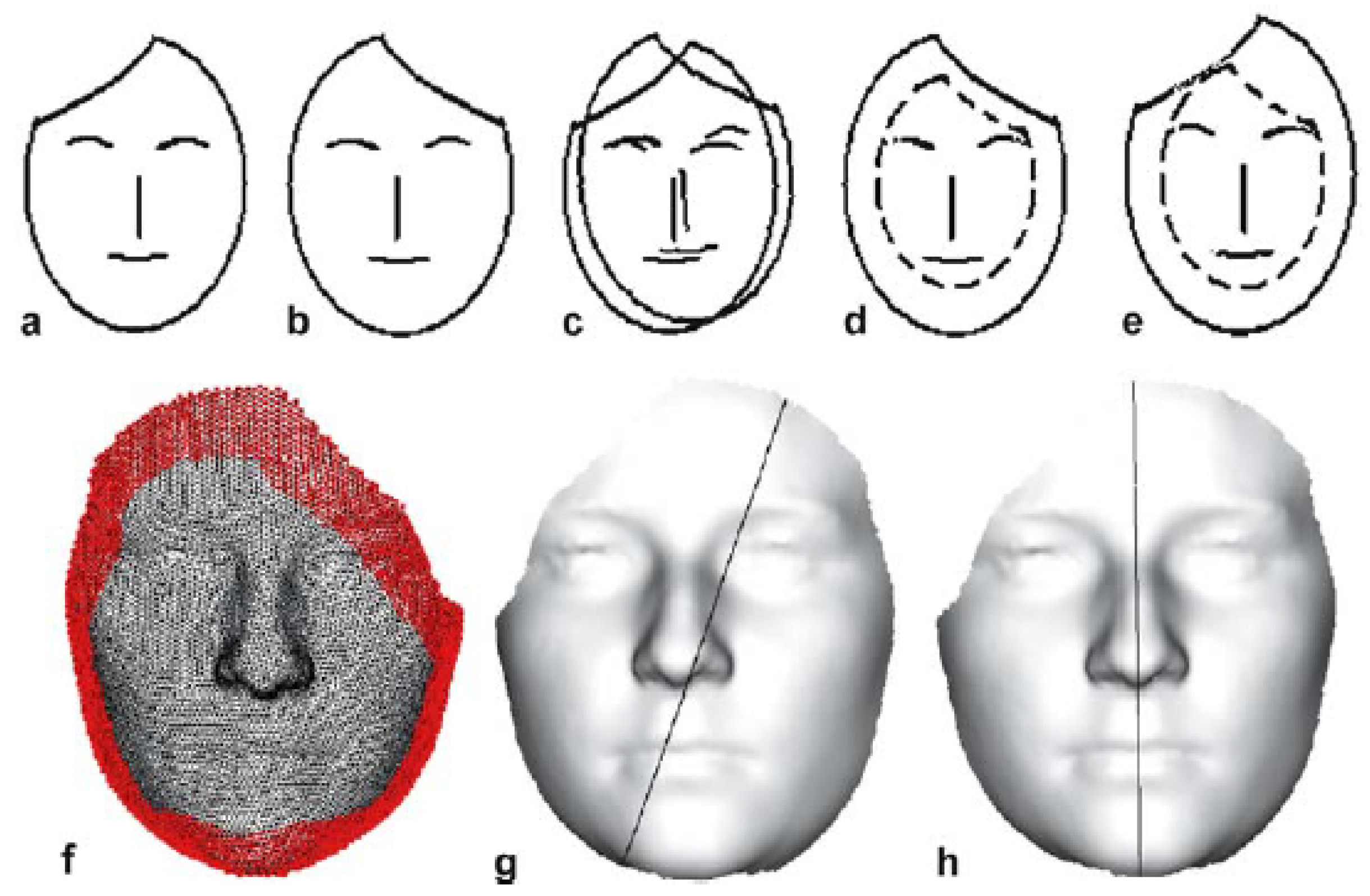

| Zhang et al. [30] | MarkSkirt operator is used to assess the registration on the whole point clouds with the exception of the points belonging to the 10–ring of the boundary. | ICP algorithm does not fail in case irregularities in the face boundary are present. | - Texture information are used as an additional clue during face comparison tasks; - expanded database, managed in a more efficient way; - use of the MarkSkirt operator in the recognition process. | 2.8 s to obtain the symmetry profile representation from a facial mesh (1GHz Pentium IV). 10.8% equal error rate and 87.5% rank-one recognition rate. False results mainly caused by extreme expression | |

| Combès et al. [31,32,33] | Estimation of the symmetry plane without the need of intermediate pre-processing operations such as roto–translation and registration. | Solves the problem of non-symmetrical sampling of the face. | Highly sensitive to non uniform sapling. | Angular and linear errors of the estimated symmetry plane compared to the ground truth solution less than 10−2 deg. | |

| Spreeuwers [35] | Not based on ICP algorithm. Symmetry plane estimated by varying a set of parameters in a given range. | Computationally more efficient than the ICP. | The registration method could be further improved and more advanced approaches to select the best configuration of regions classifiers and to evaluate appropriate weights for the voting process could be applied. | Performance of proposed approach w.r.t. Tang et al. [29] shows equal error rates equal to 0.7% against 7.1% (6.1% using manual procedure). Computational time is equal to 2.5. | |

| Di Angelo et al. [37] | First-attempt estimation of the symmetry plane performed by PCA. | Not sensitive to data asymmetry resulting from the scanning process. | Computationally more onerous of the previous ones. | Computational time equal to 11.2 s (average) against 245 s (average) evaluated in Pan et. Al. [21]. Robustness for reproducibility w.r.t. Pan et al. [21] equal to 1.86° (mean value). | |

| Final Considerations | More recent contributions are insensitive to both asymmetrically 3D data and non-uniformity in terms of point cloud density. | Exception made for contributions. The others are sensitive to asymmetries. | |||
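Several methods in the table start from a PCA-based first-attempt symmetry plane and then judge it by mirroring the data. The sketch below illustrates only that first step, under stated assumptions: it picks the principal axis whose mirror plane through the centroid leaves the smallest nearest-neighbour residual. It is not the procedure of any cited paper, it performs no ICP registration, and the names `reflect`, `mirror_residual` and `first_attempt_plane`, as well as the synthetic cloud, are hypothetical.

```python
import numpy as np


def reflect(points, normal, origin):
    """Mirror points about the plane through `origin` with unit `normal`."""
    signed_dist = (points - origin) @ normal
    return points - 2.0 * signed_dist[:, None] * normal


def mirror_residual(points, normal, origin):
    """Mean distance from each mirrored point to its nearest original point."""
    mirrored = reflect(points, normal, origin)
    # Brute-force nearest neighbours: O(N^2) memory, fine for a small sketch.
    dists = np.linalg.norm(mirrored[:, None, :] - points[None, :, :], axis=2)
    return float(dists.min(axis=1).mean())


def first_attempt_plane(points):
    """Pick the principal axis whose mirror plane leaves the smallest residual."""
    origin = points.mean(axis=0)
    # Rows of vt are the principal axes of the centred cloud.
    _, _, vt = np.linalg.svd(points - origin, full_matrices=False)
    residuals = [mirror_residual(points, axis, origin) for axis in vt]
    best = int(np.argmin(residuals))
    return vt[best], origin, residuals[best]


# Synthetic bilateral cloud (assumption): half the points plus their mirror
# images about x = 0, then moved to an arbitrary pose.
rng = np.random.default_rng(0)
half = rng.normal(size=(150, 3)) * np.array([1.0, 3.0, 2.0])
half[:, 0] = np.abs(half[:, 0]) + 0.1
cloud = np.vstack([half, half * np.array([-1.0, 1.0, 1.0])])
angle = 0.4  # rotation about the z-axis, radians
rot = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                [np.sin(angle), np.cos(angle), 0.0],
                [0.0, 0.0, 1.0]])
shift = np.array([5.0, -2.0, 1.0])
cloud = cloud @ rot.T + shift
true_normal = rot @ np.array([1.0, 0.0, 0.0])

normal, origin, residual = first_attempt_plane(cloud)
```

For a perfectly symmetric, symmetrically sampled cloud like this one, the PCA axis already matches the true plane normal up to sign. As the table notes for Colbry-Stockman [27], asymmetrically scanned data bias this estimate, which is why the later methods refine it by registration. For high point density models a spatial index such as a k-d tree would replace the brute-force neighbour search.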

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bartalucci, C.; Furferi, R.; Governi, L.; Volpe, Y. A Survey of Methods for Symmetry Detection on 3D High Point Density Models in Biomedicine. Symmetry 2018, 10, 263. https://doi.org/10.3390/sym10070263
