1. Introduction

Protection of publicly released microdata from individual identification is a primary societal concern. Therefore, statistical disclosure control (SDC) is often applied to microdata before releasing the data publicly [1,2]. Microaggregation is an SDC method that functions by partitioning a dataset into groups of at least k records each and replacing the records in each group with the centroid of the group. The resulting dataset satisfies the “k-anonymity constraint,” thus protecting data privacy [3]. However, replacing a record with its group centroid results in information loss, and the amount of information loss is commonly used to evaluate the effectiveness of a microaggregation method.

A constrained clustering problem underlies microaggregation, in which the objective is to minimize information loss and the constraint is to restrict the size of each group of records to not fewer than k. This problem can be solved in polynomial time for univariate data [4]; however, it has been proved NP-hard (non-deterministic polynomial-time hard) for multivariate data [5]. Therefore, most existing approaches for multivariate data are heuristic based and derive a solution within a reasonable timeframe; consequently, no single microaggregation method outperforms other methods for all datasets and k values.

Numerous microaggregation methods have been proposed, e.g., the Maximum Distance to Average Vector (MDAV) [6], Diameter-Based Fixed-Size (DBFS) [7], Centroid-Based Fixed-Size (CBFS) [7], Two Fixed Reference Points (TFRP) [8], Multivariate Hansen–Mukherjee (MHM) [9], Density-Based Algorithm [10], Successive Group Minimization Selection (GSMS) [11], and Fast Data-oriented Microaggregation [12]. For a given dataset and an integer k, each of these methods generates a solution that satisfies the k-anonymity constraint while attempting to minimize the information loss. A few recent studies have focused on refining the solutions generated using existing microaggregation methods [13,14,15,16]. The most widely used method for refining a microaggregation solution is to determine, for each group of records in the solution, whether decomposing the group by adding its records to other groups can reduce the information loss of the solution. This method, referred to as TFRP2 in this paper, was originally used in the second phase of the TFRP method [8] and has subsequently been adopted by many microaggregation approaches [10,11].

Because the above microaggregation approaches are based on simple heuristics and do not always yield satisfactory solutions, there is room to improve the results of these existing approaches. Our aim here is to develop an algorithm for refining the results of the existing approaches. The developed algorithm should help the existing approaches to reduce the information loss further.

The remainder of this paper is organized as follows. Section 2 defines the microaggregation problem. Section 3 reviews relevant studies on microaggregation approaches. Section 4 presents the proposed algorithm for refining a microaggregation solution. The experimental results are discussed in Section 5. Finally, conclusions are presented in Section 6.

2. Microaggregation Problem

Consider a dataset D of n points (records), $x_i$, $i \in \{1, \dots, n\}$, in the d-dimensional space. For a given positive integer k ≤ n, the microaggregation problem is to derive a partition P of D, such that |p| ≥ k for each group p ∈ P and SSE(P) is minimized. Here, SSE(P) denotes the sum of the within-group squared error of all groups in P and is calculated as follows:

$$SSE(P) = \sum_{p \in P} \sum_{x_i \in p} \lVert x_i - \bar{x}_p \rVert^2,$$

where $\bar{x}_p$ denotes the centroid of group p.

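The information loss incurred by the partition P is denoted as IL(P) and is calculated as follows:

$$IL(P) = \frac{SSE(P)}{SST(D)}, \qquad SST(D) = \sum_{i=1}^{n} \lVert x_i - \bar{x} \rVert^2,$$

where SST(D) is the total sum of squared error of the dataset D and $\bar{x}$ denotes the centroid of D.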

Because SST(D) is fixed for a given dataset D, regardless of how D is partitioned, minimizing SSE(P) is equivalent to minimizing IL(P). Furthermore, if a group contains 2k or more points, it can be split into two or more groups, each with k or more points, to reduce information loss. Thus, in an optimal partition, each group contains at most 2k − 1 points [17].
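The quantities defined above can be computed directly from a partition. The following is a minimal sketch in plain Python, assuming that records are tuples of floats, that a partition is a list of groups, and that IL(P) is the ratio SSE(P)/SST(D); the helper names (`centroid`, `sq_dist`, `sse`, `sst`, `information_loss`) are illustrative and do not come from the paper.

```python
from typing import List, Sequence

Point = Sequence[float]


def centroid(points: List[Point]) -> List[float]:
    """Component-wise mean of a non-empty list of points."""
    dim = len(points[0])
    return [sum(p[j] for p in points) / len(points) for j in range(dim)]


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sse(partition: List[List[Point]]) -> float:
    """Sum of within-group squared errors over all groups."""
    total = 0.0
    for group in partition:
        c = centroid(group)
        total += sum(sq_dist(x, c) for x in group)
    return total


def sst(dataset: List[Point]) -> float:
    """Total squared error of the whole dataset around its centroid."""
    c = centroid(dataset)
    return sum(sq_dist(x, c) for x in dataset)


def information_loss(partition: List[List[Point]]) -> float:
    """IL(P) = SSE(P) / SST(D), assuming D is the union of the groups."""
    dataset = [x for group in partition for x in group]
    return sse(partition) / sst(dataset)


# Toy partition with k = 2: two tight groups that are far apart.
partition = [[(0.0, 0.0), (0.0, 2.0)], [(10.0, 0.0), (10.0, 2.0)]]
il = information_loss(partition)
```

In this toy case each group contributes a squared error of 2, while every record lies at squared distance 26 from the overall centroid, so the ratio is 4/104.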

This study proposed an algorithm for refining the solutions generated using the existing microaggregation methods. The algorithm reduces the information loss of a solution by either decomposing or shrinking a group in the solution. Experimental results obtained using the standard benchmark datasets show that the proposed algorithm effectively improves the solutions generated using state-of-the-art microaggregation approaches.

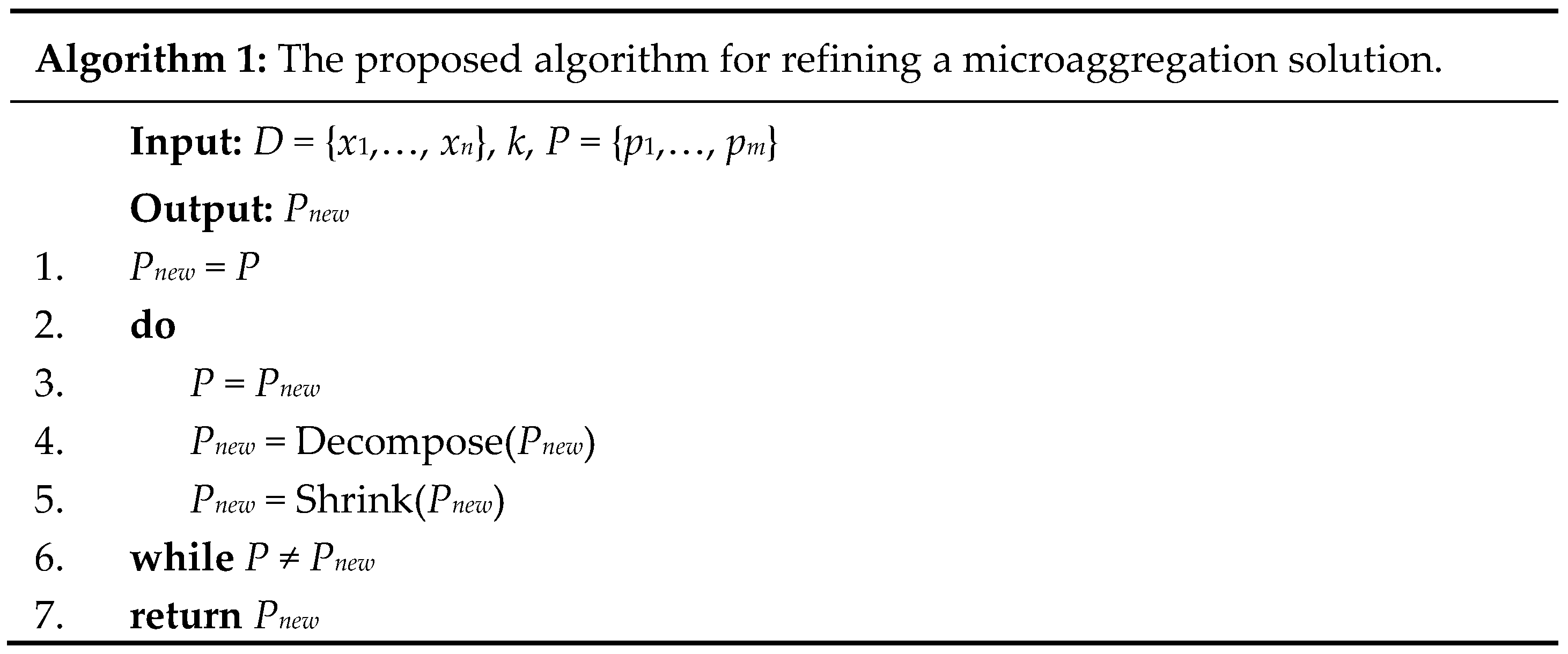

4. Proposed Algorithm

Figure 1 shows the pseudocode of the proposed algorithm for refining a microaggregation solution. The input to the algorithm is a partition P of a dataset D generated using a fixed-size microaggregation approach, such as CBFS, MDAV, TFRP, or GSMS. Because a fixed-size microaggregation approach repeatedly generates groups of size k, it maximizes the number of groups in its solution P. By using this property, our proposed algorithm focuses only on reducing the number of groups rather than randomly increasing and decreasing the number of groups, as in Ref. [15].

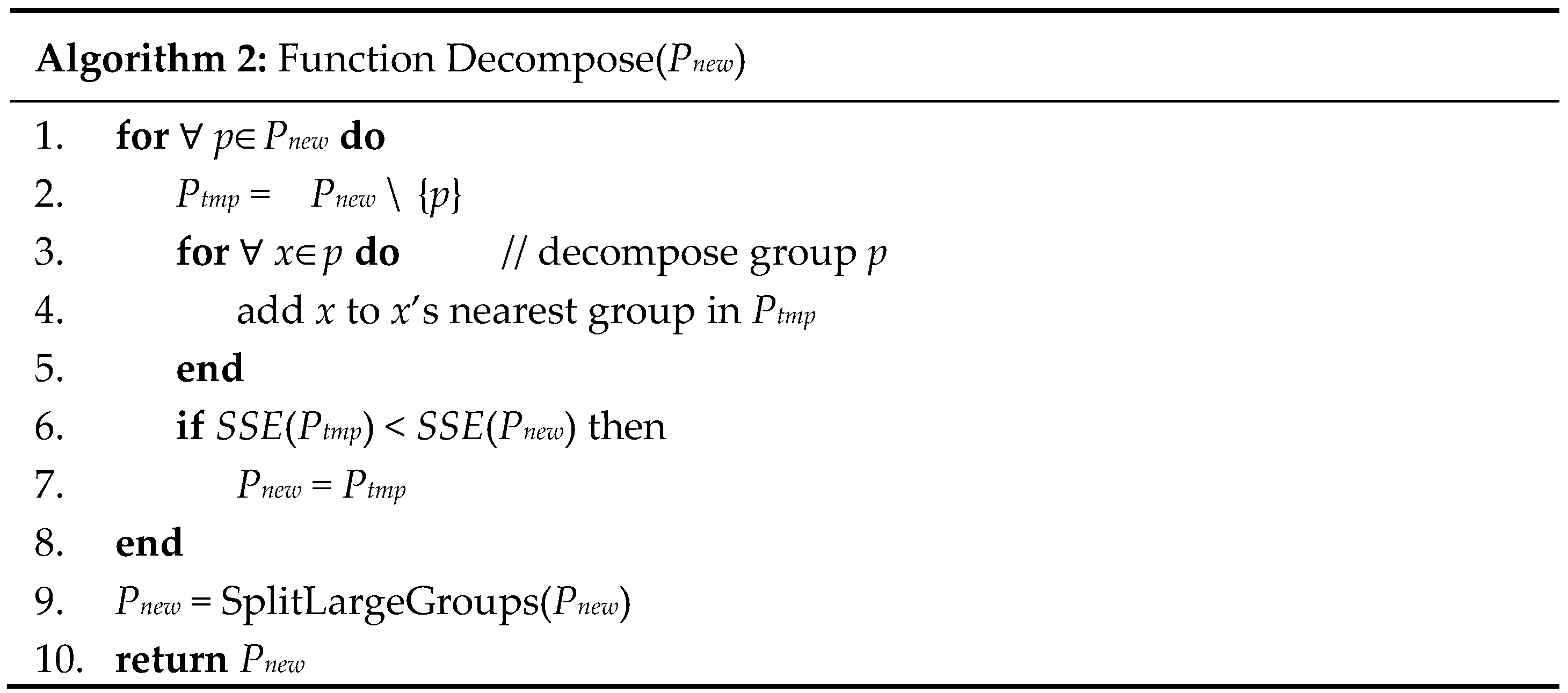

The proposed algorithm repeats two basic operations until neither operation can yield a new and enhanced partition. The first operation, Decompose (line 4; Figure 1), fully decomposes a group into other groups if the resulting partition reduces the SSE (Figure 2). This operation is similar to the TFRP2 method described in Section 1. For each group p, this operation checks whether moving each record in p to its nearest group reduces the SSE of the solution.
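The Decompose check described above can be sketched as follows. This is a simplified illustration rather than a transcription of Figure 2: it assumes that the "nearest group" is the one with the nearest centroid, that centroids are held fixed while the records of one group are reassigned, and that a decomposition is accepted only if the total SSE strictly decreases. The later splitting of over-sized groups is left out, and all function names are hypothetical.

```python
from typing import List, Sequence

Point = Sequence[float]


def centroid(points: List[Point]) -> List[float]:
    dim = len(points[0])
    return [sum(p[j] for p in points) / len(points) for j in range(dim)]


def sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sse(partition: List[List[Point]]) -> float:
    total = 0.0
    for group in partition:
        c = centroid(group)
        total += sum(sq_dist(x, c) for x in group)
    return total


def decompose(partition: List[List[Point]]) -> List[List[Point]]:
    """Dissolve a group into its neighbours whenever that lowers the SSE."""
    groups = [list(g) for g in partition]
    i = 0
    while i < len(groups) and len(groups) > 1:
        others = groups[:i] + groups[i + 1:]
        cents = [centroid(g) for g in others]  # frozen during reassignment
        trial = [list(g) for g in others]
        for x in groups[i]:
            # Assumption: nearest group = group with the nearest centroid.
            j = min(range(len(others)), key=lambda t: sq_dist(x, cents[t]))
            trial[j].append(x)
        if sse(trial) < sse(groups):
            groups = trial  # group i is gone; index i now names the next group
        else:
            i += 1
    return groups


# Toy 1-D example with k = 2: the middle group straddles its two neighbours.
before = [[(0.0,), (1.0,)], [(1.2,), (8.8,)], [(9.0,), (10.0,)]]
after = decompose(before)
```

Here the middle group is dissolved, leaving two groups of three records each. In the full algorithm a call to SplitLargeGroups would follow, because merged groups may reach 2k or more records.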

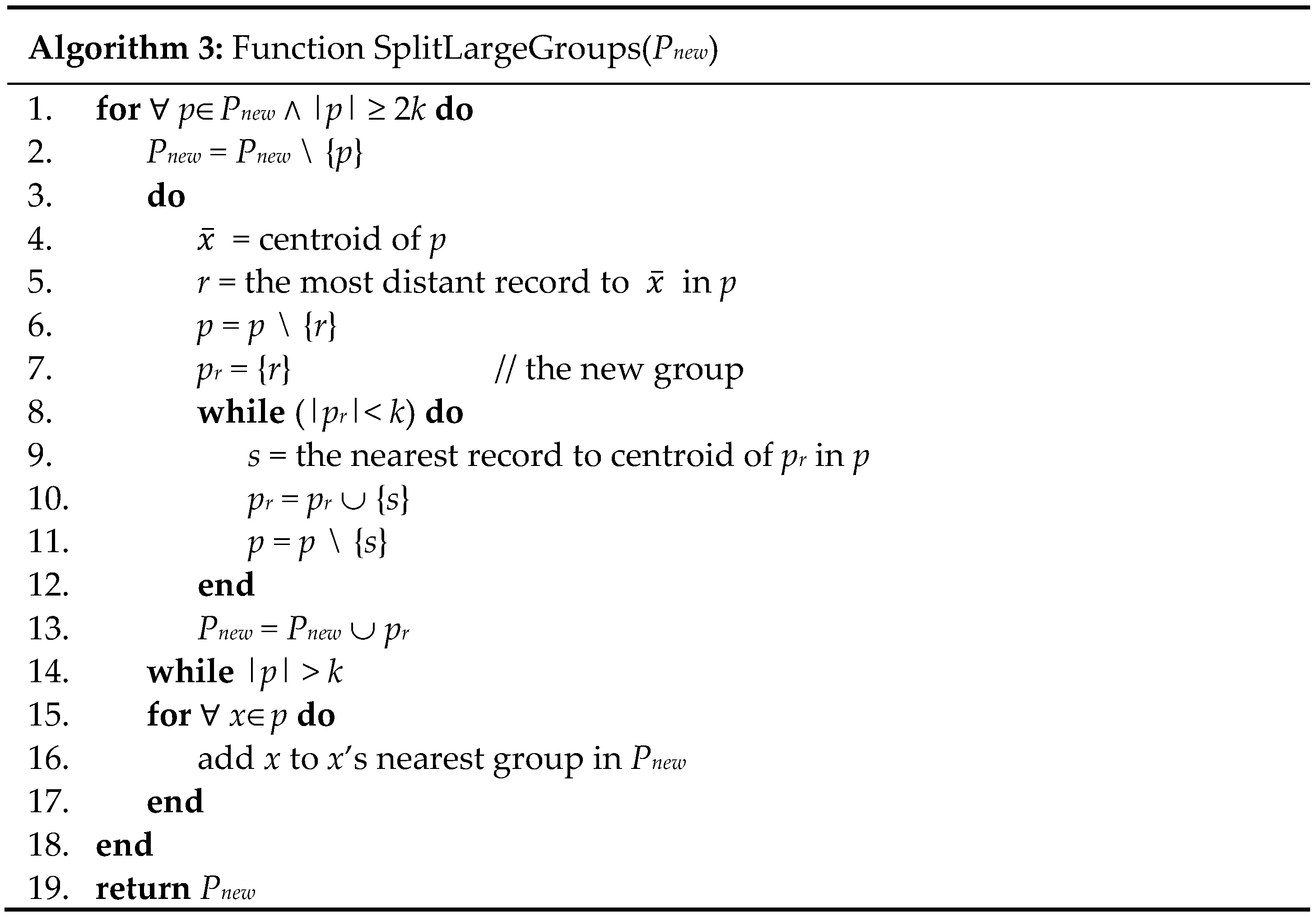

Because the Decompose operation could result in groups with 2k or more records, at its completion (line 9; Figure 2), this operation calls the SplitLargeGroups function to split any group with 2k or more records into several new groups such that the number of records in each new group is between k and 2k − 1. The SplitLargeGroups function (Figure 3) follows the CBFS method [7]. For any group p with 2k or more records, this function finds the record r ∈ p most distant from the centroid of p and forms a new group $p_r$ = {r} (lines 4–7; Figure 3). It then repeatedly adds to $p_r$ the record in p nearest to the centroid of $p_r$ until |$p_r$| = k (lines 8–12; Figure 3). This process is repeated to generate new groups until |p| ≤ k (lines 3–14; Figure 3). The remaining records in p are added to their nearest groups (lines 15–17; Figure 3).
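The splitting procedure can be sketched for a single over-sized group as follows. This is an illustrative reading of the description, not the pseudocode of Figure 3, and it rests on several assumptions: the centroid of p is recomputed from the records still in p, leftover records are attached only to the groups newly created from p (by nearest centroid), and exactly k leftover records are kept as a group of their own. The name `split_large_group` is hypothetical.

```python
from typing import List, Sequence

Point = Sequence[float]


def centroid(points: List[Point]) -> List[float]:
    dim = len(points[0])
    return [sum(p[j] for p in points) / len(points) for j in range(dim)]


def sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split_large_group(group: List[Point], k: int) -> List[List[Point]]:
    """Split a group of 2k or more records into groups of k to 2k - 1 records."""
    if len(group) < 2 * k:
        return [list(group)]  # nothing to split
    rest = list(group)
    new_groups: List[List[Point]] = []
    while len(rest) > k:
        # Seed a new group with the record farthest from the centroid of p.
        c = centroid(rest)
        seed = max(rest, key=lambda x: sq_dist(x, c))
        rest.remove(seed)
        p_r = [seed]
        # Grow it with the record nearest to its own centroid until |p_r| = k.
        while len(p_r) < k:
            c_r = centroid(p_r)
            nearest = min(rest, key=lambda x: sq_dist(x, c_r))
            rest.remove(nearest)
            p_r.append(nearest)
        new_groups.append(p_r)
    if len(rest) == k:
        new_groups.append(rest)  # assumption: k leftovers form their own group
    else:
        cents = [centroid(g) for g in new_groups]
        for x in rest:  # fewer than k leftovers join their nearest new group
            j = min(range(len(new_groups)), key=lambda t: sq_dist(x, cents[t]))
            new_groups[j].append(x)
    return new_groups


# Toy 1-D example with k = 3: seven records split into groups of 3 and 4.
big = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,), (12.0,), (13.0,)]
parts = split_large_group(big, 3)
```

Because every new group starts with exactly k records and fewer than k leftovers are distributed among them, each resulting group has between k and 2k − 1 records.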

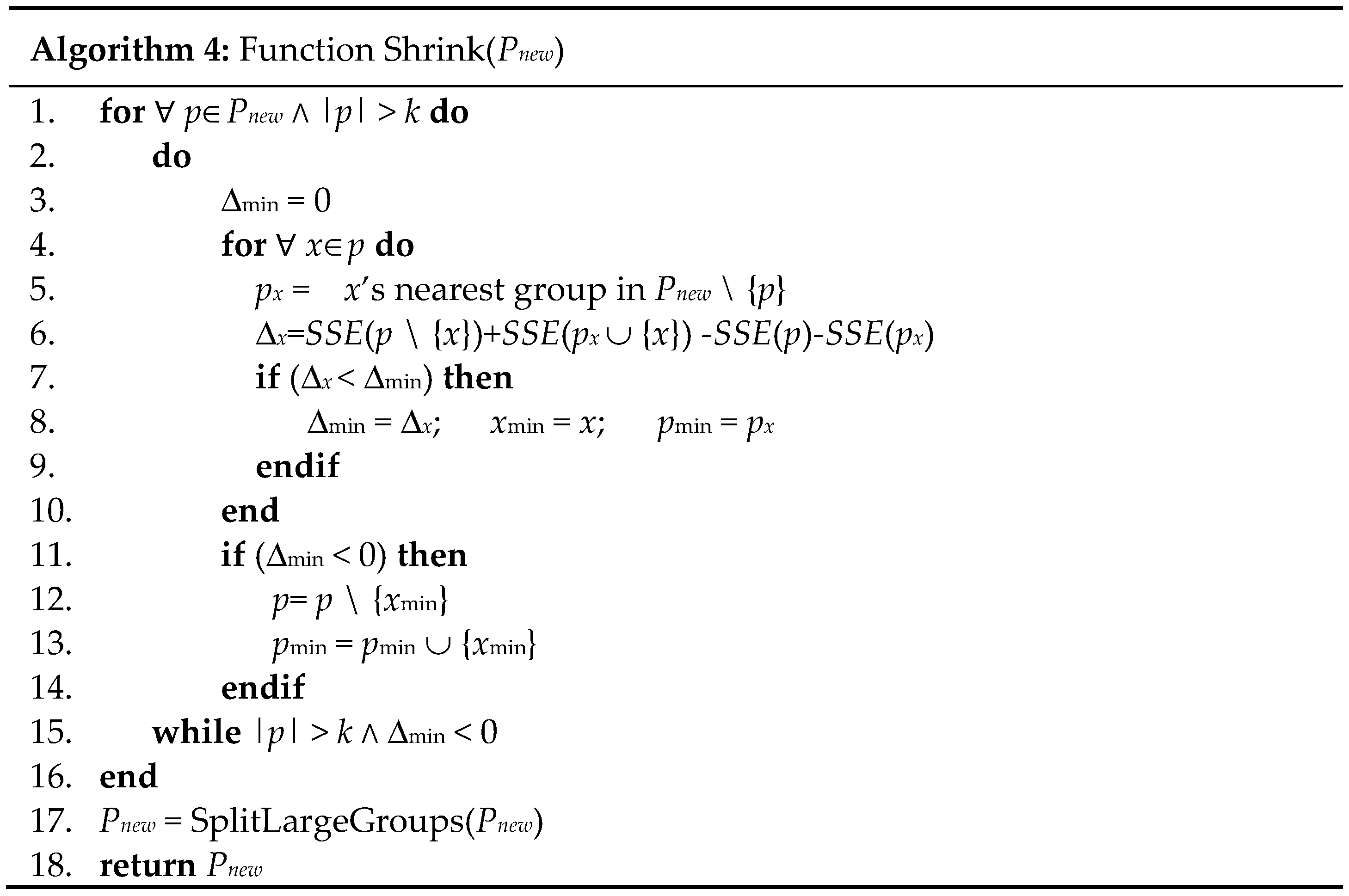

The second operation, Shrink (line 5; Figure 1), shrinks any group with more than k records (Figure 4). For any group p with more than k records, this operation searches for and moves the record $x_{min}$ ∈ p such that moving $x_{min}$ to another group reduces the SSE the most (lines 3–14; Figure 4). This process is repeated until p has only k records remaining or no further move reduces the SSE (lines 2–15; Figure 4). Similar to the Decompose operation, the Shrink operation can result in groups with 2k or more records; therefore, it calls the SplitLargeGroups function to split these over-sized groups (line 17; Figure 4).
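The Shrink step can be sketched in the same style. The version below is a brute-force illustration under stated assumptions, not the pseudocode of Figure 4: the change in SSE is evaluated by recomputing the squared error of the two affected groups, every other group is considered as a possible destination, and the final call to SplitLargeGroups is omitted. The names `group_sse` and `shrink` are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Sequence[float]


def centroid(points: List[Point]) -> List[float]:
    dim = len(points[0])
    return [sum(p[j] for p in points) / len(points) for j in range(dim)]


def group_sse(group: List[Point]) -> float:
    """Squared error of one group around its own centroid."""
    c = centroid(group)
    return sum(sum((a - b) ** 2 for a, b in zip(x, c)) for x in group)


def shrink(partition: List[List[Point]], k: int) -> List[List[Point]]:
    """Move records out of groups larger than k while that lowers the SSE."""
    groups = [list(g) for g in partition]
    for i in range(len(groups)):
        while len(groups[i]) > k:
            best: Optional[Tuple[float, int, int]] = None  # (delta, record, target)
            for a, x in enumerate(groups[i]):
                src_after = groups[i][:a] + groups[i][a + 1:]
                for j in range(len(groups)):
                    if j == i:
                        continue
                    # Change in total SSE if x moves from group i to group j.
                    delta = (group_sse(src_after) + group_sse(groups[j] + [x])
                             - group_sse(groups[i]) - group_sse(groups[j]))
                    if delta < 0 and (best is None or delta < best[0]):
                        best = (delta, a, j)
            if best is None:
                break  # no single move out of this group reduces the SSE
            _, a, j = best
            groups[j].append(groups[i].pop(a))
    return groups


# Toy 1-D example with k = 3: the record 3.0 sits in the wrong group.
before = [[(0.0,), (1.0,), (2.0,)], [(3.0,), (10.0,), (11.0,), (12.0,)]]
after = shrink(before, 3)
```

In this example the record 3.0 is moved to the first group, which lowers the total SSE from 52 to 7, and the second group then stops shrinking because it has reached k records.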

Notably, at most $\lfloor n/k \rfloor$ groups are present in a solution. Because lines 1–8 in Figure 2 and lines 1–16 in Figure 4 require searching for the nearest group of each record, their time complexity is $O(n^2/k)$. The time complexity of the SplitLargeGroups function is $O(k^2 \times n/k)$. Thus, an iteration of the Decompose and Shrink operations (lines 3–5; Figure 1) entails a computation cost of $O(n^2/k + k^2 \times n/k) = O(n^2)$ time.
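The final simplification follows from the constraint 1 ≤ k ≤ n stated in Section 2; the short derivation below spells out this step and adds no assumptions beyond that constraint:

$$\frac{n^2}{k} + k^2 \cdot \frac{n}{k} = \frac{n^2}{k} + kn \le n^2 + n^2 = 2n^2 = O(n^2),$$

because $n^2/k \le n^2$ when $k \ge 1$ and $kn \le n^2$ when $k \le n$.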

The proposed algorithm differs from previous work in two respects. First, the Shrink operation explores more opportunities for reducing the SSE. Figure 5a gives an example. The upper part of Figure 5a shows the partition of 12 records (represented by small circles) generated by MDAV for k = 3. First, the Decompose operation decomposes group $p_3$ and merges its content into groups $p_2$ and $p_4$, as shown in the middle part of Figure 5a. At this point, the Decompose operation cannot further reduce the SSE of the partition. However, the Shrink operation can reduce the SSE by moving a record from group $p_2$ to group $p_1$, as shown in the bottom part of Figure 5a.

Second, previous work performs the Decompose operation only once and ignores the fact that, after the Decompose operation, the grouping of records may have changed and, consequently, new opportunities for reducing the SSE may appear [8,10,11]. Thus, the proposed algorithm repeatedly performs both the Decompose and Shrink operations to explore such possibilities until it cannot improve the SSE any further. Figure 5b gives an example. The upper part of Figure 5b shows the partition of 13 records generated by MDAV for k = 3. At first, the Decompose operation can only reduce the SSE by decomposing group $p_3$ and merging its content into groups $p_2$ and $p_4$. Because group $p_2$ now has 2k or more records, it is split into two groups, $p_{21}$ and $p_{22}$, as shown in the middle part of Figure 5b. The emergence of group $p_{21}$ provides an opportunity to further reduce the SSE by decomposing group $p_{21}$ and merging its content into groups $p_1$ and $p_{22}$, as shown in the bottom part of Figure 5b.
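The repeat-until-no-improvement structure that distinguishes the proposed algorithm from a single Decompose pass can be sketched generically. The function below is an assumption-laden illustration, not the pseudocode of Figure 1: `operations` stands in for the Decompose and Shrink operations, `cost` stands in for the SSE, and a candidate is accepted only when it strictly lowers the cost. The toy operations on integers exist only to make the sketch runnable.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def refine(solution: T,
           operations: List[Callable[[T], T]],
           cost: Callable[[T], float]) -> T:
    """Apply the operations in turn until a full round yields no improvement."""
    improved = True
    while improved:
        improved = False
        for op in operations:
            candidate = op(solution)
            if cost(candidate) < cost(solution):
                solution = candidate
                improved = True  # a change may open new opportunities
    return solution


# Toy stand-ins (hypothetical): two operations that lower a numeric "cost".
def big_step(v: int) -> int:
    return v - 3 if v >= 3 else v


def small_step(v: int) -> int:
    return v - 1 if v >= 1 else v


result = refine(10, [big_step, small_step], cost=float)
```

Because each accepted step strictly lowers the cost and a dataset admits only finitely many partitions, a loop of this form terminates when applied to partitions with the SSE as the cost.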

6. Conclusions

In this paper, we proposed an algorithm to effectively refine the solution generated using a fixed-size microaggregation approach. Although the fixed-size approaches (i.e., methods without a suffix “2” or “3”) do not always generate an ideal solution, the experimental results in Table 2, Table 3 and Table 4 show that the refinement methods (i.e., methods with a suffix “2” or “3”) reduce the information loss of the results of the fixed-size approaches. Moreover, our proposed refinement methods (i.e., methods with a suffix “3”) can further reduce the information loss of the TFRP2 refinement methods (i.e., methods with a suffix “2”) and yield an information loss lower than those reported in the literature [11].

The TFRP2 refinement heuristic checks each group for the opportunity of reducing the information loss by decomposing the group. Our proposed algorithm (Figure 1) can discover more such opportunities than the TFRP2 refinement heuristic because it can not only decompose but also shrink a group. Moreover, the TFRP2 refinement heuristic checks each group only once, whereas our proposed algorithm checks each group more than once. Because one refinement step could create an opportunity for another refinement step that did not exist initially, our proposed algorithm is more effective in reducing the information loss than the TFRP2 refinement heuristic.

The proposed algorithm is essentially a local search method within the feasible domain of the solution space. In other words, we refined a solution while enforcing the k-anonymity constraint (i.e., each group in a solution contains no fewer than k records). However, the local search method could still be trapped in local optima. A possible solution is to allow the local search method to temporarily step out of the feasible domain. Another possible solution is to allow the information loss to increase within a local search step but with a low probability, as in simulated annealing algorithms. The extension of the local search method warrants further research.