A Cluster-Based Boosting Algorithm for Bankruptcy Prediction in a Highly Imbalanced Dataset

Abstract

1. Introduction

2. Preliminaries

2.1. Class Imbalance Problem in Bankruptcy Prediction

2.2. Undersampling Approach Using IHT
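
Smith et al. [30] define instance hardness as the likelihood that an instance will be misclassified. IHT undersampling uses it to discard the hardest majority-class instances. The imbalanced-learn toolbox [32] implements this as `InstanceHardnessThreshold`, which estimates hardness with a cross-validated classifier. The following is a simplified, dependency-free sketch under our own assumptions, not the paper's configuration. Hardness is estimated with a leave-one-out k-nearest-neighbour vote instead of a cross-validated classifier, and `knn_probability` and `iht_undersample` are hypothetical helper names.

```python
import math
from collections import Counter


def knn_probability(X, y, i, k=3):
    """Leave-one-out k-NN estimate of P(true label | x_i): the share of the
    k nearest *other* samples whose label equals y[i] (assumed estimator)."""
    dists = sorted((math.dist(X[i], X[j]), j) for j in range(len(X)) if j != i)
    neighbours = [j for _, j in dists[:k]]
    return sum(y[j] == y[i] for j in neighbours) / len(neighbours)


def iht_undersample(X, y, k=3):
    """Simplified instance-hardness-threshold undersampling (hypothetical helper).

    Hardness = 1 - estimated probability of the sample's true label.
    Every class is cut down to the minority-class size by keeping its
    easiest (lowest-hardness) samples; the minority class is kept whole.
    """
    target = min(Counter(y).values())
    keep = []
    for label in set(y):
        idx = [i for i, lab in enumerate(y) if lab == label]
        # list.sort is stable, so equally hard samples keep their original order.
        idx.sort(key=lambda i: 1 - knn_probability(X, y, i, k))
        keep.extend(idx[:target])
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]
```

With five majority points, one of which sits inside the minority cluster, the sketch keeps three easy majority points and drops the one lying among minority samples. Unlike this exact truncation, imbalanced-learn keeps every instance whose estimated probability is above a percentile threshold, so ties can leave slightly more samples.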

3. Materials and Methods

3.1. The Experimental Dataset

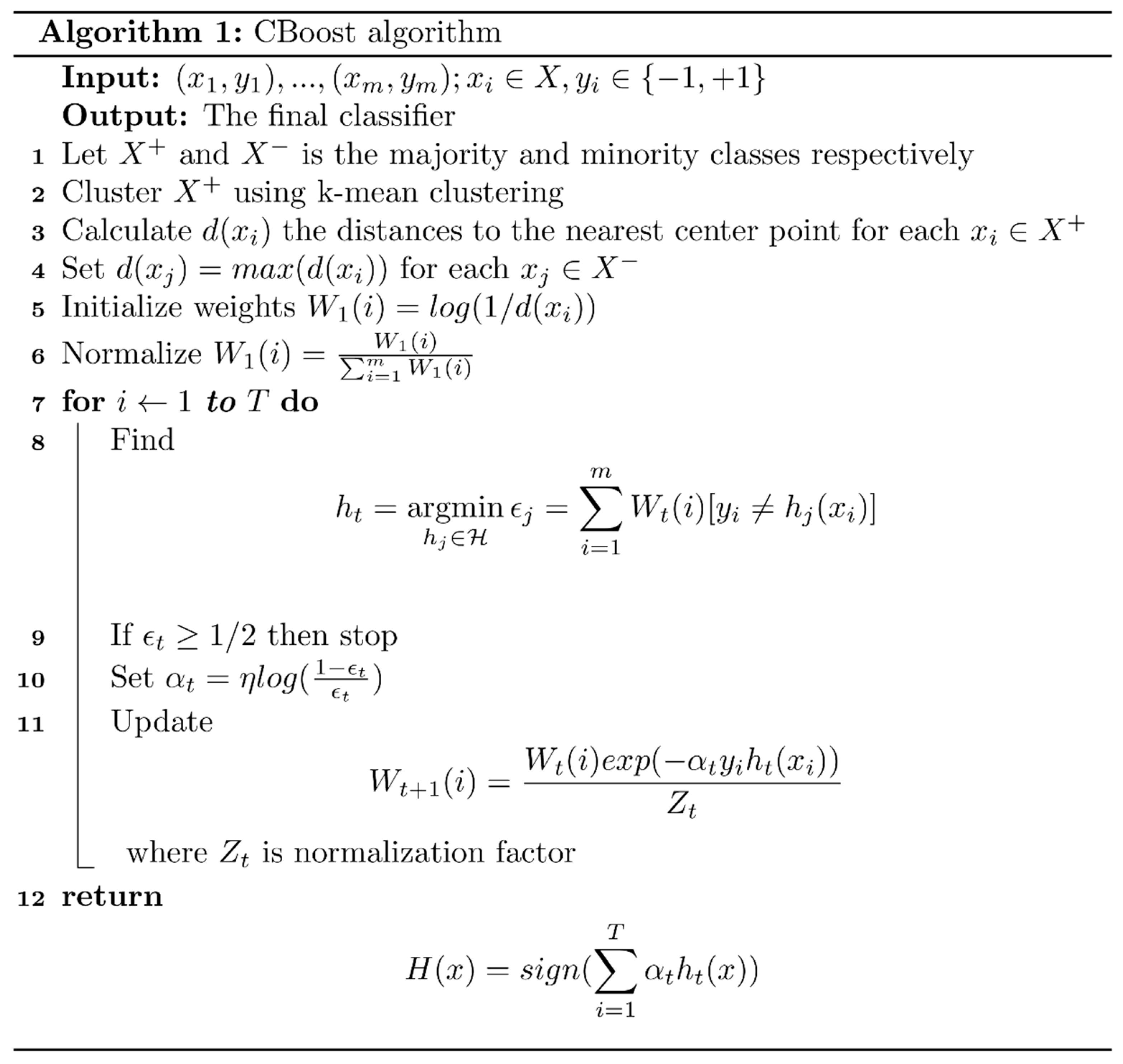

3.2. Cluster-Based Boosting Algorithm

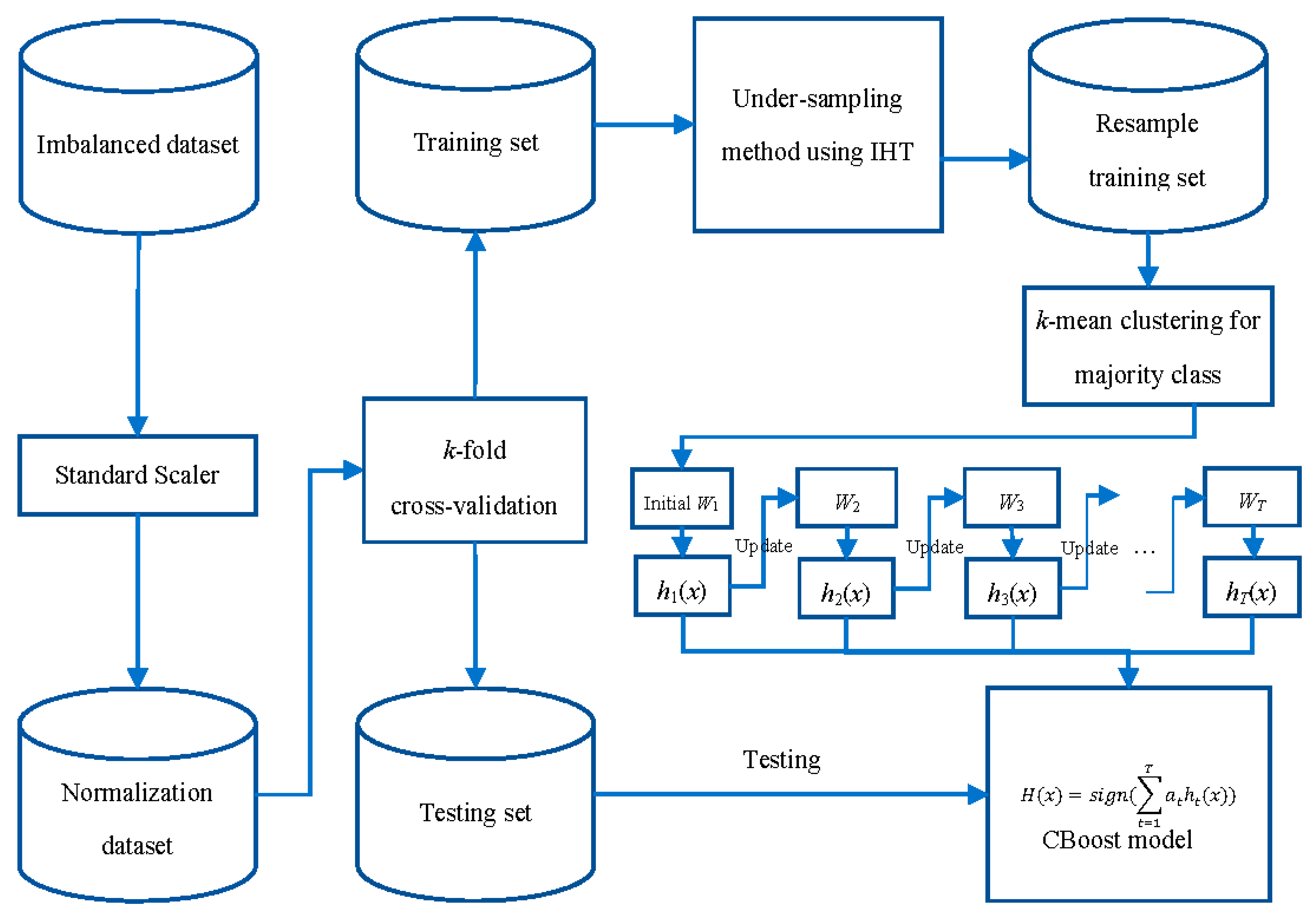
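
The CBoost procedure is not reproduced in this text, and the sketch below should not be read as a reconstruction of it. As background only, it shows the sample-reweighting round of discrete AdaBoost [31], the boosting algorithm cited in the reference list and used as a baseline classifier in the result tables. A weak learner with weighted error ε receives the vote α = ½ ln((1 - ε)/ε), and each misclassified sample's weight is multiplied by e^α (correct ones by e^-α) before renormalisation. Labels in {-1, +1} and the helper name `adaboost_round` are assumptions made for illustration.

```python
import math


def adaboost_round(weights, y_true, y_pred, eps=1e-10):
    """One round of discrete AdaBoost for labels in {-1, +1} (hypothetical helper).

    Returns the weak learner's vote alpha and the renormalised sample weights.
    Assumes the input weights already sum to 1.
    """
    # Weighted error of the weak learner under the current weight distribution.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    err = min(max(err, eps), 1 - eps)  # keep log() finite for perfect or useless learners
    alpha = 0.5 * math.log((1 - err) / err)
    # t * p is +1 for a correct prediction (weight shrinks), -1 for a mistake (weight grows).
    updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return alpha, [w / total for w in updated]
```

With four equally weighted samples and one mistake, ε = 0.25 and the misclassified sample's weight rises to 0.5, half of the total mass. This is the defining property of the AdaBoost update: the previous weak learner has exactly 50% weighted error on the new distribution, so the next learner must focus on the hard cases.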

3.3. RFCI Framework

4. Results

4.1. Experimental Setting

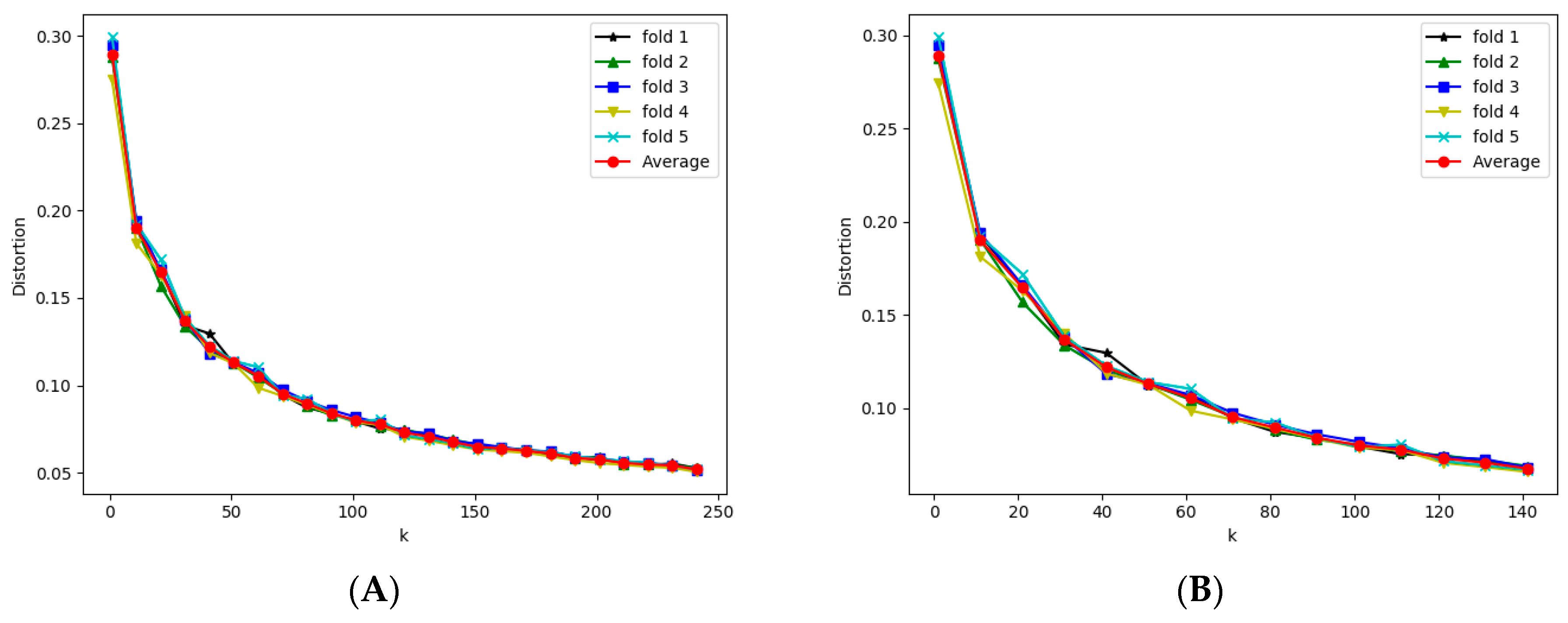

4.2. Identifying k Value Experiment
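
Assume that k denotes the number of clusters and is chosen with the elbow heuristic usually traced to Thorndike [33]. Under that assumption, a minimal sketch computes the within-cluster sum of squared errors (SSE) for increasing k and picks the k at the sharpest bend of the SSE curve. The deterministic farthest-point seeding, the bend criterion (largest second difference), and the names `kmeans_sse` and `elbow_k` are illustrative choices, not the paper's settings.

```python
import math


def kmeans_sse(points, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point seeding.

    Returns the within-cluster sum of squared errors (SSE) for k clusters.
    """
    centroids = [points[0]]
    while len(centroids) < k:
        # Seed the next centroid at the point farthest from all current ones.
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # New centroid = coordinate-wise mean; an empty cluster keeps its old centroid.
        updated = [
            tuple(sum(axis) / len(cl) for axis in zip(*cl)) if cl else centroids[j]
            for j, cl in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sum(min(math.dist(p, c) ** 2 for c in centroids) for p in points)


def elbow_k(points, k_max=6):
    """Pick k at the sharpest bend of the SSE curve (largest second difference)."""
    sse = {k: kmeans_sse(points, k) for k in range(1, k_max + 1)}
    bend = {k: sse[k - 1] - 2 * sse[k] + sse[k + 1] for k in range(2, k_max)}
    return max(bend, key=bend.get), sse
```

On three well-separated groups of points, the SSE falls steeply up to k = 3 and barely afterwards, so `elbow_k` returns 3. Other bend criteria (for example, a distance-to-chord rule) are equally common. The elbow is a heuristic, not a guarantee.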

4.3. Bankruptcy Prediction Results

4.4. Time Analysis

5. Discussion

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

1. Cu, N.G.; Le, H.S.; Chiclana, F. Dynamic structural neural network. J. Intell. Fuzzy Syst. 2018, 34, 2479–2490.

2. Dang, L.M.; Hassan, S.I.; Im, S.; Mehmood, I.; Moon, H. Utilizing text recognition for the defects extraction in sewers CCTV inspection videos. Comput. Ind. 2018, 99, 96–109.

3. Dang, L.M.; Syed, I.H.; Suhyeon, I.; Sangaiah, A.; Mehmood, I.; Rho, S.; Seo, S.; Moon, H. UAV based wilt detection system via convolutional neural networks. Sustain. Comput. Inform. Syst. 2018, in press.

4. Le, T.; Nguyen, A.; Huynh, B.; Vo, B.; Pedrycz, W. Mining constrained inter-sequence patterns: A novel approach to cope with item constraints. Appl. Intell. 2018, 48, 1327–1343.

5. Bui, H.; Vo, B.; Nguyen, H.; Nguyen-Hoang, T.A.; Hong, T.P. A weighted N-list-based method for mining frequent weighted itemsets. Expert Syst. Appl. 2018, 96, 388–405.

6. Vo, B.; Le, T.; Coenen, F.; Hong, T.P. Mining frequent itemsets using the N-list and subsume concepts. Int. J. Mach. Learn. Cybern. 2016, 7, 253–265.

7. Le, T.; Vo, B.; Baik, S.W. Efficient algorithms for mining top-rank-k erasable patterns using pruning strategies and the subsume concept. Eng. Appl. Artif. Intell. 2018, 68, 1–9.

8. Kim, D.; Yun, U. Efficient algorithm for mining high average-utility itemsets in incremental transaction databases. Appl. Intell. 2017, 47, 114–131.

9. Vo, B. An Efficient Method for Mining Frequent Weighted Closed Itemsets from Weighted Item Transaction Databases. J. Inf. Sci. Eng. 2017, 33, 199–216.

10. Mai, T.; Vo, B.; Nguyen, L. A lattice-based approach for mining high utility association rules. Inf. Sci. 2017, 399, 81–97.

11. Kim, B.; Kim, J.; Yi, G. Analysis of Clustering Evaluation Considering Features of Item Response Data Using Data Mining Technique for Setting Cut-Off Scores. Symmetry 2017, 9, 62.

12. Soleimani, H.; Tomasin, S.; Alizadeh, T.; Shojafar, M. Cluster-head based feedback for simplified time reversal prefiltering in ultra-wideband systems. Phys. Commun. 2017, 25, 100–109.

13. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

14. Tajiki, M.M.; Akbari, B.; Shojafar, M.; Mokari, N. Joint QoS and Congestion Control Based on Traffic Prediction in SDN. Appl. Sci. 2017, 7, 1265.

15. Roan, T.N.; Ali, M.; Le, H.S. δ-equality of intuitionistic fuzzy sets: A new proximity measure and applications in medical diagnosis. Appl. Intell. 2018, 48, 499–525.

16. Singh, K.; Singh, K.; Le, H.S.; Aziz, A. Congestion control in wireless sensor networks by hybrid multi-objective optimization algorithm. Comput. Netw. 2018, 138, 90–107.

17. Le, T.; Vo, B.; Duong, T.H. Personalized Facets for Semantic Search Using Linked Open Data with Social Networks. In Proceedings of the 2012 Third International Conference on Innovations in Bio-Inspired Computing and Applications, Kaohsiung, Taiwan, 26–28 September 2012; pp. 312–337.

18. Nguyen, D.T.; Ali, M.; Le, H.S. A Novel Clustering Algorithm in a Neutrosophic Recommender System for Medical Diagnosis. Cogn. Comput. 2017, 9, 526–544.

19. Lu, T.C. Interpolation-based hiding scheme using the modulus function and re-encoding strategy. Signal Process. 2018, 142, 244–259.

20. Lin, W.C.; Tsai, C.F.; Hu, Y.H.; Jhang, J.S. Clustering-based undersampling in class-imbalanced data. Inf. Sci. 2017, 409, 17–26.

21. Zakaryazad, A.; Duman, E. A profit-driven Artificial Neural Network (ANN) with applications to fraud detection and direct marketing. Neurocomputing 2016, 175, 121–131.

22. Herndon, N.; Caragea, D. A Study of Domain Adaptation Classifiers Derived from Logistic Regression for the Task of Splice Site Prediction. IEEE Trans. NanoBiosci. 2016, 15, 75–83.

23. Luo, J.; Xiao, Q. A novel approach for predicting microRNA-disease associations by unbalanced bi-random walk on heterogeneous network. J. Biomed. Inform. 2017, 66, 194–203.

24. Kim, M.J.; Kang, D.K.; Kim, H.B. Geometric mean based boosting algorithm with over-sampling to resolve data imbalance problem for bankruptcy prediction. Expert Syst. Appl. 2015, 42, 1074–1082.

25. Zieba, M.; Tomczak, S.K.; Tomczak, J.M. Ensemble boosted trees with synthetic features generation in application to bankruptcy prediction. Expert Syst. Appl. 2016, 58, 93–101.

26. Barboza, F.; Kimura, H.; Altman, E. Machine learning models and bankruptcy prediction. Expert Syst. Appl. 2017, 83, 405–417.

27. Bennin, K.E.; Keung, J.; Phannachitta, P.; Monden, A.; Mensah, S. MAHAKIL: Diversity based Oversampling Approach to Alleviate the Class Imbalance Issue in Software Defect Prediction. IEEE Trans. Softw. Eng. 2018, 44, 534–550.

28. Le, T.; Lee, M.Y.; Park, J.R.; Baik, S.W. Oversampling Techniques for Bankruptcy Prediction: Novel Features from a Transaction Dataset. Symmetry 2018, 10, 79.

29. Batista, G.; Prati, R.C.; Monard, M.C. A Study of the Behavior of Several Methods for Balancing Machine Learning Training Data. ACM SIGKDD Explor. Newsl. 2004, 6, 20–29.

30. Smith, M.R.; Martinez, T.R.; Giraud-Carrier, C.G. An instance level analysis of data complexity. Mach. Learn. 2014, 95, 225–256.

31. Freund, Y.; Schapire, R.E. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

32. Lemaitre, G.; Nogueira, F.; Aridas, C.K. Imbalanced-learn: A Python Toolbox to Tackle the Curse of Imbalanced Datasets in Machine Learning. J. Mach. Learn. Res. 2017, 18, 17:1–17:5.

33. Thorndike, R.L. Who Belongs in the Family? Psychometrika 1953, 18, 267–276.

| Feature | Description |
|---|---|
| F1 | Current assets of the enterprise |
| F2 | Non-current assets, i.e., fixed capital assets |
| F3 | Total assets (sum of current and non-current assets) |
| F4 | Current debts due within the year |
| F5 | Long-term debts |
| F6 | Total debts (sum of current and long-term debts) |
| F7 | Capital |
| F8 | Earned surplus |
| F9 | Total capital |
| F10 | Total capital after debts |
| F11 | Revenue from sales activities |
| F12 | Cost of sales activities |
| F13 | Gross profit from sales activities |
| F14 | Management costs |
| F15 | Operating profit, i.e., profit earned through business operations |
| F16 | Non-operating income |
| F17 | Non-operating costs |
| F18 | Income or loss before taxes |
| F19 | Net income |
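
Several features in the table are defined as sums of others: F3 = F1 + F2 and F6 = F4 + F5. A simple consistency check on these identities can flag corrupted records before resampling and training. The sketch also assumes the standard accounting identity gross profit = revenue - cost of sales (F13 = F11 - F12), which the table does not state explicitly. The dictionary `IDENTITIES`, the function `check_identities`, and the record format are hypothetical.

```python
# Identities used by the check. F3 and F6 follow from the table; the F13 entry is an
# assumed standard accounting identity that the dataset may not satisfy exactly.
IDENTITIES = {
    "F3": (("F1", 1), ("F2", 1)),      # total assets = current + non-current assets
    "F6": (("F4", 1), ("F5", 1)),      # total debts = current + long-term debts
    "F13": (("F11", 1), ("F12", -1)),  # gross profit = revenue - cost of sales (assumed)
}


def check_identities(record, rel_tol=1e-6):
    """Return the derived features whose values break their identity.

    `record` maps feature names such as "F1" to numbers (hypothetical format).
    """
    broken = []
    for target, terms in IDENTITIES.items():
        expected = sum(sign * record[name] for name, sign in terms)
        # Relative tolerance, with a floor of 1.0 so values near zero are not over-flagged.
        if abs(record[target] - expected) > rel_tol * max(1.0, abs(expected)):
            broken.append(target)
    return broken
```

A record whose total debts do not equal the sum of current and long-term debts is reported as `["F6"]`; a fully consistent record yields an empty list.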

| Method | Resampling Approach | Classifier | AUC (%) |
|---|---|---|---|
| [20] | Undersampling method based on clustering technique | MLP | 46.3 ± 0.3 |
| | | Decision Tree | 53.4 ± 0.1 |
| | | Random Forest | 57.7 ± 0.2 |
| | | AdaBoost | 52.7 ± 0.5 |
| [28] | Oversampling method using SMOTEENN | MLP | 72.7 ± 0.5 |
| | | Decision Tree | 81.2 ± 0.5 |
| | | Random Forest | 84.2 ± 0.5 |
| | | AdaBoost | 84.8 ± 0.4 |
| [24] | None | GMBoost | 75.3 ± 0.6 |
| RFCI | Undersampling method using IHT concept | CBoost | 86.8 ± 0.3 |
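
AUC can be read as the probability that a randomly chosen positive instance is scored higher than a randomly chosen negative one, so 50% corresponds to random ranking. The results for [20] are therefore close to chance level, and one of them is below it. The pure-Python sketch below computes AUC from this pairwise (Mann-Whitney) definition, counting ties as one half. Treating bankrupt companies as the positive class (label 1) and the function name `auc` are assumptions. scikit-learn [13] provides the same quantity as `sklearn.metrics.roc_auc_score`.

```python
def auc(scores, labels):
    """ROC AUC from its pairwise (Mann-Whitney) definition.

    labels: 1 = positive (assumed: bankrupt), 0 = negative.
    Each positive/negative pair contributes 1 if the positive ranks higher,
    0.5 on a tie, and 0 otherwise; AUC is the mean over all pairs.
    """
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Multiplying the result by 100 gives the percentage scale used in the table. The quadratic pairwise loop is chosen only for clarity; a sort-based computation scales better on large test sets.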

| Method | Resampling Approach | Classifier | Training Time (s) | Testing Time (s) |
|---|---|---|---|---|
| [20] | Undersampling method based on clustering technique | MLP | 134.2 ± 9.5 | 0.03 |
| | | Decision Tree | 133.2 ± 9.9 | 0.002 |
| | | Random Forest | 134.0 ± 8.9 | 0.01 |
| | | AdaBoost | 135.7 ± 9.3 | 0.15 |
| [28] | Oversampling method using SMOTEENN | MLP | 48.3 ± 3.0 | 0.02 |
| | | Decision Tree | 36.2 ± 1.0 | 0.003 |
| | | Random Forest | 36.7 ± 0.9 | 0.02 |
| | | AdaBoost | 66.4 ± 1.0 | 0.31 |
| [24] | None | GMBoost | 13.7 ± 0.1 | 0.3 |
| RFCI | Undersampling method using IHT concept | CBoost | 39.4 ± 0.7 | 0.15 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Le, T.; Hoang Son, L.; Vo, M.T.; Lee, M.Y.; Baik, S.W. A Cluster-Based Boosting Algorithm for Bankruptcy Prediction in a Highly Imbalanced Dataset. Symmetry 2018, 10, 250. https://doi.org/10.3390/sym10070250
