From Theory to Practice: A Data Quality Framework for Classification Tasks

Abstract

1. Introduction

2. Background

2.1. Data Quality Framework

2.2. Data Quality Ontology

3. Related Work

3.1. Data Quality Frameworks

- A user-oriented process to address DQ issues: high dimensionality, imbalanced classes, outliers, duplicate instances, mislabeled instances and missing values.

- Recommendations of suitable data cleaning algorithms/approaches to address data quality issues.

3.2. Data Cleaning Ontologies

4. Data Quality Framework for Classification Tasks

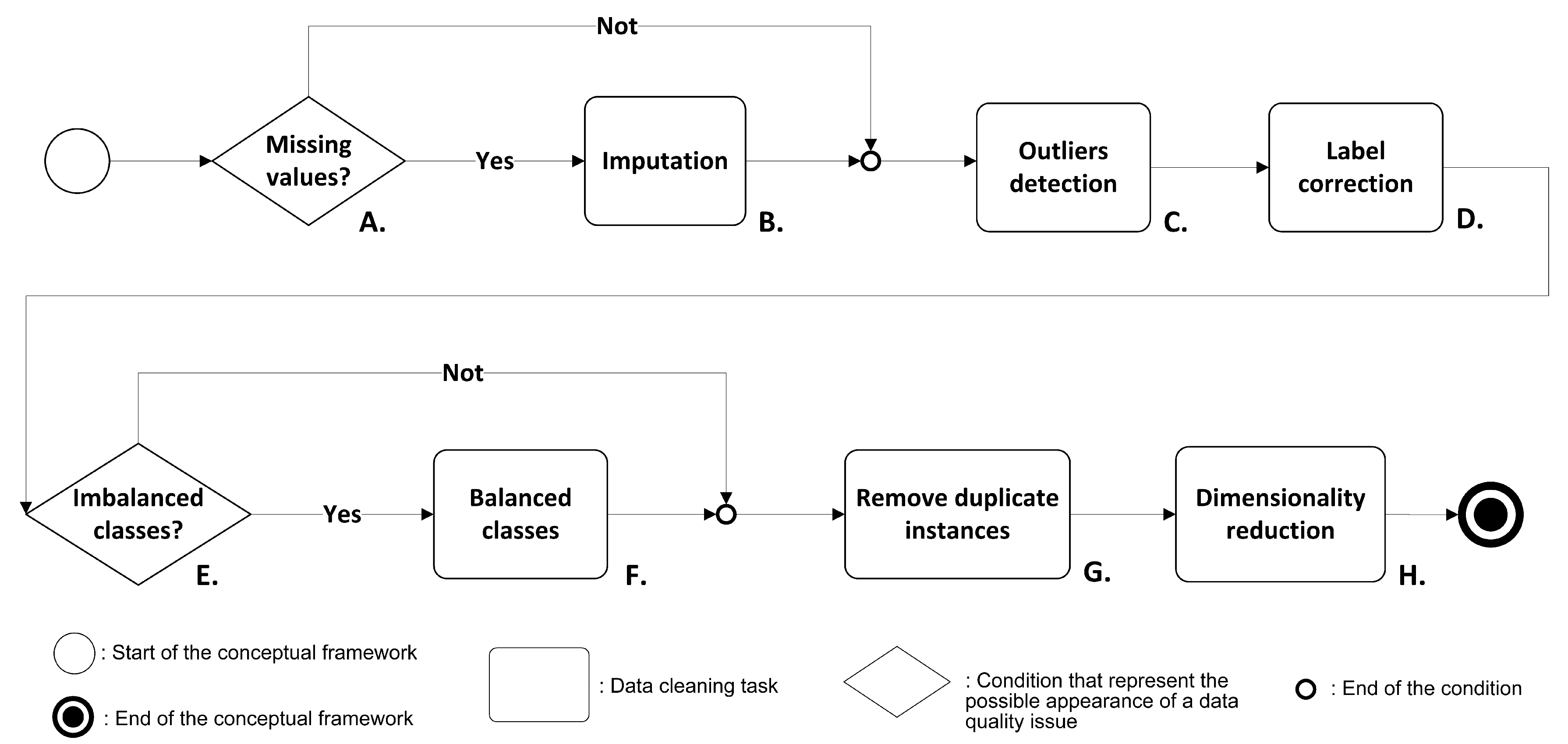

4.1. Conceptual Framework

4.1.1. Mapping the Selected Data Sources

4.1.2. Understanding the Selected Data

- Missing values: refers to the absence of values for an attribute; this typically occurs due to faults in the data collection process, e.g., data transfer problems, sensor faults, or incomplete surveys [55].

- Inconsistency: refers to duplicate instances with different class labels [63].

- Redundancy: in classification tasks, this refers to duplicate records [63].

- Amount of data: corresponds to the total amount of data available for training a classifier [63]; this DQ issue is closely related to high dimensionality. Large datasets with a high number of features can lead to high dimensionality, while small datasets can produce inaccurate models.

- Heterogeneity: defined as incompatibility in the data of a variable; it occurs when data from different sources are joined [64].

4.1.3. Identifying and Categorizing Components

- Inconsistency, Redundancy and Timeliness were renamed as Mislabelled class, Duplicate instances and Data obsolescence, respectively.

- According to the definition of Noise as “irrelevant or meaningless data”, we considered the following as kinds of Noise: Missing values, Outliers, High dimensionality, Imbalanced class, Mislabelled class and Duplicate instances.

- We redefined Amount of data as a lack of information due to a poor data collection process.

- Amount of data, Heterogeneity and Data obsolescence are issues of the data collection process. Therefore, these data quality issues were classified into a new category called Provenance, defined by the Oxford English Dictionary as the fact of coming from some particular source or quarter; origin or derivation.

4.1.4. Integrating Components

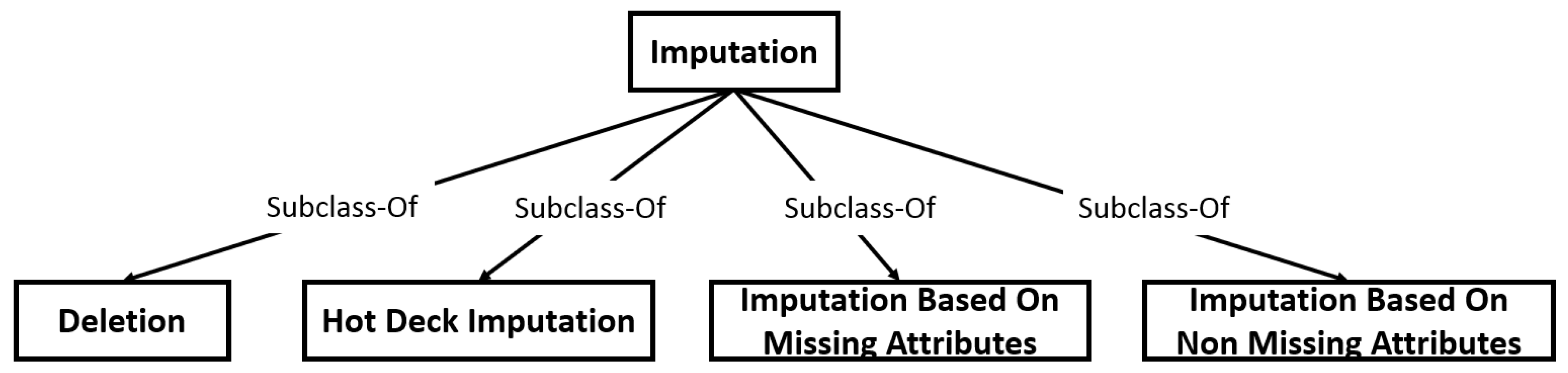

- Imputation: fills missing data with synthetic values. Different approaches are defined for handling missing values: (i) Deletion: removes all instances with missing values [67]. (ii) Hot deck: missing data are filled with values from the same dataset [68]. (iii) Imputation based on missing attributes: computes a new value from a measure of central tendency, such as the median, mode or mean, and uses it to fill the missing data. (iv) Imputation based on non-missing attributes: a classification or regression model is built from the available data to fill the missing values [69].
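Approaches (i) to (iii) above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: it assumes a dataset stored as a list of rows of numbers with `None` marking a missing value, and the function names are hypothetical. The hot deck variant shown is random hot deck, which is only one of several donor-selection schemes; `statistics.mode` could be passed as `stat` for categorical attributes.

```python
import random
from statistics import mean, median

# Assumption: a dataset is a list of rows (lists of numeric values)
# and None marks a missing value. Inputs are never modified in place.


def listwise_deletion(rows):
    """(i) Deletion: keep only the instances that have no missing values."""
    return [list(r) for r in rows if None not in r]


def hot_deck_impute(rows, col, seed=0):
    """(ii) Random hot deck: fill each missing value in column `col` with the
    value of a randomly chosen donor instance from the same dataset."""
    rng = random.Random(seed)
    donors = [r[col] for r in rows if r[col] is not None]
    filled = []
    for r in rows:
        r = list(r)
        if r[col] is None:
            r[col] = rng.choice(donors)
        filled.append(r)
    return filled


def central_tendency_impute(rows, col, stat=mean):
    """(iii) Imputation based on missing attributes: fill column `col` with a
    central-tendency measure (mean, median, ...) of its observed values."""
    value = stat([r[col] for r in rows if r[col] is not None])
    filled = []
    for r in rows:
        r = list(r)
        if r[col] is None:
            r[col] = value
        filled.append(r)
    return filled


data = [[1.0, 2.0], [None, 4.0], [3.0, None], [5.0, 6.0]]
print(listwise_deletion(data))                        # [[1.0, 2.0], [5.0, 6.0]]
print(central_tendency_impute(data, 0))               # row 1 becomes [3.0, 4.0]
print(central_tendency_impute(data, 1, stat=median))  # row 2 becomes [3.0, 4.0]
```

Deletion is the simplest option but discards information; the imputation variants keep every instance at the cost of introducing synthetic values, which is why imputed data should be checked for outliers afterwards.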

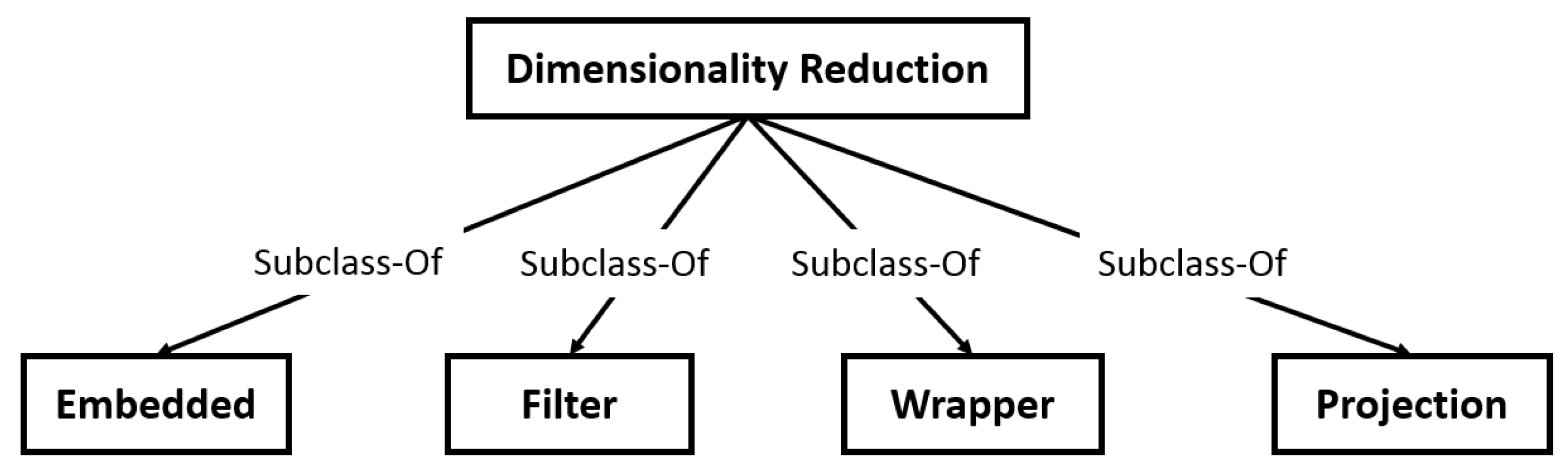

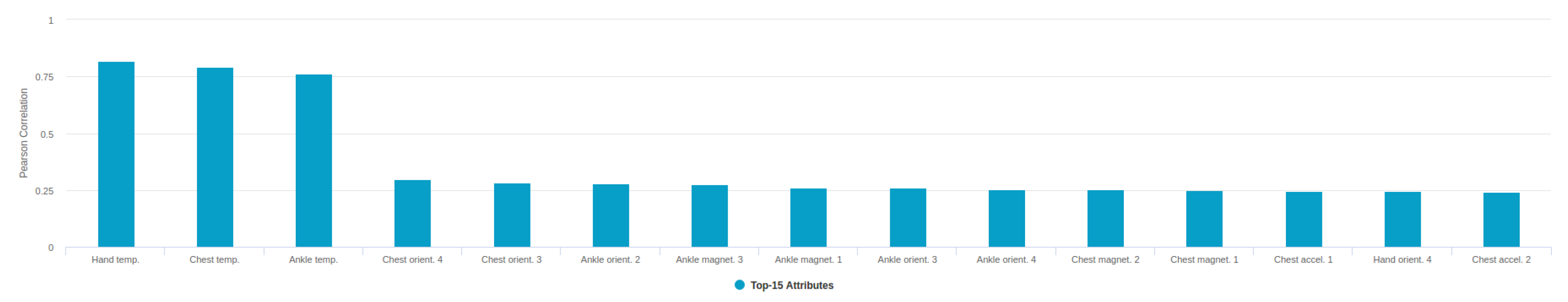

- Dimensionality reduction: selects a subset of relevant attributes to represent the dataset [73] based on attribute importance [59,74]. Three dimensionality reduction approaches are defined: (i) Filter: computes correlation coefficients between the features and the class, then selects the features with the highest correlation [74]; (ii) Wrapper: builds models with different combinations of features and selects the subset of features based on model performance [75]; (iii) Embedded: incorporates feature selection into the training process, which reduces the computation time spent reclassifying the different subsets evaluated by wrapper methods [76,77].
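As an illustration of the Filter approach (i), the sketch below scores each attribute by the absolute Pearson correlation between the attribute and a numerically encoded class, then keeps the top `k`. Pearson correlation and the 0/1 class encoding are assumptions made for this example (other filter criteria, such as mutual information, are also common), and `filter_select` is a hypothetical name.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    # A constant attribute carries no information about the class.
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def filter_select(X, y, k):
    """Filter approach: score each attribute by |corr(attribute, class)| and
    return the indices of the k highest-scoring attributes, in column order."""
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        scores.append((abs(pearson(column, y)), j))
    scores.sort(reverse=True)
    return sorted(j for _, j in scores[:k])


X = [[1, 5, 0], [2, 3, 1], [3, 4, 0], [4, 1, 1]]
y = [0, 0, 1, 1]  # binary class encoded as 0/1
print(filter_select(X, y, 2))  # [0, 1]
```

Because each attribute is scored independently of any classifier, the filter approach is cheap, but unlike the wrapper approach it cannot detect attributes that are only useful in combination.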

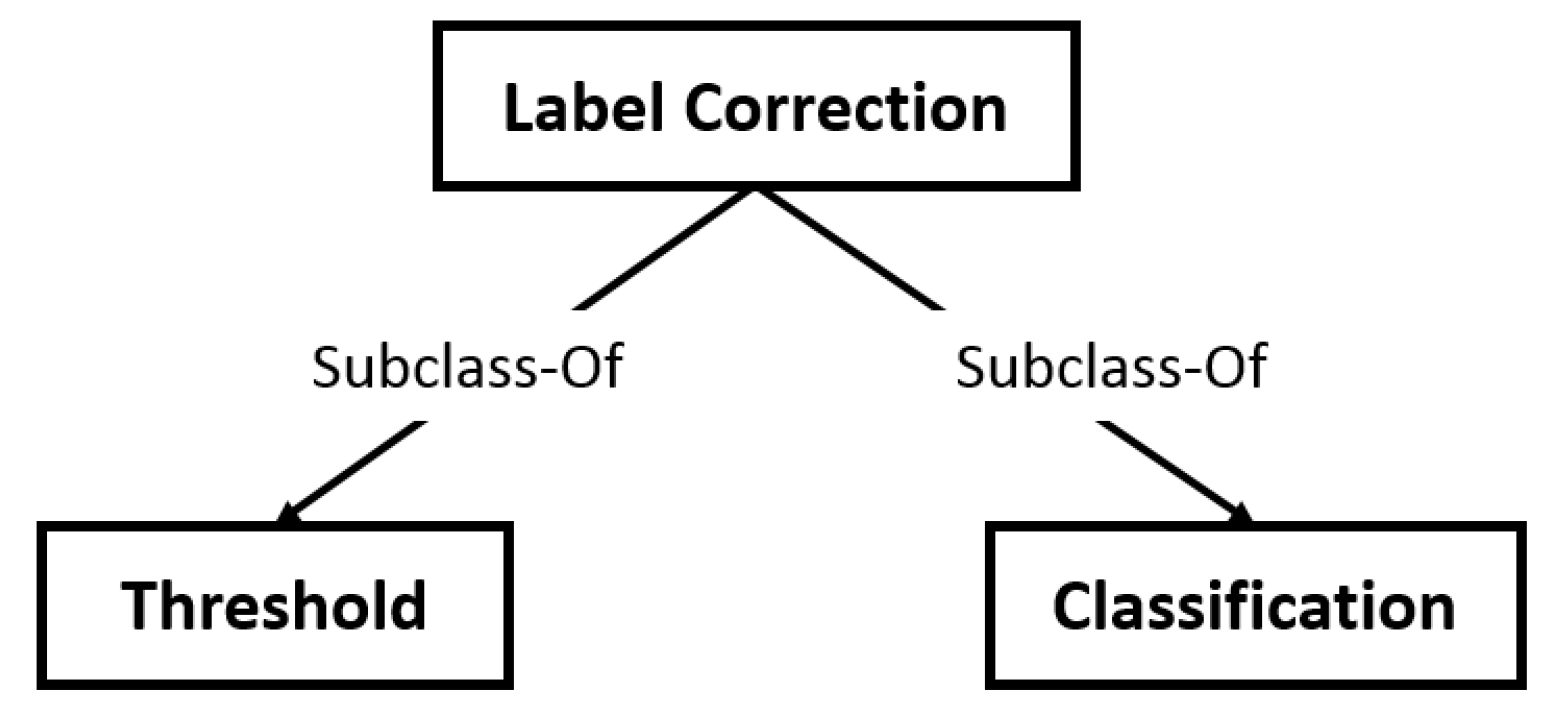

- Label correction: identifies instances with the same attribute values; if their class labels differ, the label is corrected or the instances are removed [80].

- Remove duplicate instances: deletes duplicate records from the dataset [81].
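Both tasks above can be sketched together in plain Python. This is a minimal illustration under stated assumptions, not the method of [80] or [81]: instances are compared on exact equality of attribute values, a group with conflicting labels is either relabelled with its most frequent label (a majority-vote policy assumed for this sketch) or removed, and duplicates are instances with identical attributes and label. Function names are hypothetical.

```python
from collections import Counter, defaultdict


def correct_labels(X, y, strategy="majority"):
    """Group instances with identical attribute values. Groups whose labels
    disagree are either relabelled with their most frequent label
    ("majority", an assumed policy) or dropped entirely ("remove")."""
    if strategy not in ("majority", "remove"):
        raise ValueError("strategy must be 'majority' or 'remove'")
    labels_by_values = defaultdict(list)
    for row, label in zip(X, y):
        labels_by_values[tuple(row)].append(label)
    out_X, out_y = [], []
    for row, label in zip(X, y):
        group = labels_by_values[tuple(row)]
        if len(set(group)) == 1:
            out_X.append(list(row))
            out_y.append(label)
        elif strategy == "majority":
            out_X.append(list(row))
            out_y.append(Counter(group).most_common(1)[0][0])
        # strategy == "remove": conflicting instances are not kept
    return out_X, out_y


def remove_duplicates(X, y):
    """Keep the first occurrence of each (attribute values, label) pair."""
    seen = set()
    out_X, out_y = [], []
    for row, label in zip(X, y):
        key = (tuple(row), label)
        if key not in seen:
            seen.add(key)
            out_X.append(list(row))
            out_y.append(label)
    return out_X, out_y


X = [[1, 2], [1, 2], [1, 2], [3, 4], [3, 4]]
y = ["a", "a", "b", "c", "c"]
X1, y1 = correct_labels(X, y)       # the "b" label is corrected to "a"
print(remove_duplicates(X1, y1))    # ([[1, 2], [3, 4]], ['a', 'c'])
```

Running label correction before duplicate removal matters here: once conflicting labels are unified, the former mislabelled instances become exact duplicates and are removed in the second step.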

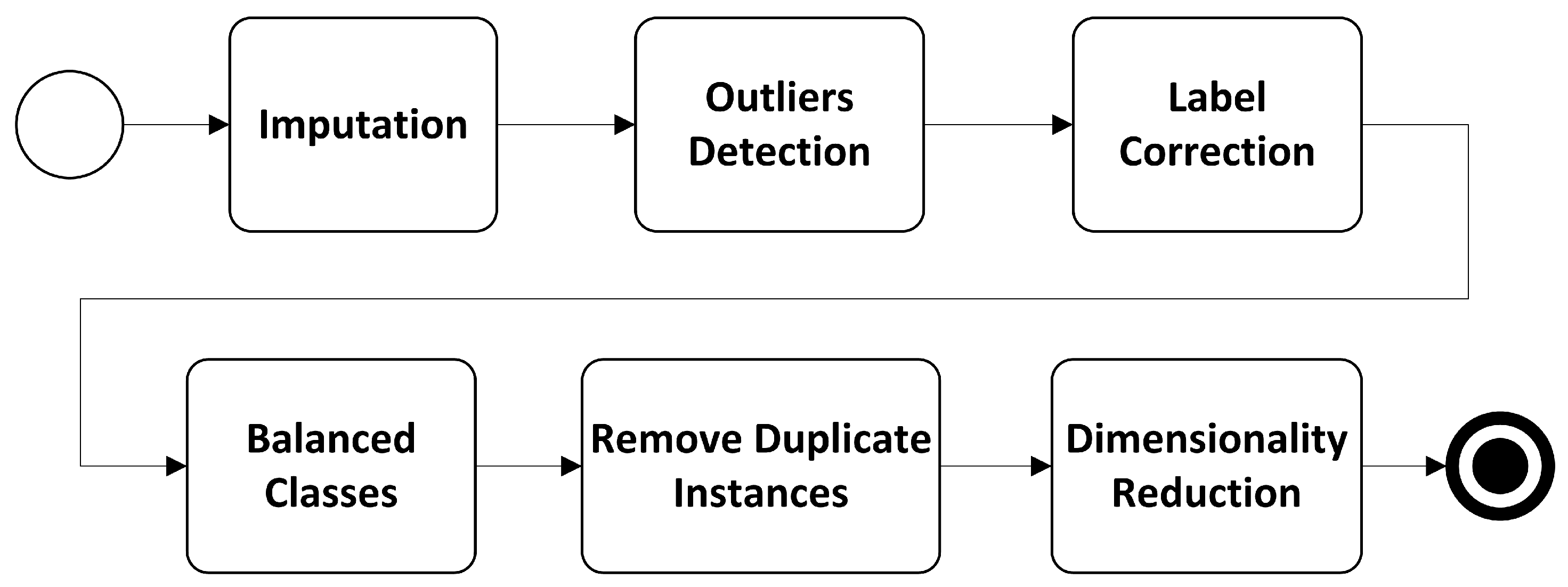

- A

- Check missing values in the dataset.

- B

- In case of the occurrence of missing values, the imputation task must be applied. The new data must be analyzed because the imputation methods can create outliers.

- C

- Subsequently, an outlier detection algorithm is applied with the aim to find candidate outliers in the raw dataset or generated in the previous step.

- D

- Label correction algorithm looks for mislabelled instances in the raw dataset or generated by the Imputation methods.

- E

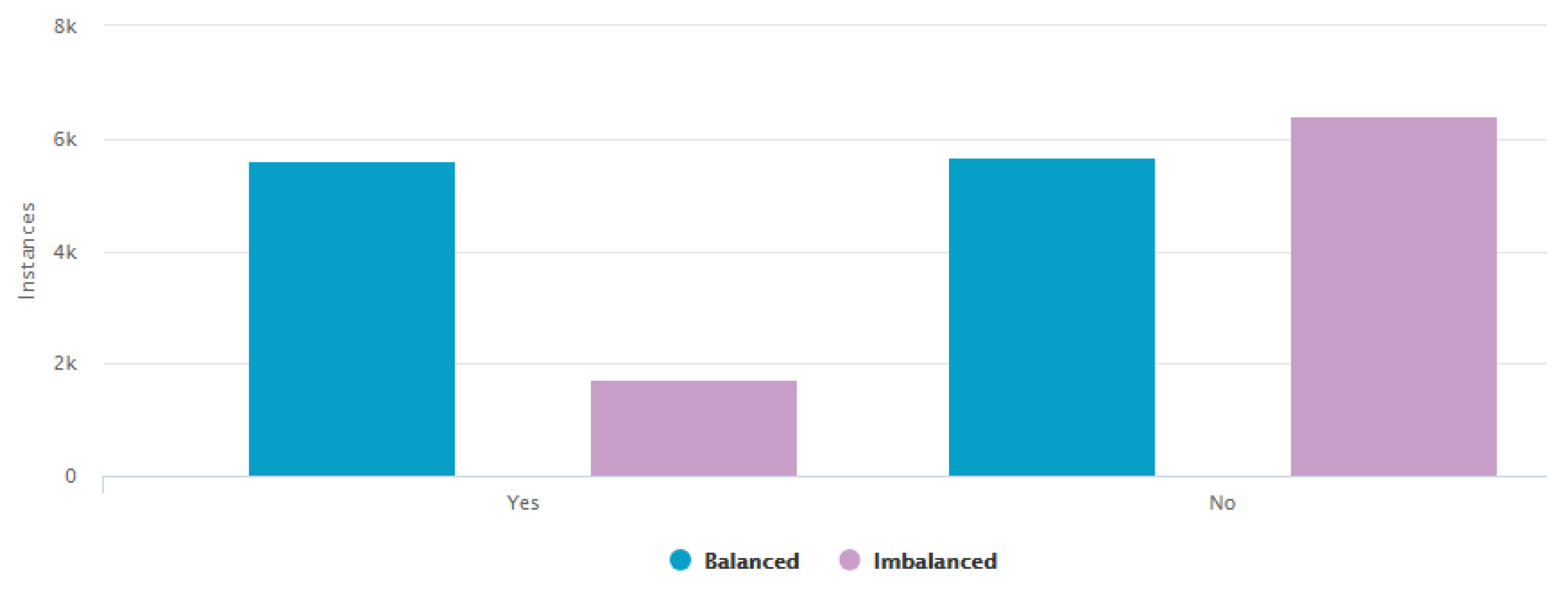

- Verify if the dataset is imbalanced; Imbalance Ratio (IR) is used to measure the distribution of binary class:where represents the size of the majority class and the size of the minority class. A dataset with IR 1 is perfectly balanced, while datasets with a higher IR are more imbalanced [82].In case of the class having more than two labels, Normalized Entropy is used [83]. This measure indicates the degree of uniformity of the distribution of class labels, denoted bywhere is the probability that assumes the ith value , for . We suppose that each label of the class has the same probability of appearing, therefore the theoretical maximum value for the entropy of the class is . Thus, the normalized entropy can be computed as:The class is balanced when is close to 1.

- F

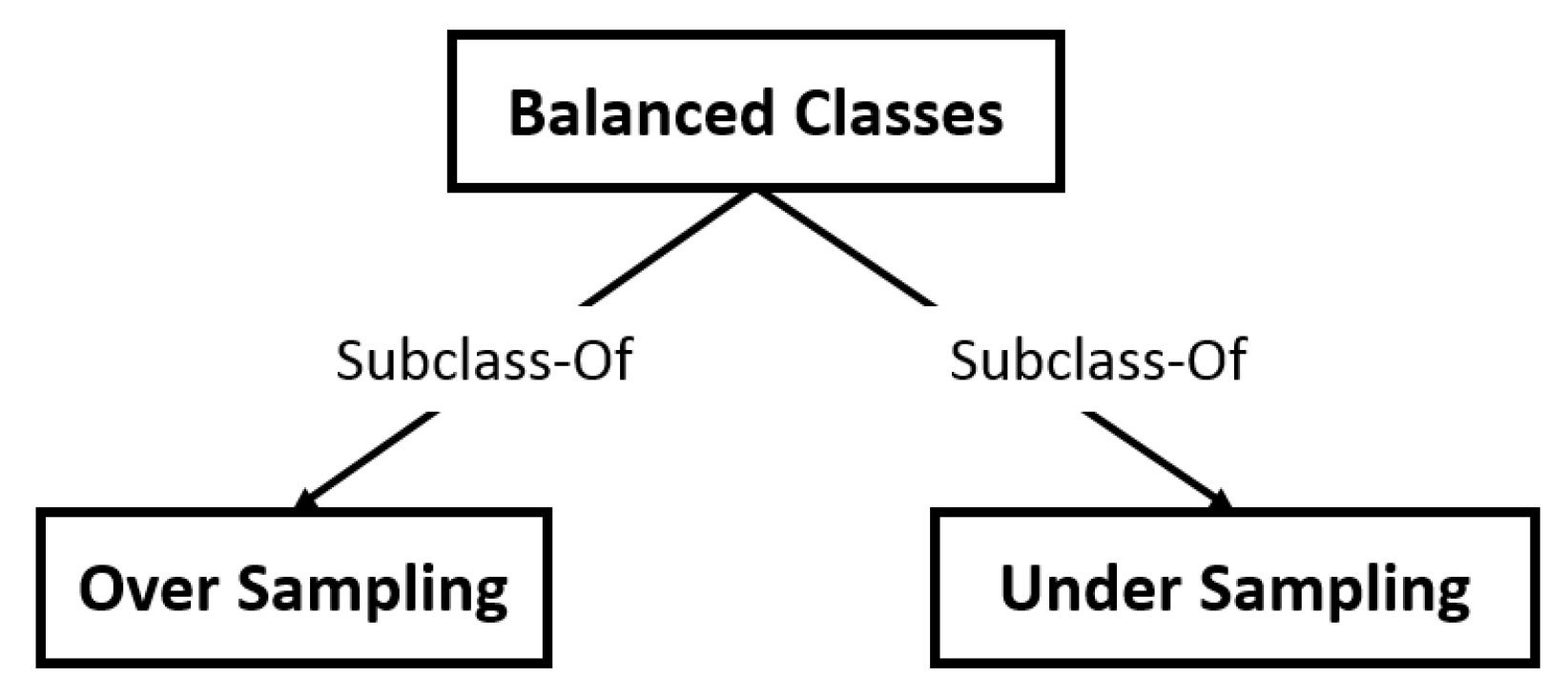

- If the dataset is imbalanced, then we use an algorithm for balanced of classes. This creates synthetic instances (oversampling or undersampling techniques) on the minority class.

- G

- Remove duplicate instances in the raw dataset or generated by previous data cleaning tasks.

- H

- Finally, the algorithms for dimensionality reduction are used for reducing the dimensionality of the dataset.
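The class-balance checks and the balancing step described above can be sketched as follows. The Imbalance Ratio and Normalized Entropy follow their definitions in the process; random oversampling is a deliberately simple stand-in chosen for this sketch: it replicates existing minority instances instead of creating synthetic ones as techniques like SMOTE do. Function names are hypothetical.

```python
import math
import random
from collections import Counter


def imbalance_ratio(labels):
    """IR = size of majority class / size of minority class, for a binary
    class (IR = 1 means perfectly balanced)."""
    counts = Counter(labels)
    if len(counts) != 2:
        raise ValueError("IR is defined here for a binary class")
    return max(counts.values()) / min(counts.values())


def normalized_entropy(labels):
    """Entropy of the class divided by its maximum, log k, for k >= 2 labels;
    values close to 1 indicate a balanced class. The log base cancels out."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    if k < 2:
        raise ValueError("normalized entropy needs at least two labels")
    h = -sum((c / n) * math.log(c / n) for c in counts.values())
    return h / math.log(k)


def random_oversample(X, y, seed=0):
    """Random oversampling: replicate randomly chosen instances of every
    smaller class until each class reaches the majority-class size."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    out_X, out_y = [list(r) for r in X], list(y)
    for label, count in counts.items():
        members = [list(r) for r, lab in zip(X, y) if lab == label]
        for _ in range(target - count):
            out_X.append(list(rng.choice(members)))
            out_y.append(label)
    return out_X, out_y


y = [0, 0, 0, 0, 0, 0, 1, 1]
print(imbalance_ratio(y))                      # 3.0
X_bal, y_bal = random_oversample([[v] for v in range(8)], y)
print(imbalance_ratio(y_bal))                  # 1.0
```

Replicating instances can encourage overfitting to the repeated minority examples, which is one motivation for synthetic oversampling methods and for removing duplicates carefully in step G.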

4.1.5. Validating the Conceptual Framework

4.2. Data Cleaning Ontology

4.2.1. Build Glossary of Terms

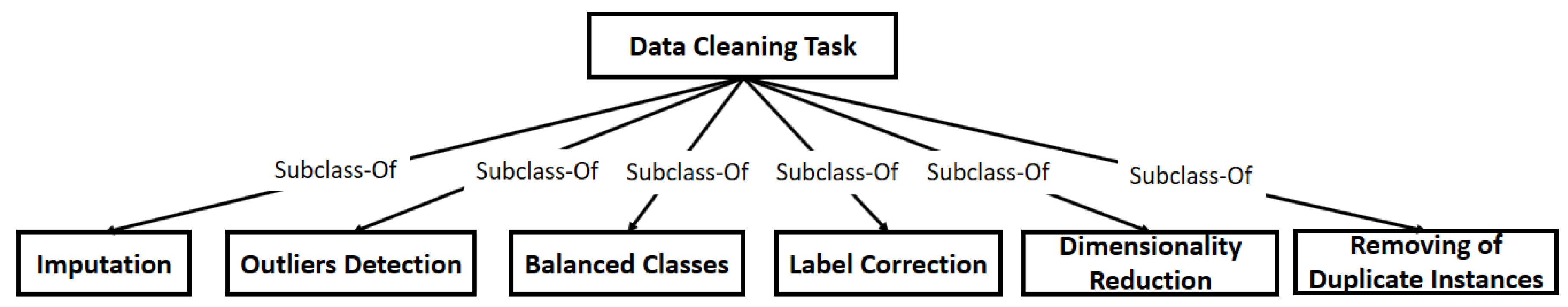

4.2.2. Build Concept Taxonomies

- Imputation is resolved through approaches: Imputation Based on Non-Missing Attributes, Deletion, Hot Deck Imputation, and Imputation Based on Missing Attributes. Figure 5 exposes the approaches that are sub-classes of Imputation tasks.

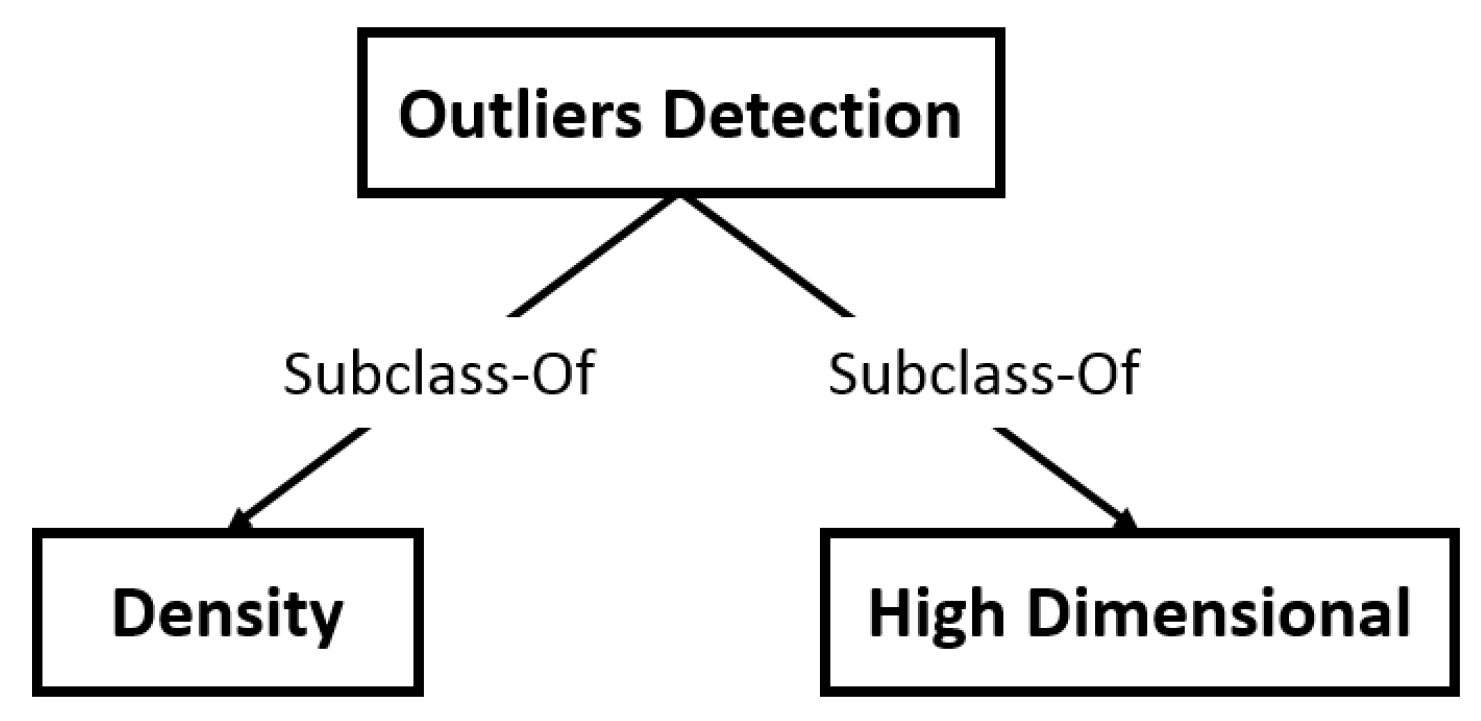

- The Outliers Detection take into account techniques based on Density or High Dimensional (see Figure 6).

- Label Correction is addressed in two ways: approaches based on Threshold or Classification algorithms. Figure 8 shows:

- Approaches as Embedded, Filter, Projection and Wrapper are used to Dimensionality Reduction. Figure 9 lists:

- Up to now, we have not found the classification of techniques of Removing of Duplicate Instances.
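A density-based technique from the Outliers Detection taxonomy can be illustrated with a compact version of the Local Outlier Factor (LOF) of Breunig et al. This is a simplified sketch, not a reference implementation: each neighbourhood is taken as exactly the `k` nearest points (ties broken by index) rather than the full k-distance neighbourhood, and the input is assumed to contain no duplicate points. The function name is hypothetical.

```python
import math


def lof_scores(points, k=2):
    """Simplified Local Outlier Factor: each point's neighbourhood is exactly
    its k nearest neighbours (ties broken by index). Scores near 1 indicate
    inliers; scores well above 1 indicate candidate outliers.
    Assumes no duplicate points (duplicates make reachability distances 0)."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    neighbours, k_distance = [], []
    for i in range(n):
        ranked = sorted((dist[i][j], j) for j in range(n) if j != i)
        neighbours.append([j for _, j in ranked[:k]])
        k_distance.append(ranked[k - 1][0])
    # Local reachability density: inverse of the mean reachability distance.
    lrd = []
    for i in range(n):
        reach = [max(k_distance[j], dist[i][j]) for j in neighbours[i]]
        lrd.append(len(reach) / sum(reach))
    # LOF: mean density of the neighbours relative to the point's own density.
    return [sum(lrd[j] for j in neighbours[i]) / (k * lrd[i]) for i in range(n)]


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10)]
scores = lof_scores(points, k=2)
print([round(s, 2) for s in scores])  # the last point scores far above 1
```

Because LOF compares each point's density with that of its neighbours, it flags points that are isolated relative to their local surroundings, which a single global distance threshold can miss when clusters have different densities.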

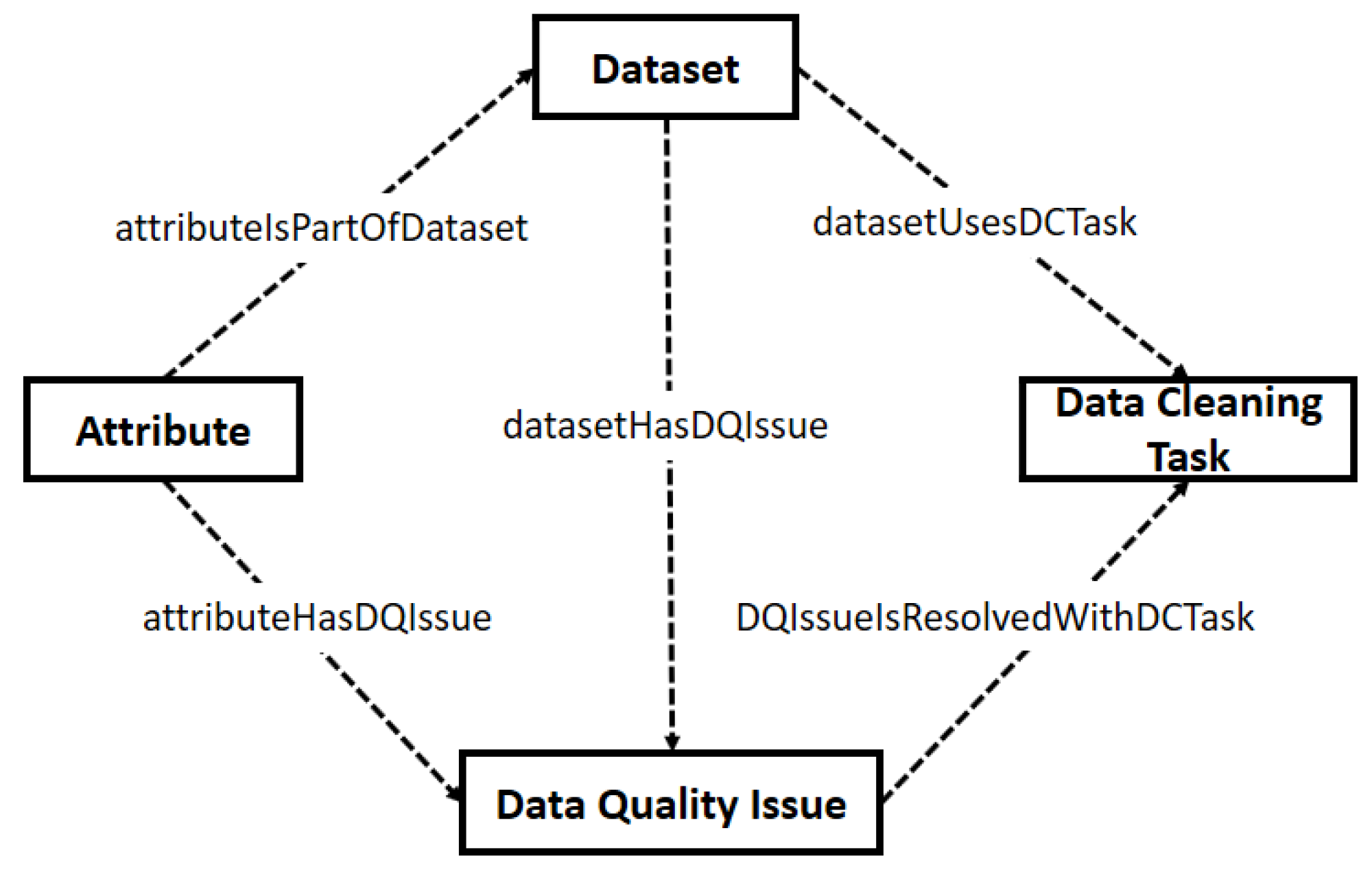

4.2.3. Build Ad Hoc Binary Relation Diagrams

- A Dataset (1..1) has Data Quality Issues (1..*).

- A Data Quality Issue (1..*) is resolved with Data Cleaning Tasks (1..*).

- A Dataset (1..1) uses Data Cleaning Tasks (1..*).

- An Attribute (1..*) is part of a Dataset (1..1).

- An Attribute (1..*) has Data Quality Issues (1..*).
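The binary relations above can be read as a small object model. The sketch below is a plain-Python reading under our own assumptions, not the ontology itself: class and field names are hypothetical, containment stands in for the (1..1) ends, and only the lower bound of each (1..*) end is checked.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataCleaningTask:
    name: str


@dataclass
class DataQualityIssue:
    name: str
    # "A Data Quality Issue (1..*) is resolved with Data Cleaning Tasks (1..*)"
    resolved_with: List[DataCleaningTask] = field(default_factory=list)


@dataclass
class Attribute:
    name: str
    # "An Attribute (1..*) has Data Quality Issues (1..*)"
    issues: List[DataQualityIssue] = field(default_factory=list)


@dataclass
class Dataset:
    name: str
    # "An Attribute (1..*) is part of a Dataset (1..1)"
    attributes: List[Attribute] = field(default_factory=list)
    # "A Dataset (1..1) has Data Quality Issues (1..*)"
    issues: List[DataQualityIssue] = field(default_factory=list)
    # "A Dataset (1..1) uses Data Cleaning Tasks (1..*)"
    uses: List[DataCleaningTask] = field(default_factory=list)


def cardinality_violations(ds: Dataset) -> List[str]:
    """Report every (1..*) relation above that has no target in `ds`."""
    problems = []
    if not ds.attributes:
        problems.append(f"{ds.name}: no attributes")
    if not ds.issues:
        problems.append(f"{ds.name}: no data quality issues")
    if not ds.uses:
        problems.append(f"{ds.name}: no data cleaning tasks")
    for issue in ds.issues:
        if not issue.resolved_with:
            problems.append(f"{issue.name}: not resolved with any task")
    for attr in ds.attributes:
        if not attr.issues:
            problems.append(f"{attr.name}: no data quality issues")
    return problems


imputation = DataCleaningTask("Imputation")
missing = DataQualityIssue("Missing values", [imputation])
heart_rate = Attribute("heart_rate", [missing])
ds = Dataset("physical_activity", [heart_rate], [missing], [imputation])
print(cardinality_violations(ds))  # []
```

In the ontology these constraints would be expressed as class restrictions and checked by a reasoner; the function above only mimics that check for a single in-memory dataset.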

4.2.4. Build Concept Dictionary

4.2.5. Describe Rules

5. Evaluation

5.1. Experimental Datasets

5.1.1. Physical Activity Monitoring

5.1.2. Occupancy Detection of an Office Room

5.2. Evaluation Process

5.2.1. Physical Activity Monitoring

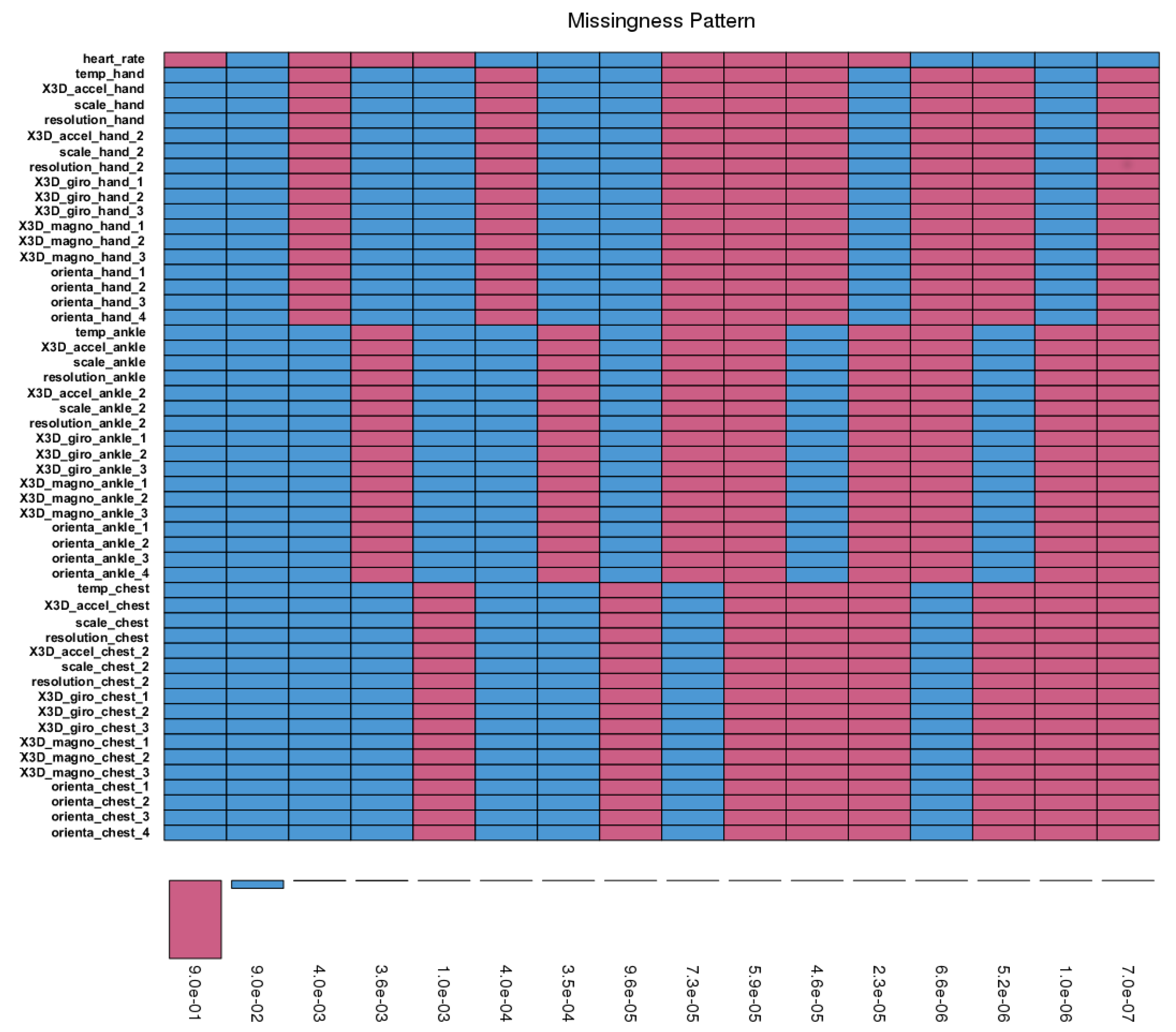

- Use the Deletion approach to remove the heart_rate attribute and 34 instances. We used listwise deletion.

- Apply Imputation Based on Non-Missing Attributes to the dataset. We imputed each subject's dataset with linear and Bayesian regression.
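Imputation Based on Non-Missing Attributes can be sketched with ordinary least squares on a single predictor attribute. This is a simplified stand-in for the linear regression mentioned above: the predictors actually used and the Bayesian regression variant are not reproduced, rows are lists with `None` marking a missing value, and `regression_impute` is a hypothetical name.

```python
def regression_impute(rows, target, predictor):
    """Imputation based on non-missing attributes (simplified): fit
    target = a + b * predictor by ordinary least squares on complete rows,
    then predict the missing target values. None marks a missing value."""
    pairs = [(r[predictor], r[target]) for r in rows
             if r[predictor] is not None and r[target] is not None]
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(t for _, t in pairs) / n
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    if sxx == 0:
        raise ValueError("the predictor is constant on the complete rows")
    b = sum((x - mx) * (t - my) for x, t in pairs) / sxx
    a = my - b * mx
    filled = []
    for r in rows:
        r = list(r)
        if r[target] is None and r[predictor] is not None:
            r[target] = a + b * r[predictor]
        filled.append(r)
    return filled


rows = [[1.0, 3.0], [2.0, 5.0], [3.0, 7.0], [4.0, None]]
print(regression_impute(rows, target=1, predictor=0)[3])  # [4.0, 9.0]
```

With several predictors the same idea applies, with the line replaced by a multiple regression fitted on the complete rows.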

5.2.2. Occupancy Detection of an Office Room

5.3. Results

5.3.1. Physical Activity Monitoring

5.3.2. Occupancy Detection of an Office Room

5.4. Comparative Study

6. Conclusions

- Build an integrated data quality framework for several knowledge discovery tasks, such as regression [37], clustering and association rules. The integrated data quality framework would consider the Big Data paradigm [29] and hence very large datasets. Removing redundancies would play a key role in decreasing the computational complexity of Big Data models.

- Use ontologies from several domains to improve the performance of data cleaning algorithms, e.g., in the dimensionality reduction task. We could use the ontology of cancer diagnosis developed by [47] to select a subset of relevant features in datasets related to cancer.

- Create a case-based reasoning (CBR) system to recommend suitable data cleaning algorithms based on past experiences. The case representation would be based on annotations of samples, also called dataset meta-features (e.g., mean absolute skewness, mean absolute kurtosis, mutual information [119]). Meta-features gather knowledge about datasets in order to provide automatic selection, recommendation, or support for a future task [120]; in this case, the recommendation of data cleaning algorithms.
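Two of the meta-features named above can be computed per numeric attribute and averaged over the dataset. The sketch assumes population moments and uses excess kurtosis (fourth standardized moment minus 3); definitions vary across meta-learning tools, so these choices and the function names are illustrative assumptions.

```python
from statistics import fmean


def _central_moment(xs, order):
    m = fmean(xs)
    return fmean([(x - m) ** order for x in xs])


def skewness(xs):
    """Population skewness m3 / m2^1.5."""
    m2 = _central_moment(xs, 2)
    if m2 == 0:
        raise ValueError("skewness is undefined for a constant attribute")
    return _central_moment(xs, 3) / m2 ** 1.5


def excess_kurtosis(xs):
    """Population excess kurtosis m4 / m2^2 - 3 (0 for a normal distribution)."""
    m2 = _central_moment(xs, 2)
    if m2 == 0:
        raise ValueError("kurtosis is undefined for a constant attribute")
    return _central_moment(xs, 4) / m2 ** 2 - 3.0


def mean_abs_skewness(columns):
    return fmean([abs(skewness(c)) for c in columns])


def mean_abs_kurtosis(columns):
    return fmean([abs(excess_kurtosis(c)) for c in columns])


columns = [[1, 2, 3, 4, 5], [1, 1, 1, 1, 6]]  # one list per numeric attribute
print(mean_abs_skewness(columns))  # about 0.75
print(mean_abs_kurtosis(columns))  # about 0.775
```

In a CBR setting, a vector of such values would describe each stored case, so that a new dataset can be matched to the most similar past datasets and their successful cleaning algorithms.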

Author Contributions

Acknowledgments

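- Project: “Red de formación de talento humano para la innovación social y productiva en el Departamento del Cauca InnovAcción Cauca” (Human talent training network for social and productive innovation in the Department of Cauca, InnovAcción Cauca). Call 03-2018 “Publicación de artículos en revistas de alto impacto” (Publication of articles in high-impact journals).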

- Project: “Alternativas Innovadoras de Agricultura Inteligente para sistemas productivos agrícolas del departamento del Cauca soportado en entornos de IoT - ID 4633” (Innovative smart agriculture alternatives for agricultural production systems in the department of Cauca supported by IoT environments, ID 4633), financed by Call 04C–2018 “Banco de Proyectos Conjuntos UEES-Sostenibilidad” (Joint Projects Bank UEES-Sustainability) of the Project “Red de formación de talento humano para la innovación social y productiva en el Departamento del Cauca InnovAcción Cauca”.

- Spanish Ministry of Economy, Industry and Competitiveness (Projects TRA2015-63708-R and TRA2016-78886-C3-1-R).

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| DQ | Data Quality |
| DQF4CT | Data Quality Framework for Classification Tasks |
| DCO | Data Cleaning Ontology |

References

- Gantz, J.; Reinsel, D. The Digital Universe in 2020: Big Data, Bigger Digital Shadows, And Biggest Growth in the Far East. Available online: https://www.emc-technology.com/collateral/analyst-reports/idc-the-digital-universe-in-2020.pdf (accessed on 20 April 2018).

- Hu, H.; Wen, Y.; Chua, T.S.; Li, X. Toward Scalable Systems for Big Data Analytics: A Technology Tutorial. IEEE Access 2014, 2, 652–687.

- Rajaraman, A.; Ullman, J.D. Mining of Massive Datasets; Cambridge University Press: New York, NY, USA; Cambridge, UK, 2011.

- Pacheco, F.; Rangel, C.; Aguilar, J.; Cerrada, M.; Altamiranda, J. Methodological framework for data processing based on the Data Science paradigm. In Proceedings of the 2014 XL Latin American Computing Conference (CLEI), Montevideo, Uruguay, 15–19 September 2014; pp. 1–12.

- Sebastian-Coleman, L. Measuring Data Quality for Ongoing Improvement: A Data Quality Assessment Framework; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2012.

- Eyob, E. Social Implications of Data Mining and Information Privacy: Interdisciplinary Frameworks and Solutions; Information Science Reference: Hershey, PA, USA, 2009.

- Piatetsky-Shapiro, G.; Frawley, W. Knowledge Discovery in Databases; MIT Press: Cambridge, MA, USA, 1991.

- Chapman, P. CRISP-DM 1.0: Step-By-Step Data Mining Guide. SPSS, 2000. Available online: http://www.crisp-dm.org/CRISPWP-0800.pdf (accessed on 20 April 2018).

- Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H. The WEKA Data Mining Software: An Update. SIGKDD Explor. Newsl. 2009, 11, 10–18.

- Mierswa, I.; Wurst, M.; Klinkenberg, R.; Scholz, M.; Euler, T. YALE: Rapid Prototyping for Complex Data Mining Tasks. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; ACM: New York, NY, USA, 2006; pp. 935–940.

- Berthold, M.; Cebron, N.; Dill, F.; Gabriel, T.; Kötter, T.; Meinl, T.; Ohl, P.; Thiel, K.; Wiswedel, B. KNIME—The Konstanz information miner: Version 2.0 and Beyond. ACM SIGKDD Explor. Newsl. 2009, 11, 26–31.

- MATHWORKS. Matlab; The MathWorks Inc.: Natick, MA, USA, 2004.

- Ihaka, R.; Gentleman, R. R: A language for data analysis and graphics. J. Comput. Graph. Stat. 1996, 5, 299–314.

- Eaton, J.W. GNU Octave Manual; Network Theory Limited: Eastbourne, UK, 2002.

- Corrales, D.C.; Ledezma, A.; Corrales, J.C. A Conceptual Framework for Data Quality in Knowledge Discovery Tasks (FDQ-KDT): A Proposal. J. Comput. 2015, 10, 396–405.

- Caballero, I.; Verbo, E.; Calero, C.; Piattini, M. A Data Quality Measurement Information Model Based on ISO/IEC 15939; ICIQ: Cambridge, MA, USA, 2007; pp. 393–408.

- Ballou, D.P.; Pazer, H.L. Modeling Data and Process Quality in Multi-Input, Multi-Output Information Systems. Manag. Sci. 1985, 31, 150–162.

- Berti-Équille, L. Measuring and Modelling Data Quality for Quality-Awareness in Data Mining. In Quality Measures in Data Mining; Guillet, F.J., Hamilton, H.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 101–126.

- Kerr, K.; Norris, T. The Development of a Healthcare Data Quality Framework and Strategy. In Proceedings of the Ninth International Conference on Information Quality (ICIQ-04), Cambridge, MA, USA, 5–7 November 2004; pp. 218–233.

- Wang, R.Y.; Strong, D.M. Beyond accuracy: What data quality means to data consumers. J. Manag. Inf. Syst. 1996, 12, 5–33.

- Eppler, M.J.; Wittig, D. Conceptualizing Information Quality: A Review of Information Quality Frameworks from the Last Ten Years. In Proceedings of the 2000 International Conference on Information Quality (IQ 2000), Cambridge, MA, USA, 20–22 October 2000; pp. 83–96.

- Gruber, T.R. Toward principles for the design of ontologies used for knowledge sharing? Int. J. Hum. Comput. Stud. 1995, 43, 907–928.

- Uschold, M.; Gruninger, M. Ontologies: Principles, methods and applications. Knowl. Eng. Rev. 1996, 11, 93–136.

- Geisler, S.; Quix, C.; Weber, S.; Jarke, M. Ontology-Based Data Quality Management for Data Streams. J. Data Inf. Qual. 2016, 7, 18:1–18:34.

- Chiang, F.; Sitaramachandran, S. A Data Quality Framework for Customer Relationship Analytics. In Proceedings of the WISE 2015 16th International Conference on Web Information Systems Engineering, Miami, FL, USA, 1–3 November 2015; Wang, J., Cellary, W., Wang, D., Wang, H., Chen, S.C., Li, T., Zhang, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 366–378.

- Galhardas, H.; Florescu, D.; Shasha, D.; Simon, E. An extensible Framework for Data Cleaning. In Proceedings of the 16th International Conference on Data Engineering, Washington, DC, USA, 28 February–3 March 2000; p. 312.

- Sampaio, S.D.F.M.; Dong, C.; Sampaio, P. DQ2S—A framework for data quality-aware information management. Expert Syst. Appl. 2015, 42, 8304–8326.

- Li, W.; Lei, L. An Object-Oriented Framework for Data Quality Management of Enterprise Data Warehouse. In Proceedings of the 9th Pacific Rim International Conference on Artificial Intelligence Trends in Artificial Intelligence (PRICAI 2006), Guilin, China, 7–11 August 2006; Yang, Q., Webb, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1125–1129.

- Taleb, I.; Dssouli, R.; Serhani, M.A. Big Data Pre-processing: A Quality Framework. In Proceedings of the 2015 IEEE International Congress on Big Data, New York, NY, USA, 27 June–2 July 2015; pp. 191–198.

- Reimer, A.P.; Milinovich, A.; Madigan, E.A. Data quality assessment framework to assess electronic medical record data for use in research. Int. J. Med. Inform. 2016, 90, 40–47.

- Almutiry, O.; Wills, G.; Alwabel, A. Toward a framework for data quality in cloud-based health information system. In Proceedings of the International Conference on Information Society (i-Society 2013), Toronto, ON, Canada, 24–26 June 2013; pp. 153–157.

- Arts, D.G.; De Keizer, N.F.; Scheffer, G.J. Defining and improving data quality in medical registries: A literature review, case study, and generic framework. J. Am. Med. Inform. Assoc. 2002, 9, 600–611.

- Myrseth, P.; Stang, J.; Dalberg, V. A data quality framework applied to e-government metadata: A prerequsite to establish governance of interoperable e-services. In Proceedings of the 2011 International Conference on E-Business and E-Government (ICEE), Maui, Hawaii, 19–24 June 2011; pp. 1–4.

- Vetro, A.; Canova, L.; Torchiano, M.; Minotas, C.O.; Iemma, R.; Morando, F. Open data quality measurement framework: Definition and application to Open Government Data. Gov. Inf. Q. 2016, 33, 325–337.

- Panahy, P.H.S.; Sidi, F.; Affendey, L.S.; Jabar, M.A.; Ibrahim, H.; Mustapha, A. A Framework to Construct Data Quality Dimensions Relationships. Indian J. Sci. Technol. 2013, 6, 4422–4431.

- Wang, R.Y.; Storey, V.C.; Firth, C.P. A framework for analysis of data quality research. IEEE Trans. Knowl. Data Eng. 1995, 7, 623–640.

- Corrales, D.C.; Corrales, J.C.; Ledezma, A. How to Address the Data Quality Issues in Regression Models: A Guided Process for Data Cleaning. Symmetry 2018, 10.

- Rasta, K.; Nguyen, T.H.; Prinz, A. A framework for data quality handling in enterprise service bus. In Proceedings of the 2013 Third International Conference on Innovative Computing Technology (INTECH), London, UK, 29–31 August 2013; pp. 491–497.

- Olson, D.L.; Delen, D. Advanced Data Mining Techniques; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.

- Schutt, R.; O’Neil, C. Doing Data Science: Straight Talk from the Frontline; O’Reilly Media, Inc.: Newton, MA, USA, 2013.

- Wang, X.; Hamilton, H.J.; Bither, Y. An Ontology-Based Approach to Data Cleaning; Technical Report CS-2005-05; Department of Computer Science, University of Regina: Regina, SK, Canada, 2005.

- Almeida, R.; Oliveira, P.; Braga, L.; Barroso, J. Ontologies for Reusing Data Cleaning Knowledge. In Proceedings of the 2012 IEEE Sixth International Conference on Semantic Computing, Palermo, Italy, 19–21 September 2012; pp. 238–241.

- Brüggemann, S. Rule Mining for Automatic Ontology Based Data Cleaning. In Proceedings of the 10th Asia-Pacific Web Conference on Progress in WWW Research and Development, Shenyang, China, 26–28 April 2008; Zhang, Y., Yu, G., Bertino, E., Xu, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 522–527.

- Kedad, Z.; Métais, E. Ontology-Based Data Cleaning. In Natural Language Processing and Information Systems, Proceedings of the 6th International Conference on Applications of Natural Language to Information Systems, NLDB 2002, Stockholm, Sweden, 27–28 June 2002; Andersson, B., Bergholtz, M., Johannesson, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 137–149.

- Johnson, S.G.; Speedie, S.; Simon, G.; Kumar, V.; Westra, B.L. A Data Quality Ontology for the Secondary Use of EHR Data. AMIA Ann. Symp. Proc. 2015, 2015, 1937–1946.

- Abarza, R.G.; Motz, R.; Urrutia, A. Quality Assessment Using Data Ontologies. In Proceedings of the 2014 33rd International Conference of the Chilean Computer Science Society (SCCC), Talca, Chile, 8–14 November 2014; pp. 30–33.

- Da Silva Jacinto, A.; da Silva Santos, R.; de Oliveira, J.M.P. Automatic and semantic pre-Selection of features using ontology for data mining on datasets related to cancer. In Proceedings of the International Conference on Information Society (i-Society 2014), London, UK, 10–12 November 2014; pp. 282–287.

- Garcia, L.F.; Graciolli, V.M.; Ros, L.F.D.; Abel, M. An Ontology-Based Conceptual Framework to Improve Rock Data Quality in Reservoir Models. In Proceedings of the 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), San Jose, CA, USA, 6–8 November 2016; pp. 1084–1088.

- Coulet, A.; Smail-Tabbone, M.; Benlian, P.; Napoli, A.; Devignes, M.D. Ontology-guided data preparation for discovering genotype-phenotype relationships. BMC Bioinform. 2008, 9, S3.

- Jabareen, Y. Building a conceptual framework: Philosophy, definitions, and procedure. Int. J. Qual. Methods 2009, 8, 49–62.

- Guba, E.G.; Lincoln, Y. Competing paradigms in qualitative research. Handb. Qual. Res. 1994, 2, 105.

- Corrales, D.C.; Ledezma, A.; Corrales, J.C. A systematic review of data quality issues in knowledge discovery tasks. Rev. Ing. Univ. Medel. 2016, 15.

- Xiong, H.; Pandey, G.; Steinbach, M.; Kumar, V. Enhancing data analysis with noise removal. IEEE Trans. Knowl. Data Eng. 2006, 18, 304–319.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly Detection: A Survey. ACM Comput. Surv. 2009, 41, 15:1–15:58.

- Aydilek, I.B.; Arslan, A. A hybrid method for imputation of missing values using optimized fuzzy c-means with support vector regression and a genetic algorithm. Inf. Sci. 2013, 233, 25–35.

- Hawkins, D.M. Identification of Outliers; Springer: Berlin, Germany, 1980; Volume 11.

- Barnett, V.; Lewis, T. Outliers in Statistical Data; Wiley: New York, NY, USA, 1994; Volume 3.

- Johnson, R.A.; Wichern, D.W. Applied Multivariate Statistical Analysis; Prentice-Hall: Upper Saddle River, NJ, USA, 2014; Volume 4.

- Khalid, S.; Khalil, T.; Nasreen, S. A survey of feature selection and feature extraction techniques in machine learning. In Proceedings of the Science and Information Conference (SAI), London, UK, 27–29 August 2014; pp. 372–378.

- Tang, J.; Alelyani, S.; Liu, H. Feature selection for classification: A review. In Data Classification: Algorithms and Applications; Chapman and Hall/CRC: Boca Raton, FL, USA, 2014; p. 37.

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Chairi, I.; Alaoui, S.; Lyhyaoui, A. Learning from imbalanced data using methods of sample selection. In Proceedings of the 2012 International Conference on Multimedia Computing and Systems (ICMCS), Tangier, Morocco, 10–12 May 2012; pp. 254–257.

- Bosu, M.F.; MacDonell, S.G. A Taxonomy of Data Quality Challenges in Empirical Software Engineering. In Proceedings of the 2013 22nd Australian Software Engineering Conference, Melbourne, Australia, 4–7 June 2013; pp. 97–106.

- Hakimpour, F.; Geppert, A. Resolving Semantic Heterogeneity in Schema Integration. In Proceedings of the International Conference on Formal Ontology in Information Systems, Ogunquit, ME, USA, 17–19 October 2001; ACM: New York, NY, USA, 2001; Volume 2001, pp. 297–308.

- Finger, M.; Silva, F.S.D. Temporal data obsolescence: Modelling problems. In Proceedings of the Fifth International Workshop on Temporal Representation and Reasoning (Cat. No. 98EX157), Sanibel Island, FL, USA, 16–17 May 1998; pp. 45–50.

- Maydanchik, A. Data Quality Assessment; Data Quality for Practitioners Series; Technics Publications: Bradley Beach, NJ, USA, 2007.

- Aljuaid, T.; Sasi, S. Proper imputation techniques for missing values in datasets. In Proceedings of the 2016 International Conference on Data Science and Engineering (ICDSE), Cochin, India, 23–25 August 2016; pp. 1–5.

- Strike, K.; Emam, K.E.; Madhavji, N. Software cost estimation with incomplete data. IEEE Trans. Softw. Eng. 2001, 27, 890–908.

- Magnani, M. Techniques for dealing with missing data in knowledge discovery tasks. Obtido 2004, 15, 2007.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying Density-Based Local Outliers; ACM Sigmod Record; ACM: New York, NY, USA, 2000; Volume 29, pp. 93–104.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD-96 Proceedings), Portland, OR, USA, 2–4 August 1996; pp. 226–231.

- Kriegel, H.P.; Zimek, A.; Hubert, M.S. Angle-based outlier detection in high-dimensional data. In Proceedings of the 14th ACM SIGKDD International Conference On Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 444–452.

- Fayyad, U.M.; Piatetsky-Shapiro, G.; Smyth, P. Advances in Knowledge Discovery and Data Mining; Chapter from Data Mining to Knowledge Discovery: An Overview; American Association for Artificial Intelligence: Menlo Park, CA, USA, 1996; pp. 1–34.

- Ladha, L.; Deepa, T. Feature Selection Methods And Algorithms. Int. J. Comput. Sci. Eng. 2011, 3, 1787–1797.

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Blum, A.L.; Langley, P. Selection of relevant features and examples in machine learning. Artif. Intell. 1997, 97, 245–271.

- Jolliffe, I. Principal Component Analysis; Wiley Online Library: Hoboken, NJ, USA, 2002.

- Wang, J.; Xu, M.; Wang, H.; Zhang, J. Classification of Imbalanced Data by Using the SMOTE Algorithm and Locally Linear Embedding. In Proceedings of the 2006 8th international Conference on Signal Processing, Beijing, China, 16–20 November 2006; Volume 3.

- He, H.; Ma, Y. Imbalanced Learning: Foundations, Algorithms, and Applications; John Wiley and Sons: Hoboken, NJ, USA, 2013.

- Frenay, B.; Verleysen, M. Classification in the Presence of Label Noise: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 845–869.

- Huang, L.; Jin, H.; Yuan, P.; Chu, F. Duplicate Records Cleansing with Length Filtering and Dynamic Weighting. In Proceedings of the 2008 Fourth International Conference on Semantics, Knowledge and Grid, Beijing, China, 3–5 December 2008; pp. 95–102.

- Verbiest, N.; Ramentol, E.; Cornelis, C.; Herrera, F. Improving SMOTE with Fuzzy Rough Prototype Selection to Detect Noise in Imbalanced Classification Data. In Advances in Artificial Intelligence—IBERAMIA 2012, Proceedings of the 13th Ibero-American Conference on AI, Cartagena de Indias, Colombia, 13–16 November 2012; Pavón, J., Duque-Méndez, N.D., Fuentes-Fernández, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 169–178.

- Jacquemin, A.P.; Berry, C.H. Entropy measure of diversification and corporate growth. J. Ind. Econ. 1979, 27, 359–369.

- Asuncion, A.; Newman, D. UCI Machine Learning Repository. Irvine, CA: University of California, School of Information and Computer Science. 2007. Available online: http://www.ics.uci.edu/~mlearn/MLRepository.html (accessed on 15 March 2018).

- Candanedo, L.M.; Feldheim, V. Accurate occupancy detection of an office room from light, temperature, humidity and CO2 measurements using statistical learning models. Energy Build. 2016, 112, 28–39.

- Reiss, A.; Stricker, D. Creating and Benchmarking a New Dataset for Physical Activity Monitoring. In Proceedings of the 5th International Conference on PErvasive Technologies Related to Assistive Environments, Heraklion, Greece, 6–8 June 2012; ACM: New York, NY, USA, 2012; pp. 40:1–40:8.

- Bautista-Zambrana, M.R. Methodologies to Build Ontologies for Terminological Purposes. Procedia Soc. Behav. Sci. 2015, 173, 264–269.

- Gómez-Pérez, A.; Fernández-López, M.; Corcho, O. Ontological Engineering: With Examples from the Areas of Knowledge Management, e-Commerce and the Semantic Web. (Advanced Information and Knowledge Processing); Springer-Verlag New York, Inc.: Secaucus, NJ, USA, 2007.

- Horrocks, I.; Patel-Schneider, P.F.; Boley, H.; Tabet, S.; Grosof, B.; Dean, M. SWRL: A Semantic Web Rule Language Combining OWL and RuleML. 2004. Available online: https://www.w3.org/Submission/SWRL/ (accessed on 1 May 2018).

- Rodríguez, J.P.; Girón, E.J.; Corrales, D.C.; Corrales, J.C. A Guideline for Building Large Coffee Rust Samples Applying Machine Learning Methods. In Proceedings of the International Conference of ICT for Adapting Agriculture to Climate Change, Popayán, Colombia, 22–24 November 2017; pp. 97–110.

- Juddoo, S. Overview of data quality challenges in the context of Big Data. In Proceedings of the 2015 International Conference on Computing, Communication and Security (ICCCS), Pamplemousses, Mauritius, 4–5 December 2015; pp. 1–9.

- Cai, L.; Zhu, Y. The challenges of data quality and data quality assessment in the big data era. Data Sci. J. 2015, 14.

- Corrales, D.C.; Lasso, E.; Ledezma, A.; Corrales, J.C. Feature selection for classification tasks: Expert knowledge or traditional methods? J. Intell. Fuzzy Syst. 2018, 34, 2825–2835.

- Kuhn, M. Building predictive models in R using the caret package. J. Stat. Softw. 2008, 28, 1–26.

- Dong, Y.; Peng, C.Y.J. Principled missing data methods for researchers. SpringerPlus 2013, 2, 222.

- Schafer, J.L. Multiple imputation: A primer. Stat. Methods Med. Res. 1999, 8, 3–15.

- Grubbs, F.E. Procedures for detecting outlying observations in samples. Technometrics 1969, 11, 1–21.

- Rennie, J.D.M.; Shih, L.; Teevan, J.; Karger, D.R. Tackling the Poor Assumptions of Naive Bayes Text Classifiers. In Proceedings of the Twentieth International Conference on Machine Learning, Washington, DC, USA, 21–24 August 2003; pp. 616–623.

- Colonna, J.G.; Cristo, M.; Salvatierra, M.; Nakamura, E.F. An incremental technique for real-time bioacoustic signal segmentation. Expert Syst. Appl. 2015, 42, 7367–7374.

- Colonna, J.G.; Gama, J.; Nakamura, E.F. How to Correctly Evaluate an Automatic Bioacoustics Classification Method. In Advances in Artificial Intelligence; Luaces, O., Gámez, J.A., Barrenechea, E., Troncoso, A., Galar, M., Quintián, H., Corchado, E., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 37–47.

- Colonna, J.G.; Gama, J.; Nakamura, E.F. Recognizing Family, Genus, and Species of Anuran Using a Hierarchical Classification Approach. In Discovery Science; Calders, T., Ceci, M., Malerba, D., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 198–212.

- Thabtah, F. Autism Spectrum Disorder Screening: Machine Learning Adaptation and DSM-5 Fulfillment. In Proceedings of the 1st International Conference on Medical and Health Informatics, Taichung City, Taiwan, 20–22 May 2017; ACM: New York, NY, USA, 2017; pp. 1–6.

- Estrela da Silva, J.; Marques de Sá, J.P.; Jossinet, J. Classification of breast tissue by electrical impedance spectroscopy. Med. Biol. Eng. Comput. 2000, 38, 26–30.

- Ayres-de Campos, D.; Bernardes, J.; Garrido, A.; Marques-de Sa, J.; Pereira-Leite, L. SisPorto 2.0: A program for automated analysis of cardiotocograms. J. Matern.-Fetal Med. 2000, 9, 311–318.

- Yeh, I.C.; Lien, C.H. The comparisons of data mining techniques for the predictive accuracy of probability of default of credit card clients. Expert Syst. Appl. 2009, 36, 2473–2480.

- Reyes-Ortiz, J.L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-aware human activity recognition using smartphones. Neurocomputing 2016, 171, 754–767.

- Zhang, K.; Fan, W. Forecasting skewed biased stochastic ozone days: Analyses, solutions and beyond. Knowl. Inf. Syst. 2008, 14, 299–326.

- Abdelhamid, N.; Ayesh, A.; Thabtah, F. Phishing detection based Associative Classification data mining. Expert Syst. Appl. 2014, 41, 5948–5959.

- Zięba, M.; Tomczak, S.K.; Tomczak, J.M. Ensemble Boosted Trees with Synthetic Features Generation in Application to Bankruptcy Prediction. Expert Syst. Appl. 2016, 58, 93–101.

- Moro, S.; Cortez, P.; Rita, P. A data-driven approach to predict the success of bank telemarketing. Decis. Support Syst. 2014, 62, 22–31.

- Mohammad, R.M.; Thabtah, F.; McCluskey, L. Predicting phishing websites based on self-structuring neural network. Neural Comput. Appl. 2014, 25, 443–458.

- Mansouri, K.; Ringsted, T.; Ballabio, D.; Todeschini, R.; Consonni, V. Quantitative structure–activity relationship models for ready biodegradability of chemicals. J. Chem. Inf. Model. 2013, 53, 867–878.

- Fernandes, K.; Cardoso, J.S.; Fernandes, J. Transfer Learning with Partial Observability Applied to Cervical Cancer Screening. In Pattern Recognition and Image Analysis; Alexandre, L.A., Salvador Sánchez, J., Rodrigues, J.M.F., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 243–250.

- Fatlawi, H.K. Enhanced Classification Model for Cervical Cancer Dataset based on Cost Sensitive Classifier. Int. J. Comput. Tech. 2007, 4, 115–120.

- Kabiesz, J.; Sikora, B.; Sikora, M.; Wróbel, Ł. Application of rule-based models for seismic hazard prediction in coal mines. Acta Montan. Slovaca 2013, 18, 262–277. [Google Scholar]

- Da Rocha Neto, A.R.; de Alencar Barreto, G. On the Application of Ensembles of Classifiers to the Diagnosis of Pathologies of the Vertebral Column: A Comparative Analysis. IEEE Latin Am. Trans. 2009, 7, 487–496. [Google Scholar] [CrossRef]

- Da Rocha Neto, A.R.; Sousa, R.; de A. Barreto, G.; Cardoso, J.S. Diagnostic of Pathology on the Vertebral Column with Embedded Reject Option. In Pattern Recognition and Image Analysis; Vitrià, J., Sanches, J.M., Hernández, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 588–595. [Google Scholar]

- Tsanas, A.; Little, M.A.; Fox, C.; Ramig, L.O. Objective Automatic Assessment of Rehabilitative Speech Treatment in Parkinson’s Disease. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 181–190. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wang, G.; Song, Q.; Sun, H.; Zhang, X.; Xu, B.; Zhou, Y. A Feature Subset Selection Algorithm Automatic Recommendation Method. J. Artif. Int. Res. 2013, 47, 1–34. [Google Scholar]

- Reif, M.; Shafait, F.; Dengel, A. Meta2-features: Providing meta-learners more information. In Proceedings of the 35th German Conference on Artificial Intelligence, Saarbrücken, Germany, 24 September 2012; pp. 74–77. [Google Scholar]

| DQ Framework | Type | DQ Issues |
|---|---|---|
| [18,25,26,27,28,29] | Databases | Data freshness, integrity constraints, duplicate rows, missing values, inconsistencies, and overloaded table. |
| [30,31,32] | Databases in health systems | Illegible handwriting, incompleteness, unsuitable data format, heterogeneity. |
| [15,33,34,35,36,37] | Conceptual | Missing values, duplicate instances, outliers, high dimensionality, lack of meta-data and timeliness. |
| [38] | ESB | Heterogeneity, incompleteness, timeliness, and inconsistency. |

| Ontology | Type | DQ Issues |
|---|---|---|
| [41,42,43,44] | Databases | Typographical errors, synonymous record problem, missing data, inconsistent data entry format, domain violation, integrity constraint violation, semantic heterogeneity, invalid tuples. |
| [45,46,47] | Databases in health systems | Inconsistency, missing data. |
| [47,48,49] | Reservoir models, cancer treatment, genotype-phenotype relationships | Missing values, spelling and format errors, data heterogeneity. |

| Methodology | Methodology Step | Data Quality Issue |
|---|---|---|
| KDD [7] | Preprocessing, Data Cleaning | Noise |
| | | Missing Values |
| | | Outliers |
| | Data Reduction, Projection | High Dimensionality |
| CRISP-DM [8] | Verify Data Quality | Missing Values |
| | Clean Data | Missing Values |
| SEMMA [39] | Modify | Outliers |
| | | High Dimensionality |
| Data Science Process [40] | Clean Data | Missing Values |
| | | Duplicates |
| | | Outliers |

| Data Quality Issue (number of papers) | IEEE Xplore | Science Direct | Springer Link | | Total |
|---|---|---|---|---|---|
| Redundancy | 24 | 13 | 10 | 8 | 55 |
| Amount of data | 23 | 15 | 10 | 5 | 53 |
| Outliers | 28 | 10 | 7 | 2 | 47 |
| Missing values | 21 | 14 | 4 | 0 | 39 |
| Heterogeneity | 11 | 3 | 1 | 18 | 33 |
| Noise | 15 | 2 | 2 | 0 | 19 |
| Inconsistency | 9 | 5 | 0 | 2 | 16 |
| Timeliness | 2 | 0 | 1 | 1 | 4 |

| Noise Issue | Data Cleaning Task |
|---|---|
| Missing values | Imputation |
| Outliers | Outlier detection |
| High dimensionality | Dimensionality reduction |
| Imbalanced classes | Balanced Classes |
| Mislabelled class | Label correction |
| Duplicate instances | Remove duplicate instances |

| Name | Synonyms | Acronyms | Description |
|---|---|---|---|
| Dataset | – | – | Raw data used by classification tasks. |
| Attribute | Feature | Att | Feature of the application domain that belongs to the dataset. |
| Data Quality Issue | – | DQ Issue | Problems present in the data. |
| Data Cleaning Task | – | DC Task | Task for solving a data quality issue in the dataset. |
| Balanced Classes | – | – | Approach to address imbalanced classes. |
| Dimensionality Reduction | – | – | Approach to address high-dimensional spaces. |
| Imputation | – | – | Approach to address missing values. |
| Label Correction | – | – | Approach to address mislabelled instances. |
| Outliers Detection | – | – | Approach to address outliers. |
| Removing of Duplicate Instances | – | – | Approach to address duplicate instances. |

| Class Name | Class Attributes | Instances |
|---|---|---|
| Imputation | – | – |
| Deletion | – | list wise deletion, pair wise deletion |
| Hot Deck Imputation | – | last observation carried forward |
| Imputation Based on Missing Attributes | – | mean, median, mode |
| Imputation Based on Non-Missing Attributes | – | Bayesian linear regression, linear regression, logistic regression |
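
Several of the imputation instances listed above are simple enough to illustrate directly. The following Python sketch is a minimal illustration under stated assumptions, not the framework's implementation: it assumes a dataset held as a list of dictionaries in which `None` marks a missing value, and the function names (`listwise_deletion`, `last_observation_carried_forward`, `impute_column`) are hypothetical. The regression-based instances are not shown.

```python
from statistics import mean, median, mode
from typing import Optional

# Hypothetical representation: one dict per instance, None marks a missing value.
Row = dict[str, Optional[float]]


def listwise_deletion(rows: list[Row]) -> list[Row]:
    """Deletion: drop every instance that has at least one missing value."""
    return [r for r in rows if all(v is not None for v in r.values())]


def last_observation_carried_forward(rows: list[Row], attr: str) -> list[Row]:
    """Hot deck: fill a gap with the most recent observed value of the attribute.

    Leading gaps (no earlier observation) are left as None.
    """
    last: Optional[float] = None
    filled = []
    for r in rows:
        if r[attr] is None:
            filled.append({**r, attr: last})
        else:
            last = r[attr]
            filled.append(dict(r))
    return filled


def impute_column(rows: list[Row], attr: str, strategy: str = "mean") -> list[Row]:
    """Imputation based on the missing attribute: fill gaps with a statistic of its observed values."""
    observed = [r[attr] for r in rows if r[attr] is not None]
    fill = {"mean": mean, "median": median, "mode": mode}[strategy](observed)
    # Build new dicts so the caller's rows are not modified in place.
    return [{**r, attr: fill if r[attr] is None else r[attr]} for r in rows]
```

For example, with `rows = [{"hr": 80.0, "temp": 36.5}, {"hr": None, "temp": 37.0}, {"hr": 100.0, "temp": None}]`, mean imputation of `hr` fills the second instance with 90.0, while listwise deletion keeps only the first instance. The functions return new lists so that different strategies can be compared on the same input.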

| Class Name | Class Attributes | Instances |
|---|---|---|
| Outliers Detection | – | – |
| Density | – | dbscan, local outlier factor, optics |
| High Dimensional | – | angle based outlier degree, grid based subspace outlier, sub space outlier degree |
| Removing of Duplicate Instances | – | – |
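
The class Removing of Duplicate Instances lists no instances. Under the definitions used earlier (redundancy as duplicate records, and inconsistency as duplicate instances with different class labels), a minimal Python sketch could separate the two cases as follows. It assumes exact matching on the complete feature vector and instances given as `(features, label)` pairs; the function names are hypothetical and not taken from the ontology.

```python
from collections import defaultdict
from typing import Hashable, Sequence

# Hypothetical representation: each instance is (feature_vector, class_label).
Instance = tuple[Sequence[Hashable], Hashable]


def remove_duplicate_instances(instances: list[Instance]) -> list[Instance]:
    """Keep only the first occurrence of each identical (features, label) pair."""
    seen = set()
    kept = []
    for features, label in instances:
        key = (tuple(features), label)
        if key not in seen:
            seen.add(key)
            kept.append((features, label))
    return kept


def conflicting_labels(instances: list[Instance]) -> dict[tuple, list]:
    """Return feature vectors that occur with more than one class label.

    These are candidates for label correction rather than simple removal.
    Labels must be mutually comparable because they are returned sorted.
    """
    labels = defaultdict(set)
    for features, label in instances:
        labels[tuple(features)].add(label)
    return {f: sorted(ls) for f, ls in labels.items() if len(ls) > 1}
```

Keeping the two checks apart reflects the distinction drawn between Duplicate instances and Mislabelled class: an exact copy can be dropped, whereas a conflicting pair needs a decision about which label is correct.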

| Class Name | Class Attributes | Instances |
|---|---|---|
| Balanced Classes | – | – |
| OverSampling | – | random over sampling, smote |
| UnderSampling | – | condensed nearest neighbor rule, edited nearest neighbor rule, neighborhood cleaning rule, one side selection, random under sampling, tomek link |
| LabelCorrection | – | – |
| Classification | – | c4.5, k nearest neighbor, support vector machine |
| Threshold | – | entropy conditional distribution, least complex correct hypothesis |
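
The two simplest balancing instances above, random over sampling and random under sampling, can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and the `seed` parameter are assumptions, the target size (majority count for over sampling, minority count for under sampling) is one common choice rather than a setting stated here, and SMOTE and the neighbourhood-based under-sampling rules are not shown.

```python
import random
from collections import Counter


def random_over_sampling(X, y, seed=0):
    """Duplicate randomly chosen instances of smaller classes until every
    class has as many instances as the majority class."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for cls, n in counts.items():
        pool = [x for x, label in zip(X, y) if label == cls]
        for _ in range(target - n):
            X_out.append(rng.choice(pool))
            y_out.append(cls)
    return X_out, y_out


def random_under_sampling(X, y, seed=0):
    """Randomly keep, for every class, only as many instances as the
    minority class has (sampling without replacement)."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = min(counts.values())
    X_out, y_out = [], []
    for cls in counts:
        pool = [x for x, label in zip(X, y) if label == cls]
        for x in rng.sample(pool, target):
            X_out.append(x)
            y_out.append(cls)
    return X_out, y_out
```

Over sampling keeps all original data but repeats minority instances, which can encourage overfitting; under sampling avoids repetition at the cost of discarding majority-class data.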

| Class Name | Class Attributes | Instances |
|---|---|---|
| Dimensionality Reduction | – | – |
| Embedded | – | – |
| Filter | – | chi-squared test, gain ratio, information gain, Pearson correlation, spearman correlation |
| Projection | – | principal component analysis |
| Wrapper | – | sequential backward elimination, sequential forward selection |
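
A filter method scores each attribute independently of any learning algorithm. The following Python sketch uses the Pearson correlation instance listed above. It assumes a numerically encoded class (for example 0/1 for a binary problem), ranks attributes by the absolute value of the coefficient, and keeps the top `k`; the names `pearson` and `filter_select` are hypothetical.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either variable is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def filter_select(columns: dict[str, list[float]], target: list[float], k: int) -> list[str]:
    """Filter method: score every attribute by |r| with the class and keep the k best.

    No classifier is trained, which is why filters suit the case where the
    learning algorithm has not been chosen yet.
    """
    scores = {name: abs(pearson(col, target)) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]
```

Because each attribute is scored on its own, this kind of filter cannot detect redundancy between attributes or interactions among them; wrapper methods address that by evaluating subsets with the chosen learner, at a higher computational cost.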

| Scenario | Method |
|---|---|
| The data analyst has defined the learning algorithm for the classification task and has high computational resources. | Wrapper |
| The data analyst has defined the learning algorithm for the classification task, but computational resources are limited. | Embedded |
| The data analyst has not defined the learning algorithm for the classification task and has low computational resources. | Filter |
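
The scenario table can be read as a small decision rule. The Python sketch below only restates it; the function name and the boolean encoding of the two conditions are assumptions. The table lists no method for an analyst who has not chosen a learning algorithm but does have high computational resources, so the sketch returns `None` for that combination instead of guessing.

```python
from typing import Optional


def suggest_dimensionality_reduction(algorithm_defined: bool, high_resources: bool) -> Optional[str]:
    """Map the analyst's scenario to a dimensionality-reduction family.

    Returns None for the combination the scenario table does not list
    (learning algorithm not defined, high computational resources).
    """
    if algorithm_defined:
        # Wrapper methods retrain the chosen learner on many attribute subsets,
        # so they are reserved for the high-resource case.
        return "Wrapper" if high_resources else "Embedded"
    if not high_resources:
        return "Filter"
    return None
```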

| Subject | Instances |
|---|---|
| 1 | 376,383 |
| 2 | 446,908 |
| 3 | 252,805 |
| 4 | 329,506 |
| 5 | 374,679 |
| 6 | 361,746 |
| 7 | 313,545 |
| 8 | 407,867 |
| 9 | 8477 |

| Subject | Missing Values (%) | Attributes with More Than 65% Missing Values (% missing) |
|---|---|---|
| 1 | 1.95 | heart_rate: 90.86 |
| 2 | 2.07 | heart_rate: 90.87 |
| 3 | 1.83 | heart_rate: 90.86 |
| 4 | 2.01 | heart_rate: 90.86 |
| 5 | 2.00 | heart_rate: 90.86 |
| 6 | 1.92 | heart_rate: 90.86 |
| 7 | 1.96 | heart_rate: 90.86 |
| 8 | 2.10 | heart_rate: 90.88 |
| 9 | 1.91 | heart_rate: 90.84 |
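
The two columns above (an overall missing-value percentage per subject and the attributes above a 65% threshold) can be computed with a short Python sketch. It assumes that the overall percentage is taken over all cells (missing cells divided by instances times attributes), which the table does not state explicitly, that every instance has the same attributes, and that `None` marks a missing value; the function name `missing_value_report` is hypothetical.

```python
from typing import Optional

# Hypothetical representation: one dict per instance, None marks a missing value.
Row = dict[str, Optional[float]]


def missing_value_report(rows: list[Row], threshold: float = 65.0) -> tuple[float, dict[str, float]]:
    """Return the overall percentage of missing cells and the attributes whose
    own missing percentage exceeds `threshold` (both rounded to 2 decimals)."""
    attrs = list(rows[0])
    n = len(rows)
    # Percentage of instances with a missing value, per attribute.
    per_attr = {a: 100.0 * sum(r[a] is None for r in rows) / n for a in attrs}
    # Percentage of missing cells over the whole dataset.
    total_missing = sum(r[a] is None for r in rows for a in attrs)
    overall = 100.0 * total_missing / (n * len(attrs))
    flagged = {a: round(p, 2) for a, p in per_attr.items() if p > threshold}
    return round(overall, 2), flagged
```

This separation explains how a subject can have a low overall percentage (around 2%) while a single attribute such as `heart_rate` is missing in roughly 90% of its instances.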

| Subject | Potential Outliers | Lower Limit | Upper Limit |
|---|---|---|---|
| 1 | 50,961 | 0.956 | 1.059 |
| 2 | 38,454 | 0.878 | 1.203 |
| 3 | 20,706 | 0.884 | 1.191 |
| 4 | 27,618 | 0.881 | 1.198 |
| 5 | 32,607 | 0.888 | 1.182 |
| 6 | 31,079 | 0.873 | 1.214 |
| 7 | 25,329 | 0.879 | 1.204 |
| 8 | 34,068 | 0.876 | 1.209 |
| 9 | 830 | 0.875 | 1.206 |
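
The table reports lower and upper limits without stating how they were derived. One common way to obtain such limits is Tukey's fences, which flag values outside $[Q_1 - k\,\mathrm{IQR},\; Q_3 + k\,\mathrm{IQR}]$ with $k = 1.5$. The Python sketch below assumes that rule; it is an illustration, not necessarily the procedure behind the table. It uses `statistics.quantiles` with `method="inclusive"`, and other quartile conventions give slightly different limits. The function names are hypothetical.

```python
from statistics import quantiles


def tukey_fences(values: list[float], k: float = 1.5) -> tuple[float, float]:
    """Lower and upper limits Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def potential_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Values that fall strictly outside the fences."""
    lower, upper = tukey_fences(values, k)
    return [v for v in values if v < lower or v > upper]
```

For example, for `[1.0, 2.0, 3.0, 4.0, 5.0, 100.0]` the inclusive quartiles are 2.25 and 4.75, so the limits are -1.5 and 8.5 and only 100.0 is flagged. Values outside the limits are treated as *potential* outliers; whether to remove them is a separate decision.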

| Classifier | Physical Activity Monitoring | Our Approach |
|---|---|---|
| Decision tree (C4.5) | 95.54 | 99.30 |
| Boosted C4.5 decision tree | 99.74 | 99.99 |
| Bagging C4.5 decision tree | 96.60 | 99.60 |
| Naive Bayes | 94.19 | 76.51 |
| K nearest neighbor | 99.46 | 99.97 |

| Approach | Classifier | Test 1 | Test 2 |
|---|---|---|---|
| Our approach | RF | 94.90 | 99.25 |
| | GBM | 94.78 | 96.68 |
| | CART | 97.75 | 98.70 |
| | LDA | 97.90 | 98.68 |
| Occupancy detection (original attributes) | RF | 95.05 | 97.16 |
| | GBM | 93.06 | 95.14 |
| | CART | 95.57 | 96.47 |
| | LDA | 97.90 | 98.76 |
| Occupancy detection (preprocessing attributes) | RF | 95.53 | 98.06 |
| | GBM | 95.76 | 96.10 |
| | CART | 94.52 | 96.52 |
| | LDA | 97.90 | 99.33 |

| Dataset | Work | Approach | Classifier | Value (%) | Measure |
|---|---|---|---|---|---|
| Anuran families calls | [99,100,101] | DQF4CT | MLP | 97.6 | Precision |
| | | Authors | MLP | 99.0 | |
| Anuran species calls | [99,100,101] | DQF4CT | MLP | 98.9 | Precision |
| | | Authors | MLP | 99.0 | |
| Autism spectrum disorder in adolescent | [102] | DQF4CT | RF | 99.8 | Precision |
| | | Authors | RF | 91.4 | |
| Autism spectrum disorder in adult | [102] | DQF4CT | C4.5 | 99.1 | Precision |
| | | Authors | C4.5 | 89.8 | |
| Autism spectrum disorder in child | [102] | DQF4CT | RF | 99.7 | Precision |
| | | Authors | RF | 85.6 | |
| Breast tissue detection | [103] | DQF4CT | LDA | 92.2 | AUC |
| | | Authors | LDA | 87.3 | |
| Cardiotocography | [104] | DQF4CT | C4.5 | 98.6 | Precision |
| | | Authors | C4.5 | 97.6 | |
| Default of credit card clients | [105] | DQF4CT | KNN | 83.6 | AUC |
| | | Authors | KNN | 68.0 | |
| Human activity recognition (smartphones) | [106] | DQF4CT | SVM | 98.4 | Precision |
| | | Authors | SVM | 92.4 | |
| Ozone level detection 1 h | [107] | DQF4CT | Bagging C4.5 | 94.1 | Precision |
| | | Authors | Bagging C4.5 | 18.5 | |
| Ozone level detection 8 h | [107] | DQF4CT | Bagging C4.5 | 91.3 | Precision |
| | | Authors | Bagging C4.5 | 41.6 | |
| Phishing detection | [108] | DQF4CT | CART | 83.8 | Precision |
| | | Authors | CART | 90.0 | |

| Dataset | Work | Approach | Classifier | Value (%) | Measure |
|---|---|---|---|---|---|
| Polish companies bankruptcy 1 year | [109] | DQF4CT | C4.5 | 77.0 | AUC |
| | | Authors | C4.5 | 71.7 | |
| Polish companies bankruptcy 2 years | [109] | DQF4CT | C4.5 | 79.3 | AUC |
| | | Authors | C4.5 | 65.3 | |
| Polish companies bankruptcy 3 years | [109] | DQF4CT | C4.5 | 80.5 | AUC |
| | | Authors | C4.5 | 70.1 | |
| Polish companies bankruptcy 4 years | [109] | DQF4CT | C4.5 | 80.2 | AUC |
| | | Authors | C4.5 | 69.1 | |
| Polish companies bankruptcy 5 years | [109] | DQF4CT | C4.5 | 83.4 | AUC |
| | | Authors | C4.5 | 76.1 | |
| Portuguese bank telemarketing | [110] | DQF4CT | MLP | 92.6 | AUC |
| | | Authors | MLP | 92.9 | |
| Predicting phishing websites | [111] | DQF4CT | MLP | 98.0 | Precision |
| | | Authors | MLP | 94.0 | |
| Ready biodegradability of chemicals | [112] | DQF4CT | Boosting C4.5 | 95.5 | AUC |
| | | Authors | Boosting C4.5 | 92.1 | |
| Risk factors cervical cancer | [113,114] | DQF4CT | C4.5 | 93.2 | AUC |
| | | Authors | C4.5 | 53.3 | |
| Seismic hazard prediction in coal mines | [115] | DQF4CT | CART | 93.7 | Precision |
| | | Authors | CART | 87.0 | |
| Vertebral column diagnostic | [116,117] | DQF4CT | MLP | 85.5 | Precision |
| | | Authors | MLP | 83.0 | |
| Vertebral column injury | [116,117] | DQF4CT | SVM | 88.2 | Precision |
| | | Authors | SVM | 82.1 | |
| Voice rehabilitation treatment | [118] | DQF4CT | SVM | 88.1 | Precision |
| | | Authors | SVM | 74.8 | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Corrales, D.C.; Ledezma, A.; Corrales, J.C. From Theory to Practice: A Data Quality Framework for Classification Tasks. Symmetry 2018, 10, 248. https://doi.org/10.3390/sym10070248
