Incorporation of Remote PM2.5 Concentrations into the Downscaler Model for Spatially Fused Air Quality Surfaces

Abstract

1. Introduction

2. Methods

3. Data Sources

4. Results

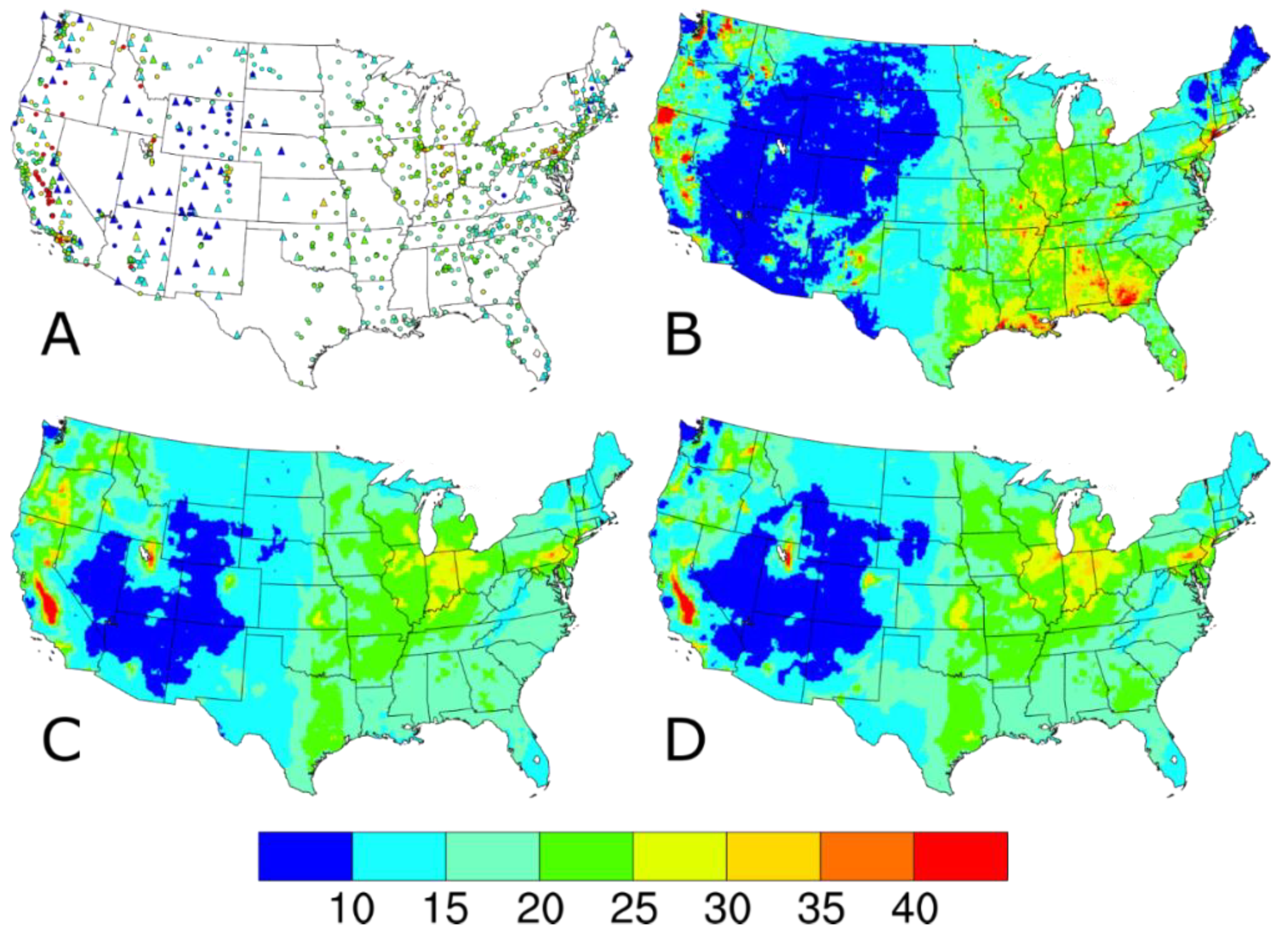

4.1. Concentrations from Downscaler Model Runs

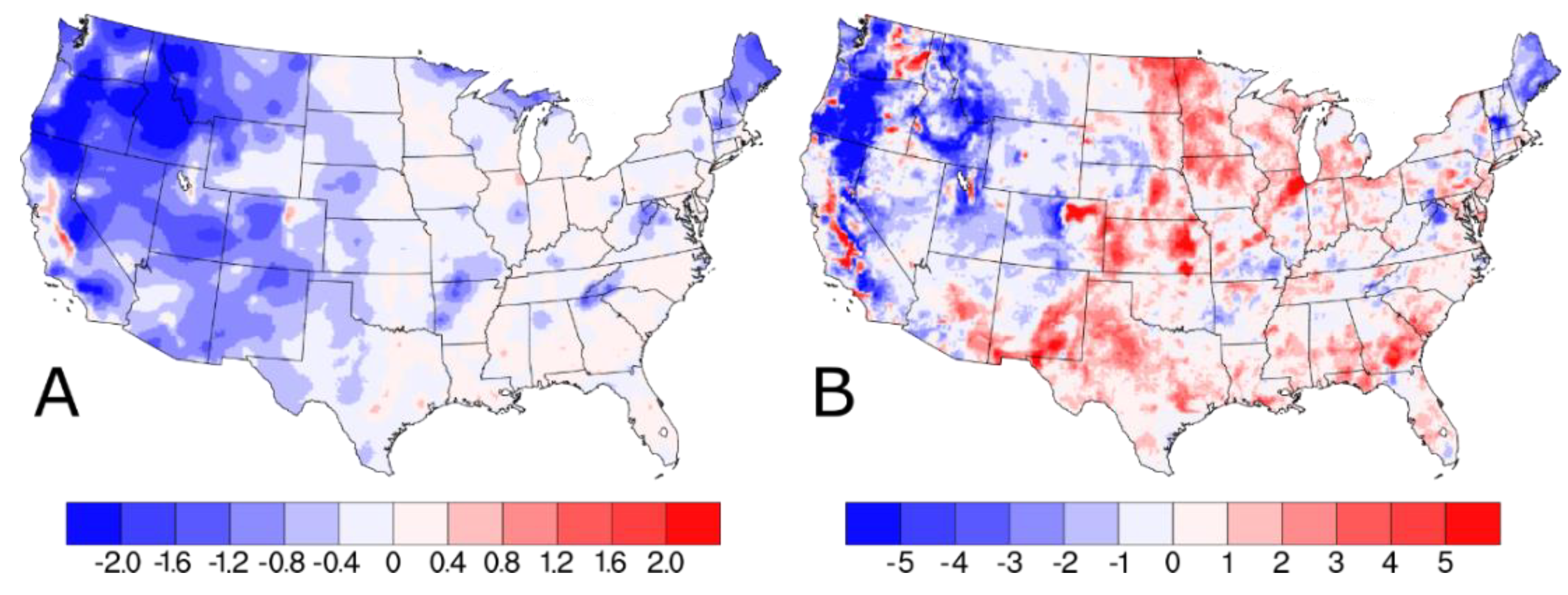

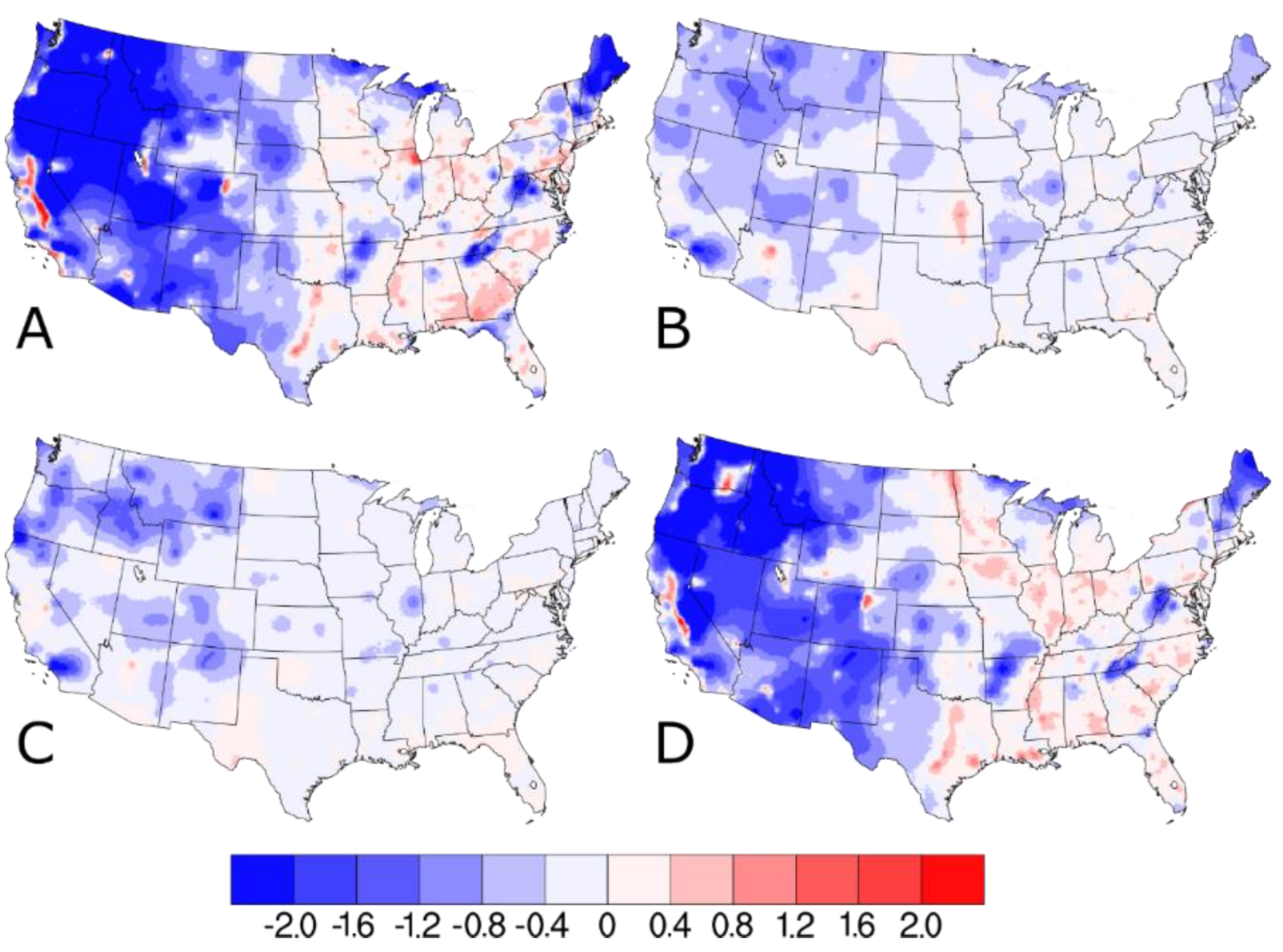

4.2. Differences in Concentrations between Downscaler Model Runs

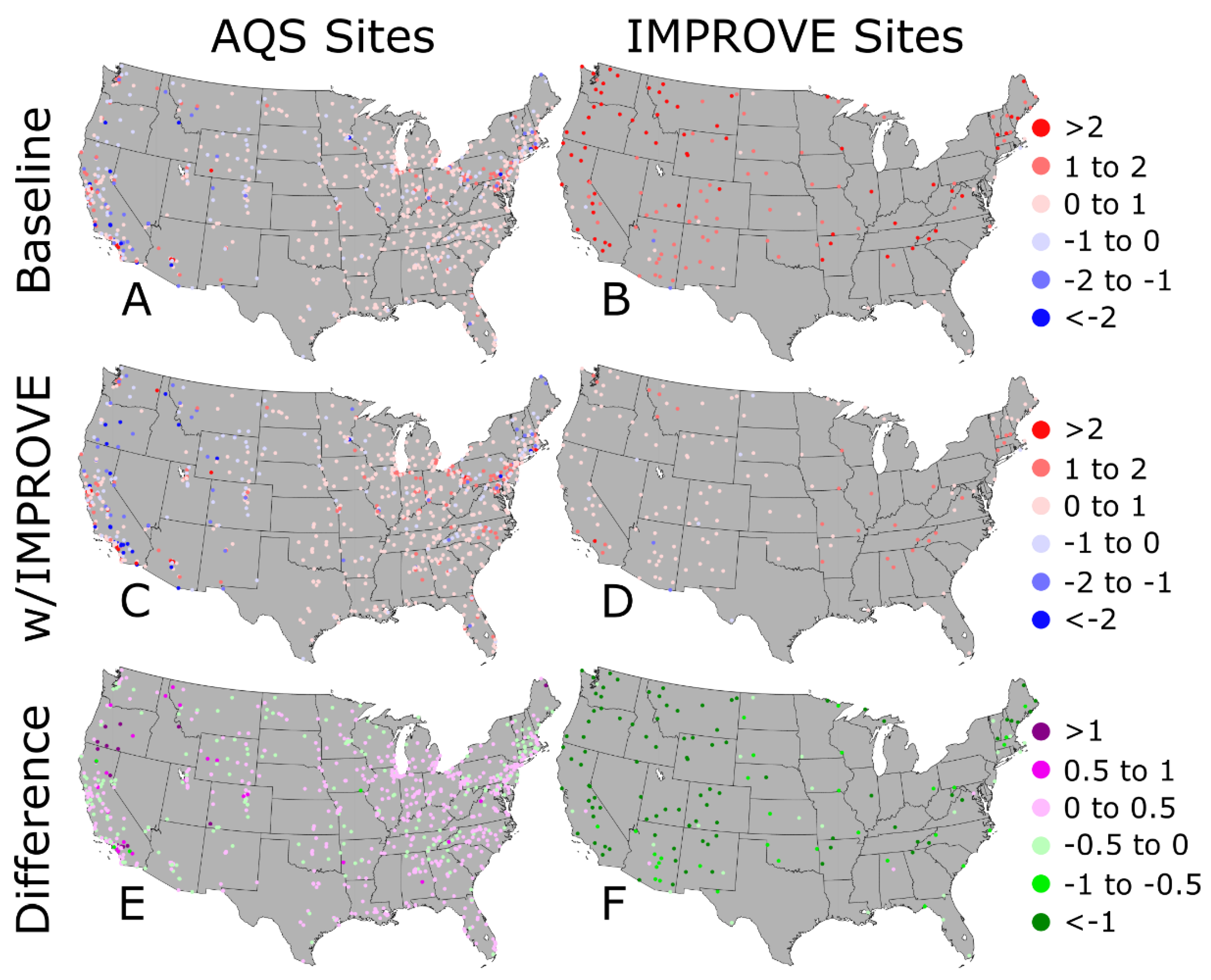

4.3. Downscaler Evaluation

4.4. Case Study for Denver, CO

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Simulation | Selection | N | Mean Bias (µg/m³) | Mean Squared Error ((µg/m³)²) | Coverage |
|---|---|---|---|---|---|
| Baseline | AQS | 18,518 | 0.29 (0.25, 0.33) | 10.85 (9.86, 11.84) | 0.95 (0.95, 0.96) |
| w/IMPROVE | All | 20,257 | 0.28 (0.24, 0.32) | 11.51 (10.43, 12.60) | 0.95 (0.94, 0.95) |
| w/IMPROVE | AQS | 17,317 | 0.18 (0.14, 0.23) | 11.87 (10.65, 13.09) | 0.95 (0.95, 0.95) |
| Baseline | Common | 1889 | 0.35 (0.24, 0.46) | 8.67 (7.42, 9.92) | 0.95 (0.94, 0.96) |
| w/IMPROVE | Common | 1889 | 0.30 (0.19, 0.41) | 8.69 (7.41, 9.98) | 0.96 (0.95, 0.97) |
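The table reports, for each simulation and site selection, the number of evaluated observations N, the mean bias, the mean squared error and the coverage, each followed by an interval in parentheses. The sketch below shows one way such summaries could be computed from cross-validation output. It is a minimal illustration under stated assumptions, not the authors' code: the sign convention for bias (predicted minus observed), the definition of coverage as the share of held-out observations falling inside the model's prediction interval, the normal-approximation interval, and the function names `evaluate` and `mean_with_ci` are all assumptions introduced here.

```python
import math
from statistics import mean, stdev


def mean_with_ci(values, z=1.96):
    """Sample mean with a normal-approximation interval: mean +/- z * SE.

    Assumption: observations are treated as independent; requires >= 2 values.
    """
    m = mean(values)
    se = stdev(values) / math.sqrt(len(values))
    return m, (m - z * se, m + z * se)


def evaluate(observed, predicted, lower, upper):
    """Summarize held-out predictions in the spirit of the table above.

    Assumptions (not taken from the paper):
    - bias is predicted minus observed, in the units of the inputs (e.g. ug/m3);
    - coverage is the fraction of observations inside [lower, upper].
    """
    errors = [p - o for o, p in zip(observed, predicted)]
    sq_errors = [e * e for e in errors]
    # 1.0 when the observation falls inside its prediction interval, else 0.0
    inside = [1.0 if lo <= o <= hi else 0.0
              for o, lo, hi in zip(observed, lower, upper)]
    return {
        "N": len(observed),
        "mean_bias": mean_with_ci(errors),
        "mse": mean_with_ci(sq_errors),
        "coverage": mean_with_ci(inside),
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only; not data from the study.
    observed = [8.0, 12.5, 6.1, 9.4]
    predicted = [8.6, 11.9, 6.8, 9.9]
    lower = [5.0, 9.0, 3.5, 6.5]
    upper = [12.0, 12.0, 10.0, 13.0]
    print(evaluate(observed, predicted, lower, upper))
```

With real data, the inputs would be the withheld monitor values together with the Downscaler predictions and interval bounds at the same locations and days. The normal-approximation interval ignores spatial and temporal correlation among errors; a block bootstrap over sites would be a more cautious alternative when that correlation is strong.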

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gantt, B.; McDonald, K.; Henderson, B.; Mannshardt, E. Incorporation of Remote PM2.5 Concentrations into the Downscaler Model for Spatially Fused Air Quality Surfaces. Atmosphere 2020, 11, 103. https://doi.org/10.3390/atmos11010103