Individual Building Extraction from TerraSAR-X Images Based on Ontological Semantic Analysis

Abstract

1. Introduction

- The method extracts individual buildings of different orientations and structures at the semantic knowledge level by employing an ontology model.

- Meaningful image objects are obtained with a segmentation method that accounts for the characteristics of SAR images; the topological and geometric characteristics of these objects are particularly advantageous for characterizing building primitives.

- The method can accurately extract individual buildings from a single SAR image without any ancillary data.

2. Modeling the Individual Buildings in SAR Images

2.1. Characteristic Analysis of Large Individual Buildings

2.2. Ontological Semantic Analysis

2.3. Some Restrictions

- (1) The resolution of the SAR data ranges from 0.5 to 2 m;

- (2) The large individual buildings are mainly large factory buildings and public buildings;

- (3) Buildings are assumed to have flat or gable roofs, and the minimum building size is about 250 pixels in meter-resolution SAR images;

- (4) Given the particular scattering model of individual buildings in VHR SAR images, an individual building is assumed to consist of building primitives (layover area, corner reflection, roof area, and shadow area).
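To put the 250-pixel minimum of restriction (3) in physical terms, it can be converted to a ground area. The relation below is a simple sketch that assumes square pixels whose spacing Δ equals the stated resolution; in practice pixel spacing and resolution need not coincide, and slant-range pixels must first be projected to ground range.

$$
A_{\min} = N_{\text{px}}\,\Delta^{2}, \qquad N_{\text{px}} = 250:\quad
\Delta = 0.5\ \text{m} \Rightarrow 62.5\ \text{m}^{2},\quad
\Delta = 1\ \text{m} \Rightarrow 250\ \text{m}^{2},\quad
\Delta = 2\ \text{m} \Rightarrow 1000\ \text{m}^{2}.
$$

At meter resolution, for example, the threshold corresponds to a footprint of roughly 10 m × 25 m.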

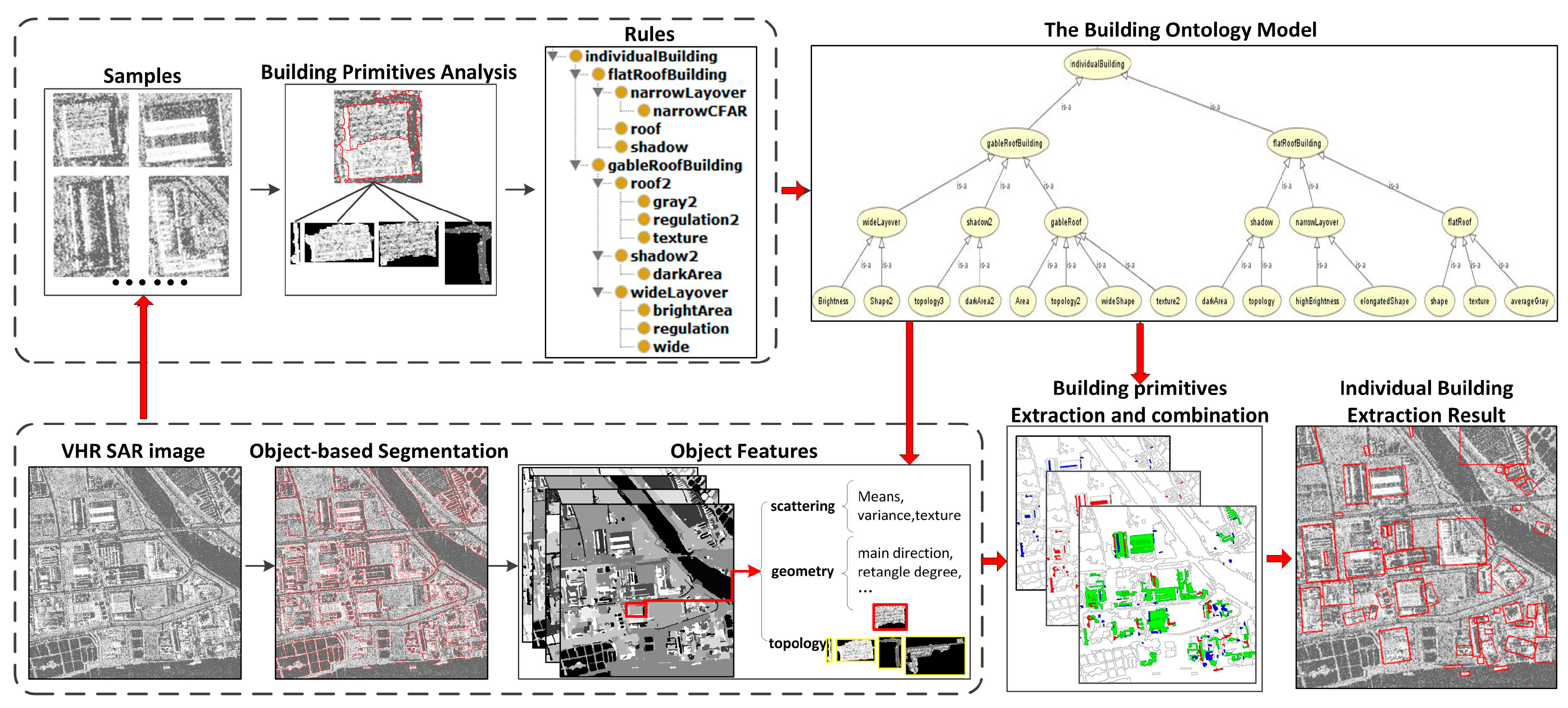

3. Proposed Method for Individual Building Extraction

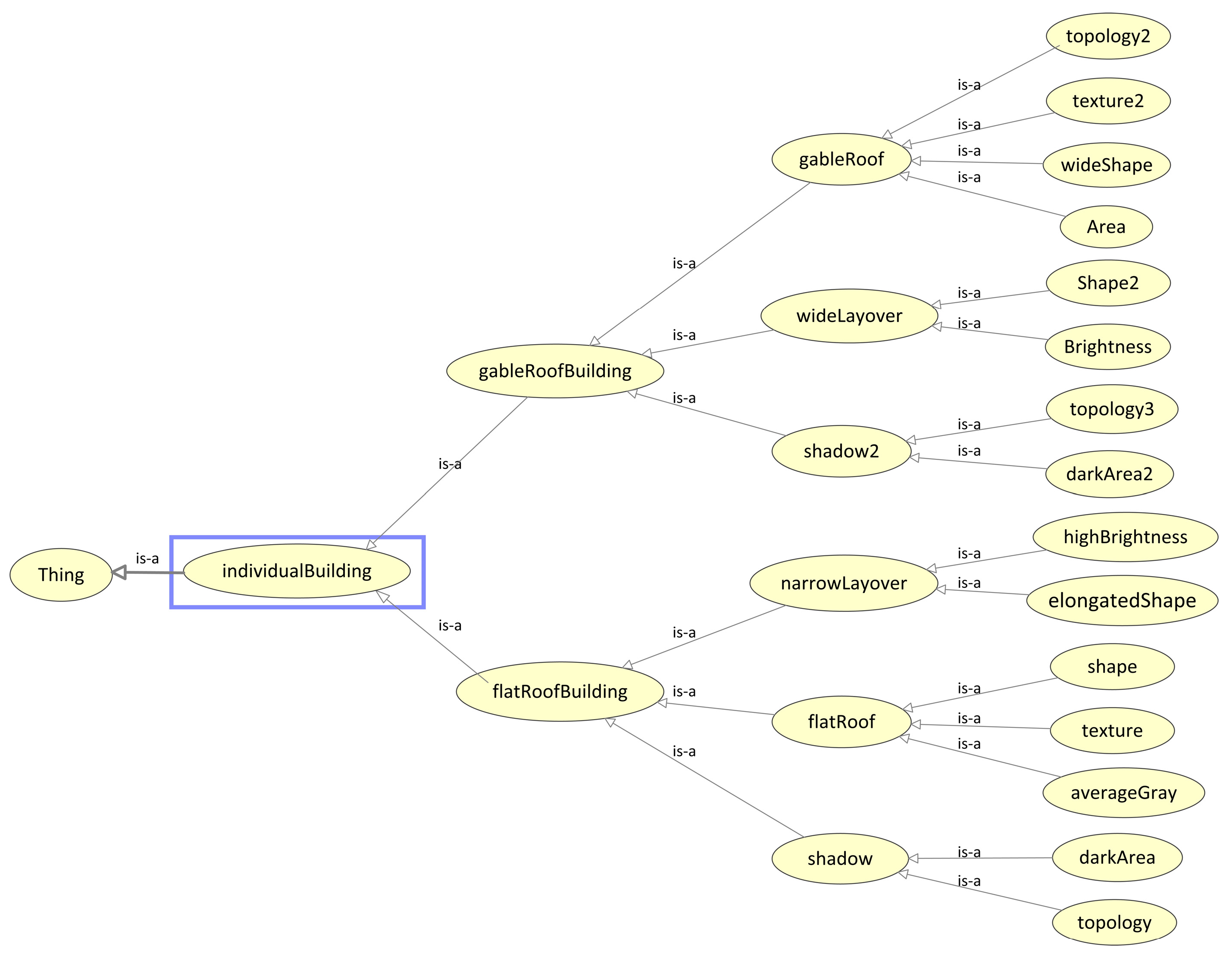

3.1. Ontology Model of Individual Buildings and Building Primitives

- (1) An individual building is made up of building primitives (layover area, corner reflection, roof area, and shadow area). The layover area and corner reflection must be identified within the bright areas of the SAR image, whereas the shadow area belongs to the dark areas.

- (2) The building orientation is determined by the direction of the layover area and falls into three main classes: parallel to the SAR flight direction, perpendicular to it, and inclined.

- (3) As a consequence of the SAR incidence angle, the roof and the dark area lie on a specific side of the bright area.

- (4) Individual buildings are divided into flat-roof and gable-roof buildings, a distinction determined mainly by the width of the bright areas.

- (5) After the building primitives are obtained, object topology information and imagery parameter information are used to aggregate the primitives into individual buildings.

- (6) Buildings are considered large if their planar area is at least 250 pixels in meter-resolution SAR images.
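Items (2) and (6) reduce to simple tests on an object's main direction and area. The sketch below is illustrative only: the 15° tolerance, the enum and the function names are hypothetical, and the angle is assumed to be measured between the major axis of the layover area and the SAR flight (azimuth) direction.

```python
from enum import Enum

# Hypothetical tolerance (degrees) for snapping an orientation to
# "parallel" or "perpendicular"; not a value taken from the paper.
ANGLE_TOLERANCE_DEG = 15.0
# Large-building threshold from item (6): at least 250 pixels.
MIN_BUILDING_PIXELS = 250


class Orientation(Enum):
    PARALLEL = "parallel to flight direction"
    PERPENDICULAR = "perpendicular to flight direction"
    INCLINED = "inclined"


def classify_orientation(layover_angle_deg: float) -> Orientation:
    """Classify a layover area by the angle (degrees) between its major
    axis and the SAR flight direction (hypothetical helper)."""
    # An axis has no sign: fold the angle into [0, 90] degrees,
    # e.g. an axis at 170 degrees is 10 degrees from the flight direction.
    a = abs(layover_angle_deg) % 180.0
    a = min(a, 180.0 - a)
    if a <= ANGLE_TOLERANCE_DEG:
        return Orientation.PARALLEL
    if a >= 90.0 - ANGLE_TOLERANCE_DEG:
        return Orientation.PERPENDICULAR
    return Orientation.INCLINED


def is_large_building(area_pixels: int) -> bool:
    """Item (6): planar area of at least 250 pixels."""
    return area_pixels >= MIN_BUILDING_PIXELS
```

Because the feature table measures the main direction from the range direction, that value would first be converted as 90° minus the main direction before calling `classify_orientation`. The tolerance controls how strictly near-parallel and near-perpendicular buildings are separated from inclined ones.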

- Bright area: Direction ∩ High brightness ∩ Rectangularity ∩ Area size;

- Roof: Specific side of the bright area ∩ Texture ∩ Area size ∩ Shape;

- Dark area: Low brightness ∩ Shape ∩ Specific side of the roof ∩ Area size.
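Each primitive definition above is a conjunction of conditions, so it maps directly onto a boolean predicate over object features. In this sketch every threshold is a hypothetical placeholder; "Direction" is read as the object being clearly elongated, "Texture" as GLCM homogeneity, and "specific side" as adjacent and on the far-range side, assuming range increases with the column index. None of these readings is confirmed by the text.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical thresholds, for illustration only (not values from the paper).
HIGH_BRIGHTNESS = 0.6        # normalized mean intensity of a bright area
LOW_BRIGHTNESS = 0.15        # normalized mean intensity of a dark area
MIN_ELONGATION = 3.0         # MBR length / width, read as "Direction"
MIN_RECTANGULARITY = 0.7
MIN_ROOF_HOMOGENEITY = 0.5   # GLCM homogeneity, read as "Texture"
MIN_PRIMITIVE_AREA = 30      # pixels


@dataclass
class ImageObject:
    label: int
    mean: float              # normalized mean intensity in [0, 1]
    homogeneity: float
    area: int                # pixels
    rectangularity: float
    elongation: float
    range_center: float      # centroid coordinate along range (columns)
    neighbors: Set[int] = field(default_factory=set)


def on_far_range_side(obj: ImageObject, ref: ImageObject) -> bool:
    # Assumes range grows with the column index (sensor on the left), so the
    # far-range side of ``ref`` is the side with larger range coordinates.
    return obj.label in ref.neighbors and obj.range_center > ref.range_center


def is_bright_area(o: ImageObject) -> bool:
    # Direction ∩ High brightness ∩ Rectangularity ∩ Area size
    return (o.elongation >= MIN_ELONGATION and o.mean >= HIGH_BRIGHTNESS
            and o.rectangularity >= MIN_RECTANGULARITY
            and o.area >= MIN_PRIMITIVE_AREA)


def is_roof(o: ImageObject, bright: ImageObject) -> bool:
    # Specific side of the bright area ∩ Texture ∩ Area size ∩ Shape
    return (on_far_range_side(o, bright)
            and o.homogeneity >= MIN_ROOF_HOMOGENEITY
            and o.area >= MIN_PRIMITIVE_AREA
            and o.rectangularity >= MIN_RECTANGULARITY)


def is_dark_area(o: ImageObject, roof: ImageObject) -> bool:
    # Low brightness ∩ Shape ∩ Specific side of the roof ∩ Area size
    return (o.mean <= LOW_BRIGHTNESS
            and o.rectangularity >= MIN_RECTANGULARITY
            and on_far_range_side(o, roof)
            and o.area >= MIN_PRIMITIVE_AREA)
```

Writing the rules as independent predicates keeps each ontology concept testable on its own, and the thresholds can be tuned per data set without touching the rule structure.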

- Flat-roof building: Narrow layover area ∩ Roof ∩ Total area ∩ Shadow position (Auxiliary);

- Gable-roof building: Wide layover area ∩ Roof ∩ Total area ∩ Shadow position (Auxiliary).
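The building-level rules, together with item (5), can be sketched as two steps: group adjacent primitives into candidate buildings using the adjacency (topological) feature, then label each candidate by the width of its layover area. The width threshold, the function names, and the choice to count layover plus roof pixels as the "total area" are assumptions for illustration; the shadow is only reported as supporting evidence, mirroring its "(Auxiliary)" role in the rules.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

WIDE_LAYOVER_PX = 12.0     # hypothetical narrow/wide layover boundary (pixels)
MIN_BUILDING_PIXELS = 250  # large-building threshold from the ontology


@dataclass
class Primitive:
    label: int
    area: int              # pixels
    width: float = 0.0     # minimum-bounding-rectangle width, pixels


def aggregate(primitive_labels: Set[int],
              adjacency: Dict[int, Set[int]]) -> List[Set[int]]:
    """Group primitive labels into connected components of the object
    adjacency graph; each component is one candidate building."""
    seen: Set[int] = set()
    groups: List[Set[int]] = []
    for start in sorted(primitive_labels):
        if start in seen:
            continue
        seen.add(start)
        stack, group = [start], set()
        while stack:
            cur = stack.pop()
            group.add(cur)
            for nb in adjacency.get(cur, ()):
                # Only follow edges that lead to other building primitives.
                if nb in primitive_labels and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        groups.append(group)
    return groups


def classify_building(layover: Primitive, roof: Primitive,
                      shadow: Optional[Primitive] = None
                      ) -> Optional[Tuple[str, bool]]:
    """Return (roof type, shadow found), or None if the candidate is too small."""
    # Assumption: "total area" = layover + roof pixels (the shadow is ground).
    if layover.area + roof.area < MIN_BUILDING_PIXELS:
        return None
    kind = "gable-roof" if layover.width >= WIDE_LAYOVER_PX else "flat-roof"
    # The shadow only supports the decision; it never changes the label.
    return kind, shadow is not None
```

Connected components are the simplest way to use adjacency for aggregation; when two buildings touch, their primitives fall into one component, which is one way "merged" results of the kind counted in the evaluation table can arise.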

3.2. Object-Based Analysis of High Resolution SAR Image

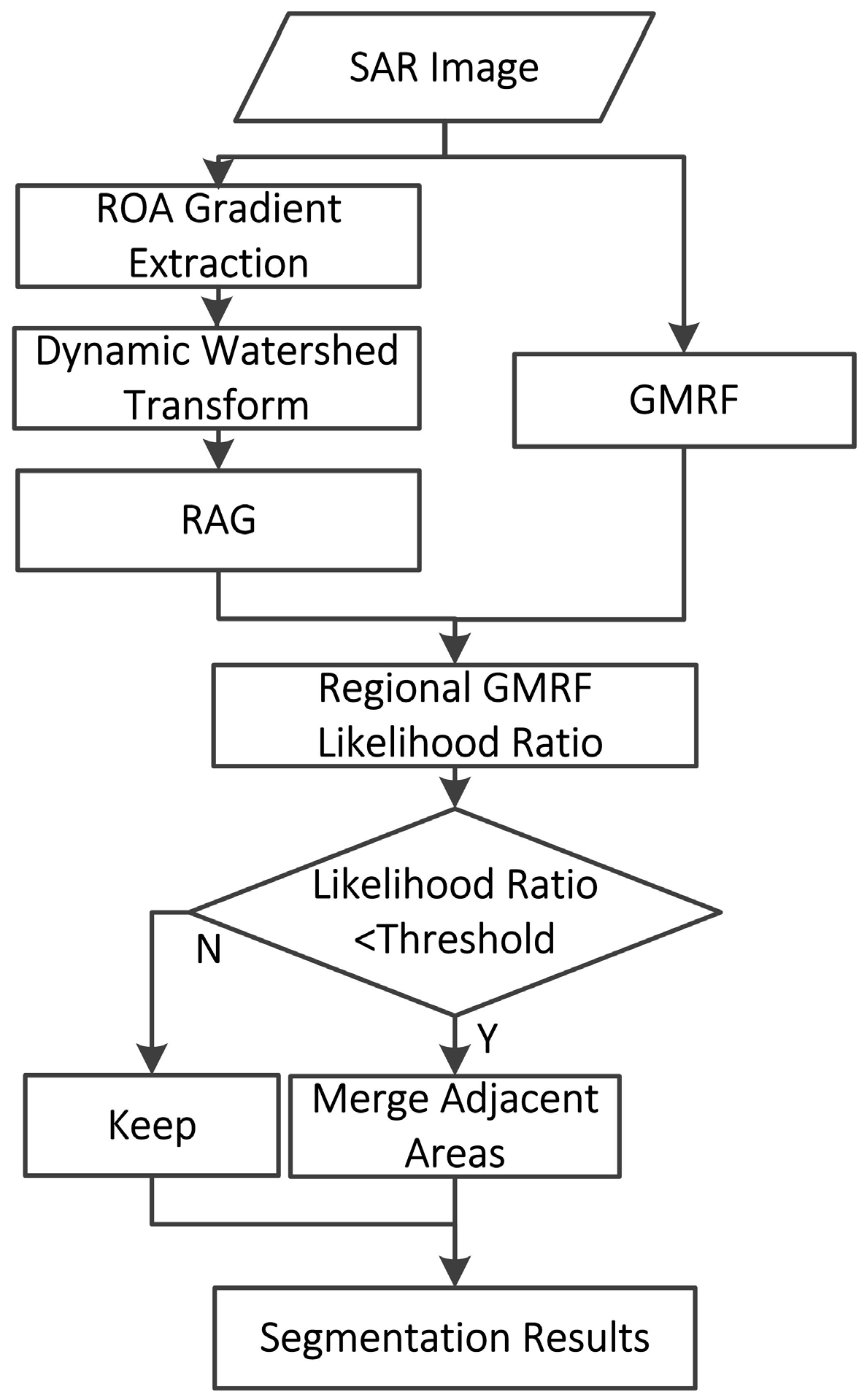

3.2.1. SAR Image Segmentation

3.2.2. Characteristics of Objects

3.3. Individual Building Extraction Based on Ontological Semantic Analysis

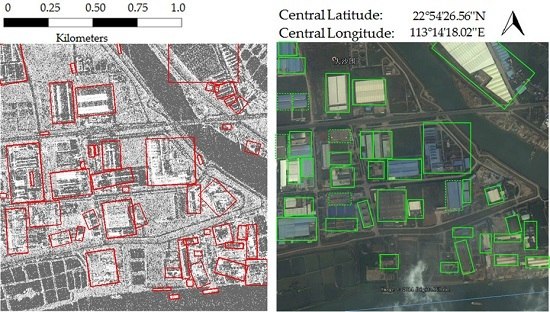

4. Experimental Results

4.1. Properties of Data Sets and Experimental Setting

4.2. Results and Evaluations

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Aspect Angle | Scattering Model | Building Sample | Corresponding Optical Image |
|---|---|---|---|
| φ = 90° |  |  |  |
| φ = 90° |  |  |  |
| φ = 0° |  |  |  |
| φ = 0° |  |  |  |
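The positions of these primitives follow from the side-looking geometry. As a standard first-order sketch (not a formula taken from this paper), for a flat-roofed building of height h on flat terrain imaged at incidence angle θ, the slant-range extents of the layover and shadow areas are:

$$
l_{\text{sr}} = h\cos\theta, \qquad s_{\text{sr}} = \frac{h}{\cos\theta}
$$

Dividing by sin θ converts both to ground-range image coordinates. The layover lies on the near-range (sensor-facing) side of the corner reflection line, which is imaged at the range of the wall foot, while the shadow lies on the far-range side of the building. For a hypothetical h = 20 m and θ = 35°, this gives l_sr ≈ 16.4 m and s_sr ≈ 24.4 m; larger incidence angles shorten the layover and lengthen the shadow.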

| Category | Name | Formula | Significance |
|---|---|---|---|
| Label | Object label | -- | Used to identify objects |
| Gray feature | Mean |  | Average gray value of the object |
| Gray feature | Standard deviation |  | Represents the gray-level distribution of the object |
| Texture feature | Homogeneity |  | Degree of object uniformity |
| Texture feature | Entropy |  | Amount of information |
| Texture feature | Dissimilarity |  | Reflects image sharpness and the depth of texture grooves |
| Texture feature | Energy |  | Reflects the gray-level distribution and texture fineness |
| Shape feature | Area |  | Total number of pixels in the object |
| Shape feature | Width | -- | Width of the object's minimum bounding rectangle |
| Shape feature | Rectangularity |  | Ratio of the object area to the area of its minimum bounding rectangle |
| Shape feature | Solidity |  | Ratio of the object area to the area of its minimum bounding polygon (convex hull) |
| Shape feature | Density |  | Describes the extent of the object; the larger the value, the closer the object is to a square |
| Imagery parameter feature | Main direction | -- | Angle between the SAR range direction and the major axis of the object's enclosing ellipse |
| Imagery parameter feature | Orientation relationship of objects | -- | Determines the relative position of objects with respect to the SAR range direction |
| Topological feature | Adjacent objects | -- | Labels of all objects adjacent to object A |
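Most features in the table above can be computed from an intensity image and a binary object mask. The sketch below assumes NumPy, standard GLCM definitions with a horizontal offset and 8 gray levels, and range along the image columns; it approximates the minimum bounding rectangle by a rectangle aligned with the object's principal axes, and leaves out Density because its formula is not recoverable here. All function names are hypothetical.

```python
import numpy as np


def glcm_features(img, mask, levels=8):
    """Texture features from a symmetric, normalized GLCM with offset (0, 1),
    counting only horizontal pixel pairs that both lie inside ``mask``."""
    mask = np.asarray(mask, dtype=bool)
    lo, hi = float(img.min()), float(img.max())
    q = np.floor((img - lo) / (hi - lo + 1e-12) * levels).astype(int)
    q = np.clip(q, 0, levels - 1)                 # quantized gray levels
    valid = mask[:, :-1] & mask[:, 1:]
    a, b = q[:, :-1][valid], q[:, 1:][valid]
    P = np.zeros((levels, levels))
    np.add.at(P, (a, b), 1.0)                     # count co-occurrences
    P = P + P.T                                   # make it symmetric
    P /= max(P.sum(), 1.0)                        # normalize to probabilities
    i, j = np.indices(P.shape)
    nz = P > 0
    return {
        "homogeneity": float((P / (1.0 + (i - j) ** 2)).sum()),
        "entropy": float(-(P[nz] * np.log(P[nz])).sum()),
        "dissimilarity": float((P * np.abs(i - j)).sum()),
        "energy": float((P ** 2).sum()),          # angular second moment
    }


def _convex_hull_area(points):
    """Convex hull area of 2-D points (Andrew's monotone chain + shoelace)."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) < 3:
        return 0.0

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = np.array(lower[:-1] + upper[:-1])
    x, y = hull[:, 0], hull[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def shape_features(mask):
    rows, cols = np.nonzero(mask)
    area = rows.size
    xy = np.column_stack([cols, rows]).astype(float)  # x = range, y = azimuth
    centered = xy - xy.mean(axis=0)
    evals, evecs = np.linalg.eigh(centered.T @ centered / area)
    major = evecs[:, 1]                                # largest eigenvalue
    angle = np.degrees(np.arctan2(major[1], major[0])) % 180.0
    # Extents along the principal axes approximate the minimum bounding rectangle.
    proj = centered @ evecs
    extent = proj.max(axis=0) - proj.min(axis=0) + 1.0
    length, width = float(extent.max()), float(extent.min())
    # Use pixel corners so a full rectangle has solidity exactly 1.
    corners = np.concatenate([xy + d for d in
                              ([-.5, -.5], [-.5, .5], [.5, -.5], [.5, .5])])
    return {
        "area": int(area),
        "width": width,
        "rectangularity": area / (length * width),
        "solidity": area / _convex_hull_area(corners),
        "main_direction_deg": float(min(angle, 180.0 - angle)),
    }


def object_features(img, mask):
    mask = np.asarray(mask, dtype=bool)
    vals = img[mask]
    return {"mean": float(vals.mean()), "std": float(vals.std()),
            **glcm_features(img, mask), **shape_features(mask)}
```

Energy is computed here as the angular second moment; some toolkits report its square root instead, so thresholds should not be transferred between implementations without checking that convention.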

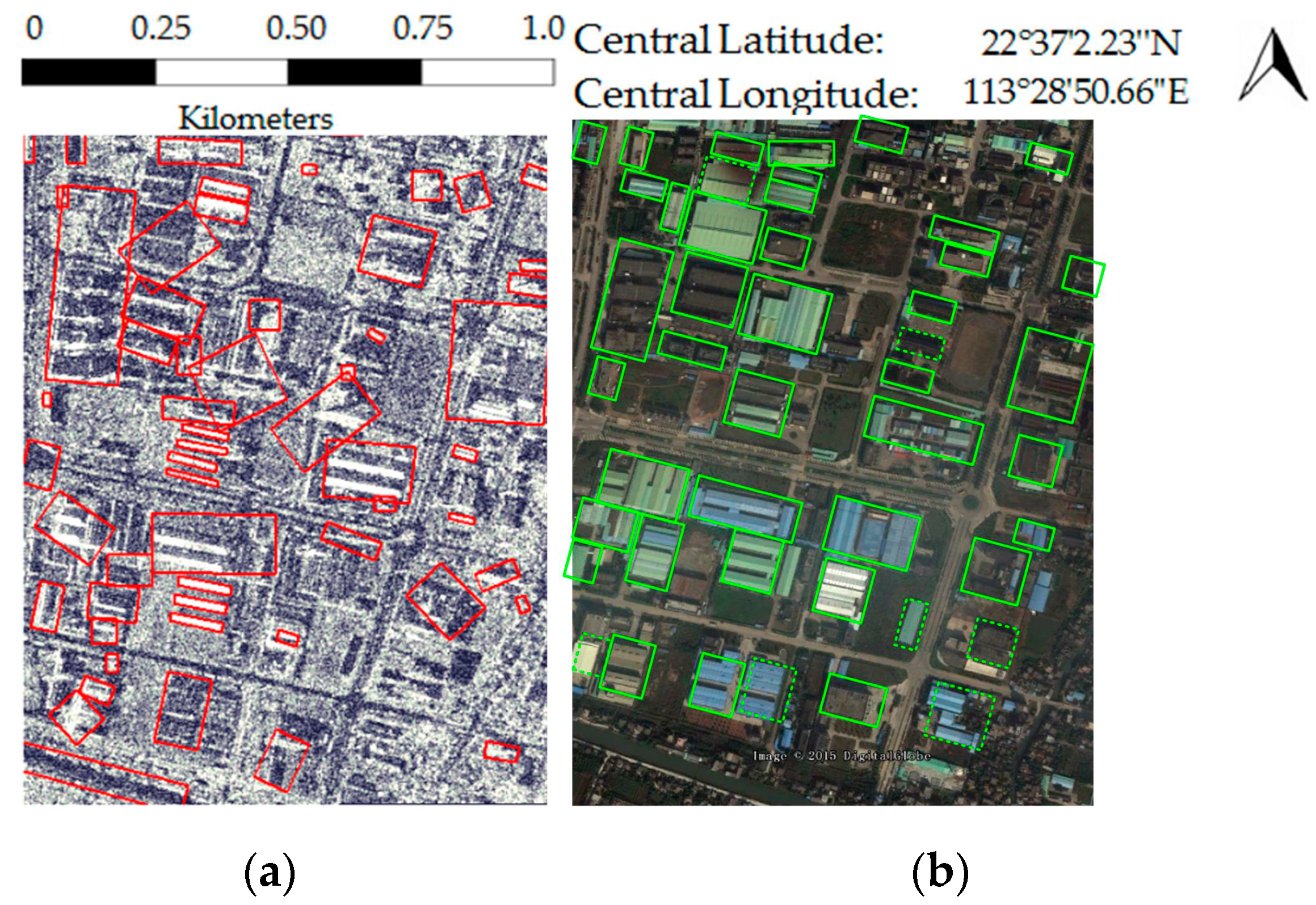

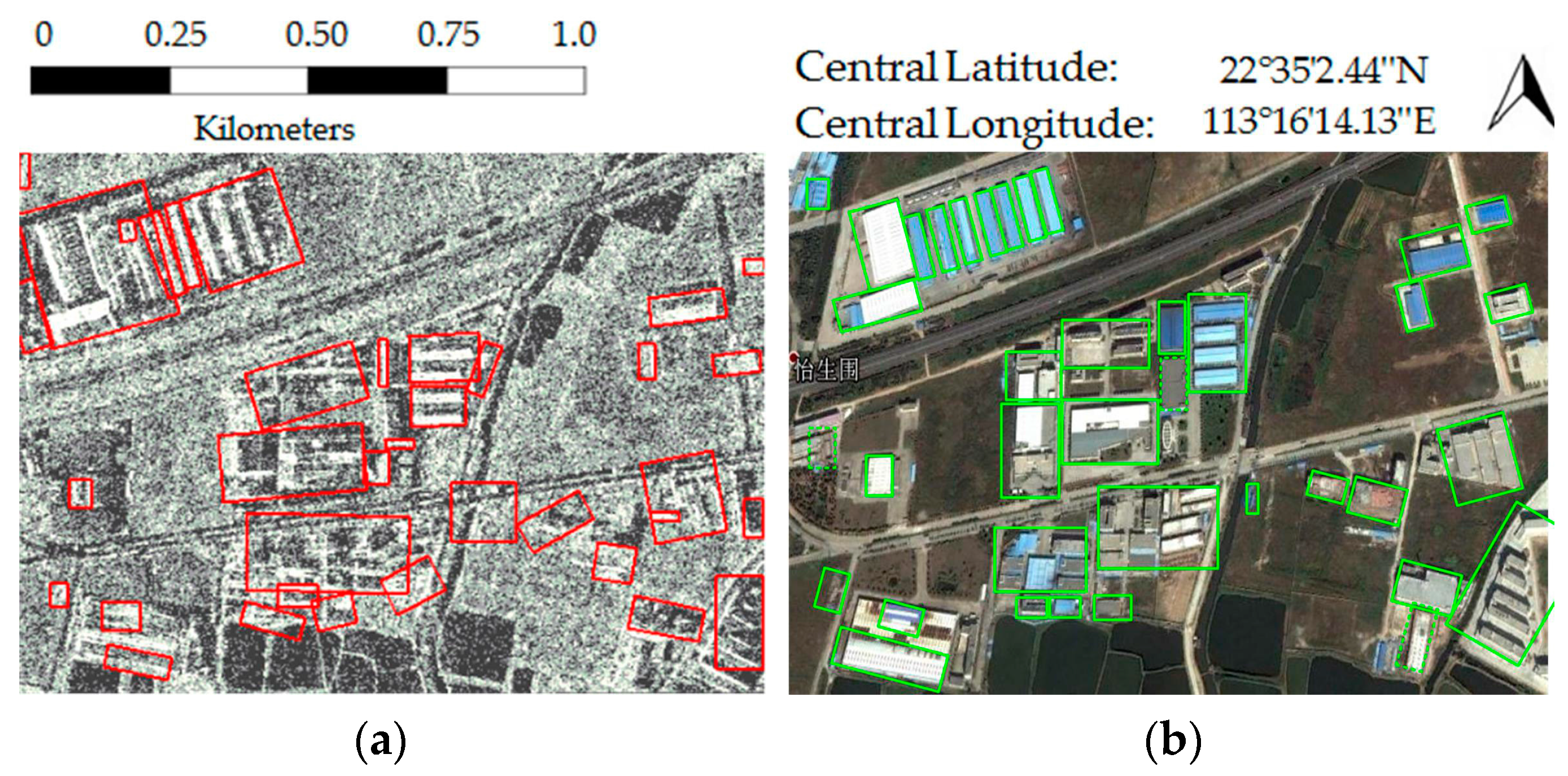

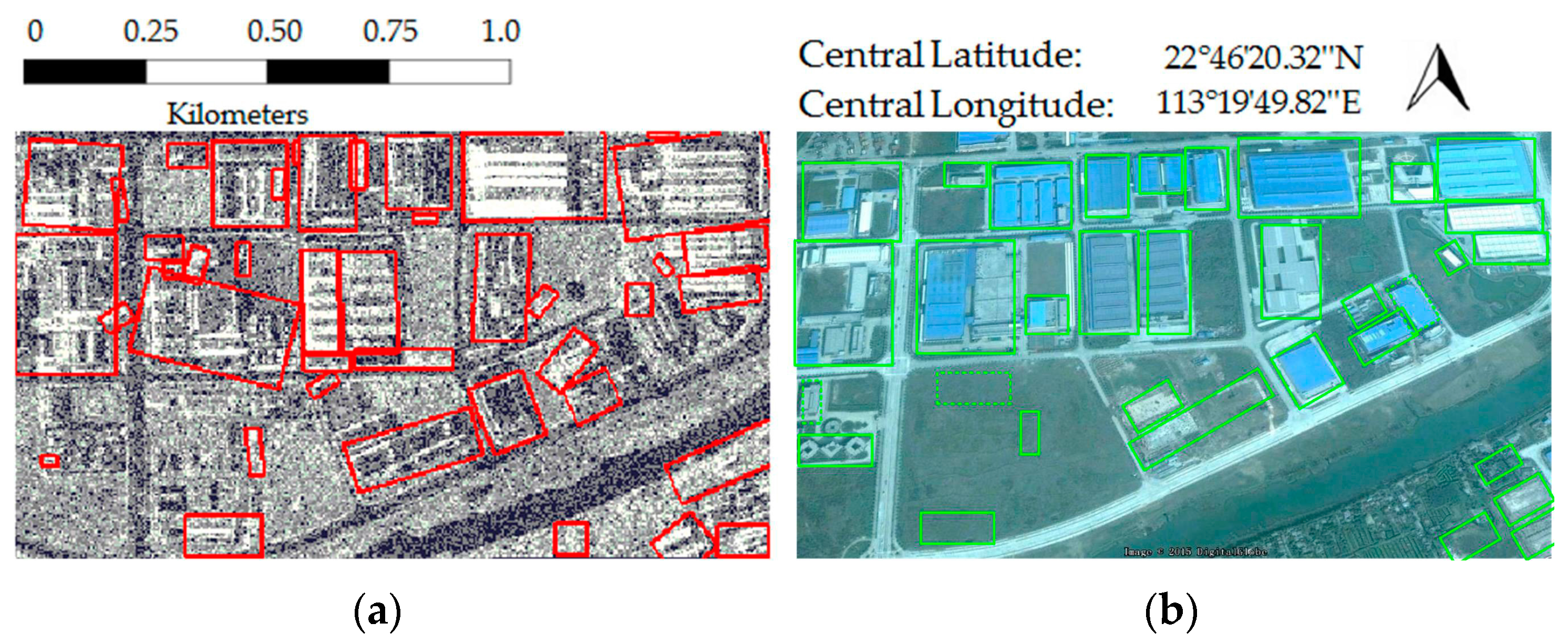

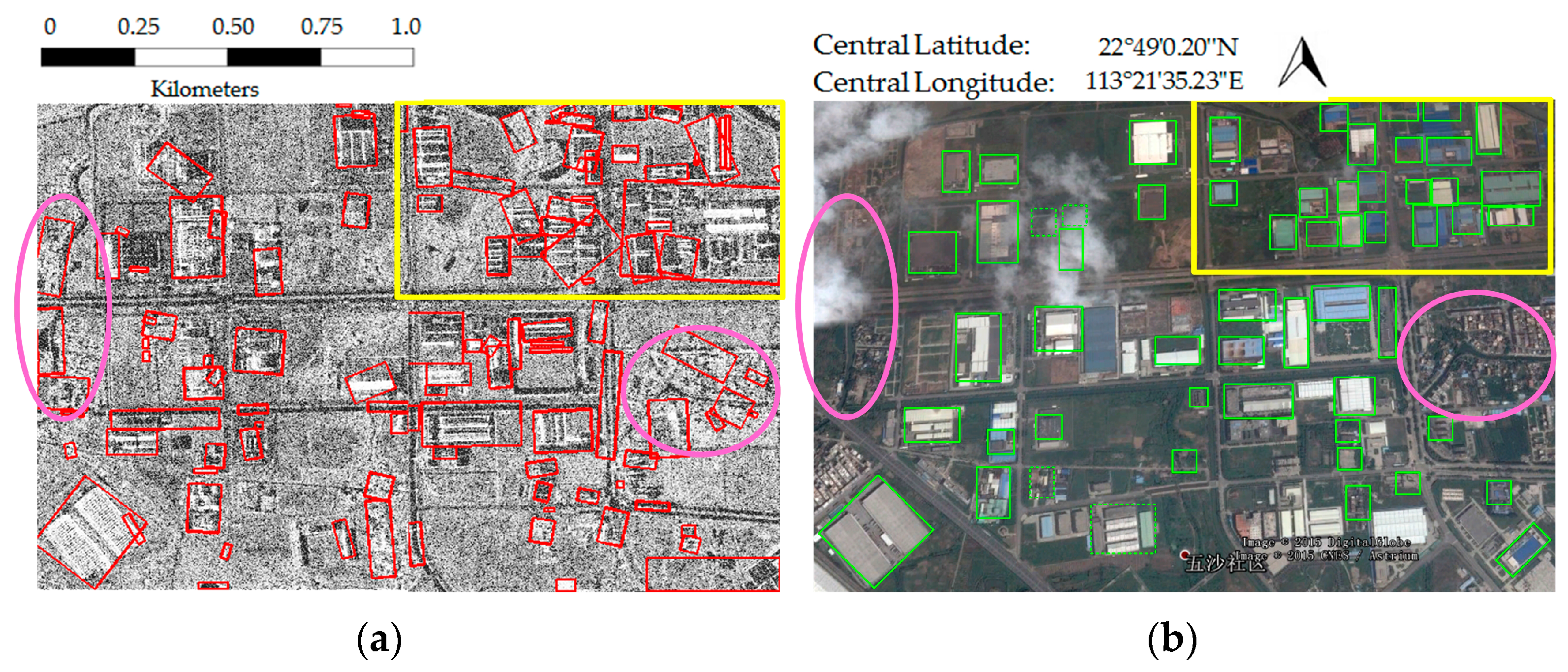

| Experimental Dataset Number | Image Size (Pixels) | Degree of Building Orientation Diversity | Other Features of the Buildings |
|---|---|---|---|
| 1 | 800 × 1050 | basically the same | relatively dense |
| 2 | 1100 × 800 | quite different | several clusters |
| 3 | 1200 × 1200 | quite different | different sizes |
| 4 | 1240 × 700 | different | complicated roof |
| 5 | 2040 × 1360 | different | mixed with small houses |

| Experimental Dataset Number | Building Number | Extraction | False Alarms | Split | Merged | Extraction Rate (%) | False Alarm Rate (%) |
|---|---|---|---|---|---|---|---|
| 1 | 46 | 39 | 4 | 2 | 3 | 84.8 | 8.7 |
| 2 | 37 | 35 | 1 | 0 | 6 | 94.6 | 2.7 |
| 3 | 38 | 33 | 7 | 2 | 6 | 86.8 | 18.4 |
| 4 | 33 | 28 | 1 | 1 | 2 | 87.9 | 3.0 |
| 5 | 57 | 53 | 12 | 3 | 8 | 92.9 | 21.1 |
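The rates in the table above appear to be normalized by the number of reference buildings. The sketch below recomputes them under that assumed definition (extraction rate = extracted / building number, false alarm rate = false alarms / building number), using only the counts listed in the table.

```python
# Counts copied from the results table: (buildings, extracted, false alarms).
RESULTS = {
    1: (46, 39, 4),
    2: (37, 35, 1),
    3: (38, 33, 7),
    4: (33, 28, 1),
    5: (57, 53, 12),
}


def rates(n_buildings: int, n_extracted: int, n_false: int):
    """Extraction and false alarm rates in percent, both relative to the
    number of reference buildings (assumed definition)."""
    return (100.0 * n_extracted / n_buildings,
            100.0 * n_false / n_buildings)


if __name__ == "__main__":
    for k, (n, e, f) in RESULTS.items():
        er, far = rates(n, e, f)
        print(f"dataset {k}: extraction {er:.1f}%, false alarm {far:.1f}%")
```

Under this assumed definition, all false alarm rates and the extraction rates of datasets 1 to 3 match the table. For dataset 4, 28/33 gives 84.8% (the reported 87.9% corresponds to 29/33), and for dataset 5, 53/57 rounds to 93.0% rather than 92.9%, so either the definition or those entries differ from this sketch.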

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Gui, R.; Xu, X.; Dong, H.; Song, C.; Pu, F. Individual Building Extraction from TerraSAR-X Images Based on Ontological Semantic Analysis. Remote Sens. 2016, 8, 708. https://doi.org/10.3390/rs8090708


Gui, Rong, Xin Xu, Hao Dong, Chao Song, and Fangling Pu. 2016. "Individual Building Extraction from TerraSAR-X Images Based on Ontological Semantic Analysis." Remote Sensing 8, no. 9: 708. https://doi.org/10.3390/rs8090708