Vineyard Detection and Vine Variety Discrimination from Very High Resolution Satellite Data

Abstract

1. Introduction

Contribution

2. Materials and Methods

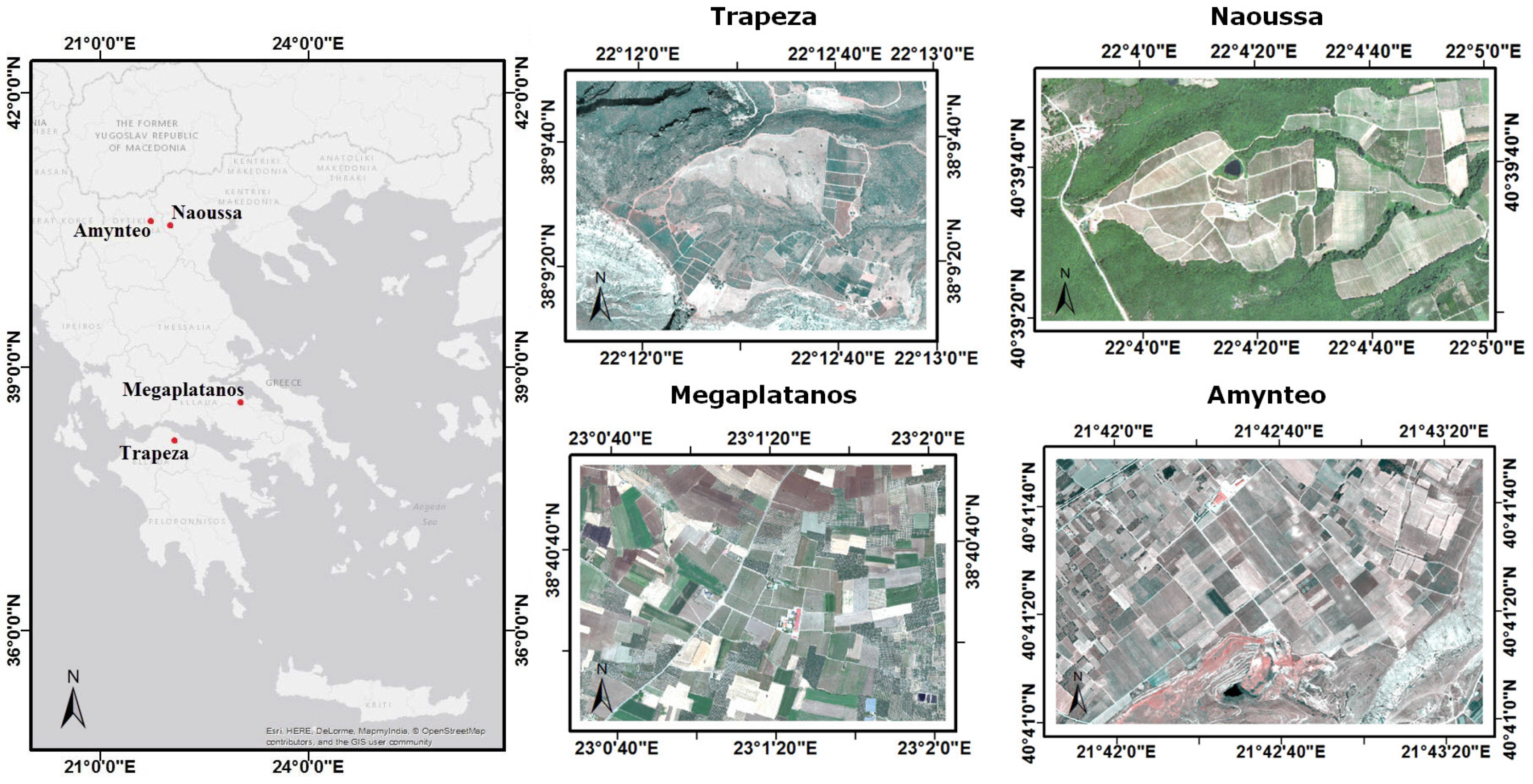

2.1. Study Areas

2.2. Satellite and Field Campaigns

2.3. Data Pre-Processing

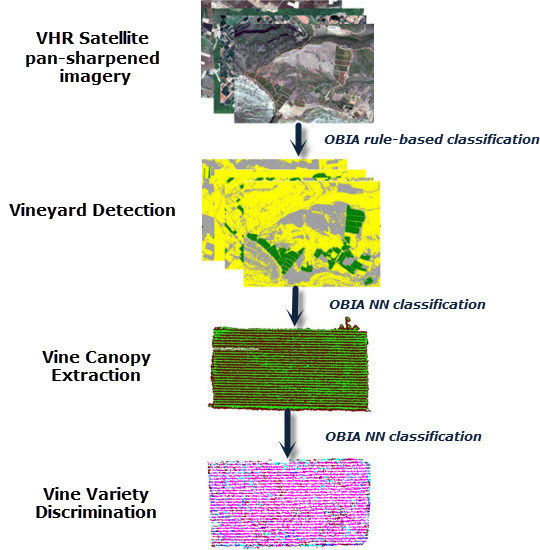

3. Methodology

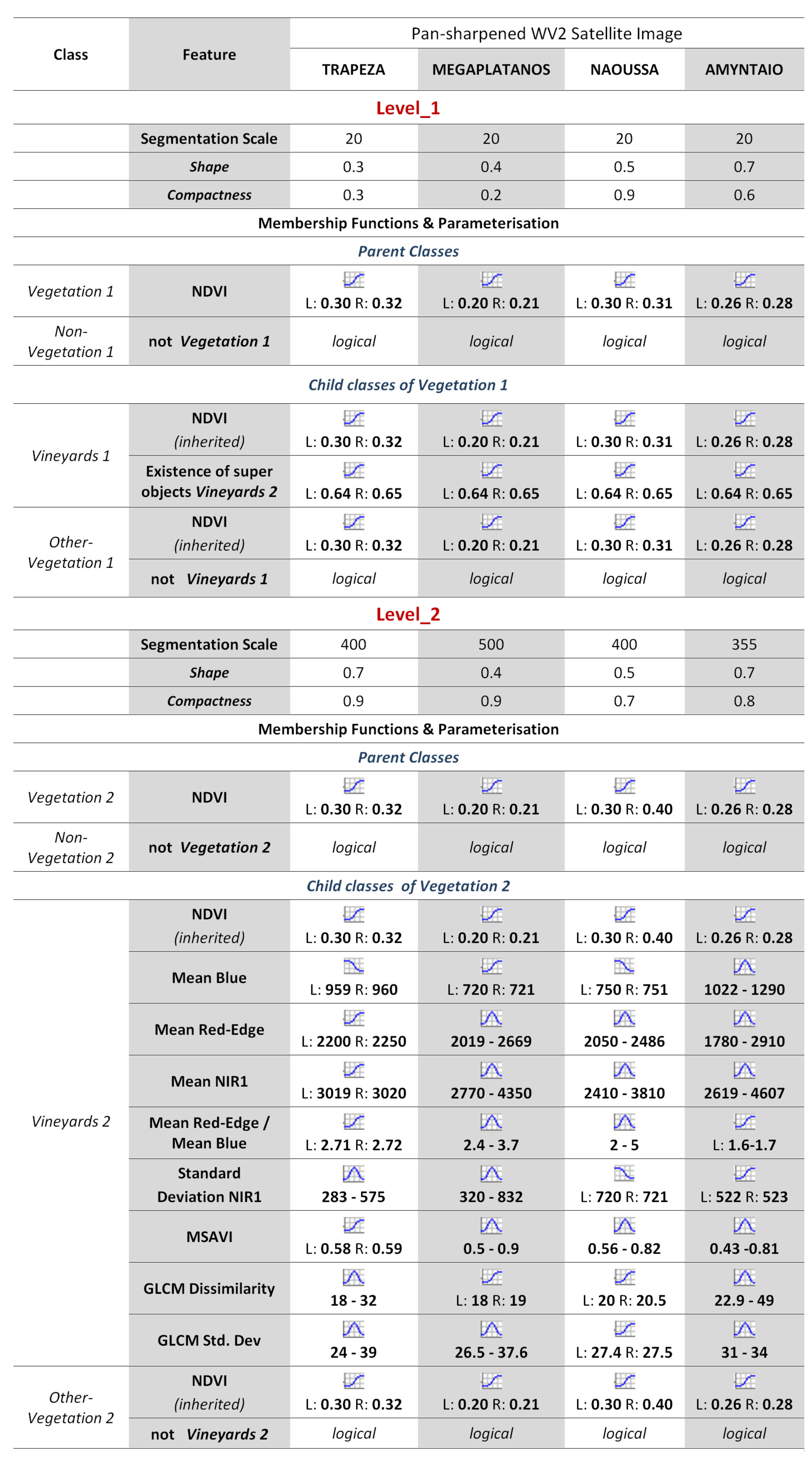

3.1. Vineyard Detection

- “larger than”, defined by left and right border values;

- “smaller than”, defined by left and right border values;

- “full range” of values.
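The three membership-function types listed above can be sketched as simple piecewise functions. This is a minimal illustration that assumes a linear ramp between the left and right border values and uses the minimum operator to combine conditions. Object-based classification software usually offers several curve shapes and fuzzy operators. The function names, feature values and border values below are hypothetical and are not the settings used in this study.

```python
def larger_than(x: float, left: float, right: float) -> float:
    """0 at or below `left`, 1 at or above `right`, linear in between (assumed shape)."""
    if right <= left:
        raise ValueError("right border must be greater than left border")
    if x <= left:
        return 0.0
    if x >= right:
        return 1.0
    return (x - left) / (right - left)


def smaller_than(x: float, left: float, right: float) -> float:
    """Mirror image of larger_than: 1 at or below `left`, 0 at or above `right`."""
    return 1.0 - larger_than(x, left, right)


def full_range(x: float) -> float:
    """Every value is fully accepted, so the feature does not constrain the class."""
    return 1.0


def fuzzy_and(*memberships: float) -> float:
    """Combine conditions with the minimum operator (one common fuzzy AND)."""
    return min(memberships)


# Hypothetical object values and borders, for illustration only.
msavi, mean_blue = 0.4, 300.0
score = fuzzy_and(
    larger_than(msavi, left=0.2, right=0.5),           # about 0.667
    smaller_than(mean_blue, left=200.0, right=600.0),  # 0.75
    full_range(42.0),                                  # 1.0
)
print(round(score, 3))
```

With the minimum operator, the least-satisfied condition decides the class membership of the object.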

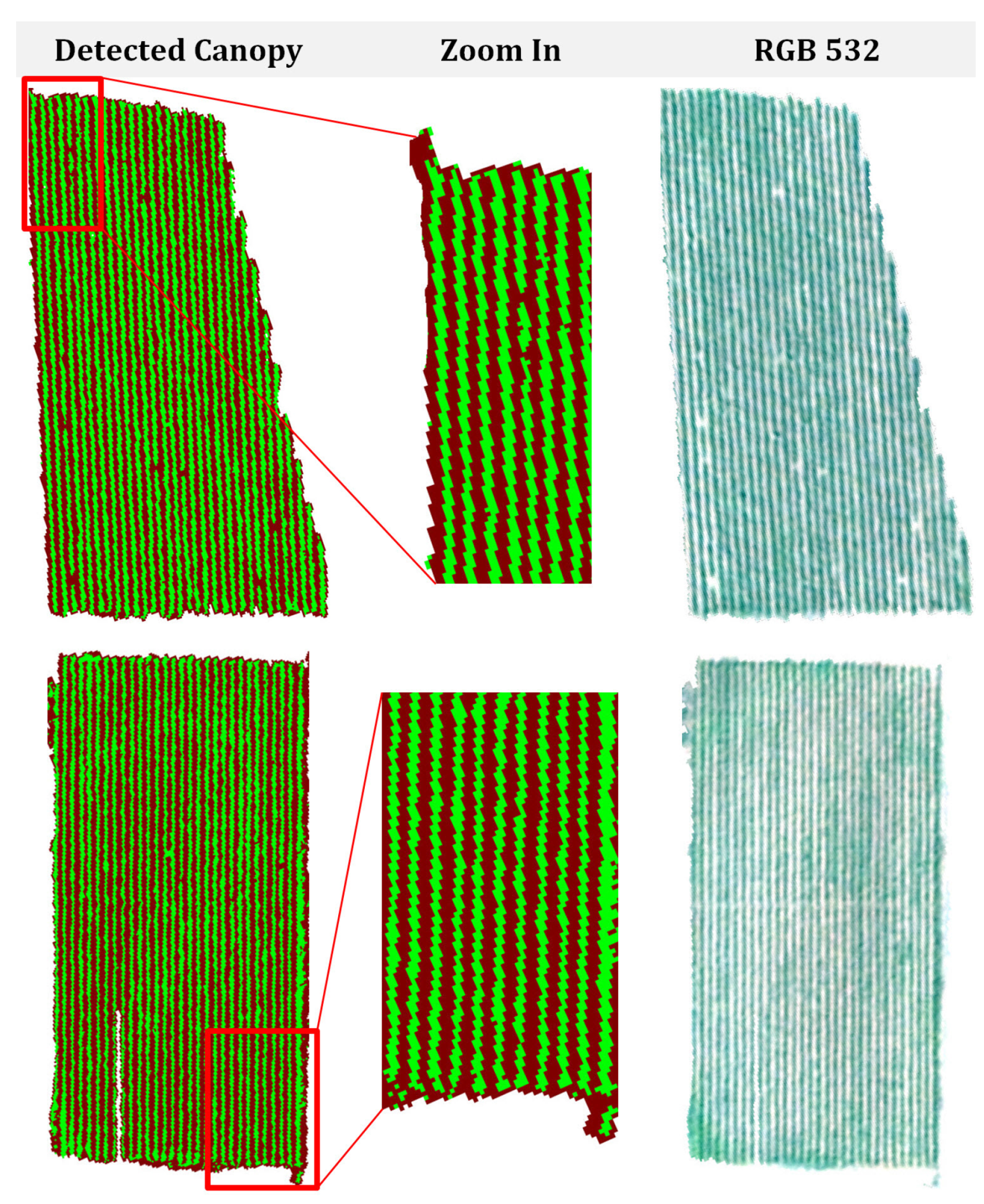

3.2. Vine Canopy Extraction

- Mean diff. to neighbors (red, NIR1): the mean band intensity value difference between an image object and its neighbor image objects (within a radius of 4 pixels) for bands red and NIR1.

- Mean diff. to brighter/darker neighbors (red, NIR1): the mean band intensity value difference between an image object and its brighter/darker direct neighbor (with a common border) image objects for bands red and NIR1.

- Number of brighter/darker objects (red, NIR1): the number of direct neighboring (with a common border) objects with higher/lower mean band intensity values for bands red and NIR1.

- Relative border to brighter objects (red, NIR1): the ratio of the shared image border of an image object with direct neighbor brighter objects to the total border, for bands red and NIR1.
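The direct-neighbor features listed above depend only on object means and shared border lengths. The sketch below computes them for a single band on a toy label image. It is a minimal illustration under several assumptions: borders are counted as 4-connected pixel edges, image-edge pixels are excluded from the "total border", brighter/darker differences are unweighted means (signed as neighbor minus object), and the 4-pixel-radius variant of "Mean diff. to neighbors" is omitted. All function names are hypothetical.

```python
import numpy as np


def object_means(labels: np.ndarray, band: np.ndarray) -> dict:
    """Mean band value of every labelled image object."""
    return {int(i): float(band[labels == i].mean()) for i in np.unique(labels)}


def shared_borders(labels: np.ndarray) -> dict:
    """Pixel edges shared by each ordered pair of distinct objects (4-connectivity)."""
    borders = {}
    # Compare every pixel with its right-hand neighbor, then with its lower neighbor.
    for a, b in ((labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :])):
        differs = a != b
        for x, y in zip(a[differs], b[differs]):
            x, y = int(x), int(y)
            borders[(x, y)] = borders.get((x, y), 0) + 1
            borders[(y, x)] = borders.get((y, x), 0) + 1
    return borders


def neighbor_features(labels: np.ndarray, band: np.ndarray) -> dict:
    """Brighter/darker neighbor counts, mean differences and relative border, per object."""
    means = object_means(labels, band)
    borders = shared_borders(labels)
    feats = {}
    for obj, m in means.items():
        nbrs = {n: length for (o, n), length in borders.items() if o == obj}
        total = sum(nbrs.values())  # border shared with other objects only
        brighter = [n for n in nbrs if means[n] > m]
        darker = [n for n in nbrs if means[n] < m]
        feats[obj] = {
            "n_brighter": len(brighter),
            "n_darker": len(darker),
            "mean_diff_brighter": float(np.mean([means[n] - m for n in brighter])) if brighter else 0.0,
            "mean_diff_darker": float(np.mean([means[n] - m for n in darker])) if darker else 0.0,
            "rel_border_brighter": sum(nbrs[n] for n in brighter) / total if total else 0.0,
        }
    return feats


# Toy segmentation with three objects and a hypothetical red band.
labels = np.array([[1, 1, 2],
                   [1, 1, 2],
                   [3, 3, 3]])
red = np.array([[10, 10, 20],
                [10, 10, 20],
                [5, 5, 5]], dtype=float)
features = neighbor_features(labels, red)
print(features[3])
```

Object 3 is darker than both of its neighbors, so all of its border is shared with brighter objects; for vine rows separated by darker inter-row soil or shadow, contrasts of this kind are what such features are meant to capture.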

3.3. Vine Variety Discrimination

- Mean (coastal blue, red, red-edge, NIR1, NIR2): mean band intensity values of an image object for coastal blue, red, red-edge, NIR1, NIR2 bands.

- Ratio: the amount that a given image band contributes to the total brightness of an image object for all 8 bands.

- Mean NIR1/mean red: the ratio of the mean band intensity value of band NIR1 to that of band red for an image object.

- Mean NIR2/mean blue: the ratio of the mean band intensity value of band NIR2 to that of band blue for an image object.
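A per-object sketch of the spectral features above follows. It assumes the standard WorldView-2 band order (coastal, blue, green, yellow, red, red-edge, NIR1, NIR2), a "Ratio" defined as a band's mean divided by the sum of all eight band means, and NDVI computed from NIR1 and red. These definitions and the function name are assumptions made for illustration, not a reproduction of the software used in the study.

```python
import numpy as np

# Assumed WorldView-2 band order (indices into the last axis of `pixels`).
BANDS = ["coastal", "blue", "green", "yellow", "red", "red_edge", "nir1", "nir2"]
IDX = {name: i for i, name in enumerate(BANDS)}


def variety_features(pixels: np.ndarray) -> dict:
    """Spectral features of one image object from its (n_pixels, 8) array."""
    means = pixels.mean(axis=0)
    ratios = means / means.sum()  # each band's share of the summed band means
    red, blue = means[IDX["red"]], means[IDX["blue"]]
    nir1, nir2 = means[IDX["nir1"]], means[IDX["nir2"]]
    feats = {f"mean_{b}": float(means[IDX[b]])
             for b in ("coastal", "red", "red_edge", "nir1", "nir2")}
    feats.update({f"ratio_{b}": float(ratios[IDX[b]]) for b in BANDS})
    feats["nir1_red"] = float(nir1 / red)
    feats["nir2_red"] = float(nir2 / red)
    feats["nir1_blue"] = float(nir1 / blue)
    feats["nir2_blue"] = float(nir2 / blue)
    feats["ndvi"] = float((nir1 - red) / (nir1 + red))  # NIR1 assumed for NDVI
    return feats


# Hypothetical object: four pixels whose band means are 1, 2, ..., 8.
pixels = np.tile(np.arange(1, 9, dtype=float), (4, 1))
feats = variety_features(pixels)
print(feats["ratio_red"], feats["nir1_red"], feats["ndvi"])
```

Band ratios such as NIR1/red are insensitive to a common multiplicative brightness change across bands, which is one reason ratio features are often preferred over raw means when comparing parcels.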

4. Experimental Results and Validation

4.1. Vineyard Detection and Canopy Extraction

Canopy Extraction Using a Pixel-Based Linear SVM

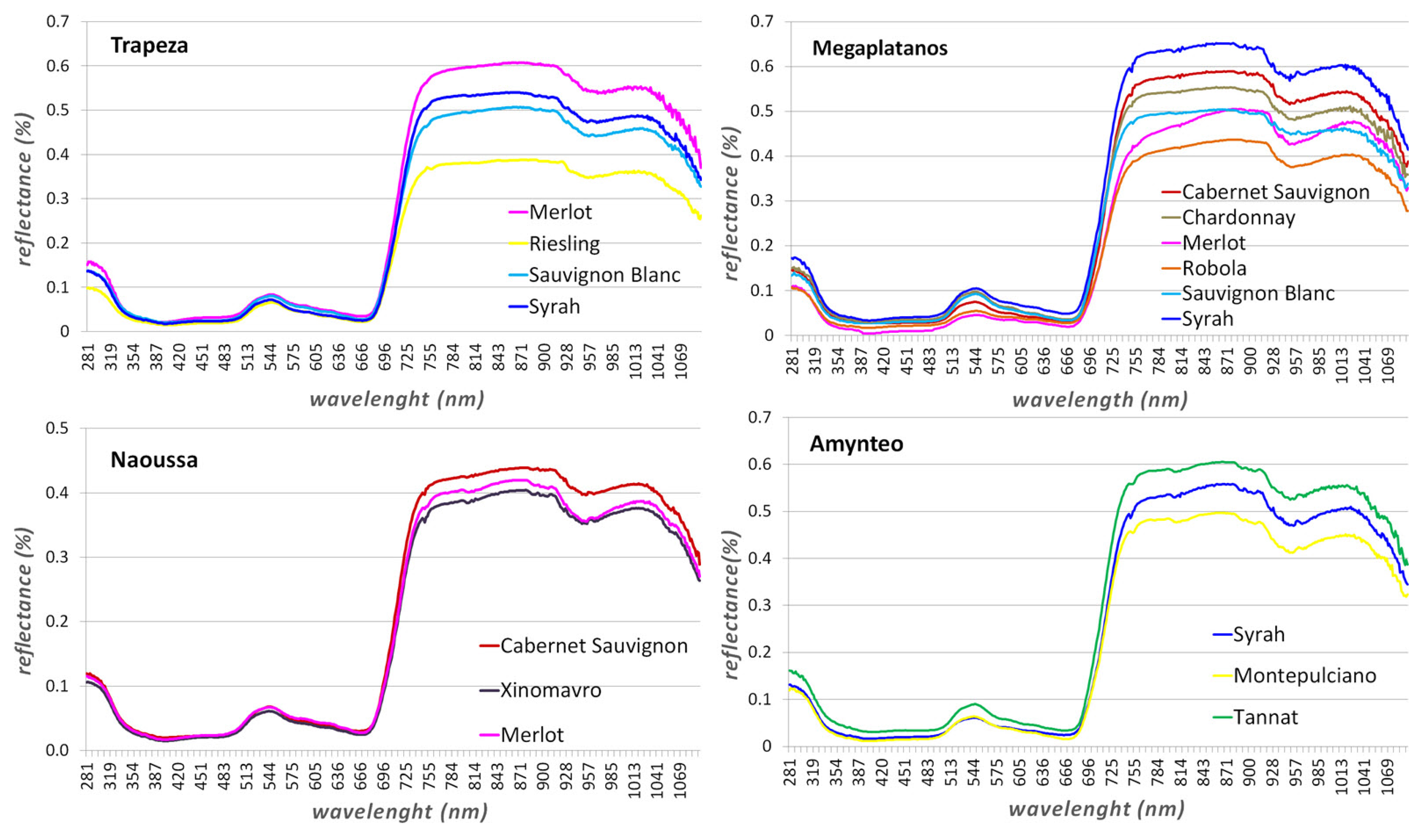

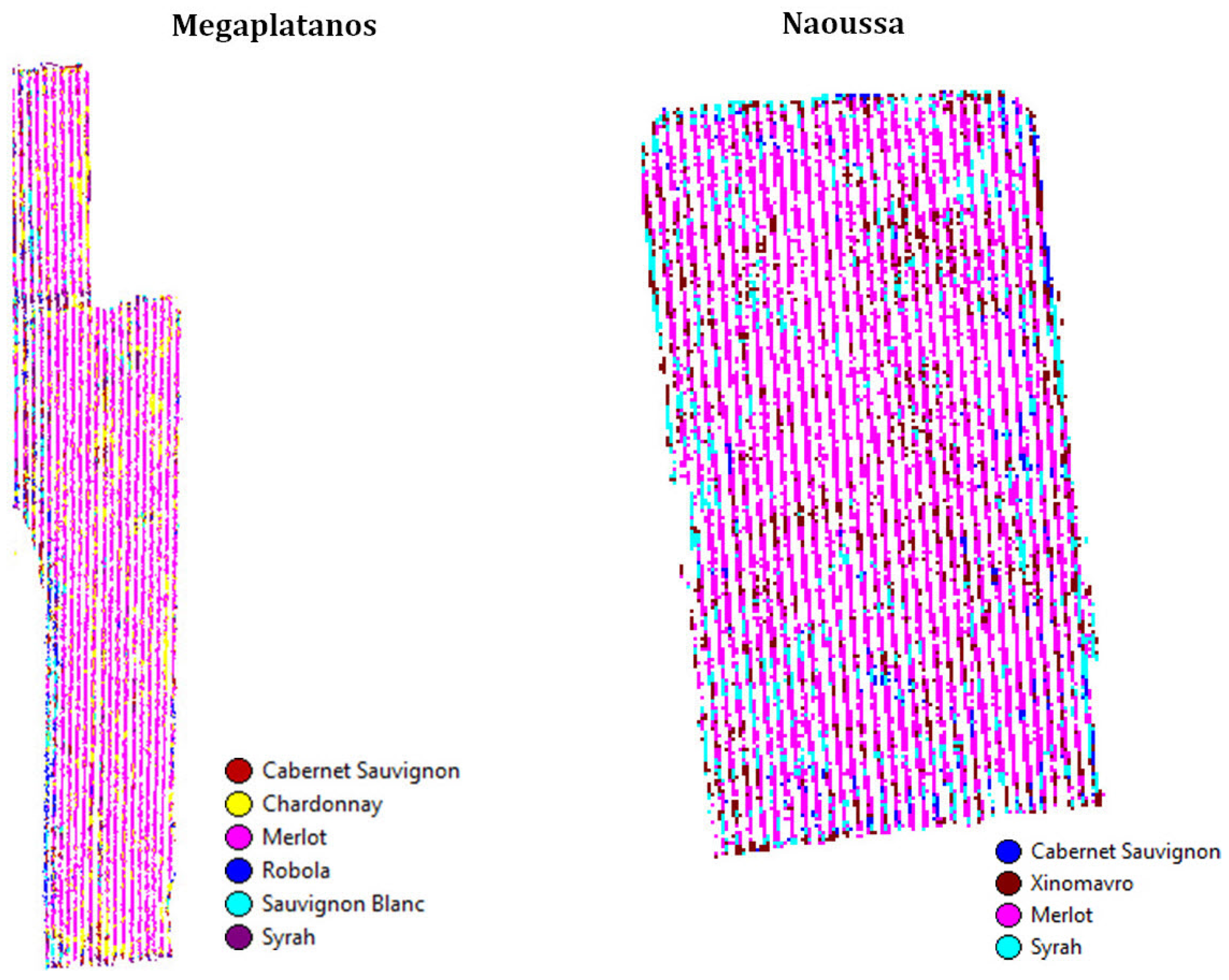

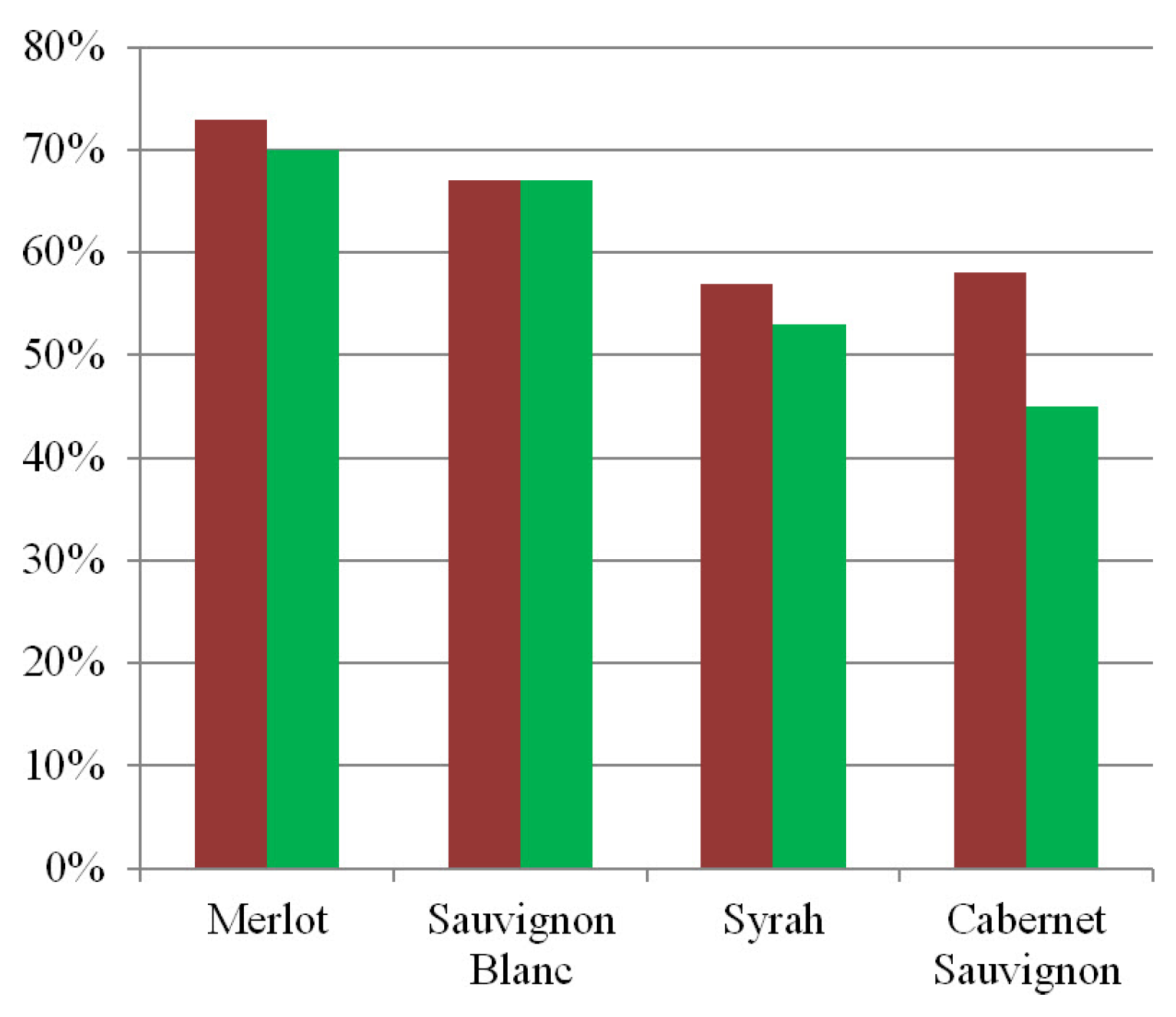

4.2. Vine Variety Discrimination

Variety Discrimination Using a Pixel-Based Linear SVM

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Vineyard Detection | Vine Canopy Extraction | Vine Variety Discrimination |
|---|---|---|
| Mean blue | Mean (all 8 bands) | Mean (coastal blue, red, red-edge, NIR1, NIR2) |
| Mean red-edge | Ratio (all 8 bands) | Ratio (all 8 bands) |
| Mean NIR1 | NDVI | Mean NIR1/mean red |
| Mean red-edge/mean blue | Min. pixel value (red, NIR1) | Mean NIR2/mean red |
| Standard deviation NIR1 | Mean diff. to neighbors (red, NIR1) | Mean NIR1/mean blue |
| MSAVI | Mean diff. to brighter/darker neighbors (red, NIR1) | Mean NIR2/mean blue |
| GLCM Dissimilarity | Number of brighter/darker objects (red, NIR1) | NDVI |
| GLCM Standard Deviation | Rel. border to brighter objects (red, NIR1) | |
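Several features in the table are vegetation indices or texture measures with well-known formulas. The sketch below computes NDVI, the closed-form MSAVI (often called MSAVI2) and GLCM dissimilarity for a single object. It assumes reflectances scaled to [0, 1], an already quantized integer patch, and a single horizontal offset of one pixel, whereas texture tools typically average several directions. Which NIR band the study used for each index is not stated in this section, and the function names are hypothetical.

```python
import numpy as np


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index."""
    return (nir - red) / (nir + red)


def msavi2(nir: float, red: float) -> float:
    """Closed-form modified soil-adjusted vegetation index; reflectances in [0, 1]."""
    return (2 * nir + 1 - np.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2


def glcm_dissimilarity(patch: np.ndarray, levels: int) -> float:
    """Dissimilarity of a symmetric, normalized GLCM for a one-pixel horizontal offset."""
    glcm = np.zeros((levels, levels))
    left, right = patch[:, :-1].ravel(), patch[:, 1:].ravel()
    np.add.at(glcm, (left, right), 1)  # count co-occurring grey-level pairs
    glcm = glcm + glcm.T               # make the matrix symmetric
    glcm /= glcm.sum()                 # turn counts into joint probabilities
    i, j = np.indices(glcm.shape)
    return float((glcm * np.abs(i - j)).sum())


print(round(ndvi(0.5, 0.1), 3), round(float(msavi2(0.5, 0.1)), 3))
patch = np.array([[0, 0, 1],
                  [0, 1, 1],
                  [2, 2, 2]])
print(glcm_dissimilarity(patch, levels=3))
```

Dissimilarity grows with the grey-level difference between neighboring pixels, so a patch crossing alternating vine rows and inter-row gaps would be expected to score higher than a homogeneous field.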

Quantitative Evaluation for the Vineyard Detection Task

| Quality Measures | Trapeza | Megaplatanos | Naoussa | Amynteo |
|---|---|---|---|---|
| Completeness | 91% | 96% | 94% | 89% |
| Correctness | 94% | 93% | 93% | 89% |
| Overall Quality | 86% | 90% | 88% | 80% |
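Completeness, correctness and quality are commonly defined from true-positive (TP), false-positive (FP) and false-negative (FN) areas as TP/(TP+FN), TP/(TP+FP) and TP/(TP+FP+FN). Under those definitions, quality can be written in terms of completeness C and correctness R alone as Q = CR/(C + R - CR). The sketch below uses that identity to check the table. The function names are hypothetical, and the assumption that the study used exactly these definitions is inferred from the numbers rather than stated here.

```python
def detection_scores(tp: float, fp: float, fn: float) -> dict:
    """Completeness, correctness and quality from matched areas (e.g. pixels or m²)."""
    return {
        "completeness": tp / (tp + fn),  # share of reference vineyard area detected
        "correctness": tp / (tp + fp),   # share of detected area that is vineyard
        "quality": tp / (tp + fp + fn),  # penalizes both omissions and commissions
    }


def quality_from(completeness: float, correctness: float) -> float:
    """Quality expressed through completeness and correctness alone."""
    c, r = completeness, correctness
    return c * r / (c + r - c * r)


reported = {"Trapeza": (0.91, 0.94), "Megaplatanos": (0.96, 0.93),
            "Naoussa": (0.94, 0.93), "Amynteo": (0.89, 0.89)}
for site, (c, r) in reported.items():
    print(site, round(quality_from(c, r), 2))
```

With the reported percentages this gives 0.86, 0.90, 0.88 and 0.80, which matches the Overall Quality row to within the rounding of the inputs.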

| Accuracy Metric | Trapeza NN | Trapeza SVM | Megaplatanos NN | Megaplatanos SVM | Naoussa NN | Naoussa SVM | Amynteo NN | Amynteo SVM | Average NN | Average SVM |
|---|---|---|---|---|---|---|---|---|---|---|
| Overall Accuracy | 97.2% | 97.8% | 99.5% | 98.9% | 100% | 89.5% | 96.2% | 97.5% | 98.2% | 95.9% |
| Producer Accuracy | 97.7% | 96.6% | 99.0% | 97.9% | 100% | 88.0% | 96.2% | 98.7% | 98.2% | 95.3% |
| User Accuracy | 96.6% | 98.8% | 100% | 100% | 100% | 90.0% | 96.2% | 96.3% | 98.2% | 96.3% |

NN: object-based NN classification; SVM: pixel-based linear SVM.
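Overall, producer's and user's accuracy can all be read off a confusion matrix. The sketch below assumes rows hold reference classes and columns hold predictions, and that the producer's and user's accuracies in the table refer to the vine canopy class. The function name and pixel counts are hypothetical.

```python
import numpy as np


def accuracy_metrics(cm: np.ndarray, positive: int = 1) -> dict:
    """Overall accuracy plus producer's/user's accuracy of one class.

    Rows are reference (ground truth) classes; columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    tp = cm[positive, positive]
    return {
        "overall": float(np.trace(cm) / cm.sum()),
        "producer": float(tp / cm[positive, :].sum()),  # 1 - omission error
        "user": float(tp / cm[:, positive].sum()),      # 1 - commission error
    }


# Hypothetical pixel counts: class 0 = background, class 1 = vine canopy.
cm = np.array([[950, 30],
               [20, 480]])
metrics = accuracy_metrics(cm)
print(metrics)
```

The Average columns are consistent with an unweighted mean of the four sites; for example, the NN overall accuracy is (97.2 + 99.5 + 100 + 96.2) / 4 ≈ 98.2%.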

| Study area (acquisition year): number of varieties/clones | Trapeza (2012): 4 | Trapeza (2012): 5 | Trapeza (2012): 6 | Megaplatanos (2012): 4 | Megaplatanos (2012): 5 | Megaplatanos (2012): 6 | Naoussa (2013): 3 | Naoussa (2013): 4 | Amynteo (2013): 3 | Amynteo (2013): 4 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Multispectral Data (2-m resolution) | | | | | | | | | | | |
| Discrimination: OA at parcel level | 100% | 100% | 100% | 94% | 96% | 94% | 91% | 83% | 100% | 100% | 96% |
| Classification: OA at pixel level | 75% | 72% | 61% | 69% | 56% | 45% | 56% | 50% | 62% | 64% | 61% |
| Pan-sharpened Data (50-cm resolution) | | | | | | | | | | | |
| Discrimination: OA at parcel level | 100% | 100% | 100% | 100% | 100% | 92% | 86% | 85% | 100% | 100% | 96% |
| Classification: OA at pixel level | 75% | 72% | 61% | 56% | 47% | 38% | 59% | 51% | 50% | 52% | 56% |

Multispectral Data (2-m resolution): evaluation at the pixel level

| Ground truth (rows) / Classified as (columns) | Syrah I | Syrah II | Merlot | Sauvignon Blanc II | Sauvignon Blanc I | Riesling |
|---|---|---|---|---|---|---|
| Syrah I | 67% | 3% | 1% | 10% | 12% | 7% |
| Syrah II | 10% | 45% | 8% | 2% | 4% | 31% |
| Merlot | 4% | 5% | 65% | 6% | 3% | 17% |
| Sauvignon Blanc II | 16% | 1% | 4% | 78% | 1% | 0% |
| Sauvignon Blanc I | 9% | 4% | 2% | 5% | 76% | 4% |
| Riesling | 14% | 27% | 10% | 1% | 5% | 43% |

Overall Accuracy: 61%

Pan-sharpened Data (50-cm resolution): evaluation at the pixel level

| Ground truth (rows) / Classified as (columns) | Syrah I | Syrah II | Merlot | Sauvignon Blanc II | Sauvignon Blanc I | Riesling |
|---|---|---|---|---|---|---|
| Syrah I | 55% | 4% | 1% | 11% | 18% | 11% |
| Syrah II | 11% | 49% | 7% | 1% | 4% | 28% |
| Merlot | 4% | 8% | 68% | 7% | 1% | 12% |
| Sauvignon Blanc II | 13% | 3% | 8% | 72% | 2% | 2% |
| Sauvignon Blanc I | 6% | 3% | 1% | 1% | 83% | 6% |
| Riesling | 15% | 32% | 3% | 0% | 1% | 49% |

Overall Accuracy: 61%
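The confusion matrices are normalized per reference row (each row sums to 100%), so the diagonal holds the per-variety producer's accuracy. The mean of the diagonal is about 62.3% for the multispectral data and 62.7% for the pan-sharpened data, both slightly above the reported 61% overall accuracy. A plausible explanation, assumed here because per-variety pixel counts are not listed, is that overall accuracy weights each variety by its number of pixels. The sketch illustrates the difference with a hypothetical two-class count matrix; the function names are hypothetical.

```python
import numpy as np

# Multispectral (2-m) matrix from the table: rows are % of each reference variety.
MS_ROWS = np.array([
    [67, 3, 1, 10, 12, 7],
    [10, 45, 8, 2, 4, 31],
    [4, 5, 65, 6, 3, 17],
    [16, 1, 4, 78, 1, 0],
    [9, 4, 2, 5, 76, 4],
    [14, 27, 10, 1, 5, 43],
])


def row_normalize(counts) -> np.ndarray:
    """Express each reference row as percentages of that row's total."""
    counts = np.asarray(counts, dtype=float)
    return 100 * counts / counts.sum(axis=1, keepdims=True)


def overall_accuracy(counts) -> float:
    """Correct pixels over all pixels, so larger classes weigh more."""
    counts = np.asarray(counts, dtype=float)
    return float(100 * np.trace(counts) / counts.sum())


print(row_normalize(MS_ROWS).sum(axis=1))  # every row sums to 100
print(np.diag(MS_ROWS).mean())             # mean per-variety accuracy, about 62.3

# Hypothetical two-class pixel counts with unequal class sizes.
toy = np.array([[80, 20],
                [25, 25]])
print(np.diag(row_normalize(toy)).mean())  # 65.0: classes weighted equally
print(overall_accuracy(toy))               # 70.0: pixels weighted equally
```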

| Study area: number of varieties/clones | Trapeza: 4 | Trapeza: 5 | Trapeza: 6 | Megaplatanos: 4 | Megaplatanos: 5 | Megaplatanos: 6 | Naoussa: 3 | Naoussa: 4 | Amynteo: 3 | Amynteo: 4 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall accuracy (OA) at the parcel level | | | | | | | | | | | |
| Object-based NN and majority voting | 100% | 100% | 100% | 100% | 100% | 92% | 86% | 85% | 100% | 100% | 96% |
| Pixel-based SVM and majority voting | 75% | 78% | 55% | 74% | 56% | 54% | 48% | 63% | 50% | 43% | 60% |
| Overall accuracy (OA) at the pixel level | | | | | | | | | | | |
| Object-based NN | 75% | 72% | 61% | 56% | 47% | 38% | 59% | 51% | 50% | 52% | 56% |
| Pixel-based SVM | 56% | 53% | 45% | 52% | 45% | 38% | 54% | 43% | 46% | 41% | 47% |
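Parcel-level accuracy relies on majority voting: each parcel receives the variety predicted most often among its pixels (or objects), and the parcel counts as correct if that vote matches the ground truth. The sketch below is a minimal illustration assuming a simple unweighted plurality vote over pixel predictions; the exact voting rule of the study is not given in this section, and the parcel IDs, predictions and function names are hypothetical. It also shows why parcel-level OA can exceed pixel-level OA: a parcel with only 60% correctly classified pixels still receives the correct label.

```python
from collections import Counter


def parcel_labels(pixel_preds: dict) -> dict:
    """Plurality vote of the pixel predictions inside each parcel."""
    # Counter.most_common breaks ties by first occurrence, an arbitrary choice here.
    return {pid: Counter(preds).most_common(1)[0][0] for pid, preds in pixel_preds.items()}


def parcel_oa(pixel_preds: dict, truth: dict) -> float:
    """Share of parcels whose voted label matches the ground truth."""
    voted = parcel_labels(pixel_preds)
    return sum(voted[p] == truth[p] for p in truth) / len(truth)


def pixel_oa(pixel_preds: dict, truth: dict) -> float:
    """Share of individual pixels whose prediction matches their parcel's truth."""
    correct = sum(label == truth[p] for p, preds in pixel_preds.items() for label in preds)
    total = sum(len(preds) for preds in pixel_preds.values())
    return correct / total


# Hypothetical parcels and per-pixel predictions.
preds = {
    "P1": ["Merlot"] * 6 + ["Syrah I"] * 4,
    "P2": ["Riesling"] * 3 + ["Syrah II"] * 5,
    "P3": ["Sauvignon Blanc I"] * 7 + ["Merlot"] * 3,
}
truth = {"P1": "Merlot", "P2": "Riesling", "P3": "Sauvignon Blanc I"}
print(pixel_oa(preds, truth))   # 16/28, about 0.57
print(parcel_oa(preds, truth))  # 2/3: P2 is outvoted by Syrah II
```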

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Karakizi, C.; Oikonomou, M.; Karantzalos, K. Vineyard Detection and Vine Variety Discrimination from Very High Resolution Satellite Data. Remote Sens. 2016, 8, 235. https://doi.org/10.3390/rs8030235
