Interpretation of Forest Resources at the Individual Tree Level in Japanese Conifer Plantations Using Airborne LiDAR Data

Abstract

1. Introduction

- To evaluate the possibility of quantifying forest resources at the tree level using airborne laser data by applying the ITC approach;

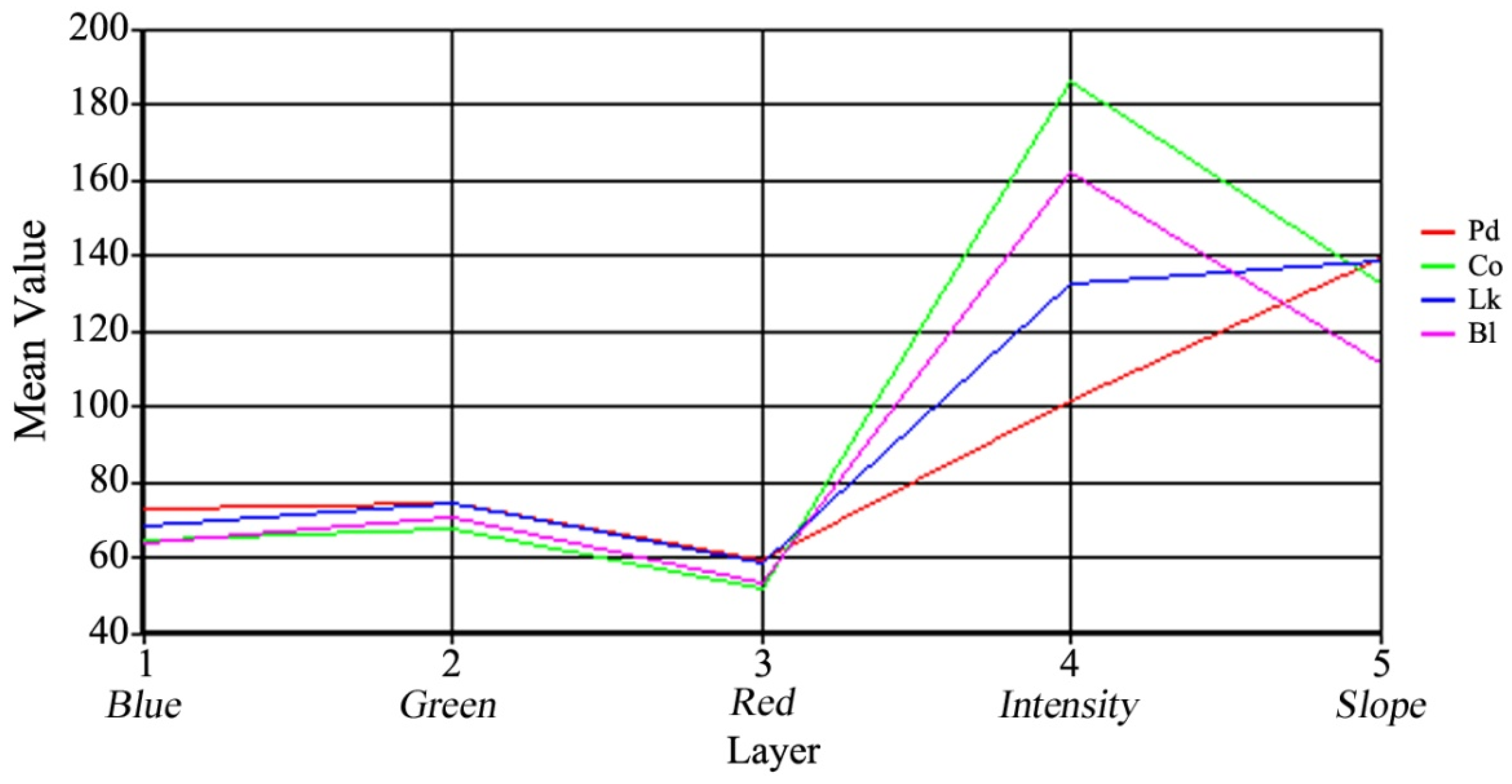

- To determine whether the reflectance of forests on laser scanning and the average slope of tree crowns can contribute to forest classification; and

- To compare the estimation capability of ALS data with that of optical bands for interpreting forest resources.

2. Materials and Methods

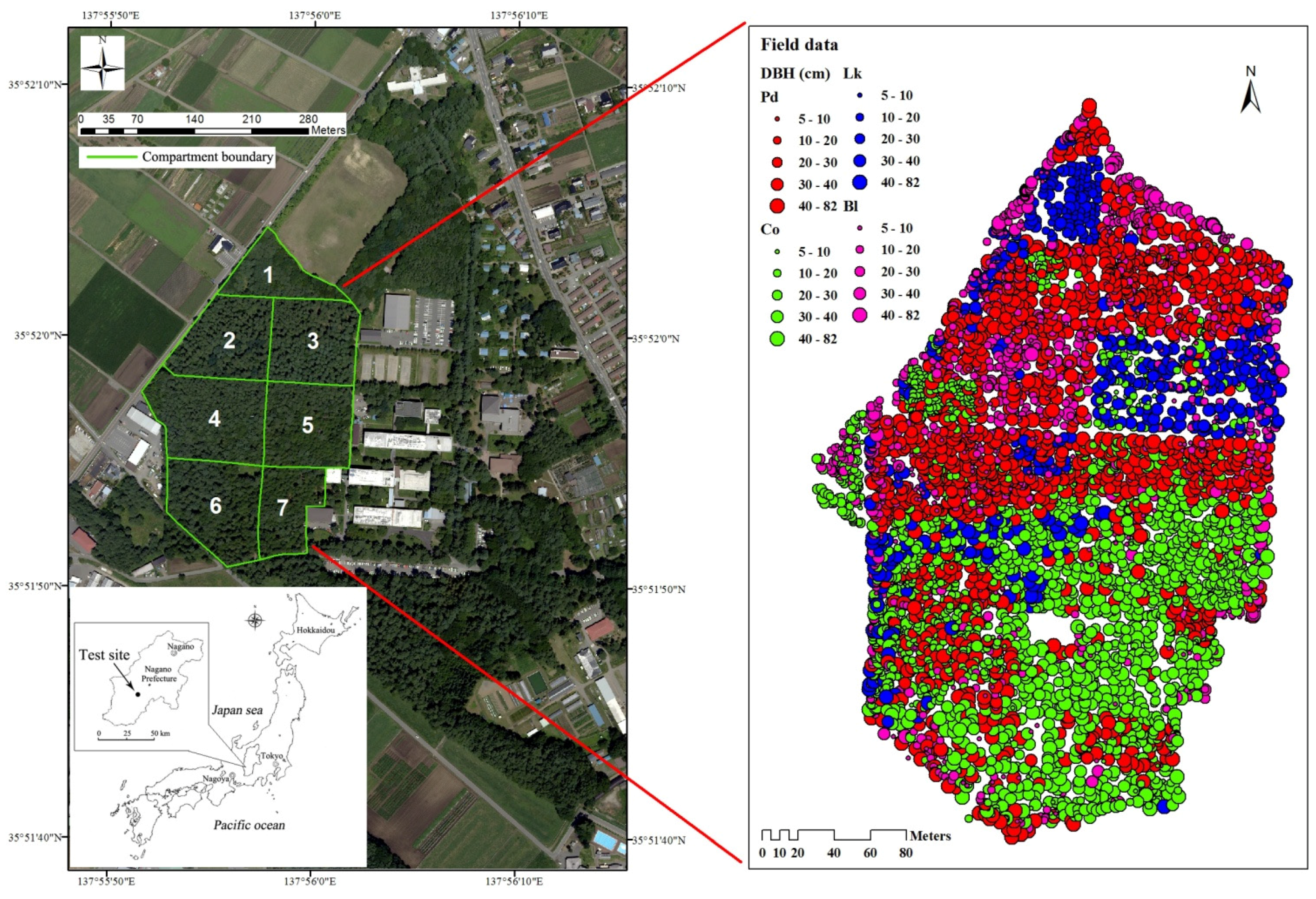

2.1. Study Area

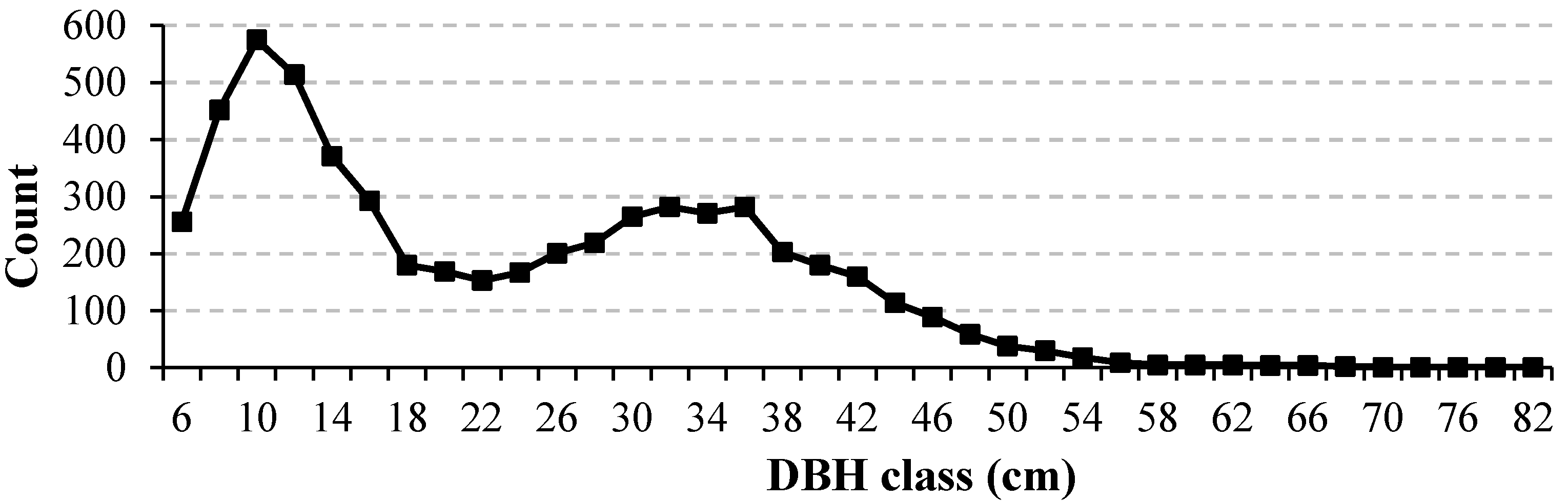

2.2. Field Measurements and Geographic Information System (GIS) Data

2.3. Airborne LiDAR Data

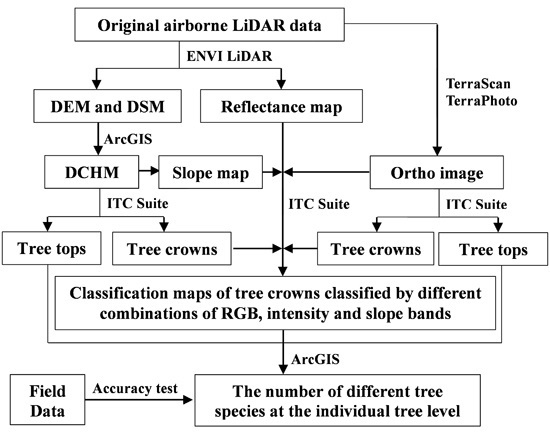

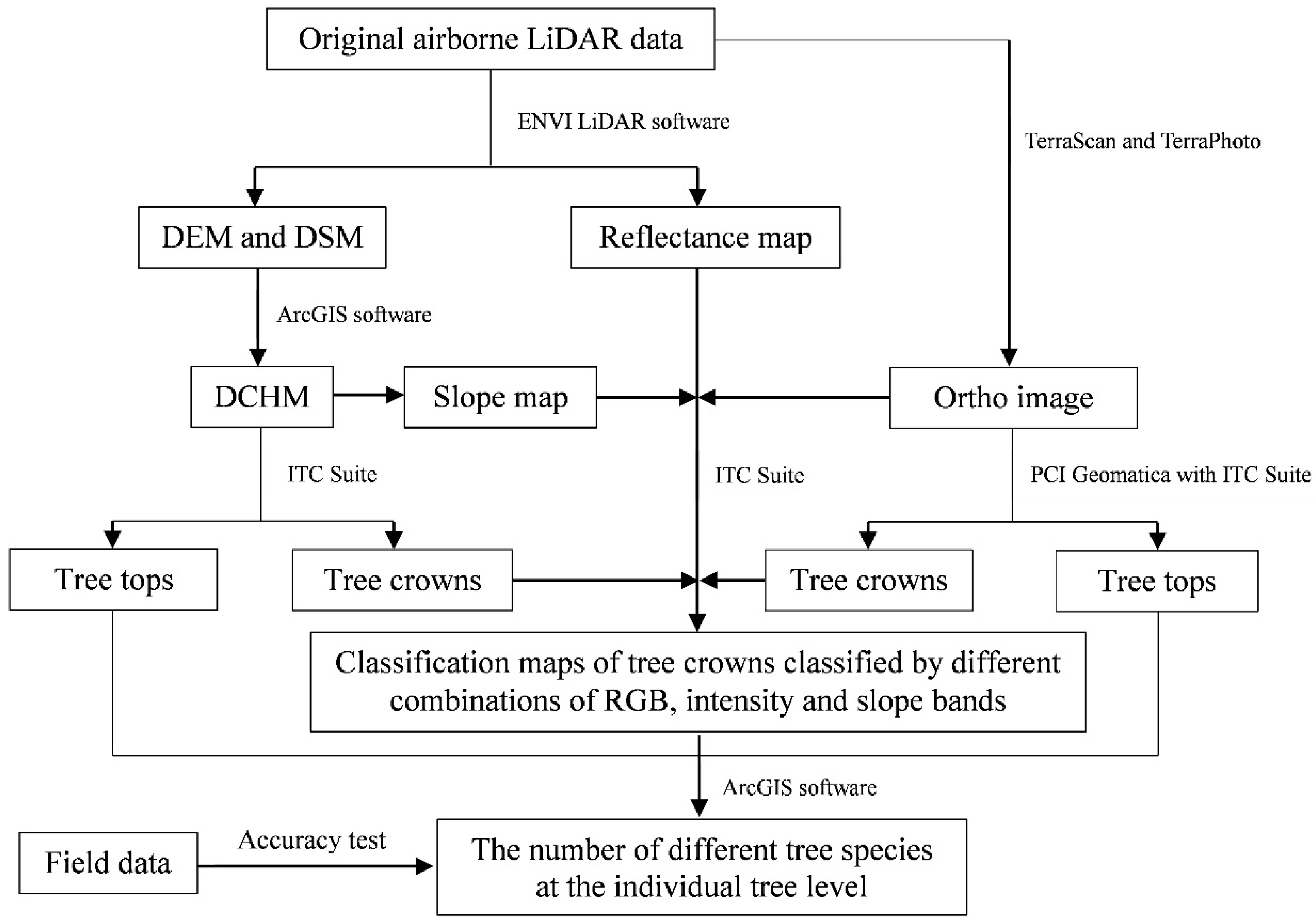

2.4. Data Analyses

2.4.1. Interpretation of Airborne Laser Scanning (ALS) Data

2.4.2. Interpretation of Tree Tops Using the Individual Tree Crown (ITC) Approach

2.4.3. Supervised Classification and Counting for Different Tree Species

3. Results

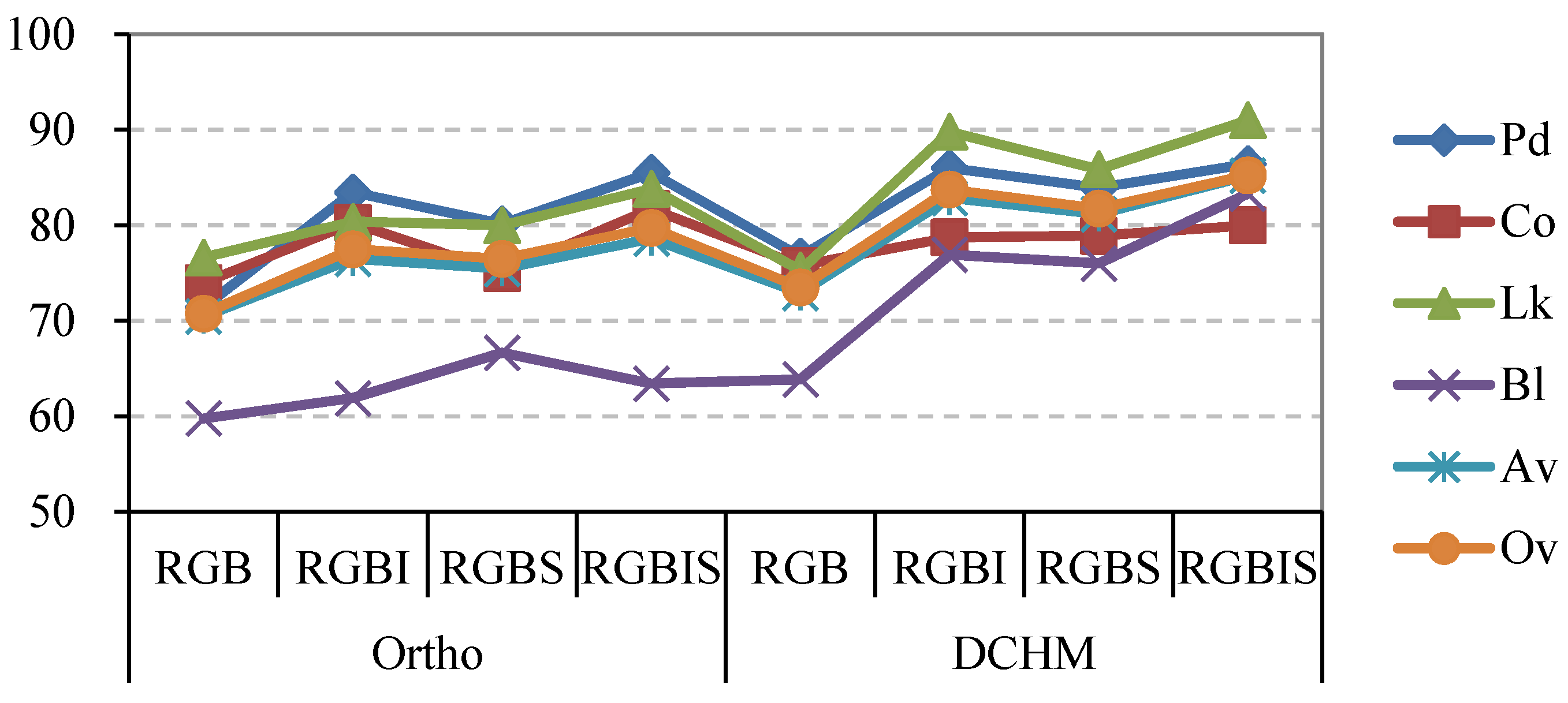

3.1. Object-Based Supervised Classification of Tree Species

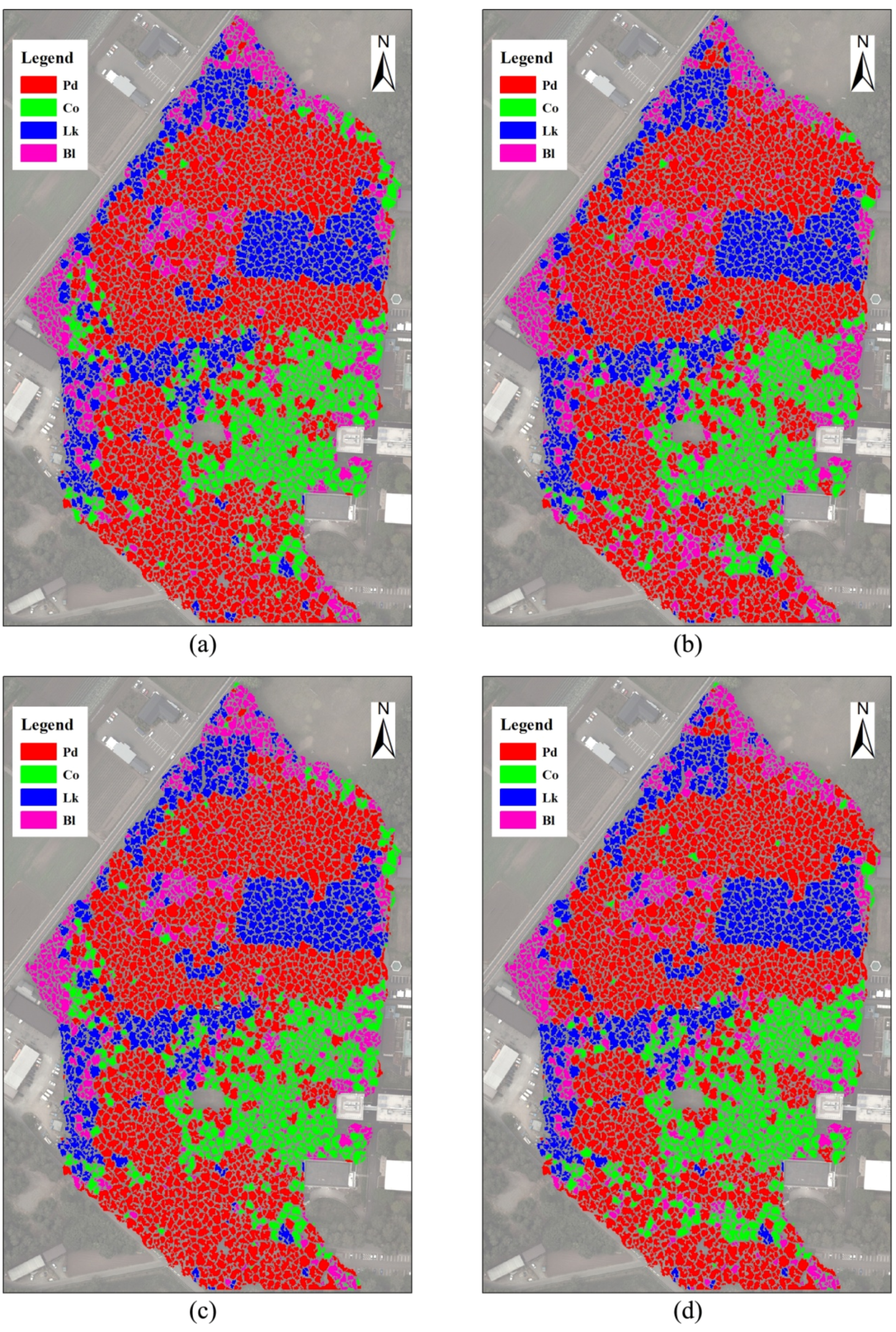

3.1.1. Classification of the Tree Crowns Delineated Using the Green Band

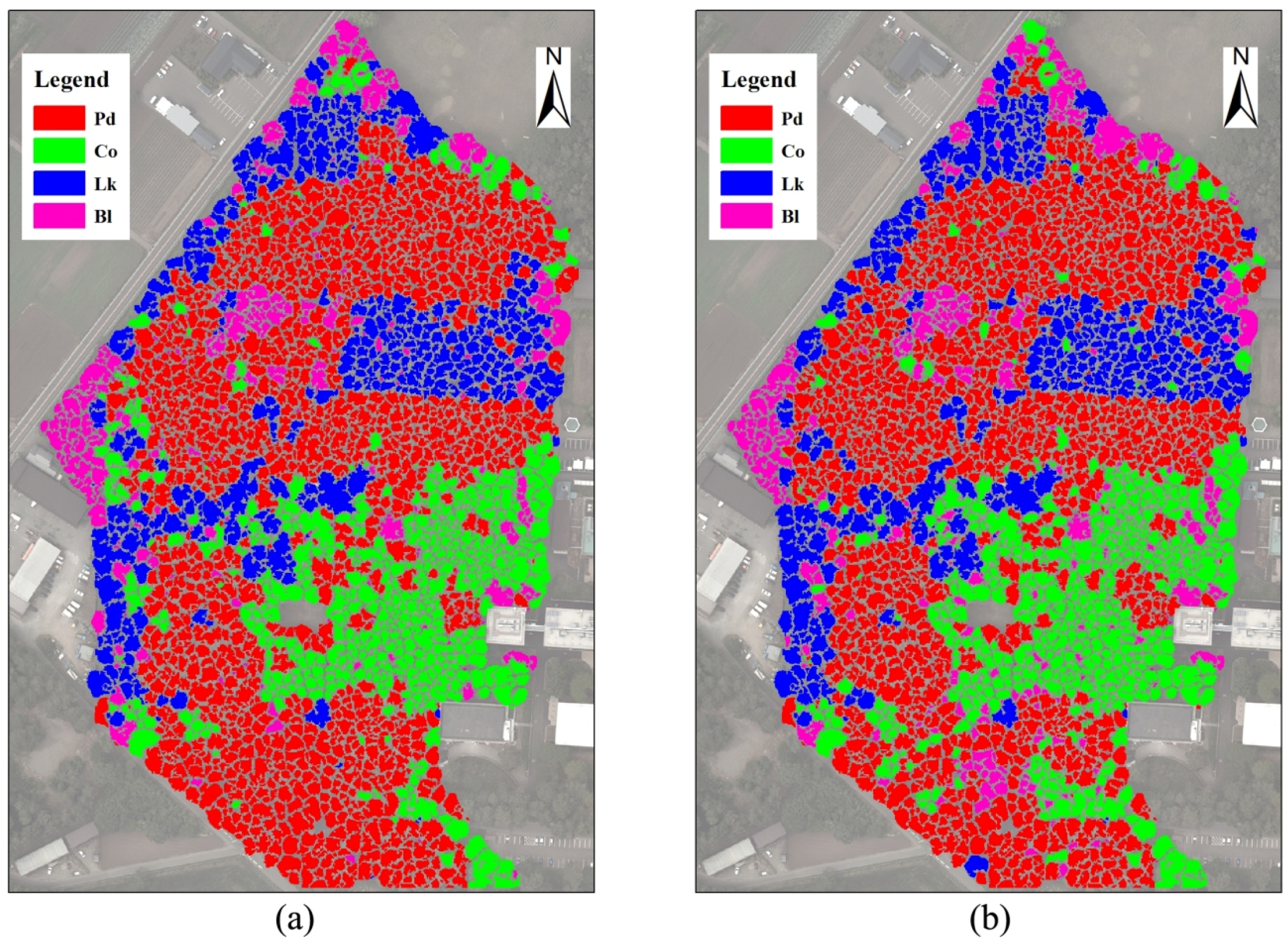

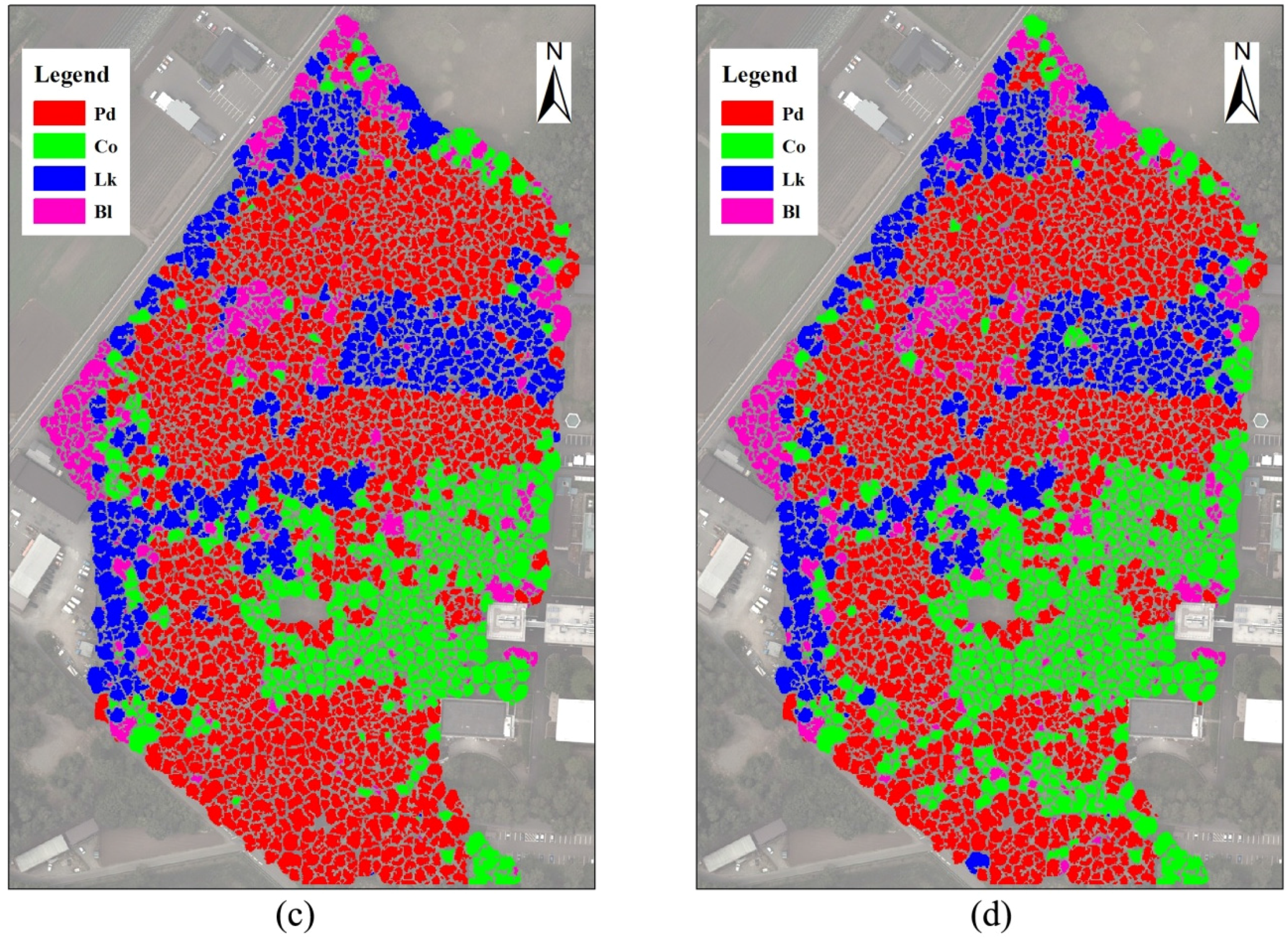

3.1.2. Classification of the Tree Crowns Delineated Using the Digital Canopy Height Model (DCHM)

3.1.3. Comparison of Classifications of the Tree Crowns Detected Using Different Data

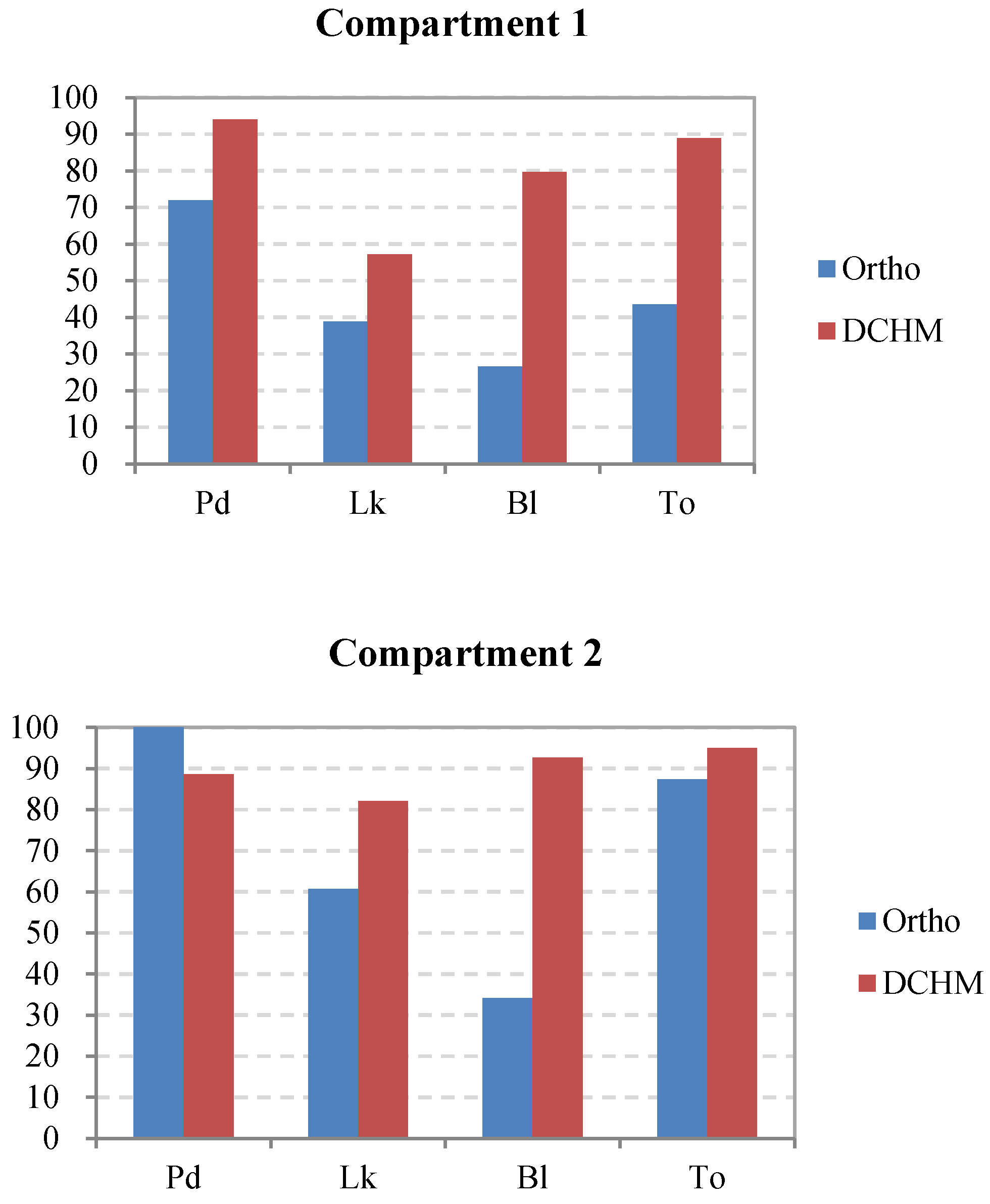

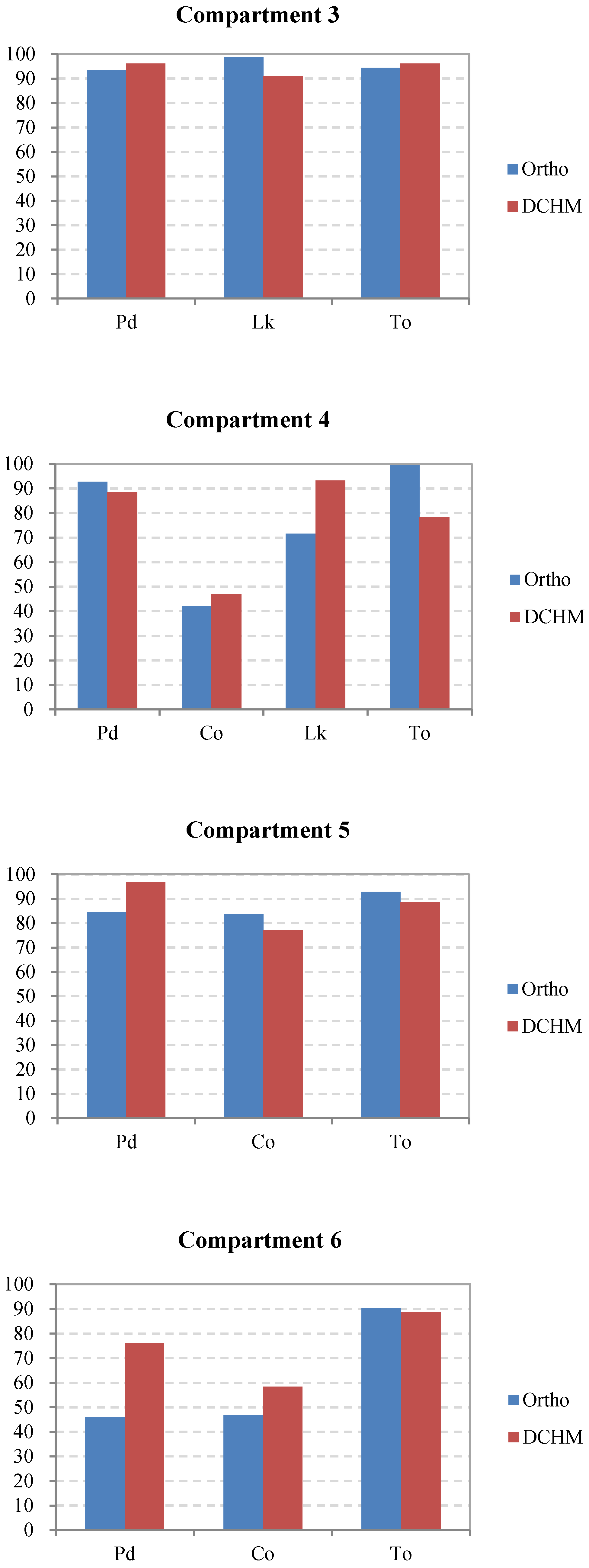

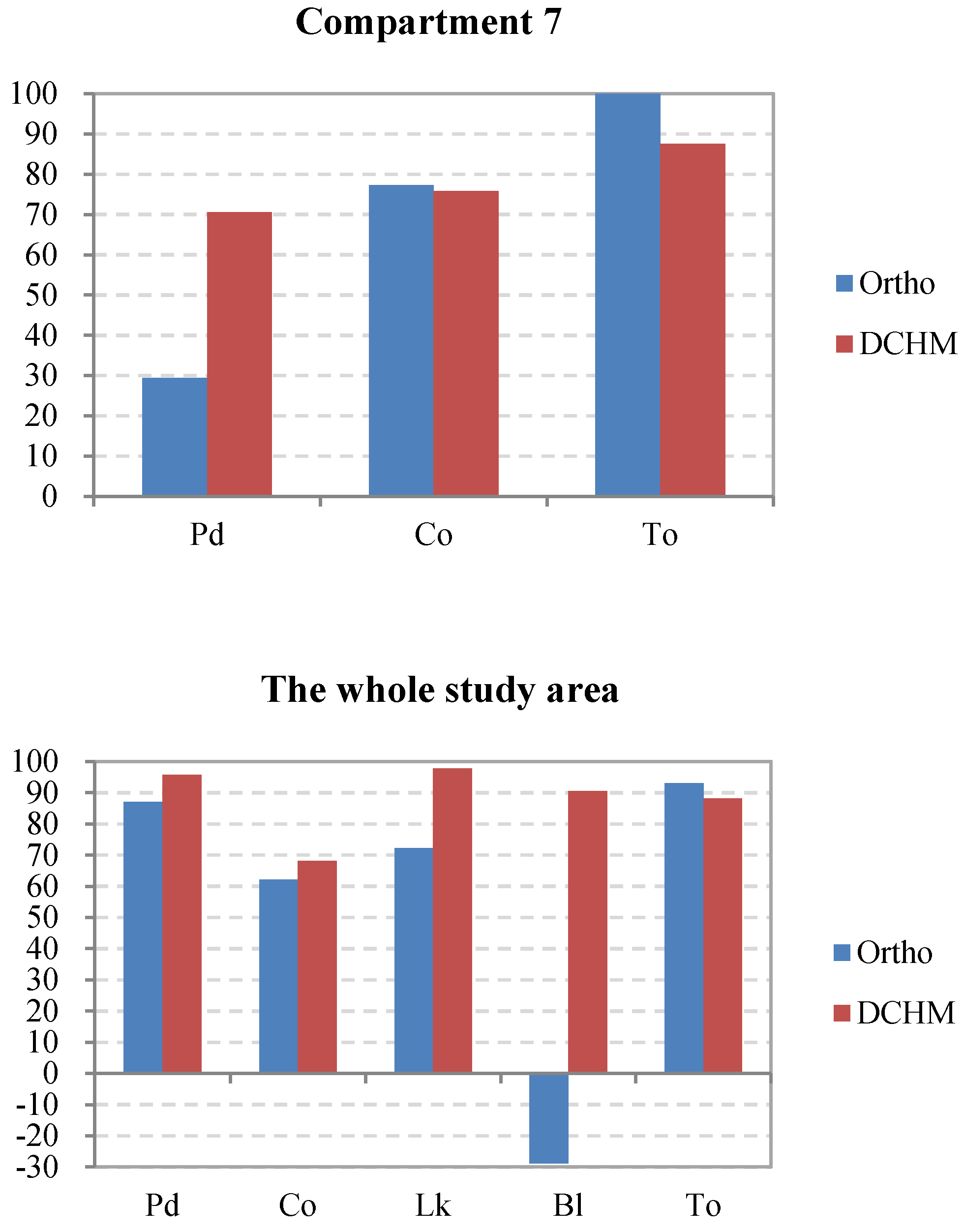

3.2. Counting Trees of Different Species in the Study Area

3.3. Accuracy of Position Matching of Interpreted Trees with Surveyed Trees

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Compartment | Dominant Species | Min DBH (cm) | Max DBH (cm) | Average DBH (cm) | Average Height (m) | Density a (stems/ha) | Density b (stems/ha) | Basal Area (m²/ha) |
|---|---|---|---|---|---|---|---|---|
| 1 | Pd, Lk, Bl | 5.4 | 59.0 | 22.8 | 15.2 | 583 | 245 | 31.0 |
| 2 | Pd, Lk, Bl | 7.4 | 56.9 | 21.8 | 15.9 | 822 | 299 | 39.1 |
| 3 | Pd, Lk | 5.0 | 58.7 | 22.3 | 16.7 | 744 | 328 | 37.4 |
| 4 | Pd, Co, Lk | 5.0 | 77.1 | 22.2 | 16.2 | 954 | 405 | 49.6 |
| 5 | Pd, Co | 5.0 | 81.6 | 24.3 | 16.5 | 775 | 385 | 46.8 |
| 6 | Pd, Co | 6.8 | 63.6 | 23.6 | 15.9 | 710 | 294 | 42.6 |
| 7 | Pd, Co | 7.7 | 65.3 | 26.3 | 17.4 | 632 | 362 | 41.8 |

| Bands | Class Name * | Pd | Co | Lk | Bl | Classified Totals | User Accuracy (%) | Overall Accuracy (%) | Kappa Coefficient |
|---|---|---|---|---|---|---|---|---|---|
| RGB | Pd | 95 | 18 | 19 | 1 | 133 | 71.4 | 70.8 | 0.60 |
| | Co | 12 | 54 | 1 | 6 | 73 | 74.0 | | |
| | Lk | 13 | 8 | 82 | 4 | 107 | 76.6 | | |
| | Bl | 17 | 10 | 8 | 52 | 87 | 59.8 | | |
| | Total | 137 | 90 | 110 | 63 | 400 | | | |
| | Producer Accuracy (%) | 69.3 | 60.0 | 74.6 | 82.5 | | | | |
| RGBI | Pd | 111 | 13 | 7 | 2 | 133 | 83.5 | 77.5 | 0.69 |
| | Co | 10 | 61 | 0 | 5 | 76 | 80.3 | | |
| | Lk | 19 | 0 | 86 | 2 | 107 | 80.4 | | |
| | Bl | 7 | 16 | 9 | 52 | 84 | 61.9 | | |
| | Total | 147 | 90 | 102 | 61 | 400 | | | |
| | Producer Accuracy (%) | 75.5 | 67.8 | 84.3 | 85.3 | | | | |
| RGBS | Pd | 121 | 8 | 18 | 4 | 151 | 80.1 | 76.5 | 0.68 |
| | Co | 13 | 63 | 1 | 7 | 84 | 75.0 | | |
| | Lk | 12 | 3 | 72 | 3 | 90 | 80.0 | | |
| | Bl | 5 | 9 | 11 | 50 | 75 | 66.7 | | |
| | Total | 151 | 83 | 102 | 64 | 400 | | | |
| | Producer Accuracy (%) | 80.1 | 75.9 | 70.6 | 78.1 | | | | |
| RGBIS | Pd | 112 | 13 | 4 | 2 | 131 | 85.5 | 79.8 | 0.72 |
| | Co | 7 | 67 | 3 | 5 | 82 | 81.7 | | |
| | Lk | 15 | 0 | 88 | 2 | 105 | 83.8 | | |
| | Bl | 8 | 10 | 12 | 52 | 82 | 63.4 | | |
| | Total | 142 | 90 | 107 | 61 | 400 | | | |
| | Producer Accuracy (%) | 78.9 | 74.4 | 82.2 | 85.3 | | | | |
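The accuracy figures in the table above can be recomputed from the confusion-matrix counts. The sketch below is illustrative only: it assumes that rows are the classified classes and columns are the reference classes (order Pd, Co, Lk, Bl), and it uses the standard definitions of user's accuracy, producer's accuracy, overall accuracy, and Cohen's kappa. The function name `accuracy_metrics` is hypothetical and is not taken from the software used in the study.

```python
import numpy as np

# Counts copied from the RGB rows of the table above.
# Assumption: rows = classified class, columns = reference class,
# both in the order Pd, Co, Lk, Bl.
cm_rgb = np.array([
    [95, 18, 19, 1],
    [12, 54, 1, 6],
    [13, 8, 82, 4],
    [17, 10, 8, 52],
])


def accuracy_metrics(cm):
    """Return (user, producer, overall, kappa) for a square confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()                    # total number of validation samples
    diag = np.diag(cm)              # correctly classified samples per class
    row_totals = cm.sum(axis=1)     # classified totals
    col_totals = cm.sum(axis=0)     # reference totals
    user = diag / row_totals        # user's accuracy per class
    producer = diag / col_totals    # producer's accuracy per class
    overall = diag.sum() / n        # observed agreement
    expected = (row_totals * col_totals).sum() / n ** 2  # chance agreement
    kappa = (overall - expected) / (1.0 - expected)
    return user, producer, overall, kappa


user, producer, overall, kappa = accuracy_metrics(cm_rgb)
print("User accuracy (%):", np.round(user * 100, 1))
print("Producer accuracy (%):", np.round(producer * 100, 1))
print("Overall accuracy (%):", round(overall * 100, 2))
print("Kappa:", round(kappa, 2))
```

Individual recomputed values may differ from the tabulated ones in the last decimal place because of rounding.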

| Bands | Class Name * | Pd | Co | Lk | Bl | Classified Totals | User Accuracy (%) | Overall Accuracy (%) | Kappa Coefficient |
|---|---|---|---|---|---|---|---|---|---|
| RGB | Pd | 99 | 15 | 11 | 4 | 129 | 76.7 | 73.5 | 0.64 |
| | Co | 10 | 72 | 5 | 8 | 95 | 75.8 | | |
| | Lk | 13 | 1 | 70 | 9 | 93 | 75.3 | | |
| | Bl | 12 | 4 | 14 | 53 | 83 | 63.9 | | |
| | Total | 134 | 92 | 100 | 74 | 400 | | | |
| | Producer Accuracy (%) | 73.9 | 78.3 | 70.0 | 71.6 | | | | |
| RGBI | Pd | 123 | 11 | 4 | 5 | 143 | 86.0 | 83.8 | 0.78 |
| | Co | 9 | 74 | 5 | 6 | 94 | 78.7 | | |
| | Lk | 2 | 1 | 88 | 7 | 98 | 89.8 | | |
| | Bl | 3 | 8 | 4 | 50 | 65 | 76.9 | | |
| | Total | 137 | 94 | 101 | 68 | 400 | | | |
| | Producer Accuracy (%) | 89.8 | 78.7 | 87.1 | 73.5 | | | | |
| RGBS | Pd | 120 | 9 | 13 | 1 | 143 | 83.9 | 81.8 | 0.75 |
| | Co | 11 | 71 | 4 | 4 | 90 | 78.9 | | |
| | Lk | 8 | 1 | 79 | 4 | 92 | 85.9 | | |
| | Bl | 5 | 4 | 9 | 57 | 75 | 76.0 | | |
| | Total | 144 | 85 | 105 | 66 | 400 | | | |
| | Producer Accuracy (%) | 83.3 | 83.5 | 75.2 | 86.4 | | | | |
| RGBIS | Pd | 121 | 11 | 3 | 5 | 140 | 86.4 | 85.3 | 0.80 |
| | Co | 13 | 84 | 6 | 2 | 105 | 80.0 | | |
| | Lk | 2 | 1 | 81 | 5 | 89 | 91.0 | | |
| | Bl | 2 | 5 | 4 | 55 | 66 | 83.3 | | |
| | Total | 138 | 101 | 94 | 67 | 400 | | | |
| | Producer Accuracy (%) | 87.7 | 83.2 | 86.2 | 82.1 | | | | |

| Compartment | Species | Field Data | Ortho | DCHM | Compartment | Species | Field Data | Ortho | DCHM |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Pd | 50 | 64 | 53 | 5 | Pd | 167 | 193 | 172 |
| | Co | - | 1 | 7 | | Co | 235 | 197 | 181 |
| | Lk | 49 | 79 | 70 | | Lk | 3 | 10 | 2 |
| | Bl | 64 | 111 | 51 | | Bl | 18 | 53 | 20 |
| | Total | 163 | 255 | 181 | | Total | 423 | 453 | 375 |
| 2 | Pd | 246 | 246 | 218 | 6 | Pd | 143 | 220 | 177 |
| | Co | 2 | 4 | 6 | | Co | 190 | 89 | 111 |
| | Lk | 28 | 39 | 33 | | Lk | 18 | 47 | 20 |
| | Bl | 41 | 68 | 44 | | Bl | 8 | 37 | 11 |
| | Total | 317 | 357 | 301 | | Total | 359 | 393 | 319 |
| 3 | Pd | 182 | 194 | 175 | 7 | Pd | 51 | 87 | 66 |
| | Co | 4 | 4 | 6 | | Co | 207 | 160 | 157 |
| | Lk | 169 | 167 | 154 | | Lk | 1 | 1 | - |
| | Bl | 5 | 15 | 11 | | Bl | 7 | 18 | 10 |
| | Total | 360 | 380 | 346 | | Total | 266 | 266 | 233 |
| 4 | Pd | 263 | 282 | 233 | All | Pd | 1,138 | 1,286 | 1,090 |
| | Co | 143 | 60 | 67 | | Co | 785 | 488 | 535 |
| | Lk | 88 | 113 | 82 | | Lk | 357 | 456 | 349 |
| | Bl | 24 | 60 | 23 | | Bl | 170 | 389 | 186 |
| | Total | 518 | 515 | 405 | | Total | 2,450 | 2,619 | 2,160 |
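One simple way to read the counts above is as a signed relative difference between each remote-sensing count and the field count. The sketch below uses the "All" rows of the table. The relative-error measure and the helper name `relative_error` are assumptions made for illustration, not necessarily the comparison used by the authors.

```python
# Tree counts copied from the "All" compartment rows of the table above.
field = {"Pd": 1138, "Co": 785, "Lk": 357, "Bl": 170}
ortho = {"Pd": 1286, "Co": 488, "Lk": 456, "Bl": 389}
dchm = {"Pd": 1090, "Co": 535, "Lk": 349, "Bl": 186}


def relative_error(estimated, reference):
    """Signed relative error in percent (hypothetical helper).

    Positive values mean over-counting relative to the field survey,
    negative values mean under-counting.
    """
    return 100.0 * (estimated - reference) / reference


# Per-species errors for each data source.
for name, counts in (("Ortho", ortho), ("DCHM", dchm)):
    for species, n_field in field.items():
        err = relative_error(counts[species], n_field)
        print(f"{name:5s} {species}: {err:+.1f}%")

# Errors on the total number of trees.
total_field = sum(field.values())
err_ortho_total = relative_error(sum(ortho.values()), total_field)
err_dchm_total = relative_error(sum(dchm.values()), total_field)
print(f"Ortho total: {err_ortho_total:+.1f}%")
print(f"DCHM total:  {err_dchm_total:+.1f}%")
```

A signed error keeps over- and under-counting distinguishable, which matters here because the two data sources err in opposite directions on the total count.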

| RS Source | Class Name | Field Data: Pd | Field Data: Co | Field Data: Lk | Field Data: Bl | Total | Commission Accuracy (%) | Overall Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Orthophoto | Pd | 1,051 | 94 | 59 | 45 | 1,249 | 84.1 | 75.6 |
| | Co | 63 | 439 | 53 | 36 | 591 | 74.3 | |
| | Lk | 108 | 24 | 421 | 27 | 580 | 72.6 | |
| | Bl | 68 | 72 | 57 | 275 | 472 | 58.3 | |
| | Total | 1,290 | 629 | 590 | 383 | 2,892 | | |
| | Omission Accuracy (%) | 81.5 | 69.8 | 71.4 | 71.8 | | | |
| DCHM | Pd | 1,176 | 46 | 41 | 33 | 1,296 | 90.7 | 85.7 |
| | Co | 35 | 526 | 39 | 36 | 636 | 82.7 | |
| | Lk | 37 | 22 | 482 | 21 | 562 | 85.8 | |
| | Bl | 42 | 35 | 28 | 293 | 398 | 73.6 | |
| | Total | 1,290 | 629 | 590 | 383 | 2,892 | | |
| | Omission Accuracy (%) | 91.2 | 83.6 | 81.7 | 76.5 | | | |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, S.; Katoh, M. Interpretation of Forest Resources at the Individual Tree Level in Japanese Conifer Plantations Using Airborne LiDAR Data. Remote Sens. 2016, 8, 188. https://doi.org/10.3390/rs8030188
