1. Introduction

Unmanned aerial vehicles (UAVs) are useful remote-sensing platforms for infrastructure monitoring and inspection. Their small size and maneuverability make them highly mobile sensor platforms that can quickly gather information about an environment that would otherwise be difficult to obtain. UAVs have promising applications in many fields and provide increasingly valuable services to industry. Although UAVs have historically been used largely in military applications, new industrial opportunities may utilize them as remote-sensing tools in areas as diverse as precision agriculture, landslide observation, pipeline surveillance, photogrammetric modeling and infrastructure monitoring [1,2,3,4,5,6,7]. UAVs have an advantage over manned aircraft in these applications due to their autonomy, their ability to capture data at close range and high resolution, and their reduced cost [8].

One particularly attractive use of UAVs for many industries is as a highly mobile sensor platform for 3D reconstruction [9]. Images collected during UAV missions can be processed to create 3D models of a scene using techniques such as structure from motion (SfM) [10]. The resulting models are useful for observation of terrain changes [11], inspection of existing infrastructure [12] and environmental monitoring [13].

Currently, many civilian UAV missions are flown using manual pilot control, making the quality of the collected data heavily dependent on the skill and judgment of the operator or the weather conditions that make precision flying more difficult. This can often lead to gaps between the collected images or areas where insufficient images are captured for 3D reconstruction. 3D reconstruction using SfM, for example, is particularly sensitive to the overlap and angles of the provided images [

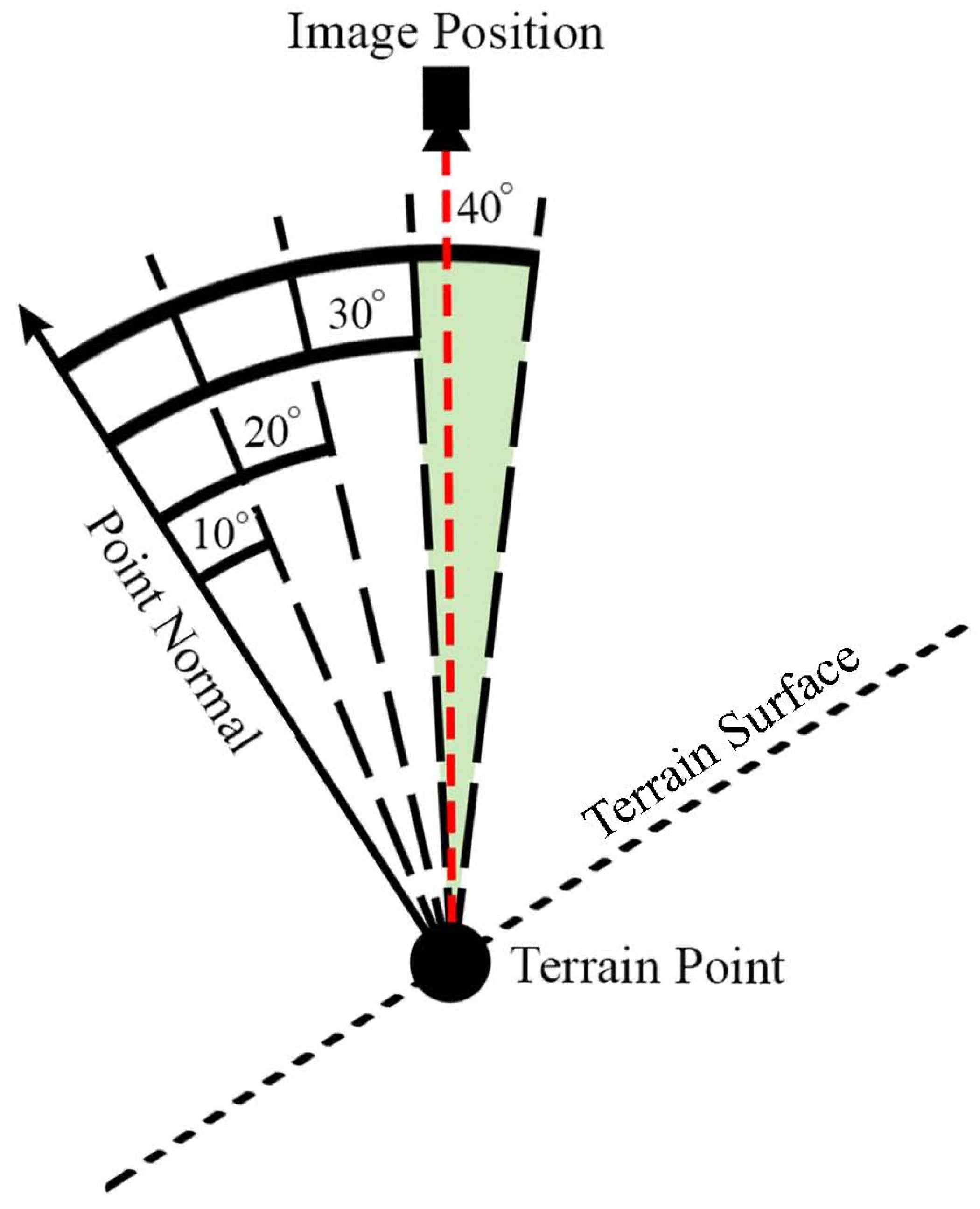

14]. Although automated flights are becoming increasingly more common, most widely-available flight planners use a simple grid or “lawnmower” flight pattern. While easily adjustable, these patterns often take little account of the 3D geometry of a scene, leading to potential gaps and areas of reduced accuracy. These problems are alleviated through a new view planning optimization algorithm described in this paper.

View planning refers to identifying the best sensor locations for observing an object or site and is also known as active vision, active sensing, active perception or photogrammetric network design [15]. Early approaches have roots in the “art gallery” problem, which deals with optimally placing museum guards to protect an exhibit [16]. Other early motivations included industrial inspection and quality control for parts manufacturing [17].

View planning is divided into two general categories: model-based and exploratory. Exploratory view planning, also known as next-best view planning, does not rely on any prior knowledge about the scene. Early works on the subject include that of Remagnino et al. on active camera control [18] and Kristensen on sensor planning in partially-known environments [19]. Dunn and Frahm develop an algorithm for next-best view planning in generic scenes [20]. Krainin et al. present an active vision planner in which a robot manipulates an object in front of the camera to achieve a complete inspection [21]. Some work, such as that by Trummer et al. and Wenhardt et al., has also been performed using various uncertainty criteria to plan the next viewing position for the sensor [22,23].

This research deals primarily with model-based view planning, which presumes some prior knowledge about the scene. For UAV applications, this is a good assumption, as rough elevation data are generally available for most areas of interest to Earth science and infrastructure monitoring. Furthermore, view planning is effective even with only a simplified version of the model geometry [24]. In UAV applications, a rough model may also be created quickly using a pre-programmed flyover of the area [14].

Early works in model-based view planning include those by Cowan and Kovesi [25], Tarbox and Gottschlich [26] and Tarabanis et al. [27]. Model-based view planning consists of two parts [28]. The first is the generation and selection of an acceptable set of viewpoints to cover the scene. The second is the calculation of a path to reach the desired viewpoints efficiently, which is an instance of the traveling salesman problem (TSP). These steps can occur separately or simultaneously in a global optimization. A global solution is desirable, but often impractical to compute [29]. The current work follows the common approach of decoupling the two steps into separate optimization problems. Scott showed that model-based view planning is analogous to the set covering problem, which is NP-complete [30]. NP refers to nondeterministic polynomial time, and NP-complete is the class containing the hardest problems in NP. This classification means that, while any given solution can be checked quickly, no efficient method for finding a solution is known. For more information, see [31]. Although the view planning problem is complex, a number of researchers have proposed potential solutions that approach optimality.

Scott (2007) presents a theoretical framework for model-based view planning for range cameras. He poses the problem as a set covering problem and develops a four-part “modified measurability matrix” algorithm for its solution. He separates viewpoint selection and path planning, using a greedy algorithm to solve the first and a heuristic approximation algorithm to solve the second. Greedy algorithms work by choosing a locally-optimal option at each decision stage. Heuristic algorithms utilize “rules of thumb” that can produce good results, but have no guarantees of optimality. Neither algorithm guarantees a global optimum; Scott chose them as a balance between solution quality and efficiency [32]. Due to the general nature of this work, these results can be extended to other sensor types in addition to range scanners. The components of the fitness function described in Section 2.1.4 of the current work are derived in part from Scott’s measurability criteria.
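
The greedy strategy for viewpoint selection as a set covering problem can be illustrated with a minimal sketch. This is not Scott's measurability-matrix algorithm; the viewpoint names, the visibility sets and the function names are hypothetical, and visibility is assumed to be precomputed. The checker also shows why a proposed cover is quick to verify even though finding a minimum cover is hard.

```python
from typing import Dict, List, Set


def greedy_view_selection(visible: Dict[str, Set[int]], targets: Set[int]) -> List[str]:
    """Pick viewpoints greedily until every coverable target point is seen."""
    uncovered = set(targets)
    chosen: List[str] = []
    while uncovered:
        # Locally optimal step: take the viewpoint that sees the most
        # still-uncovered points (ties broken by viewpoint name).
        best = max(sorted(visible), key=lambda v: len(visible[v] & uncovered))
        gain = visible[best] & uncovered
        if not gain:
            break  # the remaining points are not visible from any candidate
        chosen.append(best)
        uncovered -= gain
    return chosen


def covers_all(chosen: List[str], visible: Dict[str, Set[int]], targets: Set[int]) -> bool:
    """Verify a proposed cover in time linear in the size of the chosen sets."""
    seen: Set[int] = set()
    for v in chosen:
        seen |= visible[v]
    return targets <= seen


# Hypothetical data: which surface points each candidate viewpoint can see.
candidates = {
    "v1": {1, 2, 3},
    "v2": {3, 4},
    "v3": {4, 5, 6},
    "v4": {1, 6},
}
surface_points = {1, 2, 3, 4, 5, 6}
plan = greedy_view_selection(candidates, surface_points)
```

The greedy choice is cheap to compute at each step, but it offers no guarantee that the resulting set of viewpoints is the smallest possible one.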

Blaer and Allen (2007) perform view planning for ground robot site inspections using a unique voxel space and ray tracing approach to represent the solution space. The robot first uses a 2D site map to plan an initial inspection path and generate a rough 3D map. Viewpoints are then selected sequentially using a greedy algorithm, and the robot path is generated using a Voronoi diagram-based method. The authors test their algorithm on a large historic structure. Their tests show the algorithm to be effective, but quite slow, requiring 15–20 min to compute the next viewpoint [33].

The field of photogrammetric network design closely overlaps the field of view planning, but focuses more closely on the requirements taken from photogrammetry for accurate reconstruction. This type of design aims for well-distributed imaging network geometry and often employs carefully-designed targets and scale bars. This approach has been shown to produce very good results in small scenes, with Alsadik et al. demonstrating accuracies of up to 1 mm in cultural heritage preservation projects [34,35]. The current work does not attempt to produce a rigorous network design, focusing instead on providing sufficient coverage of large scenes for 3D reconstruction to take place.

Scott et al. identify highly mobile, six-degree-of-freedom positioning systems as an open problem in view planning research [30]. UAVs fill that need, but introduce additional challenges. Past work in view planning often focuses on small-scale industrial inspections, where a fixed robotic positioning system manipulates the sensor. The size of the inspected object in such systems rarely exceeds the sensor viewing area [24]. However, in UAV applications, the observed surface is often much larger than the sensor viewing area. Work done on view planning for site inspection commonly uses ground robots, with viewpoints in a 2D plane [36,37]. In contrast, UAVs move in three dimensions, allowing viewpoints in an additional dimension. As a result, UAV view plans require more viewpoints to cover a 3D surface. This increases the computational complexity compared to that for the manufacturing inspection case for some portions of the view planning process, including visibility analysis, viewpoint selection and the traveling salesman problem [15].

Some work addresses these challenges. Schmid et al. present a multicopter view planning algorithm that uses a heuristic view selection approach to create a set of viewpoints [14]. The viewpoints cover a desired area while meeting the constraints for multi-view stereo reconstruction. The algorithm approximates the shortest path to the chosen viewpoints using a farthest-insertion heuristic. The authors test the algorithm on a medium- and a large-scale scene with acceptable results for 2.5D reconstructions. However, while model resolution is reported, no quantitative analysis is performed to establish the accuracy of the models. Hoppe et al. develop a similar algorithm that differs by including an analysis of anticipated reconstruction error using the E-optimality criterion, which maximizes the minimum eigenvalue of the information matrix. They plan the path using a greedy algorithm with angle constraints and test their algorithm on a house under construction. The authors obtain errors of less than 5 cm across 92% of the reconstructed points [38]. Both of these projects are closely related to the current work and provide alternate methods for achieving similar goals. The lack of numerical analysis by Schmid et al. makes direct comparison difficult, but the results of the current paper are compared to those obtained by Hoppe et al. in Section 4.
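
For reference, the E-optimality criterion mentioned above can be written compactly. The symbols are assumptions introduced here for illustration and are not taken from Hoppe et al.: $\mathcal{V}$ denotes a candidate set of views, $M(\mathcal{V})$ the information matrix of the estimated parameters for that set, and $\lambda_{\min}$ the smallest eigenvalue.

$$
\mathcal{V}^{*} = \arg\max_{\mathcal{V}} \; \lambda_{\min}\bigl(M(\mathcal{V})\bigr)
$$

When $M$ is invertible, the covariance of the estimate is $M^{-1}$, so maximizing the smallest eigenvalue of $M$ is equivalent to minimizing the largest eigenvalue of the covariance, that is, the variance along the worst-determined direction.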

In a more theoretically-based approach, Englot and Hover present a view planning algorithm for underwater robot inspections, which share a common scale and dimensionality with many UAV planning problems. The solution to the set cover problem is found using both a greedy algorithm and linear programming relaxation with rounding. The algorithm efficiently computes a sensor plan that gives 100% coverage of complex structures [39]. In a subsequent paper, the authors revisit the same problem, this time fitting the input model with a Gaussian surface, modeling uncertainty in the surface using Bayesian regression and planning views that minimize uncertainty in the surface [40]. This is related to the objective of the fitness function in the current work, which seeks to maximize the number of terrain point locations that can successfully be estimated using SfM.

A promising approach for large-scale view planning is the use of evolutionary or genetic algorithms, which use stochastic processes to iteratively progress toward an optimum. Genetic algorithms are especially useful in this application because of the nonlinear and non-convex nature of the problem, which can cause difficulties for traditional gradient-based optimization techniques [17]. Olague uses this approach to develop a camera network design for a robotic camera positioning system [41]. Chen and Li (2004) also apply this approach, using a genetic algorithm to choose viewpoint sets with a min-max objective. The viewpoint sets are evaluated based on the number of viewpoints, the visibility of model features and sensor constraints [17]. Once a set of viewpoints is selected, the shortest path is estimated using the Christofides algorithm, which guarantees a tour no longer than three halves of the optimum [42].

In a study closely related to the subject of this paper, Yang et al. develop a genetic optimization algorithm that selects camera positions for UAV inspection of transmission tower equipment [43]. The algorithm discretizes both the tower and a cylindrical surface around the tower. Each point on the cylindrical surface becomes a potential viewpoint. The genetic algorithm then searches for the optimal viewpoint for each portion of the tower surface, evaluating viewpoints based on visibility, viewing quality and distance. The optimized viewpoints are then compared to an evenly-distributed set of viewpoints. The authors found that the optimized viewpoints performed better than the evenly-distributed set in all three evaluation metrics. The current project performs similar tests for terrain features and structures and additionally adds image orientation to the list of optimized variables.

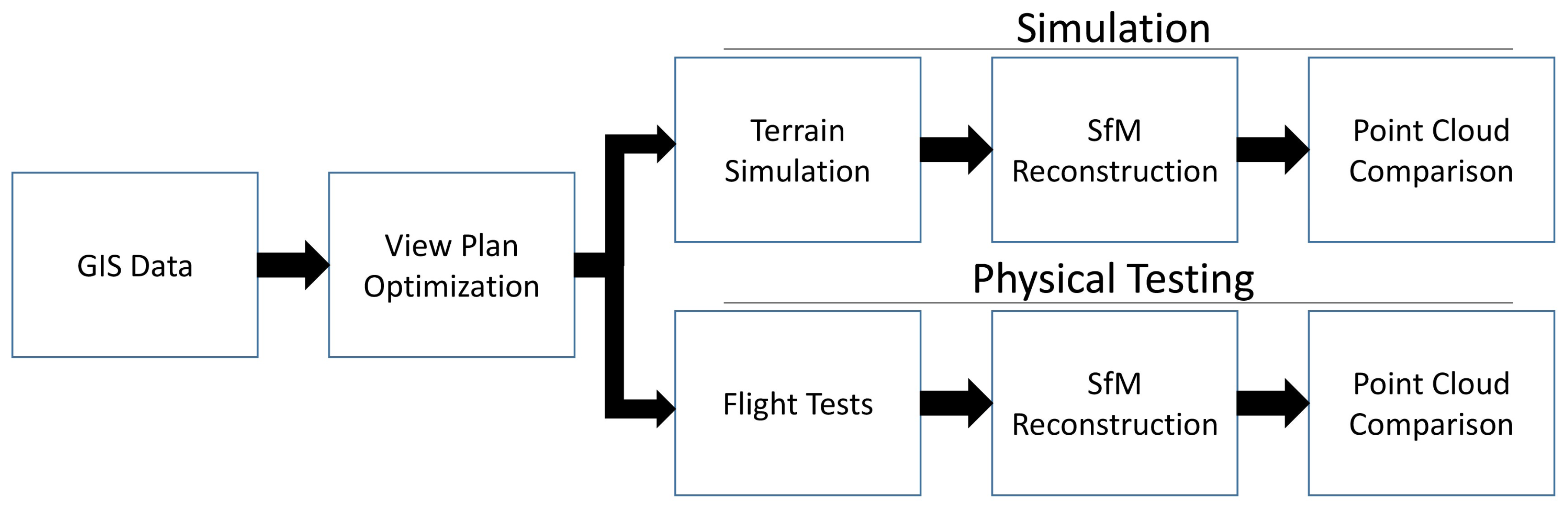

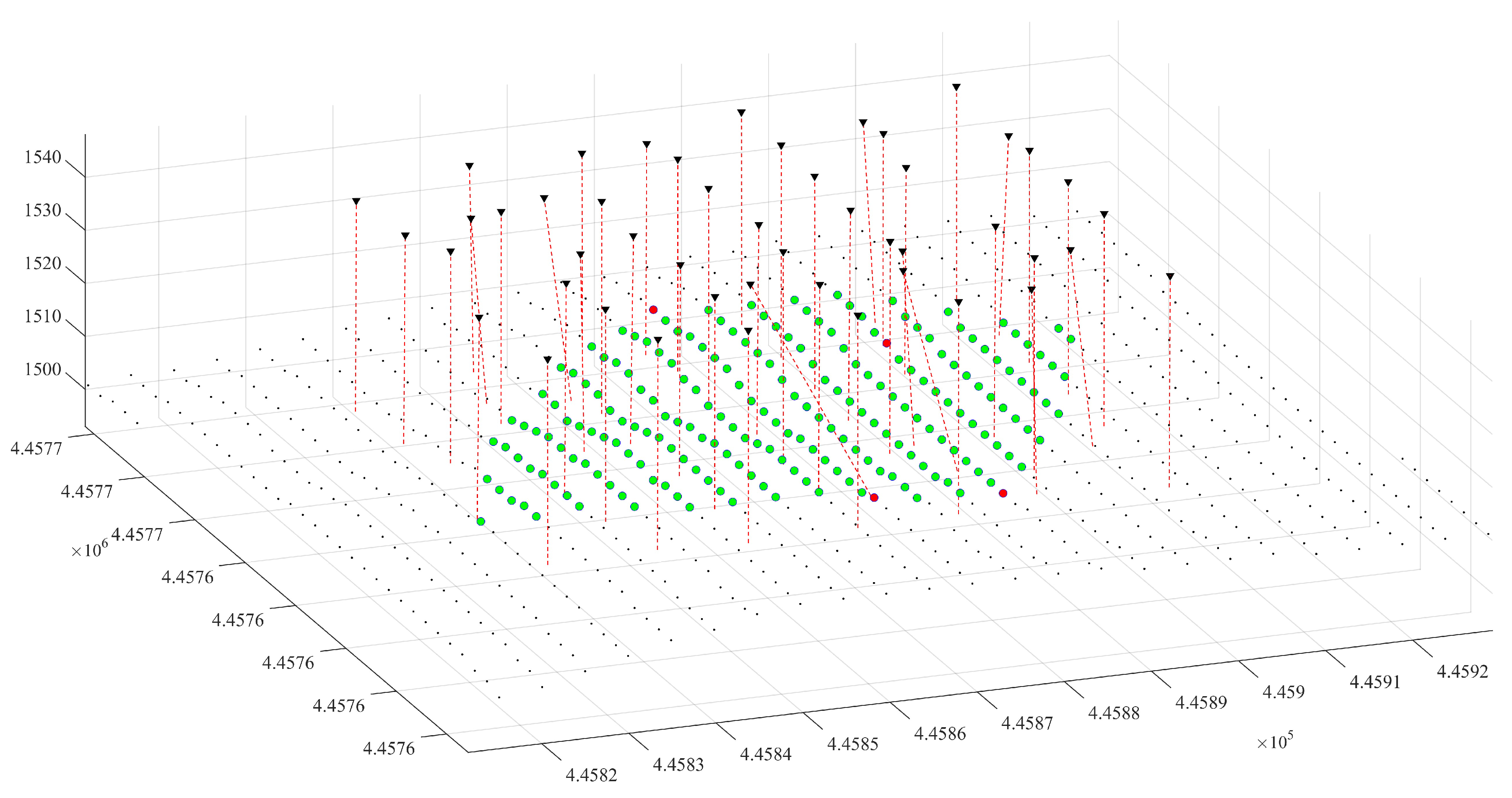

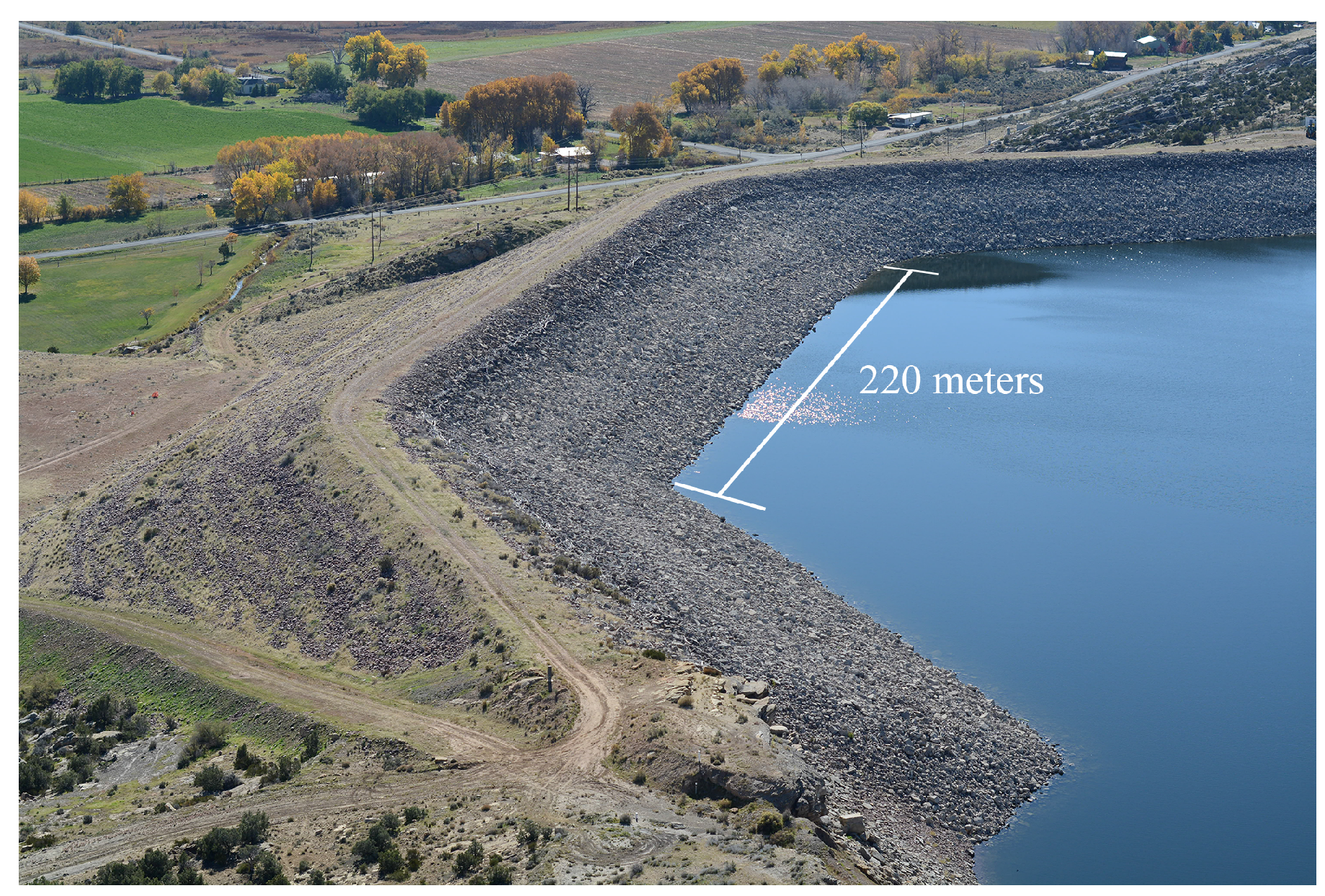

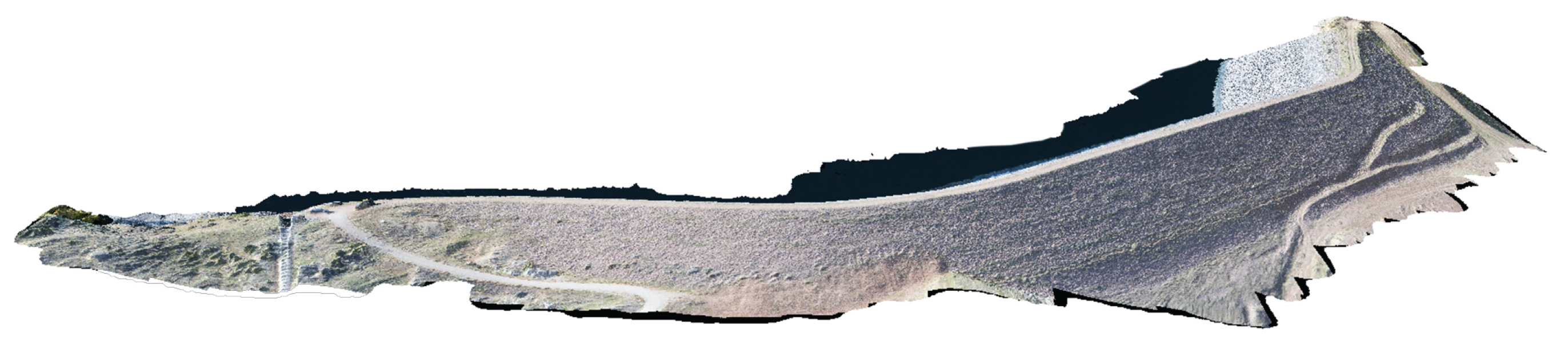

The current study extends and improves upon previous work by using a genetic algorithm for view planning in the large and unstructured environments common in UAV terrain modeling [44]. In contrast to previous evolutionary-based work, the view space is formulated as continuous rather than discretized, allowing more flexibility in the solution and the possibility of a more optimal result [45]. A novel simulation environment using terrain-generation software is also developed for UAV view plan testing. In addition, a quantitative analysis is performed to compare the models created using an optimal view set to those created using three alternative flight patterns in terms of accuracy, coverage and model completeness.
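
The idea of a genetic algorithm over a continuous view space can be sketched as follows. This is a toy illustration, not the planner developed in this paper: camera positions are 2D, the fitness is a simple count of target points within a fixed radius, and all names, operators and parameter values (tournament selection, uniform crossover, Gaussian mutation, `sigma`, `radius`) are assumptions chosen for brevity.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Plan = List[Point]  # one individual: a fixed number of camera positions


def coverage(plan: Plan, targets: List[Point], radius: float) -> int:
    """Toy fitness: number of targets within `radius` of at least one camera."""
    r2 = radius * radius
    return sum(
        any((tx - cx) ** 2 + (ty - cy) ** 2 <= r2 for cx, cy in plan)
        for tx, ty in targets
    )


def evolve(targets, n_cams=3, radius=2.0, pop_size=30, generations=40,
           bounds=(0.0, 10.0), sigma=0.5, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds

    def random_plan() -> Plan:
        return [(rng.uniform(lo, hi), rng.uniform(lo, hi)) for _ in range(n_cams)]

    def fitness(plan: Plan) -> int:
        return coverage(plan, targets, radius)

    def tournament(pop):
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    def crossover(a: Plan, b: Plan) -> Plan:
        # Uniform crossover: each camera slot is inherited from one parent.
        return [ca if rng.random() < 0.5 else cb for ca, cb in zip(a, b)]

    def clip(v: float) -> float:
        return min(hi, max(lo, v))

    def mutate(plan: Plan) -> Plan:
        # Gaussian perturbation of the continuous coordinates, kept in bounds.
        return [(clip(x + rng.gauss(0, sigma)), clip(y + rng.gauss(0, sigma)))
                for x, y in plan]

    population = [random_plan() for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        best = max(population, key=fitness)
        history.append(fitness(best))
        children = [best]  # elitism: the best plan survives unchanged
        while len(children) < pop_size:
            child = crossover(tournament(population), tournament(population))
            children.append(mutate(child))
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


# Hypothetical 5 x 5 grid of terrain points on a 10 x 10 site.
targets = [(float(x), float(y)) for x in range(1, 10, 2) for y in range(1, 10, 2)]
best_plan, best_history = evolve(targets)
```

Because the genes are real-valued coordinates rather than indices into a discretized candidate set, mutation can move a camera by an arbitrarily small amount, which is the flexibility that a continuous formulation provides.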

4. Discussion

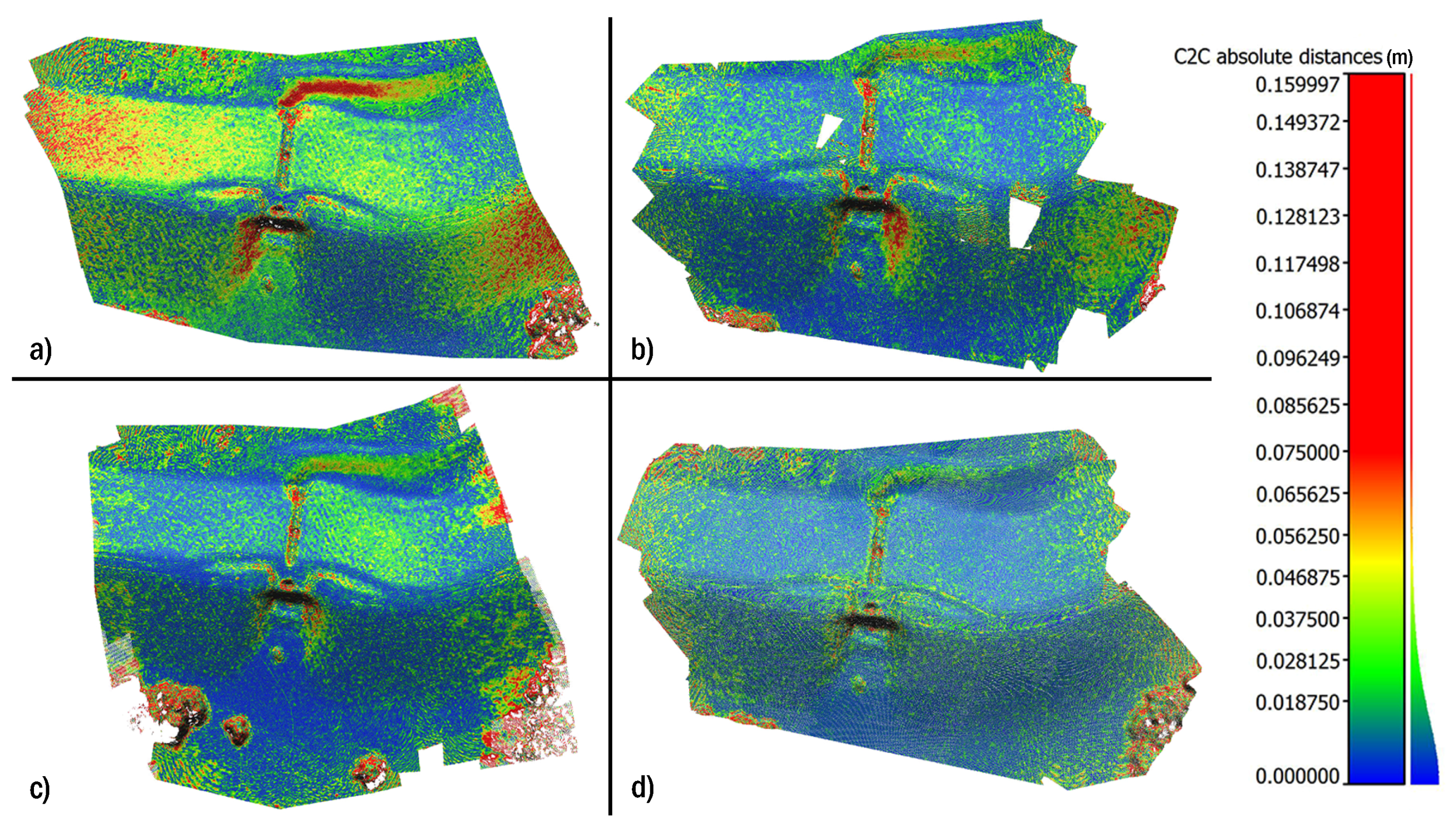

The results of the simulated case studies are summarized in Table 11.

Table 11. Simulation results summary.


| Case | View Plan | Gaussian Mean Error (cm) | Standard Deviation (cm) | 95th Percentile (cm) | Model Coverage (%) | Model Completeness (%) |
|---|---|---|---|---|---|---|
| Case 1 | Grid | 3.2 | 3.6 | 9.5 | 100 | 93.67 |
| Case 1 | Grid with Noise | 1.8 | 1.8 | 5.3 | 100 | 93.25 |
| Case 1 | Arc | 2.1 | 3.8 | 6.6 | 100 | 97.72 |
| Case 1 | Optimized | 1.6 | 1.7 | 4.8 | 100 | 99.91 |
| Case 2 | Grid | 10.8 | 11.1 | 33.3 | 100 | 87.94 |
| Case 2 | Grid with Noise | 9.20 | 8.90 | 25.2 | 100 | 87.10 |
| Case 2 | Arc | 11.1 | 20.0 | 39.2 | 68 | 87.64 |
| Case 2 | Optimized | 8.30 | 9.00 | 24.6 | 100 | 88.02 |

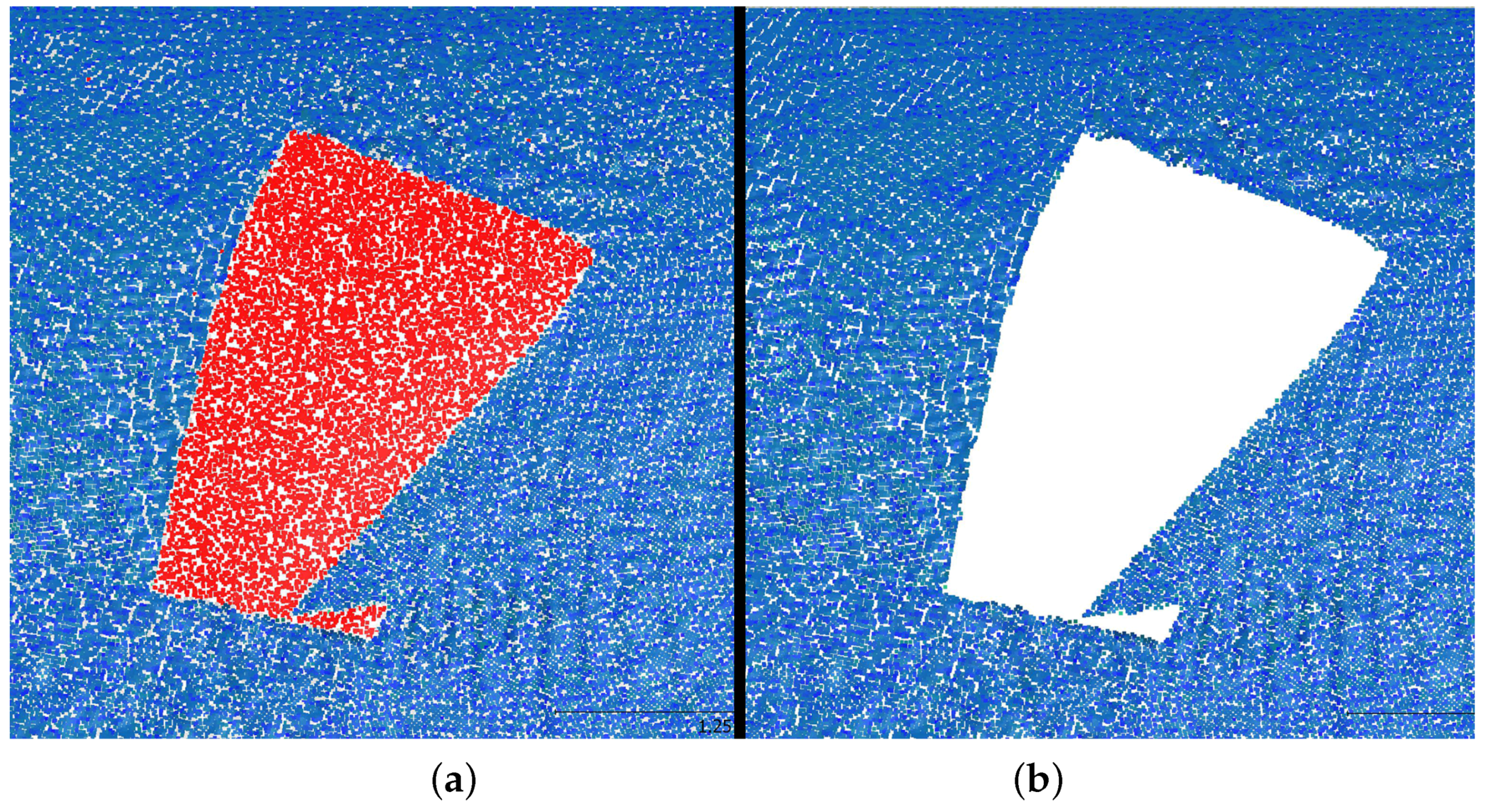

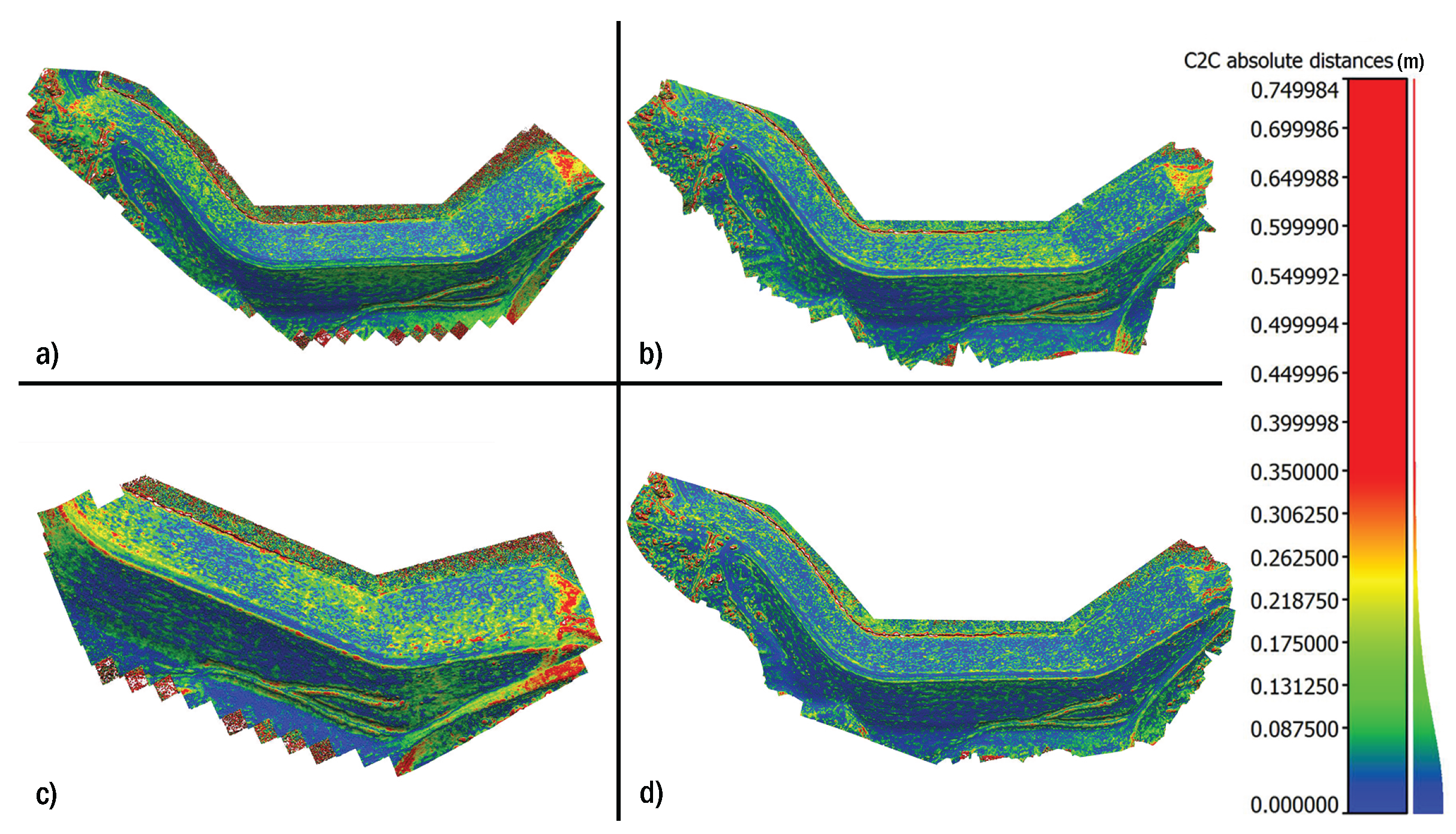

The results show that the optimized view planner succeeds in meeting the objectives of increasing model accuracy and completeness. The average error decreased by 43% in the first case and 23% in the second case when compared to the basic grid survey. The standard deviation of the error also decreased significantly, by 29% in Case 1 and 19% in Case 2, and model completeness improved in both cases. The optimized solution also compares favorably with the other two alternative flight paths. The increase in accuracy obtained by simply perturbing the grid solution is a particularly interesting result and potentially indicates a simple way to achieve meaningful increases in accuracy during surveying. The increase in accuracy in both this and the optimized case can most likely be attributed to the additional viewing angles provided, which increase the geometric strength of the photogrammetric network. Note that the results of the optimized view plan in the small case study are comparable to those obtained by Hoppe et al. in their physical survey of a site of similar size, where an accuracy of 5 cm was achieved over 92% of the desired points [38].
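
The relative improvements quoted above are percentage reductions with respect to the grid survey. A minimal sketch of that arithmetic, using the Case 2 values from Table 11 (the function and variable names are illustrative assumptions):

```python
def percent_reduction(baseline: float, value: float) -> float:
    """Relative decrease of `value` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


# Case 2, grid survey versus optimized view plan (values from Table 11, in cm).
case2_mean_reduction = percent_reduction(10.8, 8.3)  # mean error
case2_std_reduction = percent_reduction(11.1, 9.0)   # standard deviation

print(f"mean error: {case2_mean_reduction:.1f}%  std dev: {case2_std_reduction:.1f}%")
```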

The tested arc pattern provides inconsistent results, with good performance in the first case and poor performance in the second. The lack of coverage achieved by the arc pattern in the second test case is surprising because, intuitively, adding images should improve performance, not reduce it. The authors are unsure of the meaning of this result. It is possible that this flight path is not well suited to long rectangular sites, or that some error was made in this portion of the experiment.

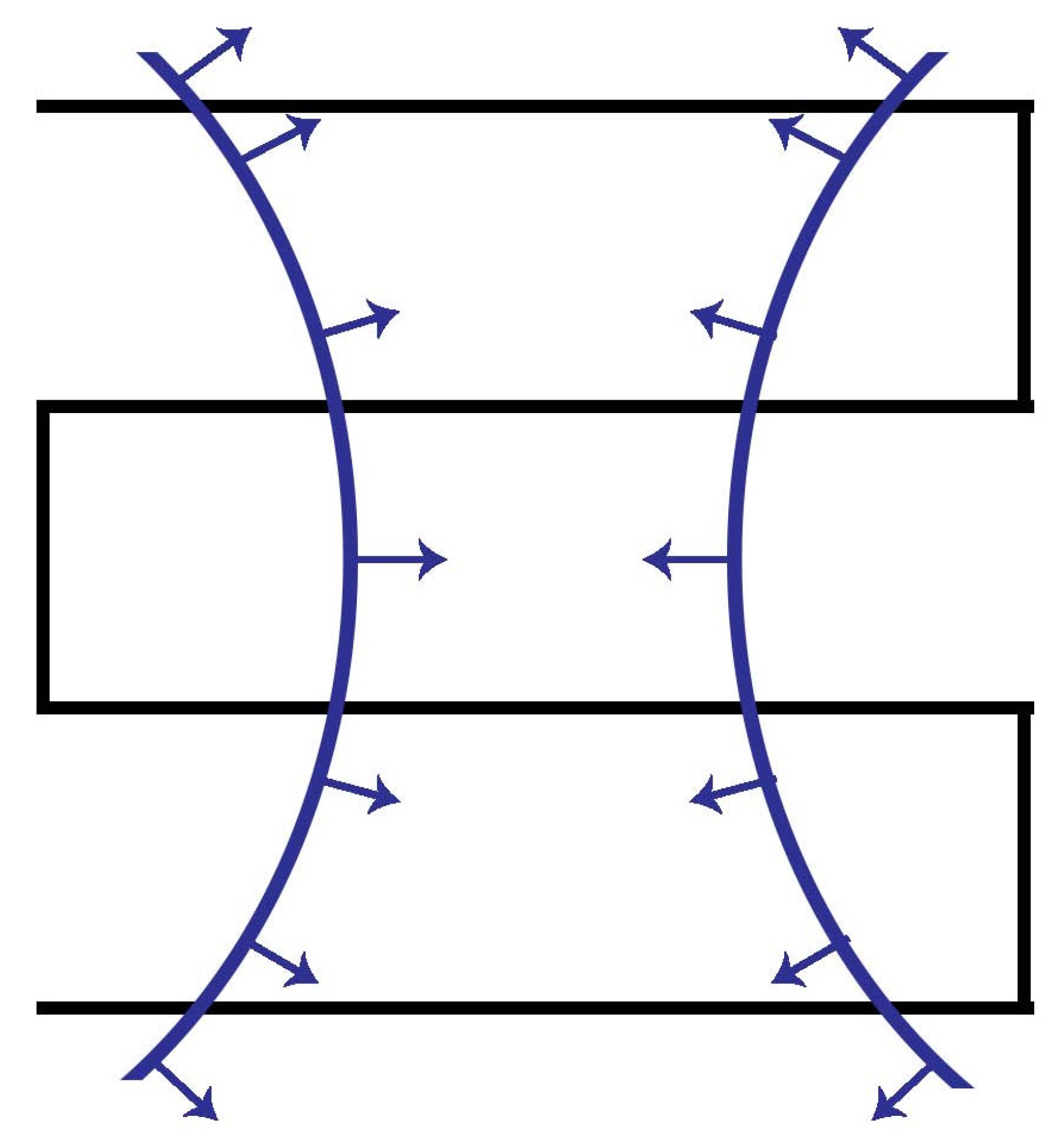

It should also be noted that the simulations have yet to be validated through physical flight tests, and it is expected that the physical error values will decrease appreciably from the simulated error due to the additional detail available for feature matching in real-world images. However, it is expected that the degree of improvement between the grid and optimized view plans will be similar to the simulated case. Another important note in transitioning to physical tests is the possibility of systematic error in the results due to self-calibration of the camera parameters during reconstruction. This issue is described in detail by James and Robson [66]. The minimal systematic error observed in the current results may be due to reduced sub-pixel effects in the simulated environment used. This leads to less noise in feature matching, which can improve the performance of the auto-calibrating bundle adjustment and result in less systematic error. While not greatly impacting the current work, the effects described by James and Robson will be an important aspect in evaluating the results of future work in real environments.

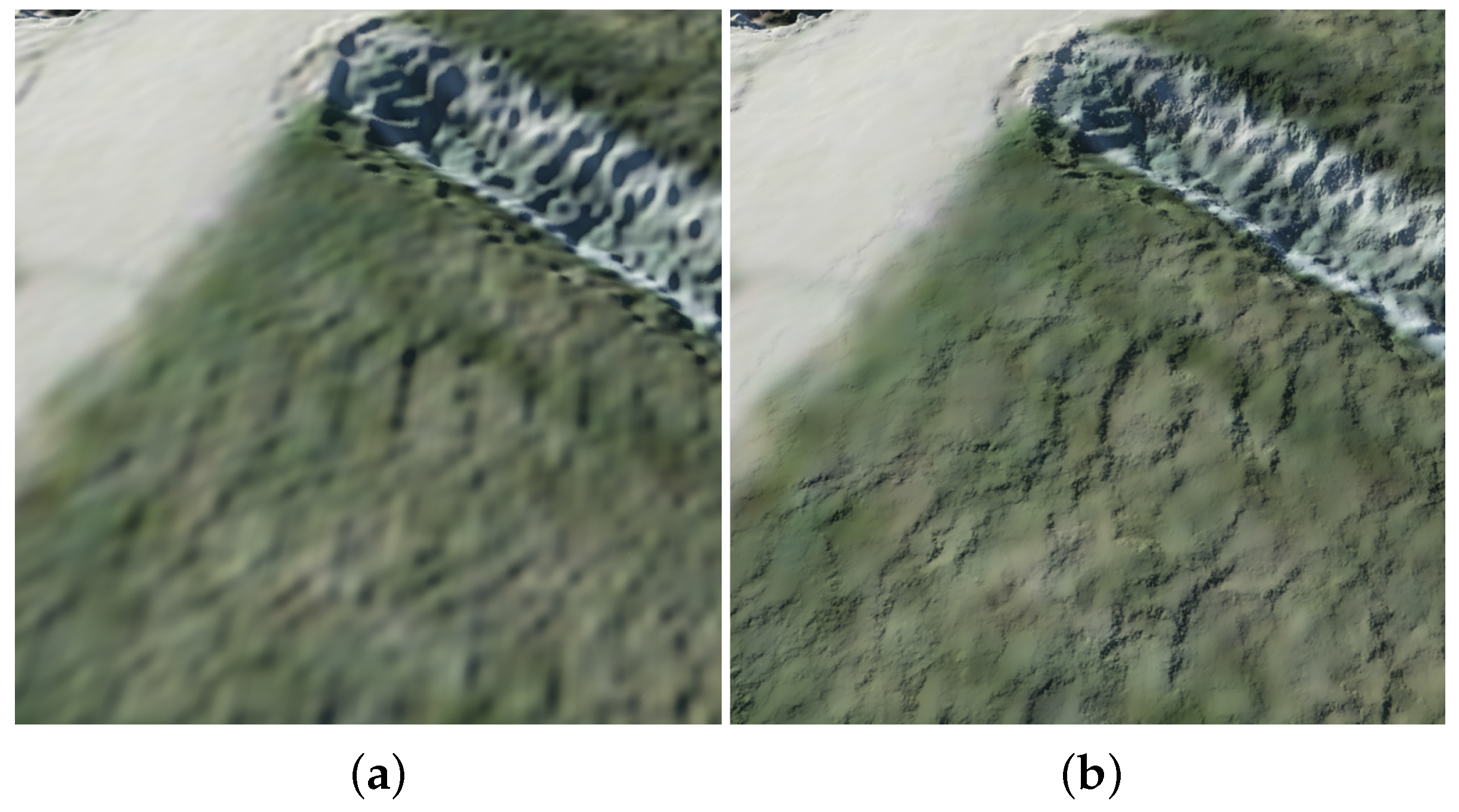

As stated above, it is probable that the simulation environment itself has some effect on the results produced, and this will be explored further in future work. It is readily apparent that the simulation does not reproduce fine surface details, such as grass and gravel, with a high degree of fidelity, and this is the reason the artificial surface roughness was introduced. The exact effect of this situation is less clear. Because of the close range, the resolution of the rendered imagery is higher than the draped imagery, meaning that a large amount of interpolation is occurring. Some areas of the orthoimagery, such as the rocks in the spillway, have large texture variations and provide sufficient features for matching. Other areas, such as the grass next to the spillway, are largely homogeneous, and the feature matching depends almost entirely on the artificial detail, which could potentially impact the accuracy of these areas. This is a larger issue in the first simulation than in the second, where higher resolution elevation data and imagery were used. These sites were chosen not only for the simulations in the current work, but also as sites for flight tests in future work. The authors plan not only to validate the optimized flight paths in future tests, but also to explore the relationship between results in the simulated environment and those from the physical sites. The large variety of features and surfaces at these sites should provide an instructive comparison and bring to light any surface effects masked by the simulator.

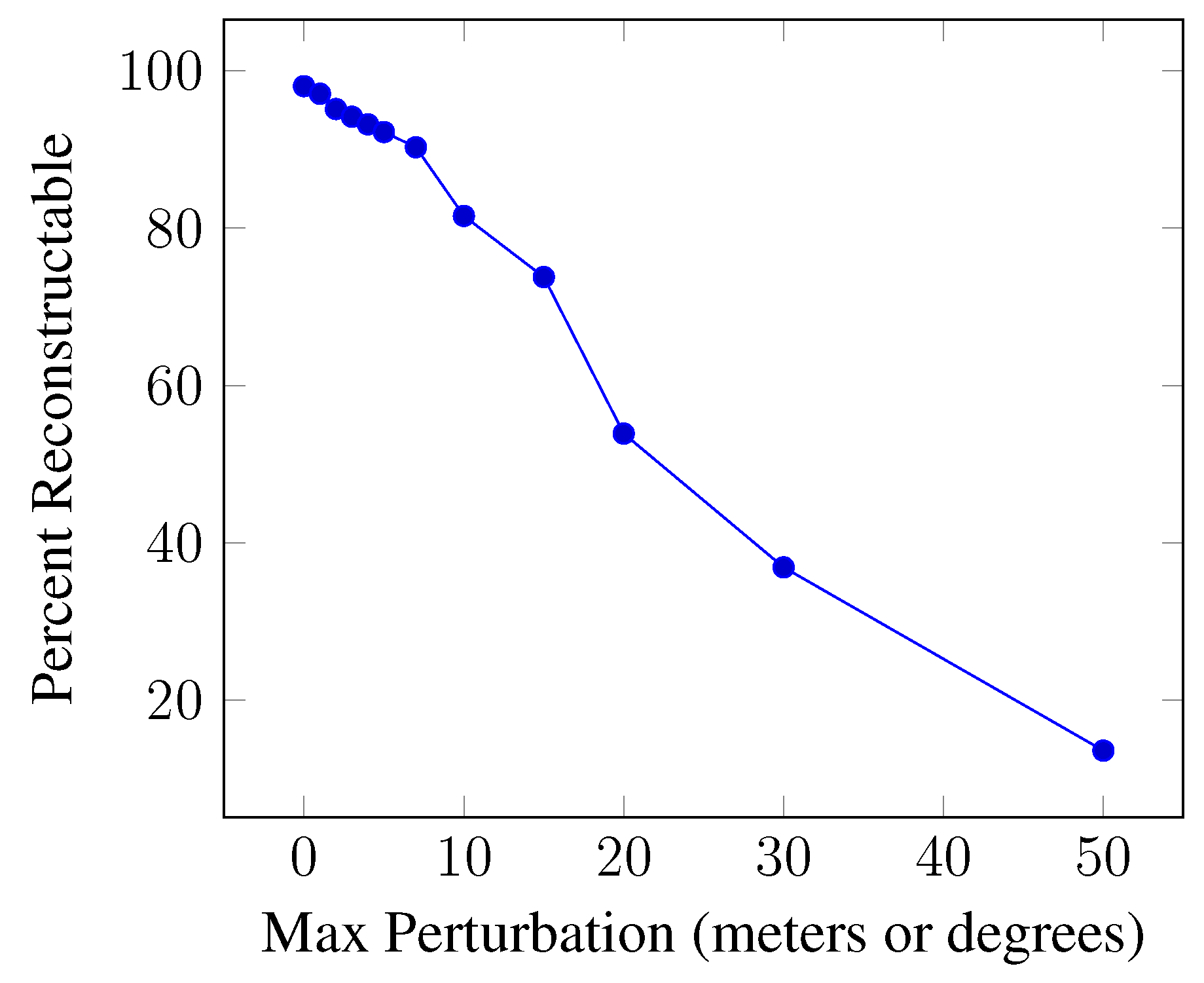

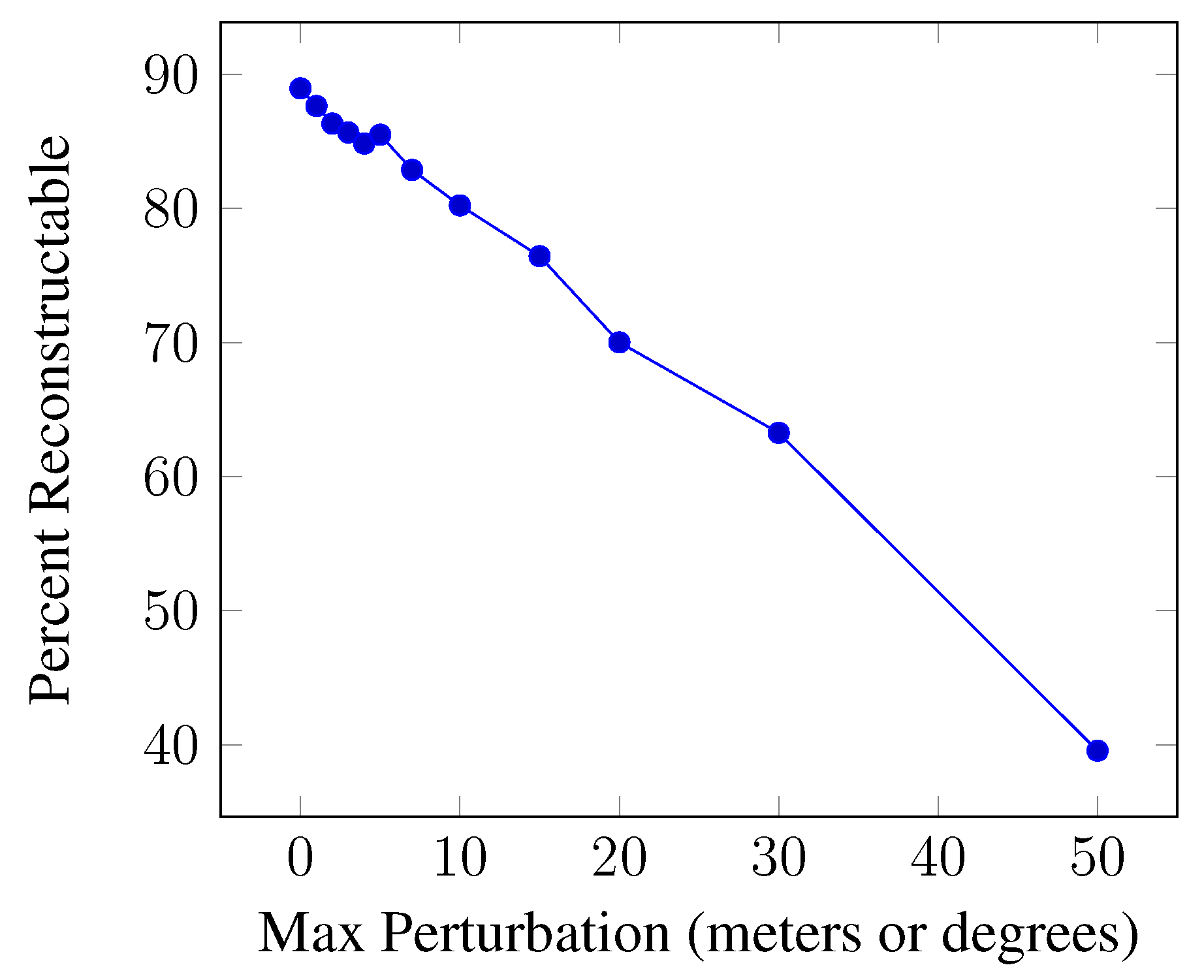

It is noted that the predicted percentages of terrain reconstruction are lower than the percentages reconstructed in the simulations, particularly for the grid surveys. There are several possible explanations for this discrepancy. The first is that the camera model used for planning and scoring in MATLAB does not perfectly represent the actual camera. In particular, the maximum camera range for each case is set to ensure a minimum GSD of 1 cm. This means that any terrain points beyond the maximum range are considered invisible by the camera. This is helpful in driving the optimized solution towards the minimum sampling distance, but could potentially underestimate the number of visible terrain points, as points beyond the maximum range are still actually visible, just at a larger GSD. This effect may be particularly pronounced in the grid survey, as all of the camera locations are on a flat plane, and the terrain is not flat.
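
The link between a GSD limit and a maximum camera range can be sketched with the standard pinhole relation GSD = pixel pitch × distance / focal length, assuming the camera looks straight at the surface. The focal length and pixel pitch below are hypothetical values chosen for illustration, not the parameters of the camera used in this study.

```python
def ground_sample_distance(distance_m: float, focal_length_m: float,
                           pixel_pitch_m: float) -> float:
    """Ground footprint of one pixel (m) at the given camera-to-surface distance."""
    return pixel_pitch_m * distance_m / focal_length_m


def max_range_for_gsd(gsd_limit_m: float, focal_length_m: float,
                      pixel_pitch_m: float) -> float:
    """Largest distance at which the GSD stays at or below `gsd_limit_m`."""
    return gsd_limit_m * focal_length_m / pixel_pitch_m


# Hypothetical camera: 16 mm lens, 4 micrometre pixels.
FOCAL_LENGTH_M = 0.016
PIXEL_PITCH_M = 4e-6

max_range_m = max_range_for_gsd(0.01, FOCAL_LENGTH_M, PIXEL_PITCH_M)  # about 40 m


def counted_as_visible(distance_m: float) -> bool:
    # Planning-time rule described in the text: points beyond the maximum
    # range are treated as invisible, although a real camera would still
    # image them at a coarser GSD.
    return distance_m <= max_range_m
```

With these assumed values, a terrain point 60 m from the camera would be ignored by the planner even though it would still be imaged at a GSD of about 1.5 cm, which illustrates how the range cutoff can underestimate visibility.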

A second possible reason for the low percent reconstructable values is that the name percent reconstructable may be something of a misnomer. It would be more accurate to say that the metric represents the percentage of terrain points that are visible from at least three camera locations at three distinct angles. The metric was intended to represent the minimum criteria for a point to be reconstructed using structure from motion, but does not fully capture the dense stereo pair matching used in multi-view stereo. Because Photoscan uses both techniques in its reconstruction pipeline, the percent reconstructable metric does not always represent all of the areas reconstructed in the final model.
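
The more accurate description of the metric given above can be made concrete with a short sketch. It assumes that visibility has already been computed (each terrain point comes with the list of camera positions that see it) and interprets "three distinct angles" as three cameras whose viewing rays are pairwise separated by at least a threshold angle. The threshold value and all names are hypothetical, and this is not the MATLAB implementation used in this work.

```python
import math
from itertools import combinations


def ray_angle_deg(point, cam_a, cam_b):
    """Angle at `point` between the rays to two camera centres, in degrees."""
    va = [a - p for a, p in zip(cam_a, point)]
    vb = [b - p for b, p in zip(cam_b, point)]
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(x * x for x in vb))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def is_reconstructable(point, cams, min_angle_deg=5.0):
    """True if some trio of cameras views the point from pairwise distinct angles."""
    for trio in combinations(cams, 3):
        if all(ray_angle_deg(point, a, b) >= min_angle_deg
               for a, b in combinations(trio, 2)):
            return True
    return False


def percent_reconstructable(visibility, min_angle_deg=5.0):
    """`visibility` is a list of (terrain_point, cameras_that_see_it) pairs."""
    if not visibility:
        return 0.0
    ok = sum(is_reconstructable(p, cams, min_angle_deg) for p, cams in visibility)
    return 100.0 * ok / len(visibility)


# Hypothetical example: four terrain points and the cameras that see each one.
wide = [(-10.0, 0.0, 20.0), (0.0, 0.0, 20.0), (10.0, 0.0, 20.0)]
narrow = [(0.0, 0.0, 20.0), (0.1, 0.0, 20.0), (0.2, 0.0, 20.0)]
visibility = [
    ((0.0, 0.0, 0.0), wide),      # three well-separated views
    ((1.0, 0.0, 0.0), wide[:2]),  # only two views
    ((0.0, 0.0, 0.0), narrow),    # three views, nearly identical angles
    ((2.0, 1.0, 0.0), wide),      # three well-separated views
]
score = percent_reconstructable(visibility)
```

A count of this kind says nothing about points that are matched by dense stereo from only two overlapping images, which is consistent with the metric underestimating what the final model contains.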

Despite these shortcomings, the percent reconstructable metric (while not a perfect reflection of the final level of model reconstruction) does appear to trend generally with the final model accuracy and, thus, is helpful in motivating the genetic algorithm towards more accurate solutions.