1. Introduction

Detection and tracking of moving targets is a process that involves finding targets of interest in every frame of an image sequence. Infrared technology has been used in research into target detection and tracking because of its advantages, including the ability to penetrate through fog, 24-h all-weather observations and imaging, and lack of sensitivity to changes in light conditions. However, infrared images have relatively low contrast and signal-to-noise ratios (SNR) and also contain little target information, and so the detection and tracking of moving targets using infrared imagery is difficult. In addition, the use of moving imaging platforms such as aircraft gives rise to the problems of background motion and low target resolution [1,2], and correspondingly raises the requirements for the detection and tracking technology that is used.

As far as studies to date are concerned, infrared moving target detection algorithms can be roughly divided into background modeling [3,4,5], optical flow [6,7,8] and frame differencing [9,10,11] methods. For example, Akula et al. [12] used an initial set of frames without targets to construct a statistical background model and proposed an adaptive contour-based background subtraction technique for accurate moving target detection in infrared image sequences by producing a binarized thin contour saliency map. Xu et al. [13] combined the Lucas-Kanade optical flow method and the frame differencing method to detect infrared targets in simulations where the detector was either static or moving. Bhattacharya et al. [14] analyzed the problem that arises when the traditional symmetric frame differencing algorithm uses only three frames for moving target detection, and proposed that the frames used in cumulative-differencing detection can be determined by the image conditions so that the target region in an infrared aerial sequence can be easily detected.

In the field of infrared target tracking, good results have been obtained in previous research using region-based [15,16], contour-based [17,18], model-based [19,20] and feature-based [21,22] algorithms. For example, Ling et al. [23] defined the evaluation criterion for the tracking effect and searched for the relatively accurate region similar to the reference region by maximizing the eigenvalues of the covariance matrix of the local complexity when the tracking error was large. Based on active contours, Salah et al. [24] combined a kernel photometric tracking term and a model-free shape tracking term to track several objects independently and accurately in infrared image sequences. Using a particle filter, Tang et al. [25] described the infrared target as being sparsely represented in an over-complete dictionary and thus effectively suppressed the influence of background and noise on target tracking. Yilmaz et al. [26], in contrast, tracked infrared targets in aerial sequences using both the distribution and intensity of the local standard deviation as target features in order to build the dual kernel density estimation of the mean shift.

There have been many studies of infrared target detection and tracking and much progress has been made. However, not enough research using aerial moving platforms has been done. In the face of problems such as platform motion and scene change, detection and tracking algorithms with a high accuracy and good real-time performance remain undeveloped. Especially when the imaging scene is complex and contains targets with different attributes and motion characteristics, the rapid detection and accurate location of every moving target of interest becomes a real challenge.

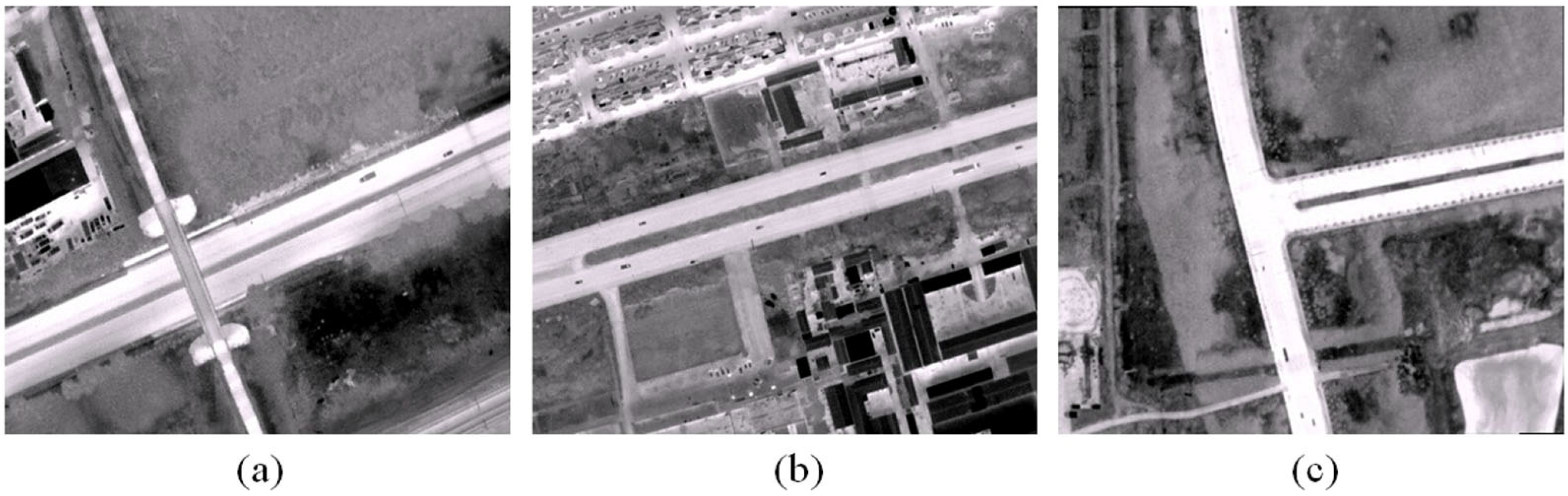

In view of the problems described above and the imaging characteristics of aerial infrared sequences, especially those acquired by vertical photography, a moving target detection algorithm (Section 2) and an improved target tracking algorithm (Section 3) for vehicle targets in aerial infrared sequences are proposed in this paper. The validity of the new algorithms is tested using three real aerial mid-infrared sequences (Section 4) after registration. After an analysis of the experimental results (Section 5), the practical application of the proposed algorithms is evaluated (Section 6).

2. Moving Target Detection

The movement of the observation platform leads to large changes in the imaged scene in aerial sequences. In other words, the region covered by each image in a sequence varies as the camera moves. This limits the number of frames that have regions in common and produces pixel-level differences between images even after registration is carried out to compensate for the background motion. These problems cause difficulties for target detection methods such as background modeling. The frame differencing algorithm can reduce these problems to some extent, and it is computationally efficient enough for real-time moving target detection [14]. For this reason, in this paper the traditional frame differencing algorithm is improved and developed to produce a new infrared moving target detection algorithm for aerial sequences. The proposed algorithm is given the name Symmetric Frame Differencing Target Detection Based on Local Clustering Segmentation (SFDLC).

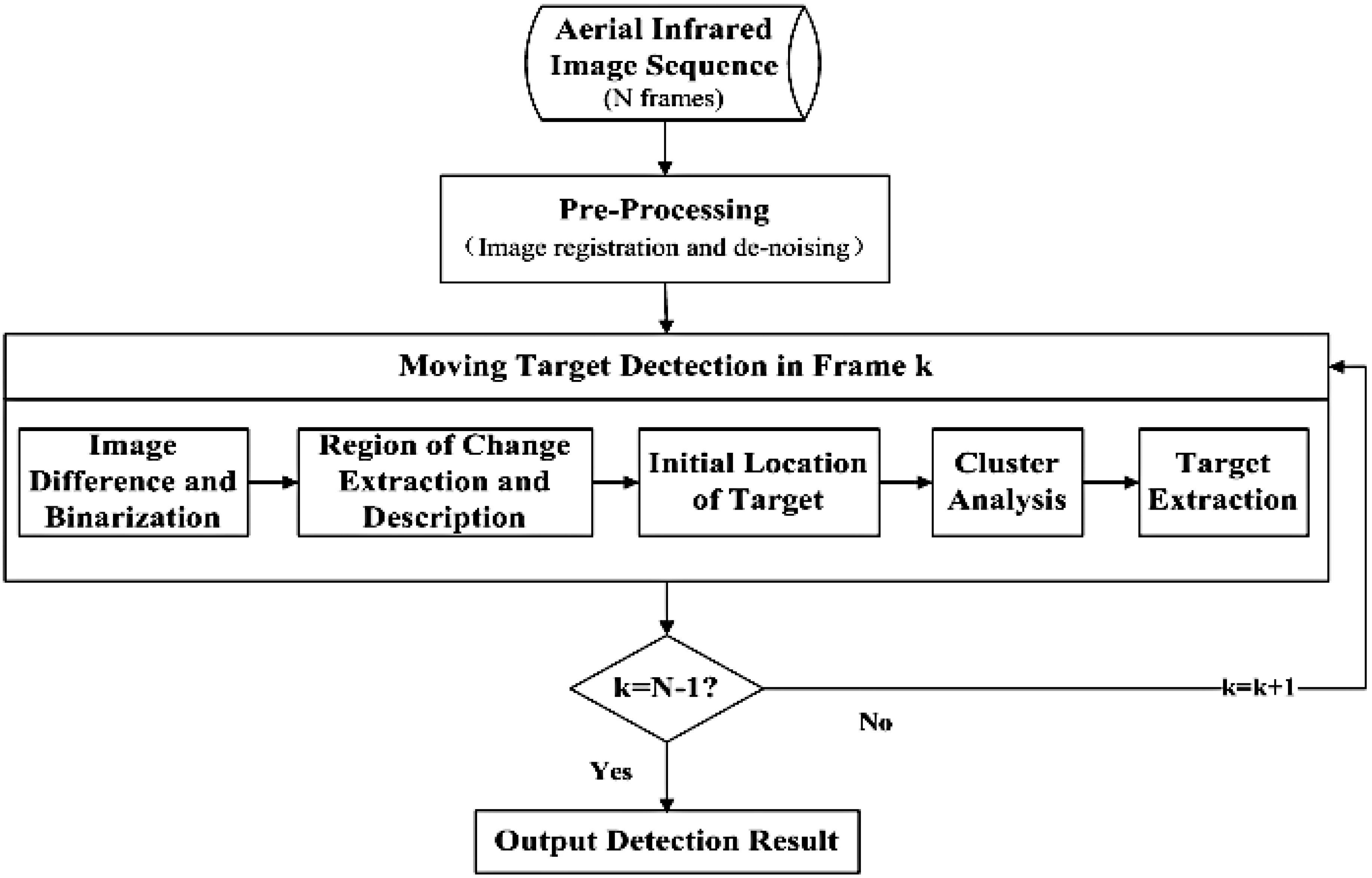

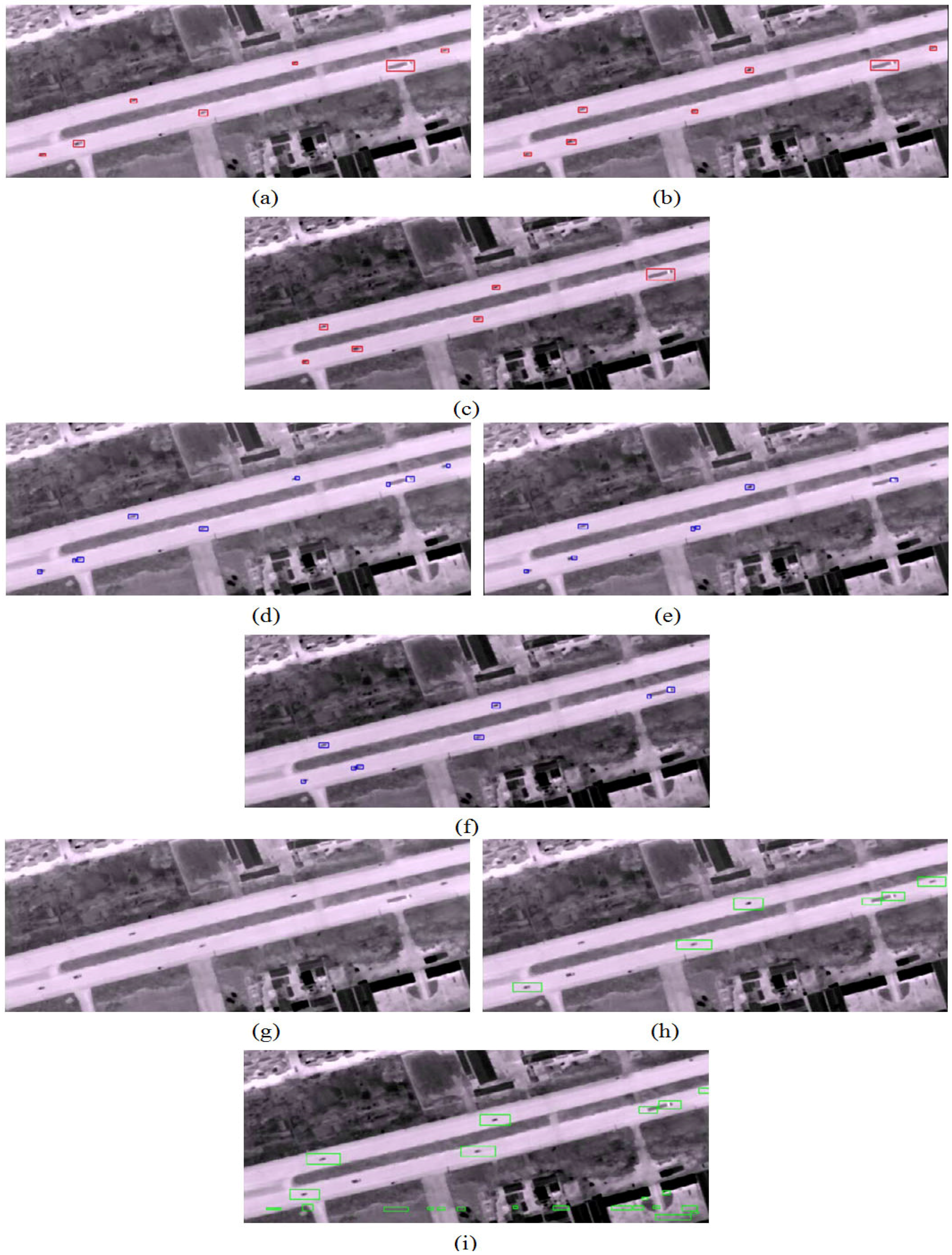

Figure 1 shows the flow of target detection using SFDLC.

Before introducing the SFDLC, it is necessary to discuss the traditional symmetric frame differencing algorithm (SFD). SFD [27] is an improvement on traditional frame differencing, which uses the difference between only two frames. SFD instead chooses three successive frames in the image sequence to carry out the difference operation. In this way, SFD can eliminate the background that is falsely detected because of target movement and so accurately extract the target location and contour information. Setting $f_{k-1}$, $f_k$, $f_{k+1}$ as the three successive frames, SFD is described by the following three equations:

$$d_1(x, y) = \left| f_k(x, y) - f_{k-1}(x, y) \right| \tag{1}$$

$$d_2(x, y) = \left| f_{k+1}(x, y) - f_k(x, y) \right| \tag{2}$$

$$d(x, y) = d_1(x, y) \otimes d_2(x, y) \tag{3}$$

where $d_1$ and $d_2$ are the differences between adjacent frames, $d$ is the difference image, and ⊗ signifies the “AND” operation.
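As a minimal sketch of the three-frame differencing described above, the following Python snippet computes the two adjacent-frame differences and combines them with a pixel-wise AND. The function names, the use of nested lists for frames, and the binarization threshold applied before the AND are illustrative assumptions rather than part of the original formulation.

```python
def abs_diff(frame_a, frame_b):
    """Pixel-wise absolute difference of two equally sized grayscale frames."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def binarize(image, threshold):
    """Map pixels at or above the threshold to 1 and all others to 0."""
    return [[1 if p >= threshold else 0 for p in row] for row in image]


def symmetric_frame_difference(f_prev, f_cur, f_next, threshold=10):
    """Return (d1, d2, d) for three registered frames.

    threshold is an assumed parameter: the differences are binarized
    first so that a logical AND can be applied to them.
    """
    d1 = binarize(abs_diff(f_cur, f_prev), threshold)
    d2 = binarize(abs_diff(f_next, f_cur), threshold)
    d = [[p1 & p2 for p1, p2 in zip(r1, r2)] for r1, r2 in zip(d1, d2)]
    return d1, d2, d


# Toy 1 x 8 frames: a bright 2-pixel target moves 2 pixels per frame.
f_prev = [[200, 200, 20, 20, 20, 20, 20, 20]]
f_cur = [[20, 20, 200, 200, 20, 20, 20, 20]]
f_next = [[20, 20, 20, 20, 200, 200, 20, 20]]

d1, d2, d = symmetric_frame_difference(f_prev, f_cur, f_next)
print(d)  # [[0, 0, 1, 1, 0, 0, 0, 0]]
```

In this toy case only the pixels occupied by the target in the middle frame survive the AND, which is the behaviour SFD relies on when the target moves far enough between frames.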

Figure 1. Flow chart of Symmetric Frame Differencing Target Detection Based on Local Clustering Segmentation (SFDLC) for target detection in aerial infrared image sequences.

In most cases, SFD is simple to use and it performs moving target detection efficiently for complex scenes. However, there are some situations in which traditional SFD cannot produce satisfactory detection results. The first problem is that, because of the motion of the platform, the aerial camera acquires images at a high frequency in order to acquire continuous, real-time information about the target. The real geographical location and state of motion of the target thus change very little between successive frames. In other words, the target displacement between the successive frames after registration is small, or the target is slow-moving. When SFD is used to detect these “slow-moving” targets, problems such as the “Hole Effect” and false targets arise [28]. As shown in Figure 2, the diagonal-filled region of change caused by the motion of the target is obtained by differencing between $f_k$ and $f_{k-1}$. The vertical line-filled region is obtained by differencing between $f_k$ and $f_{k+1}$. Clearly, there is only a small area of overlap between these two regions, and so a detection “hole” appears in the middle of the target detected in $f_k$.

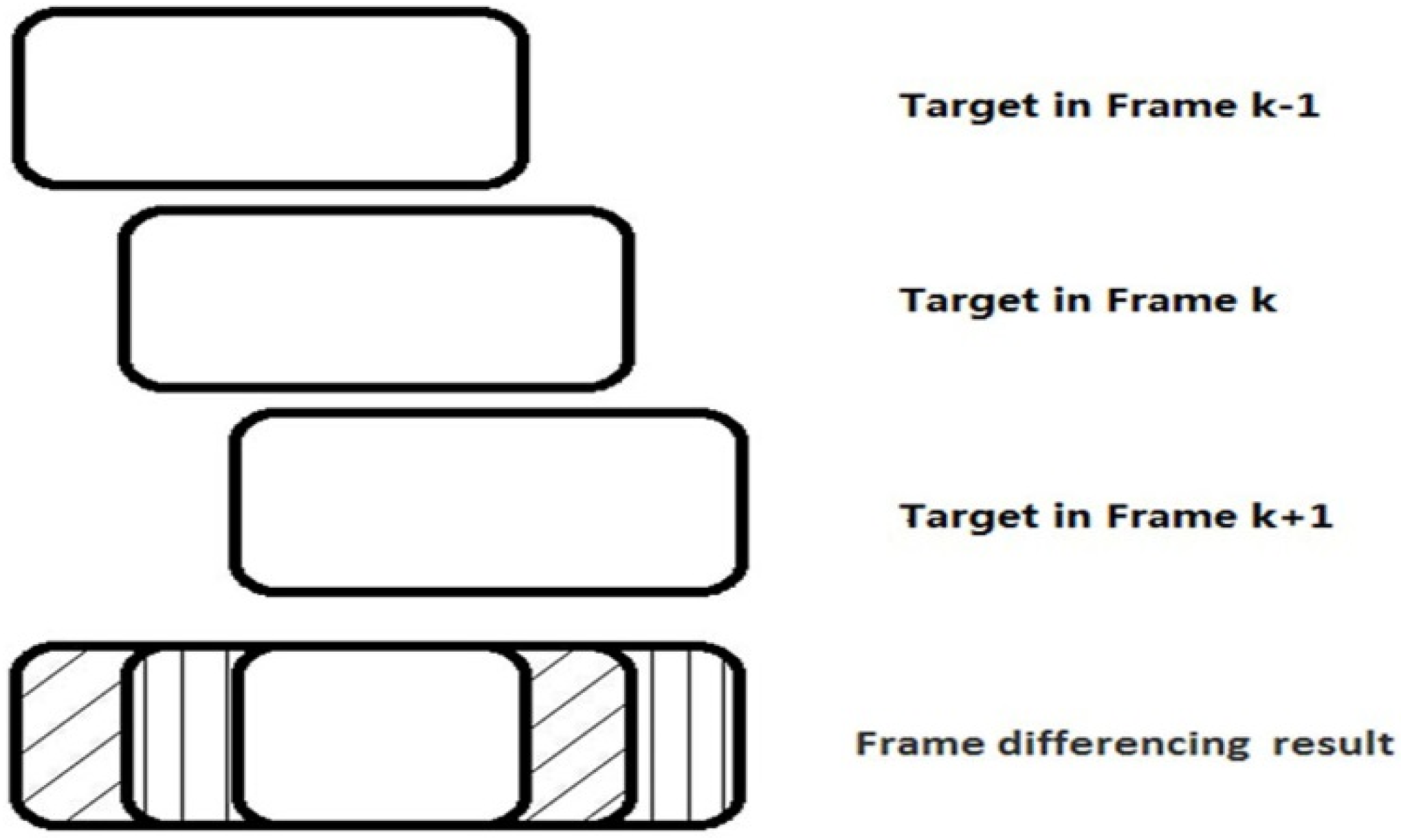

Figure 2. “Slow-moving” target in three consecutive frames. In the frame differencing result, the diagonal-filled region is obtained by differencing between $f_k$ and $f_{k-1}$; the vertical line-filled region is obtained by differencing between $f_k$ and $f_{k+1}$.

Because of the problem just described, this paper improves SFD by introducing the idea that clustering follows locating, and therefore proposes SFDLC to detect “slow-moving” targets in real time. On account of the grayscale consistency for a single target in the infrared image, SFDLC first locates the target by improved symmetrical differencing and then separates the entire target out by cluster analysis based on the preliminary location result. The SFDLC algorithm can be described in more detail as follows.

Step 1. Image Difference and Binarization. The difference images $d_1$ and $d_2$ are calculated by carrying out symmetric differencing on three successive infrared images after registration, $f_{k-1}$, $f_k$ and $f_{k+1}$. Then $d_1$ and $d_2$ are converted to binary images according to Equation (4), which sets a threshold $T$ to distinguish between the region of change caused by the motion of the target and noise:

$$d_i(x, y) = \begin{cases} 1, & d_i(x, y) \geq T \\ 0, & d_i(x, y) < T \end{cases}, \quad i = 1, 2 \tag{4}$$

where $T$ was set empirically to 10.

Step 2. Region of Change Extraction and Description. First, the small amount of noise is removed by median filtering. Then the non-zero pixel blocks in $d_1$ and $d_2$ are extracted to represent the regions of change caused by the motion of the target, and the contours of these blocks are described by minimum enclosing rectangles (the dashed rectangles in Figure 3a). Because most of the real regions of change have irregular shapes, the use of minimum enclosing rectangles enlarges the real regional contours and so ensures a larger overlap (the red areas in Figure 3b) between the regions of change in $d_1$ and $d_2$ produced by the same target. The traditional SFD algorithm may not produce any overlap in a case such as that shown in Figure 2, and so using minimum enclosing rectangles is the key to generating $d$ in Equation (3).

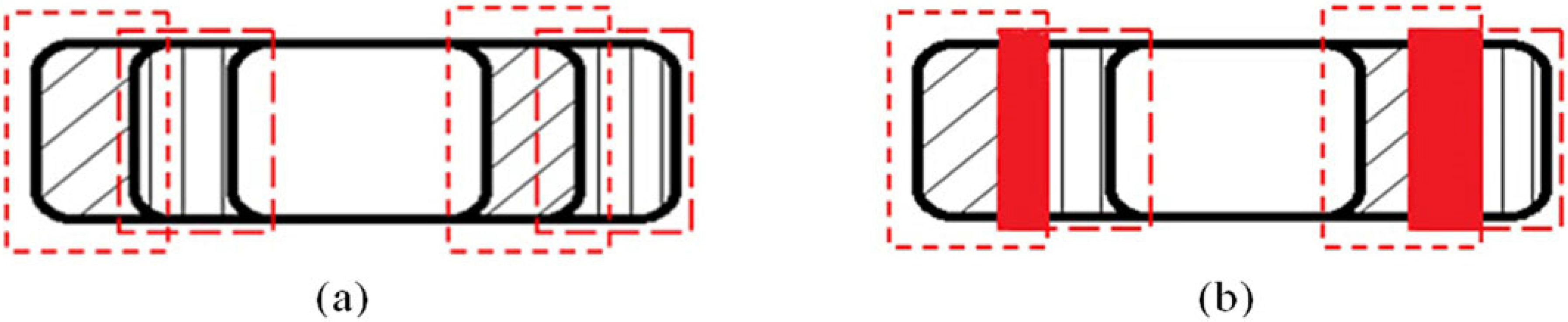

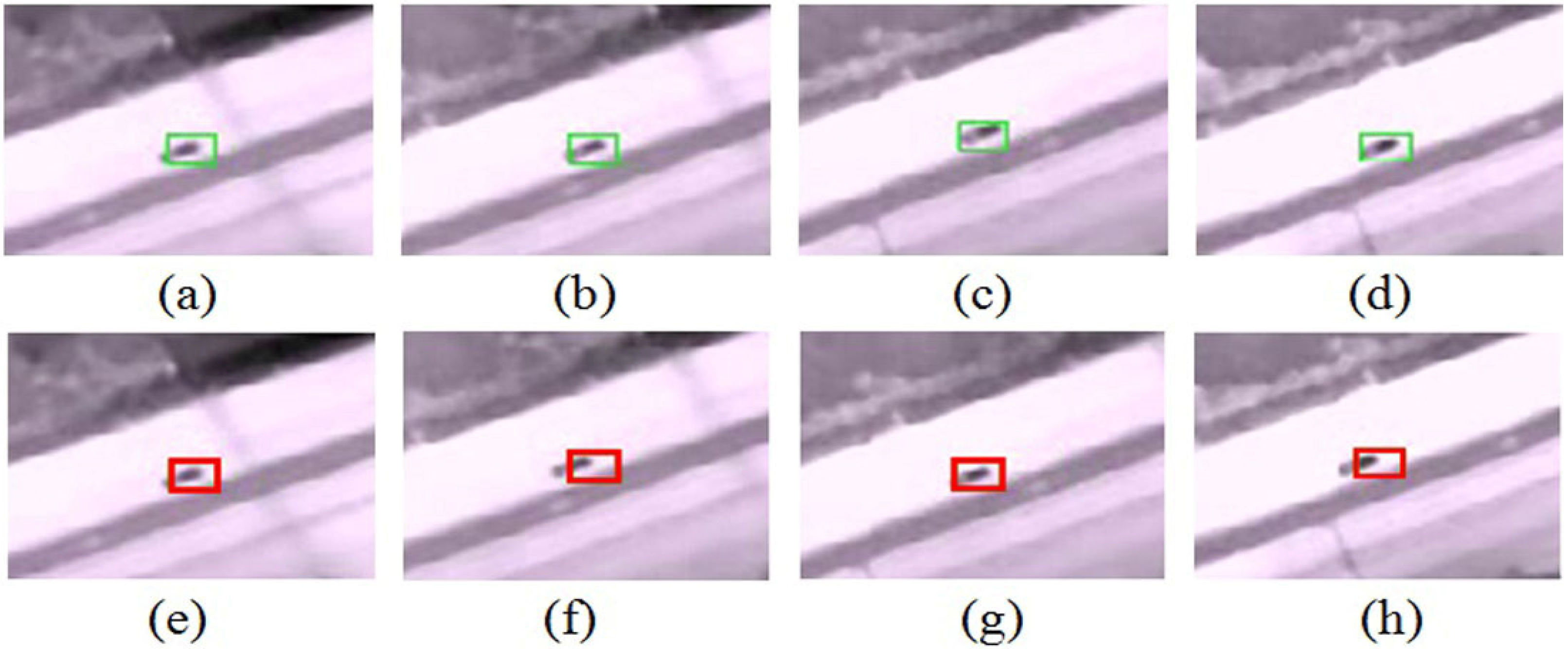

Figure 3. (a) Regions (dashed rectangles) of change produced by the motion of the target; (b) Initial location of the target (red areas).

Step 3. Initial Location of the Target. The initial location of the moving target in $f_k$ is acquired from the calculation of $d$ (the red areas in Figure 3b), which is the set of pixels corresponding to the overlap between the rectangles enclosing the areas of change in $d_1$ and $d_2$. Because $d$ intersects with the real target to be detected in $f_k$, in SFDLC, the location of $d$ is taken as the initial location of the moving target.
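The effect of the enclosing rectangles can be illustrated with a short sketch. It assumes that each binary difference image contains a single region of change, so that one rectangle is fitted around all non-zero pixels; extraction of separate pixel blocks (for example by connected-component labelling) is omitted, and the function names are hypothetical.

```python
def enclosing_rectangle(binary):
    """Minimum enclosing rectangle (top, left, bottom, right), inclusive,
    of all non-zero pixels, or None if there are none."""
    coords = [(r, c) for r, row in enumerate(binary)
              for c, p in enumerate(row) if p]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


def rectangle_overlap(rect_a, rect_b):
    """Intersection of two inclusive rectangles, or None if they are disjoint."""
    top, left = max(rect_a[0], rect_b[0]), max(rect_a[1], rect_b[1])
    bottom, right = min(rect_a[2], rect_b[2]), min(rect_a[3], rect_b[3])
    if top > bottom or left > right:
        return None
    return (top, left, bottom, right)


# Two irregular regions of change that share no pixel.
d1 = [[1, 1, 0, 0, 0, 0],
      [1, 0, 0, 0, 0, 0],
      [1, 1, 1, 0, 0, 0],
      [0, 0, 0, 0, 0, 0]]
d2 = [[0, 0, 0, 0, 0, 0],
      [0, 0, 0, 1, 1, 0],
      [0, 0, 0, 0, 1, 0],
      [0, 0, 1, 1, 1, 0]]

pixelwise_and = sum(a & b for ra, rb in zip(d1, d2) for a, b in zip(ra, rb))
rect1 = enclosing_rectangle(d1)
rect2 = enclosing_rectangle(d2)
initial_location = rectangle_overlap(rect1, rect2)
print(pixelwise_and, rect1, rect2, initial_location)
```

Here the pixel-wise AND is empty, while the two enclosing rectangles still overlap and give a usable initial location.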

Step 4. Cluster Analysis. In order to extract the entire target in $f_k$, a region of interest, which is regarded as the likeliest area for the target to be present, needs to be set first. In order to cover the initial location described in Step 3, this region is centered on $d$ and defined as a square because the direction of motion of the target is not yet known. The size of this region is determined by the size of the real target and also the image resolution, as described by Equation (5):

where $L$ is the side length of the region of interest; $l$, $w$, $h$ are respectively the length, width and height of the target and $c$ is the image resolution. Next, pixel clustering is carried out in the regions of interest centered on $d$ using the K-means algorithm [29]. In this way, the regions of interest are divided into different clustering objects according to the different grayscales of the various targets in the infrared image. The number of clustering categories used in the K-means algorithm depends on the image. Because of the remote imaging distance and the uniform grayscale of the target, and as there are few occlusions, this number is usually set to 2 for vertical aerial photographs.
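The clustering in Step 4 can be sketched with a one-dimensional K-means on the gray values of a region of interest. This is a simplified illustration, not the authors' implementation: the evenly spaced initial centers, the iteration limit and the function name are assumptions, and plain Python lists are used to keep the example dependency-free.

```python
def kmeans_gray(values, k=2, iterations=20):
    """Cluster scalar gray values into k groups; returns (labels, centers).

    Assumes k >= 2. Centers start evenly spaced between the minimum
    and maximum gray value (an illustrative initialization).
    """
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: nearest center in gray level.
        labels = [min(range(k), key=lambda j: abs(v - centers[j]))
                  for v in values]
        # Update step: mean gray level of each cluster.
        new_centers = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            new_centers.append(sum(members) / len(members) if members
                               else centers[j])
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


# 4 x 4 region of interest: a warm 2 x 2 target on a cool background.
roi = [[22, 25, 21, 24],
       [23, 198, 205, 20],
       [21, 201, 199, 25],
       [24, 22, 23, 21]]
rows, cols = len(roi), len(roi[0])
flat = [p for row in roi for p in row]

labels, centers = kmeans_gray(flat, k=2)
mask = [labels[r * cols:(r + 1) * cols] for r in range(rows)]
for row in mask:
    print(row)
```

With two categories, the brighter cluster coincides with the 2 x 2 target block in this toy region.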

Step 5. Target extraction. Because of the uniform grayscale of the target and the overlap between $d$ and the target, the clustering objects that match $d$ both in terms of grayscale category and location are taken to be the target candidates. On this basis, the target detection results are filtered out from the target candidates according to the possible area range of the real targets and these detected targets are represented in the final image, $f_k$, by minimum enclosing rectangles.

By following the above steps, SFDLC can be carried out to detect targets in every image of an aerial infrared image sequence.
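A hedged sketch of the extraction in Step 5 follows. It interprets "matching $d$ in grayscale category and location" as the 4-connected set of pixels that share the cluster label of a seed pixel taken from $d$; this interpretation, the area limits and the function name are assumptions made for illustration.

```python
from collections import deque


def extract_target(label_grid, seed, min_area, max_area):
    """Grow the cluster that contains the seed pixel and return its minimum
    enclosing rectangle (top, left, bottom, right), or None if its area
    falls outside [min_area, max_area]."""
    rows, cols = len(label_grid), len(label_grid[0])
    seed_label = label_grid[seed[0]][seed[1]]
    visited = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in visited
                    and label_grid[nr][nc] == seed_label):
                visited.add((nr, nc))
                queue.append((nr, nc))
    if not (min_area <= len(visited) <= max_area):
        return None
    rs = [r for r, _ in visited]
    cs = [c for _, c in visited]
    return (min(rs), min(cs), max(rs), max(cs))


# Cluster labels for a region of interest; the seed lies inside d.
label_grid = [[0, 0, 0, 0, 0, 0],
              [0, 1, 1, 1, 0, 0],
              [0, 1, 1, 1, 0, 1],
              [0, 0, 0, 0, 0, 1],
              [0, 0, 0, 0, 0, 0]]

detected = extract_target(label_grid, seed=(1, 2), min_area=4, max_area=20)
rejected = extract_target(label_grid, seed=(1, 2), min_area=10, max_area=20)
print(detected, rejected)
```

The two pixels with the same label at the right edge are not connected to the seed and are therefore excluded from the candidate.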

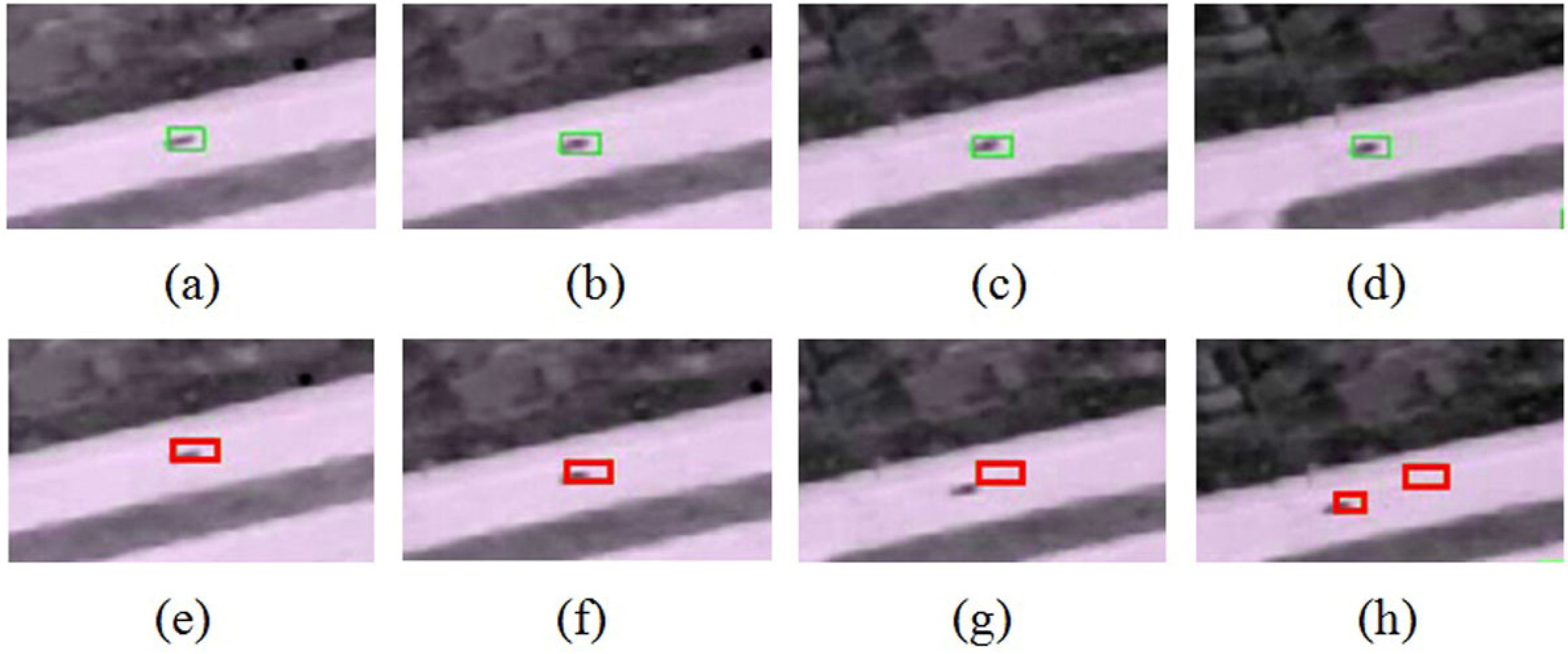

3. Moving Target Tracking

Because of the lack of real-time information about the target, currently used tracking algorithms have difficulty in tracking infrared targets especially when the characteristics of the target and background change in complicated scenes. In this study, we aimed to produce a tracking method that is highly robust and accurate by drawing on the idea of Tracking Learning Detection (TLD) [30,31,32] and combining target tracking with real-time detection in order to realize real-time updating of the target model. Based on these ideas and the characteristics of infrared images, we investigated the use of kernel-based tracking theory [33], which has previously performed well in infrared target tracking [23,26]. As a result, a novel tracking algorithm referred to as the Kernel-Based Mean Shift Target Tracking Based on Detection Updates (MSDU) is proposed to realize stable target tracking in infrared aerial sequences.

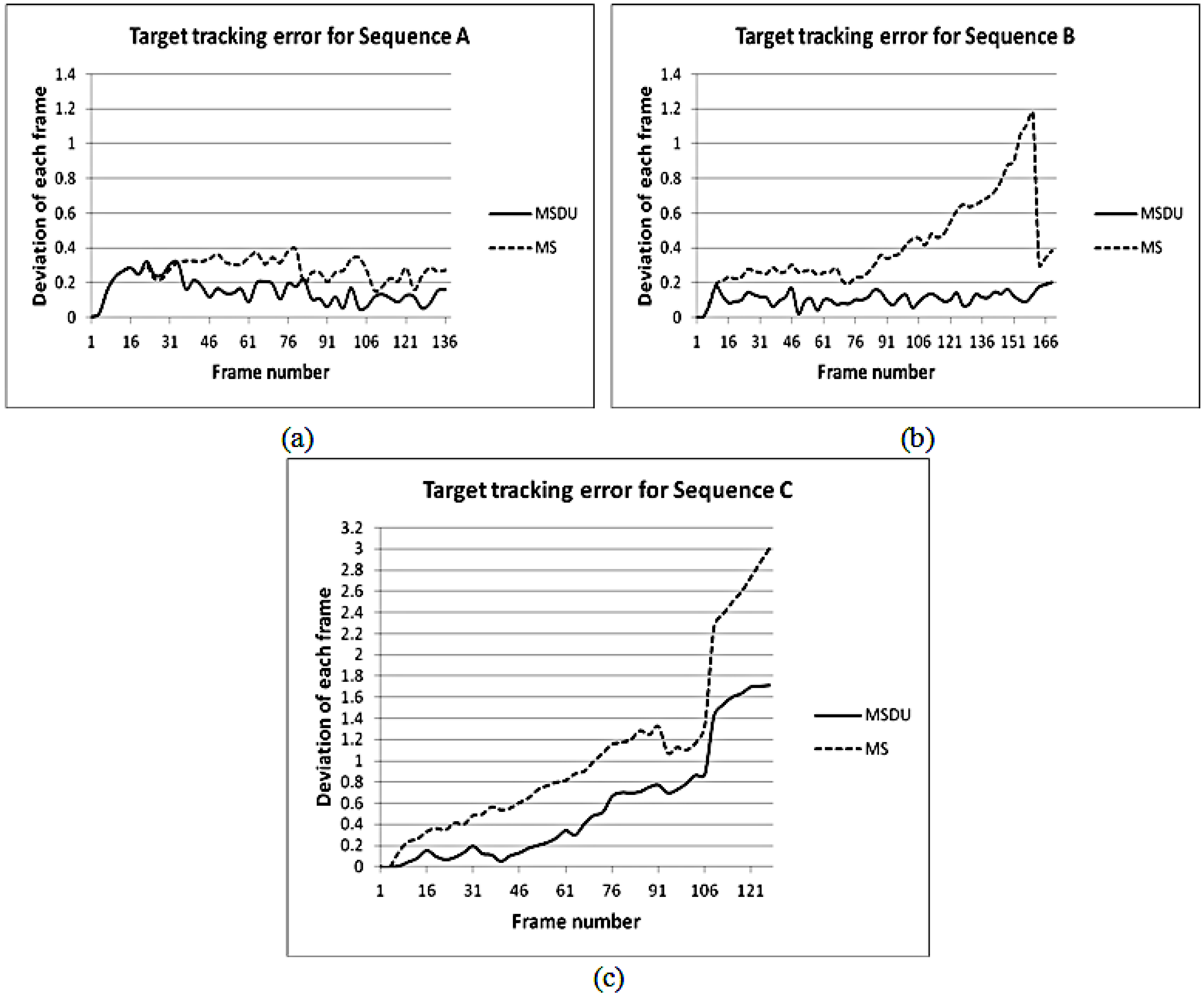

Kernel-based tracking theory, and the kernel-based mean shift target tracking (MS) algorithm, are based on the target features. Specifically, MS describes the target using a statistical distribution of features such as color; it takes the Bhattacharyya coefficient as the similarity measurement and searches for the pattern most similar to the target by gradient descent of the mean shift vector. In general, the MS algorithm involves little calculation, is highly robust and is well suited to tracking targets where there is little change in position. These characteristics are precisely the characteristics of targets in high-frequency aerial image sequences. However, MS easily produces the wrong convergence and finally leads to divergence when the overlap in features between the target and background is large or, in other words, when the contrast between target and background is low [26,34].

For better target tracking using infrared aerial sequences, the MSDU algorithm is proposed as an improvement of MS that brings real-time target detection into the tracking process. In MSDU, the detection result is first used to discover and track emerging targets in good time; it is also used to selectively update the tracking model of the tracked target to produce improved tracking of the target and its trajectory.

The theory relevant to the MSDU algorithm and the steps involved in the algorithm are discussed in detail below.

3.1. Target Description Based on the Kernel Function Histogram

In MSDU, the gray space is chosen as the feature space of the infrared target and the histogram of gray levels based on the kernel function is accordingly taken as the descriptive model of the infrared target area in the image. The attributes of a specific target are represented by a rectangle describing the target’s location and size and so the target area is also a rectangle.

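The model of the target is thus assumed to be a rectangular region centered on the point $y^*$ and consisting of $n$ points expressed as $\{x_i^*\}_{i=1,\dots,n}$. By dividing the gray space of this rectangular region into $m$ equal divisions, the kernel function histogram of the target model can be written as $\hat{q} = \{\hat{q}_u\}_{u=1,\dots,m}$:

$$\hat{q}_u = C_1 \sum_{i=1}^{n} k\left(\left\| \frac{y^* - x_i^*}{h_1} \right\|^2\right) \delta\left[b(x_i^*) - u\right] \tag{6}$$

where $C_1$ denotes the normalization constant such that $\sum_{u=1}^{m} \hat{q}_u = 1$; $k(x)$ is defined as the profile function of the kernel function; $h_1$ is the window width of $k(x)$; $\delta$ is the Kronecker delta function satisfying $\delta(x) = 1$ if $x = 0$ and $\delta(x) = 0$ otherwise; and $b(x_i^*)$ is the quantized value of the pixel at $x_i^*$.

Similarly, the target candidate centered on point $y$ can be described by the kernel function histogram as $\hat{p}(y) = \{\hat{p}_u(y)\}_{u=1,\dots,m}$:

$$\hat{p}_u(y) = C_2 \sum_{i=1}^{s} k\left(\left\| \frac{y - x_i}{h_2} \right\|^2\right) \delta\left[b(x_i) - u\right] \tag{7}$$

where $C_2$ denotes the normalization constant such that $\sum_{u=1}^{m} \hat{p}_u(y) = 1$, $s$ denotes the total number of points $x_i$ in the rectangular region of the target candidate, and $h_2$ is the window width of $k(x)$.

In this study, the Epanechnikov kernel function, expressed as $K_E(x)$, was selected to calculate $k(x)$. In terms of the integral mean square error, $K_E(x)$ is the most suitable of the commonly used kernel functions; it can be calculated according to:

$$K_E(x) = \begin{cases} \frac{1}{2} c_d^{-1} (d + 2) \left(1 - \|x\|^2\right), & \|x\| < 1 \\ 0, & \text{otherwise} \end{cases} \tag{8}$$

where $c_d$ denotes the volume of a $d$-dimensional unit sphere and can be set to π for two-dimensional image coordinates.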
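To make the kernel-weighted histogram concrete, the sketch below builds a normalized gray-level histogram of a rectangular patch in which pixels near the center count more than pixels near the border. It is an illustration under stated assumptions: the Epanechnikov profile is used up to a constant factor (absorbed by the normalization), distances are scaled separately along each axis by half the patch size, and the function names, 16 bins and 256 gray levels are hypothetical choices.

```python
def epanechnikov_profile(x):
    """Profile k(x) of the Epanechnikov kernel, up to a constant factor."""
    return 1.0 - x if 0.0 <= x < 1.0 else 0.0


def kernel_histogram(patch, m=16, levels=256):
    """Kernel-weighted gray-level histogram of a rectangular patch.

    patch  : list of rows of integer gray values in [0, levels)
    m      : number of histogram bins
    Returns a list of m weights that sums to 1.
    """
    rows, cols = len(patch), len(patch[0])
    cy, cx = (rows - 1) / 2, (cols - 1) / 2   # patch center
    hy, hx = rows / 2, cols / 2               # assumed window half-widths
    hist = [0.0] * m
    for r in range(rows):
        for c in range(cols):
            # Squared normalized distance from the center.
            x2 = ((r - cy) / hy) ** 2 + ((c - cx) / hx) ** 2
            u = patch[r][c] * m // levels     # quantized gray value b(x_i)
            hist[u] += epanechnikov_profile(x2)
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


uniform = [[100] * 3 for _ in range(3)]
bright_center = [[10, 10, 10],
                 [10, 250, 10],
                 [10, 10, 10]]

q_uniform = kernel_histogram(uniform)
q_center = kernel_histogram(bright_center)
print(q_uniform[6], round(q_center[15], 4))
```

In the second patch the single bright pixel sits at the center, so its bin receives a larger share than the 1/9 it would get from an unweighted histogram.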

3.2. Target Location Based on Mean Shift

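Mean shift is a method of estimating probability density extrema by continuously moving the point estimation to the position of the sampling mean. In MSDU, mean shift theory is used to move the target candidate to the location at which it is most similar to the target model. This location corresponds to the most likely new target location. To find this location, the Bhattacharyya coefficient, written as $\hat{\rho}(y)$, is chosen as the similarity measure between target candidate and target model. $\hat{\rho}(y)$ gets larger as the similarity increases and the location where $\hat{\rho}(y)$ reaches its maximum is taken as the target location.

In the process of target location, $\hat{\rho}(y)$ relating $\hat{p}(y)$ and $\hat{q}$ is expressed as:

$$\hat{\rho}(y) \equiv \rho\left[\hat{p}(y), \hat{q}\right] = \sum_{u=1}^{m} \sqrt{\hat{p}_u(y)\, \hat{q}_u} \tag{9}$$

In addition, the iterative calculation of the new target candidate location ($\hat{y}_1$) from the current location ($\hat{y}_0$) using the mean shift vector can be written as:

$$\hat{y}_1 = \frac{\sum_{i=1}^{s} x_i\, w_i\, g\left(\left\| \frac{\hat{y}_0 - x_i}{h_2} \right\|^2\right)}{\sum_{i=1}^{s} w_i\, g\left(\left\| \frac{\hat{y}_0 - x_i}{h_2} \right\|^2\right)} \tag{10}$$

where $g(x) = -k'(x)$, and $w_i$ denotes the weight coefficient which can be calculated as:

$$w_i = \sum_{u=1}^{m} \sqrt{\frac{\hat{q}_u}{\hat{p}_u(\hat{y}_0)}}\; \delta\left[b(x_i) - u\right] \tag{11}$$

According to Equation (10), the location of the target candidate is iteratively calculated until the calculated location maximizes $\hat{\rho}(y)$.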
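A single mean shift update can be sketched as follows. The sketch assumes the Epanechnikov profile, for which the derivative of the profile is constant inside the window, so the new location reduces to the weighted centroid of the window pixels; each weight is the square root of the ratio of model to candidate histogram values in that pixel's bin. The function names and the toy histograms are illustrative assumptions.

```python
import math


def bhattacharyya(p, q):
    """Bhattacharyya coefficient between two normalized histograms."""
    return sum(math.sqrt(pu * qu) for pu, qu in zip(p, q))


def mean_shift_step(pixels, bins, p, q, center):
    """One mean shift update of the candidate center.

    pixels : (x, y) coordinates of the pixels inside the candidate window
    bins   : histogram bin index of each pixel
    p, q   : candidate and model histograms
    center : current center, returned unchanged if all weights vanish
    """
    num_x = num_y = den = 0.0
    for (x, y), u in zip(pixels, bins):
        w = math.sqrt(q[u] / p[u]) if p[u] > 0 else 0.0
        num_x += w * x
        num_y += w * y
        den += w
    if den == 0:
        return center
    return (num_x / den, num_y / den)


# Five pixels on a line; the two rightmost ones belong to the target (bin 1).
pixels = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
bins = [0, 0, 0, 1, 1]
q = [0.0, 1.0]   # model: pure target gray level
p = [0.6, 0.4]   # candidate: mostly background

rho = bhattacharyya(p, q)
new_center = mean_shift_step(pixels, bins, p, q, center=(2.0, 0.0))
print(round(rho, 4), new_center)
```

Background pixels receive zero weight here, so the center moves from x = 2 toward the target pixels at x = 3 and x = 4.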

3.3. Target Model Updating Based on Detection

During the tracking process, a conventional tracking algorithm such as MS assumes that the target model is invariable. Therefore, the tracking will be adversely affected by changes in the target and background during the process. However, this effect can be controlled by taking advantage of real-time target information for model updating. For the collection of real-time target information, MSDU draws lessons from TLD and uses the real-time target detection results as a priori knowledge. To be specific, the detection results give real-time information about the target, and consequently, if there is an obvious difference between the detection results and the tracking results, the tracking results are probably not believable. Because of this, in MSDU, the tracking results are compared with their nearest-neighbor matched detection results in order to decide whether the tracking is effective and whether to update the current target model. Once the effectiveness of the tracking has been shown to be low, the target model is updated using the detection results in order to give accurate target tracking.

In practice, the target model is updated according to the following two criteria.

3.3.1. Tracking Effectiveness Evaluation Criterion

The tracking effectiveness is evaluated by taking similarity in the spatial domain, δ, as the measure. The δ between the detection result and its nearest-neighbor matched tracking prediction is expressed as the Euclidean distance between their centers, normalized by the tracking diameter:

$$\delta = \frac{\sqrt{(x_{dtc} - x_{trk})^2 + (y_{dtc} - y_{trk})^2}}{d_{trk}} \tag{12}$$

where $(x_{dtc}, y_{dtc})$ is the detection central point, $(x_{trk}, y_{trk})$ is the tracking central point, and $d_{trk}$ is the tracking diameter. As shown in Equation (12), a higher value of δ indicates a bigger difference between the detection result and its matched prediction.

In MSDU, ε is defined as the threshold of difference in the spatial domain between the detection result and its matched tracking result. If δ is greater than ε, the similarity between the detection and tracking results is low. In this case, the tracking is more likely to be inaccurate and the tracking effectiveness will be evaluated as poor. In contrast, if δ is smaller than ε, the tracking effectiveness will be evaluated as good.

The value of ε can be set according to the tracking accuracy required in a practical application. The higher the accuracy requirement, the smaller ε needs to be. With a small ε, even minor differences between the detection result and the tracking result are taken into account and the tracking model may be updated more frequently. In contrast, a larger value of ε may lead to lower tracking accuracy. Because the detection result used in MSDU is not exactly the same as the true target, ε was set to 0.1 in the experiment, both to avoid unreliable evaluations of tracking effectiveness caused by imperfect detection results and to find possibly inaccurate tracking results in time to ensure a high tracking accuracy.
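The evaluation criterion can be sketched in a few lines. The sketch assumes that δ is the center-to-center Euclidean distance divided by the tracking diameter, and that a value exactly equal to ε is treated as good; the function names and sample coordinates are hypothetical.

```python
import math


def spatial_difference(det_center, trk_center, trk_diameter):
    """Center distance between detection and track, relative to track size."""
    dx = det_center[0] - trk_center[0]
    dy = det_center[1] - trk_center[1]
    return math.hypot(dx, dy) / trk_diameter


def tracking_is_effective(det_center, trk_center, trk_diameter, eps=0.1):
    """Tracking is judged poor only when the difference exceeds eps."""
    return spatial_difference(det_center, trk_center, trk_diameter) <= eps


delta_far = spatial_difference((103, 104), (100, 100), 40)
good = tracking_is_effective((101, 100), (100, 100), 40)
poor = tracking_is_effective((103, 104), (100, 100), 40)
print(delta_far, good, poor)
```

With a tracking diameter of 40 pixels and ε = 0.1, a center offset of 5 pixels is already enough to flag the tracking result as unreliable.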

3.3.2. Tracking Model Updating Criterion

Once δ is greater than ε, MSDU begins to seek accurate detection results in the subsequent frames as the real-time target information to be used for the correction of the tracking. As the target shape and size vary little between multiple consecutive frames in a high-frequency sequence, the detection result is considered to be accurate if there is a nearest-neighbor matched tracking result and the following morphological difference formula is satisfied:

where $width_{trk}$ and $height_{trk}$, respectively, denote the width and height of the tracking result; $width_{dtc}$ and $height_{dtc}$, respectively, denote the width and height of the detection result; τ is the shape stability threshold.

Once the accurate detection result has been obtained, the target model needs to be replaced and updated; the status attributes, such as the location, of the target are then determined and changed accordingly.

In MSDU, τ can be set according to the target detection effectiveness. Because the target changes slowly between successive frames in a high-frequency image sequence, the more accurate the detection results are, the smaller τ can be set while still finding a satisfactory detection result for updating the tracking model in a timely manner, and the more reliable the updated model is. In contrast, the larger the value of τ, the less accurate the target detection needs to be, and the tracking model can be updated more frequently but less reliably. However, an inexact detection result with rather low accuracy has little practical significance and cannot be taken as the tracking model. Therefore, in the experiment, a detection result was assumed to be usable if its height and width each differed from the real height and width of the target by less than 10% of the real values. Accordingly, τ was set to 0.1 so that only detection results with relatively high precision were selected for updating, improving the accuracy and stability of target tracking through timely updating of the tracking model.
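The shape check can be sketched as follows. The exact form of the morphological difference formula is an assumption here: the sketch takes it to be the relative difference in width and in height, each normalized by the tracked size and compared with τ. The function name and the sample sizes are hypothetical.

```python
def shape_is_stable(trk_size, det_size, tau=0.1):
    """Return True if the detection's width and height both differ from the
    tracked width and height by less than a fraction tau (assumed form)."""
    width_trk, height_trk = trk_size
    width_dtc, height_dtc = det_size
    width_ok = abs(width_dtc - width_trk) / width_trk < tau
    height_ok = abs(height_dtc - height_trk) / height_trk < tau
    return width_ok and height_ok


# Tracked target of 20 x 10 pixels.
accept = shape_is_stable((20, 10), (21, 10))   # 5% wider: usable
reject = shape_is_stable((20, 10), (24, 10))   # 20% wider: not usable
print(accept, reject)
```

A detection that passes this check would then replace the target model; one that fails is skipped, and the search continues in the following frames.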

3.4. Kernel-Based Mean Shift Target Tracking Based on Detection Updates (MSDU) Process

Setting $\hat{q}$ as the target model and $\hat{y}_0$ as the target position in the preceding frame, MSDU is implemented according to the following steps.

- (1) The target position in the current frame is initialized as $\hat{y}_0$ and, accordingly, the target candidate can be expressed as $\hat{p}(\hat{y}_0)$, calculated by Equation (7).

- (2) The value of $\rho[\hat{p}(\hat{y}_0), \hat{q}]$ is calculated according to Equation (9).

- (3) By computing the weights $\{w_i\}_{i=1,\dots,s}$ using Equation (11), the new position, $\hat{y}_1$, of the target candidate is estimated using Equation (10).

- (4) The target candidate is updated as $\hat{p}(\hat{y}_1)$ and $\rho[\hat{p}(\hat{y}_1), \hat{q}]$ is recalculated.

- (5) If the condition $\rho[\hat{p}(\hat{y}_1), \hat{q}] < \rho[\hat{p}(\hat{y}_0), \hat{q}]$ is satisfied, $\hat{y}_1 \leftarrow \frac{1}{2}(\hat{y}_0 + \hat{y}_1)$ is performed until this condition is not met or $\|\hat{y}_1 - \hat{y}_0\|$ becomes negligibly small. Through this iteration process, the similarity coefficient between the target model and candidate reaches a maximum and thus the final location of the target candidate is the tracking result.

- (6) All targets are tracked separately according to the above steps, and the tracking results are nearest-neighbor matched with the target detection results to determine whether a newly emerging target has been detected. Once a new target is found, tracking of it begins immediately.

- (7) A judgment regarding the validity of the tracking is made based on the tracking effectiveness evaluation criterion.

- (8) If the tracking effectiveness is poor, $\hat{q}$ is updated according to the tracking model updating criterion. If this updating succeeds, the new $\hat{q}$ will be used for subsequent tracking.
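The nearest-neighbor matching in step (6) can be illustrated with a small sketch. It is a simplified, assumed scheme: each detection is matched to its closest track, and a detection with no track within a gating distance is reported as a newly emerging target. The gating distance, the function name and the sample coordinates are hypothetical, and conflicts between several detections claiming the same track are not resolved.

```python
import math


def match_detections(track_centers, detection_centers, gate):
    """Match each detection to its nearest track within the gate.

    Returns (matches, new_targets): matches is a list of
    (track_index, detection_index) pairs, new_targets lists the
    indices of detections that have no track within the gate.
    """
    matches, new_targets = [], []
    for j, (dx, dy) in enumerate(detection_centers):
        best, best_dist = None, gate
        for i, (tx, ty) in enumerate(track_centers):
            dist = math.hypot(dx - tx, dy - ty)
            if dist <= best_dist:
                best, best_dist = i, dist
        if best is None:
            new_targets.append(j)
        else:
            matches.append((best, j))
    return matches, new_targets


tracks = [(10, 10), (50, 50)]
detections = [(11, 10), (52, 49), (90, 20)]

matches, new_targets = match_detections(tracks, detections, gate=8)
print(matches, new_targets)  # [(0, 0), (1, 1)] [2]
```

Each matched pair would then be passed to the evaluation in step (7), while the unmatched detection would start a new track.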