Semi-Automated Object-Based Classification of Coral Reef Habitat using Discrete Choice Models

Abstract

1. Introduction

2. Methodology

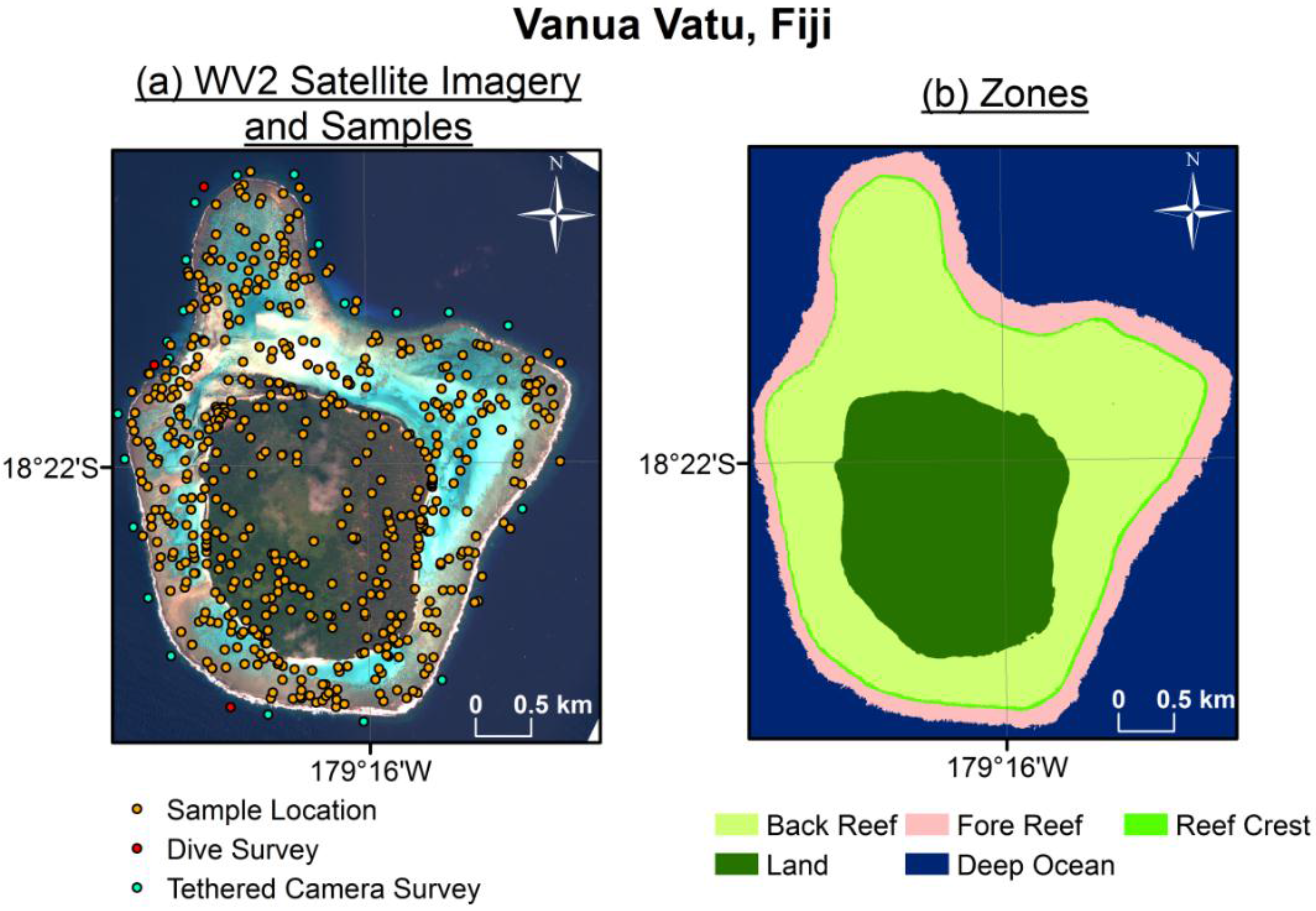

2.1. Data Acquisition

2.2. Image Segmentation, Habitat Definition, and Sample Selection

| Habitat Name | Description |
|---|---|
| Fore Reef Coral | Diverse, coral-dominated benthic community with a substantial macroalgae component. Dominant scleractinian community differs by depth and location, with shallow areas more dominated by branching acroporids and (sub)massive poritids, and deeper areas with more plating morphologies. |
| Fore Reef Sand | Areas of low relief, with mobile and unconsolidated substrate dominated by interstitial fauna. |
| Reef Crest | Crustose coralline algae with limited coral growth due to high wave action and episodic aerial exposure. |
| Back Reef Rubble | Low biotic cover with mobile and unconsolidated substrate; algae-dominated with isolated, small coral colonies. Breaking waves transport water, sediment, and rubble into this habitat. |
| Back Reef Sediment | Shallow sediment with low biotic cover and very sparse algae. |
| Back Reef Coral | Coral framework with variable benthic community composition and size. May be composed of dense acroporids, massive poritids and agariciids, or be a plantation hardground colonized by isolated colonies and turf algae. |
| Lagoonal Floor Barren | Sediment-dominated, ranging from poorly sorted coral gravel to poorly sorted muddy sands. Intense bioturbation by callianassid shrimps, with isolated, sediment-tolerant, low-light-adapted colonies. Fleshy macroalgae may grow in sediment. |
| Lagoon Coral | Coral framework aggraded from the lagoon floor to the water surface. Coral community composition is determined by the development stage of the reef and may be composed of dense acroporid thickets, massive poritids, and agariciids forming microatolls whose tops are colonized by submassive, branching, and foliose coral colonies, or a mixture of the two. |
| Seagrass | An expanse of seagrass composed primarily of Halophila decipiens, H. ovalis, H. ovalis subspecies bullosa, Halodule pinifolia, Halodule uninervis, and Syringodium isoetifolium. |
| Macroalgae | An expanse of dense macroalgae in which thalli are interspersed by unconsolidated sediment. |
| Mangroves | Coastal area dominated by mangroves. Predominant species in Fiji include Rhizophora stylosa, Rhizophora samoensis, Rhizophora x selala, Bruguiera gymnorhiza, Lumnitzera littorea, Excoecaria agallocha, and Xylocarpus granatum. |
| Mud flats | Area of very shallow, fine-grained sediment with primarily microbial benthic community. |
| Deep Ocean Water | Submerged areas seaward of the fore reef that are too deep for observation via satellite. |
| Deep Lagoon Water | Submerged areas leeward of the fore reef that are too deep for observation via satellite. |
| Terrestrial Vegetation | Expanses of vegetation (e.g., palm trees, tropical hardwoods, grasses, shrubs) on emergent features or islands. |
| Beach sand | Accumulations of unconsolidated sand at the land-sea interface on emergent features or islands. |
| Inland waters | Bodies of fresh or briny water surrounded by emergent features that may either be isolated from or flow into marine waters. |
| Urban | Man-made structures such as buildings, docks, and roads. |
| Unvegetated Terrestrial | Soil or rock on islands with no discernible vegetative cover. |

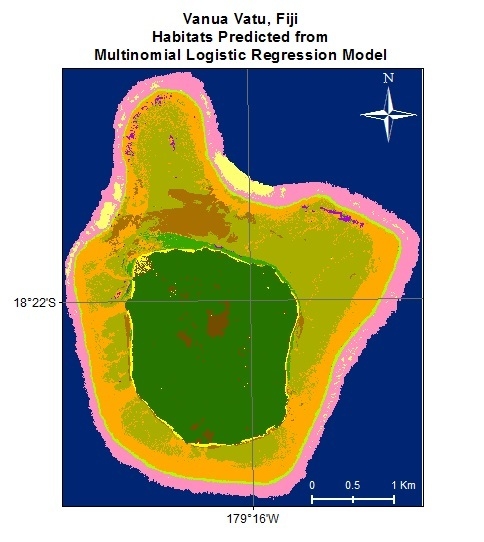

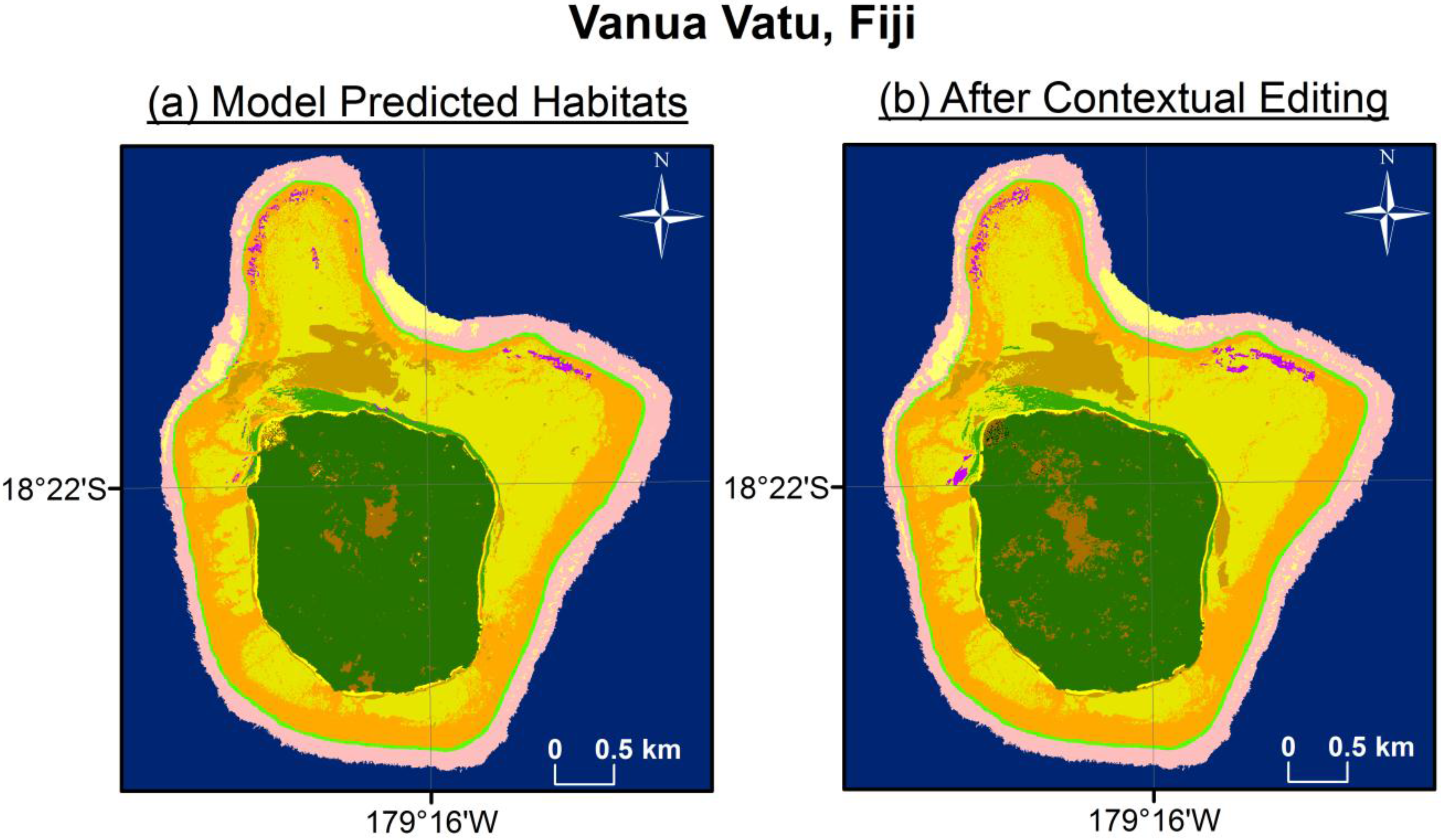

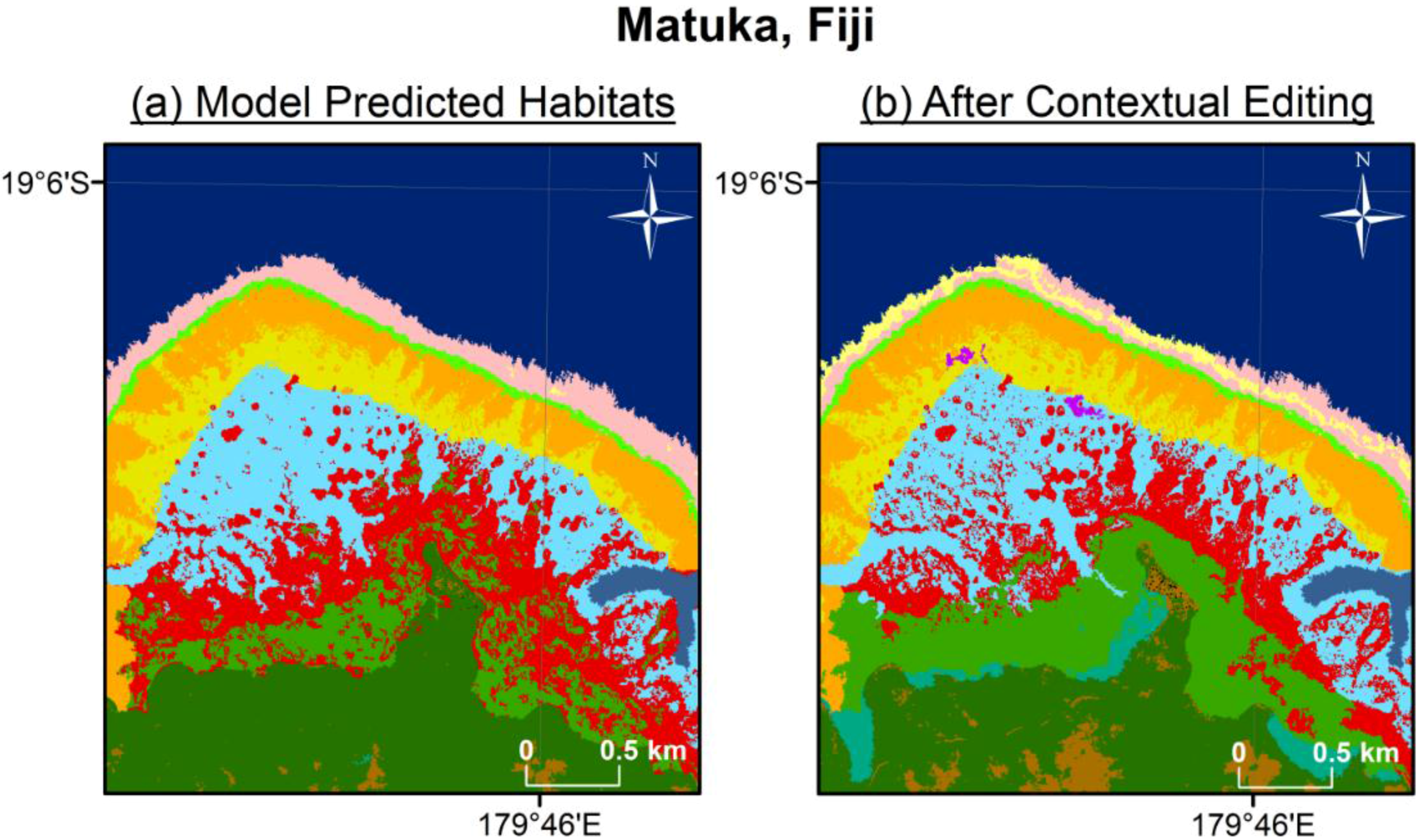

2.3. Multinomial Logit Model

2.4. Accuracy Assessment

3. Results

| Habitat | Back Reef Coral | Rubble | Back Reef Sed. | Beach Sand | Macroalgae | Seagrass | Fore Reef Sed. | Fore Reef Coral | Terres. Veg. | Unveg. Terres. | Urban | Row Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Back Reef Coral | 1006 | 143 | 23 | 0 | 17 | 11 | 0 | 0 | 0 | 0 | 0 | 1200 |
| Rubble | 99 | 934 | 27 | 0 | 1 | 5 | 0 | 0 | 0 | 0 | 0 | 1066 |
| Back Reef Sediment | 31 | 22 | 113 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 166 |
| Beach Sand | 0 | 0 | 0 | 196 | 0 | 0 | 0 | 0 | 3 | 39 | 15 | 253 |
| Macroalgae | 1 | 0 | 0 | 0 | 9 | 3 | 0 | 0 | 0 | 0 | 0 | 13 |
| Seagrass | 4 | 7 | 2 | 0 | 1 | 69 | 0 | 0 | 0 | 0 | 0 | 83 |
| Fore Reef Sediment | 0 | 0 | 0 | 0 | 0 | 0 | 31 | 9 | 0 | 0 | 0 | 40 |
| Fore Reef Coral | 0 | 0 | 0 | 0 | 0 | 0 | 15 | 674 | 0 | 0 | 0 | 689 |
| Terrestrial Vegetation | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 514 | 75 | 0 | 590 |
| Unvegetated Terrestrial | 0 | 0 | 0 | 27 | 0 | 0 | 0 | 0 | 30 | 51 | 0 | 108 |
| Urban | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Column Total | 1141 | 1106 | 165 | 224 | 28 | 88 | 46 | 683 | 547 | 165 | 15 | 4208 |

Model Fit vs. Training Test Data

| Habitat | User | Producer |
|---|---|---|
| Back Reef Coral | 0.84 | 0.88 |
| Rubble | 0.88 | 0.84 |
| Back Reef Sediment | 0.68 | 0.68 |
| Beach Sand | 0.77 | 0.88 |
| Macroalgae | 0.69 | 0.32 |
| Seagrass | 0.83 | 0.78 |
| Fore Reef Sediment | 0.78 | 0.67 |
| Fore Reef Coral | 0.98 | 0.99 |
| Terrestrial Vegetation | 0.87 | 0.94 |
| Unvegetated Terrestrial | 0.47 | 0.31 |
| Urban | 0 | 0 |
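The user and producer values can be checked against the confusion matrix in Section 3. The following is a minimal sketch, not the authors' code: it assumes that the User column is each diagonal count divided by its row total and the Producer column is the diagonal count divided by its column total, which is the reading that reproduces the tabulated values. The variable names (`cm`, `user`, `producer`, `overall`) are illustrative only, and classes with a zero total are assigned 0 rather than left undefined.

```python
import numpy as np

# Class order follows the rows and columns of the confusion matrix above.
classes = [
    "Back Reef Coral", "Rubble", "Back Reef Sediment", "Beach Sand",
    "Macroalgae", "Seagrass", "Fore Reef Sediment", "Fore Reef Coral",
    "Terrestrial Vegetation", "Unvegetated Terrestrial", "Urban",
]

# Counts copied from the confusion matrix (totals row and column omitted).
cm = np.array([
    [1006, 143,  23,   0, 17, 11,  0,   0,   0,  0,  0],
    [  99, 934,  27,   0,  1,  5,  0,   0,   0,  0,  0],
    [  31,  22, 113,   0,  0,  0,  0,   0,   0,  0,  0],
    [   0,   0,   0, 196,  0,  0,  0,   0,   3, 39, 15],
    [   1,   0,   0,   0,  9,  3,  0,   0,   0,  0,  0],
    [   4,   7,   2,   0,  1, 69,  0,   0,   0,  0,  0],
    [   0,   0,   0,   0,  0,  0, 31,   9,   0,  0,  0],
    [   0,   0,   0,   0,  0,  0, 15, 674,   0,  0,  0],
    [   0,   0,   0,   1,  0,  0,  0,   0, 514, 75,  0],
    [   0,   0,   0,  27,  0,  0,  0,   0,  30, 51,  0],
    [   0,   0,   0,   0,  0,  0,  0,   0,   0,  0,  0],
])

diag = np.diag(cm).astype(float)
row_totals = cm.sum(axis=1)
col_totals = cm.sum(axis=0)

# Assumed convention: user = diagonal / row total, producer = diagonal / column total.
# Where a total is zero (the Urban row), the ratio is set to 0 instead of dividing.
user = np.divide(diag, row_totals, out=np.zeros_like(diag), where=row_totals > 0)
producer = np.divide(diag, col_totals, out=np.zeros_like(diag), where=col_totals > 0)

# Overall agreement: correctly classified samples over all samples.
overall = diag.sum() / cm.sum()

for name, u, p in zip(classes, user, producer):
    print(f"{name:<24} user={u:.2f} producer={p:.2f}")
print(f"overall={overall:.4f}")
```

Computing the ratios from the raw counts, rather than reading them off the table, makes it easy to see which classes drive the errors: for example, most Macroalgae column counts fall in the Back Reef Coral row, which is why its producer value is low.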

4. Discussion

5. Conclusions

Supplementary Files

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Brander, L.M.; Eppink, F.V.; Schagner, P.; van Beukering, J.H.; Wagtendonk, A. GIS-based mapping of ecosystem services: The case of coral reefs. In Benefit Transfer of Environmental and Resource Values, The Economics of Non-Market Goods and Resources; Johnson, R.J., Rolfe, J., Rosenberger, R.S., Brouwer, R., Eds.; Springer: New York, NY, USA, 2015; Volume 14, pp. 465–485. [Google Scholar]

- Moberg, F.; Folke, C. Ecological goods and services of coral reef ecosystems. Ecol. Econ. 1999, 29, 215–233. [Google Scholar] [CrossRef]

- Done, T.J.; Ogden, J.C.; Wiebe, W.J.; Rosen, B.R. Biodiversity and ecosystem function of coral reefs. In Functional Roles of Biodiversity: A Global Perspective; Mooney, H.A., Cushman, J.H., Medina, E., Sala, O.E., Schulze, E., Eds.; Wiley: Chichester, UK, 1996; pp. 393–429. [Google Scholar]

- Shucksmith, R.; Lorraine, G.; Kelly, C.; Tweddle, J.R. Regional marine spatial planning—The data collection and mapping process. Mar. Policy 2014, 50, 1–9. [Google Scholar] [CrossRef]

- Shucksmith, R.; Kelly, C. Data collection and mapping—Principles, processes and application in marine spatial planning. Mar. Policy 2014, 50, 27–33. [Google Scholar] [CrossRef]

- Rowlands, G.; Purkis, S.J. Tight coupling between coral reef morphology and mapped resilience in the Red Sea. Mar. Pollut. Bull. 2016, 103, 1–23. [Google Scholar]

- Riegl, B.R.; Purkis, S.J. Coral population dynamics across consecutive mass mortality events. Glob. Chang. Biol. 2015, 21, 3995–4005. [Google Scholar] [CrossRef] [PubMed]

- Purkis, S.J.; Roelfsema, C. Remote sensing of submerged aquatic vegetation and coral reefs. In Wetlands Remote Sensing: Applications and Advances; Tiner, R., Lang, M., Klemas, V., Eds.; CRC Press—Taylor and Francis Group: Boca Raton, FL, USA, 2015; pp. 223–241. [Google Scholar]

- Rowlands, G.; Purkis, S.J.; Riegl, B.; Metsamaa, L.; Bruckner, A.; Renaud, P. Satellite imaging coral reef resilience at regional scale. A case-study from Saudi Arabia. Mar. Pollut. Bull. 2012, 64, 1222–1237. [Google Scholar] [CrossRef] [PubMed]

- Purkis, S.J.; Graham, N.A.J.; Riegl, B.M. Predictability of reef fish diversity and abundance using remote sensing data in Diego Garcia (Chagos Archipelago). Coral Reefs 2008, 27, 167–178. [Google Scholar] [CrossRef]

- Riegl, B.M.; Purkis, S.J.; Keck, J.; Rowlands, G.P. Monitored and modelled coral population dynamics and the refuge concept. Mar. Pollut. Bull. 2009, 58, 24–38. [Google Scholar] [CrossRef] [PubMed]

- Riegl, B.M.; Purkis, S.J. Model of coral population response to accelerated bleaching and mass mortality in a changing climate. Ecol. Model. 2009, 220, 192–208. [Google Scholar] [CrossRef]

- Purkis, S.J.; Riegl, B. Spatial and temporal dynamics of Arabian Gulf coral assemblages quantified from remote-sensing and in situ monitoring data. Mar. Ecol. Prog. Ser. 2005, 287, 99–113. [Google Scholar] [CrossRef]

- Mumby, P.J.; Gray, D.A.; Gibson, J.P.; Raines, P.S. Geographical information systems: A tool for integrated coastal zone management in Belize. Coast. Manag. 1995, 23, 111–121. [Google Scholar] [CrossRef]

- Rowlands, G.; Purkis, S.J.; Bruckner, A. Diversity in the geomorphology of shallow-water carbonate depositional systems in the Saudi Arabian Red Sea. Geomorphology 2014, 222, 3–13. [Google Scholar] [CrossRef]

- Purkis, S.J.; Kerr, J.; Dempsey, A.; Calhoun, A.; Metsamaa, L.; Riegl, B.; Kourafalou, V.; Bruckner, A.; Renaud, P. Large-scale carbonate platform development of Cay Sal Bank, Bahamas, and implications for associated reef geomorphology. Geomorphology 2014, 222, 25–38. [Google Scholar] [CrossRef]

- Schlager, W.; Purkis, S.J. Bucket structure in carbonate accumulations of the Maldive, Chagos and Laccadive archipelagos. Int. J. Earth Sci. 2013, 102, 2225–2238. [Google Scholar] [CrossRef]

- Glynn, P.W.; Riegl, B.R.; Purkis, S.J.; Kerr, J.M.; Smith, T. Coral reef recovery in the Galápagos Islands: The northern-most islands (Darwin and Wenman). Coral Reefs 2015, 34, 421–436. [Google Scholar] [CrossRef]

- Knudby, A.; Pittman, S.J.; Maina, J.; Rowlands, G. Remote sensing and modeling of coral reef resilience. In Remote Sensing and Modeling, Advances in Coastal and Marine Resources; Finkl, C.W., Makowski, C., Eds.; Springer: New York, NY, USA, 2014; pp. 103–134. [Google Scholar]

- Xu, J.; Zhao, D. Review of coral reef ecosystem remote sensing. Acta Ecol. Sin. 2014, 34, 19–25. [Google Scholar] [CrossRef]

- Mumby, P.J.; Skirving, W.; String, A.E.; Hardy, J.T.; LeDrew, E.F.; Hochberg, E.J.; Stumpf, R.P.; David, L.T. Remote sensing of coral reefs and their physical environment. Mar. Pollut. Bull. 2004, 48, 219–228. [Google Scholar] [CrossRef] [PubMed]

- Bruckner, A.; Kerr, J.; Rowlands, G.; Dempsey, A.; Purkis, S.J.; Renaud, P. Khaled bin Sultan Living Oceans Foundation Atlas of Shallow Marine Habitats of Cay Sal Bank, Great Inagua, Little Inagua and Hogsty Reef, Bahamas; Panoramic Press: Phoenix, AZ, USA, 2015; pp. 1–304. [Google Scholar]

- Bruckner, A.; Rowlands, G.; Riegal, B.; Purkis, S.J.; Williams, A.; Renaud, P. Khaled bin Sultan Living Oceans Foundation Atlas of Saudi Arabian Red Sea Marine Habitats; Panoramic Press: Phoenix, AZ, USA, 2012; pp. 1–262. [Google Scholar]

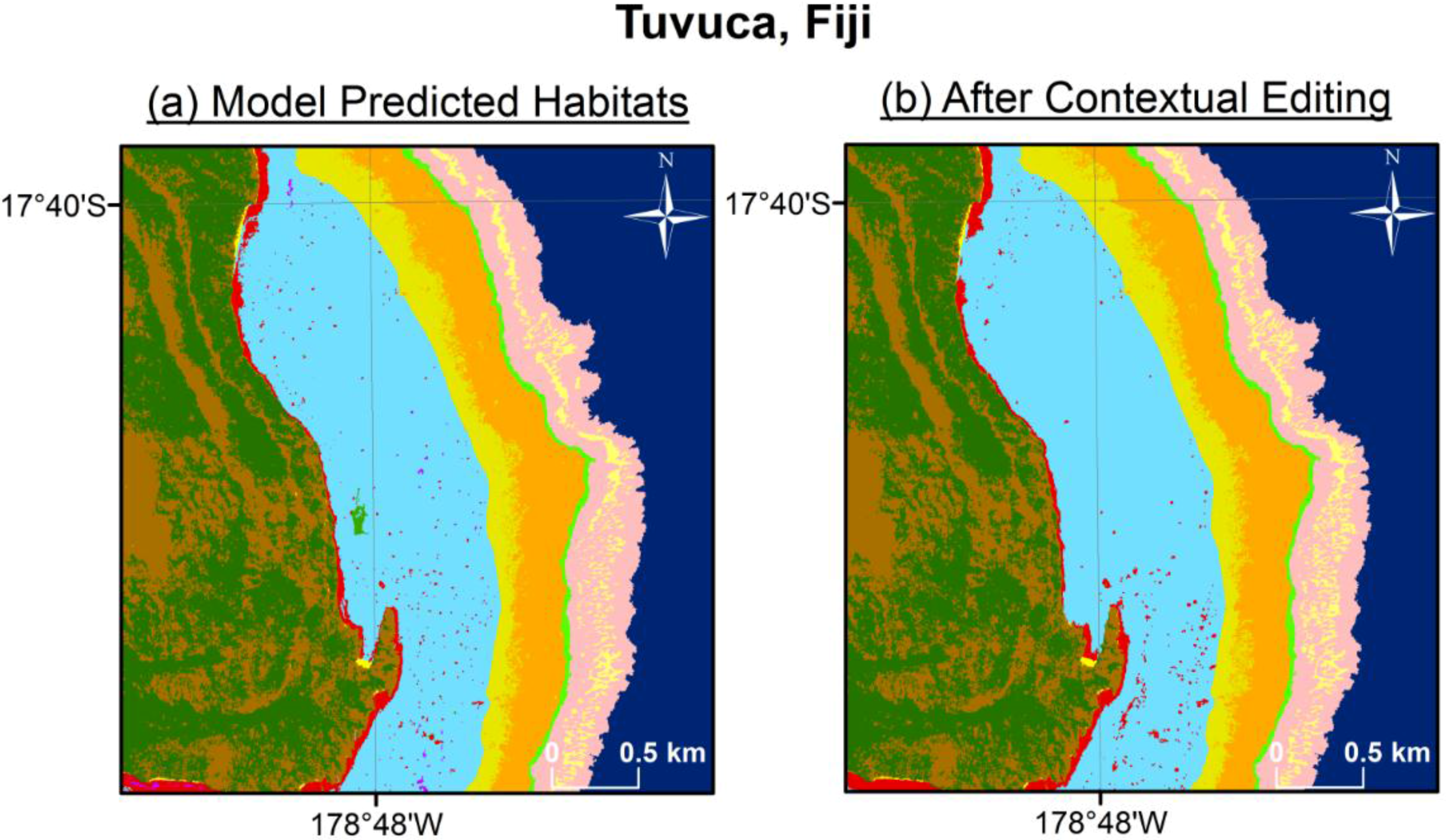

- Mumby, P.J.; Clark, C.D.; Green, E.P.; Edwards, A.J. Benefits of water column correction and contextual editing for mapping coral reefs. Int. J. Remote Sens. 1998, 19, 203–210. [Google Scholar] [CrossRef]

- Andrefouet, S. Coral reef habitat mapping using remote sensing: A user vs. producer perspective, implications for research, management and capacity building. J. Spat. Sci. 2008, 53, 113–129. [Google Scholar] [CrossRef]

- Purkis, S.J.; Pasterkamp, R. Integrating in situ reef-top reflectance spectra with Landsat TM imagery to aid shallow-tropical benthic habitat mapping. Coral Reefs 2004, 23, 5–20. [Google Scholar] [CrossRef]

- Purkis, S.J.; Kenter, J.A.M.; Oikonomou, E.K.; Robinson, I.S. High-resolution ground verification, cluster analysis and optical model of reef substrate coverage on Landsat TM imagery (Red Sea, Egypt). Int. J. Remote Sens. 2002, 23, 1677–1698. [Google Scholar] [CrossRef]

- Karpouzli, E.; Malthus, T.J.; Place, C.J. Hyperspectral discrimination of coral reef benthic communities in the western Caribbean. Coral Reefs 2004, 23, 141–151. [Google Scholar] [CrossRef]

- Purkis, S.J. A “reef-up” approach to classifying coral habitats from IKONOS imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1375–1390. [Google Scholar] [CrossRef]

- Hochberg, E.J.; Atkinson, M.J.; Andréfouët, S. Spectral reflectance of coral reef bottom-types worldwide and implications for coral reef remote sensing. Remote Sens. Environ. 2003, 85, 159–173. [Google Scholar] [CrossRef]

- Andréfouët, S.; Kramer, P.; Torres-Pulliza, D.; Joyce, K.E.; Hochberg, E.J.; Garza-Pérez, R.; Mumby, P.J.; Riegl, B.; Yamano, H.; White, W.H.; et al. Multi-site evaluation of IKONOS data for classification of tropical coral reef environments. Remote Sens. Environ. 2003, 88, 128–143. [Google Scholar] [CrossRef]

- Goodman, J.; Ustin, S. Classification of benthic composition in a coral reef environment using spectral unmixing. J. Appl. Remote Sens. 2007, 1, 1–17. [Google Scholar]

- Purkis, S.J. Calibration of Satellite Images of Reef Environments. Ph.D. Thesis, Vrije Universiteit, Amsterdam, The Netherlands, June 2004. [Google Scholar]

- Hedley, J.D.; Mumby, P.J. A remote sensing method for resolving depth and subpixel composition of aquatic benthos. Limnol. Oceanogr. 2003, 48, 480–488. [Google Scholar] [CrossRef]

- Maeder, J.; Narumalani, S.; Rundquist, D.C.; Perk, R.L.; Schalles, J.; Hutchins, K.; Keck, J. Classifying and mapping general coral-reef structure using IKONOS data. Photogramm. Eng. Remote Sens. 2002, 68, 1297–1305. [Google Scholar]

- Hedley, J.; Harborne, A.; Mumby, P. Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 2005, 26, 2107–2112. [Google Scholar] [CrossRef]

- Hochberg, E.J.; Andréfouët, S.; Tyler, M.R. Sea surface correction of high spatial resolution Ikonos images to improve bottom mapping in near-shore environments. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1724–1729. [Google Scholar] [CrossRef]

- Wedding, L.; Lepczyk, S.; Pittman, S.; Friedlander, A.; Jorgensen, S. Quantifying seascape structure: extending terrestrial spatial pattern metrics to the marine realm. Mar. Ecol. Prog. Ser. 2011, 427, 219–232. [Google Scholar] [CrossRef]

- Benfield, S.L.; Guzman, H.M.; Mair, J.M.; Young, J.A.T. Mapping the distribution of coral reefs and associated sublittoral habitats in Pacific Panama: a comparison of optical satellite sensors and classification methodologies. Int. J. Remote Sens. 2007, 28, 5047–5070. [Google Scholar] [CrossRef]

- Lunetta, R.S.; Congalton, R.G.; Fenstermaker, L.K.; Jensen, J.R.; McGwire, K.C.; Tinney, L.R. Remote sensing and geographic information systems data integration: error sources and research ideas. Photogramm. Eng. Remote Sens. 1991, 53, 1259–1263. [Google Scholar]

- Shao, G.; Wu, J. On the accuracy of landscape pattern analysis using remote sensing data. Landsc. Ecol. 2008, 23, 505–511. [Google Scholar] [CrossRef]

- Foody, G.M. Harshness in image classification accuracy assessment. Int. J. Remote Sens. 2008, 29, 3137–3159. [Google Scholar] [CrossRef]

- Foody, G.M. Assessing the accuracy of land cover change with imperfect ground reference data. Remote Sens. Environ. 2010, 114, 2271–2285. [Google Scholar] [CrossRef]

- Hochberg, E.J.; Atkinson, M.J. Spectral discrimination of coral reef benthic communities. Coral Reefs 2000, 19, 164–171. [Google Scholar] [CrossRef]

- Hogland, J.; Billor, N.; Anderson, N. Comparison of standard maximum likelihood classification and polytomous logistic regression used in remote sensing. Eur. J. Remote Sens. 2013, 46, 623–640. [Google Scholar] [CrossRef]

- Pal, M. Multinomial logistic regression-based feature selection for hyperspectral data. Int. J. Appl. Earth Obs. Geoinform. 2012, 14, 214–220. [Google Scholar] [CrossRef]

- Debella-Gilo, M.; Etzelmuller, B. Spatial prediction of soil classes using digital terrain analysis and multinomial logistic regression modeling integrated in GIS: examples from Vestfold County, Norway. Catena 2009, 77, 8–18. [Google Scholar] [CrossRef]

- Li, J.; Marpu, P.R.; Plaza, A.; Bioucas-Dias, J.M.; Benediktsson, J.A. Generalized composit kernel framework for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4816–4829. [Google Scholar] [CrossRef]

- Pal, M.; Foody, G.M. Evaluation of SVM, RVM and SMLR for accurate image classification with limited ground data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1344–1355. [Google Scholar] [CrossRef]

- Mishra, D.R.; Narumalani, S.; Rundquist, D.; Lawson, M.; Perk, R. Enhancing the detection and classification of coral reef and associated benthic habitats: a hyperspectral remote sensing approach. J. Geophys. Res. 2007, 112, 1–18. [Google Scholar] [CrossRef]

- Bernabé, S.; Marpu, P.R.; Plaza, A.; Mura, M.D.; Benediktsson, J.A. Spectral-spatial classification of multispectral images using kernal feature space representation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 288–292. [Google Scholar] [CrossRef]

- Contreras-Silva, A.I.; López-Caloca, A.A.; Tapia-Silva, F.O.; Cerdeira-Estrada, S. Satellite remote sensing of coral reef habitat mapping in shallow waters at Banco Chinchorro Reefs, México: A classification approach. In Remote Sensing—Applications; Escalante, B., Ed.; InTech: Rijeka, Croatia, 2012; pp. 331–354. [Google Scholar]

- Purkis, S.J.; Myint, S.; Riegl, B. Enhanced detection of the coral Acropora cervicornis from satellite imagery using a textural operator. Remote Sens. Environ. 2006, 101, 82–94. [Google Scholar] [CrossRef]

- Holden, H.; Derksen, C.; LeDrew, E.; Wulder, M. An examination of spatial autocorrelation as a means of monitoring coral reef ecosystems. In Proceedings of the IEEE 2001 International Geoscience and Remote Sensing Symposium, Sydney, Australia, 9–13 July 2001; Volume 2, pp. 622–624.

- Keck, J.; Houston, R.S.; Purkis, S.J.; Riegl, B. Unexpectedly high cover of Acropora cervicornis on offshore reefs in Roatan (Honduras). Coral Reefs 2005, 24, 509. [Google Scholar] [CrossRef]

- LeDrew, E.F.; Holden, H.; Wulder, M.A.; Derksen, C.; Newman, C. A spatial operator applied to multidate satellite imagery for identification of coral reef stress. Remote Sens. Environ. 2004, 91, 271–279. [Google Scholar] [CrossRef]

- Roelfsema, C.; Phinn, S.; Jupiter, S.; Comley, J. Mapping coral reefs at reef to reef-system scales, 10s-1000s km2, using object-based image analysis. Int. J. Remote Sens. 2013, 18, 6367–6388. [Google Scholar] [CrossRef]

- Roelfsema, C.; Phinn, S.; Jupiter, S.; Comley, J.; Beger, M.; Patterson, E. The application of object-based analysis of high spatial resolution imagery for mapping large coral reef systems in the West Pacific at geomorphic and benthic community scales. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010; Volume 11, pp. 4346–4349.

- Phinn, S.R.; Roelfsema, C.M.; Mumby, P.J. Multi-scale, object-based image analysis for mapping geomorphic and ecological zones on coral reefs. Int. J. Remote Sens. 2012, 33, 3768–3797. [Google Scholar] [CrossRef]

- Knudby, A.; Roelfsema, C.; Lyons, M.; Phinn, S.; Jupiter, S. Mapping fish community variables by integrating field and satellite data, object-based image analysis and modeling in a traditional Fijian fisheries management area. Remote Sens. 2011, 3, 460–483. [Google Scholar] [CrossRef]

- Harris, P.M.; Purkis, S.J.; Ellis, J. Analyzing spatial patterns in modern carbonate sand bodies from Great Bahama Bank. J. Sediment. Res. 2011, 81, 185–206. [Google Scholar] [CrossRef]

- Purkis, S.J.; Harris, P.M.; Ellis, J. Patterns of sedimentation in the contemporary Red Sea as an analog for ancient carbonates in rift settings. J. Sediment. Res. 2012, 82, 859–870. [Google Scholar] [CrossRef]

- Harris, P.M.; Purkis, S.J.; Ellis, J.; Swart, P.K.; Reijmer, J.J.G. Mapping water-depth and depositional facies on Great Bahama Bank. Sedimentology 2015, 62, 566–589. [Google Scholar] [CrossRef]

- Purkis, S.J.; Casini, G.; Hunt, D.; Colpaert, A. Morphometric patterns in Modern carbonate platforms can be applied to the ancient rock record: Similarities between Modern Alacranes Reef and Upper Palaeozoic platforms of the Barents Sea. Sediment. Geol. 2015, 321, 49–69. [Google Scholar] [CrossRef]

- Purkis, S.J.; Rowlands, G.P.; Riegl, B.M.; Renaud, P.G. The paradox of tropical karst morphology in the coral reefs of the arid Middle East. Geology 2010, 38, 227–230. [Google Scholar] [CrossRef]

- Peña, J.M.; Gutiérrez, P.A.; Hervás-Martínez, C.; Six, J.; Plant, R.E.; López-Granados, F. Object-based image classification of summer crops with machine learning methods. Remote Sens. 2014, 6, 5019–5041. [Google Scholar] [CrossRef]

- Vatsavai, R.R. High-resolution urban image classification using extended features. In Proceedings of the IEEE International Conferene on Data Mining Workshops, Vancouver, BC, USA, 11 December 2011; Volume 11, pp. 869–876.

- Zhang, C.; Xie, Z. Combining object-based texture measures with a neural network for vegetation mapping in the Everglades from hyperspectral imagery. Remote Sens. Environ. 2012, 124, 310–320. [Google Scholar] [CrossRef]

- Semi-automatic classification of tree species in different forest ecosystems by spectral and geometric variables derived from Airborne Digital Sensor (ADS40) and RC30 data. Remote Sens. Environ. 2011, 115, 76–85.

- Pant, P.; Heikkinen, V.; Korpela, I.; Hauta-Kasari, M.; Tokola, T. Logistic regression-based spectral band selection for tree species classification: Effects of spatial scale and balance in training samples. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1604–1608. [Google Scholar] [CrossRef]

- Zhang, C.; Selch, D.; Xie, Z.; Roberts, C.; Cooper, H.; Chen, G. Object-based benthic habitat mapping in the Florida Keys from hyperspectral imagery. Estuar. Coastal Shelf Sci. 2013, 134, 88–97. [Google Scholar] [CrossRef]

- Zhang, C. Applying data fusion techniques for benthic habitat mapping and monitoring in a coral reef ecosystem. ISPRS J. Photogramm. Remote Sens. 2015, 104, 213–223. [Google Scholar] [CrossRef]

- Wahidin, N.; Siregar, V.P.; Nababan, B.; Jaya, I.; Wouthuyzen, S. Object-based image analysis for coral reef benthic hábitat mapping with several classification algorithms. Procedia Environ. Sci. 2015, 24, 222–227. [Google Scholar] [CrossRef]

- Jensen, J. Introductory Digital Image Processing: A Remote Sensing Perspective, 3rd ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2005; pp. 1–526. [Google Scholar]

- Purkis, S.J.; Rowlands, G.; Kerr, J.M. Unravelling the influence of water depth and wave energy on the facies diversity of shelf carbonates. Sedimentology 2015, 62, 541–565. [Google Scholar] [CrossRef]

- MacFaden, D. Conditional logit analysis of qualitative choice behavior. In Frontiers in Econometrics; Zarembka, P., Ed.; Academic Press: New York, NY, USA, 1974; pp. 105–142. [Google Scholar]

- Train, K.E. Discrete Choice Methods with Simulation, 2nd ed.; Cambridge University Press: New Yok, NY, USA, 2009; pp. 1–75. [Google Scholar]

- Cawley, G.C.; Talbot, N.L.C. Gene selection in cancer classification using sparse logistic regression with Bayesian regularisation. Bioinformatics 2006, 22, 2348–2355. [Google Scholar] [CrossRef] [PubMed]

- Zhong, P.; Zhang, P.; Wang, R. Dynamic learning of SMLR for feature selection and classification of hyperspectral data. IEEE Geosci. Remote Sens. Lett. 2008, 5, 280–284. [Google Scholar] [CrossRef]

- Train, K.; Winston, C. Vehicle choice behavior and the declining market share of U.S. automakers. Int. Econom. Rev. 2007, 48, 1469–1496. [Google Scholar] [CrossRef]

- Train, K.; McFadden, D. The goods-leisure tradeoff and disaggregate work trip mode choice models. Transp. Res. 1978, 12, 349–353. [Google Scholar] [CrossRef]

- Smith, M.D. State dependence and heterogeneity in fishing location choice. J. Environ. Econom. Manag. 2005, 50, 319–340. [Google Scholar] [CrossRef]

- Burnham, K.P.; Anderson, D.R. Model Selection and Multinomial Inference: A Practical Information-Theoretic Approach, 2nd ed.; Springer: New York, NY, USA, 2002; pp. 41–96. [Google Scholar]

- Kerr, J.M. Worldview-2 Offers New Capabilities for the Monitoring of Threatened Coral Reefs. Available online: http://www.watercolumncorrection.com/documents/Kerr_2010_Bathymetry_from_WV2.pdf (accessed on 16 September 2015).

- Myint, S.W.; Wentz, L.; Purkis, S.J. Employing Spatial Metrics in Urban Land-use/Land-cover Mapping: Comparing the Getis and Geary Indices. Photogramm. Eng. Remote Sens. 2007, 73, 1403–1415. [Google Scholar] [CrossRef]

- Purkis, S.J.; Klemas, V. Remote Sensing and Global Environmental Change; Wiley-Blackwell: Oxford, UK, 2011; pp. 1–368. [Google Scholar]

- Schlager, W.; Purkis, S.J. Reticulate reef patterns−antecedent karst versus self-organization. Sedimentology 2015, 62, 501–515. [Google Scholar] [CrossRef]

- Roberts, C.M.; Andelman, S.; Branch, G.; Bustamante, R.H.; Castilla, J.C.; Dugan, J.; Halpern, B.S.; Lafferty, K.D.; Leslie, H.; Lubchenco, J.; et al. Ecological criteria for evaluating candidate sites for marine reserves. Ecol. Appl. 2003, 13, 199–214. [Google Scholar] [CrossRef]

- Ward, T.J.; Vanderklift, M.A.; Nicholls, A.O.; Kechington, R.A. Selecting marine reserves using habitats and species assemblages as surrogates for biological diversity. Ecol. Appl. 1999, 9, 691–698. [Google Scholar] [CrossRef]

- Stevens, T.; Connolly, R.M. Local-scale mapping of benthic habitats to assess representation in a marine protected area. Mar. Freshw. Res. 2004, 56, 111–123. [Google Scholar] [CrossRef]

- Pittman, S.J.; Kneib, R.T.; Simenstad, C.A. Practicing coastal seascape ecology. Mar. Ecol. Prog. Ser. 2011, 427, 187–190. [Google Scholar] [CrossRef]

- Mumby, P.J.; Broad, K.; Brumbaugh, D.R.; Dahlgren, C.P.; Harborne, A.R.; Hastings, A.; Holmes, K.E.; Kappel, C.V.; Micheli, F.; Sanchirico, J.N. Coral reef habitats as surrogates of species, ecological functions, and ecosystem services. Conserv. Biol. 2008, 22, 941–951. [Google Scholar] [CrossRef] [PubMed]

- Murphy, H.M.; Jenkinsm, G.P. Observational methods used in marine spatial monitoring of fishes and associated habitats: a review. Mar. Freshw. Res. 2009, 61, 236–252. [Google Scholar] [CrossRef]

- Cushman, S.A.; Gutzweiler, K.; Evans, J.S.; McGarigal, K. The gradient paradigm: a conceptual and analytical framework for landscape ecology. In Spatial Complexity, Informatics, and Wildlife Conservation; Huettmann, F., Cushman, S.A., Eds.; Springer: New York, NY, USA, 2009; pp. 83–107. [Google Scholar]

- Mumby, P.J.; Hedley, J.D.; Chisholm, J.R.M.; Clark, C.D.; Ripley, H.; Jaubert, J. The cover of living and dead corals from airborne remote sensing. Coral Reefs 2004, 23, 171–183. [Google Scholar] [CrossRef]

- Kutser, T.; Miller, I.; Jupp, D.L.B. Mapping coral reef benthic substrates using hyperspectral space-borne images and spectral libraries. Estuarine Coast. Shelf Sci. 2006, 70, 449–460. [Google Scholar] [CrossRef]

- Goodman, J.; Ustin, S.L. Classification of benthic composition in a coral reef environment using spectral unmixing. J. Appl. Remote Sens. 2007, 1, 1–17. [Google Scholar]

- Torres-Madronero, M.C.; Velez-Reyes, M.; Goodman, J.A. Subsurface unmixing for benthic habitat mapping using hyperspecgtral imagery and lidar-derived bathymetry. Proc. SPIE 2014, 9088, 90880M:1–90880M:15. [Google Scholar]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef]

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258. [Google Scholar] [CrossRef]

- Kutser, T.; Skirving, W.; Parslow, J.; Clementson, L.; Done, T.; Wakeford, M.; Miller, I. Spectral discrimination of coral reef bottom types. Geosci. Remote Sens. Symp. 2001, 6, 2872–2874. [Google Scholar]

- Barott, K.; Smith, J.; Dinsdale, E.; Hatay, M.; Sandin, S.; Rohwer, F. Hyperspectral and Physiological Analyses of Coral-Algal Interactions. PLoS ONE 2009, 4, e8043. [Google Scholar] [CrossRef] [PubMed]

- Myers, M.R.; Hardy, J.T.; Mazel, C.H.; Dustan, P. Optical spectra and pigmentation of Caribbean reef corals and macroalgae. Coral Reefs 1999, 18, 179–186. [Google Scholar] [CrossRef]

- Rowlands, G.P.; Purkis, S.J.; Riegl, B.M. The 2005 coral-bleaching event Roatan (Honduras): Use of pseudo-invariant features (PIFs) in satellite assessments. J. Spat. Sci. 2008, 53, 99–112. [Google Scholar] [CrossRef]

- Mumby, P.J.; Harborne, A.R. Development of a systematic classification scheme of marine habitats to facilitate regional management and mapping of Caribbean coral reefs. Biol. Conserv. 1999, 88, 155–163. [Google Scholar] [CrossRef]

- Joyce, K.E. A Method for Mapping Live Coral Cover Using Remote Sensing. Ph.D Thesis, The University of Queensland, Queensland, Australia, 2005. [Google Scholar]

- Stumpf, R.; Holderied, K.; Sinclair, M. Determination of water depth with high-resolution satellite imagery over variable bottom types. Limnol. Oceanogr. 2003, 48, 547–556. [Google Scholar] [CrossRef]

- Heumann, B.W. Satellite remote sensing of mangrove forests: Recent advances and future opportunities. Prog. Phys. Geogr. 2011, 35, 87–108. [Google Scholar] [CrossRef]

- Kuenzer, C.; Buemel, A.; Gebhardt, S.; Zuoc, T.V.; Dech, S. Remote sensing of mangrove ecosystems: A review. Remote Sens. 2011, 3, 878–928. [Google Scholar] [CrossRef]

- Simard, M.; Zhang, K.; Rivera-Monroy, V.H.; Ross, M.S.; Ruiz, P.L.; Castañeda-Moya, E.; Twilley, R.; Rodriguez, E. Mapping height and biomass of mangrove forests in Everglades National Park with SRTM elevation data. Photogramm. Eng. Remote Sens. 2006, 3, 299–311. [Google Scholar] [CrossRef]

- Zhang, K. Identification of gaps in mangrove forests with airborne LIDAR. Remote Sens. Environ. 2008, 112, 2309–2325. [Google Scholar] [CrossRef]

- Knight, J.M.; Dale, P.E.R.; Spencer, J.; Griffin, L. Exploring LiDAR data for mapping the micro-topography and tidal hydro-dynamics of mangrove systems: an example from southeast Queensland, Australia. Estuar. Coast. Shelf Sci. 2009, 85, 593–600. [Google Scholar] [CrossRef]

- Wang, L.; Sousa, W.P.; Gong, P. Integration of object-based and pixel-based classification for mapping mangroves with IKONOS imagery. Int. J. Remote Sens. 2004, 25, 5655–5668. [Google Scholar] [CrossRef]

- Alatorre, L.C.; Sánchez-Andrés, R.; Cirujano, S.; Beguería, S.; Sánchez-Carrillo, S. Identification of mangrove areas by remote sensing: The ROC curve technique applied to the northwestern Mexico Coastal Zone using Landsat imagery. Remote Sens. 2011, 3, 1568–1583. [Google Scholar] [CrossRef]

- Rebelo-Mochel, F.; Ponzoni, F.J. Spectral characterization of mangrove leaves in the Brazilian Amazonian Coast: Turiacu Bay, Maranhao State. Ann. Braz. Acad. Sci. 2007, 79, 683–692. [Google Scholar] [CrossRef]

- Kamaruzaman, J.; Kasawani, I. Imaging spectrometry on mangrove species identification and mapping in Malaysia. WSEAS Trans. Biol. Biomed. 2007, 8, 118–126. [Google Scholar]

- Kamal, M.; Phinn, S.; Johansen, K. Characterizing the spatial structure of mangrove features for optimizing image-based mangrove mapping. Remote Sens. 2014, 6, 984–1006. [Google Scholar] [CrossRef]

- Kamal, M.; Phinn, S.; Johansen, K. Extraction of multi-scale mangrove features from WorldView-2 image data: an object-based image analysis approach. In Proceedings of the 34th Asian Conference on Remote Sensing, Bali, Indonesia, 20–24 October 2013; Volume 34, pp. 653–659.

- Heenkenda, M.K.; Joyce, K.E.; Maier, S.W.; Bartolo, R. Mangrove species identification: Comparing WorldView-2 with aerial photographs. Remote Sens. 2014, 6, 6064–6088. [Google Scholar] [CrossRef]

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Saul, S.; Purkis, S. Semi-Automated Object-Based Classification of Coral Reef Habitat using Discrete Choice Models. Remote Sens. 2015, 7, 15894-15916. https://doi.org/10.3390/rs71215810
