Object-Based Analysis of Airborne LiDAR Data for Building Change Detection

Abstract

1. Introduction

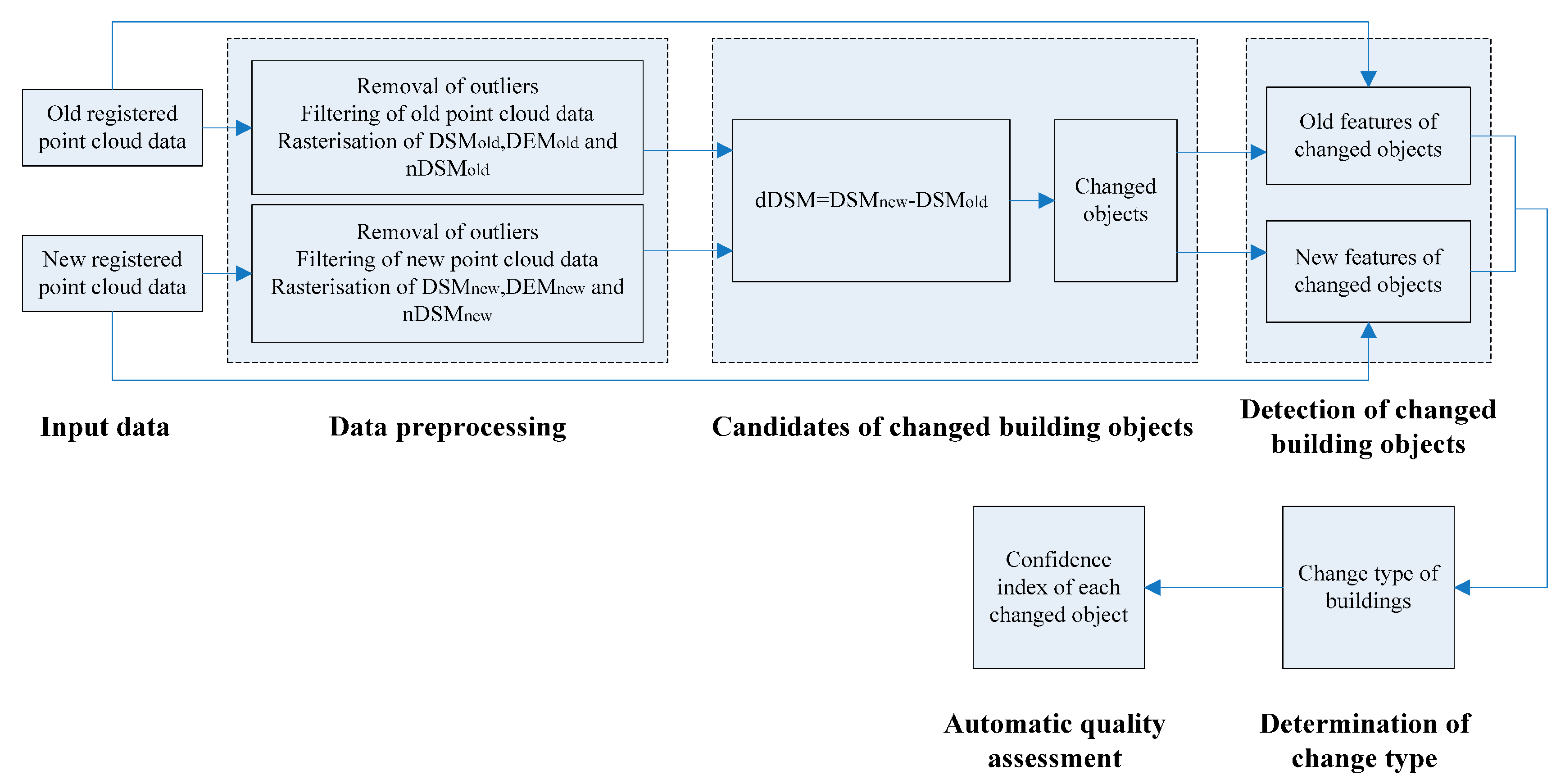

2. Object-Based Analysis for Building Change Detection

2.1. Data Preprocessing

- Removal of outliers. A DEM surface interpolated from a point cloud that contains outliers (extremely low or high points) will be distorted. Outliers must therefore be removed from both the old and new point clouds. In this study, the elevation histogram method [22] is first used to exclude evident outliers. Delaunay triangulation [23,24,25] is then applied to identify the less evident outliers.

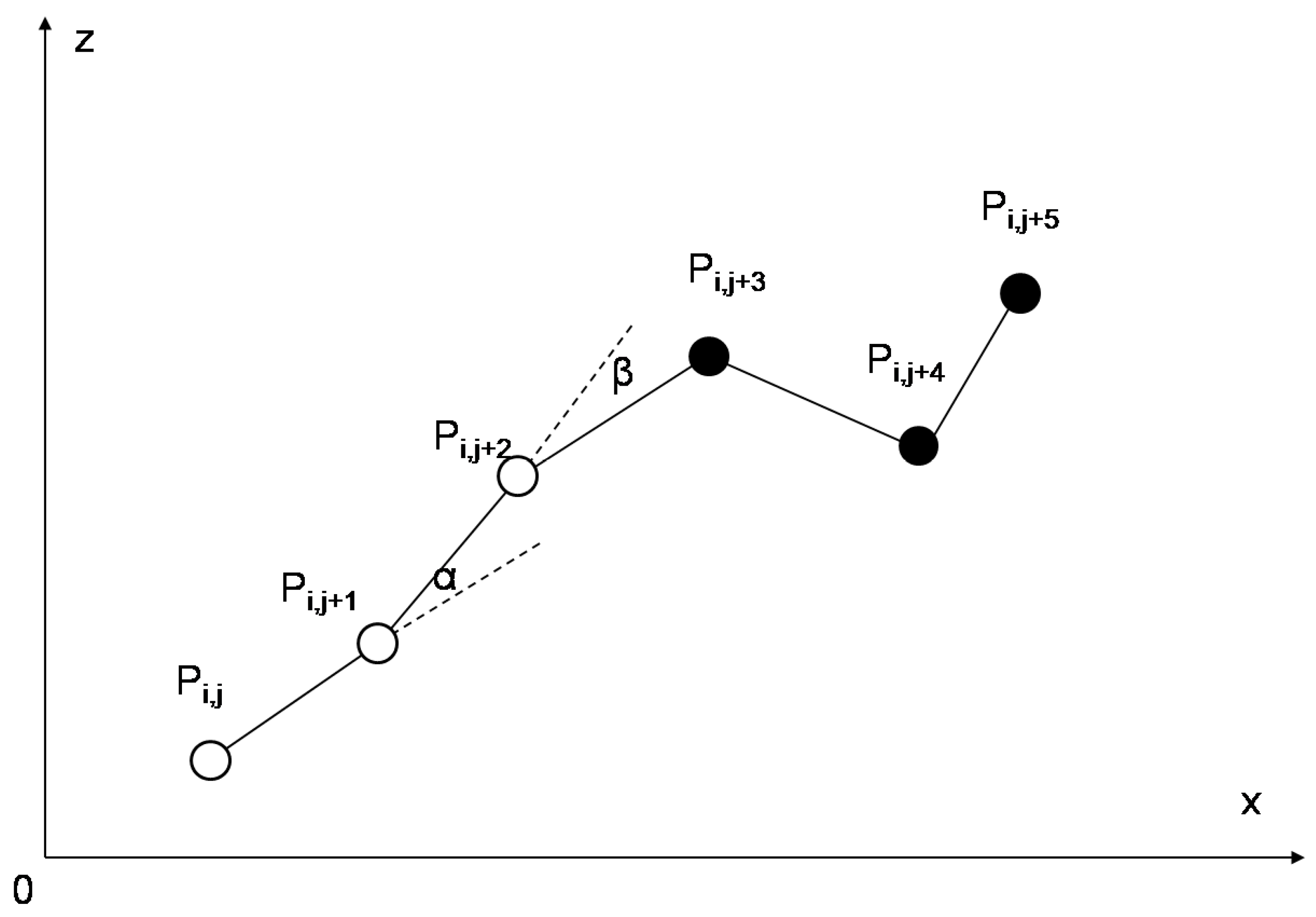

- Point cloud data filtering. Point cloud data filtering distinguishes ground points from non-ground points and is now a relatively mature technology. We used the commercial software TerraSolid to filter the data; it follows these basic principles [26,27,28]. First, a sparse triangulated irregular network (TIN) is created from seed points. Thereafter, on the basis of parameters derived from the data, the TIN is progressively densified in an iterative process. The iteration stops when no more points meet the thresholds.

- Rasterization of DSMs and DEMs from the old and new point cloud data. The TIN algorithm [26] is used to interpolate gridded DSMs and DEMs. Taking the generation of the new DSM as an example, the points are first arranged into a grid (the grid cell size used in this study is 1.0 m). Thereafter, the lowest point of each grid cell is selected to build the triangulation. Finally, the height of each gridded DSM point is interpolated from the three vertices of the triangle in which it falls. The same technique is used to produce the old gridded DSM. A similar method is used to rasterize the new and old DEMs, but only the ground points are used during triangulation.

- Generation of nDSMold and nDSMnew. Each nDSM is obtained by subtracting the corresponding DEM from the DSM:

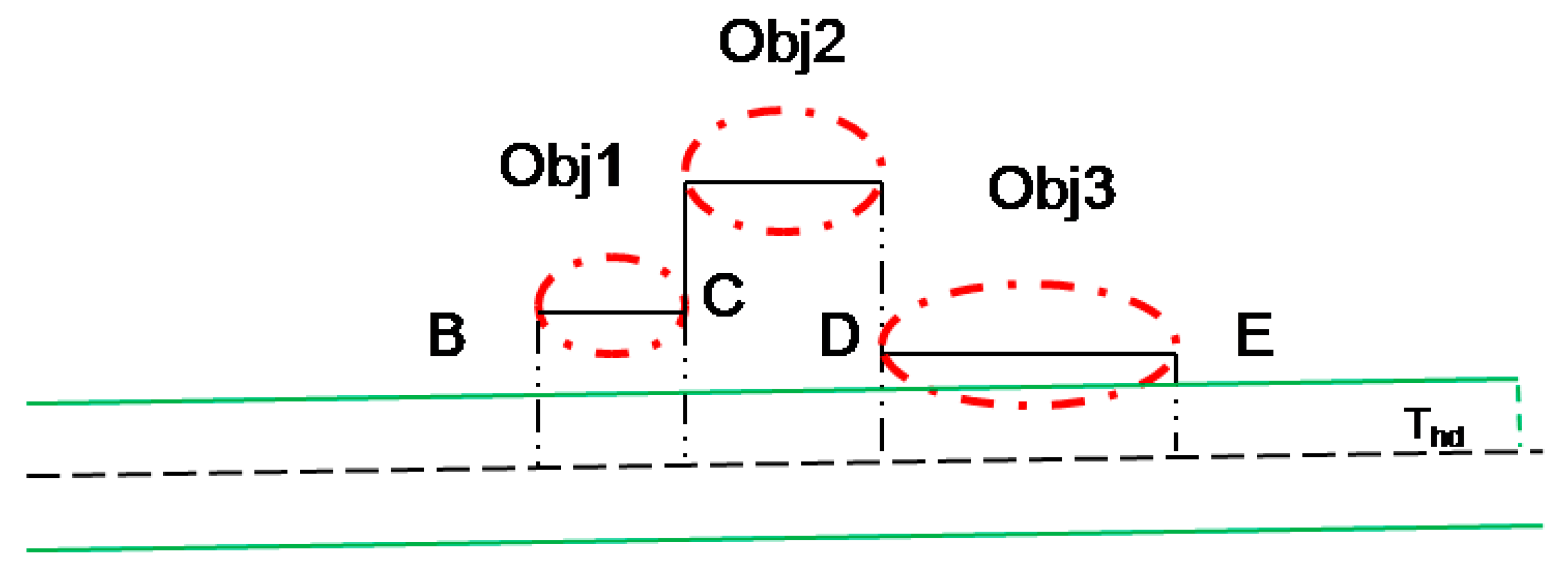
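As a minimal sketch of this subtraction, the following pure-Python function computes an nDSM from a gridded DSM and DEM of identical shape. The function name `compute_ndsm`, the use of `None` as a no-data marker, and the toy 2 × 3 grids are illustrative assumptions and are not taken from the original workflow.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]


def compute_ndsm(dsm: Grid, dem: Grid) -> Grid:
    """Return the cell-wise difference DSM - DEM (height above ground).

    Cells where either input is None (assumed no-data marker) stay None.
    """
    if len(dsm) != len(dem) or any(len(a) != len(b) for a, b in zip(dsm, dem)):
        raise ValueError("DSM and DEM must have the same grid shape")
    ndsm: Grid = []
    for dsm_row, dem_row in zip(dsm, dem):
        row: List[Optional[float]] = []
        for surface, ground in zip(dsm_row, dem_row):
            if surface is None or ground is None:
                row.append(None)  # propagate missing data
            else:
                row.append(surface - ground)
        ndsm.append(row)
    return ndsm


# Toy 2 x 3 grids in metres; the values are made up for illustration.
dsm_old = [[12.0, 12.5, 20.0], [12.1, None, 20.2]]
dem_old = [[12.0, 12.0, 12.0], [12.0, 12.0, 12.2]]
ndsm_old = compute_ndsm(dsm_old, dem_old)
```

Applying the same call to the new DSM/DEM pair would yield nDSMnew.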

2.2. Extraction of Candidates for Changed Building Objects

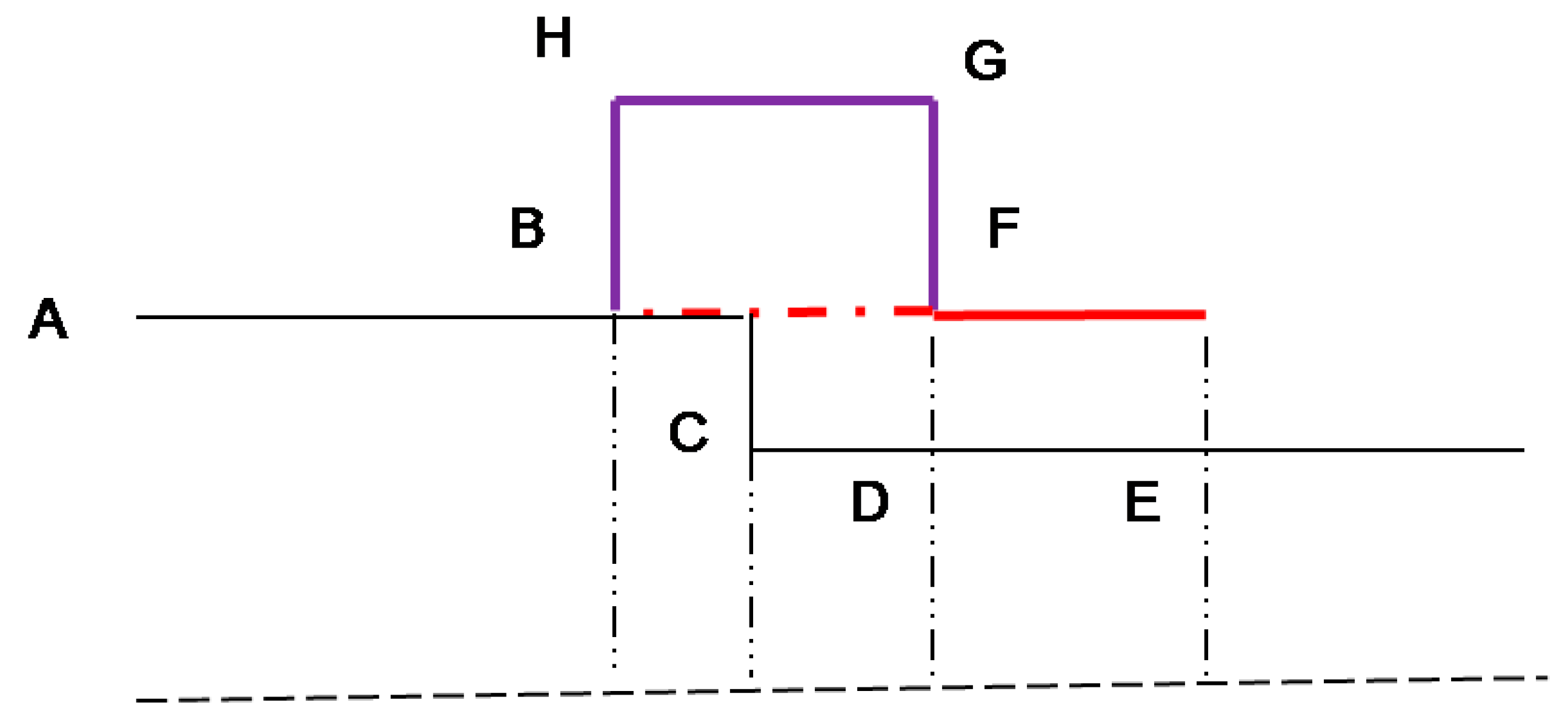

2.3. Detection of Changed Building Objects

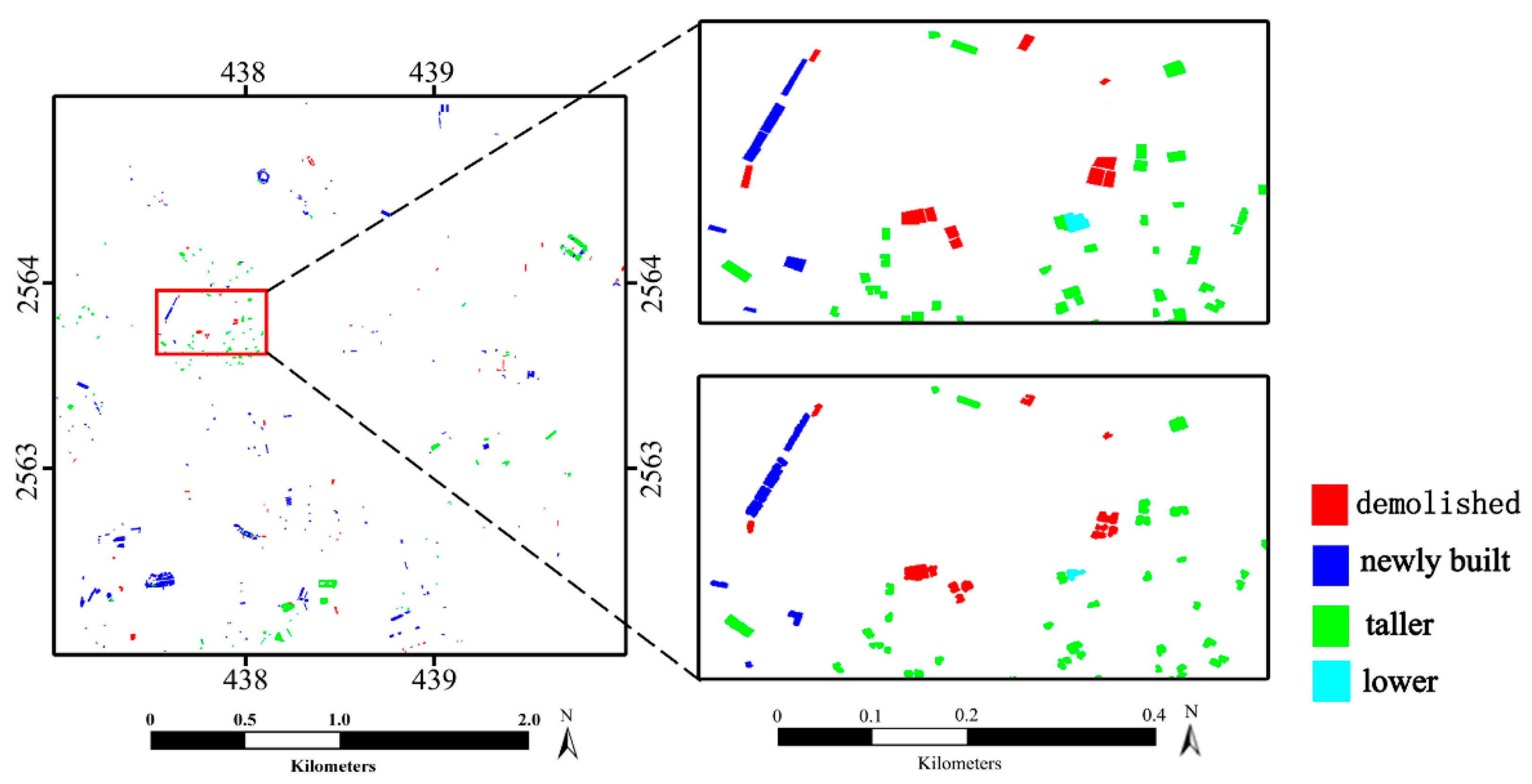

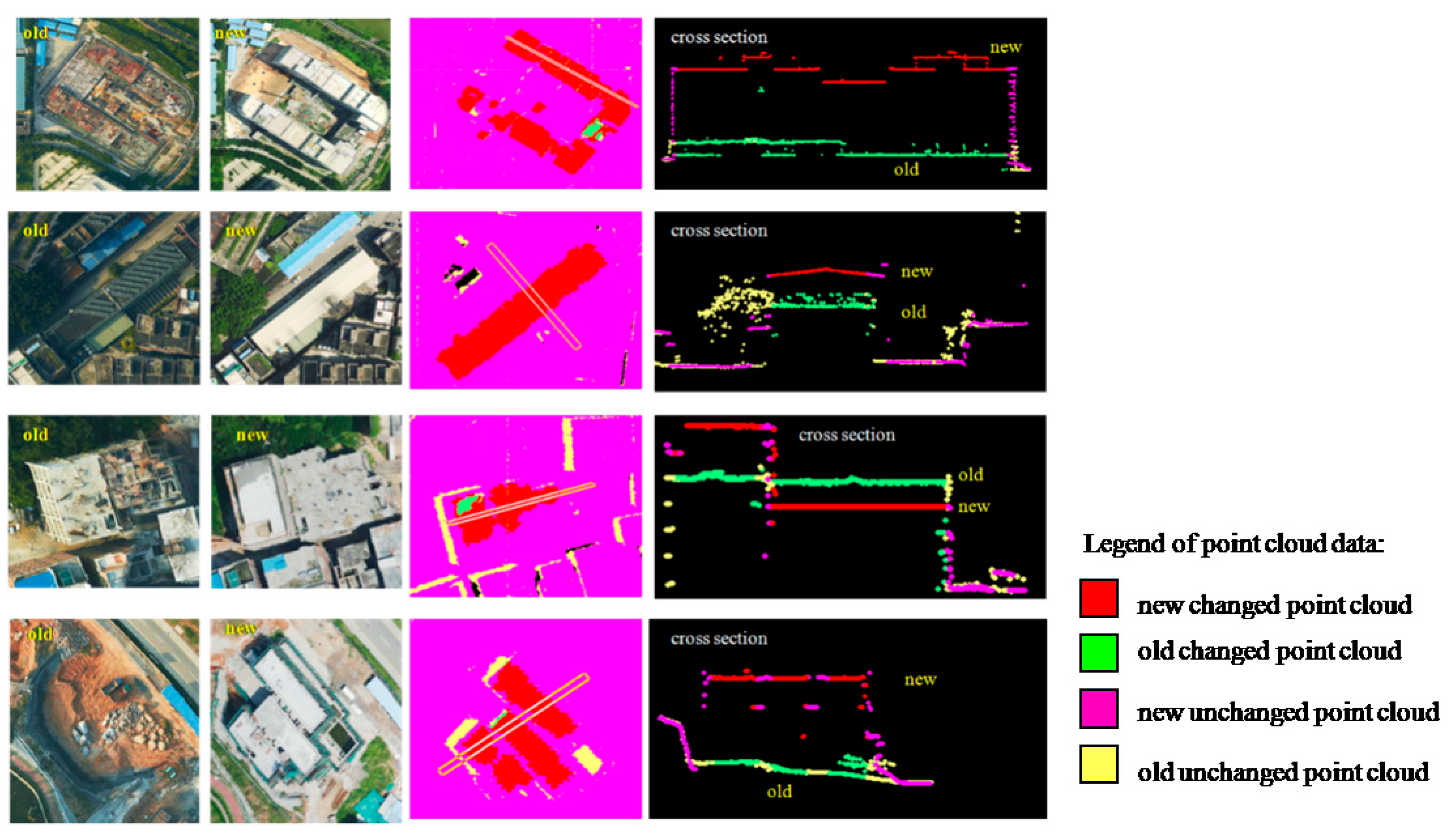

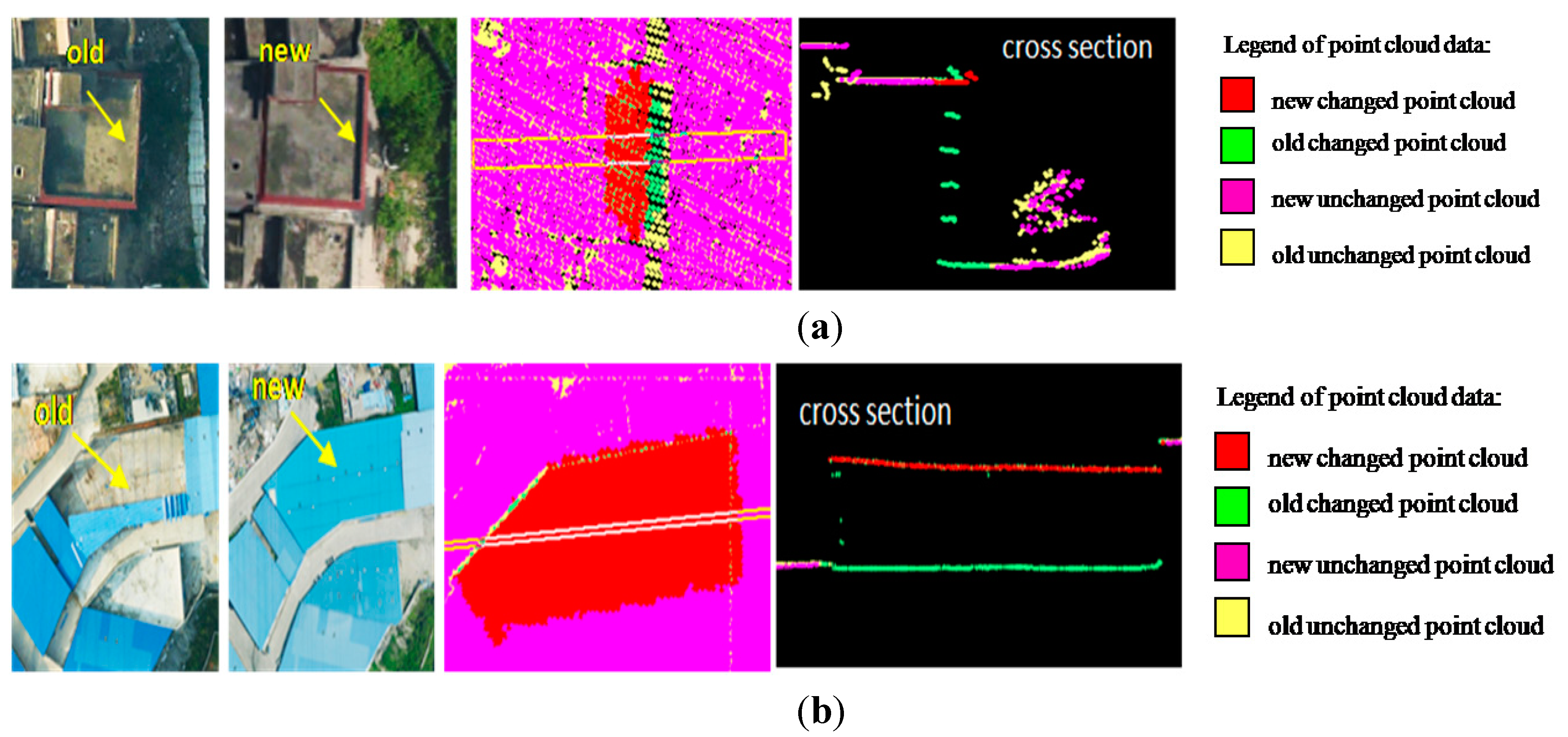

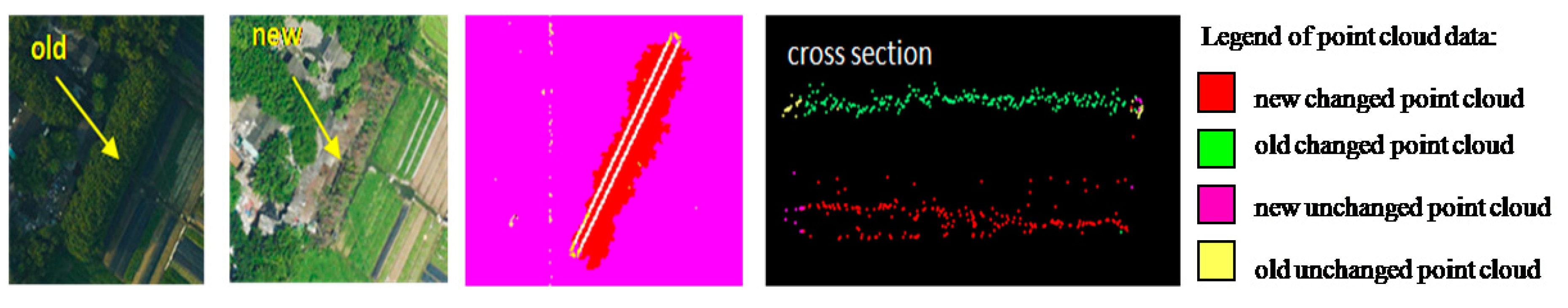

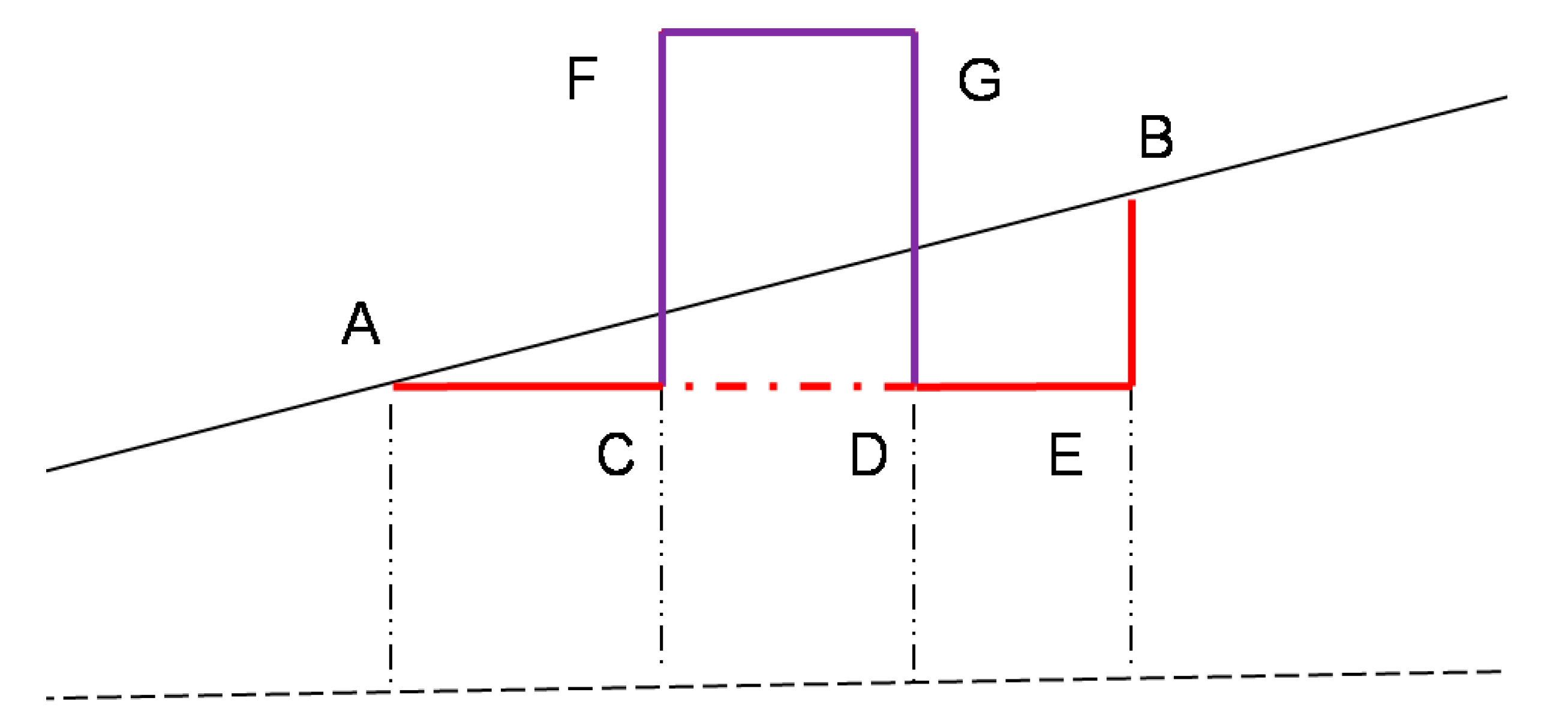

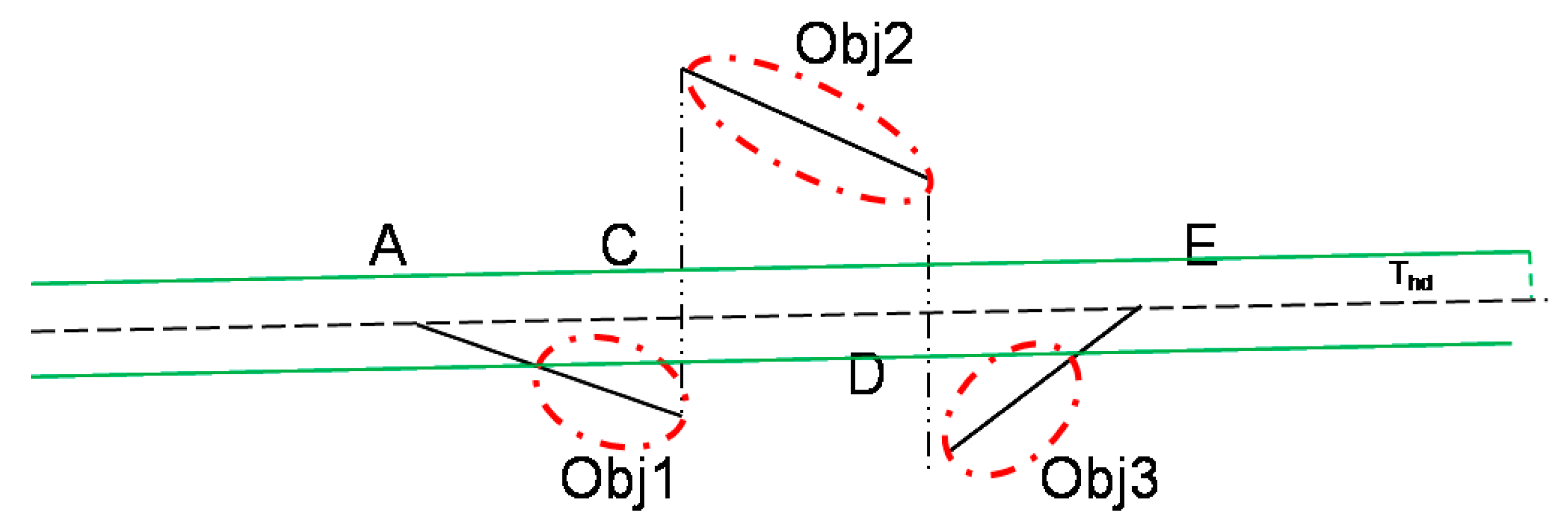

2.4. Determination of Change Type

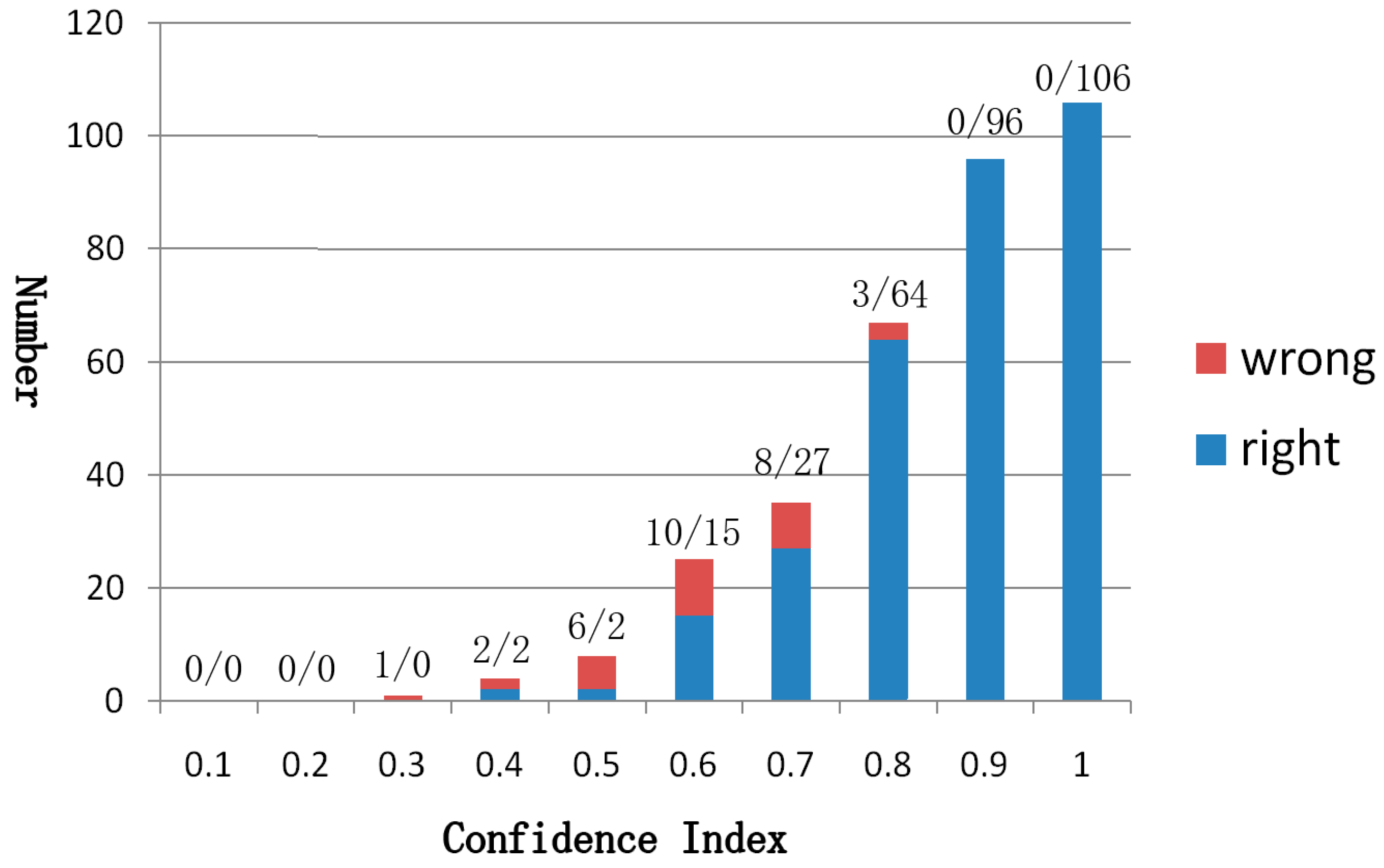

2.5. Automatic Quality Assessment of Building Change Detection

3. Experimental Results and Discussion

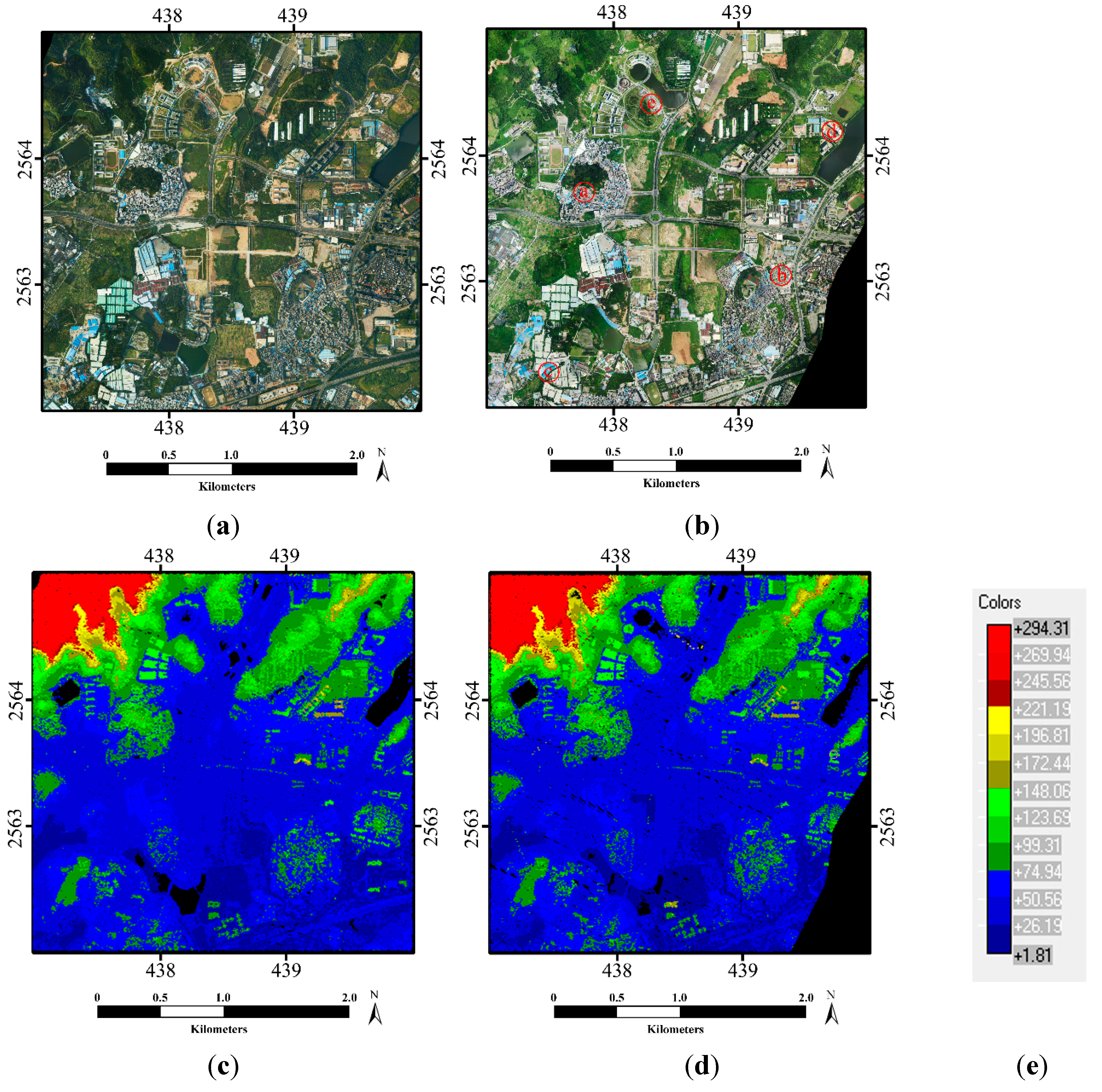

3.1. Data Set

3.2. Parameters

| Parameter | Threshold |
|---|---|
| Grid cell size of DSM/DEM/nDSM | 1.0 m |
| Angle for detection of the points of smooth areas | 10° |
| Minimum size of changed building objects | 25 |
| Minimum height of building object | 3.0 m |
| Maximum distance from the point to the fitting plane of RANSAC | 0.15 m |
| Minimum planarity ratio for building confirmation | 0.6 |
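To illustrate how the last two thresholds in the table might interact, here is a hedged sketch of a RANSAC-style plane fit [29] followed by a planarity-ratio test. Defining the planarity ratio as the share of points within 0.15 m of the best plane is an assumption made for this example, as are the function names, the iteration count, the fixed seed, and the synthetic points; only the two threshold values come from the table.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # unit normal (a, b, c) and offset d

MAX_PLANE_DISTANCE = 0.15  # metres, from the parameter table
MIN_PLANARITY_RATIO = 0.6  # from the parameter table


def plane_from_points(p: Point, q: Point, r: Point) -> Optional[Plane]:
    """Plane a*x + b*y + c*z + d = 0 through three points, or None if collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    # The cross product of the two edge vectors gives the plane normal.
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p[0] + b * p[1] + c * p[2])


def count_inliers(points: List[Point], plane: Plane, max_dist: float) -> int:
    """Count points whose perpendicular distance to the plane is <= max_dist."""
    a, b, c, d = plane
    return sum(1 for x, y, z in points if abs(a * x + b * y + c * z + d) <= max_dist)


def planarity_ratio(points: List[Point], iterations: int = 200, seed: int = 0) -> float:
    """Best inlier share over randomly sampled three-point planes (assumed definition)."""
    rng = random.Random(seed)
    best = 0
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is not None:
            best = max(best, count_inliers(points, plane, MAX_PLANE_DISTANCE))
    return best / len(points)


# Synthetic object: a flat 5 x 5 roof at z = 10 m plus five clutter points.
roof = [(float(i), float(j), 10.0) for i in range(5) for j in range(5)]
clutter = [(0.5, 0.5, 13.0), (1.5, 2.5, 15.0), (2.5, 1.5, 12.0),
           (3.5, 3.5, 16.5), (0.5, 3.5, 14.0)]
ratio = planarity_ratio(roof + clutter)
is_building = ratio >= MIN_PLANARITY_RATIO
```

In this toy case the 25 roof points dominate the 30-point object, so the object passes the 0.6 threshold; an object made mostly of scattered vegetation returns would be expected to fall below it.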

3.3. Performance

| Algorithm (rows) / True (columns) | No Change | Newly Built | Taller | Demolished | Lower |
|---|---|---|---|---|---|
| No change | 0 | 3 | 2 | 2 | 0 |
| Newly built | 17 | 140 | 0 | 0 | 0 |
| Taller | 1 | 0 | 118 | 0 | 0 |
| Demolished | 12 | 0 | 0 | 53 | 0 |
| Lower | 0 | 0 | 0 | 0 | 1 |
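Per-class quality figures can be derived from this table. The sketch below assumes that rows hold the class assigned by the algorithm and columns hold the true class, which is one reading of the "Algorithm/True" header. The metric names `correctness` and `completeness` are labels chosen for this example; the counts are copied from the table.

```python
from typing import List

CLASSES = ["No change", "Newly built", "Taller", "Demolished", "Lower"]

# Rows: class assigned by the algorithm; columns: true class (assumed reading
# of the "Algorithm/True" header). Counts are copied from the table.
MATRIX: List[List[int]] = [
    [0, 3, 2, 2, 0],
    [17, 140, 0, 0, 0],
    [1, 0, 118, 0, 0],
    [12, 0, 0, 53, 0],
    [0, 0, 0, 0, 1],
]


def correctness(matrix: List[List[int]], k: int) -> float:
    """Share of objects labelled class k by the algorithm that truly are class k."""
    row_total = sum(matrix[k])
    return matrix[k][k] / row_total if row_total else float("nan")


def completeness(matrix: List[List[int]], k: int) -> float:
    """Share of objects that truly are class k and were labelled class k."""
    col_total = sum(row[k] for row in matrix)
    return matrix[k][k] / col_total if col_total else float("nan")


# "No change" is skipped here because its diagonal count in the table is 0.
for k, name in enumerate(CLASSES[1:], start=1):
    print(f"{name}: correctness={correctness(MATRIX, k):.3f}, "
          f"completeness={completeness(MATRIX, k):.3f}")
```

Under this reading, the "Newly built" row gives a correctness of 140/157 ≈ 0.89 and the "Newly Built" column gives a completeness of 140/143 ≈ 0.98.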

3.4. Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Brunner, D.; Lemoine, G.; Bruzzone, L. Earthquake damage assessment of buildings using VHR optical and SAR imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2403–2420.

2. Bovolo, F.; Marin, C.; Bruzzone, L. A novel approach to building change detection in very high resolution SAR images. Proc. SPIE 2012, 8537.

3. Vu, T.T.; Ban, Y. Context-based mapping of damaged buildings from high-resolution optical satellite images. Int. J. Remote Sens. 2010, 31, 3411–3425.

4. Meng, Y.; Zhao, Z.; Du, X.; Peng, S. Building change detection based on similarity calibration. In Proceedings of the IEEE Fifth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD ’08), Jinan, China, 18–20 October 2008; pp. 527–531.

5. Argialas, D.P.; Michailidou, S.; Tzotsos, A. Change detection of buildings in suburban areas from high resolution satellite data developed through object based image analysis. Surv. Rev. 2013, 45, 441–450.

6. Li, P.; Xu, H.; Guo, J. Urban building damage detection from very high resolution imagery using OCSVM and spatial features. Int. J. Remote Sens. 2010, 31, 3393–3409.

7. Tang, Y.; Huang, X.; Zhang, L. Fault-tolerant building change detection from urban high-resolution remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1060–1064.

8. Huang, X.; Zhang, L.; Zhu, T. Building change detection from multitemporal high-resolution remotely sensed images based on a morphological building index. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 105–115.

9. Murakami, H.; Nakagawa, K.; Hasegawa, H. Change detection of buildings using an airborne laser scanner. ISPRS J. Photogramm. Remote Sens. 1999, 54, 148–152.

10. Vu, T.T.; Matsuoka, M.; Yamazaki, F. LIDAR-based change detection of buildings in dense urban areas. In Proceedings of the 2004 IEEE International Geoscience and Remote Sensing Symposium (IGARSS ’04), Anchorage, AK, USA, 20–24 September 2004; Volume 5, pp. 3413–3416.

11. Jung, F. Detecting building changes from multitemporal aerial stereo pairs. ISPRS J. Photogramm. Remote Sens. 2004, 58, 187–201.

12. Teo, T.; Shih, T. Lidar-based change detection and change type determination in urban areas. Int. J. Remote Sens. 2013, 34, 968–981.

13. Rottensteiner, F. Automated updating of building data bases from digital surface models and multi-spectral images: Potential and limitations. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 265–270.

14. Hermosilla, T.; Ruiz, L.A.; Recio, J.A.; Estornell, J. Evaluation of automatic building detection approaches combining high resolution images and LiDAR data. Remote Sens. 2011, 3, 1188–1210.

15. Grigillo, D.; Kosmatin Fras, M.; Petrovič, D. Automatic extraction and building change detection from digital surface model and multispectral orthophoto. Geod. Vestn. 2011, 55, 11–27.

16. Malpica, J.A.; Alonso, M.C.; Papí, F.; Arozarena, A.; Martínez De Agirre, A. Change detection of buildings from satellite imagery and lidar data. Int. J. Remote Sens. 2013, 34, 1652–1675.

17. Tian, J.; Cui, S.; Reinartz, P. Building change detection based on satellite stereo imagery and digital surface models. IEEE Trans. Geosci. Remote Sens. 2014, 52, 406–417.

18. Knudsen, T.; Olsen, B.P. Automated change detection for updates of digital map databases. Photogramm. Eng. Remote Sens. 2003, 69, 1289–1296.

19. Bouziani, M.; Goïta, K.; He, D. Automatic change detection of buildings in urban environment from very high spatial resolution images using existing geodatabase and prior knowledge. ISPRS J. Photogramm. Remote Sens. 2010, 65, 143–153.

20. Liu, Z.; Gong, P.; Shi, P.; Chen, H.; Zhu, L.; Sasagawa, T. Automated building change detection using UltraCamD images and existing CAD data. Int. J. Remote Sens. 2010, 31, 1505–1517.

21. Matikainen, L.; Hyyppä, J.; Ahokas, E.; Markelin, L.; Kaartinen, H. Automatic detection of buildings and changes in buildings for updating of maps. Remote Sens. 2010, 2, 1217–1248.

22. Silván-Cárdenas, J.L.; Wang, L. A multi-resolution approach for filtering LiDAR altimetry data. ISPRS J. Photogramm. Remote Sens. 2006, 61, 11–22.

23. Meng, X.; Wang, L.; Silván-Cárdenas, J.L.; Currit, N. A multi-directional ground filtering algorithm for airborne LIDAR. ISPRS J. Photogramm. Remote Sens. 2009, 64, 117–124.

24. Meng, X.; Wang, L.; Currit, N. Morphology-based building detection from airborne LIDAR data. Photogramm. Eng. Remote Sens. 2009, 75, 427–442.

25. Zhang, J.; Lin, X.; Ning, X. SVM-based classification of segmented airborne LiDAR point clouds in urban areas. Remote Sens. 2013, 5, 3749–3775.

26. Axelsson, P. DEM generation from laser scanner data using adaptive TIN models. Int. Arch. Photogramm. Remote Sens. 2000, 33, 111–118.

27. Kang, X.; Liu, J.; Lin, X. Streaming progressive TIN densification filter for airborne LiDAR point clouds using multi-core architectures. Remote Sens. 2014, 6, 7212–7232.

28. Zhu, X.; Toutin, T. Land cover classification using airborne LiDAR products in Beauport, Québec, Canada. Int. J. Image Data Fusion 2013, 4, 252–271.

29. Fischler, M.A.; Bolles, R. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

30. Besl, P.J.; McKay, N.D. A method for registration of 3D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Pang, S.; Hu, X.; Wang, Z.; Lu, Y. Object-Based Analysis of Airborne LiDAR Data for Building Change Detection. Remote Sens. 2014, 6, 10733-10749. https://doi.org/10.3390/rs61110733