Spectral Difference in the Image Domain for Large Neighborhoods, a GEOBIA Pre-Processing Step for High Resolution Imagery

Abstract

1. Introduction

1.1. Contrast in Visual Interpretation

1.2. Simulating Human Vision

1.3. Adding Artificial Layers

2. Contextual Analysis for Single Pixels

2.1. Convolution

| 0 | −1 | 0 |
| −1 | 4 | −1 |
| 0 | −1 | 0 |
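As an illustration of how the 3 × 3 kernel above acts on an image, the following is a minimal sketch in Python with NumPy. It is not taken from the paper: the function name `convolve3x3` and the choice to replicate edge pixels at the image border are assumptions made for this example only.

```python
import numpy as np

# Laplacian-type high-pass kernel, copied from the 3 x 3 table above.
LAPLACIAN = np.array([[0, -1, 0],
                      [-1, 4, -1],
                      [0, -1, 0]], dtype=float)


def convolve3x3(image, kernel=LAPLACIAN):
    """Convolve a 2-D array with a 3 x 3 kernel.

    Assumption: border pixels are handled by replicating the edge values.
    """
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    padded = np.pad(image, 1, mode="edge")
    # True convolution flips the kernel; for this symmetric kernel the
    # flip changes nothing, but it keeps the function correct in general.
    flipped = kernel[::-1, ::-1]
    out = np.zeros_like(image)
    # Sum nine shifted copies of the image, each weighted by one kernel cell.
    for dr in range(3):
        for dc in range(3):
            out += flipped[dr, dc] * padded[dr:dr + rows, dc:dc + cols]
    return out
```

On a homogeneous area the kernel weights sum to zero, so the response is zero; a single pixel that differs from its four direct neighbors yields a strong response. This is the local (one-pixel radius) form of contrast.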

2.2. Example of Contrast within GEOBIA

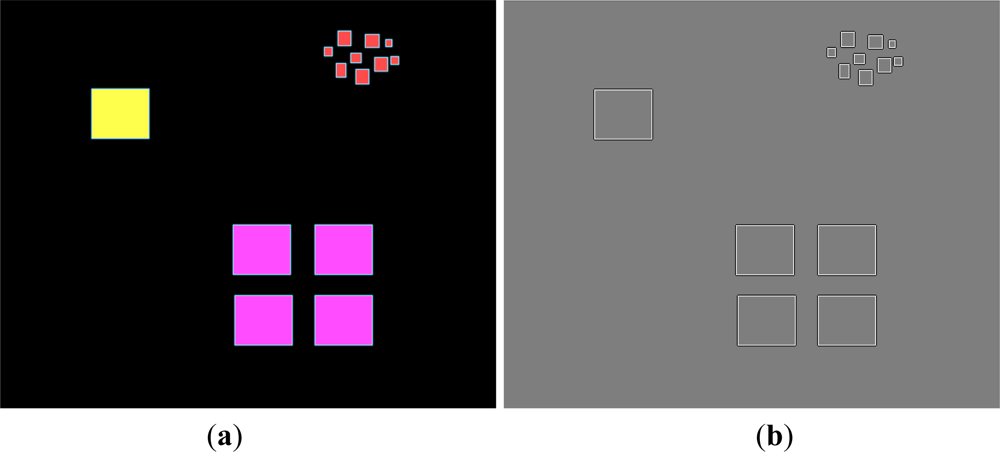

2.2.1. Mean Difference to Neighbor

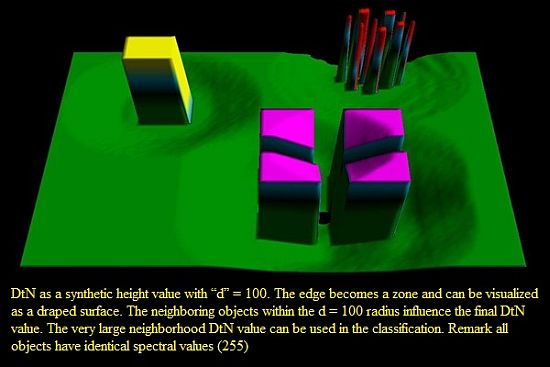

2.2.2. Enlarging the Context

2.2.3. Response to Self-Repeatability

3. Experimental Section

3.1. Homogeneous Objects

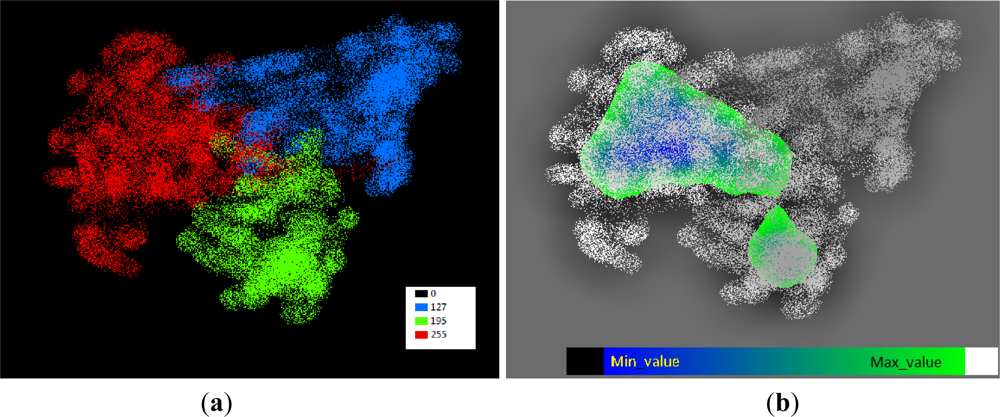

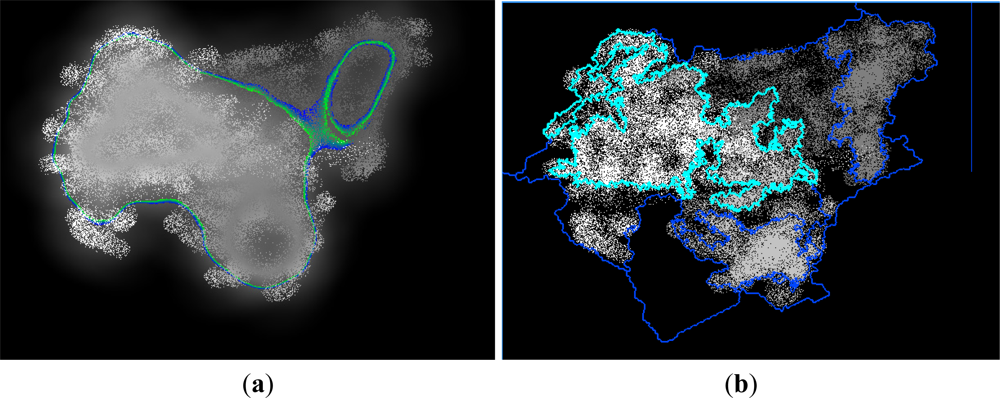

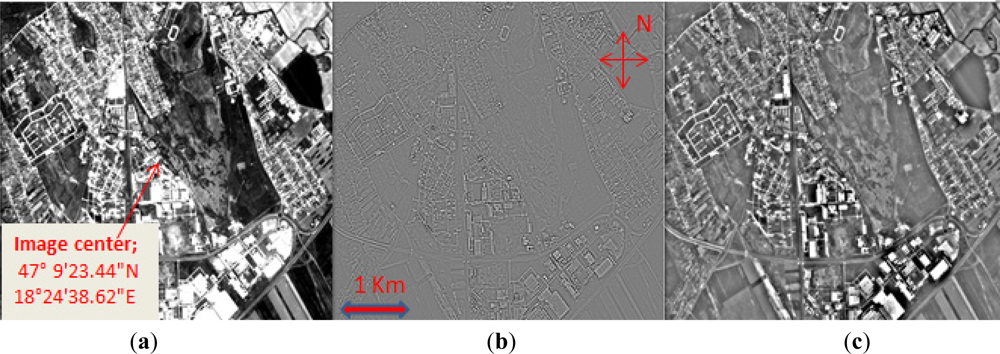

3.2. Delineation of Fuzzy Objects

3.2.1. Density Slicing of Zones

3.2.2. Comparing non-Competing Algorithms

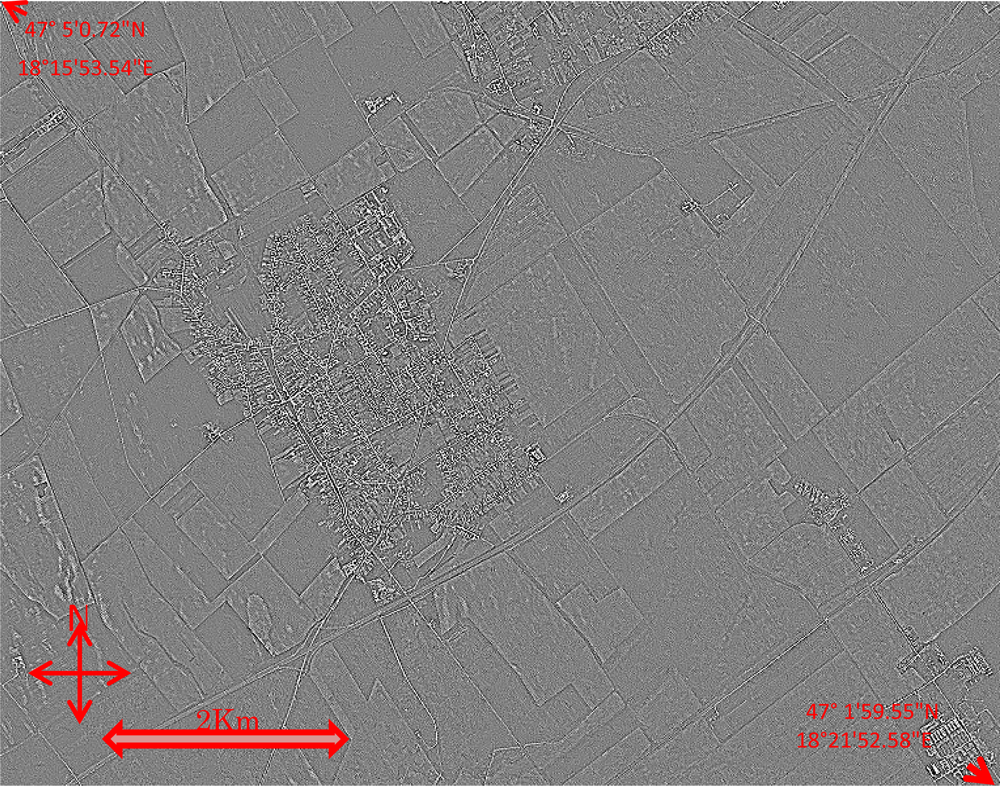

3.3. An Example with the Red Band of RapidEye

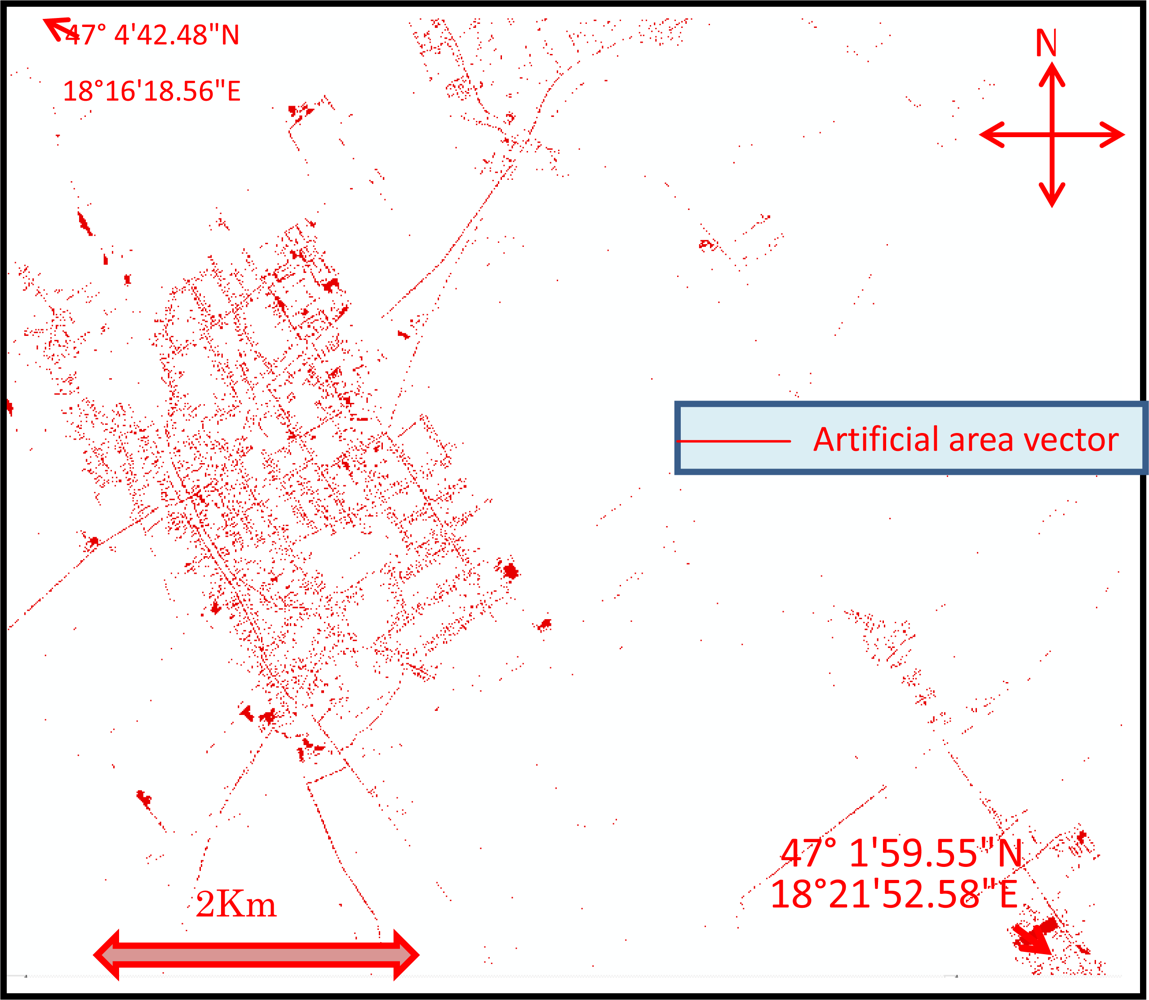

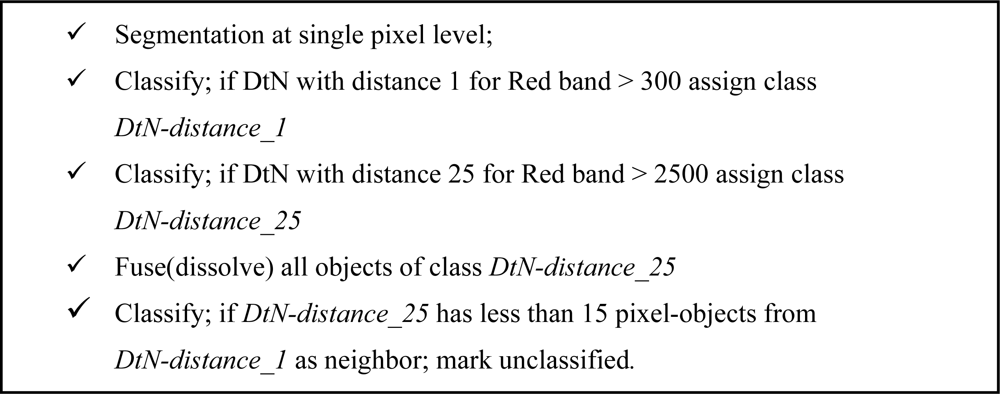

3.4. Extracting Artificial Areas

3.4.1. Visual Confirmation on GoogleEarth™

3.4.2. A Simplified Protocol

3.5. Hardware and Technical Specifications

4. Discussion

4.1. Preserving Information before GEOBIA Aggregation

- GEOBIA focuses on the classification of locally homogeneous pixel populations [1,12]. The first step in a GEOBIA analysis normally concentrates on segmenting useful image objects, each containing a local pixel population with a mean value and standard deviation. A deviation from this standard practice is suggested in order to preserve the feature attributes of single-pixel objects.

- When feature attributes are selected for GEOBIA classification, no guidance is available on the optimal setting of the search radius “d” in DtN. This paper recommends including large-neighborhood analysis in addition to the common contrast analysis on local neighborhoods.

- The radius “d” = 25 for satellite imagery with 5-m pixel size was chosen so that each calculation completes in 10 to 15 min; “d” = 50 or “d” = 100 could be useful if extensive hardware resources are available. The design of feature attributes in this respect remains a problem to be solved by experts drawing on domain knowledge.

- DtN values for single pixels with a large search radius “d” must be set explicitly as a parameter. When a large set of features is offered to an automatic feature-mining procedure, there is a risk that only the direct neighborhood (DtN with low values of “d”) is evaluated, so that the advantages of a large neighborhood for DtN are neglected.

- A single value for radius “d” is not sufficient to characterize the context of a single-pixel object; a series of values for close and far neighborhoods is required. The optimal sequence is part of ongoing research.
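To make the points above concrete, the following is a minimal sketch of a large-neighborhood difference computed per pixel for a series of radii. It is an illustration under stated assumptions, not the implementation used in the paper: DtN is taken here as the pixel value minus the mean of all other pixels in a square window of (2d + 1) × (2d + 1) pixels, clipped at the image border. The function names `mean_diff_to_neighborhood` and `dtn_stack`, and the radii 1 and 5 in the default series, are hypothetical; only “d” = 25 comes from the text. A summed-area table is used so that the cost per pixel does not grow with “d”.

```python
import numpy as np


def mean_diff_to_neighborhood(band, d):
    """Pixel value minus the mean of the other pixels within radius d.

    Assumptions (not from the paper): square window of side 2*d + 1,
    clipped at the image border, all neighbors weighted equally.
    """
    if d < 1:
        raise ValueError("d must be at least 1")
    band = np.asarray(band, dtype=float)
    rows, cols = band.shape
    # Summed-area table with a leading row and column of zeros.
    sat = np.zeros((rows + 1, cols + 1))
    sat[1:, 1:] = band.cumsum(axis=0).cumsum(axis=1)
    # Window bounds for every row and column, clipped to the image extent.
    r0 = np.clip(np.arange(rows) - d, 0, rows)
    r1 = np.clip(np.arange(rows) + d + 1, 0, rows)
    c0 = np.clip(np.arange(cols) - d, 0, cols)
    c1 = np.clip(np.arange(cols) + d + 1, 0, cols)
    window_sum = (sat[r1][:, c1] - sat[r0][:, c1]
                  - sat[r1][:, c0] + sat[r0][:, c0])
    # Number of neighbors, excluding the centre pixel itself.
    n = np.outer(r1 - r0, c1 - c0) - 1
    neighbor_mean = np.where(n > 0, (window_sum - band) / np.maximum(n, 1), band)
    return band - neighbor_mean


def dtn_stack(band, radii=(1, 5, 25)):
    """Stack DtN layers for a series of close and far neighborhoods."""
    return np.stack([mean_diff_to_neighborhood(band, d) for d in radii])
```

Under the square-window assumption, “d” = 25 corresponds to a window of 51 × 51 = 2601 pixels, or 255 m × 255 m at 5-m pixel size. Each layer of the stack can be offered as a separate artificial layer, so that the far-neighborhood contrast is evaluated explicitly instead of being left to an automatic feature selection.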

4.2. Agricultural Application with Urban Mapping Extensions

4.3. Potential for Additional Applications of DtN

5. Conclusions

Acknowledgments

References and Notes

- Blaschke, T.; Lang, S.; Hay, G.J. (Eds.) Object Based Image Analysis; Springer: Heidelberg/Berlin, Germany, 2008.

- Gorte, B. Multi-spectral quadtree based image segmentation. Int. Arch. Photogramm. Remote Sens. 1996, 31, Part B3, 251–256.

- Burt, P.J.; Adelson, E.H. The Laplacian Pyramid as a compact image code. IEEE Trans. Commun. 1983, 31, 532–540.

- Walter, V. Object-based classification of remote sensing data for change detection. ISPRS J. Photogramm. 2004, 58, 225–238.

- Marr, D. Vision; Freeman Publishers: New York, NY, USA, 1982.

- Dykes, S.G.; Zhang, X. Folding Spatial Image Filters on the CM-5. Proceedings of 9th International Parallel Processing Symposium, Santa Barbara, CA, USA, 25–28 April 1995.

- Wijaya, A.; Marpu, P.R.; Gloaguen, R. Geostatistical, Texture Classification of Tropical Rainforests in Indonesia. Proceedings of 5th International Symposium in Spatial Data Quality, Enschede, The Netherlands, 13–15 June 2007.

- Trimble/Definiens. Definiens, eCognition Developer 8.1, Reference Book, Accompany Version 8.1.0; Build 1653 x64, 17 September 2010. (Part of the software installation package).

- Horne, J.H. A Tasseled Cap Transformation for IKONOS Images. Proceedings of ASPRS 2003 Conference, Anchorage, AK, USA, 4–9 May 2003.

- Baatz, M.; Schaepe, A. Multiresolution Segmentation—An Optimization Approach for High Quality Multi-Scale Segmentation. In Angewandte Geographische Informationsverarbeitung XII; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Wichmann Verlag: Karlsruhe, Germany, 2000; pp. 12–23.

- Zhang, R.; Zhu, D. Study of land cover classification based on knowledge rules using high-resolution remote sensing images. Expert Syst. Appl. 2011, 38, 3647–3652.

- Blaschke, T.; Hay, G.J.; Weng, Q.; Resch, B. Collective sensing: Integrating geospatial technologies to understand urban systems—An overview. Remote Sens. 2011, 3, 1743–1776.

- Shorter, N.; Kasparis, T. Automatic vegetation identification and building detection from a single nadir aerial image. Remote Sens. 2009, 1, 731–757.

- de Kok, R.; Tasdemir, K. Contrast Analysis in High-Resolution Imagery for Near and Far Neighborhoods. Proceedings of GEOBIA 2012, Rio de Janeiro, Brazil, 7–9 May 2012; pp. 196–200.

- Novack, T.; Esch, T.; Kux, H.; Stilla, U. Machine learning comparison between WorldView-2 and QuickBird-2-simulated imagery regarding object-based urban land cover classification. Remote Sens. 2011, 3, 2263–2282.

- Tarantino, E.; Figorito, B. Extracting buildings from true color stereo aerial images using a decision making strategy. Remote Sens. 2011, 3, 1553–1567.

- Chidiac, H.; Ziou, D. Classification of Image Edges. Proceedings of Vision Interface ’99, Trois-Rivieres, QC, Canada, 19–21 May 1999; pp. 17–24.

- Leukert, K. Übertragbarkeit der Objektbasierten Analyse bei der Gewinnung von GIS-Daten aus Satellitenbildern Mittlerer Auflösung. University of the German Federal Armed Forces, Munich, Germany, 2005.

- Bossard, M.; Feranec, J.; Otahel, J. CORINE Land Cover Technical Guide; Addendum, EEA: Copenhagen, Denmark, 2000.

Share and Cite

De Kok, R. Spectral Difference in the Image Domain for Large Neighborhoods, a GEOBIA Pre-Processing Step for High Resolution Imagery. Remote Sens. 2012, 4, 2294-2313. https://doi.org/10.3390/rs4082294
