Mapping Slums in Mumbai, India, Using Sentinel-2 Imagery: Evaluating Composite Slum Spectral Indices (CSSIs)

Abstract

1. Introduction

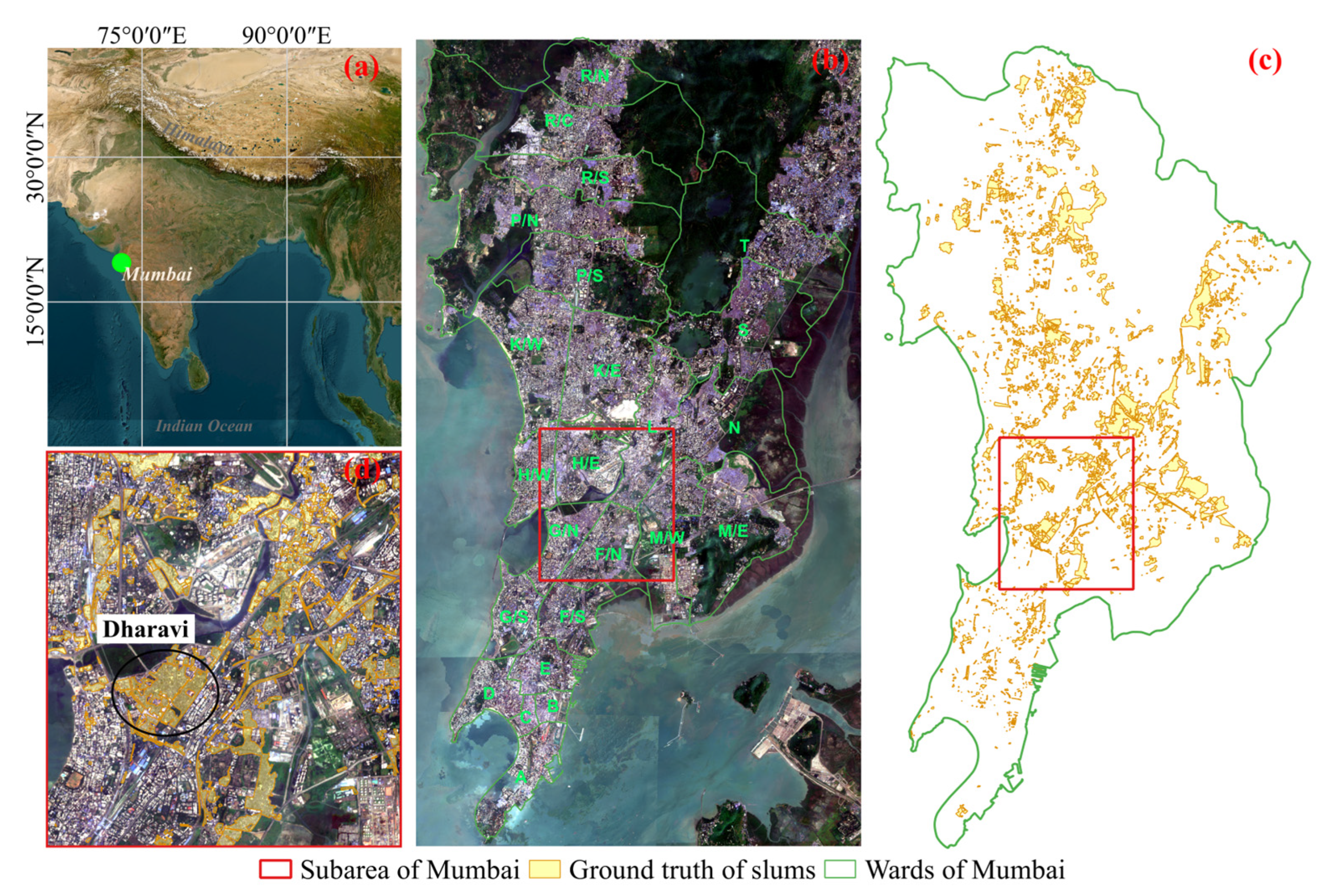

2. Study Area and Data

3. Methods

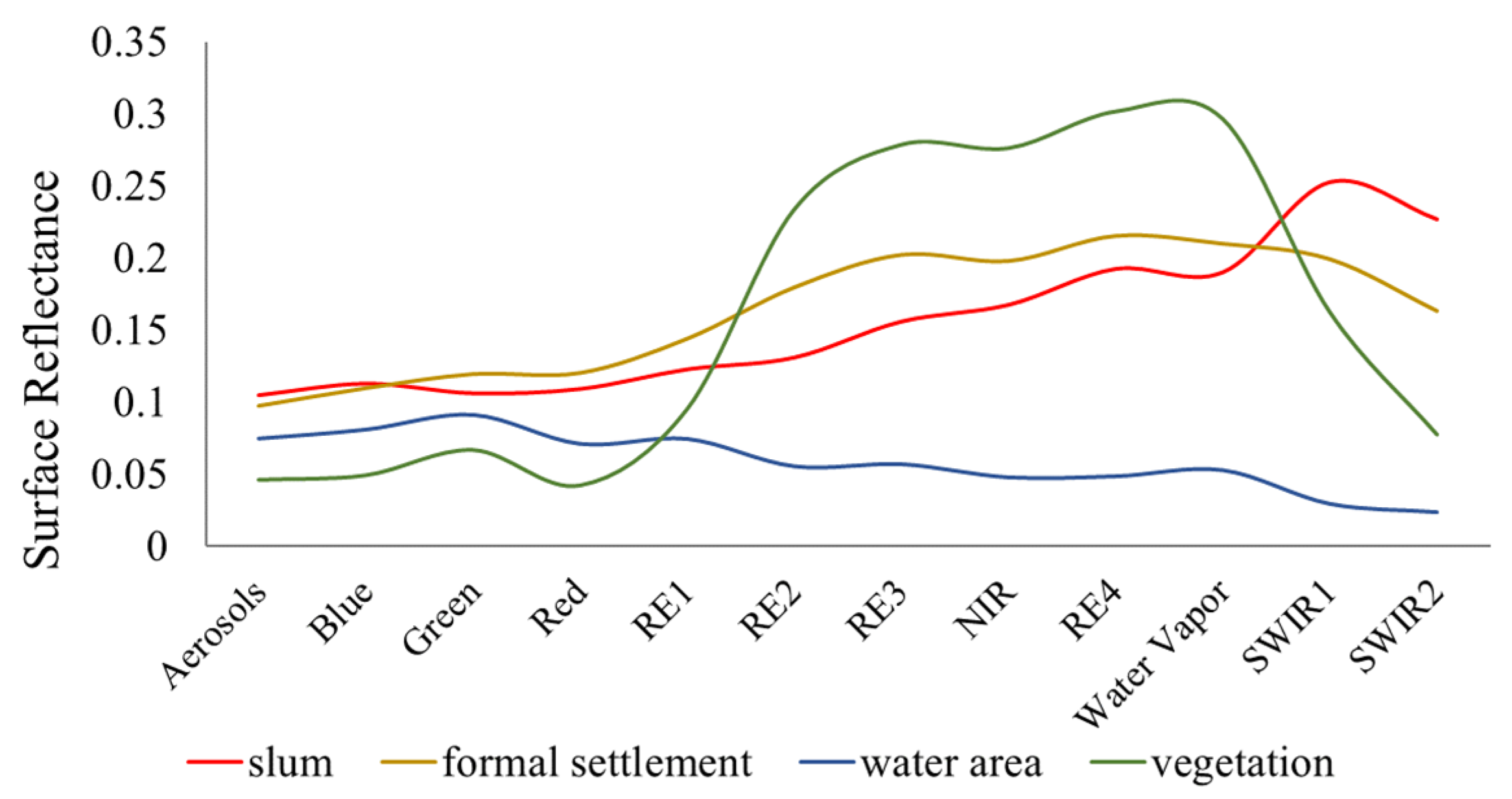

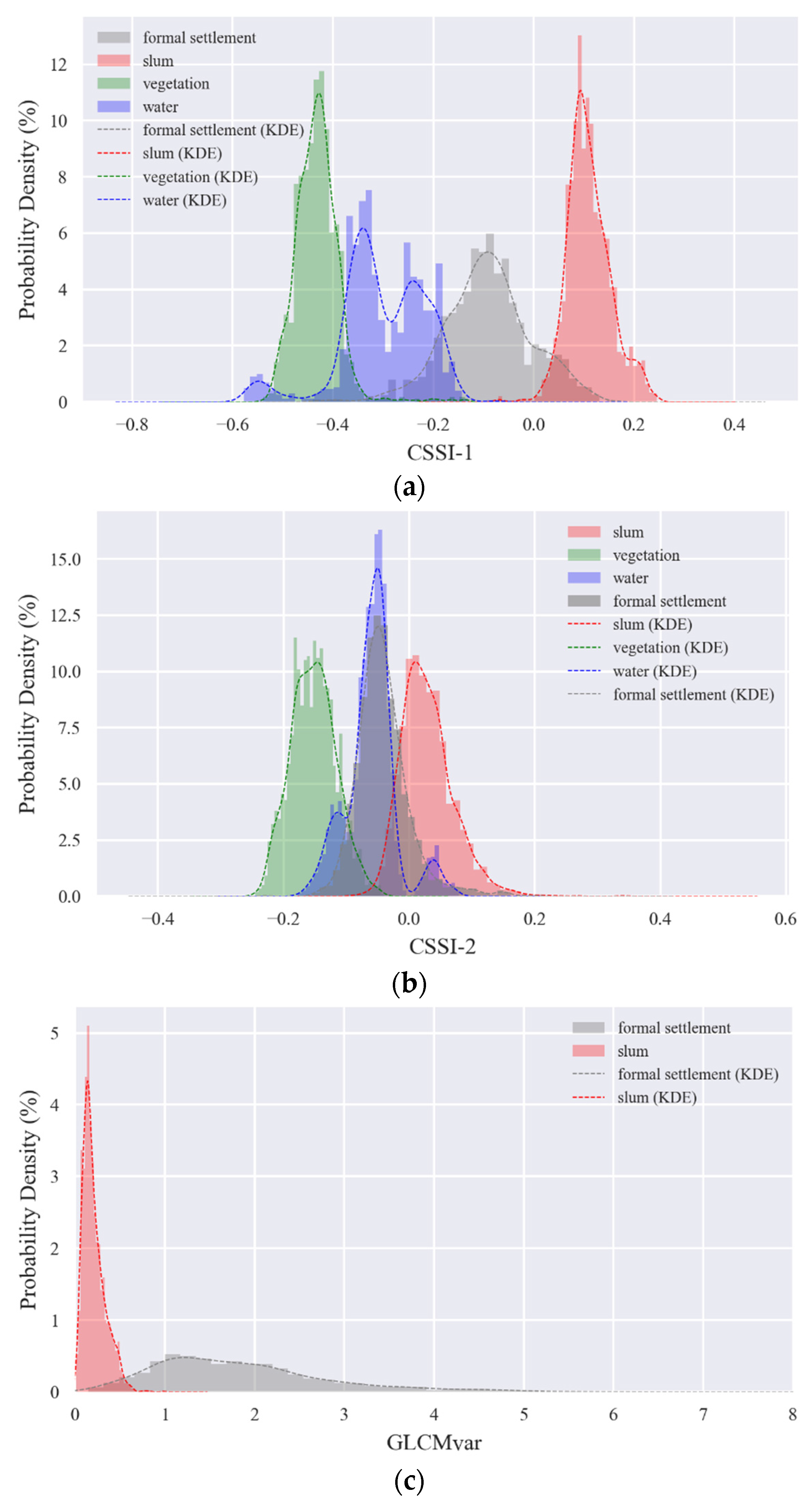

3.1. Calculation of CSSIs

3.2. Calculation of Textural Features

3.3. Approach 1: Usage of CSSIs for Threshold-Based Classification

3.4. Approach 2: Usage of CSSIs for ML-Based Classification

4. Analysis and Results

4.1. Experimental Setting

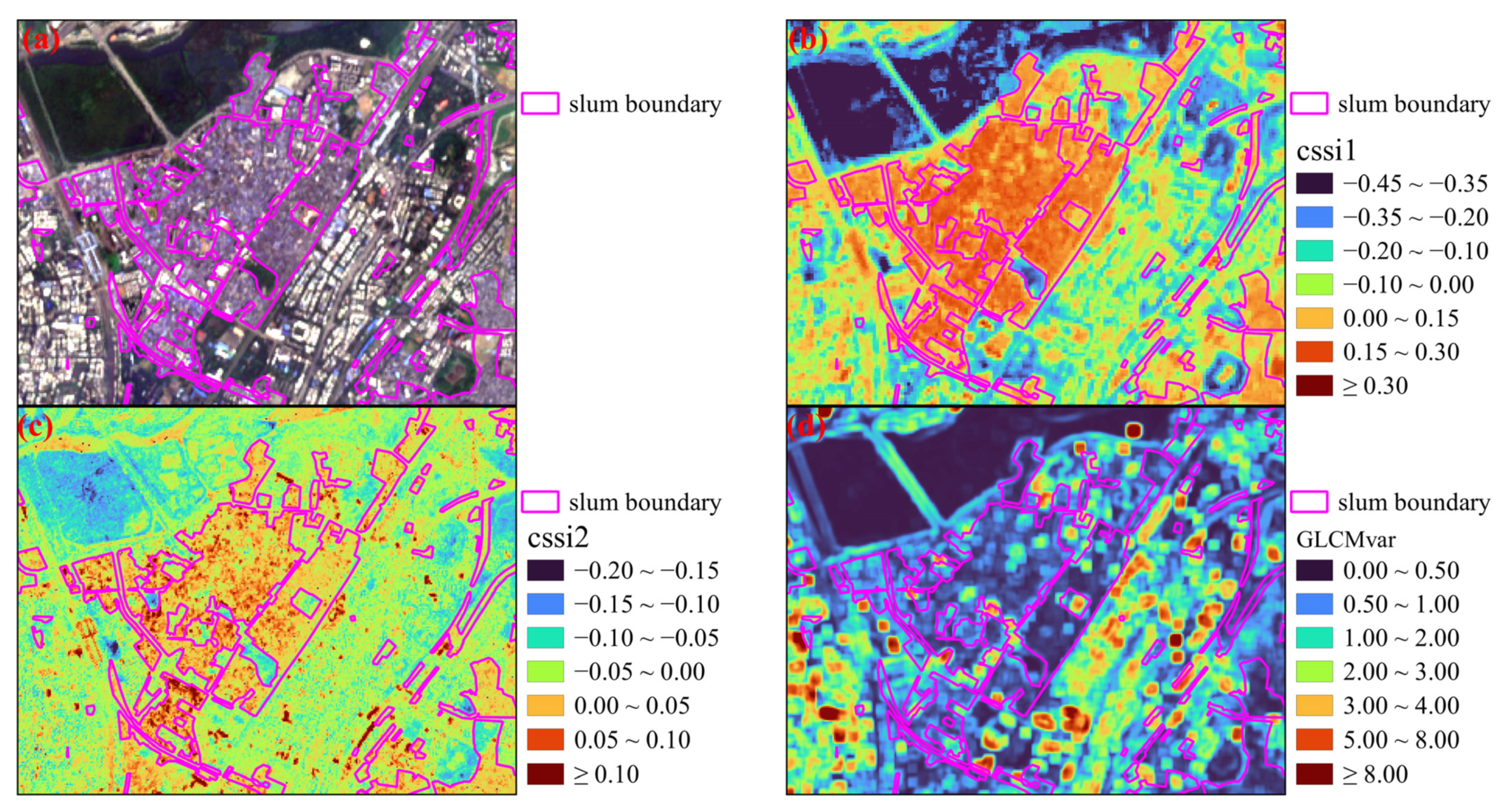

4.2. Spectral and Textural Feature Maps

4.3. Slum Mapping Results in Mumbai

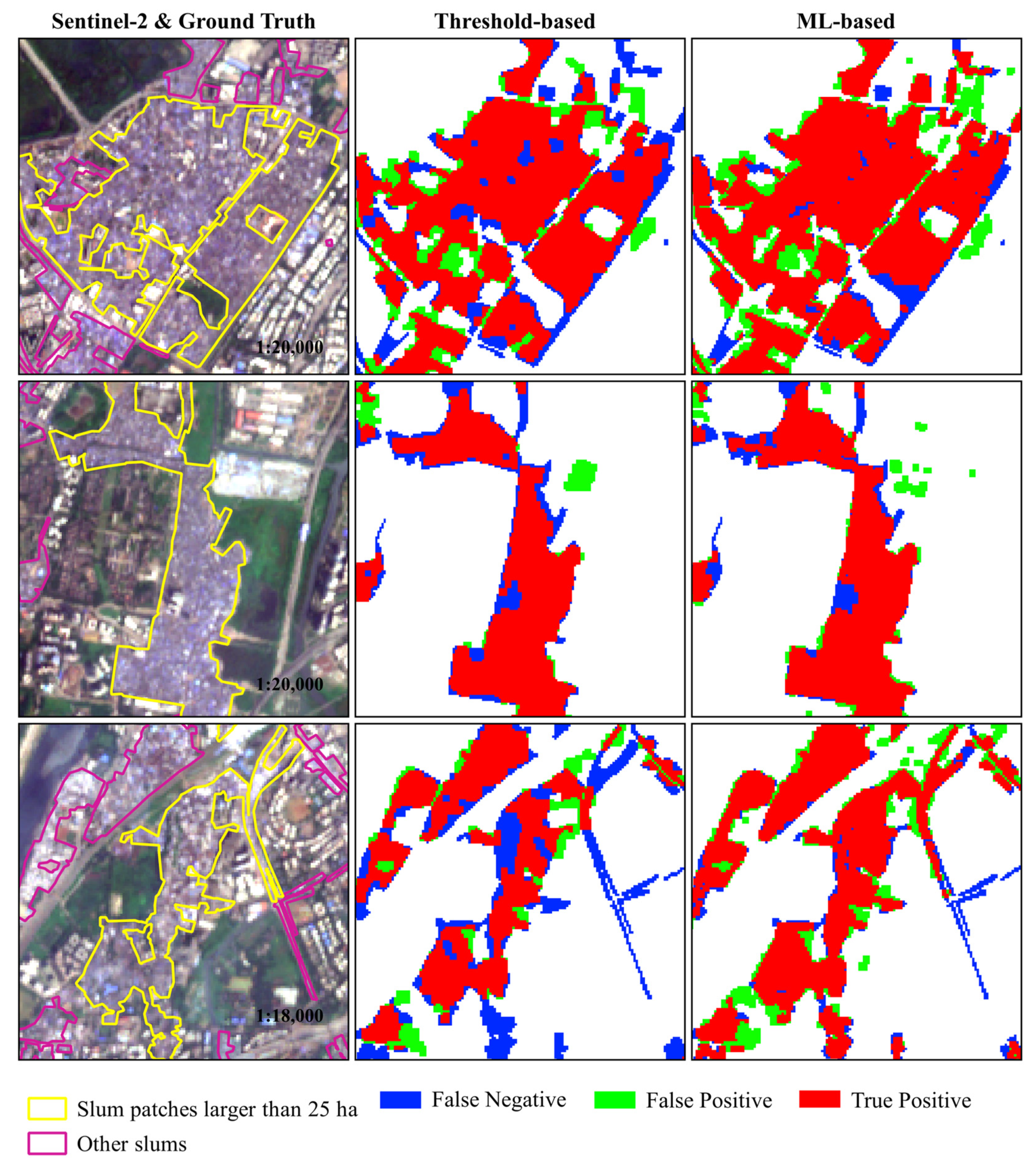

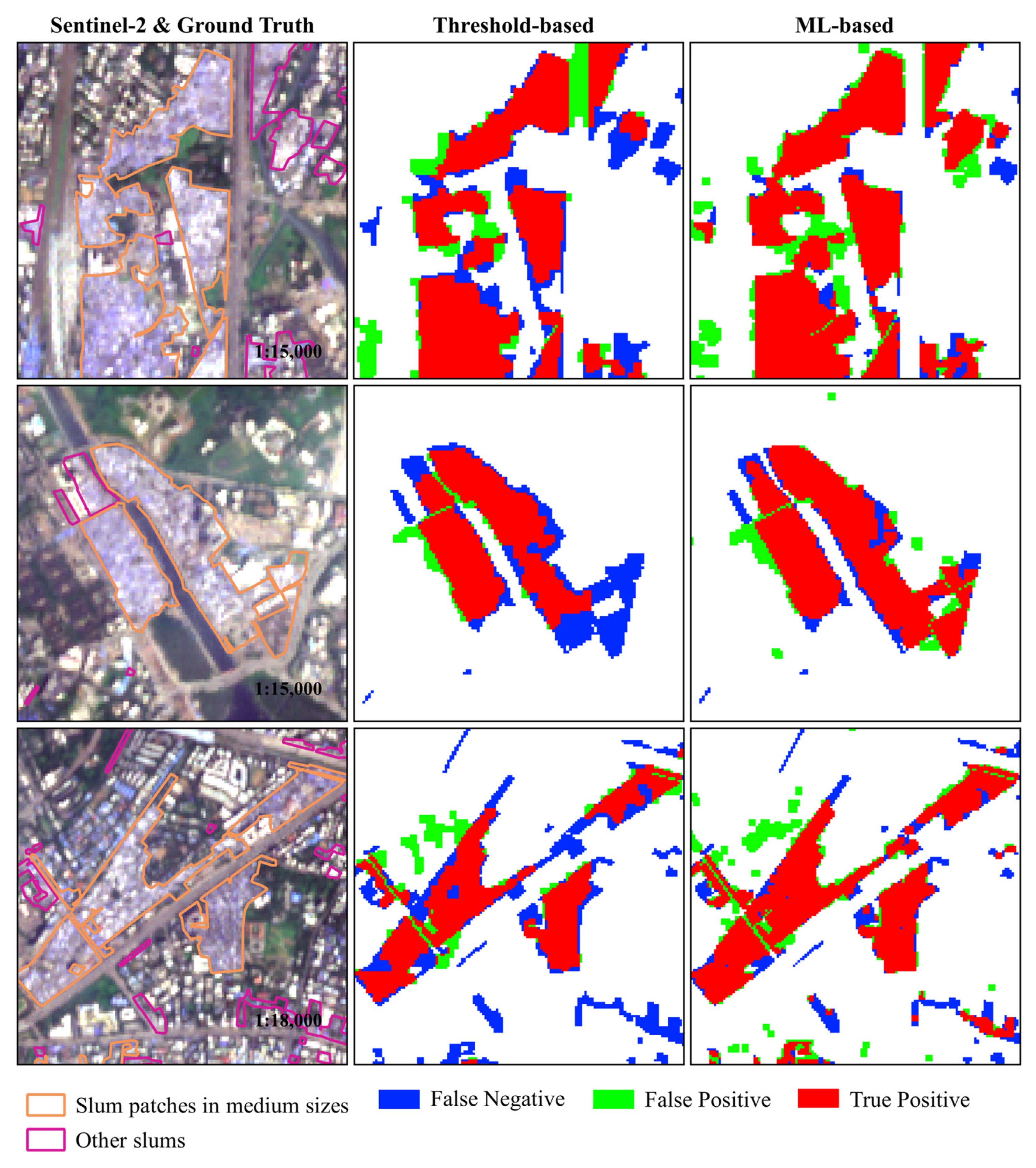

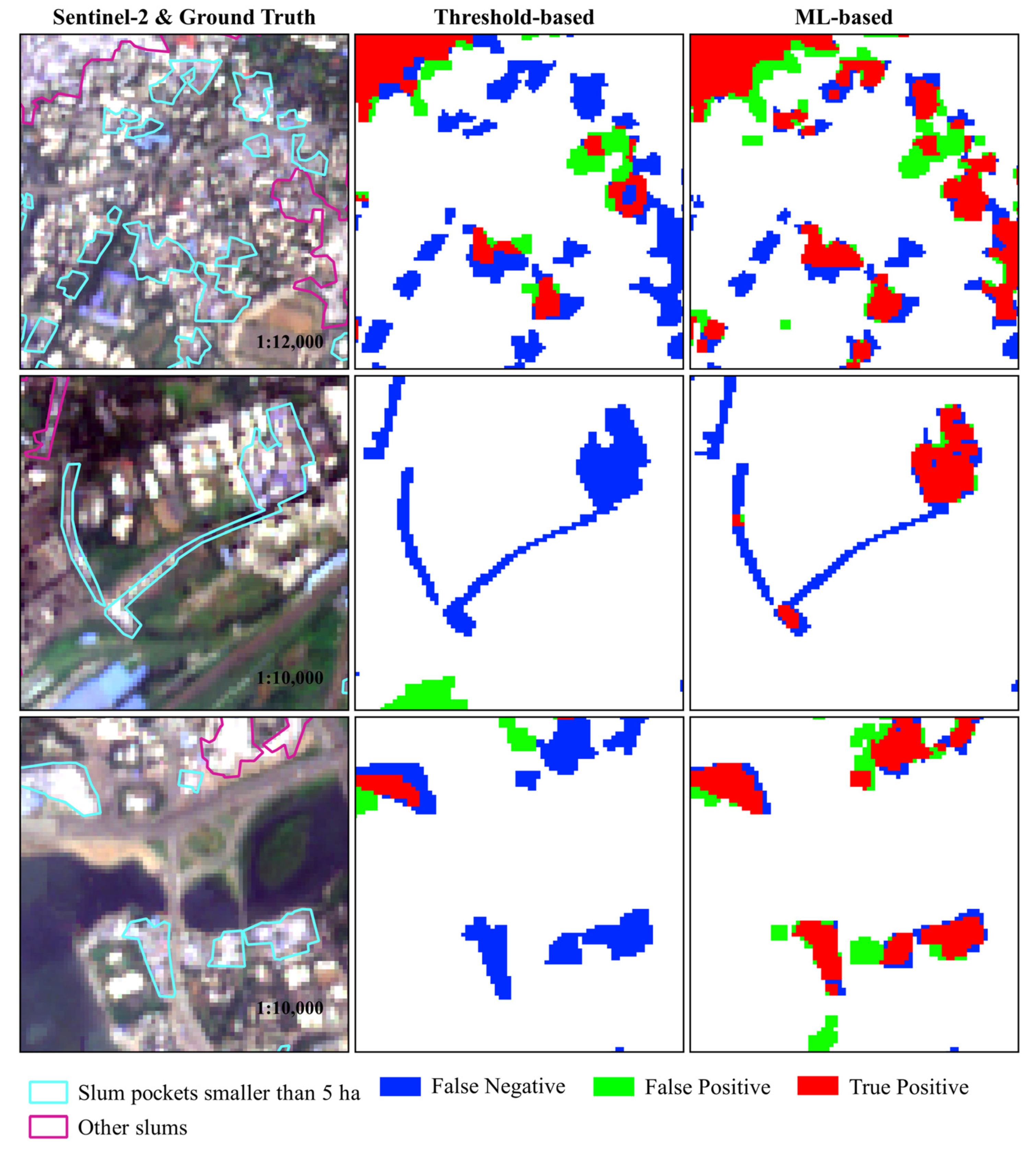

4.4. Slum Mapping Results in the Subarea of Mumbai

4.5. Results of Patch-Based Accuracy Assessment

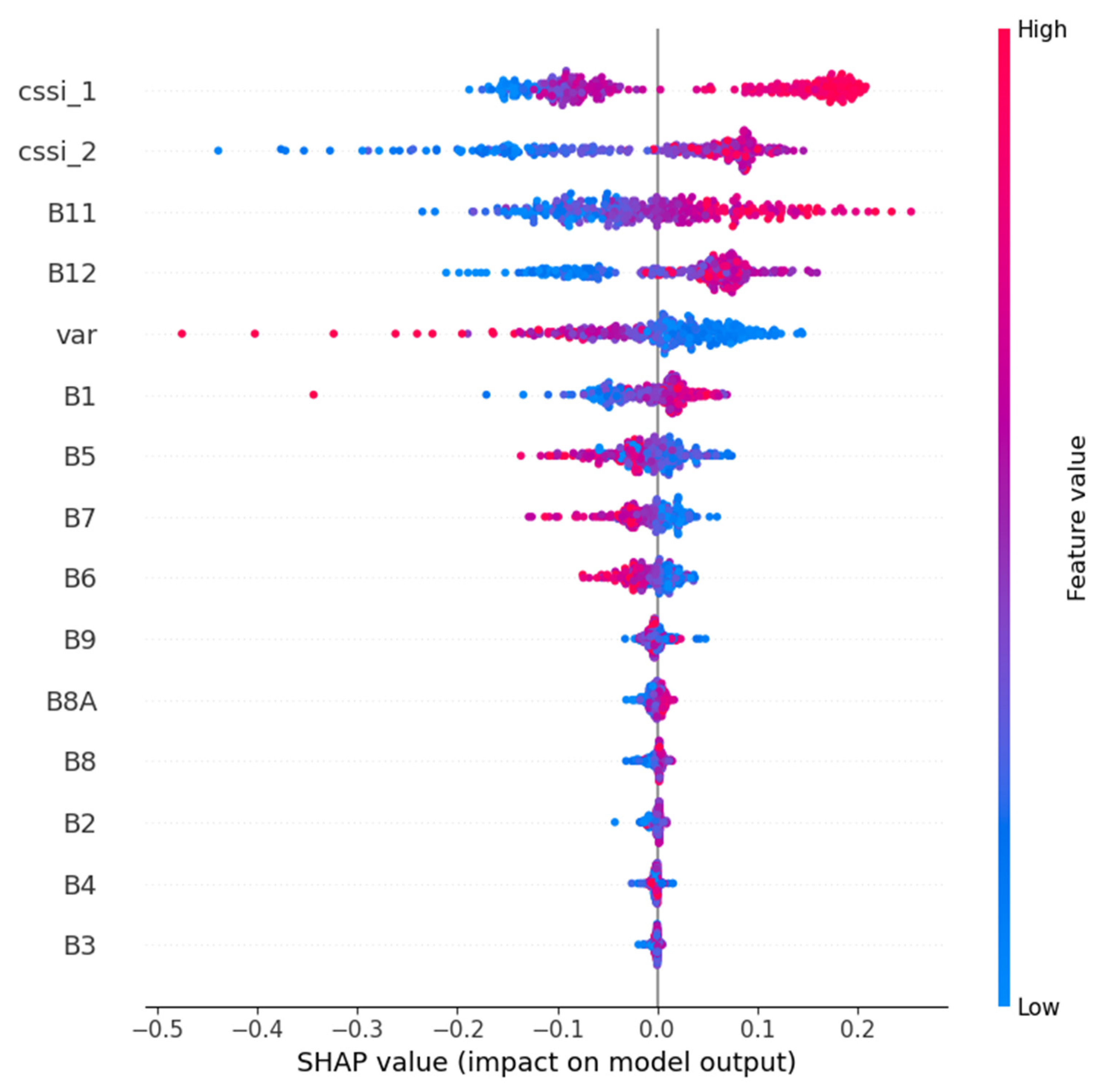

4.6. Importance of Features for Slum Mapping

5. Discussion

5.1. Performance of Our Methods with CSSIs on Slum Mapping

5.2. Generality and Limitations of Our Methods with CSSIs

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Secretary-General, U.N. Progress towards the Sustainable Development Goals: Report of the Secretary-General. 2017. Available online: https://policycommons.net/artifacts/127525/progress-towards-the-sustainable-development-goals/182695/ (accessed on 18 September 2023).

2. UN-Habitat. Slum Almanac 2015–2016: Tracking Improvement in the Lives of Slum Dwellers. Participatory Slum Upgrading Programme 2016. Available online: https://unhabitat.org/slum-almanac-2015-2016-0 (accessed on 18 September 2023).

3. UN-Habitat. The Challenge of Slums: Global Report on Human Settlements 2003. Available online: https://unhabitat.org/the-challenge-of-slums-global-report-on-human-settlements-2003 (accessed on 18 September 2023).

4. Tjia, D.; Coetzee, S. Geospatial Information Needs for Informal Settlement Upgrading—A Review. Habitat Int. 2022, 122, 102531.

5. Thomson, D.R.; Stevens, F.R.; Chen, R.; Yetman, G.; Sorichetta, A.; Gaughan, A.E. Improving the Accuracy of Gridded Population Estimates in Cities and Slums to Monitor SDG 11: Evidence from a Simulation Study in Namibia. Land Use Policy 2022, 123, 106392.

6. Daneshyar, E.; Keynoush, S. Developing Adaptive Curriculum for Slum Upgrade Projects: The Fourth Year Undergraduate Program Experience. Sustainability 2023, 15, 4877.

7. Leonita, G.; Kuffer, M.; Sliuzas, R.; Persello, C. Machine Learning-Based Slum Mapping in Support of Slum Upgrading Programs: The Case of Bandung City, Indonesia. Remote Sens. 2018, 10, 1522.

8. Rehman, M.F.U.; Aftab, I.; Sultani, W.; Ali, M. Mapping Temporary Slums From Satellite Imagery Using a Semi-Supervised Approach. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

9. Prabhu, R.; Parvathavarthini, B. Morphological Slum Index for Slum Extraction from High-Resolution Remote Sensing Imagery over Urban Areas. Geocarto Int. 2022, 37, 13904–13922.

10. Luo, E.; Kuffer, M.; Wang, J. Urban Poverty Maps-From Characterising Deprivation Using Geo-Spatial Data to Capturing Deprivation from Space. Sustain. Cities Soc. 2022, 84, 104033.

11. Trento Oliveira, L.; Kuffer, M.; Schwarz, N.; Pedrassoli, J.C. Capturing Deprived Areas Using Unsupervised Machine Learning and Open Data: A Case Study in São Paulo, Brazil. Eur. J. Remote Sens. 2023, 56, 2214690.

12. Alrasheedi, K.G.; Dewan, A.; El-Mowafy, A. Using Local Knowledge and Remote Sensing in the Identification of Informal Settlements in Riyadh City, Saudi Arabia. Remote Sens. 2023, 15, 3895.

13. Dabra, A.; Kumar, V. Evaluating Green Cover and Open Spaces in Informal Settlements of Mumbai Using Deep Learning. Neural Comput. Appl. 2023, 35, 11773–11788.

14. Prabhu, R.; Alagu Raja, R.A. Urban Slum Detection Approaches from High-Resolution Satellite Data Using Statistical and Spectral Based Approaches. J. Indian Soc. Remote Sens. 2018, 46, 2033–2044.

15. Owen, K.K.; Wong, D.W. An Approach to Differentiate Informal Settlements Using Spectral, Texture, Geomorphology and Road Accessibility Metrics. Appl. Geogr. 2013, 38, 107–118.

16. Kuffer, M.; Thomson, D.R.; Boo, G.; Mahabir, R.; Grippa, T.; Vanhuysse, S.; Engstrom, R.; Ndugwa, R.; Makau, J.; Darin, E. The Role of Earth Observation in an Integrated Deprived Area Mapping “System” for Low-to-Middle Income Countries. Remote Sens. 2020, 12, 982.

17. Olivatto, T.F.; Inguaggiato, F.F.; Stanganini, F.N. Urban Mapping and Impacts Assessment in a Brazilian Irregular Settlement Using UAV-Based Imaging. Remote Sens. Appl. Soc. Environ. 2023, 29, 100911.

18. Ajami, A.; Kuffer, M.; Persello, C.; Pfeffer, K. Identifying a Slums’ Degree of Deprivation from VHR Images Using Convolutional Neural Networks. Remote Sens. 2019, 11, 1282.

19. Chan, C.Y.-C.; Weigand, M.; Alnajar, E.A.; Taubenböck, H. Investigating the Capability of UAV Imagery for AI-Assisted Mapping of Refugee Camps in East Africa. Proc. Acad. Track State Map 2022, 2022, 45–48.

20. Kit, O.; Lüdeke, M.; Reckien, D. Texture-Based Identification of Urban Slums in Hyderabad, India Using Remote Sensing Data. Appl. Geogr. 2012, 32, 660–667.

21. Kit, O.; Lüdeke, M. Automated Detection of Slum Area Change in Hyderabad, India Using Multitemporal Satellite Imagery. ISPRS J. Photogramm. Remote Sens. 2013, 83, 130–137.

22. Kohli, D.; Sliuzas, R.; Stein, A. Urban Slum Detection Using Texture and Spatial Metrics Derived from Satellite Imagery. J. Spat. Sci. 2016, 61, 405–426.

23. Kuffer, M.; Pfeffer, K.; Sliuzas, R.; Baud, I. Extraction of Slum Areas from VHR Imagery Using GLCM Variance. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1830–1840.

24. Hofmann, P. Detecting Informal Settlements from IKONOS Image Data Using Methods of Object Oriented Image Analysis-an Example from Cape Town (South Africa). In Remote Sensing of Urban Areas/Fernerkundung in urbanen Räumen; Jürgens, C., Ed.; Institut für Geographie an der Universität Regensburg: Regensburg, Germany, 2001; pp. 41–42.

25. Rhinane, H.; Hilali, A.; Berrada, A.; Hakdaoui, M. Detecting Slums from SPOT Data in Casablanca Morocco Using an Object Based Approach. J. Geogr. Inf. Syst. 2011, 3, 217.

26. Khelifa, D.; Mimoun, M. Object-Based Image Analysis and Data Mining for Building Ontology of Informal Urban Settlements. In Proceedings of the Image and Signal Processing for Remote Sensing XVIII, Edinburgh, UK, 24–27 September 2012; SPIE: Edinburgh, UK, 2012; Volume 8537, pp. 414–426.

27. Kuffer, M.; Pfeffer, K.; Sliuzas, R.; Baud, I.; Van Maarseveen, M. Capturing the Diversity of Deprived Areas with Image-Based Features: The Case of Mumbai. Remote Sens. 2017, 9, 384.

28. Duque, J.C.; Patino, J.E.; Betancourt, A. Exploring the Potential of Machine Learning for Automatic Slum Identification from VHR Imagery. Remote Sens. 2017, 9, 895.

29. Matarira, D.; Mutanga, O.; Naidu, M. Google Earth Engine for Informal Settlement Mapping: A Random Forest Classification Using Spectral and Textural Information. Remote Sens. 2022, 14, 5130.

30. Li, Y.; Huang, X.; Liu, H. Unsupervised Deep Feature Learning for Urban Village Detection from High-Resolution Remote Sensing Images. Photogramm. Eng. Remote Sens. 2017, 83, 567–579.

31. Prabhu, R.; Parvathavarthini, B.; Alaguraja, A.R. Integration of Deep Convolutional Neural Networks and Mathematical Morphology-Based Postclassification Framework for Urban Slum Mapping. J. Appl. Remote Sens. 2021, 15, 014515.

32. Li, Z.; Xie, Y.; Jia, X.; Stuart, K.; Delaire, C.; Skakun, S. Point-to-Region Co-Learning for Poverty Mapping at High Resolution Using Satellite Imagery. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 8–10 August 2023; Volume 37, pp. 14321–14328.

33. Williams, T.K.-A.; Wei, T.; Zhu, X. Mapping Urban Slum Settlements Using Very High-Resolution Imagery and Land Boundary Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 13, 166–177.

34. Huang, Y.; Wang, Y.; Li, Z.; Li, Z.; Yang, G. Simultaneous Update of High-Resolution Land-Cover Mapping Attempt: Wuhan and the Surrounding Satellite Cities Cartography Using L2HNet. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2492–2503.

35. Wang, P.; Wang, L.; Leung, H.; Zhang, G. Super-Resolution Mapping Based on Spatial–Spectral Correlation for Spectral Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2256–2268.

36. Verma, D.; Jana, A.; Ramamritham, K. Transfer Learning Approach to Map Urban Slums Using High and Medium Resolution Satellite Imagery. Habitat Int. 2019, 88, 101981.

37. Dufitimana, E.; Niyonzima, T. Leveraging the Potential of Convolutional Neural Network and Satellite Images to Map Informal Settlements in Urban Settings of the City of Kigali, Rwanda. Rwanda J. Eng. Sci. Technol. Environ. 2023, 5, 1–22.

38. United Nations. The World’s Cities in 2018. Department of Economic and Social Affairs, Population Division, World Urbanization Prospects. 2018, pp. 1–34. Available online: https://www.un.org/en/development/desa/population/publications/pdf/urbanization/the_worlds_cities_in_2018_data_booklet.pdf (accessed on 18 September 2023).

39. Fisher, T.; Gibson, H.; Liu, Y.; Abdar, M.; Posa, M.; Salimi-Khorshidi, G.; Hassaine, A.; Cai, Y.; Rahimi, K.; Mamouei, M. Uncertainty-Aware Interpretable Deep Learning for Slum Mapping and Monitoring. Remote Sens. 2022, 14, 3072.

40. Owusu, M.; Kuffer, M.; Belgiu, M.; Grippa, T.; Lennert, M.; Georganos, S.; Vanhuysse, S. Towards User-Driven Earth Observation-Based Slum Mapping. Comput. Environ. Urban Syst. 2021, 89, 101681.

41. Wurm, M.; Stark, T.; Zhu, X.X.; Weigand, M.; Taubenböck, H. Semantic Segmentation of Slums in Satellite Images Using Transfer Learning on Fully Convolutional Neural Networks. ISPRS J. Photogramm. Remote Sens. 2019, 150, 59–69.

42. Elmore, A.J.; Mustard, J.F.; Manning, S.J.; Lobell, D.B. Quantifying Vegetation Change in Semiarid Environments: Precision and Accuracy of Spectral Mixture Analysis and the Normalized Difference Vegetation Index. Remote Sens. Environ. 2000, 73, 87–102.

43. Jitt-Aer, K.; Miyazaki, H. Urban Classification Based on Sentinel-2 Satellite Data for Slum Identification. In Proceedings of the 7th TICC International Conference, Tainan, Taiwan, 28–30 June 2023; pp. 95–104.

44. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-Scale Geospatial Analysis for Everyone. Remote Sens. Environ. 2017, 202, 18–27.

45. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 6, 610–621.

46. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

47. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

48. Belgiu, M.; Drăguţ, L. Random Forest in Remote Sensing: A Review of Applications and Future Directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

49. Nohara, Y.; Matsumoto, K.; Soejima, H.; Nakashima, N. Explanation of Machine Learning Models Using Improved Shapley Additive Explanation. In Proceedings of the 10th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Niagara Falls, NY, USA, 7–10 September 2019; p. 546.

50. Descals, A.; Verger, A.; Yin, G.; Filella, I.; Peñuelas, J. Local Interpretation of Machine Learning Models in Remote Sensing with SHAP: The Case of Global Climate Constraints on Photosynthesis Phenology. Int. J. Remote Sens. 2023, 44, 3160–3173.

51. Brenning, A. Interpreting Machine-Learning Models in Transformed Feature Space with an Application to Remote-Sensing Classification. Mach. Learn. 2023, 112, 3455–3471.

52. Gram-Hansen, B.J.; Helber, P.; Varatharajan, I.; Azam, F.; Coca-Castro, A.; Kopackova, V.; Bilinski, P. Mapping Informal Settlements in Developing Countries Using Machine Learning and Low Resolution Multi-Spectral Data. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, Honolulu, HI, USA, 27–28 January 2019; pp. 361–368.

53. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

54. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

55. Rossi, C.; Gholizadeh, H. Uncovering the Hidden: Leveraging Sub-Pixel Spectral Diversity to Estimate Plant Diversity from Space. Remote Sens. Environ. 2023, 296, 113734.

56. Li, W.; Cao, D.; Peng, Y.; Yang, C. MSNet: A Multi-Stream Fusion Network for Remote Sensing Spatiotemporal Fusion Based on Transformer and Convolution. Remote Sens. 2021, 13, 3724.

57. Liu, S.; Zhou, J.; Qiu, Y.; Chen, J.; Zhu, X.; Chen, H. The FIRST Model: Spatiotemporal Fusion Incorrporting Spectral Autocorrelation. Remote Sens. Environ. 2022, 279, 113111.

58. Chen, S.; Wang, J.; Gong, P. ROBOT: A Spatiotemporal Fusion Model toward Seamless Data Cube for Global Remote Sensing Applications. Remote Sens. Environ. 2023, 294, 113616.

59. Helber, P.; Gram-Hansen, B.; Varatharajan, I.; Azam, F.; Coca-Castro, A.; Kopackova, V.; Bilinski, P. Generating Material Maps to Map Informal Settlements. arXiv 2018, arXiv:1812.00786.

60. Kotthaus, S.; Smith, T.E.; Wooster, M.J.; Grimmond, C.S.B. Derivation of an Urban Materials Spectral Library through Emittance and Reflectance Spectroscopy. ISPRS J. Photogramm. Remote Sens. 2014, 94, 194–212.

61. Najmi, A.; Gevaert, C.M.; Kohli, D.; Kuffer, M.; Pratomo, J. Integrating Remote Sensing and Street View Imagery for Mapping Slums. ISPRS Int. J. Geo-Inf. 2022, 11, 631.

62. MacTavish, R.; Bixby, H.; Cavanaugh, A.; Agyei-Mensah, S.; Bawah, A.; Owusu, G.; Ezzati, M.; Arku, R.; Robinson, B.; Schmidt, A.M. Identifying Deprived “Slum” Neighbourhoods in the Greater Accra Metropolitan Area of Ghana Using Census and Remote Sensing Data. World Dev. 2023, 167, 106253.

63. Li, C.; Yu, L.; Hong, J. Monitoring Slum and Urban Deprived Area in Sub-Saharan Africa Using Geospatial and Socio-Economic Data; Copernicus Meetings. 2023. Available online: https://meetingorganizer.copernicus.org/EGU23/EGU23-10872.html (accessed on 18 September 2023).

| Band Name | Wavelength (nm) | Spatial Resolution (m) |
|---|---|---|
| Aerosols | 442.3 | 60 |
| Blue | 492.1 | 10 |
| Green | 559 | 10 |
| Red | 665 | 10 |
| Red Edge1 | 703.8 | 20 |
| Red Edge2 | 739.1 | 20 |
| Red Edge3 | 779.7 | 20 |
| NIR | 833 | 10 |
| Red Edge4 | 864 | 20 |
| Water vapor | 943.2 | 60 |
| SWIR1 | 1610.4 | 20 |
| SWIR2 | 2185.7 | 20 |
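The band table mixes 10 m, 20 m, and 60 m resolutions, so any per-pixel combination of bands needs them on a common grid first. This section does not say how the bands were resampled; the sketch below assumes nearest-neighbour replication onto the 10 m grid purely for illustration. The names `BAND_RESOLUTION_M`, `TARGET_RES_M`, and `upsample_nearest` are hypothetical, and plain Python lists are used only to keep the example dependency-free.

```python
# Native spatial resolution (m) per band, copied from the band table above.
BAND_RESOLUTION_M = {
    "Aerosols": 60, "Blue": 10, "Green": 10, "Red": 10,
    "Red Edge1": 20, "Red Edge2": 20, "Red Edge3": 20, "NIR": 10,
    "Red Edge4": 20, "Water vapor": 60, "SWIR1": 20, "SWIR2": 20,
}

TARGET_RES_M = 10  # finest resolution listed in the table


def upsample_nearest(band, native_res_m):
    """Replicate every pixel so a coarser band lies on the 10 m grid.

    `band` is a list of rows (lists of numbers). Nearest-neighbour
    replication is an assumption of this sketch, not the paper's method.
    """
    if native_res_m % TARGET_RES_M != 0:
        raise ValueError("native resolution must be a multiple of 10 m")
    factor = native_res_m // TARGET_RES_M
    return [
        [value for value in row for _ in range(factor)]  # widen each row
        for row in band
        for _ in range(factor)  # repeat each widened row
    ]


# Toy 2x2 SWIR1 tile at 20 m becomes a 4x4 tile on the 10 m grid.
swir1_20m = [[0.10, 0.20], [0.30, 0.40]]
swir1_10m = upsample_nearest(swir1_20m, BAND_RESOLUTION_M["SWIR1"])
print(len(swir1_10m), len(swir1_10m[0]))
```

Bands already at 10 m pass through unchanged (factor 1), while the 60 m bands are replicated sixfold in each direction.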

| Imagery | Methods | IoU (%) | P (%) | R (%) |
|---|---|---|---|---|
| Sentinel-2 (10 m) | Ours (Threshold-based) | 43.89 | 63.86 | 58.38 |
| Sentinel-2 (10 m) | Ours (ML-based) | 54.45 | 61.56 | 82.50 |
| Sentinel-2 (10 m) | CNN + TL (Verma’s [36]) | 43.20 | - | - |
| Sentinel-2 (10 m) | CCF (Gram-Hansen’s [52]) | 40.30 | - | - |
| Pleiades (0.5 m) | CNN (Verma’s [36]) | 58.30 | - | - |
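The IoU, P, and R columns are not independent when all three come from the same pixel-level confusion counts. With IoU = TP/(TP + FP + FN), P = TP/(TP + FP), and R = TP/(TP + FN), it follows that IoU = P·R/(P + R − P·R). The sketch below assumes these standard single-class definitions (the metric formulas are not reproduced in this section) and checks the two "Ours" rows of the table above against that identity. The function name `iou_from_pr` is illustrative.

```python
def iou_from_pr(precision: float, recall: float) -> float:
    """IoU implied by precision and recall, all as fractions in [0, 1]."""
    product = precision * recall
    denominator = precision + recall - product
    if denominator == 0.0:
        return 0.0  # no true positives, predictions, or reference pixels
    return product / denominator


# (reported IoU %, P %, R %) copied from the table above.
rows = {
    "Ours (Threshold-based)": (43.89, 63.86, 58.38),
    "Ours (ML-based)": (54.45, 61.56, 82.50),
}

for method, (reported_iou, p, r) in rows.items():
    implied_iou = 100.0 * iou_from_pr(p / 100.0, r / 100.0)
    print(f"{method}: reported {reported_iou:.2f}, implied {implied_iou:.2f}")
```

Under these assumptions the implied and reported values should agree to within the rounding of the two-decimal inputs. Rows that report only IoU cannot be checked this way.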

| Imagery | Methods | IoU (%) | P (%) | R (%) |
|---|---|---|---|---|
| Sentinel-2 (10 m) | Ours (Threshold-based) | 46.90 | 70.05 | 58.66 |
| Sentinel-2 (10 m) | Ours (ML-based) | 60.51 | 71.18 | 80.13 |
| Sentinel-2 (10 m) | FCN (Wurm’s [41]) | 35.51 | 78.82 | 38.21 |
| Sentinel-2 (10 m) | FCN-TL (Wurm’s [41]) | 51.23 | 85.25 | 55.47 |
| Quickbird (0.5 m) | FCN (Wurm’s [41]) | 77.02 | 88.39 | 85.07 |

| Imagery | Method | Small Slums (<5 ha) | Medium Slums (5–25 ha) | Large Slums (≥25 ha) |
|---|---|---|---|---|
| Sentinel-2 (10 m) | Ours (Threshold-based) | 39.67 | 63.97 | 77.21 |
| Sentinel-2 (10 m) | Ours (ML-based) | 62.53 | 84.83 | 90.45 |
| Sentinel-2 (10 m) | FCN (Wurm’s [41]) | 9.32 | 28.19 | 47.18 |
| Sentinel-2 (10 m) | FCN-TL (Wurm’s [41]) | 24.67 | 50.64 | 62.46 |
| Quickbird (0.5 m) | FCN (Wurm’s [41]) | 78.57 | 83.63 | 88.39 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, F.; Lu, W.; Hu, Y.; Jiang, L. Mapping Slums in Mumbai, India, Using Sentinel-2 Imagery: Evaluating Composite Slum Spectral Indices (CSSIs). Remote Sens. 2023, 15, 4671. https://doi.org/10.3390/rs15194671
