SiamCAF: Complementary Attention Fusion-Based Siamese Network for RGBT Tracking

Abstract

1. Introduction

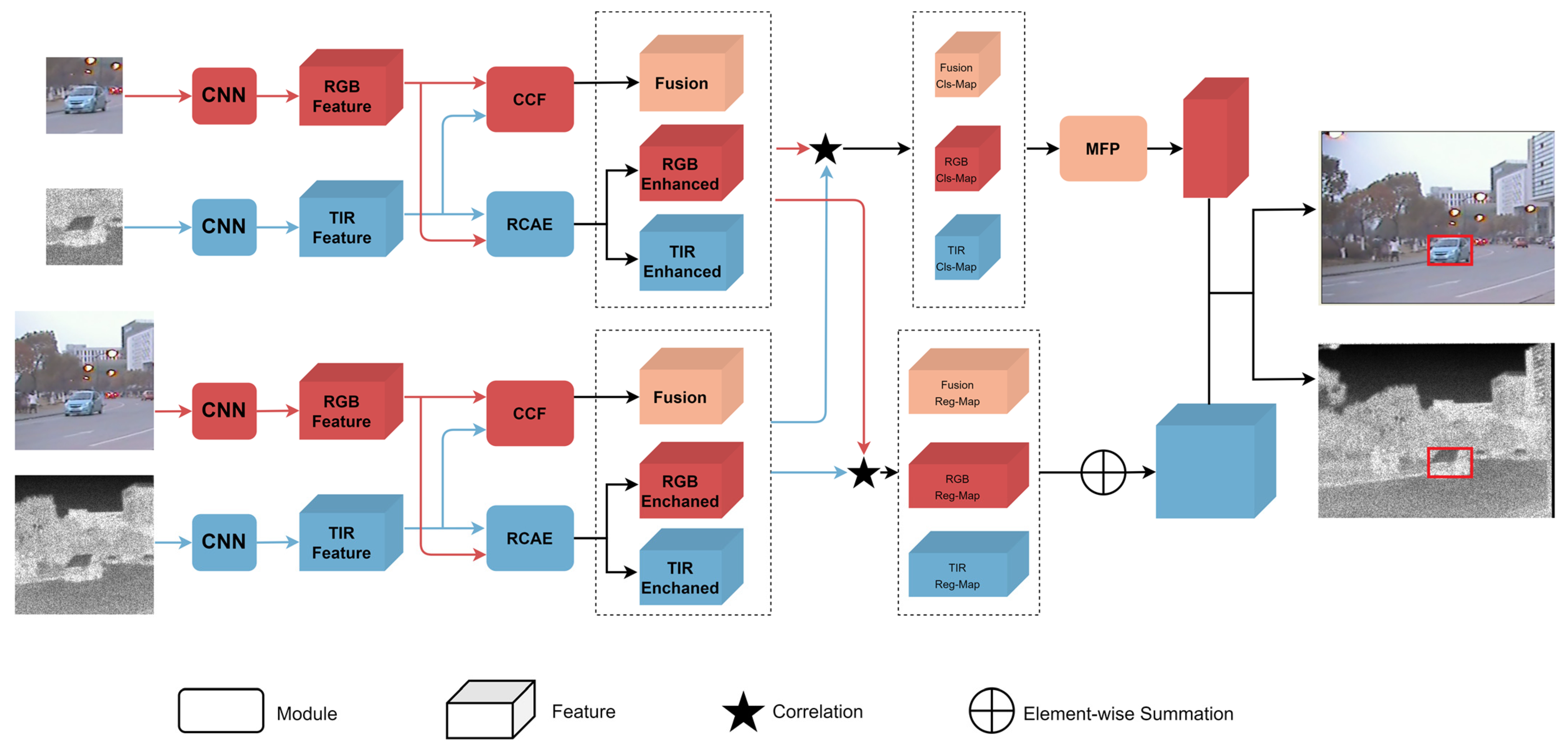

- We extend the Siamese network to RGBT tracking to make better use of the information in both modalities. The proposed method runs at 105 FPS and outperforms most advanced RGBT trackers on the current mainstream datasets.

- We design a Complementary Coupling Feature fusion module (CCF) that extracts similar features and reduces modality differences so that the features can be fused more effectively. At the same time, the features are enhanced by the Residual Channel Attention Enhanced module (RCAE) to amplify the characteristics of the visible light and thermal infrared modalities.

- We propose a Maximum Fusion Prediction module (MFP) for the response map fusion stage, which performs the fusion effectively at the response level.
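The contributions above name the modules without showing their mechanics. As a rough illustration only, the sketch below assumes that RCAE behaves like a squeeze-and-excitation style channel gate with a residual connection, and that MFP fuses the per-modality response maps by an element-wise maximum. Neither assumption is confirmed by this section, and every function name, shape, and weight here is hypothetical rather than taken from the authors' implementation.

```python
import numpy as np


def residual_channel_attention(feat, w1, w2):
    """Hypothetical RCAE-like block: SE-style channel gate plus a residual.

    feat: (C, H, W) feature map; w1: (C // r, C); w2: (C, C // r).
    """
    squeezed = feat.mean(axis=(1, 2))            # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)      # ReLU bottleneck
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # sigmoid gate in (0, 1)
    return feat + feat * gate[:, None, None]     # residual: x + x * gate


def max_fusion(resp_rgb, resp_t):
    """Hypothetical MFP-like fusion: element-wise max of two response maps."""
    fused = np.maximum(resp_rgb, resp_t)
    peak = np.unravel_index(np.argmax(fused), fused.shape)
    return fused, tuple(int(i) for i in peak)


# Toy inputs (assumed shapes): 8 channels, 5x5 spatial grid, reduction r = 4.
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 5, 5))
w1 = rng.standard_normal((2, 8)) * 0.1
w2 = rng.standard_normal((8, 2)) * 0.1
enhanced = residual_channel_attention(feat, w1, w2)

# Two toy response maps: the thermal map has the stronger peak.
resp_rgb = np.zeros((5, 5))
resp_rgb[1, 1] = 0.6
resp_t = np.zeros((5, 5))
resp_t[3, 2] = 0.9
fused, peak = max_fusion(resp_rgb, resp_t)
print(enhanced.shape, peak)
```

In this toy case the fused peak lands at (3, 2), the location of the thermal map's maximum, which illustrates how a maximum-based fusion lets the more confident modality decide the predicted position. The residual term keeps the original features intact while the gate only amplifies channels, so the enhanced map has the same shape as the input.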

2. Related Works

2.1. Visual Object Tracking

2.2. RGBT Object Tracking

2.3. Attention Mechanisms

3. Our Method

3.1. Dual-Modal Siamese Subnetwork for Feature Extraction

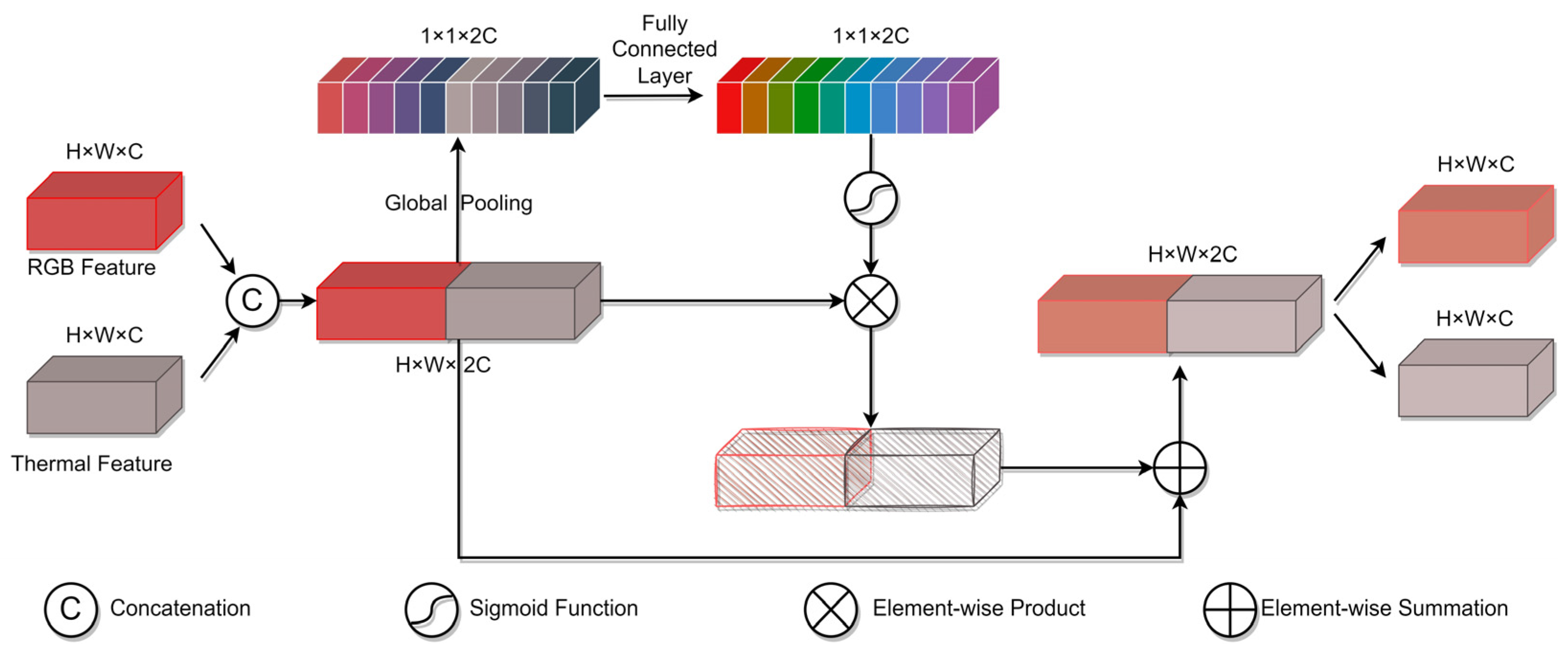

3.2. Complementary Coupling Feature Fusion Module

3.3. Residual Channel Attention Enhanced Module

3.4. Maximum Fusion Prediction Module

4. Experiment and Result Analysis

4.1. Evaluation Dataset and Evaluation Metrics

4.1.1. GTOT Dataset and Metrics

4.1.2. RGBT234 Dataset and Metrics

4.1.3. VTUAV Dataset and Metrics

4.2. Implementation Details

4.3. Result Comparisons on GTOT

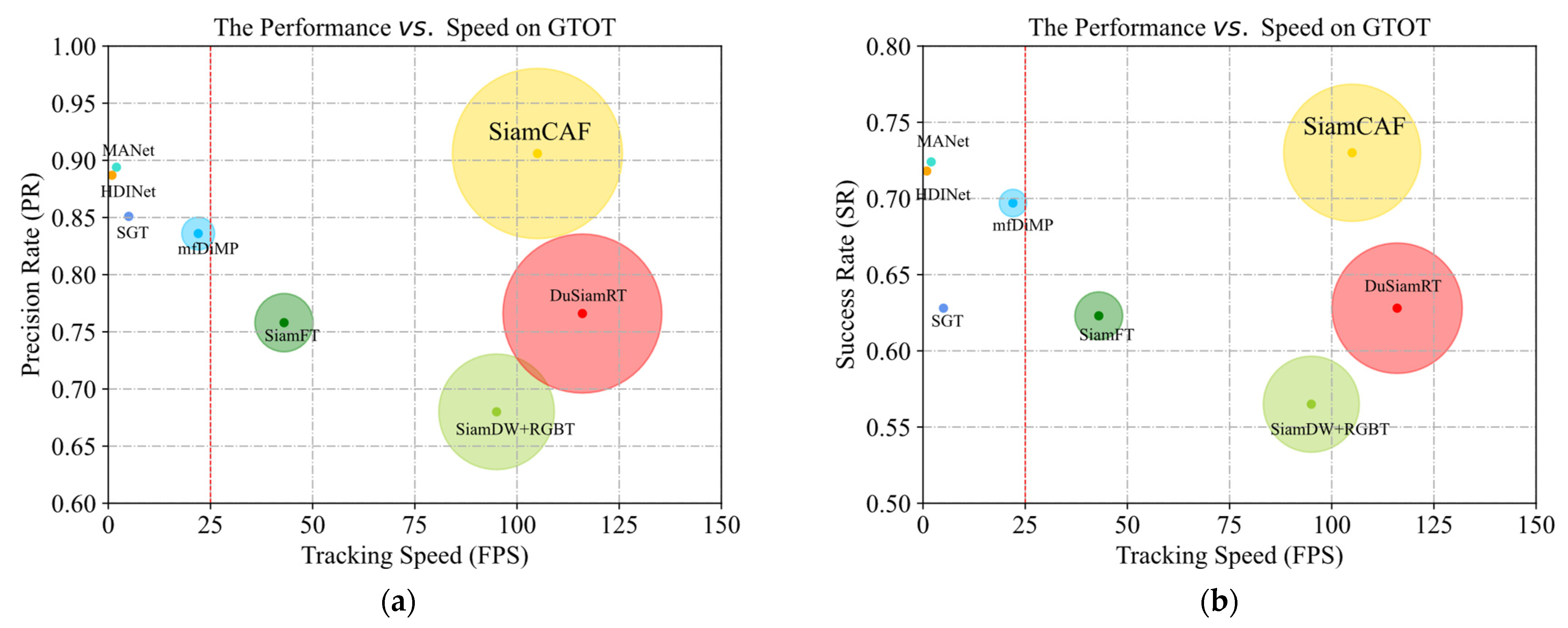

4.3.1. Overall Performance

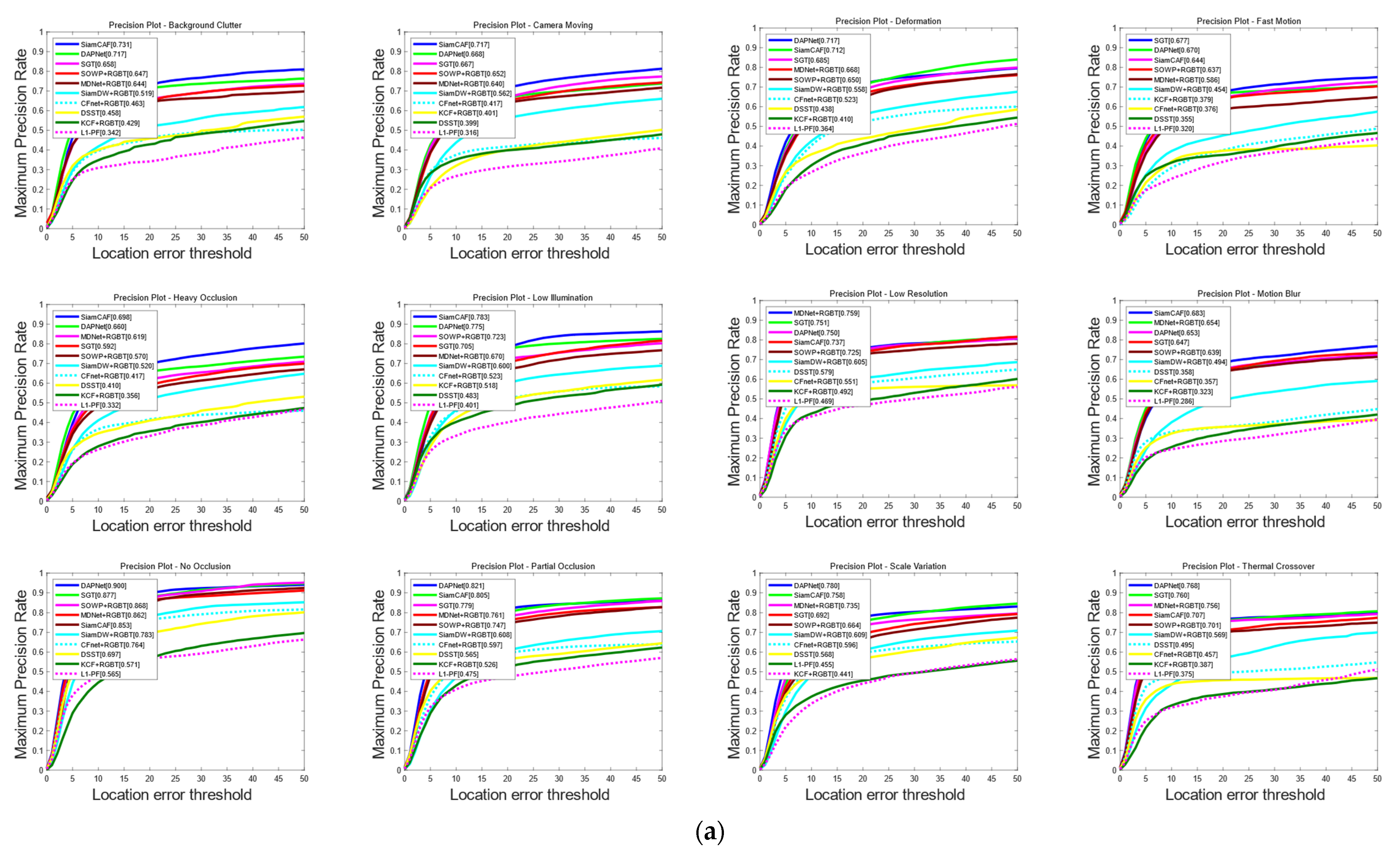

4.3.2. Attribute-Based Performance

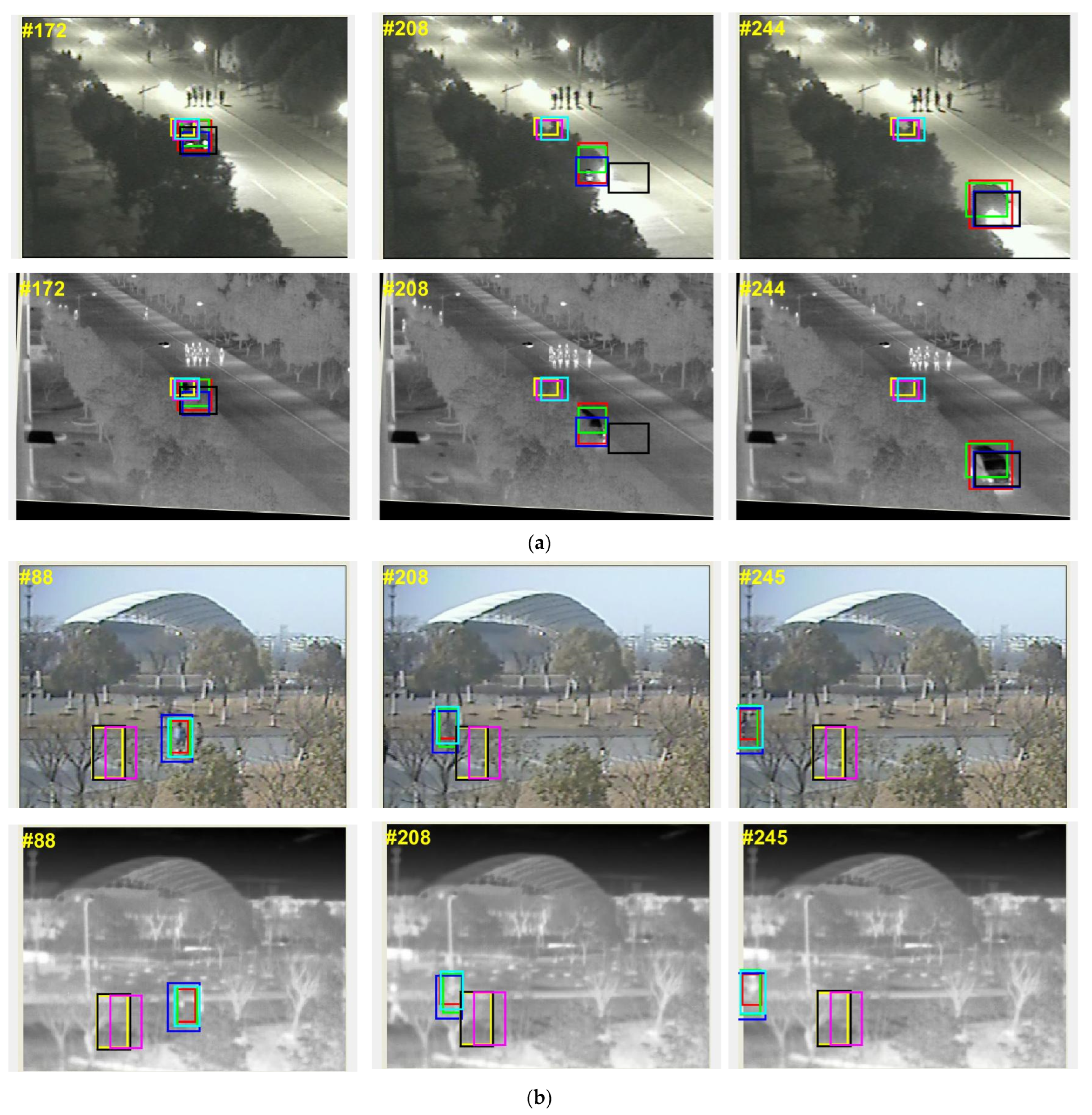

4.3.3. Visual Comparison

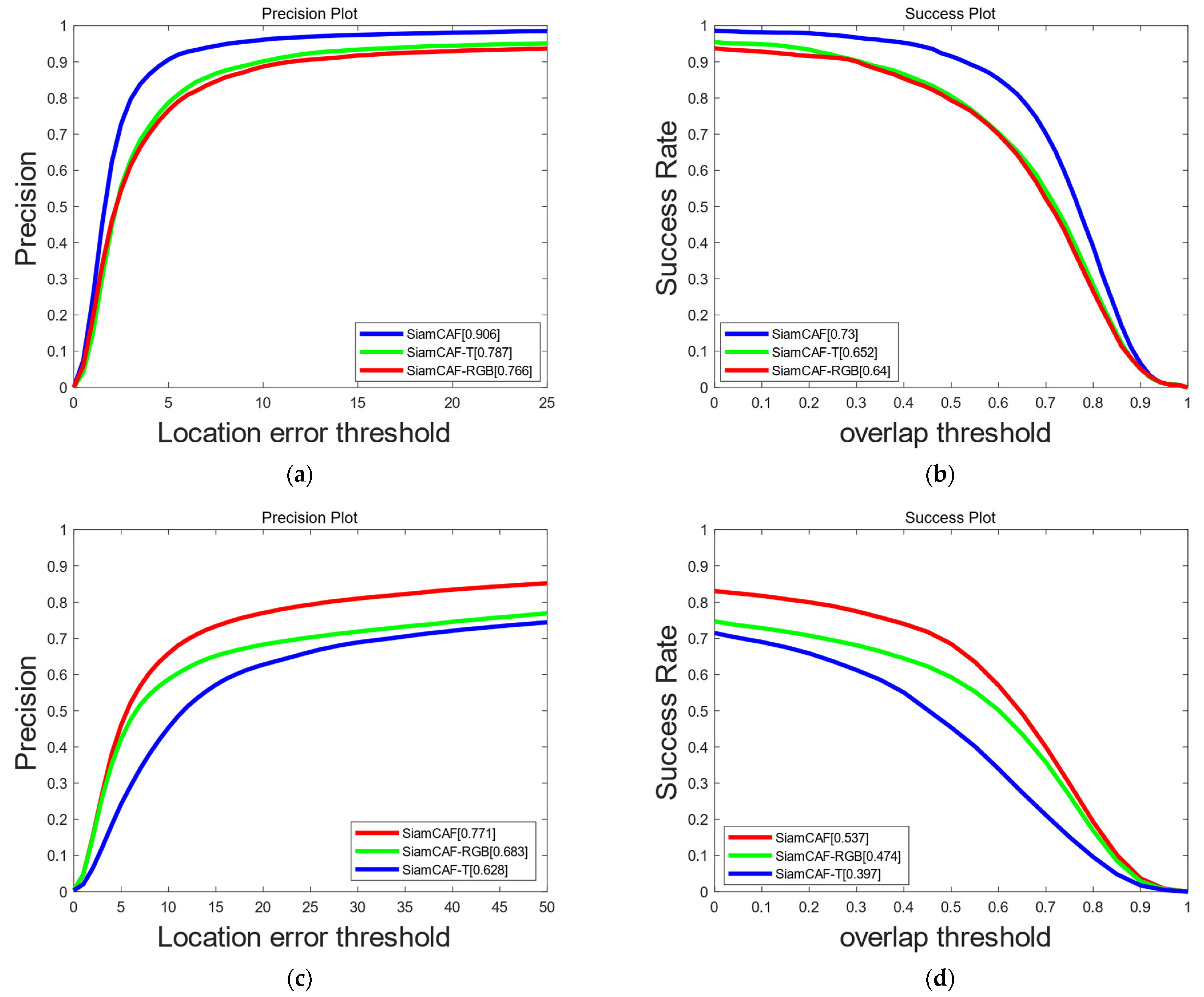

4.4. Result Comparisons on RGBT234

4.4.1. Overall Performance

4.4.2. Attribute-Based Performance

4.5. Result Comparisons on VTUAV

4.5.1. Overall Performance

4.5.2. Visual Comparison

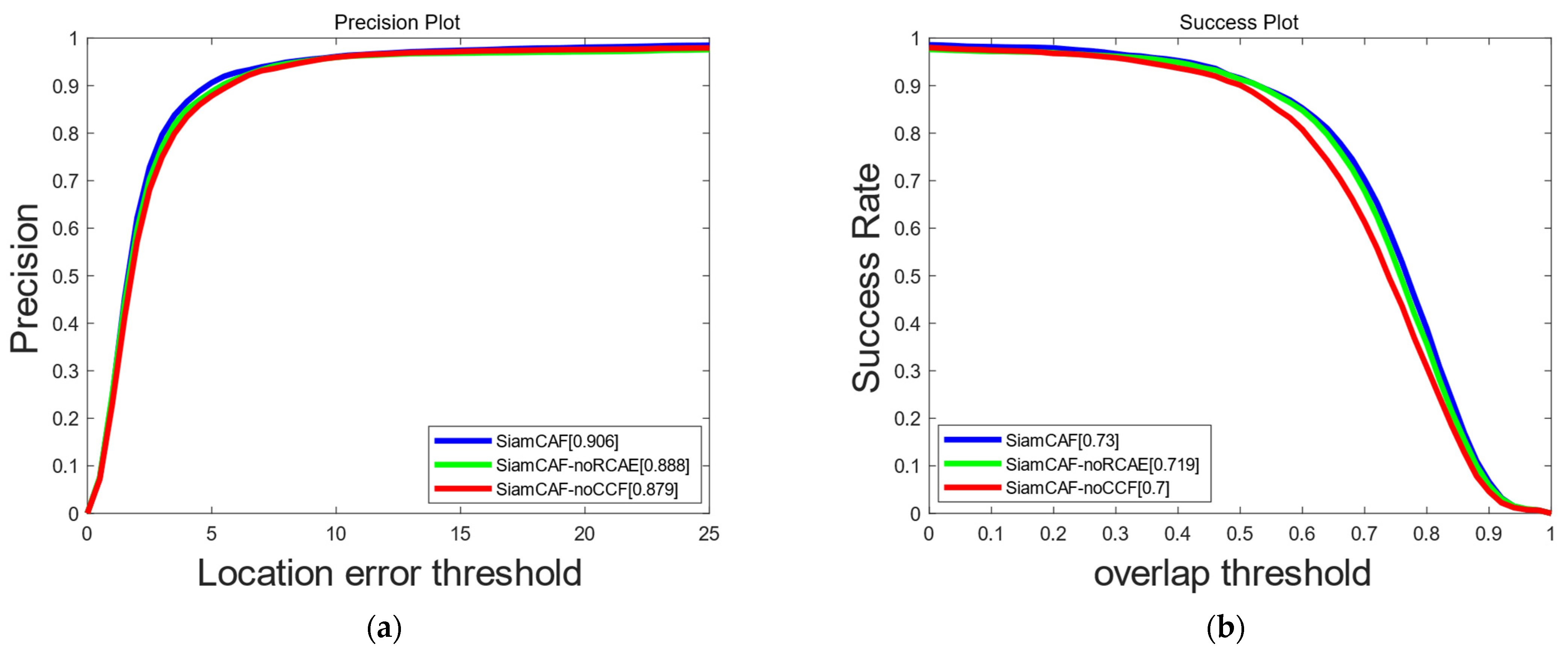

4.6. Ablation Study

4.7. Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Tracker | OCC | LSV | FM | LI | TC | SO | DEF | ALL |
|---|---|---|---|---|---|---|---|---|
| KCF+RGBT | 52.2/35.9 | 55.4/41.4 | 42.6/34.2 | 45.9/37.8 | 44.9/36.1 | 44.4/30.9 | 49.2/40.0 | 49.6/39.6 |
| SRDCF+RGBT | 72.7/58.0 | 80.4/68.1 | 68.3/61.1 | 71.7/59.4 | 70.5/58.0 | 80.5/57.5 | 66.6/53.7 | 71.9/59.1 |
| RT-MDNet | 73.3/57.6 | 79.1/63.7 | 78.1/64.1 | 77.2/63.8 | 73.7/59.0 | 85.6/63.4 | 73.1/61.0 | 74.5/61.3 |
| DuSiamRT | 72.8/57.7 | 80.9/64.5 | 72.1/58.0 | 76.2/62.3 | 78.1/61.4 | 84.4/64.2 | 76.4/62.9 | 76.6/62.8 |
| SGT | 81.0/56.7 | 82.6/55.7 | 82.0/55.7 | 84.3/59.0 | 84.4/59.6 | 85.7/60.0 | 86.7/62.1 | 85.1/62.8 |
| DAPNet | 87.3/67.4 | 84.7/66.1 | 82.3/65.3 | 90.0/67.7 | 89.3/68.0 | 93.7/68.2 | 91.9/69.6 | 88.2/70.7 |
| HDINet | 86.3/66.9 | 87.9/70.8 | 88.2/70.4 | 91.9/74.5 | 87.0/68.9 | 95.3/70.5 | 90.3/73.8 | 88.8/71.8 |
| MANet | 88.2/69.6 | 87.6/70.1 | 87.6/69.9 | 89.0/71.2 | 89.0/71.0 | 89.7/70.8 | 90.1/71.5 | 89.4/72.4 |
| SiamCAF | 89.8/69.9 | 88.0/69.5 | 88.0/68.6 | 91.8/74.4 | 90.1/71.6 | 91.7/70.5 | 92.2/75.4 | 90.6/73.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xue, Y.; Zhang, J.; Lin, Z.; Li, C.; Huo, B.; Zhang, Y. SiamCAF: Complementary Attention Fusion-Based Siamese Network for RGBT Tracking. Remote Sens. 2023, 15, 3252. https://doi.org/10.3390/rs15133252
