High-Resolution Inundation Mapping for Heterogeneous Land Covers with Synthetic Aperture Radar and Terrain Data

Abstract

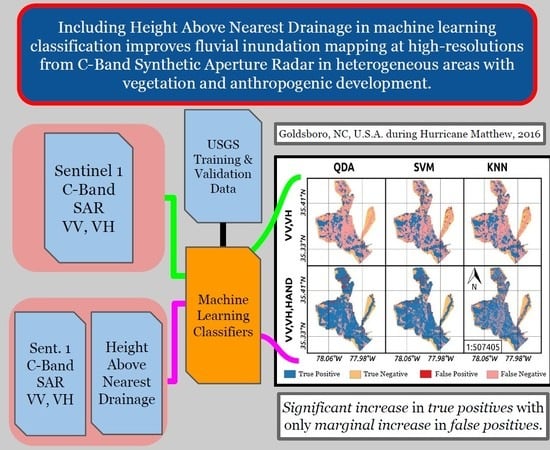

1. Introduction

2. Materials and Methods

2.1. Study Areas

2.2. Overview

2.3. Features and Training Samples

2.4. Classification

2.4.1. Quadratic Discriminant Analysis (QDA)

2.4.2. Support Vector Machine (SVM)

2.4.3. K-Nearest Neighbors (KNN)

2.5. Performance Metrics

3. Results

3.1. Primary Metrics

3.2. Secondary Metrics

3.3. Decision Boundaries

3.4. Training and Classification Performance Times

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

References

| Site Name | Area (km²) | USGS Station Number | Peak Date (MM/DD/YY) | Peak Gage Height (m) | SAR Observation Gage Height (m) | Prevalence³ |
|---|---|---|---|---|---|---|
| Smithfield | 21.8 | 02087570 | 10/09/16 | 8.87 | 5.83 | 0.798 |
| Goldsboro | 89.2 | 02089000 | 10/12/16 | 9.06 | 9.06 | 0.877 |
| Kinston | 28.8 | 02089500 | 10/14/16 | 8.63 | 6.98 | 0.620 |
| Total | 139.8 | | | | | 0.787 (overall) |

| Name | Formula | Definition |
|---|---|---|
| True Positive (TP) | | Predicted I and observed I. |
| True Negative (TN) | | Predicted NI and observed NI. |
| False Positive (FP) | | Predicted I but observed NI. |
| False Negative (FN) | | Predicted NI but observed I. |
| True Positive Rate (TPR) | TP / (TP + FN) | Proportion of observed I areas predicted as I. |
| True Negative Rate (TNR) | TN / (TN + FP) | Proportion of observed NI areas predicted as NI. |
| Positive Predictive Value (PPV) | TP / (TP + FP) | Proportion of areas predicted as I that are correctly classified as I. |
| Negative Predictive Value (NPV) | TN / (TN + FN) | Proportion of areas predicted as NI that are correctly classified as NI. |
| Critical Success Index (CSI) | TP / (TP + FN + FP) | Proportion of areas observed as I or predicted as I (observed I plus FP) that are correctly classified as I. |
| Overall Accuracy (ACC) | (TP + TN) / (TP + FN + FP + TN) | Proportion of all areas classified correctly. |

I, inundated; NI, non-inundated.
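
The metric definitions in the table above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes the predicted and validation maps are co-registered and flattened into equal-length boolean sequences (True = inundated), and the names `ConfusionCounts`, `count_confusion` and `metrics` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts (pixels or areas) for a binary inundated (I) / non-inundated (NI) map."""
    tp: float  # predicted I, observed I
    tn: float  # predicted NI, observed NI
    fp: float  # predicted I, observed NI
    fn: float  # predicted NI, observed I


def count_confusion(predicted: Sequence[bool], observed: Sequence[bool]) -> ConfusionCounts:
    """Tally TP, TN, FP and FN from co-registered, flattened maps (True = inundated)."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed maps must have the same number of pixels")
    tp = tn = fp = fn = 0
    for p, o in zip(predicted, observed):
        if p and o:
            tp += 1
        elif not p and not o:
            tn += 1
        elif p:  # predicted I, observed NI
            fp += 1
        else:  # predicted NI, observed I
            fn += 1
    return ConfusionCounts(tp, tn, fp, fn)


def _ratio(num: float, den: float) -> float:
    # Return NaN instead of raising ZeroDivisionError when a class is absent.
    return num / den if den else float("nan")


def metrics(c: ConfusionCounts) -> Dict[str, float]:
    """TPR, TNR, PPV, NPV, CSI and ACC, following the formulas in the table."""
    return {
        "TPR": _ratio(c.tp, c.tp + c.fn),
        "TNR": _ratio(c.tn, c.tn + c.fp),
        "PPV": _ratio(c.tp, c.tp + c.fp),
        "NPV": _ratio(c.tn, c.tn + c.fn),
        "CSI": _ratio(c.tp, c.tp + c.fn + c.fp),
        "ACC": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
    }
```

Because `ConfusionCounts` accepts floats, `metrics` applies equally to aggregated areas rather than pixel counts.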

| Land Cover Group | Level II Classes | Fractional Coverage, S | Fractional Coverage, G | Fractional Coverage, K | Fractional Coverage, T | Area (km²), S | Area (km²), G | Area (km²), K | Area (km²), T |
|---|---|---|---|---|---|---|---|---|---|
| Developed | 21, 22, 23, 24 | 0.15 | 0.22 | 0.21 | 0.21 | 3.4 | 19.3 | 6.2 | 28.9 |
| Canopy | 41, 42, 43, 52, 90 | 0.45 | 0.34 | 0.51 | 0.40 | 9.7 | 30.5 | 14.7 | 55.0 |
| Agriculture | 81, 82 | 0.18 | 0.22 | 0.07 | 0.18 | 3.8 | 20.0 | 2.1 | 26.0 |
| Other | 11, 12, 31, 51, 71, 72, 73, 74, 95 | 0.22 | 0.22 | 0.20 | 0.21 | 4.8 | 19.5 | 5.7 | 30.0 |
| Totals by Site | | 0.16 | 0.61 | 0.23 | 1.00 | 21.8 | 89.2 | 28.8 | 139.8 |

S, Smithfield; G, Goldsboro; K, Kinston; T, total across the three sites. Level II classes are National Land Cover Database (NLCD) class codes.
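
The grouping in the land cover table is a lookup from Level II class codes to four groups, followed by an area-weighted aggregation. A minimal Python sketch follows, assuming per-class areas for a site have already been tabulated from the land cover raster; the names `LAND_COVER_GROUPS`, `group_for` and `fractional_coverage` are hypothetical.

```python
from typing import Dict, Mapping, Tuple

# Land cover groups and their Level II class codes, as listed in the table.
LAND_COVER_GROUPS: Dict[str, Tuple[int, ...]] = {
    "Developed": (21, 22, 23, 24),
    "Canopy": (41, 42, 43, 52, 90),
    "Agriculture": (81, 82),
    "Other": (11, 12, 31, 51, 71, 72, 73, 74, 95),
}

# Inverted lookup: class code -> group name.
CLASS_TO_GROUP: Dict[int, str] = {
    code: group for group, codes in LAND_COVER_GROUPS.items() for code in codes
}


def group_for(level2_class: int) -> str:
    """Return the land cover group for a Level II class code."""
    try:
        return CLASS_TO_GROUP[level2_class]
    except KeyError:
        raise ValueError(f"class {level2_class} is not assigned to a group") from None


def fractional_coverage(class_areas: Mapping[int, float]) -> Dict[str, float]:
    """Aggregate per-class areas (e.g. km²) into per-group fractions of the site total."""
    totals = {group: 0.0 for group in LAND_COVER_GROUPS}
    for code, area in class_areas.items():
        totals[group_for(code)] += area
    site_total = sum(totals.values())
    if site_total == 0:
        raise ValueError("total area is zero")
    return {group: area / site_total for group, area in totals.items()}
```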

| Features | Predicted Class | Validation: Non-Inundated | Validation: Inundated | Totals |
|---|---|---|---|---|
| VV & VH | Non-Inundated | 71.1 | 422.6 | 493.7 |
| VV & VH | Inundated | 12.3 | 279.9 | 292.2 |
| VV, VH & HAND | Non-Inundated | 59.1 | 127.5 | 186.6 |
| VV, VH & HAND | Inundated | 24.3 | 575 | 599.3 |
| Totals | | 83.4 | 702.5 | 785.9 |
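
As a worked check of the metric definitions, they can be applied to the pooled counts in the confusion matrix (TP is the predicted-inundated/observed-inundated cell, FN the predicted-non-inundated/observed-inundated cell, and so on). The values below are derived here for illustration only and are not figures reported by the authors; units follow the table.

```latex
\begin{aligned}
&\textbf{VV \& VH:} && \mathrm{TP}=279.9,\ \mathrm{FN}=422.6,\ \mathrm{FP}=12.3,\ \mathrm{TN}=71.1\\
&\mathrm{TPR} = \tfrac{279.9}{702.5} \approx 0.398 && \mathrm{PPV} = \tfrac{279.9}{292.2} \approx 0.958\\
&\mathrm{CSI} = \tfrac{279.9}{714.8} \approx 0.392 && \mathrm{ACC} = \tfrac{351.0}{785.9} \approx 0.447\\[6pt]
&\textbf{VV, VH \& HAND:} && \mathrm{TP}=575,\ \mathrm{FN}=127.5,\ \mathrm{FP}=24.3,\ \mathrm{TN}=59.1\\
&\mathrm{TPR} = \tfrac{575}{702.5} \approx 0.819 && \mathrm{PPV} = \tfrac{575}{599.3} \approx 0.959\\
&\mathrm{CSI} = \tfrac{575}{726.8} \approx 0.791 && \mathrm{ACC} = \tfrac{634.1}{785.9} \approx 0.807
\end{aligned}
```

On these pooled counts, adding HAND roughly doubles TPR and CSI while PPV is nearly unchanged, so the gain comes mainly from fewer missed inundated areas (FN falls from 422.6 to 127.5). The trade-off is a lower TNR (about 0.853 to 0.709), as FP rises from 12.3 to 24.3.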

| Classification Algorithm | Mean Training + Classification Time (s) | Standard Deviation (s) |
|---|---|---|
| QDA | 3.92 | 1.59 |
| SVM | 3.21 | 1.22 |
| KNN | 1.22 | 0.54 |
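
The timings in the table combine training and classification. A minimal sketch of how such a mean and standard deviation could be collected over repeated runs follows. It uses a hypothetical pure-Python brute-force KNN on synthetic (VV, VH, HAND) triples, not the authors' implementation, sample sizes or hardware, so its absolute times are not comparable to the table. Because KNN is a lazy learner, its "training" step here only stores the samples; QDA and SVM would instead fit parameters before classifying.

```python
import math
import random
import statistics
import time
from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical feature vector for one pixel: (VV, VH, HAND).
Sample = Tuple[float, float, float]


def knn_predict(train_x: Sequence[Sample], train_y: Sequence[int],
                query: Sequence[Sample], k: int = 5) -> List[int]:
    """Brute-force KNN: Euclidean distance, majority vote among the k nearest samples."""
    predictions = []
    for q in query:
        # Indices of the k training samples closest to q.
        nearest = sorted(range(len(train_x)), key=lambda i: math.dist(q, train_x[i]))[:k]
        votes = Counter(train_y[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


def time_runs(train_x: Sequence[Sample], train_y: Sequence[int],
              query: Sequence[Sample], runs: int = 10, k: int = 5) -> Tuple[float, float]:
    """Mean and sample standard deviation (seconds) of training + classification time."""
    if runs < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # Lazy learner: "training" only stores the labelled samples.
        xs, ys = list(train_x), list(train_y)
        knn_predict(xs, ys, query, k)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations), statistics.stdev(durations)


if __name__ == "__main__":
    rng = random.Random(0)
    # Two synthetic, well-separated clusters; values are illustrative, not real backscatter.
    train_x = [(rng.gauss(c, 1.0), rng.gauss(c, 1.0), rng.gauss(c, 1.0))
               for c in (0.0, 10.0) for _ in range(200)]
    train_y = [label for label in (0, 1) for _ in range(200)]
    query = [(rng.uniform(-2, 12), rng.uniform(-2, 12), rng.uniform(-2, 12))
             for _ in range(100)]
    mean_s, sd_s = time_runs(train_x, train_y, query, runs=5)
    print(f"KNN training + classification: {mean_s:.4f} s (SD {sd_s:.4f} s)")
```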

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aristizabal, F.; Judge, J.; Monsivais-Huertero, A. High-Resolution Inundation Mapping for Heterogeneous Land Covers with Synthetic Aperture Radar and Terrain Data. Remote Sens. 2020, 12, 900. https://doi.org/10.3390/rs12060900
