Urban Flood Mapping Using SAR Intensity and Interferometric Coherence via Bayesian Network Fusion

Abstract

1. Introduction

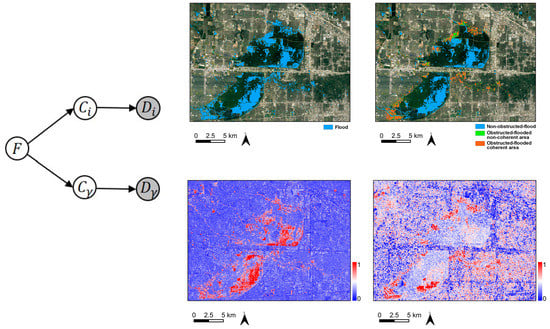

2. Methods

3. Data and Experiments

4. Results and Discussion

4.1. Houston Flood Case

4.2. Joso Flood Case

4.3. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

| Acquisition Time | Polarization | Incidence Angle (°) | Resolution (m) | Orbit |
|---|---|---|---|---|
| 01/07/17 | VV | 36.7 | 20 | Descending |
| 07/07/17 | VV | 36.7 | 20 | Descending |
| 13/07/17 | VV | 36.7 | 20 | Descending |
| 19/07/17 | VV | 36.7 | 20 | Descending |
| 25/07/17 | VV | 36.7 | 20 | Descending |
| 31/07/17 | VV | 36.7 | 20 | Descending |
| 06/08/17 | VV | 36.7 | 20 | Descending |
| 12/08/17 | VV | 36.7 | 20 | Descending |
| 18/08/17 | VV | 36.7 | 20 | Descending |
| 24/08/17 | VV | 36.7 | 20 | Descending |
| 30/08/17 | VV | 36.7 | 20 | Descending |

| Acquisition Time | Temporal Baseline (days) | Perpendicular Baseline (m) | Window Size (Range × Azimuth) |
|---|---|---|---|
| 01/07 – 07/07 | 6 | 47 | 28 × 7 |
| 07/07 – 13/07 | 6 | 31 | 28 × 7 |
| 13/07 – 19/07 | 6 | 79 | 28 × 7 |
| 19/07 – 25/07 | 6 | 45 | 28 × 7 |
| 25/07 – 31/07 | 6 | 38 | 28 × 7 |
| 31/07 – 06/08 | 6 | 38 | 28 × 7 |
| 06/08 – 12/08 | 6 | 52 | 28 × 7 |
| 12/08 – 18/08 | 6 | 58 | 28 × 7 |
| 18/08 – 24/08 | 6 | 82 | 28 × 7 |
| 24/08 – 30/08 | 6 | 55 | 28 × 7 |
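The window-size column gives the neighborhood, in range × azimuth pixels, over which interferometric coherence is estimated for each pair. The sketch below assumes the common sample (boxcar) coherence estimator. This section does not confirm that this was the exact processing used. The sketch computes the coherence magnitude of two co-registered complex images over one 28 × 7 window. `speckle_window`, the fixed seeds and the 0.8 rad phase offset are hypothetical test inputs, not data from this study.

```python
import cmath
import math
import random


def sample_coherence(s1, s2):
    """Sample coherence magnitude over one estimation window.

    s1, s2: equal-length sequences of co-registered complex SLC samples,
    e.g. the 28 x 7 (range x azimuth) pixels of one window, flattened.
    Returns |sum(s1 * conj(s2))| / sqrt(sum|s1|^2 * sum|s2|^2), in [0, 1].
    """
    if not s1 or len(s1) != len(s2):
        raise ValueError("windows must be non-empty and of equal size")
    cross = sum(a * b.conjugate() for a, b in zip(s1, s2))
    power1 = sum(abs(a) ** 2 for a in s1)
    power2 = sum(abs(b) ** 2 for b in s2)
    return abs(cross) / math.sqrt(power1 * power2)


def speckle_window(range_px, azimuth_px, seed):
    """Hypothetical test input: circular complex Gaussian speckle samples."""
    rng = random.Random(seed)
    return [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
            for _ in range(range_px * azimuth_px)]


ref = speckle_window(28, 7, seed=1)               # 196 samples in one window
stable = [z * cmath.exp(1j * 0.8) for z in ref]   # same scene, constant phase offset
changed = speckle_window(28, 7, seed=2)           # independent speckle (surface changed)

print(f"stable:  {sample_coherence(ref, stable):.3f}")
print(f"changed: {sample_coherence(ref, changed):.3f}")
```

A temporally stable scatterer keeps coherence near 1. Independent speckle, as produced by a change of the surface between acquisitions, drives coherence toward a small bias floor set by the number of samples in the window. Larger windows lower that bias at the cost of spatial detail.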

| Acquisition Time | Polarization | Incidence Angle (°) | Resolution (m) | Orbit |
|---|---|---|---|---|
| 29/08/14 | HH | 35.4 | 3 | Ascending |
| 02/01/15 | HH | 35.4 | 3 | Ascending |
| 13/02/15 | HH | 35.4 | 3 | Ascending |
| 31/07/15 | HH | 35.4 | 3 | Ascending |
| 11/09/15 | HH | 35.4 | 3 | Ascending |
| 23/10/15 | HH | 35.4 | 3 | Ascending |
| 29/01/16 | HH | 35.4 | 3 | Ascending |

| Acquisition Time | Temporal Baseline (days) | Perpendicular Baseline (m) | Window Size (Range × Azimuth) |
|---|---|---|---|
| 29/08 – 02/01 | 126 | 150 | 7 × 7 |
| 02/01 – 13/02 | 42 | 47 | 7 × 7 |
| 13/02 – 31/07 | 168 | 221 | 7 × 7 |
| 31/07 – 11/09 | 42 | 123 | 7 × 7 |
| 11/09 – 23/10 | 42 | 35 | 7 × 7 |
| 23/10 – 29/01 | 98 | 205 | 7 × 7 |
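In each interferometric pair, the first numeric column after the dates equals the number of days between the two acquisitions, i.e. the temporal baseline. The short check below uses only Python's standard `datetime` module and the dates from the ALOS-2 acquisition table, read as dd/mm/yy. It reproduces these values.

```python
from datetime import date

# ALOS-2 interferometric pairs, dates taken from the acquisition table (dd/mm/yy).
PAIRS = [
    (date(2014, 8, 29), date(2015, 1, 2)),
    (date(2015, 1, 2), date(2015, 2, 13)),
    (date(2015, 2, 13), date(2015, 7, 31)),
    (date(2015, 7, 31), date(2015, 9, 11)),
    (date(2015, 9, 11), date(2015, 10, 23)),
    (date(2015, 10, 23), date(2016, 1, 29)),
]


def temporal_baseline_days(first, second):
    """Days elapsed between two acquisitions (date subtraction gives a timedelta)."""
    return (second - first).days


baselines = [temporal_baseline_days(a, b) for a, b in PAIRS]
print(baselines)  # [126, 42, 168, 42, 42, 98]
```

The same check on the Sentinel-1 pairs gives 6 days each, matching the 6-day repeat cycle of the acquisitions listed for Houston.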

| Study Area | Data | Precision | Recall | F1 | FPR | OA (%) | κ |
|---|---|---|---|---|---|---|---|
| Houston city | Intensity + Coherence | 0.83 | 0.61 | 0.70 | 0.02 | 94.5 | 0.68 |
| Houston city | Intensity | 0.85 | 0.50 | 0.63 | 0.01 | 93.7 | 0.60 |

| Study Area | Data | Precision | Recall | F1 | FPR | OA (%) | κ |
|---|---|---|---|---|---|---|---|
| Whole study area | Intensity + Coherence | 0.91 | 0.70 | 0.79 | 0.03 | 89.6 | 0.72 |
| Whole study area | Intensity | 0.85 | 0.59 | 0.70 | 0.04 | 86.0 | 0.61 |
| Joso city | Intensity + Coherence | 0.94 | 0.66 | 0.78 | 0.03 | 84.3 | 0.66 |
| Joso city | Intensity | 0.85 | 0.45 | 0.59 | 0.06 | 74.0 | 0.42 |
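The accuracy measures in the result tables can be sketched with the standard binary definitions, taking flooded pixels as the positive class. This section does not state the authors' exact definitions, so that is an assumption. The tables report no confusion-matrix counts, so `binary_metrics` is exercised on hypothetical counts. The reported values are checked only for internal consistency through F1 = 2PR/(P + R).

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary accuracy measures, flooded pixels as the positive class."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # true positive rate
    f1 = 2 * precision * recall / (precision + recall)
    fpr = fp / (fp + tn)               # false positive rate
    oa = (tp + tn) / n                 # overall accuracy as a fraction (tables use %)
    # Cohen's kappa: observed agreement corrected for chance agreement p_e.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    kappa = (oa - p_e) / (1 - p_e)
    return {"precision": precision, "recall": recall, "f1": f1,
            "fpr": fpr, "oa": oa, "kappa": kappa}


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical confusion-matrix counts, not taken from this study.
example = binary_metrics(tp=60, fp=10, fn=40, tn=890)
print({name: round(value, 3) for name, value in example.items()})

# (precision, recall, reported F1) as listed in the result tables.
REPORTED = {
    "Houston city, intensity + coherence": (0.83, 0.61, 0.70),
    "Houston city, intensity": (0.85, 0.50, 0.63),
    "Joso whole area, intensity + coherence": (0.91, 0.70, 0.79),
    "Joso whole area, intensity": (0.85, 0.59, 0.70),
    "Joso city, intensity + coherence": (0.94, 0.66, 0.78),
    "Joso city, intensity": (0.85, 0.45, 0.59),
}
for name, (p, r, f1) in REPORTED.items():
    # Inputs are rounded to two decimals, so agreement is only approximate.
    print(f"{name}: F1 = {f1_from(p, r):.2f} (reported {f1:.2f})")
```

Kappa is included because, with a small flooded fraction, overall accuracy is dominated by the non-flooded class. Kappa discounts the agreement expected by chance.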

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Martinis, S.; Wieland, M.; Schlaffer, S.; Natsuaki, R. Urban Flood Mapping Using SAR Intensity and Interferometric Coherence via Bayesian Network Fusion. Remote Sens. 2019, 11, 2231. https://doi.org/10.3390/rs11192231
