1. Introduction

The creation and maintenance of building databases have numerous applications, including urban planning and monitoring as well as three-dimensional (3D) city modeling. In particular, the complete documentation of buildings in official cadastral maps is essential to the transparent management of land properties, which can guarantee the legal and secure acquisition of properties. In Germany, the boundary of a building is acquired through a terrestrial survey by the official authority, and a two-dimensional (2D) ground plan of the building is then documented in the official cadastral map, known as the digital cadastral map (DFK).

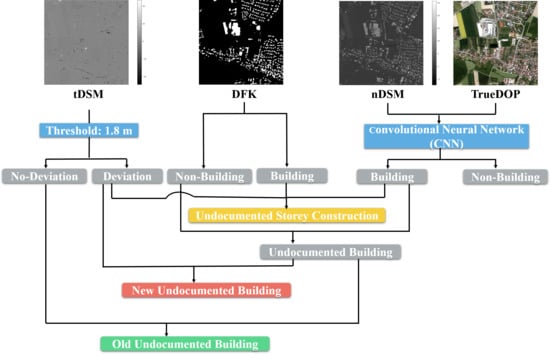

However, because owners do not always report their construction projects, some building constructions are never recorded via terrestrial surveying and are thus missing in the DFK. These are called undocumented building constructions, and they include both undocumented buildings and undocumented story construction. Undocumented buildings are of two types: old and new. Old undocumented buildings were constructed many years ago but never recorded in the cadastral maps, whereas new undocumented buildings have only recently been erected. In both cases, the building ground plans are missing in the DFK. Both old and new undocumented buildings should be terrestrially surveyed by the official authority, but under Germany’s regulations the authority may charge the terrestrial survey fee only for new undocumented buildings. Undocumented story construction refers to changes on site, such as a newly built story or a story demolition, that were not documented in the records of the official authority. Undocumented story construction does not lead to changes in the DFK, but this information is crucial to updating 3D building models. Therefore, collecting these undocumented building constructions is necessary to keep these databases complete and up to date.

The technologies of airborne imaging and laser scanning show great potential in the task of building detection for nationwide 3D building model derivation [1,2]. High-resolution airborne data sets make detailed analysis of geospatial targets more convenient and efficient. In the past, identifying undocumented buildings entailed a visual comparison of aerial images from different flight periods with the DFK, enabling a comprehensive and timely interactive survey of changes in buildings. However, the visual interpretation of the aerial photos required a great deal of labor and time.

In order to reduce the amount of work, two semi-automatic strategies are currently used by the state of Bavaria, Germany for the detection of undocumented buildings: the filter-based method [3] and the comparison-based method [4]. Both methods first detect buildings in remote sensing data. In the filter-based method, various filters, including a height filter, color filter, noise filter, and geometry filter, are applied to the data to detect the buildings. The comparison-based method detects all buildings with the aid of heuristically defined threshold values for the colors of buildings in the representative RGB color space and for the height in the Normalized Digital Surface Model (nDSM). Both methods then overlay the building detection results on the DFK to identify undocumented buildings. With the help of a Temporal Digital Surface Model (tDSM) derived from two Digital Surface Models (DSMs) of different epochs, new undocumented buildings can be discriminated from old undocumented buildings. Both methods rely on heuristics [3]. However, the heuristic definition of threshold values is not standardized, and the thresholds have to be determined individually for different flight campaigns. Therefore, data covering a large area cannot be processed in a uniform and standardized manner. Moreover, the results obtained from these two methods contain many false alarms, where vegetation is frequently misclassified as buildings. For instance, the undocumented buildings reported by the filter-based method also include isolated vegetation (see Figure 1). In addition, these two methods do not provide any evidence of undocumented story construction.

Recently, deep learning methods such as the Convolutional Neural Network (CNN) have been favored by the remote sensing community [5,6] in applications such as land cover classification [7,8], change detection [9,10], multi-label classification [11,12], and human settlement extraction [13,14]. A CNN comprises multiple processing layers, which can learn hierarchical feature representations from the input without any prior knowledge. For the task of building detection from remote sensing data, CNNs have also been shown to achieve remarkable performance that far exceeds that of traditional methods [15,16,17]. This is due to their superiority in generalization and accuracy without hand-crafted features. A key ingredient of a CNN is training data. The amount of training data can be reduced if a pretrained model is applicable in another, unseen area [18], a property that is called transferability [19,20]. However, due to the limited size and quality of existing publicly available data sets, transferability cannot be investigated thoroughly in the task of building detection.

In this paper, our unique contributions are three-fold:

- (1) A new framework for the automatic detection of undocumented building constructions is proposed, which integrates state-of-the-art CNNs and fully harnesses official geodata. The proposed framework can identify old undocumented buildings, new undocumented buildings, and undocumented story construction according to their year and type of construction. Specifically, a CNN model is first used for the semantic segmentation of stacked nDSM and orthophoto with RGB bands (TrueDOP) data. Then, the derived binary map of “building” and “non-building” pixels is used to identify the different types of undocumented building constructions through automatic comparison with the DFK and tDSM.

- (2) Our building detection results are compared with those obtained from the two conventional solutions utilized in the state of Bavaria, Germany. With a large collection of reference data, this comparison is statistically meaningful. Our method significantly reduces the false alarm rate, which demonstrates the value of CNNs for the robust detection of buildings at large scale.

- (3) In order to offer insights for similar large-scale building detection tasks, we further investigate the transferability issue and sampling strategies by using reference data of selected districts in the state of Bavaria, Germany and employing CNNs. It should be noted that this work is well positioned to study practical strategies for large-scale building detection, as we use official geodata of high quality and resolution at large scale.
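The comparison step in contribution (1) can be pictured with a small sketch. It is only an illustration under stated assumptions: the function name `classify_constructions`, the integer class codes, the 2 m `height_change` threshold, and the exact rules (a height rise marks a new building; any height change inside a documented footprint marks story construction) are hypothetical choices, not the decision rules of the proposed framework.

```python
import numpy as np

def classify_constructions(pred_building, dfk_building, tdsm, height_change=2.0):
    """Label each pixel with a hypothetical class code.

    0 = nothing to report, 1 = old undocumented building,
    2 = new undocumented building, 3 = undocumented story construction.
    height_change (metres) is an assumed threshold, not a value from the paper.
    """
    pred = pred_building.astype(bool)   # CNN output: building / non-building
    dfk = dfk_building.astype(bool)     # rasterised cadastral footprints
    risen = tdsm > height_change        # surface rose between the two epochs
    changed = np.abs(tdsm) > height_change  # surface rose or dropped

    labels = np.zeros(pred.shape, dtype=np.uint8)
    undocumented = pred & ~dfk          # detected but missing in the DFK
    labels[undocumented & ~risen] = 1   # already present at the earlier epoch
    labels[undocumented & risen] = 2    # appeared between the epochs
    labels[pred & dfk & changed] = 3    # documented footprint, changed height
    return labels

# Toy 1 x 4 scene: old building, new building, story change, open ground.
pred = np.array([[1, 1, 1, 0]])
dfk = np.array([[0, 0, 1, 0]])
tdsm = np.array([[0.1, 6.0, 3.0, 0.0]])
labels = classify_constructions(pred, dfk, tdsm)
```

In practice such rules would more plausibly be applied per building object than per pixel, which is one reason to read this only as a sketch.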

The remainder of the paper is organized as follows: Related work is reviewed in Section 2. The study area and official geodata utilized in this work are described in Section 3. Section 4 details the proposed framework for the detection of undocumented building constructions. The experiments are described in Section 5. The results and discussion are provided in Section 6 and Section 7, respectively. Finally, Section 8 summarizes this work.

2. Related Work

2.1. Two Conventional Strategies for the Detection of Undocumented Buildings

In the state of Bavaria, Germany, two conventional strategies are utilized to detect undocumented buildings: the filter-based method [3] and the comparison-based method [4]. Both methods first detect all buildings in the remote sensing data and then identify undocumented buildings by overlaying the detection results with the DFK. Finally, the detected undocumented buildings are separated into two classes by introducing a tDSM, i.e., they are classified as old undocumented buildings and new undocumented buildings.

The filter-based method detects buildings from remote sensing data based on multiple filters, which include height, color, noise, and geometry filters. Considering that buildings are elevated objects, a “height filter” is first applied to an nDSM in order to remove all points with a height below an empirically determined threshold. The second filter, the “color filter”, takes the color values of the individual points into account. It is assumed that the pixels belonging to the class “building” are normally distributed in each individual color channel. Thus, the values of each color channel from the TrueDOP are calculated for each building to derive a confidence range for the buildings. If the color values of an examined pixel lie outside this confidence range, the pixel is removed. The Normalized Difference Vegetation Index (NDVI) is then calculated to remove vegetation. The third filter, the “noise filter”, compares the height of each point with that of neighboring points in a defined area, which further separates out vegetation points. The last filter, the “geometry filter”, recognizes buildings according to their area, the number of breakpoints, the ratio of area to circumference, and elongation (angularity).
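As a rough illustration of the first two filters, the following sketch applies a height threshold and a per-channel confidence range. The function names, the 2.5 m threshold, the factor `k`, and the use of known building pixels as color samples are assumptions made for the example; the NDVI, noise, and geometry filters are omitted.

```python
import numpy as np

def height_filter(ndsm, min_height=2.5):
    """Keep pixels at least min_height metres above ground (assumed threshold)."""
    return ndsm >= min_height

def color_filter(rgb, building_mask, k=2.0):
    """Keep pixels whose channels all lie within mean +/- k*std of known building pixels."""
    samples = rgb[building_mask]                  # (n_pixels, 3) reference colors
    mean, std = samples.mean(axis=0), samples.std(axis=0)
    lower, upper = mean - k * std, mean + k * std  # per-channel confidence range
    return np.all((rgb >= lower) & (rgb <= upper), axis=-1)

# Toy 1 x 4 scene: two known grey roofs, one unknown grey roof, one green tree.
rgb = np.array([[[100, 100, 100], [110, 110, 110],
                 [105, 105, 105], [20, 200, 20]]], dtype=float)
known_buildings = np.array([[True, True, False, False]])
ndsm = np.array([[5.0, 0.5, 6.0, 8.0]])

candidates = height_filter(ndsm) & color_filter(rgb, known_buildings)
```

The tall green pixel passes the height filter but is rejected by its color, which is the behavior the color filter is meant to provide; the sketch also shows why the result depends so strongly on the chosen thresholds.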

In the comparison-based method, all currently existing buildings are delineated by setting heuristic threshold values based on color and height information. The building footprints from the DFK are first intersected with the TrueDOP to derive the training areas of buildings. Then, the RGB color values within the training areas are collected from the TrueDOP as a reference [4], and the frequency and distribution of the individual RGB combinations are used to separate buildings from vegetation with an empirically chosen threshold. Finally, with the help of the nDSM, incorrect classifications between buildings and other objects such as streets are avoided by an empirically determined height threshold.
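One way to picture this procedure is a coarse three-dimensional histogram of RGB values (an RGB cube) built from the training areas, followed by frequency and height thresholds. This is a minimal sketch, assuming 8-bit colors; the bin count, `min_freq`, `min_height`, and both function names are hypothetical and not taken from [4].

```python
import numpy as np

def rgb_cube(rgb, training_mask, bins=8):
    """Relative frequency of quantised RGB combinations inside the training areas."""
    q = (rgb[training_mask].astype(np.int64) * bins) // 256   # (n, 3) bin indices
    cube = np.zeros((bins, bins, bins), dtype=np.float64)
    np.add.at(cube, (q[:, 0], q[:, 1], q[:, 2]), 1.0)          # count each combination
    return cube / max(len(q), 1)

def detect(rgb, ndsm, cube, min_freq=0.05, min_height=2.5):
    """Building = frequent building color AND sufficiently elevated (assumed thresholds)."""
    bins = cube.shape[0]
    q = (rgb.astype(np.int64) * bins) // 256
    freq = cube[q[..., 0], q[..., 1], q[..., 2]]
    return (freq >= min_freq) & (ndsm >= min_height)

# Toy 1 x 4 scene: two red roofs in the DFK, a tree, and a red-painted street.
rgb = np.array([[[200, 50, 50], [200, 50, 50],
                 [30, 160, 30], [200, 50, 50]]], dtype=np.uint8)
training_mask = np.array([[True, True, False, False]])
ndsm = np.array([[6.0, 6.0, 7.0, 0.2]])

cube = rgb_cube(rgb, training_mask)
buildings = detect(rgb, ndsm, cube)
```

The tree is rejected by its color and the street by its height, mirroring the two thresholds described above.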

In order to minimize the incorrect detection of non-building cases that can be caused by the height noise of the nDSM or by vegetation, the filter-based method utilizes “color filters” and the comparison-based method exploits an RGB cube. However, aerial imaging is carried out with different airplanes and opposite trajectory directions, at different times and under different lighting conditions, so the color channels for the same objects can have varied values. The color values for each individual building also depend largely on the amount of sunlight at the time of acquisition. Therefore, the confidence range or thresholds are not sufficient to identify buildings. With these two methods, buildings can only be identified through different heuristic thresholds for different districts, which is still not a fully automatic strategy. Furthermore, these two methods do not cover a more detailed type of undocumented building construction, namely undocumented story construction.

2.2. Shallow Learning Methods for Building Detection

Building detection is a popular topic in the remote sensing community. Over the past decades, a large number of shallow learning methods have been proposed, which can be summarized into four general types [15]: (1) edge-based, (2) region-based, (3) index-based, and (4) classification-based methods.

The edge-based methods recognize buildings based on their geometric details. In [21], the edges of buildings are first detected using an edge operator, and are then grouped based on perceptual grouping to construct the boundaries of the buildings. In the region-based methods, building regions are identified with image segmentation methods, for example using a two-level graph theory framework enhanced by shadow information [22]. The index-based methods indicate the presence of buildings through indices designed to depict building features. The morphological building index (MBI) [23] is a building index that extracts buildings automatically, and describes the characteristics of buildings by using multiscale and multidirectional morphological operators. In the classification-based methods, buildings are extracted by feeding spectral information and spatial features into a classifier to make a prediction. In [24], automatic recognition of buildings is achieved through a Support Vector Machine (SVM) classification of a large number of geometric image features.

The shallow learning methods have shown some good results in the task of building detection by combining different spectral, spatial, or auxiliary information or assuming building hypotheses. However, the prior information and hand-crafted features of shallow learning methods make it difficult to achieve generic, robust, and scalable building detection results at large-scale. Moreover, the optimization of parameters in the shallow learning-based methods also leads to inefficiency in processing.

2.3. Deep Learning Methods for Building Detection

Recently, the emergence of deep learning methods, which are based on artificial neural networks, has made strong contributions to the task of building detection. The use of multiple layers in the network allows the automatic learning of representations from raw data. Deep learning methods do not require prior information for hand-crafted feature design, which suggests that they can generalize well over large areas. CNNs are commonly used deep learning architectures and have become a preferred framework for the task of building detection, as they have demonstrated more powerful generalization capability and better performance than traditional methods [25]. Building detection using CNNs is related to the task of semantic segmentation in computer vision, which aims at performing pixel-wise labelling in an image [26]; that is, a CNN assigns a class label to every pixel in the image. Different CNN architectures, such as fully convolutional networks (FCN) [27] and encoder-decoder based architectures (e.g., U-Net [28], SegNet [29] and others), are commonly used for the task of semantic segmentation, and they outperform shallow learning approaches [30].

FCN is a pioneering work for semantic segmentation that effectively converts popular classification CNN models to generate pixel-level prediction maps with transposed convolutions. In [31], the spectral and height information from different data sets are combined as the input for FCN to generate building footprints. In addition to FCN, encoder-decoder based architectures are another popular variant. In the encoder, spatial resolution is gradually reduced for highly efficient feature mapping, while in the decoder, feature representations are recovered into a full-resolution segmentation map. In U-Net, the skip connections, which link the encoder and the decoder, help preserve spatial details. Considering that the results of FCN-based methods are sensitive to the size of buildings, the U-Net structure implemented in [32] increases the scale invariance of algorithms for the task of building detection. SegNet is another encoder-decoder based architecture, where the max-pooling indices from the encoders are transferred to the corresponding decoders. By reusing max-pooling indices, SegNet requires less memory than U-Net. In [25], SegNet is exploited to produce the first seamless building footprint map of the United States at a spatial resolution of 1 m. Currently, FC-DenseNet [33] is a favoured method among different CNN architectures for the semantic segmentation of geospatial scenes, and is superior to many other networks in accuracy [17,34] due to its better feature extraction capability [16].
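The difference between the two ways of linking encoder and decoder can be seen on a toy tensor. The sketch below uses PyTorch's `nn.MaxPool2d` with `return_indices=True` and `nn.MaxUnpool2d` to mimic SegNet-style index reuse, and `torch.cat` to mimic a U-Net-style skip connection; the 4 × 4 input is made up, and no convolutional layers are included, so this is not an implementation of either network.

```python
import torch
from torch import nn

pool = nn.MaxPool2d(kernel_size=2, stride=2, return_indices=True)
unpool = nn.MaxUnpool2d(kernel_size=2, stride=2)

# Toy encoder feature map: one image, one channel, 4 x 4 pixels.
x = torch.arange(16, dtype=torch.float32).reshape(1, 1, 4, 4)

# SegNet-style: the encoder passes only the positions of the maxima.
pooled, indices = pool(x)
restored = unpool(pooled, indices)   # maxima back in place, zeros elsewhere

# U-Net-style: the whole encoder feature map is concatenated in the decoder.
skip = torch.cat([x, restored], dim=1)
```

Storing `indices` (one integer per pooled value) instead of the full feature map `x` is what makes the index-based variant lighter on memory.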

3. Study Area and Official GeoData

In our research, the study area covers one-quarter of the state of Bavaria, Germany (see Figure 2), which includes 16 districts: Ansbach, Bad Toelz, Deggendorf, Hemau, Kulmbach, Kronach, Landau, Landshut, Muenchen, Nuernburg, Regensburg, Rosenheim, Wasserburg, Schweinfurt, Weilheim, and Wolfratshausen. Bavaria is a federal state of Germany located in the southeast of the country. It is the state with the largest land area and the second most populous state in Germany. The 16 selected districts include both urban and rural areas, where different types of buildings are covered.

Four types of official geodata are used in this study: nDSM, tDSM, TrueDOP, and DFK. The sample data sets are illustrated in Figure 3 and their related details are shown in Table 1. In the state of Bavaria, Germany, aerial flight campaigns acquire both aerial photographs and Airborne Laser Scanning (ALS) data. The Digital Terrain Model (DTM) is derived from ALS as a regular point grid. The DSM is obtained from a point cloud generated from optical data with the dense matching method [35]. The nDSM utilized in this research is the difference model between the current DSM at time point 2 (year 2017) and the DTM of the scene, which highlights elevated objects above the ground, such as buildings and trees. The tDSM is the difference model of two DSMs captured at two time points, i.e., time point 1 (year 2014) and time point 2 (year 2017). The TrueDOP is an orthophoto with RGB bands acquired at time point 2 (year 2017); its ortho projection and geo-localization have been carried out with respect to the DSM. Thus, all buildings and elevated objects in the TrueDOP lie in their true position without geometric distortion. Each district is covered by a large number of tiles of TrueDOP, nDSM, and tDSM, where each tile has a size of

pixel at 0.4 m. The DFK is the cadastral 2D ground plan in which the footprints of buildings are delineated. It is acquired via terrestrial surveying in the field with an accuracy in the range of centimeters. One of the limitations of publicly available data sets is the lack of high-quality ground truth data [36], where inaccurate locations of building annotations lead to misalignment between the building footprints and the data used for analysis [37]. It should be noted that the DFK exploited as ground reference in our research is accurate: the buildings shown in a TrueDOP coincide with the corresponding building footprints in the DFK.
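The two difference models can be written down directly. The following toy example assumes co-registered rasters of equal shape and takes the tDSM as the later DSM minus the earlier one; that sign convention and all height values are assumptions made for illustration.

```python
import numpy as np

# Three toy pixels (heights in metres above sea level, values made up).
dtm = np.array([[500.0, 500.0, 501.0]])        # bare-earth terrain
dsm_2014 = np.array([[500.0, 512.0, 501.0]])   # surface at time point 1
dsm_2017 = np.array([[508.0, 512.0, 501.0]])   # surface at time point 2

ndsm = dsm_2017 - dtm        # height above ground at time point 2
tdsm = dsm_2017 - dsm_2014   # height change between the two time points
```

Here the first pixel is an object that appeared between the two epochs (elevated in the nDSM and changed in the tDSM), the second is an elevated object present at both epochs, and the third is open ground.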

5. Experiment

5.1. Data Preprocessing

The crucial element of our proposed framework is the CNN that predicts buildings in their current state. Training data is essential for CNN learning, and thus all the official geodata are preprocessed to collect training patches as input. The DFK is provided as shapefiles and is first converted to raster format at 0.4 m, the same spatial resolution as the TrueDOP, nDSM, and tDSM. Then, all the tiles of TrueDOP and nDSM, together with the DFK as the corresponding ground reference, are clipped into patches with a size of pixels, where each patch has an overlap of 124 pixels with its neighboring patches.
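Clipping with overlap amounts to sliding a window whose stride is the patch size minus the overlap. The helper below is a minimal sketch: `patch_origins` is a hypothetical name, the patch and tile sizes are left as parameters because they are not given in the text above, and the handling of the tile border is an assumption. The toy call uses small numbers rather than the 124-pixel overlap.

```python
def patch_origins(tile_size, patch_size, overlap):
    """Offsets along one axis so that neighbouring patches share `overlap` pixels."""
    if patch_size > tile_size:
        raise ValueError("patch_size must not exceed tile_size")
    stride = patch_size - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than patch_size")
    origins = list(range(0, tile_size - patch_size + 1, stride))
    # Assumed edge handling: add a final patch flush with the tile border.
    if origins[-1] + patch_size < tile_size:
        origins.append(tile_size - patch_size)
    return origins

# Toy example: a 20-pixel-wide tile, 8-pixel patches, 3-pixel overlap.
cols = patch_origins(tile_size=20, patch_size=8, overlap=3)
rows = patch_origins(tile_size=18, patch_size=8, overlap=3)
windows = [(r, c) for r in rows for c in cols]   # top-left corner of every patch
```

The same offsets would be applied to the TrueDOP, the nDSM, and the rasterized DFK so that each input patch stays aligned with its ground reference.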

Then, we collect the patches from 14 districts in the state of Bavaria, Germany, that is, all districts except Bad Toelz and Nuernburg. For each of the 14 selected districts, we split the collected patches into a training and a validation subset. Table 2 shows the number of training and validation patches for the 14 selected districts.

5.2. Experiment Setup

Using the training and validation data collected from the 14 selected districts, we first train an FC-DenseNet model to obtain building detection results. Then, with the aid of the tDSM, we generate a seamless map of undocumented building constructions for one-quarter of the state of Bavaria, Germany.

To validate our building detection results, we choose the district of Bad Toelz as the test area. First, we compare our results in the district of Bad Toelz with those obtained from the two conventional solutions (filter-based method and comparison-based method) utilized in the state of Bavaria, Germany. Furthermore, we compare different CNNs by implementing two other networks that are commonly used in the remote sensing community for building detection: FCN-8s [27] and U-Net [28].

As one contribution of our work, the transferability issues with training data from selected districts around the state of Bavaria, Germany are explored. In this regard, transferability is examined by training another FC-DenseNet model with training and validation data from the district of Ansbach only. We then evaluate the two FC-DenseNet models on the districts of Bad Toelz and Nuernburg. Note that Bad Toelz and Nuernburg are not among the 14 selected districts, which makes them suitable for investigating the transferability of the two trained models.

In order to investigate the sampling strategy in a local area where training samples are available, we also test the two trained FC-DenseNet models on the district of Ansbach, since the district of Ansbach is included in training and validation data of both trained models.

5.3. Training Details

In this study, all networks are implemented in PyTorch and trained for 100 epochs. All models are trained from scratch with a stochastic gradient descent (SGD) optimizer and a learning rate of 0.000001. The cross entropy loss is utilized as the loss function, and the batch size is 5. A Tesla P100 GPU with 16 GB of memory is used to train our models.
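These settings translate into a short PyTorch setup. The sketch below is illustrative only: the two-layer `model` is a stand-in rather than FC-DenseNet, the four input channels assume the RGB bands stacked with the nDSM, the 32 × 32 random batch is made up, and a single optimization step is shown instead of 100 epochs.

```python
import torch
from torch import nn

# Stand-in network (NOT FC-DenseNet): 4 input channels, 2 output classes.
model = nn.Sequential(
    nn.Conv2d(4, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 2, kernel_size=1),
)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-6)  # learning rate from the text
criterion = nn.CrossEntropyLoss()                         # pixel-wise cross entropy

def train_step(images, labels):
    """One SGD update; images are (N, 4, H, W), labels are (N, H, W) class ids."""
    model.train()
    optimizer.zero_grad()
    logits = model(images)            # (N, 2, H, W)
    loss = criterion(logits, labels)
    loss.backward()
    optimizer.step()
    return loss.item()

# Random batch of 5 patches (batch size from the text, patch size made up).
images = torch.randn(5, 4, 32, 32)
labels = torch.randint(0, 2, (5, 32, 32))
loss = train_step(images, labels)
```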

The configurations of the CNNs included in the experiments are as follows:

- (1) FC-DenseNet is composed of four DenseNet blocks in both the encoder and the decoder, and one bottleneck block connecting them, which is also a DenseNet block. In each DenseNet block, we utilize 5 convolutional layers.

- (2) FCN-8s adopts a VGG16 architecture [44] as the backbone.

- (3) U-Net is composed of five blocks in both the encoder and the decoder. Each block in the encoder has two convolution layers, and each block in the decoder has one transposed convolution layer.

5.4. Evaluation Metrics

For building detection, model performance is evaluated with five accuracy metrics: overall accuracy, precision, recall, F1 score, and intersection over union (IoU), which are defined as:

$$
\text{overall accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
$$

$$
\text{precision} = \frac{TP}{TP + FP}
$$

$$
\text{recall} = \frac{TP}{TP + FN}
$$

$$
\text{F1 score} = \frac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}}
$$

$$
\text{IoU} = \frac{TP}{TP + FP + FN}
$$

where $TP$ (true positive) is the number of pixels correctly identified with the class label “building”, $FN$ (false negative) denotes the number of omitted pixels with the class label “building”, $FP$ (false positive) represents the number of pixels that are “non-building” in the ground reference but are mislabeled as “building” by the model, and $TN$ (true negative) is the number of correctly detected pixels with the class label “non-building”. Precision denotes the fraction of identified “building” pixels that agree with the ground reference, and recall represents the fraction of “building” pixels in the ground reference that are correctly predicted. The F1 score is the harmonic mean of precision and recall.
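For a concrete feel of how these metrics behave, the sketch below computes them from the four pixel counts using the standard confusion-matrix definitions. The function name and the example counts are made up for illustration.

```python
def building_metrics(tp, fp, fn, tn):
    """Accuracy metrics for the "building" class from pixel counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "overall_accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "iou": tp / (tp + fp + fn),
    }

# Hypothetical counts for a 1000-pixel patch in which 10% of pixels are buildings.
m = building_metrics(tp=80, fp=20, fn=20, tn=880)
```

With these counts the overall accuracy is 0.96 while the IoU is only about 0.67, which illustrates why overall accuracy alone can be misleading when “non-building” pixels dominate.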

8. Conclusions

In order to ensure the transparent management of land properties, buildings, as vital terrestrial objects, need an official terrestrial survey to be documented in the cadastral maps. For this purpose, we have proposed a framework for the detection of undocumented building constructions from official geodata, which includes nDSM, TrueDOP, and DFK. Moreover, with the aid of the tDSM, the proposed framework categorizes detected undocumented building constructions into three types: old undocumented buildings, new undocumented buildings, and undocumented story construction. This can contribute to the management of different construction cases.

Our framework is based on a CNN and decision fusion, and has shown greater potential for updating building models in geographic information systems than the two strategies used so far in the state of Bavaria, Germany.

We investigated the transferability issue and sampling strategies for building detection at large scale. In an unseen area, the model trained on diverse samples from multiple districts has better transferability than the model trained on data from only one district. However, in a local area where training samples are already available, collecting and training on local samples achieves performance comparable to that of the model trained on extensive samples from different districts. These practical strategies are beneficial to other large-scale object detection works that use remote sensing data.

Furthermore, the seamless map of undocumented building constructions generated in our research covers one-quarter of the state of Bavaria, Germany at a spatial resolution of 0.4 m, and is beneficial to efficient land resource management and sustainable urban development.