1. Introduction

Vegetation plays an important role in urban ecology, environment, and daily life. Urban vegetation maintains urban ecological balance, reduces the effects of urban heat islands, and improves the quality of the living environment [1,2,3,4,5,6,7,8]. In addition, it can produce social benefits [9], such as by reducing crime rates [10], improving social relationships [11,12], and boosting residential property values [13]. Accordingly, the study of urban vegetation is of great significance.

High-resolution remote sensing images are particularly suitable for urban vegetation type classification. Yu et al. [14] reported better classification accuracy with Digital Airborne Imagery System (DAIS) images than with medium-resolution images in detailed vegetation classification in Northern California. Zhang and Feng [15] reported an accuracy of 87.71% in classifying five urban vegetation types in Nanjing (Jiangsu province, China) using IKONOS images. Compared with medium-resolution satellite images, high-resolution images carry more detailed spatial information, which is instrumental in urban vegetation type classification.

Texture is the regular variation of pixel values in a digital image space. For vegetation images, textures are created by changes in the pattern, species, and density of vegetation, characteristics that are all closely related to vegetation types [16,17]. As a particularly important form of spatial information, textures have been extensively used to improve the accuracy of urban vegetation type classification based on high spatial-resolution remote sensing images [14,18]. The grey level co-occurrence matrix (GLCM) texture is the most widely used in vegetation classification. Yan et al. [19] used IKONOS images to extract urban grass information in Nanjing, Jiangsu Province, China, comparing the extraction accuracy of GLCM-Contrast, GLCM-Entropy, GLCM-Correlation, and GLCM-Angular-Second-Moment, and found the highest extraction accuracy, 90.56%, for GLCM-Contrast. Pu and Landry [20] used WorldView-2 and IKONOS images to classify urban forest tree species in Tampa, Florida, using six GLCM texture features with the LDA and CART classification methods. Urban forest trees were divided into seven types, with the classification accuracy of WorldView-2 (2 m) exceeding that of IKONOS images (4 m). The average highest classification accuracy of WorldView-2 was 67.22%; that of IKONOS was 53.67%.

Recently, it has been reported that the local binary pattern (LBP) is more efficient than GLCM in remote sensing classification [21,22]. LBP, which calculates the gray value difference between a center pixel and its neighbors, has been used in remote sensing image classification [23,24,25]. Song et al. [21] compared LBP and GLCM for the classification of four land-cover types using IKONOS images and found that the overall accuracy for LBP was 4.42% higher than for GLCM. Similarly, Vigneshl and Thyagharajan [22] reported better overall accuracy for LBP than for traditional GLCM. The completed local binary patterns (CLBP) algorithm, an enhanced version of LBP [26], can describe the texture information of remote sensing images more fully than LBP. Li et al. [27] investigated land-use classification using CLBP textures, achieving an overall accuracy of 93.3%. Wang et al. [28] employed CLBP textures to classify coastal wetland vegetation and achieved an overall accuracy of 85.38%. However, because CLBP textures have not been widely used to classify urban vegetation types, their potential for urban vegetation type classification needs further exploration.

Window size is an important parameter for CLBP textures and is closely related to the accuracy of image segmentation and classification [29,30]. Numerous studies have shown that no single window size can describe the landscape characteristics of all remotely sensed objects [31,32,33,34,35]. To achieve higher classification accuracy, texture features of different objects should be analyzed using different window sizes. For example, studies of GLCM textures have found that window size is an important parameter in vegetation classification [36,37,38]. Yan et al. [19] used GLCM textures with 3 × 3 and 5 × 5 window sizes to improve grass extraction accuracy in Nanjing (Jiangsu province, China) and found that the 3 × 3 window size produced better classification results. Fu and Lin [39] extracted the loquat using GLCM textures with 3 × 3, 5 × 5, 7 × 7, 9 × 9, and 11 × 11 windows and found that the 7 × 7 window size produced the highest classification accuracy, 86.67%. They concluded that different window sizes have different effects on the classification of different vegetation types.

Compared with GLCM, the optimal window size of CLBP textures has rarely been discussed for classification of urban vegetation types using high spatial-resolution remote sensing images. This study explores the impact of window sizes of CLBP texture extraction on classification of urban vegetation types.

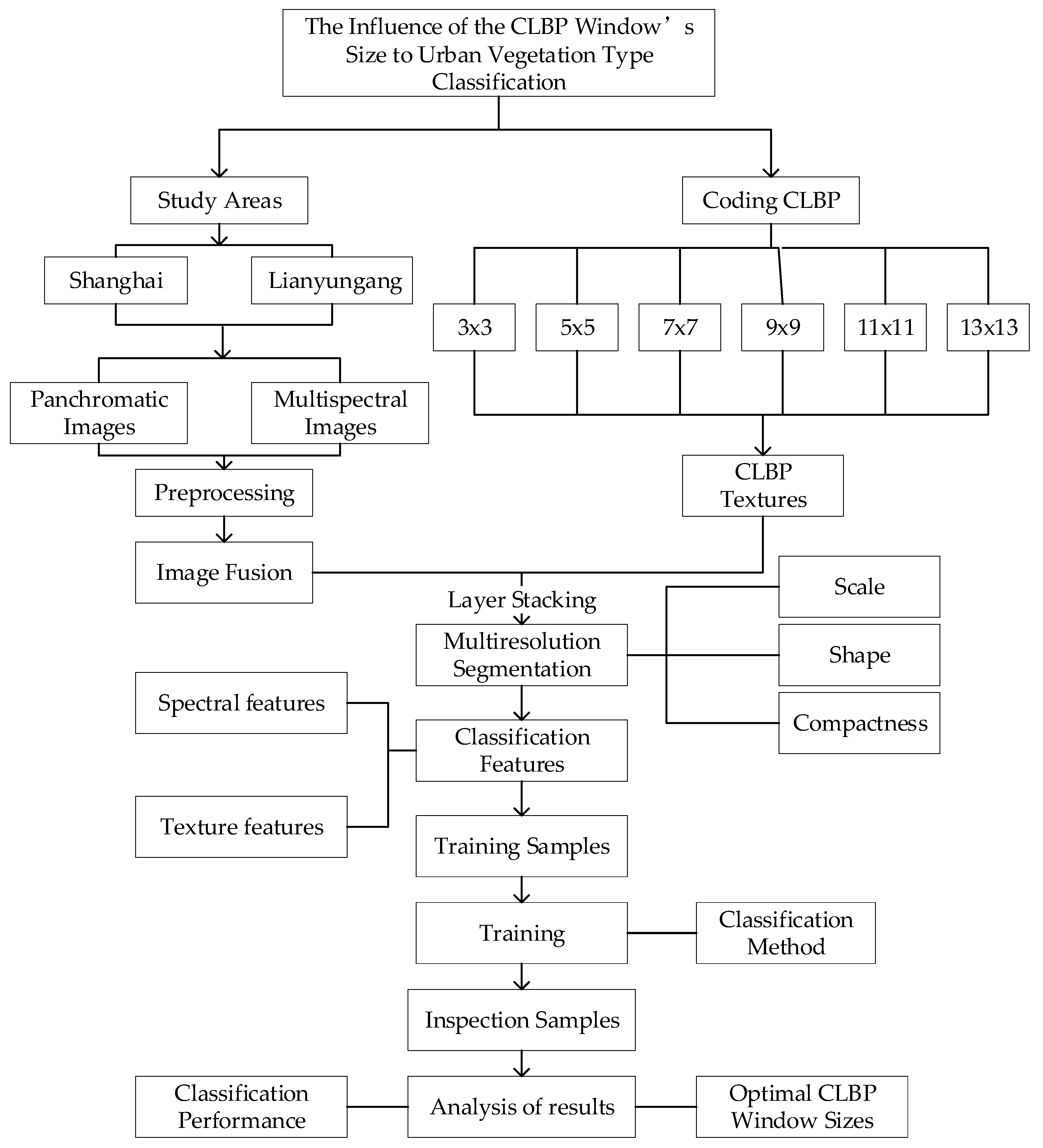

The main experimental process of this paper is shown in Figure 1. It is divided into remote sensing image preprocessing, CLBP window code writing, image segmentation, classification, and accuracy analysis.

In summary, urban vegetation performs important functions in the city, and high-resolution remote sensing images are well suited to the classification of urban vegetation types. Texture features play an important role in improving the classification effect. As a texture feature, CLBP has great potential in the classification of urban vegetation types, and it is of great research value to explore the influence of CLBP textures with different window sizes on that classification.

2. Study Area and Data

2.1. Study Area

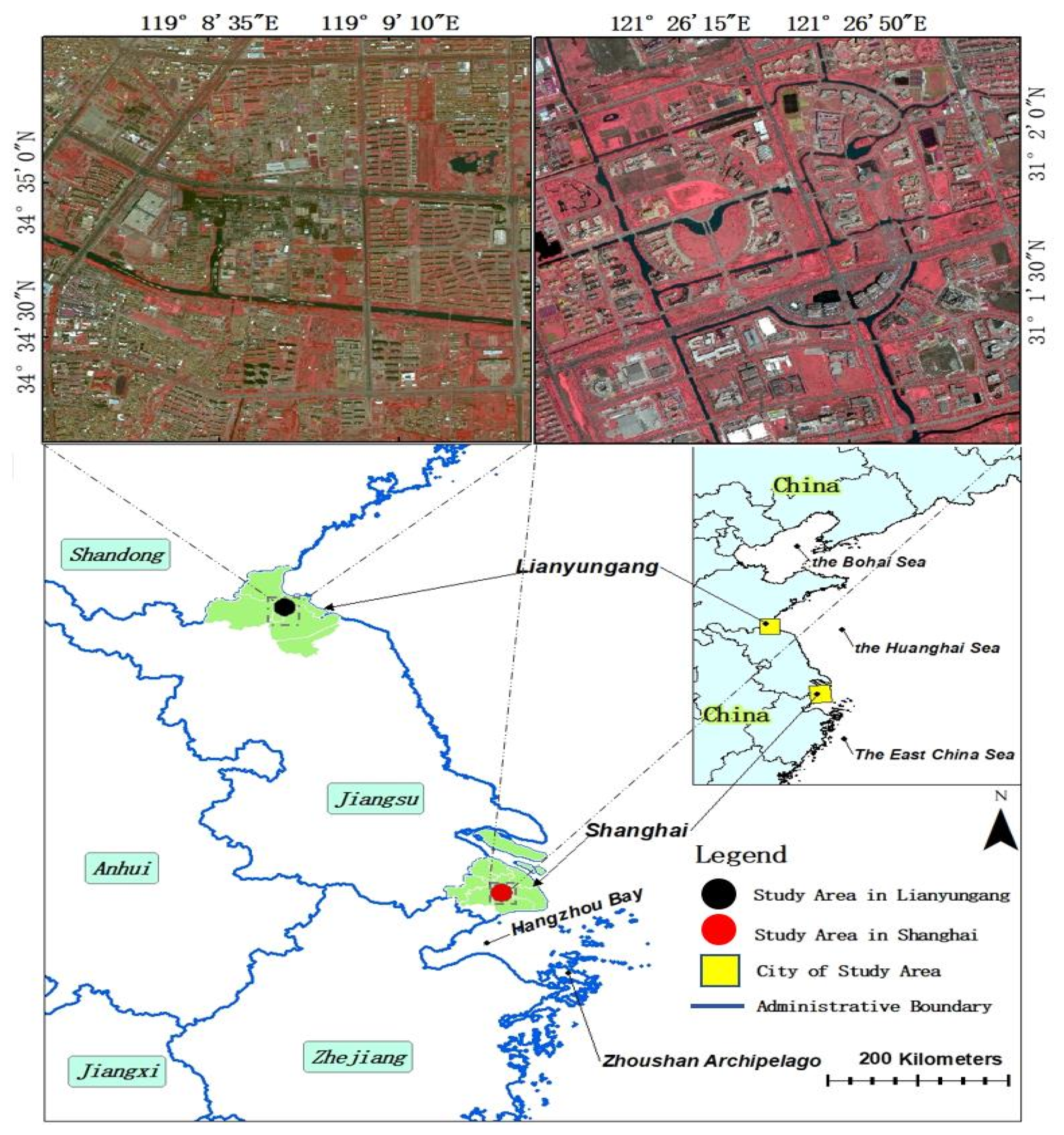

Shanghai, one of China’s commercial capitals, features a subtropical maritime monsoon climate, abundant rainfall, advanced urban planning, and intelligent technology development, which have led to flourishing urban vegetation and a reasonable scale layout. Urban vegetation construction in Shanghai is at the forefront in China.

Lianyungang, a coastal city in eastern China, in the northeast of Jiangsu Province, in the Huaihe River basin, has the climate characteristics of China’s south and north. Its economic level and population are representative of China as a whole.

The study area in Shanghai was Shanghai Jiao Tong University (Minhang Campus), with an area of 8.8 km², at 121°25′30″–121°27′35″E and 31°0′55″–31°2′50″N (see Figure 2). The vegetation is mainly evergreen broad-leaved forest mixed with artificial shrubs and grass. Vegetation distribution and planning are reasonable, and the vegetation grows vigorously. The study area in Lianyungang is near the Haizhou Municipal Government, covering an area of 9.38 km², at 119°7′55″–119°9′55″E and 34°34′5″–34°35′35″N (see Figure 2). The vegetation types in this area are similar to those in Shanghai, whereas vegetation growth, planning, and management are poorer than in Shanghai. The urban vegetation planning and growth in Lianyungang are representative of China.

2.2. Data

Both study areas used images from WorldView-2, acquired from April to May in 2015, without clouds or deformation; the terrain of the study areas is flat. Accordingly, the images are suitable for remote sensing vegetation classification. The images of the study areas in Shanghai and Lianyungang are composed of multispectral bands at 2 m resolution and panchromatic bands at 0.5 m resolution. Table 1 gives relevant information about the images (https://dg-cms-uploads-production.s3.amazonaws.com/uploads/document/file/98/WorldView2-DS-WV2-rev2.pdf) and Table 2 shows the projection coordinates and geographic coordinate system of the images.

In data preprocessing, geometric correction and atmospheric correction were first conducted for each study area. Subsequently, the Gram-Schmidt (GS) method was used to fuse four multispectral bands and one panchromatic band. The resulting image had 0.5 m spatial resolution and multispectral information. CLBP texture features were extracted from the sharpened images, and urban vegetation in the two study areas was classified using spectral information and textures.

2.3. Image Segmentation

Image segmentation models spatial relationships and dependencies by dividing a whole image into continuous and spatially independent objects [40]. The essence of segmentation is the clustering of pixels. In continuous iterative steps, similar pixels are merged into small objects that are themselves merged into larger objects [41], solving the salt-and-pepper problem [13]. Image segmentation makes full use of images’ spatial information [17] and has a high classification efficiency [40]. In this study, image objects obtained by image segmentation are used to classify urban vegetation.

The image was segmented using a multiresolution segmentation algorithm, a bottom-up region-merging technique [39]. Such approaches have three main parameters: scale, shape, and compactness [14]. The scale parameter sets the maximum allowed difference (heterogeneity) within an image object and thereby determines the size of the segmented objects. The shape parameter determines the degree of difference in the shape of the segmented objects, and the compactness parameter determines their degree of fragmentation.

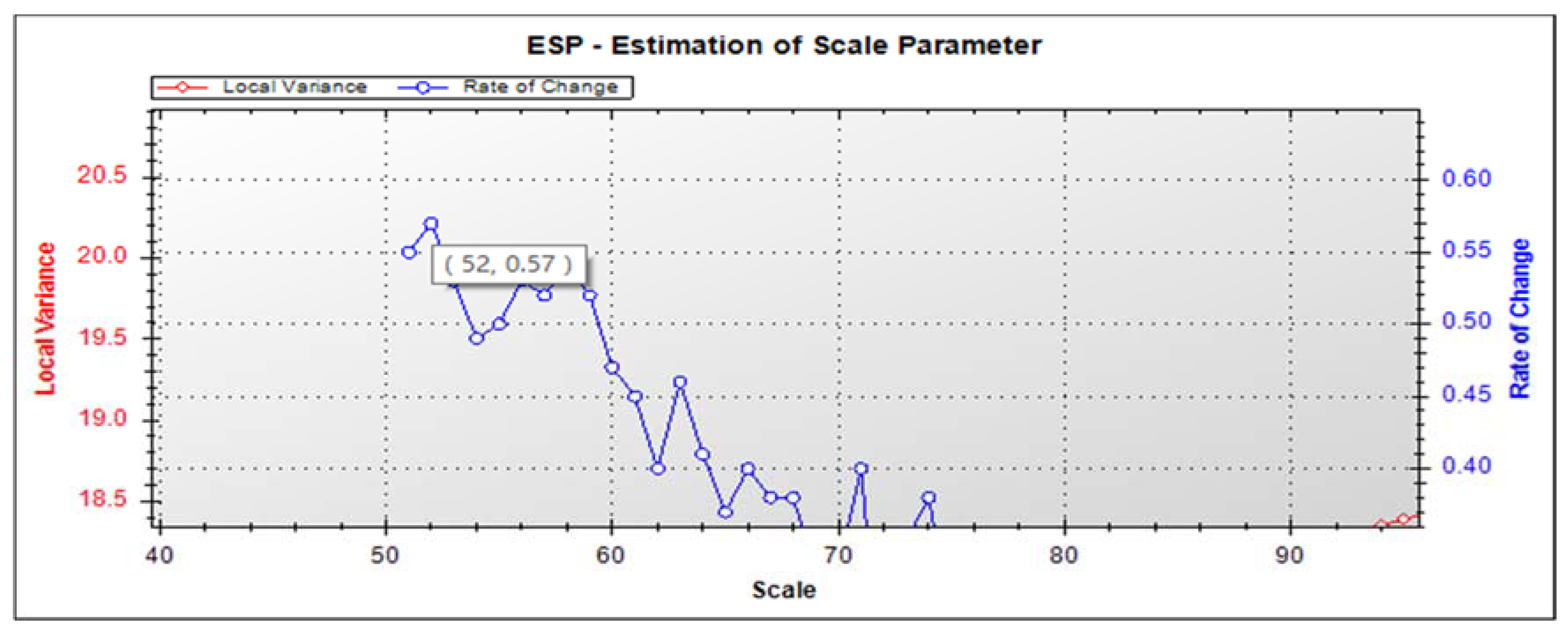

In this study, image segmentation had two steps: first segmentation of the entire image, then segmentation of the vegetation information. After repeated trials, the shape parameter was set to 0.2 and the compactness parameter to 0.6 in the first step of segmentation. After the shape and compactness parameters were determined, the ESP (estimation of scale parameter) tool was used to help obtain the optimal segmentation scale, building on the idea of local variance of object heterogeneity within a scene. Peaks in the rate of change (ROC) graph indicate the scales at which the imagery is segmented most appropriately [42].
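The peak-picking idea behind the ROC graph can be sketched as follows. This is a minimal illustration, not the ESP tool itself: it assumes the commonly cited definition ROC = (LV_L − LV_(L−1)) / LV_(L−1) × 100, where LV_L is the local variance at scale level L, and the function names and local-variance values are hypothetical.

```python
def rate_of_change(local_variance):
    """Percent change of local variance between consecutive scale levels."""
    roc = []
    for prev, curr in zip(local_variance, local_variance[1:]):
        roc.append((curr - prev) / prev * 100.0)
    return roc


def roc_peaks(scales, roc):
    """Scales whose ROC value is a local maximum (candidate segmentation scales).

    roc[i] belongs to scales[i + 1], because the first scale has no predecessor.
    """
    peaks = []
    for i in range(1, len(roc) - 1):
        if roc[i] > roc[i - 1] and roc[i] > roc[i + 1]:
            peaks.append(scales[i + 1])
    return peaks


# Hypothetical local-variance values for scale parameters 10, 20, ..., 70.
scales = [10, 20, 30, 40, 50, 60, 70]
local_variance = [100.0, 110.0, 115.5, 117.81, 129.591, 132.18282, 133.5046482]

roc = rate_of_change(local_variance)
candidate_scales = roc_peaks(scales, roc)
print(candidate_scales)
```

Candidate scales found this way are only a starting point; as in the text, they would still be checked by manual comparison of segmentation results.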

The most suitable ESP scale for distinguishing vegetation in detail was 52 (Figure 3). Based on the ESP result, a manual experiment (Table 3) was conducted, which identified 50 as the optimal scale parameter for the first segmentation.

The second segmentation builds on the first. The optimal scale parameter in the second step was 80, while the shape and compactness parameters were 0.2 and 0.4. In the first segmentation, vegetation was mainly separated from other ground objects, so the segmentation scale was kept relatively small to obtain more detailed vegetation information. In the second segmentation, too small a scale would make the vegetation prone to over-segmentation. Therefore, based on ESP and manual testing, the optimal scale was 50 for the first segmentation and 80 for the second.

2.4. Feature Extraction

For this study, 19 features (seven spectral features and 12 CLBP texture features) were used in each vegetation classification experiment. Spectral features included the spectral values of four bands (red, blue, green, and NIR), brightness, max.diff, and NDVI. Texture features included the CLBP_M, CLBP_S, and CLBP_C texture features of each band (red, blue, green, and NIR), extracted at six different window sizes. Table 4 gives a detailed description of the features.

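$$\bar{c}(v) = \frac{1}{w^B} \sum_{k=1}^{K} w_k^B \, \bar{c}_k(v), \qquad w^B = \sum_{k=1}^{K} w_k^B \tag{1}$$

In Formula (1), $w_k^B$ is the brightness weight of layer $k$, $K$ is the number of layers, and $\bar{c}_k(v)$ is the mean intensity of layer $k$ of image object $v$ (Trimble eCognition® Developer 9.0.1 User Guide, https://geospatial.trimble.com/ecognition-trial).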

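$$\text{max.diff} = \frac{\max_{i,j \in K_B} \left| \bar{c}_i(v) - \bar{c}_j(v) \right|}{\bar{c}(v)} \tag{2}$$

In Formula (2), $i$ and $j$ are image layers, $\bar{c}(v)$ is the brightness of image object $v$, $\bar{c}_i(v)$ is the mean intensity of layer $i$ of image object $v$, and $\bar{c}_j(v)$ is the mean intensity of layer $j$ of image object $v$. $K_B$ are layers of positive brightness weight with $K_B = \{k \in K : w_k = 1\}$, where $w_k$ is the layer weight (Trimble eCognition® Developer 9.0.1 User Guide, https://geospatial.trimble.com/ecognition-trial).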
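The object-level spectral features can be illustrated with a short sketch. It assumes the brightness and max.diff definitions described above (a weighted mean of layer means, and the largest pairwise difference of layer means divided by brightness) and the standard NDVI definition (NIR − red) / (NIR + red); the function names and the per-object mean values are hypothetical.

```python
def brightness(layer_means, weights):
    """Weighted mean of the per-layer mean intensities of one image object."""
    return sum(w * c for w, c in zip(weights, layer_means)) / sum(weights)


def max_diff(layer_means, weights):
    """Largest pairwise difference of layer means, divided by brightness.

    Only layers with a positive brightness weight take part; the largest
    pairwise absolute difference equals max minus min of those layer means.
    """
    active = [c for c, w in zip(layer_means, weights) if w > 0]
    return (max(active) - min(active)) / brightness(layer_means, weights)


def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red mean values."""
    return (nir - red) / (nir + red)


# Hypothetical mean intensities of one object: blue, green, red, NIR.
means = [60.0, 80.0, 70.0, 190.0]
weights = [1, 1, 1, 1]

obj_brightness = brightness(means, weights)
obj_max_diff = max_diff(means, weights)
obj_ndvi = ndvi(nir=means[3], red=means[2])
print(obj_brightness, obj_max_diff, obj_ndvi)
```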

CLBP textures were used as an improvement on and optimization of LBP, a nonparametric local texture description algorithm with a simple principle, low computational complexity, and illumination invariance [25].

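$$LBP_{P,R} = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^p, \qquad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{3}$$

In Formula (3), $g_c$ is the gray value of the central pixel in the window, $g_p$ is the gray value of the neighbors, $P$ is the number of neighbor pixels in the window, and $R$ is the size of the window [26].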
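The basic LBP coding can be sketched for the simplest case, a 3 × 3 window with eight neighbors. This is an illustrative NumPy sketch rather than the code used in the study; the function name, the neighbor ordering, and the sample image are assumptions.

```python
import numpy as np

# Offsets (row, col) of the 8 neighbours of a 3 x 3 window, in a fixed order.
# The ordering is an arbitrary choice; it only changes which bit each
# neighbour contributes to.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_3x3(image):
    """LBP code of every interior pixel of a 2-D gray-level image."""
    img = np.asarray(image, dtype=np.int64)
    rows, cols = img.shape
    center = img[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for p, (dr, dc) in enumerate(OFFSETS):
        # Shifted view that aligns neighbour p with each center pixel.
        neighbour = img[1 + dr:rows - 1 + dr, 1 + dc:cols - 1 + dc]
        # Bit p is 1 when the neighbour is at least as bright as the center.
        codes += (neighbour >= center).astype(np.int64) << p
    return codes


sample = [[5, 9, 1],
          [4, 6, 7],
          [2, 3, 8]]
lbp_codes = lbp_3x3(sample)
print(lbp_codes)
```

For the sample window, the neighbors 9, 7, and 8 are not smaller than the center value 6, so bits 1, 3, and 4 are set and the code is 2 + 8 + 16 = 26.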

As the original LBP does not make full use of image information, Guo et al. proposed CLBP [43], which mainly includes a sign component (CLBP_S), a magnitude component (CLBP_M), and a binary central pixel value component (CLBP_C). Each neighborhood pixel value is compared with the central pixel value to obtain the corresponding sign value for CLBP_S and magnitude value for CLBP_M. CLBP_C is obtained by comparing the central pixel value with the global average pixel value. On the basis of CLBP (CLBP_S, CLBP_M, and CLBP_C), window sizes were changed to study the classification of urban vegetation types.

CLBP textures at different window sizes were coded following the coding mode of LBP. In CLBP_S, the value 1 was encoded as 1 and the value −1 as 0, so CLBP_S is the original LBP shown in Formula (3); the value of CLBP_M was encoded by 1/0 as shown in Formula (5); and CLBP_C is a 1/0 binary grayscale image.

$$s_p = \operatorname{sign}(g_p - g_c) = \begin{cases} 1, & g_p - g_c \ge 0 \\ -1, & g_p - g_c < 0 \end{cases} \tag{4}$$

Formula (4) shows the encoding process of CLBP_S, in which $g_p$ is the neighborhood pixel value of the window, $g_c$ is the pixel value of the central pixel of the window, and $s_p$ is the sign of the difference between the neighborhood pixel value and the central pixel value [26]. The value 1 is positive and −1 is negative. In the encoding, the positive value is coded as 1 and the negative value as 0, which gives the value of CLBP_S.

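$$CLBP\_M_{P,R} = \sum_{p=0}^{P-1} t(m_p, c)\, 2^p, \qquad t(x, c) = \begin{cases} 1, & x \ge c \\ 0, & x < c \end{cases} \tag{5}$$

In Formula (5), $c$ is the mean of $m_p$ for the whole image [26], and $m_p = |g_p - g_c|$ is the absolute value of the difference between the neighborhood pixel value and the central pixel value of the window. The mean is taken over the center pixels of the image. The value of CLBP_M is obtained by encoding each comparison as 1/0 and converting the result to a decimal value.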

In Formula (6), $c_I$ is the mean of the pixel values of the whole image [26]. When $g_c \ge c_I$, the value of CLBP_C is 1. When $g_c < c_I$, the value of CLBP_C is 0. As the CLBP window size increases, the locality of the CLBP information is gradually lost; in the theoretical limit, the window extends to the entire image.
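The three CLBP components can be sketched together for a square window. The source does not state how neighbors are sampled in larger square windows, so this sketch makes a loud assumption: for a w × w window it uses the eight border pixels at the corners and edge midpoints (half-width r = (w − 1) / 2). The function name `clbp` and the sample image are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def clbp(image, window=3):
    """Return (CLBP_S, CLBP_M, CLBP_C) maps for the interior pixels of image."""
    img = np.asarray(image, dtype=np.float64)
    r = (window - 1) // 2  # half-width of the square window
    rows, cols = img.shape
    # ASSUMPTION: 8 neighbours on the window border (corners and edge midpoints).
    offsets = [(-r, -r), (-r, 0), (-r, r), (0, r), (r, r), (r, 0), (r, -r), (0, -r)]
    center = img[r:rows - r, r:cols - r]
    # d_p = g_p - g_c for every neighbour p, stacked to shape (8, H, W).
    diffs = np.stack([
        img[r + dr:rows - r + dr, r + dc:cols - r + dc] - center
        for dr, dc in offsets
    ])
    magnitude = np.abs(diffs)   # m_p = |g_p - g_c|
    c = magnitude.mean()        # threshold: mean of m_p over the whole image
    weights = (2 ** np.arange(len(offsets))).reshape(-1, 1, 1)
    clbp_s = ((diffs >= 0) * weights).sum(axis=0)      # sign component
    clbp_m = ((magnitude >= c) * weights).sum(axis=0)  # magnitude component
    clbp_c = (center >= img.mean()).astype(np.int64)   # center vs. image mean
    return clbp_s, clbp_m, clbp_c


sample = [[5, 9, 1],
          [4, 6, 7],
          [2, 3, 8]]
s_map, m_map, c_map = clbp(sample, window=3)
print(s_map, m_map, c_map)
```

Under this sampling assumption, changing `window` from 3 to 13 moves the neighbors farther from the center, which mirrors the loss of locality described above.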

2.5. Vegetation Type Determination and Sample Selection

The vegetation types of the study areas were divided into three single types and three mixed types: grass, shrub, arbor, shrub-grass, arbor-grass, and arbor-shrub-grass. Shrub-grass, arbor-grass, and arbor-shrub-grass are abbreviated as SG, AG, and ASG, respectively. These types were decided by urban vegetation characteristics and the results of multiresolution segmentation. Table 5 shows the vegetation types, the principles for their selection, and field photographs of samples of each vegetation type. Table 6 shows typical examples of each vegetation type under different features (spectrum, CLBP_M, CLBP_S, CLBP_C).

The vegetation samples were divided into two parts: training samples and inspection samples. In total, 6350 vegetation samples were used: 3773 samples from Shanghai and 2577 from Lianyungang, comprising 2688 training samples and 3673 inspection samples. Table 7 shows sample selection.

The experiment in this paper could not use a unified training sample size, because CLBP textures with different window sizes were added to the multiscale segmentation process. Different window sizes of CLBP textures lead to different segmentation results under the same segmentation scale, which makes vegetation types more clearly segmented and helps classification.

2.6. Classification Method

The random forest (RF) algorithm has been applied in many fields of remote sensing and has achieved good results [44,45,46,47]. It performs random selection of classification features, with a random combination of features evaluated at the nodes of each decision tree. The multiple decision trees that form the forest evaluate the classification results of the feature combinations, and the output class is selected from their combined results [48]. Compared with support vector machines (SVMs), RF has fewer parameters. It shows the relative importance of different features in classification [49] and uses random trees to estimate internal error during the training stage. Wang et al. [50] found that the RF method had a higher classification accuracy than the SVM and K-nearest neighbors (KNN) methods in coastal wetland classification. RF not only improves the classification accuracy of remote sensing images but is also insensitive to noise and over-training [51,52]. After repeated trials, max-depth, max-tree-number, and forest-accuracy were set to 100, 100, and 0.01, respectively.
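A rough sketch of such a classifier is given below using scikit-learn, which is a swapped-in library and not necessarily the software used in the study. It assumes that max-depth and max-tree-number correspond to `max_depth` and `n_estimators`; the forest-accuracy setting has no direct scikit-learn counterpart and is omitted. The 19-column feature table is synthetic and only stands in for the spectral and CLBP features.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for an object-by-feature table (19 features per object).
rng = np.random.default_rng(0)
n_per_class, n_features = 60, 19
X = np.vstack([
    rng.normal(0.0, 1.0, (n_per_class, n_features)),  # hypothetical class 0
    rng.normal(1.5, 1.0, (n_per_class, n_features)),  # hypothetical class 1
])
y = np.array([0] * n_per_class + [1] * n_per_class)

# ASSUMPTION: max-tree-number -> n_estimators, max-depth -> max_depth.
model = RandomForestClassifier(
    n_estimators=100,
    max_depth=100,
    oob_score=True,   # internal (out-of-bag) error estimate during training
    random_state=0,
)
model.fit(X, y)

importances = model.feature_importances_  # relative importance of each feature
oob_accuracy = model.oob_score_
print(oob_accuracy)
```

The out-of-bag score plays the role of the internal error estimate mentioned above, and `feature_importances_` gives the relative importance of the features.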

3. Classification Results

Classification of urban vegetation types was carried out in Shanghai and Lianyungang. Vegetation planning and growth in Shanghai are at an advanced level in China, whereas in Lianyungang they are at an average level. Combining research in Shanghai and Lianyungang can show whether CLBP window textures are stable and universal.

To select optimal CLBP window texture sizes for the classification of urban vegetation types, the OA (overall classification accuracy) and KIA (kappa coefficient) of the confusion matrix were first used to analyze the overall performance of each CLBP window texture in extracting urban vegetation. Second, producer accuracy (PA) and user accuracy (UA) were used to select the optimal CLBP window textures for different urban vegetation types. PA is the ratio of the number of objects correctly classified as Class A to the actual total number of Class A objects in the whole study area (one column of the confusion matrix). UA is the ratio of the number of objects correctly classified as Class A to the total number of objects that the classifier assigned to Class A in the whole study area (one row of the confusion matrix). Finally, the results of the two study areas were compared and summarized.
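The four accuracy measures can be computed from a confusion matrix as sketched below. The sketch follows the convention described above (rows are classified labels, columns are reference labels) and uses the standard kappa formula; the function name and the example matrix are hypothetical.

```python
import numpy as np


def accuracy_metrics(confusion):
    """Return (OA, kappa, PA per class, UA per class).

    Rows hold the classifier's labels and columns hold the reference labels.
    """
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    correct = np.diag(cm)
    classified_totals = cm.sum(axis=1)  # row sums
    reference_totals = cm.sum(axis=0)   # column sums
    oa = correct.sum() / total
    # Agreement expected by chance, used by the kappa coefficient.
    expected = (classified_totals * reference_totals).sum() / total ** 2
    kappa = (oa - expected) / (1.0 - expected)
    pa = correct / reference_totals     # producer accuracy (per column)
    ua = correct / classified_totals    # user accuracy (per row)
    return oa, kappa, pa, ua


# Hypothetical two-class confusion matrix.
example = [[45, 5],
           [15, 35]]
oa, kappa, pa, ua = accuracy_metrics(example)
print(oa, kappa, pa, ua)
```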

3.1. Optimal CLBP Window Texture Size Analysis in Shanghai

Shanghai is one of China’s economic centers and has a climate suitable for living. Its vegetation planning and growth are among the best in China. Using Shanghai as a research area allows testing of the feasibility of CLBP window textures for classification of urban vegetation types.

3.1.1. Classification Performance of Different CLBP Window Textures

By analyzing the OA and KIA of the confusion matrix, the overall classification performance of CLBP window textures of different sizes for the urban vegetation types in Shanghai can be assessed. Table 8 shows the OA and KIA of urban vegetation type classification using different CLBP window textures. In Table 8, the “spectrum” column indicates that only spectral information is used to classify urban vegetation types, whereas the other columns indicate that spectral information and different CLBP window textures are used for classification.

As Table 8 shows, all classification results based on a combination of spectral information and CLBP window textures achieved higher accuracy than when using only spectral information. Among the six selected CLBP window texture sizes from 3 × 3 to 13 × 13, all CLBP texture features except 13 × 13 showed markedly improved classification results. The 3 × 3 CLBP window texture size achieved the best overall accuracy, reaching 66.17%, which is 17.28% higher than that achieved using spectral information alone. The best KIA, 57.67%, was obtained with the 9 × 9 window size, an improvement of 19.41% over spectral information alone.

3.1.2. Selection of Optimal CLBP Window Sizes in Shanghai

The optimal CLBP window textures for different urban vegetation types are selected mainly from the PA (Producer Accuracy) and UA (User Accuracy) in the confusion matrix. Table 9 shows the UA and PA of urban vegetation type classification in Shanghai.

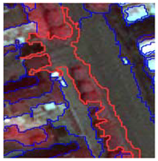

Figure 4a–g shows the classification results of urban vegetation types using only spectral information and combining spectral information with 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, and 13 × 13 CLBP window textures in Shanghai.

The optimal CLBP window textures in Shanghai can be obtained from the PA and UA in Table 9 and the classification maps in Figure 4. The optimal window size for grass and shrub was 3 × 3, with average values of PA and UA of 74.10% and 75.60%, respectively. The optimal window size for arbor was 11 × 11, with an average value of PA and UA of 70.48%. The optimal window size for SG and AG was 9 × 9, with average values of PA and UA of 60.84% and 73.00%, respectively. The optimal window size for ASG was 7 × 7, with an average value of PA and UA of 75.06%.

3.2. Optimal CLBP Window Texture Sizes Analysis in Lianyungang

The Lianyungang area, in the Huaihe River region, has climate characteristics of both North and South China. Its vegetation planning and growth are representative of China overall. Research into classification of urban vegetation types in Lianyungang can verify the universality of CLBP window textures.

3.2.1. Classification Performance of Different CLBP Window Textures

Table 10 shows the overall performance of urban vegetation type classification with different sizes of CLBP window textures in Lianyungang.

As Table 10 shows, in the Lianyungang study area the 9 × 9 window size achieved the highest overall classification accuracy. The results also show that the overall accuracy obtained using only spectral information is lower than that obtained using spectral information together with CLBP window textures. Adding the 9 × 9 CLBP window texture to the spectral information improved OA by 7.28% and KIA by 8.99%.

3.2.2. Selection of Optimal CLBP Window Sizes in Lianyungang

Table 11 shows the PA and UA of different CLBP window textures under different vegetation types in Lianyungang.

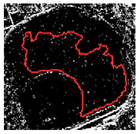

Figure 5a–g shows the classification results of urban vegetation types using only spectral information and combining spectral information with 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, and 13 × 13 CLBP window textures in Lianyungang.

Figure 5 and Table 11 together show that the optimal window size for grass and shrub in Lianyungang was 3 × 3, with average values of PA and UA of 75.33% and 74.15%, respectively. The optimal window size for arbor was 11 × 11, with an average value of PA and UA of 75.10%. The optimal window size for SG and AG was 9 × 9, with average values of PA and UA of 65.70% and 66.13%, respectively. The optimal window size for ASG was 7 × 7, with an average value of PA and UA of 73.04%.

3.3. Summary of Research Results from Shanghai and Lianyungang

Combining the results of urban vegetation type classification from Figure 4 and Figure 5 with the overall classification accuracy from Table 8 and Table 10 shows three things: vegetation planning and growth in Shanghai are better than in Lianyungang; combining spectral information with CLBP window textures gives more accurate classification than using spectral information alone; and the 9 × 9 window gave the best overall classification effect in the two research areas. Table 9 and Table 11 indicate that single vegetation has better separability than mixed vegetation, with the overall classification accuracy of single vegetation higher than that of mixed vegetation.

Table 12 summarizes the experiment and the optimal window sizes for the classification of vegetation types in the two study areas. The optimal window size was 3 × 3 for grass and shrub, 11 × 11 for arbor, 9 × 9 for SG and AG, and 7 × 7 for ASG. The experimental results therefore indicate that the optimal window had good stability and universality when CLBP window textures were used for urban vegetation type classification.

Selection of the optimal CLBP window texture for an urban vegetation type is related to the characteristics of the vegetation texture. Grass and shrub have fine textures, so the optimal window is 3 × 3, whereas the texture of arbor is very rough, giving an optimal window of 11 × 11. For mixed vegetation, texture roughness is composed of two or three kinds of roughness ranging from grass to arbor, giving an optimal window between 3 × 3 and 11 × 11. It was also found that the optimal CLBP window texture had a limit value: Table 12 shows that arbor, which has the coarsest texture, has an optimal window size of 11 × 11 rather than 13 × 13.

4. Discussion

For urban vegetation type classification, high-resolution remote sensing images can provide more vegetation details. WorldView satellite images and other high-resolution remote sensing images are suitable for classification of urban vegetation types. Compared with the use of only spectral information, combining spectral information with CLBP texture information can improve the accuracy of urban vegetation classification by up to 7.28%. In this paper, different sizes of CLBP window texture are discussed to identify the optimal CLBP window for different types of urban vegetation. The results show that selection of the optimal window is affected by plant size and vegetation type. The size of the CLBP window texture strongly affects the accuracy of urban vegetation type classification, so optimal windows vary among vegetation types.

Among other studies, Wang et al. [28] used CLBP window textures to study classification of coastal vegetation types. The optimal window texture was CLBP$_{24,3}$ (circular window), and the accuracy of the CLBP$_{24,3}$ window texture was higher than for GLCM. Optimal windows were selected based only on separability, and the impact of CLBP window texture sizes on the accuracy of vegetation type classification was not discussed. Notably, the complexity of the coastal vegetation types studied was much lower than that of urban vegetation types. In GLCM window texture research, Fu and Lim [39] studied window texture sizes of 3 × 3, 5 × 5, 7 × 7, 9 × 9, and 11 × 11 and found that the 7 × 7 GLCM window size produced the highest accuracy in litchi tree extraction. In our experiment, by contrast, the optimal CLBP window size for arbor was 11 × 11. Because the texture features differ and litchi trees are not urban arbors, the conclusions differ. Similarly, Yan et al. [19] studied window texture size for GLCM and found that a 3 × 3 window size extracted grass in Nanjing with the greatest accuracy. The optimal GLCM window size obtained for grass was 3 × 3, consistent with the CLBP window texture effect in our experiment, as both studies concerned urban grass. The texture characteristics of vegetation types are thus key to determining the optimal texture window size. Woźniak et al. [53] studied SAR image classification using 3 × 3, 5 × 5, 7 × 7, and 9 × 9 decomposition windows and examined their effect on land use classification accuracy. They reported that for classification of uniform features, the 3 × 3 window size can be more accurate: as the window becomes larger, classification details are lost. This conclusion is similar to the results of our experiment, indicating that texture characteristics determine window size to a degree.

As early as 1992, Woodcock et al. [54] proposed a corresponding window texture size for application to different image feature types. Before 1995, it was concluded that no single window size could be applied to all types of remote sensing objects [55,56], and that it is inappropriate to use arbitrary window sizes for analysis of remote sensing images. The results of this paper support these earlier views, while quantitatively clarifying the impact of CLBP window texture sizes on classification accuracy for urban vegetation types. In the body of research on remote sensing images, little work has studied the size of the texture window alone. This paper should prompt scholars to study the impact of window texture sizes on remote sensing image analysis [55,56].

In future research, the CLBP window will be changed from a square window to a circular one. Combining CLBP textures of different window sizes to classify urban vegetation types will allow multiscale window textures to provide more spatial information about vegetation types, with the aim of improving the accuracy of CLBP window texture classification of urban vegetation types. CLBP window textures of different sizes will also be used in further remote sensing research.