1. Introduction

With advances in society and technology, measuring and monitoring human activity at sea is a topic of growing significance and interest. Ships play an important role in both military and civilian applications, such as maritime safety, marine traffic, border control, fisheries management, and marine transport. The position and behavior of ships are also the cornerstone of maritime domain awareness (MDA) [1], which has been defined as the effective understanding of any activity associated with the maritime domain. Better ship detection performance can therefore greatly promote the harmonious development of humans and the ocean.

The purpose of object detection is to find the targets that attract the most attention in human vision and then determine their location and category; it is one of the core problems in computer vision (CV). Because objects of the same category share features such as background, texture, and shape, the task is achievable. However, it remains challenging because of the differences between individual targets.

In an age of rapid development in deep learning (DL), it is possible to design and implement an efficient object detection architecture for ship targets at sea. The essence of DL is to use the computational power of computers to extract and analyze the intrinsic patterns and feature representations of data, which resembles the human learning process. Indeed, progress in DL has revolutionized CV and machine learning (ML) [2] and has turned object detection into a truly data-driven, human-assisted data analysis method, in which the computer automatically learns sample features from the data rather than matching targets with features hand-crafted by humans [3,4].

DL includes supervised learning, unsupervised learning, semi-supervised learning, etc. [5], which are different ways of handling the data. In object detection, the most common approach is supervised learning on sample data labeled with categories and coordinates, which can yield quite accurate results given a large amount of data and sufficient training. With its intelligent, automatic, and effective feature extraction and its more accurate detection results, DL has broken through the bottleneck of traditional digital image processing algorithms and has been widely used in object detection.

Specifically, the most important step in DL is the construction of the neural network [6], which is inspired by the animal visual system and aims to transform the original signal of an object into a high-dimensional space for classification by building a multi-level nonlinear processing mechanism. Among the various artificial neural networks, the deep convolutional neural network (DCNN) [7] is the most commonly used in image processing, including object detection, because the convolution kernel takes the relationship between adjacent pixels fully into account, which fits the way information is distributed in an image. It therefore extracts object features from the image more efficiently, and it achieves more accurate detection results when combined with activation functions, pooling layers, and fully connected layers [5]. With the application of the DCNN, tasks such as object detection [8], semantic segmentation [9], and scene classification [10] have become more efficient and valuable.

With the continuous optimization of DCNN approaches, object detection has entered a period of rapid development. In 2013, Ross Girshick published the R-CNN algorithm [11], which introduced the idea of running detection on each image region obtained by selective search [12] and greatly improved accuracy and efficiency compared to traditional ML methods. Two modified methods followed. The first is Fast R-CNN [13], which applies the convolution to the whole image rather than to each region as in R-CNN and performs much better than R-CNN. The second is Faster R-CNN [14], which adds a region proposal network (RPN) to Fast R-CNN and abandons selective search; this reduces the number of candidate regions and makes object detection simpler, more accurate, and more efficient. This series of algorithms originating from R-CNN is known as the two-stage method, which represents the idea of region-based detection. Many excellent two-stage algorithms have since been developed, such as the feature pyramid network (FPN) [15], Mask R-CNN [16], and SNIP [17]. More specifically, FPN combines low-level and high-level features as the base features and then uses an RPN to complete detection, which effectively improves the accuracy of small-scale object detection. Mask R-CNN additionally produces a segmentation mask for each detected object. SNIP uses an image pyramid to bring objects to a similar scale, which improves the accuracy of multi-scale object detection.

The publication of Faster R-CNN prompted the exploration of methods outside the two-stage mechanism. For example, the single shot multibox detector (SSD), proposed by Liu et al. in 2016 [18], uses a single DCNN to detect objects and discretizes the output bounding boxes into a set of default boxes over different aspect ratios and scales for each feature map location. This resembles the idea of the RPN, but the operation is integrated into a single network instead of two separate networks (as in Faster R-CNN). Another well-known work is YOLO [19], which pays more attention to detection speed and chooses a more concise network, containing only 24 convolutional layers, that directly predicts bounding boxes and class probabilities from full images with one neural network. This unified architecture is extremely fast, reaching 155 frames per second, but its accuracy does not reach the level of SSD and Faster R-CNN and remained low until the RPN was introduced into YOLO in 2018 [20].

Architectures like SSD and YOLO are collectively called one-stage networks because they use a single neural network. For the same reason, one-stage networks are more efficient but slightly less accurate than two-stage networks. These differences provide more alternatives for different detection tasks.

Ship detection in high-resolution optical remote sensing images is one of the representative applications of DCNNs. In such images, ships appear at multiple scales, in multiple orientations, and in multiple shapes, against a complex background. Taking these characteristics into account, the ship detection task is commonly divided into the following steps [1]: Removal of Environment Effects, Sea-Land Separation, Ship Candidate Detection, and False Alarm Suppression. Each step requires effective measures for its specific problems.

The first step: Removal of Environment Effects. The presence of environmental factors in optical images is undesirable but generally unavoidable. The main factors that significantly influence ship detection accuracy are clouds, waves, and sunlight reflection. In recent years, several effective methods have been proposed to address these effects. In 2013, Daniel et al. [21] estimated a water/cloud clutter subspace and proposed a continuum-fusion-derived anomaly detection algorithm to remove clouds. In 2014, Kanjir et al. [22] proposed a histogram-based method to remove clouds, which takes advantage of the high values of clouds in the spectral bands. Further, Buck et al. [23] used a Fourier transform algorithm to remove clouds. Because the effects of waves and sunlight reflection often result in false alarms, they are handled in the fourth step rather than in the first.

The second step: Sea-Land Separation. Accurate sea-land separation is necessary for the accurate detection of ships in harbor areas, and it is also important because DCNN-based methods may produce many false alarms when applied to land scenes. Sea-land separation methods fall into two groups: those that introduce extra data such as coastline data, and those that generate the segmentation from the image itself. In 2011, Lavalle et al. [24] imported GIS data to describe the line that separates the land surface from the ocean. In 2015, Jin and Zhang [25] introduced a shapefile to describe the coastline. However, methods based on extra information are not accurate enough, and it is hard to keep the data up to date. In the other group, many researchers have used histogram data to discriminate sea from land, as in the works of Li et al. [26] and Xu et al. [27], which exploit the difference between the gray-value distributions of sea and land. Although these methods are efficient, their accuracy is unsatisfactory. To solve this problem, Besbinar and Alatan [28] additionally used digital terrain elevation data to generate a precise sea-land mask by utilizing the zero values. Burgess [29] used a heuristic approach for land masking based on observations of the relationships between the values in the two input images for sea and land. Many other methods also achieve good sea-land separation and reduce the influence of onshore false alarms.

The third step: Ship Candidate Detection. After the removal of environment effects and sea-land separation, an appropriate ship candidate detection algorithm is applied; this is the most essential part of the ship detection procedure. The purpose of this step is to find all regions that contain targets, which requires considering the characteristics of different scenes. For multi-scale ships, many algorithms combine the RPN structure introduced in Faster R-CNN with an architecture that fuses low-level and high-level features, such as FPN, DFPN [30], and SNIP, which are mentioned above. Further, for ships in multiple orientations, Jiang et al. [31] and Yang et al. [32] each proposed a method that trains on data with rotated bounding boxes to obtain the true orientation of the target at inference. Beyond these methods, many other works target specific problems: Zhang et al. [33] combined a convolutional neural network with manual ship features to improve accuracy, and Bodla et al. [34] proposed Soft-NMS to relieve the problem of overlapping box suppression and improve ship detection accuracy in dense scenes.

The fourth step: False Alarm Suppression. False alarms result from the misjudgment of interference during detection; they usually lie in the background and share some characteristics with ships. False alarms on land can be removed by sea-land segmentation. For false alarms at sea caused by waves and sunlight reflection, Yang et al. [35] proposed a structure named MDA-Net that describes the saliency of the ship to suppress non-object pixels, and Zhang et al. [33] imported manual ship features to overcome false alarms. Although such measures suppress false alarms to a moderate degree, false alarms remain a major challenge in ship detection.

Therefore, following the steps of the ship detection task, we establish a complete detection framework that combines an existing defogging algorithm with two DCNN-based algorithms proposed in this paper, which address multi-scale ship detection and onshore false alarm suppression. First, an improved network based on Faster R-CNN with scene mask estimation is proposed to perform sea-land segmentation and object detection together. The network is trained on data that include the mask information of the target scene, which differentiates the target area (sea) from the non-target area (land). With this network, we can effectively remove the land area and greatly reduce onshore false alarms during detection. Second, a saliency-based Faster R-CNN is proposed to deal with multi-scale ships in high-resolution remote sensing images. In this method, a saliency estimation network extracts the salient region that represents the ships, and an image pyramid then compresses the images that contain large-scale ships so that the size of the ships in the input image is closer to that in the training dataset, which improves the accuracy of ship detection. Finally, a feasible processing chain is formed, including image pre-processing, an image database, results display, and manual review modules; the entire processing system is called the broad area target search (BATS).

The remainder of this paper is organized as follows. The proposed BATS structure and its details are described in Section 2. The two DCNN-based methods proposed in this work are presented in Section 3. Section 4 explains the interaction between the user and the system, which includes the input, display, and review modules. Experimental results on a large number of representative high-resolution remote sensing images are presented in Section 5, followed by a discussion in Section 6 and the conclusions in Section 7.

2. The Framework of Broad Area Target Search System

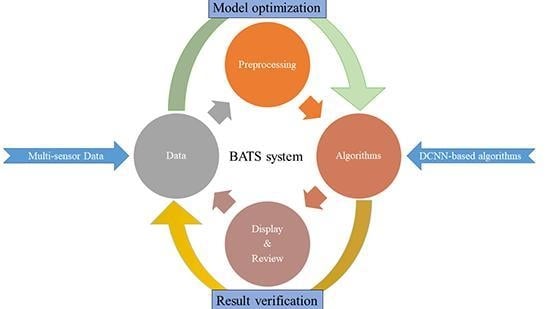

Unlike existing research on ship detection, we do not focus on a single algorithmic problem; instead, we comprehensively consider the various aspects of broad-area ship detection and establish a processing chain for high-resolution data, named the broad area target search (BATS). The framework includes image pre-processing, saliency estimation, scene mask estimation, ship detection, results presentation, and manual review. It is shown in Figure 1, where a stack (or cube) indicates that the module contains several sub-steps; for example, the object detection step of BATS has several implementations, including the Saliency-Faster R-CNN, Faster R-CNN, and FPN.

Before elaborating on each module of the framework, we explain some aspects of the detection process. First, in our method, a remote sensing image with a resolution better than 1 m is called a high-resolution image. At this resolution, we can obtain more information about the shape and context of a ship, and the images reflect the multi-scale features of the targets. Such imagery is also the main form of remote sensing data for ship detection and a prerequisite for fine-grained identification. In BATS, every step operates on high-resolution remote sensing images. Second, the algorithm steps (D, E, F) in the flow chart can be executed independently to solve specific problems during ship detection, and different methods can be selected according to the characteristics of the input data and the type of task. For example, steps D and F can be used without E for a broad scene under clouds. Finally, the data used by the algorithms and the system all come from a shared image database that is generated after data preprocessing. This database supports data import and the storage of data and results for each step.

We now turn to the meaning of each step and its implementation. The first is the data input (Step A of Figure 1). In BATS, the high-resolution remote sensing data are obtained from Google Earth or uploaded by users. This is followed by the preprocessing of the raw data (Step B of Figure 1). For high-resolution, wide-coverage images, the size of the input image must be balanced against GPU performance. The most common approach is to cut the input image into fixed-size patches (such as 1024 × 1024) for prediction. To preserve the integrity of the ship targets, we cut with overlap, and the overlap rate can be controlled. The data augmentation process includes image operations such as multi-angle rotation, color contrast adjustment, and the addition of random noise to increase the diversity of samples. The results of preprocessing the input data are shown in Figure 2. For structured management of the samples, the data are stored in the database after augmentation, which is convenient for the subsequent modules.
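The overlap cutting described above can be sketched as follows. This is a minimal illustration, not the BATS implementation: the function names `tile_origins` and `tile_image`, the default overlap rate of 0.2, and the choice to align the last patch with the image border are all assumptions.

```python
def tile_origins(length, patch, overlap):
    """Start offsets of patches along one axis for a given overlap rate."""
    if length <= patch:
        return [0]                      # the image fits in a single patch
    stride = max(1, int(patch * (1 - overlap)))
    origins = list(range(0, length - patch, stride))
    origins.append(length - patch)      # last patch sits flush with the border
    return origins


def tile_image(width, height, patch=1024, overlap=0.2):
    """Return (x, y, w, h) windows that cover the whole image."""
    return [(x, y, min(patch, width), min(patch, height))
            for y in tile_origins(height, patch, overlap)
            for x in tile_origins(width, patch, overlap)]


# Hypothetical example: a 2048 x 1024 scene cut into 1024 x 1024 patches.
windows = tile_image(2048, 1024)
```

Recording the `(x, y)` origin of each window is what later allows the predicted boxes to be mapped back to the original image.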

After Step B, the database stores the real and public datasets separately so that they can be reused (Step C in Figure 1). In this database, the information for the training set and the test set is stored in different tables. The information table of the training dataset contains the storage path, image size, file name, position in the original input, etc. The information table of the test dataset contains the storage path and the processing results after testing.
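One possible layout for the two information tables is sketched below with SQLite from the Python standard library. The paper does not specify the database engine or schema, so the table names, column names, and the JSON-encoded result column are hypothetical.

```python
import sqlite3

# Hypothetical schema: one table per dataset role, as described in the text.
SCHEMA = """
CREATE TABLE train_patches (
    id INTEGER PRIMARY KEY,
    storage_path TEXT NOT NULL,
    file_name TEXT NOT NULL,
    width INTEGER,
    height INTEGER,
    origin_x INTEGER,   -- patch position in the original input image
    origin_y INTEGER
);
CREATE TABLE test_patches (
    id INTEGER PRIMARY KEY,
    storage_path TEXT NOT NULL,
    result_json TEXT    -- processing results after testing
);
"""


def open_database(path=":memory:"):
    """Create the tables and return the open connection."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


conn = open_database()
conn.execute(
    "INSERT INTO train_patches "
    "(storage_path, file_name, width, height, origin_x, origin_y) "
    "VALUES (?, ?, ?, ?, ?, ?)",
    ("/data/train", "scene_0001_p1.png", 1024, 1024, 819, 0),
)
```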

In the following, ship detection is discussed from three aspects: the imaging factor, the environmental factor, and the ship target itself. First, the image quality of the input scenes should be analyzed. In ship detection, cloud occlusion has a large impact on target imaging. Severe cloud occlusion directly blurs the ship targets in the scene or makes them disappear, as shown in Figure 3. Effective measures should therefore be taken to mitigate cloud occlusion in such scenes (Step D of Figure 1). The usual cloud removal algorithms are of two types: defogging based on a physical model and defogging based on image enhancement. The typical physical-model algorithm is defogging based on the dark channel prior [36], and the most common traditional image enhancement is contrast limited adaptive histogram equalization [37], which computes the histograms of multiple local regions of the image and redistributes brightness and contrast to achieve defogging. In BATS, a DCNN first classifies scenes as foggy or fog-free, and these classic algorithms are then applied to the foggy images.
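To make the dark channel prior concrete, the sketch below computes the dark channel of a small RGB image in plain Python. The prior rests on the observation that, in haze-free outdoor images, most local patches contain some pixel that is very dark in at least one color channel, so a high mean dark-channel value suggests haze. The helper `haze_score` is an illustrative assumption; BATS itself uses a DCNN to separate foggy from fog-free scenes.

```python
def dark_channel(img, radius=1):
    """img: H x W nested list of (r, g, b) tuples with values in [0, 1]."""
    h, w = len(img), len(img[0])
    # Per-pixel minimum over the three color channels.
    min_rgb = [[min(px) for px in row] for row in img]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            # Minimum over the local window around (x, y).
            dark[y][x] = min(min_rgb[j][i] for j in ys for i in xs)
    return dark


def haze_score(img, radius=1):
    """Mean dark-channel value (hypothetical indicator of haze)."""
    dark = dark_channel(img, radius)
    return sum(map(sum, dark)) / (len(dark) * len(dark[0]))


clear = [[(0.9, 0.0, 0.2)] * 3 for _ in range(3)]   # one channel is dark
hazy = [[(0.8, 0.8, 0.8)] * 3 for _ in range(3)]    # washed-out gray
```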

After defogging, the environmental factors of the ship target are considered in Step E. Many ships are moored in ports or sail in near-land areas, and the complex terrestrial environment can easily mislead the feature extraction of DL algorithms and cause many onshore false alarms. For this reason, some ship detection papers use sea-land separation to remove false alarms on land. In this paper, we propose a novel DCNN-based false alarm suppression method, which uses the powerful feature extraction ability of the DCNN to segment sea and land. The specific structure is explained in Section 3.2.
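As a rough illustration of how a scene mask can suppress onshore responses, the sketch below zeroes detector scores wherever a binary sea-land mask marks land. It is not the network structure of Section 3.2; the function name `suppress_land` and the toy score grid are assumptions made for illustration.

```python
def suppress_land(scores, sea_mask):
    """Zero every score on land (mask 0) and keep scores on sea (mask 1)."""
    if len(scores) != len(sea_mask) or any(
        len(s) != len(m) for s, m in zip(scores, sea_mask)
    ):
        raise ValueError("scores and sea_mask must have the same shape")
    return [[s * m for s, m in zip(srow, mrow)]
            for srow, mrow in zip(scores, sea_mask)]


scores = [[0.9, 0.2], [0.7, 0.8]]   # toy objectness scores
sea_mask = [[1, 0], [1, 0]]         # right column is land
masked = suppress_land(scores, sea_mask)
```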

The characteristics of the ship target itself should also be addressed to improve ship detection (Step F of Figure 1). The size of ship targets in high-resolution remote sensing images varies greatly, whereas the targets in commonly used training datasets, such as DOTA [38], NWPU VHR-10 [39], and UCAS-AOD [40], are mostly similar in size and large-scale targets are rare, which results in poor detection of large-scale ships. Several methods have been proposed to address target size. Most of them combine multiple feature layers [15,18,30] to retain the features of targets at different scales and to alleviate the difficulty of multi-scale target detection. Another study compresses the targets in the image to a fixed size to enhance detection performance [17]. In our processing framework, a multi-scale ship detection method based on the saliency feature is proposed. This method adaptively compresses scenes that contain large-scale ship targets, which improves detection performance on real remote sensing images. The specific structure is explained in Section 3.3.
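The idea of adaptive compression can be illustrated by choosing an image pyramid level from the extent of the salient region. The sketch below halves the image until the salient extent falls under a reference size; the function name, the reference extent of 256 pixels, and the cap of three levels are hypothetical and are not taken from Section 3.3.

```python
def pyramid_level(salient_extent, target_extent=256, max_level=3):
    """Smallest number of 2x downsamplings that brings the salient extent
    (longest side, in pixels) to at most target_extent, capped at max_level."""
    level = 0
    while level < max_level and salient_extent / (2 ** level) > target_extent:
        level += 1
    return level


small_ship = pyramid_level(100)    # already small: no compression
large_ship = pyramid_level(1000)   # 1000 -> 500 -> 250 pixels
```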

After ship detection, the result patches are aligned to the grid of the original image, and the coordinates of the predicted boxes are converted into the coordinates of the original image. This step is known as data post-processing (Step G of Figure 1).
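The coordinate conversion amounts to adding the patch origin to each predicted box. A minimal sketch, assuming boxes are `(x1, y1, x2, y2)` tuples in patch pixels and that the patch origin was recorded during pre-processing (both conventions are assumptions):

```python
def to_original_coords(box, patch_origin):
    """Shift a patch-local box by the patch origin in the original image."""
    x1, y1, x2, y2 = box
    ox, oy = patch_origin
    return (x1 + ox, y1 + oy, x2 + ox, y2 + oy)


# Hypothetical detection inside the patch whose top-left corner is (819, 0).
global_box = to_original_coords((10, 20, 110, 60), (819, 0))
```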

The post-processed data are stored in the database for use by the display module. In the display module of BATS, the pixel coordinates of the predicted results are converted into geographic coordinates and the results are displayed in Google Maps. The display module also provides a feedback mechanism, namely manual review of the ship detection results (Step I of Figure 1). Because DCNN-based methods cannot guarantee completely correct detection results, wrong results are selected manually and used for retraining to make up for the shortage of training samples, which achieves iterative optimization of the algorithm. The structure and implementation of the display module are explained in Section 4.3.
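The text does not state how pixel coordinates are converted into geographic coordinates. One common convention, used for example by GDAL, is a six-coefficient affine geotransform; the sketch below applies it to the center of a detected box. The coefficient values are invented for illustration.

```python
def pixel_to_geo(col, row, gt):
    """Apply an affine geotransform in GDAL coefficient order:
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]"""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def box_center_geo(box, gt):
    """Geographic coordinates of the center of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return pixel_to_geo((x1 + x2) / 2.0, (y1 + y2) / 2.0, gt)


# Hypothetical north-up image: origin at (-76.35, 36.96), 1e-5 degrees per pixel.
gt = (-76.35, 1e-5, 0.0, 36.96, 0.0, -1e-5)
lon, lat = box_center_geo((50, 150, 150, 250), gt)
```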

6. Discussions

The experiments above analyze the accuracy of the two DCNN models independently. In this section, we analyze the effectiveness of the system and of the cascading model (which connects the Saliency-Faster R-CNN with the Mask-Faster R-CNN). First, the results of the cascading model are compared with those of each DCNN model separately to validate its accuracy and effectiveness. The evaluation results of the different models are shown in Table 5, where time is the average inference time per 1024 × 1024 image.

According to Table 5, the AP of the cascading model (the fourth row) is 0.629, a small improvement over the Mask-Faster R-CNN, which indicates that the cascading model fuses the advantages of the two DCNN models proposed in this paper. However, both DCNN models take longer than the Faster R-CNN, which needs only 0.173 s/image. The Saliency-Faster R-CNN takes 2.181 s/image because of its saliency estimation, so the cascading model reaches 2.233 s/image. This is too slow for real-time detection on massive data, but the cascading model can still serve as a trade-off between time and accuracy because of its improvement in large-scale ship detection.

Next, the efficiency of the entire system is evaluated on high-resolution remote sensing images that are not in the test dataset. The evaluation environment consists of one GPU, a 1000 Mbps network, and one quad-core, eight-thread CPU. The results are shown in Table 6.

In Table 6, upload is the time taken to upload the images through the site designed in this paper. Pre-processing is the time consumed in pre-processing, which includes cutting the images into fixed-size patches and recording the position of each patch in the original image. Post-processing is the time consumed in handling the results after detection, which includes transforming the box coordinates into the original image and removing repeated detections in the overlapping areas with non-maximum suppression (NMS). Num of Images is the total number of patches produced by the overlapping cutting. According to Table 6, the processing and transfer times are very short compared to the detection time, so they do not affect the efficiency of the entire system and are acceptable.
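The removal of repeated detections in overlapping areas can be sketched with greedy NMS on boxes that have already been mapped into the original image. The detection format `(box, score)` and the IoU threshold of 0.5 are assumptions; the paper does not give the threshold it uses.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, threshold=0.5):
    """Keep the highest-scoring box of every group of overlapping boxes."""
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) <= threshold for k in kept):
            kept.append(det)
    return kept


# The first two boxes describe the same ship seen in two overlapping patches.
detections = [((0, 0, 10, 10), 0.9), ((1, 1, 11, 11), 0.8), ((50, 50, 60, 60), 0.7)]
unique = nms(detections)
```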

In addition, to demonstrate the superiority of the cascading model and of each individual model under different conditions, the precision-recall (PR) curve of each DCNN model is drawn in Figure 21.

In Figure 21, at the same recall, the precision from high to low is that of the cascading model, Mask-Faster R-CNN, Saliency-Faster R-CNN, and Faster R-CNN. This indicates that our two individual models each improve ship detection accuracy for their respective concerns, and that the cascading model achieves a comprehensive improvement because it fuses the two DCNN models.
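For readers who want to reproduce a PR curve and an AP value from their own detections, a minimal sketch is given below. It assumes each detection has already been marked as a true or false positive, and it uses all-point interpolation for AP; the paper does not state which AP definition it uses, so this choice is an assumption.

```python
def pr_curve(detections, num_gt):
    """detections: list of (score, is_true_positive); returns (recall, precision) points."""
    tp = fp = 0
    points = []
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        points.append((tp / num_gt, tp / (tp + fp)))
    return points


def average_precision(points):
    """Area under the PR curve after making precision non-increasing in recall."""
    interpolated, best = [], 0.0
    for recall, precision in reversed(points):
        best = max(best, precision)          # best precision at this recall or higher
        interpolated.append((recall, best))
    ap, prev_recall = 0.0, 0.0
    for recall, precision in reversed(interpolated):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy example: three detections, two ground-truth ships.
points = pr_curve([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
ap = average_precision(points)
```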

In addition to harbor scenes, we have encountered scenes that contain icebergs in the Arctic, where false alarms frequently occur near or on the icebergs during ship detection. Our method still performs well on these scenes. Some results are shown in Figure 22: the Faster R-CNN degrades because of the false alarms, whereas the Mask-Faster R-CNN avoids the problem by using the estimated scene mask. To demonstrate the applicability of BATS, we uploaded some high-resolution panchromatic remote sensing images through the target detection module; the images were obtained from Gaofen-2 (GF-2) at a resolution of 0.8 m, and the results are shown in Figure 23. Our method still performs well compared with the Faster R-CNN, even though the type of input data differs from our training set. The generalization performance of the proposed system is thus verified to some extent.

Finally, one broad-area high-resolution remote sensing image is used to demonstrate the effectiveness and accuracy of BATS on a complex scene. The input image, collected from Google Earth, covers part of the Norfolk harbor; its size is 10656 × 7130 pixels and its resolution is 0.3 m, which means it can nearly present a Nimitz-class aircraft carrier. The detection result is shown in Figure 24.

In (a) of Figure 24, almost every ship is correctly detected in this complex scene, and some huge ships, for example the Nimitz-class aircraft carrier close to the harbor shown in (e), are marked by the Saliency-Faster R-CNN. A dense ship scene lies on the right side of the image; its enlarged view is shown in (b), where each small-scale ship has been detected. Notably, some onshore false alarms are suppressed, which shows that the Mask-Faster R-CNN plays its role in the system. Panels (d) and (f), obtained from the Faster R-CNN, are the counterparts of (c) and (e), respectively, and the comparison indicates that our method suppresses the onshore false alarms and detects the large-scale ship. Therefore, the BATS system has good detection performance over a broad area.

7. Conclusions

To implement DCNN-based ship detection over broad areas using high-resolution remote sensing images, this paper proposes a ship detection system that consists of data pre-processing, DCNN-based core algorithms, a results display module, and a manual review module, known as the broad area target search (BATS) system. The ship detection algorithms are the key step of the system. For the problem of object scale differences between the training dataset and real scenes, we propose the Saliency-Faster R-CNN, which combines a saliency estimation network with the object detection network. It uses the saliency feature to describe the scale of the ship in the image, and an image pyramid then compresses input images that contain large-scale objects so that the ship scale in the real high-resolution image is close to the scale of the samples in the training dataset, which improves detection accuracy. For the problem of onshore false alarms, we introduce the Mask-Faster R-CNN, which inserts a scene mask estimation branch into the object detection network to learn to discriminate the target area (sea) from the non-target area (land) and then covers the land area with a zero response during the RPN process. This method effectively reduces onshore false alarms.

Furthermore, the system uses the structured data management of database technology to store and display the results of each step, and it provides users with an interface to review the results and iteratively optimize the core DCNN-based algorithms. The database-based processing chain is an important foundation for the data interaction between the BATS system and its users.

The validity of each part has been verified through independent experiments on each DCNN algorithm. First, the Saliency-Faster R-CNN is shown to be superior to the Faster R-CNN in large-scale ship detection. Then, the Mask-Faster R-CNN is shown to greatly improve on the Faster R-CNN in the suppression of onshore false alarms. Finally, the two DCNN-based algorithms are combined to validate the accuracy and efficiency of the entire system. The results are satisfactory in both the independent experiments and the cascade experiment. In summary, the framework proposed in our research provides a series of effective solutions for ship detection in high-resolution remote sensing images.

Finally, given the data-driven nature and complexity of the ship detection task, the scalability of the BATS system is increased by the database and by algorithm cascading, so that more DCNN-based algorithms can be inserted and the performance of ship detection can be improved continually. In future work, other targets of interest in high-resolution remote sensing images, such as airplanes, vehicles, and storage tanks, will be considered in the BATS system. The DCNN models proposed in this paper need to be optimized continually according to the diversity of targets and scenes in the detection task. Furthermore, given the all-time and all-weather capability of synthetic aperture radar (SAR), SAR images can be a powerful complement to optical remote sensing images for accurate target detection under heavy cloud and fog or when optical data are inadequate.