Surveying Wild Animals from Satellites, Manned Aircraft and Unmanned Aerial Systems (UASs): A Review

Abstract

1. Introduction

2. Methods

3. Results

3.1. Platforms

3.1.1. Satellites

3.1.2. Light Manned Aircraft

3.1.3. UASs

3.2. Sensors

3.2.1. Spaceborne Surveys

3.2.2. Manned Aerial Surveys

3.2.3. UAS Surveys

3.3. Data Availability, Resolution and Cost

3.3.1. Satellite Data

3.3.2. Aerial Data

3.3.3. UAS Data

3.4. Surveyed Species and Methodology

3.4.1. Spaceborne Surveys

3.4.2. Manned Aerial Surveys

3.4.3. UAS Surveys

3.5. Pixel Number of Target Species in Imagery

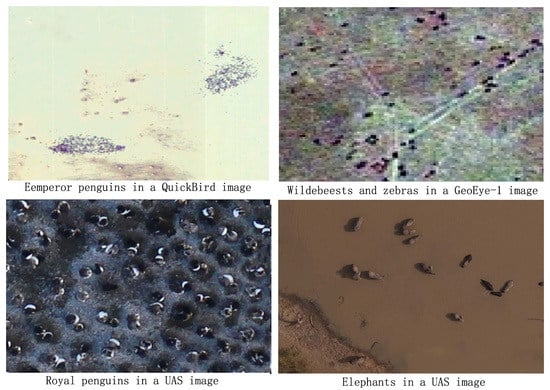

3.5.1. Spaceborne Surveys

3.5.2. UAS Surveys

3.6. Automatic and Semiautomatic Algorithms for Wild Animal Surveys

3.6.1. Pixel-Based Methods

3.6.2. Object-Based Methods and Machine Learning

3.6.3. Deep Learning

3.7. Accuracy of Remote Sensing-Based Counts

4. Discussion

4.1. Spaceborne Surveys

4.2. Manned Aerial Surveys

4.3. UAS Surveys

5. Perspectives

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Linchant, J.; Lisein, J.; Semeki, J.; Lejeune, P.; Vermeulen, C. Are unmanned aircraft systems (UASs) the future of wildlife monitoring? A review of accomplishments and challenges. Mamm. Rev. 2015, 45, 239–252.

2. Smyser, T.J.; Guenzel, R.J.; Jacques, C.N.; Garton, E.O. Double-observer evaluation of pronghorn aerial line-transect surveys. Wildl. Res. 2016, 43, 474–481.

3. Rey, N.; Volpi, M.; Joost, S.; Tuia, D. Detecting animals in African savanna with UAVs and the crowds. Remote Sens. Environ. 2017, 200, 341–351.

4. Sibanda, M.; Murwira, A. Cotton fields drive elephant habitat fragmentation in the Mid Zambezi Valley, Zimbabwe. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 286–297.

5. Crewe, T.; Taylor, P.; Mackenzie, S.; Lepage, D.; Aubry, Y.; Crysler, Z.; Finney, G.; Francis, C.; Guglielmo, C.; Hamilton, D. The Motus Wildlife Tracking System: A collaborative research network to enhance the understanding of wildlife movement. Avian Conserv. Ecol. 2017, 12, 8.

6. Borchers, D.L.; Buckland, S.T.; Zucchini, W. Estimating Animal Abundance; Springer: London, UK, 2002.

7. Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, 5716–5725.

8. Witmer, G.W. Wildlife population monitoring: Some practical considerations. Wildl. Res. 2005, 32, 259–263.

9. Sauer, J.R.; Link, W.A.; Fallon, J.E.; Pardieck, K.L.; Ziolkowski, D.J., Jr. The North American Breeding Bird Survey 1966–2011: Summary analysis and species accounts. N. Am. Fauna 2013, 79, 1–32.

10. Betts, M.G.; Mitchell, D.; Diamond, A.W.; Bety, J. Uneven rates of landscape change as a source of bias in roadside wildlife surveys. J. Wildl. Manag. 2007, 71, 2266–2273.

11. Dulava, S.; Bean, W.T.; Richmond, O.M.W. Applications of unmanned aircraft systems (UAS) for waterbird surveys. Environ. Pract. 2015, 17, 201–210.

12. LaRue, M.A.; Stapleton, S.; Anderson, M. Feasibility of using high-resolution satellite imagery to assess vertebrate wildlife populations. Conserv. Biol. 2017, 31, 213–220.

13. Pettorelli, N.; Laurance, W.F.; O’Brien, T.G.; Wegmann, M.; Nagendra, H.; Turner, W. Satellite remote sensing for applied ecologists: Opportunities and challenges. J. Appl. Ecol. 2014, 51, 839–848.

14. Turner, W. Sensing biodiversity. Science 2014, 346, 301–302.

15. Kuenzer, C.; Ottinger, M.; Wegmann, M.; Guo, H.; Wang, C.; Zhang, J.; Dech, S.; Wikelski, M. Earth observation satellite sensors for biodiversity monitoring: Potentials and bottlenecks. Int. J. Remote Sens. 2014, 35, 6599–6647.

16. Michaud, J.S.; Coops, N.C.; Andrew, M.E.; Wulder, M.A.; Brown, G.S.; Rickbeil, G.J.M. Estimating moose (Alces alces) occurrence and abundance from remotely derived environmental indicators. Remote Sens. Environ. 2014, 152, 190–201.

17. Ottichilo, W.K.; De Leeuw, J.; Skidmore, A.K.; Prins, H.H.T.; Said, M.Y. Population trends of large non-migratory wild herbivores and livestock in the Masai Mara ecosystem, Kenya, between 1977 and 1997. Afr. J. Ecol. 2000, 38, 202–216.

18. Stapleton, S.; Peacock, E.; Garshelis, D. Aerial surveys suggest long-term stability in the seasonally ice-free Foxe Basin (Nunavut) polar bear population. Mar. Mammal Sci. 2016, 32, 181–201.

19. Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

20. Chabot, D.; Bird, D.M. Wildlife research and management methods in the 21st century: Where do unmanned aircraft fit in? J. Unmanned Veh. Syst. 2015, 3, 137–155.

21. Christie, K.S.; Gilbert, S.L.; Brown, C.L.; Hatfield, M.; Hanson, L. Unmanned aircraft systems in wildlife research: Current and future applications of a transformative technology. Front. Ecol. Environ. 2016, 14, 241–251.

22. Fiori, L.; Doshi, A.; Martinez, E.; Orams, M.B.; Bollard-Breen, B. The use of unmanned aerial systems in marine mammal research. Remote Sens. 2017, 9, 543.

23. Hollings, T.; Burgman, M.; van Andel, M.; Gilbert, M.; Robinson, T.; Robinson, A. How do you find the green sheep? A critical review of the use of remotely sensed imagery to detect and count animals. Methods Ecol. Evol. 2018, 9, 881–892.

24. Fretwell, P.T.; Trathan, P.N. Penguins from space: Faecal stains reveal the location of emperor penguin colonies. Glob. Ecol. Biogeogr. 2009, 18, 543–552.

25. Schwaller, M.R.; Southwell, C.J.; Emmerson, L.M. Continental-scale mapping of Adélie penguin colonies from Landsat imagery. Remote Sens. Environ. 2013, 139, 353–364.

26. Schwaller, M.R.; Olson, C.E.; Ma, Z.Q.; Zhu, Z.L.; Dahmer, P. A remote-sensing analysis of Adélie penguin rookeries. Remote Sens. Environ. 1989, 28, 199–206.

27. Loffler, E.; Margules, C. Wombats detected from space. Remote Sens. Environ. 1980, 9, 47–56.

28. Wilschut, L.I.; Heesterbeek, J.A.P.; Begon, M.; de Jong, S.M.; Ageyev, V.; Laudisoit, A.; Addink, E.A. Detecting plague-host abundance from space: Using a spectral vegetation index to identify occupancy of great gerbil burrows. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 249–255.

29. Yang, Z.; Wang, T.; Skidmore, A.K.; de Leeuw, J.; Said, M.Y.; Freer, J. Spotting East African mammals in open savannah from space. PLoS ONE 2014, 9, e115989.

30. Stapleton, S.; LaRue, M.; Lecomte, N.; Atkinson, S.; Garshelis, D.; Porter, C.; Atwood, T. Polar bears from space: Assessing satellite imagery as a tool to track Arctic wildlife. PLoS ONE 2014, 9, e101513.

31. Platonov, N.G.; Mordvintsev, I.N.; Rozhnov, V.V. The possibility of using high resolution satellite images for detection of marine mammals. Biol. Bull. 2013, 40, 197–205.

32. Fretwell, P.T.; Scofield, P.; Phillips, R.A. Using super-high resolution satellite imagery to census threatened albatrosses. Ibis 2017, 159, 481–490.

33. Fretwell, P.T.; Staniland, I.J.; Forcada, J. Whales from space: Counting southern right whales by satellite. PLoS ONE 2014, 9, e88655.

34. Cubaynes, H.C.; Fretwell, P.T.; Bamford, C.; Gerrish, L.; Jackson, J.A. Whales from space: Four mysticete species described using new VHR satellite imagery. Mar. Mammal Sci. 2018, 1, 1–26.

35. LaRue, M.A.; Rotella, J.J.; Garrott, R.A.; Siniff, D.B.; Ainley, D.G.; Stauffer, G.E.; Porter, C.C.; Morin, P.J. Satellite imagery can be used to detect variation in abundance of Weddell seals (Leptonychotes weddellii) in Erebus Bay, Antarctica. Polar Biol. 2011, 34, 1727–1737.

36. Li, S. Aerial surveys of wildlife resources. Jilin For. Sci. Tech. 1985, 1, 50–51.

37. Wiig, Ø.; Bakken, V. Aerial strip surveys of polar bears in the Barents Sea. Polar Res. 1990, 8, 309–311.

38. Kessel, S.T.; Gruber, S.H.; Gledhill, K.S.; Bond, M.E.; Perkins, R.G. Aerial survey as a tool to estimate abundance and describe distribution of a carcharhinid species, the lemon shark, Negaprion brevirostris. J. Mar. Biol. 2013, 2013, 597383.

39. Andriolo, A.; Martins, C.; Engel, M.H.; Pizzorno, J.L.; Más-Rosa, S.; Freitas, A.C.; Morete, M.E.; Kinas, P.G. The first aerial survey to estimate abundance of humpback whales (Megaptera novaeangliae) in the breeding ground off Brazil (Breeding Stock A). J. Cetacean Res. Manag. 2006, 8, 307–311.

40. Marsh, H.; Sinclair, D.F. An experimental evaluation of dugong and sea turtle aerial survey techniques. Aust. Wildl. Res. 1989, 16, 639–650.

41. Stoner, C.; Caro, T.; Mduma, S.; Mlingwa, C.; Sabuni, G.; Borner, M. Assessment of effectiveness of protection strategies in Tanzania based on a decade of survey data for large herbivores. Conserv. Biol. 2007, 21, 635–646.

42. Martin, S.L.; Van Houtan, K.S.; Jones, T.T.; Aguon, C.F.; Gutierrez, J.T.; Tibbatts, R.B.; Wusstig, S.B.; Bass, J.D. Five decades of marine megafauna surveys from Micronesia. Front. Mar. Sci. 2016, 2, 1–13.

43. Vermeulen, C.; Lejeune, P.; Lisein, J.; Sawadogo, P.; Bouche, P. Unmanned aerial survey of elephants. PLoS ONE 2013, 8, e54700.

44. Olivares-Mendez, M.A.; Fu, C.H.; Ludivig, P.; Bissyande, T.F.; Kannan, S.; Zurad, M.; Annaiyan, A.; Voos, H.; Campoy, P. Towards an autonomous vision-based unmanned aerial system against wildlife poachers. Sensors 2015, 15, 31362–31391.

45. Koh, L.; Wich, S. Dawn of drone ecology: Low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 2012, 5, 121–132.

46. Christiansen, F.; Dujon, A.M.; Sprogis, K.R.; Arnould, J.P.Y.; Bejder, L. Noninvasive unmanned aerial vehicle provides estimates of the energetic cost of reproduction in humpback whales. Ecosphere 2016, 7, 18.

47. Ditmer, M.A.; Vincent, J.B.; Werden, L.K.; Tanner, J.C.; Laske, T.G.; Iaizzo, P.A.; Garshelis, D.L.; Fieberg, J.R. Bears show a physiological but limited behavioral response to unmanned aerial vehicles. Curr. Biol. 2015, 25, 2278–2283.

48. Cliff, O.M.; Fitch, R.; Sukkarieh, S.; Saunders, D.L.; Heinsohn, R. Online Localization of Radio-Tagged Wildlife with an Autonomous Aerial Robot System. In Proceedings of the Robotics: Science and Systems, Rome, Italy, 13–17 July 2015.

49. U.S. Federal Aviation Administration. FAA Doubles ‘Blanket’ Altitude for Many UAS Flights. Available online: https://www.faa.gov/uas/media/Part_107_Summary.pdf (accessed on 15 December 2017).

50. Hodgson, A.; Peel, D.; Kelly, N. Unmanned aerial vehicles for surveying marine fauna: Assessing detection probability. Ecol. Appl. 2017, 27, 1253–1267.

51. Hodgson, A.; Kelly, N.; Peel, D. Unmanned aerial vehicles (UAVs) for surveying marine fauna: A dugong case study. PLoS ONE 2013, 8, e79556.

52. Witczuk, J.; Pagacz, S.; Zmarz, A.; Cypel, M. Exploring the feasibility of unmanned aerial vehicles and thermal imaging for ungulate surveys in forests - preliminary results. Int. J. Remote Sens. 2017, 1–18.

53. Seymour, A.C.; Dale, J.; Hammill, M.; Halpin, P.N.; Johnston, D.W. Automated detection and enumeration of marine wildlife using unmanned aircraft systems (UAS) and thermal imagery. Sci. Rep. 2017, 7, 10.

54. Hodgson, J.C.; Baylis, S.M.; Mott, R.; Herrod, A.; Clarke, R.H. Precision wildlife monitoring using unmanned aerial vehicles. Sci. Rep. 2016, 6, 22574.

55. Kiszka, J.J.; Mourier, J.; Gastrich, K.; Heithaus, M.R. Using unmanned aerial vehicles (UAVs) to investigate shark and ray densities in a shallow coral lagoon. Mar. Ecol. Prog. Ser. 2016, 560, 237–242.

56. Ivosevic, B. Monitoring butterflies with an unmanned aerial vehicle: Current possibilities and future potentials. J. Ecol. Environ. 2017, 41, 72–77.

57. Goebel, M.E.; Perryman, W.L.; Hinke, J.T.; Krause, D.J.; Hann, N.A.; Gardner, S.; Leroi, D.J. A small unmanned aerial system for estimating abundance and size of Antarctic predators. Polar Biol. 2015, 38, 619–630.

58. Jones, G.P.I.; Pearlstine, L.G.; Percival, H.F. An assessment of small unmanned aerial vehicles for wildlife research. Wildl. Soc. Bull. 2006, 34, 750–758.

59. Vas, E.; Lescroël, A.; Duriez, O.; Boguszewski, G.; Grémillet, D. Approaching birds with drones: First experiments and ethical guidelines. Biol. Lett. 2015, 11, 20140754.

60. McEvoy, J.F.; Hall, G.P.; McDonald, P.G. Evaluation of unmanned aerial vehicle shape, flight path and camera type for waterfowl surveys: Disturbance effects and species recognition. PeerJ 2016, 4, e1831.

61. Wilson, A.M.; Barr, J.; Zagorski, M. The feasibility of counting songbirds using unmanned aerial vehicles. Auk 2017, 134, 350–362.

62. Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned aerial vehicles (UAVs) and artificial intelligence revolutionizing wildlife monitoring and conservation. Sensors 2016, 16, 97.

63. Goodenough, A.E.; Carpenter, W.S.; MacTavish, L.; MacTavish, D.; Theron, C.; Hart, A.G. Empirically testing the effectiveness of thermal imaging as a tool for identification of large mammals in the African bushveldt. Afr. J. Ecol. 2018, 56, 51–62.

64. Tremblay, J.A.; Desrochers, A.; Aubry, M.Y.; Pace, P.; Bird, D.M. A low-cost technique for radio-tracking wildlife using a small standard unmanned aerial vehicle. J. Unmanned Veh. Syst. 2017, 5, 102–108.

65. Webber, D.; Hui, N.; Kastner, R.; Schurgers, C. Radio receiver design for unmanned aerial wildlife tracking. In Proceedings of the International Conference on Computing, Networking and Communications (ICNC), Santa Clara, CA, USA, 26–29 January 2017; pp. 942–946.

66. Xue, Y.; Wang, T.; Skidmore, A.K. Automatic counting of large mammals from very high resolution panchromatic satellite imagery. Remote Sens. 2017, 9, 878.

67. Fretwell, P.T.; LaRue, M.A.; Morin, P.; Kooyman, G.L.; Wienecke, B.; Ratcliffe, N.; Fox, A.J.; Fleming, A.H.; Porter, C.; Trathan, P.N. An emperor penguin population estimate: The first global, synoptic survey of a species from space. PLoS ONE 2012, 7, e33751.

68. Abileah, R. Use of high resolution space imagery to monitor the abundance, distribution, and migration patterns of marine mammal populations. In Proceedings of the Annual Conference of the Marine Technology Society, Honolulu, HI, USA, 5–8 November 2001.

69. McMahon, C.R.; Howe, H.; van den Hoff, J.; Alderman, R.; Brolsma, H.; Hindell, M.A. Satellites, the all-seeing eyes in the sky: Counting elephant seals from space. PLoS ONE 2014, 9, e92613.

70. Hawkins, A.S. Flyways: Pioneering waterfowl management in North America. Indian J. Med. Res. 1984, 49, 832.

71. Descamps, S.; Béchet, A.; Descombes, X.; Arnaud, A.; Zerubia, J. An automatic counter for aerial images of aggregations of large birds. Bird Study 2011, 58, 302–308.

72. Chabot, D.; Dillon, C.; Francis, C.M. An approach for using off-the-shelf object-based image analysis software to detect and count birds in large volumes of aerial imagery. Avian Conserv. Ecol. 2018, 13, 15.

73. Groom, G.; Stjernholm, M.; Nielsen, R.D.; Fleetwood, A.; Petersen, I.K. Remote sensing image data and automated analysis to describe marine bird distributions and abundances. Ecol. Inform. 2013, 14, 2–8.

74. Burn, D.M.; Webber, M.A.; Udevitz, M.S. Application of airborne thermal imagery to surveys of Pacific walrus. Wildl. Soc. Bull. 2006, 34, 51–58.

75. Garner, D.L.; Underwood, H.B.; Porter, W.F. Use of modern infrared thermography for wildlife population surveys. Environ. Manag. 1995, 19, 233–238.

76. Franke, U.; Goll, B.; Hohmann, U.; Heurich, M. Aerial ungulate surveys with a combination of infrared and high-resolution natural colour images. Anim. Biodivers. Conserv. 2012, 35, 285–293.

77. Israel, M. A UAV-based roe deer fawn detection system. In Proceedings of the International Conference on Unmanned Aerial Vehicle in Geomatics, Gottingen, Germany, 14–16 September 2011; pp. 51–55.

78. Hayford, H.A.; O’Donnell, M.J.; Carrington, E. Radio tracking detects behavioral thermoregulation at a snail’s pace. J. Exp. Mar. Biol. Ecol. 2018, 499, 17–25.

79. Landinfo Worldwide Mapping LLC. Buying Satellite Imagery: Pricing Information for High Resolution Satellite Imagery. Available online: http://www.landinfo.com/satellite-imagery-pricing.html (accessed on 6 January 2019).

80. Sandbrook, C. The social implications of using drones for biodiversity conservation. Ambio 2015, 44, 636–647.

81. Mulero-Pazmany, M.; Jenni-Eiermann, S.; Strebel, N.; Sattler, T.; Negro, J.J.; Tablado, Z. Unmanned aircraft systems as a new source of disturbance for wildlife: A systematic review. PLoS ONE 2017, 12, e0178448.

82. Sarda-Palomera, F.; Bota, G.; Vinolo, C.; Pallares, O.; Sazatornil, V.; Brotons, L.; Gomariz, S.; Sarda, F. Fine-scale bird monitoring from light unmanned aircraft systems. Ibis 2012, 154, 177–183.

83. Hodgson, J.C.; Koh, L.P. Best practice for minimising unmanned aerial vehicle disturbance to wildlife in biological field research. Curr. Biol. 2016, 26, 404–405.

84. Resnik, D.B.; Elliott, K.C. Using drones to study human beings: Ethical and regulatory issues. Sci. Eng. Ethics 2018, 1–12.

85. Rümmler, M.C.; Mustafa, O.; Maercker, J.; Peter, H.U.; Esefeld, J. Measuring the influence of unmanned aerial vehicles on Adélie penguins. Polar Biol. 2016, 39, 1329–1334.

86. Cracknell, A.P. UAVs: Regulations and law enforcement. Int. J. Remote Sens. 2017, 38, 3054–3067.

87. Guinet, C.; Jouventin, P.; Malacamp, J. Satellite remote-sensing in monitoring change of seabirds - use of SPOT image in king penguin population increase at Ile aux Cochons, Crozet Archipelago. Polar Biol. 1995, 15, 511–515.

88. Williams, M.; Dowdeswell, J.A. Mapping seabird nesting habitats in Franz Josef Land, Russian High Arctic, using digital Landsat Thematic Mapper imagery. Polar Res. 1998, 17, 15–30.

89. Ainley, D.G.; LaRue, M.A.; Stirling, I.; Stammerjohn, S.; Siniff, D.B. An apparent population decrease, or change in distribution, of Weddell seals along the Victoria Land coast. Mar. Mammal Sci. 2015, 31, 1338–1361.

90. Leblanc, G.; Francis, C.; Soffer, R.; Kalacska, M.; De Gea, J. Spectral reflectance of polar bear and other large Arctic mammal pelts: Potential applications to remote sensing surveys. Remote Sens. 2016, 8, 273.

91. Caughley, G. Experiments in aerial survey. J. Wildl. Manag. 1976, 40, 290–300.

92. International Whaling Commission. Report of the Scientific Committee. Available online: https://iwc.int/scientifc-committee-reports (accessed on 21 May 2019).

93. Norton-Griffiths, M. Counting Animals. Handbook No. 1; African Wildlife Leadership Foundation: Nairobi, Kenya, 1978.

94. Christiansen, P.; Steen, K.; Jørgensen, R.; Karstoft, H. Automated detection and recognition of wildlife using thermal cameras. Sensors 2014, 14, 13778.

95. Torney, C.J.; Dobson, A.P.; Borner, F.; Lloyd-Jones, D.J.; Moyer, D.; Maliti, H.T.; Mwita, M.; Fredrick, H.; Borner, M.; Hopcraft, J.G.C. Assessing rotation-invariant feature classification for automated wildebeest population counts. PLoS ONE 2016, 11, e0156342.

96. Longmore, S.N.; Collins, R.P.; Pfeifer, S.; Fox, S.E.; Mulero-Pazmany, M.; Bezombes, F.; Goodwin, A.; Ovelar, M.D.; Knapen, J.H.; Wich, S.A. Adapting astronomical source detection software to help detect animals in thermal images obtained by unmanned aerial systems. Int. J. Remote Sens. 2017, 38, 2623–2638.

97. Shannon, C.E. Communication in the presence of noise. Proc. Inst. Radio Eng. 1949, 37, 10–21.

98. Liu, C.-C.; Chen, Y.-H.; Wen, H.-L. Supporting the annual international black-faced spoonbill census with a low-cost unmanned aerial vehicle. Ecol. Inform. 2015, 30, 170–178.

99. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2018, 5, 8–36.

100. Kellenberger, B.; Marcos, D.; Tuia, D. Detecting mammals in UAV images: Best practices to address a substantially imbalanced dataset with deep learning. Remote Sens. Environ. 2018, 216, 139–153.

101. Mulero-Pazmany, M.; Stolper, R.; van Essen, L.D.; Negro, J.J.; Sassen, T. Remotely piloted aircraft systems as a rhinoceros anti-poaching tool in Africa. PLoS ONE 2014, 9, e83873.

102. Liao, X. The Second Chief Meeting of the Research Center of UAV Application and Regulation, CAS. Available online: http://www.igsnrr.ac.cn/xwzx/zhxw/201709/t20170901_4853837.html (accessed on 19 January 2018).

103. Cunliffe, A.M.; Anderson, K.; DeBell, L.; Duffy, J.P. A UK Civil Aviation Authority (CAA)-approved operations manual for safe deployment of lightweight drones in research. Int. J. Remote Sens. 2017, 38, 2737–2744.

104. van Toor, M.L.; Kranstauber, B.; Newman, S.H.; Prosser, D.J.; Takekawa, J.Y.; Technitis, G.; Weibel, R.; Wikelski, M.; Safi, K. Integrating animal movement with habitat suitability for estimating dynamic migratory connectivity. Landsc. Ecol. 2018, 33, 879–893.

105. Madec, S.; Jin, X.; Lu, H.; De Solan, B.; Liu, S.; Duyme, F.; Heritier, E.; Baret, F. Ear density estimation from high resolution RGB imagery using deep learning technique. Agric. For. Meteorol. 2019, 264, 225–234.

106. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

107. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 2961–2969.

108. Clark, B.L.; Bevanda, M.; Aspillaga, E.; Jørgensen, N.H. Bridging disciplines with training in remote sensing for animal movement: An attendee perspective. Remote Sens. Ecol. Conserv. 2016, 3, 30–37.

109. China State Administration of Forestry. Notifications of the Relevant Work of the State Forestry Administration on the Full Start of the Second Investigation of Terrestrial Wildlife Resources. Available online: http://www.forestry.gov.cn/portal/main/govfile/13/govfile_1817.htm (accessed on 7 December 2017).

| | Spaceborne Surveys | Manned Aerial Surveys | UAS Surveys |
|---|---|---|---|
| Platforms | Satellite images were from GeoEye-1 (3/15), WorldView-1/2/3/4 (7/15), QuickBird-2 (3/15), and IKONOS (1/15) satellites. | Aircraft were mainly light manned helicopters (3/12) and fixed-wing aircraft (11/12). Surveyors of terrestrial mammals prefer helicopters, whereas surveyors of animals in plains or marine environments prefer fixed-wing aircraft. One long-term study used a combination of helicopters and fixed-wing aircraft. | UASs used include small fixed-wing UASs (11/18) and multicopters (9/18). Fixed-wing UASs are typically used to survey large or marine animals. Multicopters are typically used to survey animals in uneven terrain and densely vegetated areas, as well as birds, owing to their vertical takeoff and landing capability and low noise. |
| Sensors | Panchromatic and multispectral images are the most widely used data (two studies used panchromatic imagery; the other thirteen used multispectral imagery). Pansharpening was used to merge high-resolution panchromatic and lower-resolution multispectral imagery into a single high-resolution color image, increasing the contrast between target objects and background. | Real-time (visual) surveys need no imaging sensors. Photographic surveys used still RGB images, video, and thermal infrared imagery to detect wild animals. | RGB images are suitable for detecting wild animals in open land or marine environments. Thermal infrared cameras are used primarily to detect wild animals in forests and other densely vegetated areas. In recent years, radio-tracking devices have been mounted on UASs to study the behavior of small animals. |
| Resolution | Up to 0.31 m in the panchromatic band and up to 1.24 m in the multispectral bands (WorldView-3 and -4) | Up to 2.5 cm (RGB imagery) | Up to 2 mm (RGB imagery) |
| Coverage | Regional to global scales | Used for regular, geographically comprehensive monitoring at regional scales; sampling distances of up to 12,800 km, covering approximately 6000 km² | No more than 50 km²; most survey areas were <2 km², and the smallest was only 4 m × 4 m |
| Cost | Relatively low (0.5 m resolution imagery costs USD 14–27.5 per km², depending on spectral resolution, order area, and data age) | Expensive for small study areas because of the cost of the aircraft, operator, and fuel | Medium; regarded as a safer, lower-budget alternative to manned aircraft |
| Surveyed species | Only large (≥0.6 m) individual animals can be identified directly in existing VHR commercial satellite imagery, e.g., wildebeests, zebras, polar bears, albatrosses, southern right whales, and Weddell seals. | Real-time surveys have long been used for terrestrial and marine animals that may occur at low abundance in remote or large areas. Manned aerial imagery allows direct identification of smaller (<0.6 m) animals such as birds, sea turtles, and fish; of large animals that are hard to distinguish from the background at the species level, such as roe deer and red deer; and of animals whose temperature differs markedly from the background, such as Pacific walruses. | UASs allow surveys of smaller animals and their behavior, e.g., butterflies, Bicknell's and Swainson's thrushes, noisy miners, and iguanas. Most UAS applications assess the feasibility of species detection over small geographic areas. |
| Methodology | Direct visual recognition; automatic and semiautomatic detection using pixel-based and object-based methods | Direct visual recognition; automatic and semiautomatic detection using pixel-based and object-based methods and traditional machine learning | Direct visual recognition; automatic and semiautomatic detection using pixel-based and object-based methods, traditional machine learning, and deep learning |
| Pixel number of target species in imagery | 2–6 pixels | Not investigated, but similar to UAS imagery | Most animals cover 22–79 pixels |
| Accuracy (all platforms) | Automated and semiautomatic counts from remote sensing imagery are reported to be highly correlated with manual counts when the algorithms are applied to small areas in relatively homogeneous environments. Manual counts from remote sensing imagery are also reported to be highly correlated with ground-based counts collected within a short time interval. Remote sensing-based counts often underestimate populations because some animals are not visible in the imagery, especially in densely vegetated areas and aquatic environments; higher-resolution imagery increases the probability of detection. | | |
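The accuracy row above mixes two different questions: whether remote sensing counts track reference counts from image to image (correlation) and whether they agree in total (bias). A minimal sketch of both checks, assuming paired per-image counts are available; the function names and the example counts are hypothetical and do not come from any reviewed study:

```python
import math


def pearson_r(manual, auto):
    """Pearson correlation between paired per-image counts."""
    if len(manual) != len(auto) or len(manual) < 2:
        raise ValueError("need two count series of equal length >= 2")
    m_mean = sum(manual) / len(manual)
    a_mean = sum(auto) / len(auto)
    cov = sum((m - m_mean) * (a - a_mean) for m, a in zip(manual, auto))
    var_m = sum((m - m_mean) ** 2 for m in manual)
    var_a = sum((a - a_mean) ** 2 for a in auto)
    if var_m == 0 or var_a == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_m * var_a)


def relative_bias(manual, auto):
    """(total automated - total manual) / total manual; negative means undercount."""
    total_manual = sum(manual)
    if total_manual == 0:
        raise ValueError("manual total must be non-zero")
    return (sum(auto) - total_manual) / total_manual


# Hypothetical paired counts for four image tiles.
manual_counts = [12, 30, 7, 45]
auto_counts = [11, 28, 7, 41]

print(f"r = {pearson_r(manual_counts, auto_counts):.3f}")
print(f"relative bias = {relative_bias(manual_counts, auto_counts):+.1%}")
```

On these made-up tiles the correlation is close to 1 while the automated total is about 7% lower than the manual total, which is why a high correlation alone does not rule out systematic undercounting.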

| No. | Sensor/Instrument | Sensor Type | Spatial Resolution (Nadir) | Agency | Launch Year |
|---|---|---|---|---|---|
| 1 | IKONOS | Optical | Panchromatic 1 m, multispectral 4 m | DigitalGlobe, USA | 1999 |
| | QuickBird-2 | Optical | Panchromatic 0.61 m, multispectral 2.62 m | DigitalGlobe, USA | 2001 |
| | GeoEye-1 | Optical | Panchromatic 0.41 m, multispectral 1.65 m | DigitalGlobe, USA | 2008 |
| | WorldView-1 | Optical | Panchromatic 0.46 m | DigitalGlobe, USA | 2007 |
| | WorldView-2 | Optical | Panchromatic 0.46 m, multispectral 1.85 m | DigitalGlobe, USA | 2009 |
| | WorldView-3/4 | Optical | Panchromatic 0.31 m, multispectral 1.24 m | DigitalGlobe, USA | 2014/2016 |
| 2 | COSMO-SkyMed 1/2/3/4/5/6 | SAR | X-band, up to 1 m | Italian Space Agency | 2007–2018 |
| 3 | Pleiades-1/2 | Optical | Panchromatic 0.5 m, multispectral 2 m | French Space Agency and EADS Astrium | 2011/2012 |
| 4 | TerraSAR-X/TanDEM-X | SAR | X-band, up to 1 m | German Aerospace Center and EADS Astrium | 2007/2010 |
| 5 | Resurs-DK1 | Optical | Panchromatic 1 m, multispectral 2–3 m | Russian Space Agency | 2006 |
| 6 | Kompsat-2 | Optical | Panchromatic 1 m, multispectral 4 m | Korea Aerospace Research Institute | 2006 |
| | Kompsat-3 | Optical | Panchromatic 0.7 m, multispectral 2.8 m | Korea Aerospace Research Institute | 2012 |
| 7 | CartoSat-2/2A/2B | Optical | Panchromatic 1 m | Indian Space Research Organization | 2007 |
| 8 | EROS-B | Optical | Panchromatic 0.7 m | Israel Aircraft Industries Ltd. (builder) and ImageSat International N.V. (owner) | 2006 |
| 9 | GF-2 | Optical | Panchromatic 0.8 m, multispectral 3.2 m | State Administration of Science, Technology and Industry for National Defense, China | 2014 |
| 10 | Beijing-2 | Optical | Panchromatic 0.8 m, multispectral 3.2 m | Twenty First Century Aerospace Technology Co., Ltd., China | 2015 |
| 11 | SuperView-1 | Optical | Panchromatic 0.5 m, multispectral 2 m | China Aerospace Science and Technology Corporation | 2016 |
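The nadir resolutions in this table translate directly into how many pixels an animal occupies. A back-of-the-envelope sketch, assuming a square ground sampling distance (GSD), an animal approximated by a rectangular footprint, and a detection floor of two pixels across the animal. The two-pixel floor is an assumption loosely motivated by the sampling argument in Shannon (1949), not a threshold stated by the reviewed studies, and the body size is hypothetical:

```python
# Panchromatic nadir GSD in metres for a subset of the sensors in the table above.
PAN_GSD_M = {
    "IKONOS": 1.0,
    "QuickBird-2": 0.61,
    "WorldView-2": 0.46,
    "GeoEye-1": 0.41,
    "WorldView-3/4": 0.31,
}


def pixels_across(length_m: float, gsd_m: float) -> float:
    """Pixels spanned along one axis by an object of the given ground length."""
    return length_m / gsd_m


def footprint_pixels(length_m: float, width_m: float, gsd_m: float) -> float:
    """Approximate pixel count of a rectangular body footprint."""
    return pixels_across(length_m, gsd_m) * pixels_across(width_m, gsd_m)


def min_detectable_length(gsd_m: float, min_pixels: float = 2.0) -> float:
    """Smallest ground length covering at least `min_pixels` pixels (assumed floor)."""
    return gsd_m * min_pixels


body_length_m, body_width_m = 2.0, 0.8  # hypothetical large mammal
for sensor, gsd in PAN_GSD_M.items():
    print(
        f"{sensor:14s} GSD {gsd:.2f} m: "
        f"{pixels_across(body_length_m, gsd):4.1f} px long, "
        f"{footprint_pixels(body_length_m, body_width_m, gsd):5.1f} px total, "
        f"min. length {min_detectable_length(gsd):.2f} m"
    )
```

Under the two-pixel assumption, the 0.31 m WorldView-3/4 band gives a minimum length of about 0.62 m, and a 2 m animal spans roughly 2 to 6.5 pixels along its length across these sensors; both happen to be consistent with the ≥0.6 m size limit and the 2–6 pixel range quoted for spaceborne surveys.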

| Group | Species or Items Detected | Satellites | Resolution (in the Panchromatic Band) | Data Type | Study |
|---|---|---|---|---|---|
| Terrestrial mammals | wildebeests (Connochaetes gnou), zebras (Equus quagga) | GeoEye-1 | 0.5 m | Multispectral imagery | [29] |
| | wildebeests (Connochaetes gnou), zebras (Equus quagga) | GeoEye-1 | 0.5 m | Panchromatic imagery | [66] |
| | polar bears (Ursus maritimus) | WorldView-2 and QuickBird | 0.5 and 0.6 m, respectively | Multispectral imagery | [30] |
| | polar bears (Ursus maritimus) | GeoEye-1 | 0.5 m | Panchromatic imagery | [31] |
| | muskoxen (Ovibos moschatus) | WorldView-1 and WorldView-2 | 0.5 m | Multispectral imagery | [12] |
| Aquatic and amphibious animals | walruses and bowhead whales | GeoEye-1 | 0.5 m | Panchromatic imagery | [31] |
| | southern right whales (Eubalaena australis) | WorldView-2 | 0.5 m | Multispectral imagery | [33] |
| | fin whales, southern right whales, and gray whales | WorldView-3 | 0.31 m | Multispectral imagery (pansharpened) | [34] |
| | Weddell seals (Leptonychotes weddellii) | QuickBird-2 and WorldView-1 | 0.6 m | Multispectral imagery (pansharpened) | [35] |
| | emperor penguins (Aptenodytes forsteri) | QuickBird | 0.6 m | Multispectral imagery (pansharpened) | [67] |
| | humpback whales (up to 10 m in length) | IKONOS | 1 m | Multispectral imagery (pansharpened) | [68] |
| | elephant seals (Mirounga leonina) | GeoEye-1 | 0.5 m | Multispectral imagery (pansharpened) | [69] |
| | Weddell seals (Leptonychotes weddellii) | DigitalGlobe and GeoEye satellites (specific satellites not given) | 0.6 m | Multispectral imagery (pansharpened) | [89] |
| Flying organisms and insects | wandering albatross (Diomedea exulans) and northern royal albatross (Diomedea sanfordi) | WorldView-3 | 0.3 m | Multispectral imagery | [32] |
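
The panchromatic resolutions in this table largely determine how many pixels an individual animal occupies in the imagery. A rough estimate treats the animal's top-down outline as a rectangle and divides its area by the area of one square pixel. The sketch below uses the resolutions listed above and a hypothetical 2.0 m × 0.8 m footprint, chosen for illustration only and not taken from any cited study.

```python
# Panchromatic resolutions (m) taken from the spaceborne-survey table above.
PAN_GSD_M = {"WorldView-3": 0.31, "GeoEye-1": 0.5, "QuickBird": 0.6, "IKONOS": 1.0}


def pixels_covered(length_m: float, width_m: float, gsd_m: float) -> float:
    """Approximate pixel count of a rectangular footprint at a given resolution.

    Divides the footprint area by the area of one square pixel (gsd x gsd).
    Ignores pixel alignment, mixed edge pixels and animal posture, so it is
    only a rough estimate.
    """
    return (length_m * width_m) / (gsd_m ** 2)


# Hypothetical 2.0 m x 0.8 m footprint (illustrative values only).
for sensor, gsd in PAN_GSD_M.items():
    print(f"{sensor} ({gsd} m): about {pixels_covered(2.0, 0.8, gsd):.1f} pixels")
```

Because the pixel count scales with the inverse square of the resolution, moving from 1 m (IKONOS) to 0.31 m (WorldView-3) increases it roughly tenfold (1/0.31² ≈ 10.4).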

| Group | Species or Items Detected | Platforms | Sensors | Data Type | Surveyed Area (km²) | Flight Height (m) | Study |
|---|---|---|---|---|---|---|---|
| Terrestrial mammals | polar bears (Ursus maritimus) | Helicopter | Real-time surveys, no sensors | No imagery | 263 | 100 | [37] |
| | polar bears (Ursus maritimus) | Bell 206 LongRanger (helicopter) | Real-time surveys, no sensors | No imagery | ~6000 | ~120 | [18] |
| | buffaloes (Syncerus caffer), elands (Taurotragus oryx), elephants (Loxodonta africana), and giraffes (Giraffa camelopardalis) | Cessna 182 or 185 (fixed-wing aircraft) | Real-time surveys, no sensors | No imagery | <10,000 | Not mentioned | [41] |
| | pronghorns (Antilocapra americana) | Maule 5 (fixed-wing aircraft) | Real-time surveys, no sensors | No imagery | ~60 | 91.4 | [2] |
| | red kangaroos (Megaleia rufa), grey kangaroos (Macropus giganteus), and sheep | Cessna 182 (fixed-wing aircraft) | Real-time surveys, no sensors | No imagery | 136 | 46–183 | [91] |
| | buffaloes, giraffes, elands, waterbucks, elephants, impalas, ostriches, cattle, goats, and sheep | Cessna 185 or Partenavia (fixed-wing, high-wing aircraft) | Real-time surveys, no sensors | No imagery | 6000 | 90–120 | [17] |
| | red deer (Cervus elaphus), fallow deer (Dama dama), roe deer (Capreolus capreolus), wild boar (Sus scrofa), foxes, wolves, and badgers | Microlight S-Stol (fixed-wing, electric) | JENOPTIK infrared camera and Canon EOS 5D Mark II | Infrared videos and RGB images | 4 | 450 | [76] |
| Aquatic and amphibious animals | lemon sharks (Negaprion brevirostris) | Cessna 172, Beechcraft 35 Bonanza, Piper PA-28 Archer, and Piper PA-31-350 Navajo Chieftain (fixed-wing, low-wing aircraft) | Real-time surveys, no sensors | No imagery | ~100 | 100 | [38] |
| | humpback whales (Megaptera novaeangliae) | Mitsubishi Marquise (fixed-wing, flat-window aircraft) | Real-time surveys, no sensors | No imagery | 1180 | 152.4 | [39] |
| | dugongs (Dugong dugon), dolphins, and sea turtles (Chelonia mydas) | Partenavia 68B (fixed-wing, high-wing aircraft) | Real-time surveys, no sensors | No imagery | ~120 | 137–274 | [40] |
| | sea turtles, sharks, manta rays, small delphinids, and large delphinids | Helicopters in early surveys (1963–1965; e.g., Sikorsky SH-3 Sea King) and 4-seat single-engine fixed-wing airplanes in later surveys (1975–2012; e.g., Cessna 172 Skyhawk) | Real-time surveys, no sensors | No imagery | 70.16 | 92–200 | [42] |
| | Pacific walrus (Odobenus rosmarus divergens) | Aero Commander 690B (fixed-wing, high-wing aircraft) | Daedalus Airborne Multispectral Scanner (AMS) and Nikon D1X digital camera | Thermal infrared images and RGB images | ~11,398.5 | 457–3200 | [74] |
| Flying organisms and insects | greater flamingo (Phoenicopterus roseus) | Not mentioned | 35 mm film or 5-megapixel digital reflex cameras | RGB images | Not mentioned | 300 | [71] |
| | lesser snow geese (Chen caerulescens) | Not mentioned | DSS 439 39-megapixel aerial camera | RGB images | Not mentioned | Not mentioned | [72] |
| | common scoter (Melanitta nigra), great cormorant (Phalacrocorax carbo), diver species group (Gavia sp.), Sandwich tern (Sterna sandvicensis), and Manx shearwater (Puffinus puffinus) | Twin-engine Cessna 402B and Cessna 404 (fixed-wing aircraft) | Vexcel UltraCAM-D and UltraCAM-XP | RGB images | 670 | 475 | [73] |

| Group | Species or Items Detected | UAS Model (Type of UAS) | Sensor | Data Type | Surveyed Area (km²) | Flight Height (m) | Study |
|---|---|---|---|---|---|---|---|
| Terrestrial mammals | roe deer (Capreolus pygargus) | Falcon-8 (octocopter, electric) | FLIR Tau 640 thermal imaging camera | Thermal image | 0.71 | 30–50 | [77] |
| | elephants (Loxodonta africana) | Gatewing X100 (fixed-wing, electric) | Ricoh GR3 still camera | RGB image | 13.79 | 100–600 | [43] |
| | cows (Bos taurus) | Custom-made 750 mm carbon folding Y6 multirotor (hexacopter, electric) | FLIR Tau 2 LWIR thermal imaging camera | Thermal image | <1.0 * | 80–120 | [96] |
| | koalas (Phascolarctos cinereus) | S800 EVO (hexacopter, electric) | Mobius RGB camera + FLIR Tau 2-640 thermal imaging camera | RGB video + thermal video | 0.01 * | 20–60 | [62] |
| | red deer (Cervus elaphus), roe deer (Capreolus capreolus), and wild boar (Sus scrofa) | Skywalker X8 (fixed-wing, electric) | IRMOD v640 thermal imaging camera | Video | ~1.0 * | 149–150 | [52] |
| Aquatic and amphibious animals | dugongs (Dugong dugon) | ScanEagle (fixed-wing, fuel) | Nikon D90 SLR camera + fixed video camera | RGB image + RGB video | 1.3 | 152–304 | [51] |
| | American alligators (Alligator mississippiensis) and Florida manatees (Trichechus manatus) | 1.5-m wingspan MLB FoldBat (fixed-wing, fuel) | Canon Elura 2 | RGB video | 1.3 | 100–150 | [58] |
| | leopard seals (Hydrurga leptonyx) | APH-22 (hexacopter, electric) | Olympus E-P1 | RGB image | <1.0 * | 45 | [57] |
| | humpback whales (Megaptera novaeangliae) | ScanEagle (fixed-wing, fuel) | Nikon D90 12-megapixel digital SLR camera | RGB image | 35.2 * | 732 | [50] |
| | blacktip reef sharks (Carcharhinus melanopterus) and pink whiprays (Himantura fai) | DJI Phantom 2 (quadcopter, electric) | GoPro Hero3 | RGB video | 0.0288 | 12 | [55] |
| | gray seals (Halichoerus grypus) | senseFly eBee (fixed-wing, electric) | Canon S110 + FLIR Tau 2-640 thermal imaging camera | RGB image + thermal image | 0.16 * | 250 | [53] |
| Flying organisms and insects | white ibises (Eudocimus albus) | 1.5-m wingspan MLB FoldBat (fixed-wing, fuel) | Canon Elura 2 | RGB video | 1.3 | 100–150 | [58] |
| | black-headed gulls (Chroicocephalus ridibundus) | Multiplex Twin Star II (fixed-wing, electric) | Panasonic Lumix FT-1 | RGB image | 0.0558 | 30–40 | [82] |
| | frigatebirds (Fregata ariel), crested terns (Thalasseus bergii), and royal penguins (Eudyptes schlegeli) | 3D Robotics (octocopter, electric) | Canon EOS M | RGB image | <1.0 * | 75 | [54] |
| | gentoo penguins (Pygoscelis papua) and chinstrap penguins (Pygoscelis antarctica) | APH-22 (hexacopter, electric) | Olympus E-P1 | RGB image | <1.0 * | 45 | [57] |
| | canvasbacks (Aythya valisineria), western/Clark’s grebes (Aechmophorus occidentalis/clarkii), and double-crested cormorants (Phalacrocorax auritus) | Honeywell RQ-16 T-Hawk (ducted-fan VTOL, fuel) and AeroVironment RQ-11A (fixed-wing, electric) | Canon PowerShot SX230, SX260, GoPro Hero3, and Canon PowerShot S100 | RGB image | <1.0 * | 45–76 | [11] |
| | butterflies (Libythea celtis) | Phantom 2 Vision+ (quadcopter, electric) | GoPro Hero3 | RGB image | 0.000016 | 4 | [56] |
| | Bicknell’s thrushes (Catharus bicknelli) and Swainson’s thrushes (Catharus ustulatus) | Sky Hero Spyder X8 (octocopter, electric) | Radio transmitter (Avian NanoTag model NTQB-4-2, Lotek Wireless Inc., Newmarket, Ont., Canada) | Radio-tracking data | <1.0 * | 50 | [64] |
| | noisy miners (Manorina melanocephala) | Not mentioned (hexacopter, electric) | Radio transmitter (Avian NanoTag model NTQB-4-2, Lotek Wireless Inc., Newmarket, Ont., Canada) | Radio-tracking data | <1.0 * | 50 | [48] |
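
For the frame cameras in this table, flight height sets the ground sample distance (GSD) and the ground width covered by each image. Under a pinhole-camera model with a nadir view over flat terrain, similar triangles give GSD = H × p / f, where H is the flight height, p the sensor pixel pitch and f the focal length. The sketch below evaluates this for heights spanning the table; the 17 µm pitch, 13 mm lens and 640-pixel image width describe a hypothetical thermal camera, not the configuration of any cited study.

```python
def ground_sample_distance(height_m: float, pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Ground sample distance (m per pixel) of a nadir-looking frame camera.

    Pinhole model over flat terrain: GSD / H = pixel pitch / focal length.
    """
    return height_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def swath_width(height_m: float, pixel_pitch_um: float, focal_length_mm: float, n_pixels: int) -> float:
    """Ground width (m) covered by one image row of n_pixels pixels."""
    return ground_sample_distance(height_m, pixel_pitch_um, focal_length_mm) * n_pixels


# Hypothetical thermal camera: 17 um pixel pitch, 13 mm lens, 640 pixels across.
PITCH_UM, FOCAL_MM, WIDTH_PX = 17.0, 13.0, 640

for height in (12, 45, 120, 250):  # flight heights (m) spanning the UAS table
    gsd = ground_sample_distance(height, PITCH_UM, FOCAL_MM)
    swath = swath_width(height, PITCH_UM, FOCAL_MM, WIDTH_PX)
    print(f"H = {height:>3} m: GSD = {gsd * 100:.1f} cm, swath = {swath:.0f} m")
```

Doubling the flight height doubles both the GSD and the swath, illustrating the trade-off between the area covered per image and the detail available for each animal.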

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, D.; Shao, Q.; Yue, H. Surveying Wild Animals from Satellites, Manned Aircraft and Unmanned Aerial Systems (UASs): A Review. Remote Sens. 2019, 11, 1308. https://doi.org/10.3390/rs11111308

Wang, Dongliang, Quanqin Shao, and Huanyin Yue. 2019. "Surveying Wild Animals from Satellites, Manned Aircraft and Unmanned Aerial Systems (UASs): A Review" Remote Sensing 11, no. 11: 1308. https://doi.org/10.3390/rs11111308