Joint Local Block Grouping with Noise-Adjusted Principal Component Analysis for Hyperspectral Remote-Sensing Imagery Sparse Unmixing

Abstract

1. Introduction

2. Spatial Regularization Sparse Unmixing (SRSU) Model

3. Joint Local Block Grouping with Noise-Adjusted Principal Component Analysis (NAPCA) Sparse Unmixing

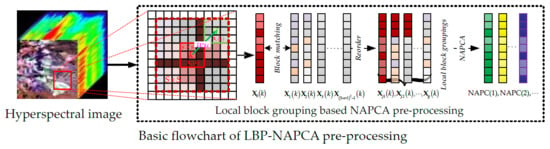

3.1. Local Block Grouping (LBG)

3.2. NAPCA for LBGs

3.3. LBG–NAPCA-Based Sparse Unmixing

Algorithm 1: Pseudocode of the proposed method

4. Experiments with Simulated Data

4.1. Simulated Datasets

- (1) Simulated Data Cube 1 (DC-1): DC-1 was generated using a linear mixture model, with 75 × 75 pixels and 224 bands per pixel. Five endmembers (shown in Figure 3a) were randomly selected from a standard spectral library, denoted as A (more information can be found at http://speclab.cr.usgs.gov/spectral.lib06). The abundance images have a simple spatial layout consisting of distinct square regions. Finally, independent and identically distributed (i.i.d.) Gaussian noise was added at SNR = 30 dB, which corresponds to heavy noise contamination. The true abundance maps of DC-1 are shown in Figure 3b–f.

- (2) Simulated Data Cube 2 (DC-2): DC-2, provided by Dr. M. D. Iordache and Prof. J. M. Bioucas-Dias, has an image size of 100 × 100 pixels and 224 bands, and serves as a benchmark for spectral unmixing algorithms. Nine spectral signatures with spectral angles smaller than 4 degrees were selected from the standard spectral library A, which means that they are easily confused. The fractional abundance maps were then generated from a Dirichlet distribution, uniform over the probability simplex, so that they exhibit better spatial homogeneity. Finally, i.i.d. Gaussian noise was added at SNR = 30 dB. Figure 4 shows the true fractional abundance maps and the nine spectral curves.

- (3) Simulated Data Cube 3 (DC-3): DC-3, with 100 × 100 pixels and 221 bands per pixel, was created for benchmarking the accuracy of spectral unmixing and is provided in the HyperMix tool [69]. The data cube contains fractal patterns, since natural objects such as clouds, mountain ranges, coastlines, and vegetation can be approximated by fractals to a certain degree. The endmembers for DC-3 were randomly selected from a USGS library after removing certain bands. In addition, zero-mean Gaussian noise was added at SNR = 10 dB, which makes this data cube of poor quality. The true abundance maps of the nine endmembers are shown in Figure 5.
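The generation recipe shared by these data cubes (linear mixing of library spectra, abundances on the probability simplex, additive Gaussian noise at a target SNR) can be illustrated with a minimal sketch. It is not the authors' generation code: the endmember matrix `M` is a hypothetical stand-in of random values rather than USGS signatures, the function names `add_gaussian_noise` and `simulate_cube` are invented for illustration, and the Dirichlet draw is made independently per pixel, so it does not reproduce the spatial structure of the actual benchmark maps.

```python
import numpy as np


def add_gaussian_noise(clean, snr_db, rng):
    """Add zero-mean i.i.d. Gaussian noise scaled to a target SNR in dB."""
    signal_power = np.mean(clean ** 2)
    noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    noise = rng.normal(0.0, np.sqrt(noise_power), size=clean.shape)
    return clean + noise


def simulate_cube(endmembers, abundances, snr_db, seed=0):
    """Linear mixture model Y = M A + N.

    endmembers: (bands, p) matrix M; abundances: (p, pixels) matrix A.
    Returns the clean and the noisy (bands, pixels) data matrices.
    """
    rng = np.random.default_rng(seed)
    clean = endmembers @ abundances
    return clean, add_gaussian_noise(clean, snr_db, rng)


# Hypothetical stand-ins: random non-negative "spectra", not USGS signatures.
rng = np.random.default_rng(42)
bands, p, rows, cols = 224, 9, 100, 100
M = rng.uniform(0.0, 1.0, size=(bands, p))

# Dirichlet(1, ..., 1) is uniform over the probability simplex, so every
# pixel's abundances are non-negative and sum to one.
A = rng.dirichlet(np.ones(p), size=rows * cols).T  # shape (p, pixels)

clean, noisy = simulate_cube(M, A, snr_db=30.0)
measured_snr = 10.0 * np.log10(np.sum(clean ** 2) / np.sum((noisy - clean) ** 2))
print(f"measured SNR: {measured_snr:.2f} dB")
```

Here the SNR is taken as the ratio of mean signal power to noise power over the whole cube, which is one common convention; the paper may define it differently.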

4.2. Results and Discussion

5. Experiments with Real Hyperspectral Imagery

5.1. Real Hyperspectral Datasets

5.2. Results and Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ghamisi, P.; Yokoya, N.; Li, J.; Liao, W.; Liu, S.; Plaza, J.; Rasti, B.; Plaza, A. Advances in hyperspectral image and signal processing. IEEE Geosci. Remote Sens. Mag. 2017, 5, 37–78.

- Tong, Q.; Xue, Y.; Zhang, L. Progress in hyperspectral remote sensing science and technology in China over the past three decades. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 70–91.

- Li, C.; Liu, Y.; Cheng, J.; Song, R.; Peng, H.; Chen, Q.; Chen, X. Hyperspectral unmixing with bandwise generalized bilinear model. Remote Sens. 2018, 10, 1600.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A general end-to-end two-dimensional CNN framework for hyperspectral image change detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

- Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban change detection based on Dempster-Shafer theory for multi-temporal very high-resolution imagery. Remote Sens. 2018, 10, 980.

- Luo, H.; Wang, L.; Wu, C.; Zhang, L. An improved method for impervious surface mapping incorporating LiDAR data and high-resolution imagery at different acquisition times. Remote Sens. 2018, 10, 1349.

- Liu, J.; Luo, B.; Doute, S.; Chanussot, J. Exploration of planetary hyperspectral images with unsupervised spectral unmixing: A case study of planet Mars. Remote Sens. 2018, 10, 737.

- Marcello, J.; Eugenio, F.; Martin, J.; Marques, F. Seabed mapping in coastal shallow waters using high resolution multispectral and hyperspectral imagery. Remote Sens. 2018, 10, 1208.

- Zhang, X.; Li, C.; Zhang, J.; Chen, Q.; Feng, J.; Jiao, L.; Zhou, H. Hyperspectral unmixing via low-rank representation with sparse consistency constraint and spectral library pruning. Remote Sens. 2018, 10, 339.

- Salehani, Y.E.; Gazor, S.; Cheriet, M. Sparse hyperspectral unmixing via heuristic lp-norm approach. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 1191–1202.

- Shi, C.; Wang, L. Linear spatial spectral mixture model. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3599–3611.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.M.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Nascimento, J.M.P.; Bioucas-Dias, J.M. Does independent component analysis play a role in unmixing hyperspectral data? IEEE Trans. Geosci. Remote Sens. 2005, 43, 175–187.

- Liu, X.; Xia, W.; Wang, B.; Zhang, L. An approach based on constrained nonnegative matrix factorization to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 757–772.

- Wang, M.; Zhang, B.; Pan, X.; Yang, S. Group low-rank nonnegative matrix factorization with semantic regularizer for hyperspectral unmixing. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 1022–1029.

- Fang, H.; Li, A.; Xu, H.; Wang, T. Sparsity-constrained deep nonnegative matrix factorization for hyperspectral unmixing. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1105–1109.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2012, 5, 354–379.

- Hendrix, E.M.T.; Garcia, I.; Plaza, J.; Martin, G.; Plaza, A. A new minimum-volume enclosing algorithm for endmember identification and abundance estimation in hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2744–2757.

- Somers, B.; Zortea, M.; Plaza, A.; Asner, G. Automated extraction of image-based endmember bundles for improved spectral unmixing. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2012, 5, 396–408.

- Cohen, J.E.; Gillis, N. Spectral unmixing with multiple dictionaries. IEEE Geosci. Remote Sens. Lett. 2018, 15, 187–191.

- Xu, X.; Tong, X.; Plaza, A.; Zhong, Y.; Xie, H.; Zhang, L. Joint sparse sub-pixel model with endmember variability for remotely sensed imagery. Remote Sens. 2017, 9, 15.

- Fu, X.; Ma, W.-K.; Bioucas-Dias, J.M.; Chan, T.-H. Semiblind hyperspectral unmixing in the presence of spectral library mismatches. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5171–5184.

- Thouvenin, P.-A.; Dobigeon, N.; Tourneret, J.-Y. Hyperspectral unmixing with spectral variability using a perturbed linear mixing model. IEEE Trans. Signal Process. 2016, 64, 525–538.

- Drumetz, L.; Veganzones, M.-A.; Henrot, S.; Phlypo, R.; Chanussot, J.; Jutten, C. Blind hyperspectral unmixing using an extended linear mixing model to address spectral variability. IEEE Trans. Image Process. 2016, 25, 3890–3905.

- Hong, D.; Yokoya, N.; Chanussot, J.; Zhu, X.-X. An augmented linear mixing model to address spectral variability for hyperspectral unmixing. IEEE Trans. Image Process. 2019, 28, 1923–1938.

- Drumetz, L.; Meyer, T.R.; Chanussot, J.; Bertozzi, A.L.; Jutten, C. Hyperspectral image unmixing with endmember bundles and group sparsity inducing mixed norms. IEEE Trans. Image Process. 2019.

- Hong, D.; Zhu, X.-X. SULoRA: Subspace unmixing with low rank attribute embedding for hyperspectral data analysis. IEEE J. Sel. Top. Signal Process. 2018, 12, 1351–1363.

- Bioucas-Dias, J.M.; Figueiredo, M. Alternating direction algorithms for constrained sparse regression: Application to hyperspectral unmixing. In Proceedings of the 2nd IEEE GRSS Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Reykjavik, Iceland, 14–16 June 2010.

- Iordache, M.D. A Sparse Regression Approach to Hyperspectral Unmixing. Ph.D. Thesis, School of Electrical and Computer Engineering, Ithaca, NY, USA, 2011.

- Rizkinia, M.; Okuda, M. Joint local abundance sparse unmixing for hyperspectral images. Remote Sens. 2017, 9, 1224.

- Zhu, F.; Wang, Y.; Xiang, S.; Fan, B.; Pan, C. Structured sparse method for hyperspectral unmixing. ISPRS J. Photogramm. Remote Sens. 2014, 88, 101–118.

- Bruckstein, A.M.; Elad, M.; Zibulevsky, M. On the uniqueness of nonnegative sparse solutions to underdetermined systems of equations. IEEE Trans. Inf. Theory 2008, 54, 4813–4820.

- Candes, E.; Romberg, J.J. Sparsity and incoherence in compressive sampling. Inverse Probl. 2007, 23, 969–985.

- Pati, Y.C.; Rezaiifar, R.; Krishnaprasad, P. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; pp. 40–44.

- Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- Li, C.; Ma, Y.; Mei, X.; Fan, F.; Huang, J.; Ma, J. Sparse unmixing of hyperspectral data with noise level estimation. Remote Sens. 2017, 9, 1166.

- Feng, R.; Wang, L.; Zhong, Y. Least angle regression-based constrained sparse unmixing of hyperspectral remote sensing imagery. Remote Sens. 2018, 10, 1546.

- Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Total variation spatial regularization for sparse hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4484–4502.

- Zhong, Y.; Feng, R.; Zhang, L. Non-local sparse unmixing for hyperspectral remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 1889–1909.

- Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Collaborative sparse regression for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2014, 52, 341–354.

- Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A.; Somers, B. MUSIC-CSR: Hyperspectral unmixing via multiple signal classification and collaborative sparse regression. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4364–4382.

- Feng, R.; Zhong, Y.; Zhang, L. Adaptive non-local Euclidean medians sparse unmixing for hyperspectral imagery. ISPRS J. Photogramm. Remote Sens. 2014, 97, 9–24.

- Feng, R.; Zhong, Y.; Zhang, L. Adaptive spatial regularization sparse unmixing strategy based on joint MAP for hyperspectral remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 5791–5805.

- Zhang, S.; Li, J.; Li, H.; Deng, C.; Plaza, A. Spectral-spatial weighted sparse regression for hyperspectral image unmixing. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3265–3276.

- Wang, S.; Huang, T.; Zhao, X.; Liu, G.; Cheng, Y. Double reweighted sparse regression and graph regularization for hyperspectral unmixing. Remote Sens. 2018, 10, 1046.

- Rudin, L.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

- Xiong, F.; Qian, Y.; Zhou, J.; Tang, Y. Hyperspectral unmixing via total variation regularized nonnegative tensor factorization. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2341–2357.

- Wang, Q.; He, X.; Li, X. Locality and structure regularized low rank representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 911–923.

- Feng, R.; Zhong, Y.; Zhang, L. An improved nonlocal sparse unmixing algorithm for hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 915–919.

- Wang, R.; Li, H.; Liao, W.; Huang, X.; Philips, W. Centralized collaborative sparse unmixing for hyperspectral images. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 1949–1962.

- Wang, S.; Wang, C.M.; Chang, M.L.; Tsai, C.T.; Chang, C.I. Applications of Kalman filtering to single hyperspectral signature analysis. IEEE Sens. J. 2010, 10, 547–563.

- Zhang, S.; Li, J.; Wu, Z.; Plaza, A. Spatial discontinuity-weighted sparse unmixing of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5767–5779.

- Cai, J.F.; Osher, S.; Shen, Z. Convergence of the linearized Bregman iteration for l1-norm minimization. Math. Comput. 2009, 78, 2127–2136.

- Zhang, X.; Burger, M.; Bresson, X.; Osher, S. Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM J. Imaging Sci. 2010, 3, 253–276.

- Lee, J.B.; Woodyatt, A.S.; Berman, M. Enhancement of high spectral resolution remote sensing data by a noise-adjusted principal components transform. IEEE Trans. Geosci. Remote Sens. 1990, 28, 295–304.

- Roger, R.E. A fast way to compute the noise-adjusted principal components transform matrix. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1194–1196.

- Chang, C.I.; Du, Q. Interference and noise-adjusted principal components analysis. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2387–2396.

- Mohan, A.; Sapiro, G.; Bosch, E. Spatially coherent nonlinear dimensionality reduction and segmentation of hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2007, 4, 206–210.

- Li, X.; Wang, G. Optimal band selection for hyperspectral data with improved differential evolution. J. Ambient Intell. Hum. Comput. 2015, 6, 675–688.

- Chaib, S.; Liu, H.; Gu, Y.; Yao, H. Deep feature fusion for VHR remote sensing scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4775–4784.

- Wang, L.; Song, W.; Liu, P. Link the remote sensing big data to the image features via wavelet transformation. Cluster Comput. 2016, 19, 793–810.

- Chaudhury, K.N.; Singer, A. Non-local Euclidean medians. IEEE Signal Process. Lett. 2012, 19, 745–748.

- Pan, S.; Wu, J.; Zhu, X.; Zhang, C. Graph ensemble boosting for imbalanced noisy graph stream classification. IEEE Trans. Cybern. 2015, 45, 954–968.

- Wu, J.; Wu, B.; Pan, S.; Wang, H.; Cai, Z. Locally weighted learning: How and when does it work in Bayesian networks? Int. J. Comput. Int. Sys. 2015, 8, 63–74.

- Wu, J.; Pan, S.; Zhu, X.; Cai, Z.; Zhang, P.; Zhang, C. Self-adaptive attribute weighting for Naive Bayes classification. Expert Syst. Appl. 2015, 42, 1478–1502.

- Buades, A.; Coll, B.; Morel, J.M. A review of image denoising algorithms, with a new one. Multiscale Model. Sim. 2005, 4, 490–530.

- Zhang, L.; Dong, W.; Zhang, D.; Shi, G. Two-stage image denoising by principal component analysis with local pixel grouping. Pattern Recogn. 2010, 43, 1531–1549.

- Eckstein, J.; Bertsekas, D. On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 1992, 55, 293–318.

- Jimenez, L.I.; Martin, G.; Plaza, A. A new tool for evaluating spectral unmixing applications for remotely sensed hyperspectral image analysis. In Proceedings of the International Conference Geographic Object-Based Image Analysis (GEOBIA), Rio de Janeiro, Brazil, 7–9 May 2012; pp. 1–5.

- Zhong, Y.; Wang, X.; Zhao, L.; Feng, R.; Zhang, L.; Xu, Y. Blind spectral unmixing based on sparse component analysis for hyperspectral remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2016, 119, 49–63.

| Data | Metric | SUnSAL | SUnSAL-TV | NLSU | The Proposed Method |
|---|---|---|---|---|---|
| DC-1 | SRE (dB) | 15.1471 | 25.8333 | 29.6473 | 29.9368 |
| | RMSE | 0.0421 | 0.0123 | 0.0079 | 0.0077 |
| | Time (s) | 0.4281 | 30.4375 | 19.5000 | 19.0945 |
| DC-2 | SRE (dB) | 8.0355 | 12.5867 | 15.5208 | 15.7318 |
| | RMSE | 0.1007 | 0.0597 | 0.0426 | 0.0415 |
| | Time (s) | 5.4388 | 63.6796 | 64.1788 | 63.9755 |
| DC-3 | SRE (dB) | 4.5724 | 8.2779 | 9.9863 | 11.1548 |
| | RMSE | 0.1444 | 0.0943 | 0.0774 | 0.0677 |
| | Time (s) | 2.9531 | 43.4063 | 73.7885 | 36.2213 |

| Data | Metric | SUnSAL | SUnSAL-TV | NLSU | The Proposed Method |
|---|---|---|---|---|---|
| R-1 | SRE (dB) | 4.928 | 5.309 | 6.002 | 6.084 |
| | RMSE | 0.3051 | 0.2920 | 0.2696 | 0.2671 |
| | Time (s) | 2.9233 | 9.7656 | 270.9219 | 168.0189 |
| R-2 | SRE (dB) | 8.6392 | 8.6937 | 8.7174 | 8.7408 |
| | RMSE | 0.1240 | 0.1232 | 0.1228 | 0.1225 |
| | Time (s) | 133.1875 | 642.3750 | 586.9375 | 639.1004 |
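For readers who want to reproduce the SRE and RMSE rows, the sketch below computes both metrics from a true and an estimated abundance matrix. It assumes the definitions commonly used in the sparse unmixing literature (SRE as the ratio of signal energy to reconstruction-error energy in dB, RMSE as the root of the mean squared abundance error over all entries); the paper's exact formulas are not reproduced in this section, so its normalization may differ. The function names `sre_db` and `rmse` and the toy matrices are illustrative only.

```python
import numpy as np


def sre_db(x_true, x_est):
    """Signal-to-reconstruction error in dB (assumed definition): higher is better."""
    signal_energy = np.sum(x_true ** 2)
    error_energy = np.sum((x_true - x_est) ** 2)
    return 10.0 * np.log10(signal_energy / error_energy)


def rmse(x_true, x_est):
    """Root mean square error over all abundance entries: lower is better."""
    return np.sqrt(np.mean((x_true - x_est) ** 2))


# Toy example: two endmembers, two pixels, constant estimation error of 0.05.
x_true = np.full((2, 2), 0.5)
x_est = x_true + 0.05

print(f"SRE  = {sre_db(x_true, x_est):.2f} dB")
print(f"RMSE = {rmse(x_true, x_est):.4f}")
```

Under these definitions the two metrics move in opposite directions, which matches the tables: the method with the highest SRE on each dataset also has the lowest RMSE.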

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feng, R.; Wang, L.; Zhong, Y. Joint Local Block Grouping with Noise-Adjusted Principal Component Analysis for Hyperspectral Remote-Sensing Imagery Sparse Unmixing. Remote Sens. 2019, 11, 1223. https://doi.org/10.3390/rs11101223