Analysis of Vegetation Red Edge with Different Illuminated/Shaded Canopy Proportions and to Construct Normalized Difference Canopy Shadow Index

Abstract

1. Introduction

2. Materials and Methods

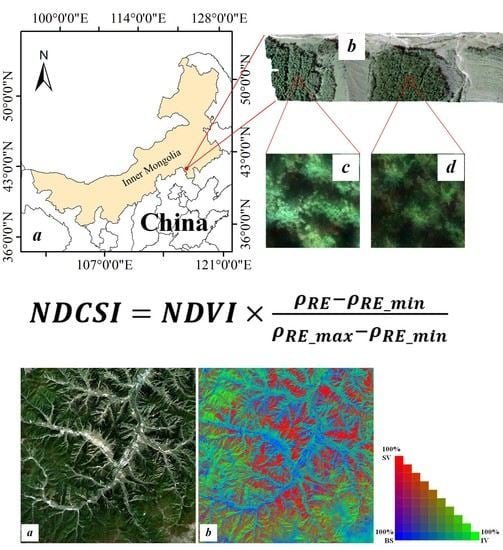

2.1. Study Area

2.2. Data Collection

2.2.1. Unmanned Aerial Vehicle Hyperspectral Image

2.2.2. Sentinel-2 Satellite Multispectral Image

2.3. Red Edge Technique

2.4. Linear Spectral Mixture Analysis
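Linear spectral mixture analysis models a pixel spectrum as an area-weighted sum of endmember spectra. A minimal sketch of the two-endmember case follows. It assumes one illuminated-canopy endmember and one shaded-canopy endmember, a sum-to-one constraint, and non-negative fractions. This is a generic textbook formulation, not necessarily the procedure used in this study. The function name `unmix_two_endmembers` and the example spectra are hypothetical.

```python
import numpy as np


def unmix_two_endmembers(pixel, sunlit, shaded):
    """Two-endmember linear spectral mixture analysis (generic sketch).

    Assumed model: pixel = f * sunlit + (1 - f) * shaded + noise.
    The sum-to-one constraint is built in; 0 <= f <= 1 is enforced by clipping.
    Returns (sunlit_fraction, shaded_fraction, rmse_of_fit).
    """
    pixel = np.asarray(pixel, dtype=float)
    sunlit = np.asarray(sunlit, dtype=float)
    shaded = np.asarray(shaded, dtype=float)

    contrast = sunlit - shaded          # spectral difference between endmembers
    denom = float(np.dot(contrast, contrast))
    if denom == 0.0:
        raise ValueError("endmember spectra are identical; fraction is undefined")

    # Unconstrained least-squares estimate of the single free fraction...
    f = float(np.dot(pixel - shaded, contrast)) / denom
    # ...projected onto [0, 1]; for a 1-D convex quadratic this is the
    # constrained optimum, so both fractions stay non-negative.
    f = min(max(f, 0.0), 1.0)

    residual = pixel - (f * sunlit + (1.0 - f) * shaded)
    rmse = float(np.sqrt(np.mean(residual ** 2)))
    return f, 1.0 - f, rmse


if __name__ == "__main__":
    # Hypothetical 4-band endmember spectra (blue, green, red, NIR reflectance).
    sunlit = np.array([0.04, 0.08, 0.05, 0.45])
    shaded = np.array([0.02, 0.03, 0.02, 0.12])
    mixed = 0.3 * sunlit + 0.7 * shaded    # synthetic pixel, 30 % sunlit
    print(unmix_two_endmembers(mixed, sunlit, shaded))
```

With only two endmembers, the sum-to-one constraint leaves a single unknown. The least-squares fraction therefore has a closed form, and clipping it to [0, 1] gives the constrained optimum. With more endmembers, a constrained solver such as fully constrained least squares would be needed instead.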

3. Normalized Difference Canopy Shadow Index

3.1. Response of Red Edge and NDVI to Vegetation Shadows

3.2. Proposed Normalized Difference Canopy Shadow Index

4. Results

4.1. Results of Unmanned Aerial Vehicle Data

4.2. Results of Sentinel-2 Satellite Data

5. Analysis and Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ahlstrom, A.; Xia, J.Y.; Arneth, A.; Luo, Y.Q.; Smith, B. Importance of vegetation dynamics for future terrestrial carbon cycling. Environ. Res. Lett. 2015, 10, 054019.

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

- Rautiainen, M.; Lang, M.; Mottus, M.; Kuusk, A.; Nilson, T.; Kuusk, J.; Lukk, T. Multi-angular reflectance properties of a hemiboreal forest: An analysis using CHRIS PROBA data. Remote Sens. Environ. 2008, 112, 2627–2642.

- Hasegawa, K.; Izumi, T.; Matsuyama, H.; Kajiwara, K.; Honda, Y. Seasonal change of bidirectional reflectance distribution function in mature Japanese larch forests and their phenology at the foot of Mt. Yatsugatake, central Japan. Remote Sens. Environ. 2018, 209, 524–539.

- Shao, Y.; Taff, G.N.; Walsh, S.J. Shadow detection and building-height estimation using IKONOS data. Int. J. Remote Sens. 2011, 32, 6929–6944.

- Matsuki, T.; Yokoya, N.; Iwasaki, A. Hyperspectral tree species classification of Japanese complex mixed forest with the aid of Lidar data. IEEE J.-Stars 2015, 8, 2177–2187.

- Li, Y.; Gong, P.; Sasagawa, T. Integrated shadow removal based on photogrammetry and image analysis. Int. J. Remote Sens. 2005, 26, 3911–3929.

- le Hegarat-Mascle, S.; Andre, C. Use of Markov Random Fields for automatic cloud/shadow detection on high resolution optical images. ISPRS J. Photogramm. 2009, 64, 351–366.

- Luther, J.E.; Fournier, R.A.; Houle, M.; Leboeuf, A.; Piercey, D.E. Application of shadow fraction models for estimating attributes of northern boreal forests. Can. J. For. Res.-Rev. Can. Rech. For. 2012, 42, 1750–1757.

- Yang, X.; Tang, J.W.; Mustard, J.F.; Lee, J.E.; Rossini, M.; Joiner, J.; Munger, J.W.; Kornfeld, A.; Richardson, A.D. Solar-induced chlorophyll fluorescence that correlates with canopy photosynthesis on diurnal and seasonal scales in a temperate deciduous forest. Geophys. Res. Lett. 2015, 42, 2977–2987.

- Cetin, M. Changes in the amount of chlorophyll in some plants of landscape studies. Kastamonu Univ. J. For. Fac. 2016, 16, 239–245.

- Hernandez-Clemente, R.; North, P.; Hornero, A.; Zarco-Tejada, P.J. Assessing the effects of forest health on sun-induced chlorophyll fluorescence using the FluorFLIGHT 3-D radiative transfer model to account for forest structure. Remote Sens. Environ. 2017, 193, 165–179.

- Guerschman, J.P.; Hill, M.J.; Renzullo, L.J.; Barrett, D.J.; Marks, A.S.; Botha, E.J. Estimating fractional cover of photosynthetic vegetation, non-photosynthetic vegetation and bare soil in the Australian tropical savanna region upscaling the EO-1 Hyperion and MODIS sensors. Remote Sens. Environ. 2009, 113, 928–945.

- Zhang, Q.; Chen, J.M.; Ju, W.; Wang, H.; Qiu, F.; Yang, F.; Fan, W.; Huang, Q.; Wang, Y.; Feng, Y.; Wang, X.; Zhang, F. Improving the ability of the photochemical reflectance index to track canopy light use efficiency through differentiating sunlit and shaded leaves. Remote Sens. Environ. 2017, 194, 1–15.

- Asner, G.P.; Heidebrecht, K.B. Spectral unmixing of vegetation, soil and dry carbon cover in arid regions: Comparing multispectral and hyperspectral observations. Int. J. Remote Sens. 2002, 23, 3939–3958.

- Jia, G.J.; Burke, I.C.; Goetz, A.; Kaufmann, M.R.; Kindel, B.C. Assessing spatial patterns of forest fuel using AVIRIS data. Remote Sens. Environ. 2006, 102, 318–327.

- Zeng, Y.; Schaepman, M.E.; Wu, B.; Clevers, J.G.P.W.; Bregt, A.K. Scaling-based forest structural change detection using an inverted geometric-optical model in the Three Gorges region of China. Remote Sens. Environ. 2008, 112, 4261–4271.

- Mostafa, Y.; Abdelwahab, M.A. Corresponding regions for shadow restoration in satellite high-resolution images. Int. J. Remote Sens. 2018, 39, 7014–7028.

- Arévalo, V.; González, J.; Ambrosio, G. Shadow detection in colour high-resolution satellite images. Int. J. Remote Sens. 2008, 29, 1945–1963.

- Sinclair, T.R.; Murphy, C.E.; Knoerr, K.R. Development and evaluation of simplified models for simulating canopy photosynthesis and transpiration. J. Appl. Ecol. 1976, 13, 813–829.

- Mercado, L.M.; Bellouin, N.; Sitch, S.; Boucher, O.; Huntingford, C.; Wild, M.; Cox, P.M. Impact of changes in diffuse radiation on the global land carbon sink. Nature 2009, 458, 1014–1017.

- Sprintsin, M.; Chen, J.M.; Desai, A.; Gough, C.M. Evaluation of leaf-to-canopy upscaling methodologies against carbon flux data in North America. J. Geophys. Res. Biogeosci. 2012, 117.

- Strahler, A.H.; Jupp, D. Modeling bidirectional reflectance of forests and woodlands using boolean models and geometric optics. Remote Sens. Environ. 1990, 34, 153–166.

- Dymond, J.R.; Shepherd, J.D.; Qi, J. A simple physical model of vegetation reflectance for standardising optical satellite imagery. Remote Sens. Environ. 2001, 75, 350–359.

- Pu, R.; Landry, S. A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens. Environ. 2012, 124, 516–533.

- Jiang, Z.Y.; Huete, A.R.; Chen, J.; Chen, Y.H.; Li, J.; Yan, G.J.; Zhang, X.Y. Analysis of NDVI and scaled difference vegetation index retrievals of vegetation fraction. Remote Sens. Environ. 2006, 101, 366–378.

- Zhou, G.Q.; Liu, S.H. Estimating ground fractional vegetation cover using the double-exposure method. Int. J. Remote Sens. 2015, 36, 6085–6100.

- Song, W.J.; Mu, X.H.; Yan, G.J.; Huang, S. Extracting the green fractional vegetation cover from digital images using a shadow-resistant algorithm (SHAR-LABFVC). Remote Sens.-Basel 2015, 7, 10425–10443.

- Laliberte, A.S.; Rango, A.; Herrick, J.E.; Fredrickson, E.L.; Burkett, L. An object-based image analysis approach for determining fractional cover of senescent and green vegetation with digital plot photography. J. Arid Environ. 2007, 69, 1–14.

- Li, X.W.; Strahler, A.H. Geometric-optical bidirectional reflectance modeling of the discrete crown vegetation canopy: Effect of crown shape and mutual shadowing. IEEE Trans. Geosci. Remote 1992, 30, 276–292.

- Chen, J.M.; Leblanc, S.G. A four-scale bidirectional reflectance model based on canopy architecture. IEEE Trans. Geosci. Remote 1997, 35, 1316–1337.

- Leblanc, S.G.; Bicheron, P.; Chen, J.M.; Leroy, M.; Cihlar, J. Investigation of directional reflectance in boreal forests with an improved four-scale model and airborne POLDER data. IEEE Trans. Geosci. Remote 1999, 37, 1396–1414.

- Chen, J.M.; Leblanc, S.G. Multiple-scattering scheme useful for geometric optical modeling. IEEE Trans. Geosci. Remote 2001, 39, 1061–1071.

- Turner, D.; Lucieer, A.; Wallace, L. Direct georeferencing of ultrahigh-resolution UAV imagery. IEEE Trans. Geosci. Remote 2014, 52, 2738–2745.

- Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: Analysis of RF, ANN, and SVM regression models. Remote Sens.-Basel 2017, 9, 309.

- Drusch, M.; del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

- Clevers, J.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. 2013, 23, 344–351.

- Collins, W. Remote-sensing of crop type and maturity. Photogramm. Eng. Remote Sens. 1978, 44, 43–55.

- Boochs, F.; Kupfer, G.; Dockter, K.; Kuhbauch, W. Shape of the red edge as vitality indicator for plants. Int. J. Remote Sens. 1990, 11, 1741–1753.

- Filella, I.; Penuelas, J. The red edge position and shape as indicators of plant chlorophyll content, biomass and hydric status. Int. J. Remote Sens. 1994, 15, 1459–1470.

- Chang, C.I.; Heinz, D.C. Constrained subpixel target detection for remotely sensed imagery. IEEE Trans. Geosci. Remote 2000, 38, 1144–1159.

- Liu, K.H.; Wong, E.L.; Du, E.Y.; Chen, C.; Chang, C.I. Kernel-based linear spectral mixture analysis. IEEE Geosci. Remote Sens. Lett. 2012, 9, 129–133.

- Yang, C.; Everitt, J.H.; Bradford, J.M. Airborne hyperspectral imagery and linear spectral unmixing for mapping variation in crop yield. Precis. Agric. 2007, 8, 279–296.

- Chang, C.I.; Ji, B.H. Weighted abundance-constrained linear spectral mixture analysis. IEEE Trans. Geosci. Remote 2006, 44, 378–388.

- Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.

- Huang, N.; Niu, Z.; Zhan, Y.L.; Xu, S.G.; Tappert, M.C.; Wu, C.Y.; Huang, W.J.; Gao, S.; Hou, X.H.; Cai, D.W. Relationships between soil respiration and photosynthesis-related spectral vegetation indices in two cropland ecosystems. Agric. For. Meteorol. 2012, 160, 80–89.

- Zhao, J.J.; Liu, L.Y. Linking satellite-based spring phenology to temperate deciduous broadleaf forest photosynthesis activity. Int. J. Digit. Earth 2014, 7, 881–896.

- D’Odorico, P.; Gonsamo, A.; Gough, C.M.; Bohrer, G.; Morison, J.; Wilkinson, M.; Hanson, P.J.; Gianelle, D.; Fuentes, J.D.; Buchmann, N. The match and mismatch between photosynthesis and land surface phenology of deciduous forests. Agric. For. Meteorol. 2015, 214, 25–38.

- Daughtry, C.S.T.; Doraiswamy, P.C.; Hunt, E.R.; Stern, A.J.; McMurtrey, J.E.; Prueger, J.H. Remote sensing of crop residue cover and soil tillage intensity. Soil Tillage Res. 2006, 91, 101–108.

- Rikimaru, A.; Roy, P.S.; Miyatake, S. Tropical forest cover density mapping. Trop. Ecol. 2002, 43, 39–47.

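| Spatial Resolution | Spectral Band | Central Wavelength (nm) | Bandwidth (nm) | Band Name | Application Fields |
|---|---|---|---|---|---|
| 10 m | B2 | 490 | 65 | Blue | Epicontinental monitoring; marine monitoring; polar monitoring |
| 10 m | B3 | 560 | 35 | Green | Epicontinental monitoring; marine monitoring; polar monitoring |
| 10 m | B4 | 665 | 30 | Red | Epicontinental monitoring; marine monitoring; polar monitoring |
| 10 m | B8 | 842 | 115 | NIR | Epicontinental monitoring; marine monitoring; polar monitoring |
| 20 m | B5 | 705 | 15 | Vegetation Red Edge | Vegetation monitoring; environmental monitoring |
| 20 m | B6 | 740 | 15 | Vegetation Red Edge | Vegetation monitoring; environmental monitoring |
| 20 m | B7 | 775 | 20 | Vegetation Red Edge | Vegetation monitoring; environmental monitoring |
| 20 m | B8a | 865 | 20 | NIR | Vegetation monitoring; environmental monitoring |
| 20 m | B11 | 1610 | 90 | SWIR | Ice monitoring; vegetation monitoring; geological monitoring |
| 20 m | B12 | 2190 | 180 | SWIR | Ice monitoring; vegetation monitoring; geological monitoring |
| 60 m | B1 | 443 | 20 | Coastal aerosol | Atmospheric correction (aerosol, water vapor, cirrus) |
| 60 m | B9 | 940 | 20 | Water vapour | Atmospheric correction (aerosol, water vapor, cirrus) |
| 60 m | B10 | 1375 | 30 | SWIR-Cirrus | Atmospheric correction (aerosol, water vapor, cirrus) |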
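The band table can be carried into code as a small lookup. Below is a minimal sketch in Python with NumPy, assuming reflectance arrays that are already co-registered. `S2_BANDS` transcribes the table above. The helpers `bands_named` and `normalized_difference` are hypothetical. The second computes (a − b)/(a + b), for example NDVI from B8 (NIR) and B4 (red), which share the 10 m grid. It rejects band pairs from different resolution groups, because combining a 20 m red edge band with a 10 m band would first require resampling, which is not shown here.

```python
import numpy as np

# Transcribed from the table above:
# band -> (spatial resolution in m, central wavelength in nm, bandwidth in nm, band name)
S2_BANDS = {
    "B1": (60, 443, 20, "Coastal aerosol"),
    "B2": (10, 490, 65, "Blue"),
    "B3": (10, 560, 35, "Green"),
    "B4": (10, 665, 30, "Red"),
    "B5": (20, 705, 15, "Vegetation Red Edge"),
    "B6": (20, 740, 15, "Vegetation Red Edge"),
    "B7": (20, 775, 20, "Vegetation Red Edge"),
    "B8": (10, 842, 115, "NIR"),
    "B8a": (20, 865, 20, "NIR"),
    "B9": (60, 940, 20, "Water vapour"),
    "B10": (60, 1375, 30, "SWIR-Cirrus"),
    "B11": (20, 1610, 90, "SWIR"),
    "B12": (20, 2190, 180, "SWIR"),
}


def bands_named(name):
    """Return the band IDs whose table name equals `name`, sorted by wavelength."""
    return sorted((b for b, v in S2_BANDS.items() if v[3] == name),
                  key=lambda b: S2_BANDS[b][1])


def normalized_difference(a, b, band_a, band_b):
    """Compute (a - b) / (a + b) for two co-registered reflectance arrays.

    band_a and band_b are table keys, used only to reject pairs from different
    resolution groups (those would need resampling to a common grid first).
    Pixels where a + b == 0 are returned as NaN.
    """
    if S2_BANDS[band_a][0] != S2_BANDS[band_b][0]:
        raise ValueError(f"{band_a} and {band_b} differ in resolution; resample first")
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    total = a + b
    # Suppress the 0/0 warning; those pixels are replaced by NaN below.
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(total != 0, (a - b) / total, np.nan)


if __name__ == "__main__":
    print(bands_named("Vegetation Red Edge"))
    # NDVI from hypothetical 10 m reflectances: B8 (NIR) and B4 (red).
    nir = np.array([0.30, 0.12])
    red = np.array([0.05, 0.04])
    print(normalized_difference(nir, red, "B8", "B4"))
```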

| ID | Canopy | Location of RE (nm) | Slope of RE | Reflectance of RE |
|---|---|---|---|---|
| 1 | Pinus (illuminated) | 702 | 49.5 | 0.1469 |
| 2 | Pinus (illuminated) | 706 | 41.875 | 0.1686 |
| 3 | Pinus (illuminated) | 706 | 45.625 | 0.1664 |
| 4 | Pinus (illuminated) | 694 | 37.5 | 0.1032 |
| 5 | Pinus (illuminated) | 710 | 43.5 | 0.1765 |
| 6 | Pinus (shaded) | 706 | 15.375 | 0.0499 |
| 7 | Pinus (shaded) | 702 | 20.5 | 0.0492 |
| 8 | Pinus (shaded) | 710 | 18.875 | 0.0658 |
| 9 | Pinus (shaded) | 718 | 24 | 0.0984 |
| 10 | Pinus (shaded) | 718 | 22.875 | 0.0795 |
| 11 | Larix (illuminated) | 710 | 47.125 | 0.1219 |
| 12 | Larix (illuminated) | 718 | 57.125 | 0.1659 |
| 13 | Larix (illuminated) | 714 | 43 | 0.1426 |
| 14 | Larix (illuminated) | 718 | 65.875 | 0.1865 |
| 15 | Larix (illuminated) | 718 | 58.875 | 0.1952 |
| 16 | Larix (shaded) | 710 | 9.625 | 0.0317 |
| 17 | Larix (shaded) | 714 | 20.5 | 0.0488 |
| 18 | Larix (shaded) | 714 | 14.5 | 0.0461 |
| 19 | Larix (shaded) | 718 | 17.875 | 0.0568 |
| 20 | Larix (shaded) | 706 | 15.5 | 0.0414 |
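The table reports three red edge (RE) parameters per sample: location, slope and reflectance. These are commonly derived from the first derivative of the reflectance spectrum. Below is a minimal first-derivative sketch in Python with NumPy. It assumes that the RE location is the wavelength of the maximum first derivative within a 680–760 nm window (the window is an assumption), that the slope is that maximum derivative, and that the reflectance is the spectrum value at the location. The table does not state how its slope values are scaled, so the sketch returns the unscaled derivative in reflectance per nm. The name `red_edge_parameters` and the synthetic spectrum are hypothetical.

```python
import numpy as np


def red_edge_parameters(wavelengths, reflectance, window=(680.0, 760.0)):
    """First-derivative red edge parameters of one spectrum (generic sketch).

    Assumed definitions: position = wavelength of the maximum first derivative
    inside `window` (nm); slope = that maximum derivative, unscaled, in
    reflectance per nm; reflectance = spectrum value at the position.
    Returns (position_nm, slope, reflectance_at_position).
    """
    wl = np.asarray(wavelengths, dtype=float)
    r = np.asarray(reflectance, dtype=float)
    # np.gradient with coordinates handles uneven band spacing.
    deriv = np.gradient(r, wl)
    in_window = np.flatnonzero((wl >= window[0]) & (wl <= window[1]))
    if in_window.size == 0:
        raise ValueError("no samples fall inside the red edge window")
    i = in_window[np.argmax(deriv[in_window])]
    return float(wl[i]), float(deriv[i]), float(r[i])


if __name__ == "__main__":
    # Synthetic, hypothetical canopy spectrum: logistic rise centred at 718 nm,
    # sampled every 4 nm from 650 to 798 nm.
    wl = np.arange(650.0, 801.0, 4.0)
    sunlit = 0.05 + 0.40 / (1.0 + np.exp(-(wl - 718.0) / 10.0))
    shaded = 0.3 * sunlit                     # crude stand-in for uniform shading
    print(red_edge_parameters(wl, sunlit))
    print(red_edge_parameters(wl, shaded))
```

Because differentiation is linear, multiplying a spectrum by a positive constant (the crude shading stand-in above) scales the returned slope and reflectance by that constant but leaves the position unchanged.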

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xu, N.; Tian, J.; Tian, Q.; Xu, K.; Tang, S. Analysis of Vegetation Red Edge with Different Illuminated/Shaded Canopy Proportions and to Construct Normalized Difference Canopy Shadow Index. Remote Sens. 2019, 11, 1192. https://doi.org/10.3390/rs11101192


Chicago/Turabian Style: Xu, Nianxu, Jia Tian, Qingjiu Tian, Kaijian Xu, and Shaofei Tang. 2019. "Analysis of Vegetation Red Edge with Different Illuminated/Shaded Canopy Proportions and to Construct Normalized Difference Canopy Shadow Index" Remote Sensing 11, no. 10: 1192. https://doi.org/10.3390/rs11101192