Concentric Circle Pooling in Deep Convolutional Networks for Remote Sensing Scene Classification

Abstract

1. Introduction

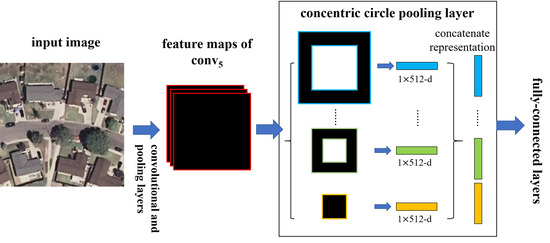

2. The Proposed Method

2.1. Deep Convolutional Neural Network

2.2. Concentric Circle Pooling Layer

2.3. Training the Network

3. Experiments and Analysis

3.1. Experimental Setup

- UC Merced Land Use Dataset. The UC Merced (UCM) dataset is one of the first publicly available high-resolution remote sensing imagery datasets [4]. It contains 21 typical remote sensing scene categories, each consisting of 100 images measuring 256 × 256 pixels with a spatial resolution of 30 cm in the red-green-blue color space. Figure 6 shows two examples of ground truth images from each class in this dataset. Classifying the UCM dataset is challenging because of the high inter-class similarity among categories such as medium residential and dense residential areas.

- WHU-RS Dataset. The WHU-RS dataset is a publicly available dataset in which all the images were collected from Google Earth (Google Inc.) [5]. It consists of 950 images measuring 600 × 600 pixels, distributed among 19 scene classes. Examples of ground truth images are shown in Figure 7. Compared with the UCM dataset, the scene categories in the WHU-RS dataset are more complicated because of variations in scale, resolution, and viewpoint-dependent appearance.
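The dataset sizes implied by the two descriptions above can be worked out with a few lines of Python. This is only an arithmetic sketch: the constant names are illustrative, and the per-class figure for WHU-RS is an average, since the text does not state that its classes are equally sized.

```python
# Figures quoted in the dataset descriptions above.
UCM_CLASSES = 21
UCM_IMAGES_PER_CLASS = 100
WHU_RS_CLASSES = 19
WHU_RS_TOTAL_IMAGES = 950

# UCM: every class is stated to hold 100 images.
ucm_total_images = UCM_CLASSES * UCM_IMAGES_PER_CLASS

# WHU-RS: only the total is given, so this is a mean per class (assumption:
# the text does not say the 950 images are split evenly).
whu_rs_mean_per_class = WHU_RS_TOTAL_IMAGES / WHU_RS_CLASSES

print(f"UCM: {ucm_total_images} images in total")
print(f"WHU-RS: {whu_rs_mean_per_class:.0f} images per class on average")
```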

3.2. Parameter Sensitivity Analysis

3.2.1. Baseline Network Architectures

3.2.2. Parameter Evaluation

3.3. Confusion Matrix

3.4. Comparison with State-of-the-Art Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Zhou, W.; Troy, A. An object-oriented approach for analysing and characterizing urban landscape at the parcel level. Int. J. Remote Sens. 2008, 29, 3119–3135.

2. Zhang, H.; Lin, H.; Li, Y.; Zhang, Y. Feature extraction for high-resolution imagery based on human visual perception. Int. J. Remote Sens. 2013, 34, 1146–1163.

3. Rogan, J.; Chen, D. Remote sensing technology for mapping and monitoring land-cover and land-use change. Prog. Plan. 2004, 61, 301–325.

4. Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

5. Xia, G.S.; Yang, W.; Delon, J.; Gousseau, Y.; Sun, H.; Maitre, H. Structural High-Resolution Satellite Image Indexing. In Proceedings of the ISPRS, TC VII Symposium Part A: 100 Years ISPRS—Advancing Remote Sensing Science, Vienna, Austria, 5–7 July 2010.

6. Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

7. Cheriyadat, A. Unsupervised Feature Learning for Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 439–451.

8. Zhang, F.; Du, B.; Zhang, L. Saliency-Guided Unsupervised Feature Learning for Scene Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2175–2184.

9. Fan, J.; Tan, H.L.; Lu, S. Multipath sparse coding for scene classification in very high resolution satellite imagery. SPIE Remote Sens. 2015, 9643.

10. Hu, F.; Xia, G.; Wang, Z.; Huang, X.; Zhang, L.; Sun, H. Unsupervised Feature Learning via Spectral Clustering of Multidimensional Patches for Remotely Sensed Scene Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2015–2030.

11. Zhao, L.J.; Tang, P.; Huo, L.Z. Land-use scene classification using a concentric circle-structured multiscale bag-of-visual-words model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4620–4631.

12. Chehdi, K.; Soltani, M.; Cariou, C. Pixel classification of large-size hyperspectral images by affinity propagation. J. Appl. Remote Sens. 2014, 8, 083567.

13. Yu, Q. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

14. Zhao, Y.; Zhang, L.; Li, P.; Huang, B. Classification of high spatial resolution imagery using improved Gaussian Markov random-field-based texture features. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1458–1468.

15. Sivic, J.; Zisserman, A. Video Google: A text retrieval approach to object matching in videos. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 1470–1477.

16. Csurka, G.; Dance, C.R.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision, European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 1–22.

17. Bosch, A.; Zisserman, A.; Muñoz, X. Scene classification using a hybrid generative/discriminative approach. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 712–727.

18. Jegou, H.; Douze, M.; Schmid, C. Improving bag-of-features for large scale image search. Int. J. Comput. Vis. 2010, 87, 316–336.

19. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

20. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

21. Lazebnik, S.; Schmid, C.; Ponce, J. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 2169–2178.

22. Qi, K.; Wu, H.; Shen, C.; Gong, J. Land-use scene classification in high-resolution remote sensing images using improved correlatons. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2403–2407.

23. Chen, S.; Tian, Y. Pyramid of Spatial Relations for Scene-Level Land Use Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1947–1957.

24. Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle detection in satellite images by hybrid deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

25. Zhao, W.; Du, S. Scene classification using multi-scale deeply described visual words. Int. J. Remote Sens. 2016, 37, 4119–4131.

26. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Twenty-Sixth Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

27. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

28. Hariharan, B.; Arbelaez, P.; Girshick, R.; Malik, J. Simultaneous detection and segmentation. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 297–312.

29. Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

30. Cimpoi, M.; Maji, S.; Kokkinos, I.; Vedaldi, A. Deep filter banks for texture recognition, description, and segmentation. Int. J. Comput. Vis. 2016, 118, 65–94.

31. Penatti, O.A.; Nogueira, K.; dos Santos, J.A. Do Deep Features Generalize from Everyday Objects to Remote Sensing and Aerial Scenes Domains? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 44–51.

32. Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the Devil in the Details: Delving Deep into Convolutional Nets. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

33. Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 647–655.

34. Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1717–1724.

35. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

36. Hu, F.; Xia, G.; Hu, J.; Zhang, L. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery. Remote Sens. 2015, 7, 14680–14707.

37. Cheng, G.; Yang, C.; Yao, X.; Guo, L.; Han, J. When deep learning meets metric learning: Remote sensing image scene classification via learning discriminative CNNs. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2811–2821.

38. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

39. Qi, K.; Yang, C.; Guan, Q.; Wu, H.; Gong, J. A multiscale deeply described correlatons-based model for land-use scene classification. Remote Sens. 2017, 9, 917.

40. Grauman, K.; Darrell, T. The pyramid match kernel: Discriminative classification with sets of image features. In Proceedings of the International Conference on Computer Vision, Beijing, China, 17–21 October 2005; pp. 1458–1465.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

42. Lu, X.; Zheng, X.; Yuan, Y. Remote sensing scene classification by unsupervised representation learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5148–5157.

43. Battiato, S.; Farinella, G.M.; Gallo, G.; Ravi, D. Spatial hierarchy of textons distributions for scene classification. In Conference on Multimedia Modeling; Springer: Berlin/Heidelberg, Germany, 2009; pp. 333–343.

44. Zhou, L.; Zhou, Z.; Hu, D. Scene classification using multi-resolution low-level feature combination. Neurocomputing 2013, 122, 284–297.

45. Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

46. Li, K.; Cheng, G.; Bu, S.; You, X. Rotation-insensitive and context-augmented object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2337–2348.

47. Rao, A.; Srihari, R.K.; Zhang, Z. Spatial color histograms for content-based image retrieval. In Proceedings of the IEEE International Conference on Tools with Artificial Intelligence, Chicago, IL, USA, 9–11 November 1999; pp. 183–186.

48. Lazebnik, S.; Schmid, C.; Ponce, J. A sparse texture representation using local affine regions. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1265–1278.

49. Wang, G.; Wang, X.; Fan, B.; Pan, C. Feature extraction by rotation-invariant matrix representation for object detection in aerial image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 851–855.

50. Li, Z.; Song, Y.; Mcloughlin, I.; Dai, L. Compact Convolutional Neural Network Transfer Learning for Small-Scale Image Classification. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2737–2741.

51. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

52. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

53. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

54. Castelluccio, M.; Poggi, G.; Sansone, C.; Verdoliva, L. Land Use Classification in Remote Sensing Images by Convolutional Neural Networks. Available online: http://arxiv.org/abs/1508.00092 (accessed on 30 March 2017).

55. Sheng, G.; Yang, W.; Xu, T.; Sun, H. High-resolution satellite scene classification using a sparse coding based multiple feature combination. Int. J. Remote Sens. 2012, 33, 2395–2412.

56. Chollet, F. Keras, GitHub Repository. 2015. Available online: https://github.com/fchollet/keras (accessed on 6 September 2017).

57. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: http://tensorflow.org/ (accessed on 6 September 2017).

58. Raghavendra, K. Keras-Vis. 2017. Available online: https://github.com/raghakot/keras-vis (accessed on 18 February 2018).

59. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015.

60. Prechelt, L. Automatic early stopping using cross validation: Quantifying the criteria. Neural Netw. 1998, 11, 761–767.

61. Huang, L.; Chen, C.; Li, W.; Du, Q. Remote sensing image scene classification using multi-scale completed local binary patterns and fisher vectors. Remote Sens. 2016, 8, 483.

62. Negrel, R.; Picard, D.; Gosselin, P.H. Evaluation of second-order visual features for land-use classification. In Proceedings of the International Workshop on Content-Based Multimedia Indexing, Klagenfurt, Austria, 18–20 June 2014; pp. 1–5.

63. Qi, K.; Liu, W.; Yang, C.; Guan, Q.; Wu, H. Multi-Task Joint Sparse and Low-Rank Representation for the Scene Classification of High-Resolution Remote Sensing Image. Remote Sens. 2017, 9, 10.

Accuracy (mean ± std, %)

| Method | UCM, VGG-VD16 | UCM, VGG-VD19 | WHU-RS, VGG-VD16 | WHU-RS, VGG-VD19 |
|---|---|---|---|---|
| CCP-net | 94.93 ± 0.95 | 95.17 ± 0.92 | 97.13 ± 0.78 | 97.51 ± 0.73 |
| 2-level SPP | 93.47 ± 1.26 | 91.93 ± 1.42 | 95.13 ± 1.35 | 94.38 ± 0.77 |
| 3-level SPP | 93.78 ± 1.19 | 93.38 ± 1.46 | 95.11 ± 1.32 | 94.99 ± 1.1 |
| 4-level SPP | 93.81 ± 1.39 | 93.24 ± 1.4 | 95.21 ± 1.44 | 94.81 ± 1.77 |
| Traditional [36] | 94.07 | 93.15 | 94.35 | 94.36 |
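To make the comparison in the table above easier to read, the following sketch computes the margin of CCP-net over the strongest competing method in each dataset and backbone combination. It uses only the mean accuracies copied from the table (standard deviations are omitted); the dictionary layout and the helper name `margin_over_best_baseline` are illustrative assumptions, not part of the original work.

```python
# Mean accuracies (%) copied from the table above; std values are omitted.
results = {
    ("UCM", "VGG-VD16"): {"CCP-net": 94.93, "2-level SPP": 93.47,
                          "3-level SPP": 93.78, "4-level SPP": 93.81,
                          "Traditional": 94.07},
    ("UCM", "VGG-VD19"): {"CCP-net": 95.17, "2-level SPP": 91.93,
                          "3-level SPP": 93.38, "4-level SPP": 93.24,
                          "Traditional": 93.15},
    ("WHU-RS", "VGG-VD16"): {"CCP-net": 97.13, "2-level SPP": 95.13,
                             "3-level SPP": 95.11, "4-level SPP": 95.21,
                             "Traditional": 94.35},
    ("WHU-RS", "VGG-VD19"): {"CCP-net": 97.51, "2-level SPP": 94.38,
                             "3-level SPP": 94.99, "4-level SPP": 94.81,
                             "Traditional": 94.36},
}


def margin_over_best_baseline(scores, method="CCP-net"):
    """Return (best other method, accuracy margin of `method` over it)."""
    others = {name: acc for name, acc in scores.items() if name != method}
    best_name = max(others, key=others.get)
    return best_name, round(scores[method] - others[best_name], 2)


margins = {key: margin_over_best_baseline(scores)
           for key, scores in results.items()}

for (dataset, backbone), (baseline, gain) in margins.items():
    print(f"{dataset} / {backbone}: +{gain} points over {baseline}")
```

These margins compare mean accuracies only; they say nothing about statistical significance, which would require the per-run results.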

| Method | Accuracy (mean ± std, %) |
|---|---|
| BOVW [15] | 71.86 |
| SPM [21] | 74.0 |
| SPCK++ [4] | 77.38 |
| UFL [7] | 81.67 ± 1.23 |
| SG+UFL [8] | 82.72 ± 1.18 |
| VLAT [62] | 94.3 |
| MS-CLBP+FV [61] | 93.0 ± 1.2 |
| MTJSLRC [63] | 91.07 ± 0.67 |
| MBVW [25] | 96.14 |
| CaffeNet [31] | 93.42 ± 1.0 |
| OverFeat [31] | 90.91 ± 1.19 |
| MDDC [39] | 96.92 ± 0.57 |
| GoogLeNet + Fine-tune [59] | 97.1 |
| CCP-net | 97.52 ± 0.97 |

| Method | Acc | Agri | Air | Base | Beach | Build | Chap | Dres | Fore | Free | Golf | Harb | Inter | Mres | Mph | Over | Park | Riv | Run | Sres | Stor | Tenn |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CCP-net | 94.93 | 98 | 100 | 97.5 | 100 | 88 | 100 | 76 | 100 | 98 | 94 | 100 | 94.5 | 91.5 | 90 | 97.5 | 99 | 93 | 99.5 | 95.5 | 95 | 86.5 |
| | 96.63 | 99 | 100 | 99.5 | 99.25 | 90.75 | 100 | 80.25 | 100 | 97.75 | 95.75 | 99.5 | 95.5 | 95.75 | 96.25 | 98.75 | 100 | 97.75 | 100 | 94.75 | 96.25 | 92.5 |
| SPP-net | 93.81 | 98.5 | 99 | 98 | 100 | 83.5 | 100 | 73 | 100 | 98.25 | 93 | 100 | 88.25 | 88.5 | 92 | 95.5 | 99 | 94 | 100 | 93.5 | 90 | 86 |
| | 94.55 | 99 | 99.75 | 98.5 | 99.25 | 84 | 100 | 74 | 98 | 96.75 | 93.25 | 100 | 89.5 | 91.5 | 97.5 | 94.5 | 99.5 | 95.25 | 99.75 | 93.5 | 92.25 | 89.75 |
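As a consistency check on the per-class table above, the sketch below recomputes the overall accuracy of the labeled CCP-net and SPP-net rows as the unweighted mean of their 21 per-class accuracies, then lists where the two methods differ most. The class abbreviations are those of the table header. Treating "Acc" as an unweighted class mean is an assumption that the numbers happen to support, and the unlabeled second row of each method is not used.

```python
# Class abbreviations and per-class accuracies (%) copied from the table above
# (first, labeled row of each method only).
classes = ["Agri", "Air", "Base", "Beach", "Build", "Chap", "Dres", "Fore",
           "Free", "Golf", "Harb", "Inter", "Mres", "Mph", "Over", "Park",
           "Riv", "Run", "Sres", "Stor", "Tenn"]

ccp_net = [98, 100, 97.5, 100, 88, 100, 76, 100, 98, 94, 100,
           94.5, 91.5, 90, 97.5, 99, 93, 99.5, 95.5, 95, 86.5]
spp_net = [98.5, 99, 98, 100, 83.5, 100, 73, 100, 98.25, 93, 100,
           88.25, 88.5, 92, 95.5, 99, 94, 100, 93.5, 90, 86]

# Unweighted mean over classes (assumption: this is how "Acc" is defined).
ccp_overall = round(sum(ccp_net) / len(ccp_net), 2)
spp_overall = round(sum(spp_net) / len(spp_net), 2)

# Per-class difference, CCP-net minus SPP-net, in percentage points.
diff = {name: c - s for name, c, s in zip(classes, ccp_net, spp_net)}
largest_gain = max(diff, key=diff.get)
largest_loss = min(diff, key=diff.get)

print(f"CCP-net overall: {ccp_overall}, SPP-net overall: {spp_overall}")
print(f"Largest gain: {largest_gain} ({diff[largest_gain]:+.2f})")
print(f"Largest loss: {largest_loss} ({diff[largest_loss]:+.2f})")
```

The recomputed means agree with the "Acc" column for both rows, which suggests the per-class values were extracted correctly.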

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qi, K.; Guan, Q.; Yang, C.; Peng, F.; Shen, S.; Wu, H. Concentric Circle Pooling in Deep Convolutional Networks for Remote Sensing Scene Classification. Remote Sens. 2018, 10, 934. https://doi.org/10.3390/rs10060934
