Abstract

In recent decades, road extraction from very high-resolution (VHR) remote sensing images has become popular and has attracted extensive research efforts. However, the very high spatial resolution, complex urban structure, and contextual background effect of road images complicate the process of road extraction. For example, shadows, vehicles, or other objects may occlude a road located in a developed urban area. To address the problem of occlusion, this study proposes a semiautomatic approach for road extraction from VHR remote sensing images. First, guided image filtering is employed to reduce the negative effects of nonroad pixels while preserving edges. Then, an edge-constraint-based weighted fusion model is adopted to trace and refine the road centerline. An edge-constraint fast marching method, which sequentially links discrete seed points, is presented to maintain road-point connectivity. Six experiments with eight VHR remote sensing images (spatial resolution of 0.3 m/pixel to 2 m/pixel) are conducted to evaluate the efficiency and robustness of the proposed approach. Compared with state-of-the-art methods, the proposed approach achieves higher extraction quality, lower time consumption, and fewer required seed points.

1. Introduction

Accurate and up-to-date road network information is critical for various urban applications, such as navigation and infrastructure maintenance [1,2,3]. The advent of modern remote sensing has enabled the extraction of information from very high-resolution (VHR) and highly detailed optical images of roads to update urban road networks [4,5]. High spatial resolution enriches feature details but complicates object extraction [6,7,8,9,10]. Although considerable effort has been devoted to road-feature extraction from VHR images, a fully practical road-feature extraction technology has not yet been achieved.

A considerable number of articles have been published on road-feature extraction from remote sensing images. Generally, state-of-the-art methods for road-feature extraction from VHR images fall into two categories: automatic and semiautomatic methods. Automatic approaches require no prior information and are executed through a series of image-processing algorithms, such as mathematical morphology [11,12], active snake models [13], dynamic programming [14], neural networks [15,16,17], probabilistic graphical models [18], filtering-based methods [19], and object-oriented methods [20]. In general, however, automatic methods perform unsatisfactorily on images of complex natural road scenes (e.g., with image noise or tree and shadow occlusion), which restricts their practical application [21]. This limitation has encouraged the proliferation of studies on semiautomatic methods. In contrast to automatic methods, semiautomatic methods require user input or other prior information to achieve robust and stable results.

Semiautomatic road extraction follows two main technical ideas. The first treats extraction as an image segmentation problem (road versus nonroad) and obtains the final result by post-processing [22,23,24,25,26]. This approach is easily affected by vegetation occlusion or large shadows, which leads to low recognition rates. In addition, because of the post-processing algorithm, other features are easily misclassified as roads.

The other idea treats extraction as a network optimization problem. The road network is obtained by connecting road seed points, and the final result is acquired by using graph theory or dynamic programming techniques [27,28,29,30,31,32]. This approach fully considers the local features of the road (such as extensibility, edge characteristics, and the topological structure of the road network), and reliable initial road seed points are obtained through human–computer interaction. Therefore, the accuracy of the road extraction results is relatively high. However, depending on how the road seed points are connected, shaded and occluded roads can be extracted poorly. In addition, U- or S-shaped roads need more seed points than linear roads, thereby requiring considerable manual work.

According to this analysis, segmentation-based methods are more efficient but less accurate, whereas seed-point-based methods are more precise but less efficient. Inspired by Reference [33], we propose to treat road seed-point connection as a shortest-path problem to improve the efficiency of seed-point-based road extraction. The fast marching method was recently adopted for connecting road seed points [33]; it is a particular case of level set methods, which were developed by Osher and Sethian [34] as an efficient numerical algorithm for tracking and modeling the motion of a physical wave interface (front). This method has been applied in different research fields, including computer graphics, medical imaging, computational fluid dynamics, image processing, and computation of trajectories [33,35,36]. In Reference [33], this method showed high stability and was well suited to processing low-/medium-resolution remote sensing images. However, it is difficult to extract unbiased road centerline information from VHR remote sensing images by using the fast marching method alone.

In VHR remote sensing images, the increase in resolution introduces “noise” that makes useful edge information less conspicuous. Complex image backgrounds also produce a large number of fragmented edges, which are difficult to process and thus complicate road edge extraction. Extracting straight roads and planar roads is challenging because the same objects can exhibit different spectra and different objects can exhibit the same spectrum; consequently, roads are difficult to extract effectively from the road spectral feature alone. Thus, this study presents a semiautomatic edge-constraint fast marching (ECFM) method to extract road centerlines from VHR images. Edge information, the road spectral feature, and the road centerline probability map are utilized, and an edge-constraint-based weighted fusion model is introduced to assist the fast marching method. The proposed method enables accurate and unbiased road centerline extraction and shows high generalization capability in processing complex road scenarios, such as S-shaped, U-shaped, and shaded roads. The contributions of the method are as follows.

- (a) Edge information of remote sensing imagery has been studied extensively and widely used in the extraction and tracking of linear objects, such as roads and rivers, in medium-/low-resolution remote sensing imagery. The present study indicates that the synergy of edge information, the road centerline probability map, and the road spectral feature can overcome the bias of the road centerline extracted by the fast marching method when it uses the spectral feature only. Moreover, our method is robust for road extraction in shaded areas.

- (b) The proposed method needs only a few road seed points when extracting an S-shaped or U-shaped road. This characteristic makes road centerline extraction from remote sensing images efficient enough for widespread practical application.

2. Related Work

Many approaches have been proposed in the last decades for extracting roads from aerial and satellite images. Low-level features can be extracted and heuristic rules (such as connectivity and shape) can be defined in numerous ways to classify structures similar to roads. A geometric stochastic road model based on road width, length, curvature, and pixel intensity was applied in Reference [37]. Hinz and Baumgartner [38] used road models and their contexts, including their knowledge of radiation, geometry, and topology. The disadvantage of these rule-based heuristic models is that obtaining the optimal set of rules and parameters is difficult because of the wide variety of roads. Therefore, these methods can work only in areas where the features used (such as image edges) occur mainly on roads (e.g., rural areas).

Most approaches consider road extraction a binary segmentation problem. The path trajectory point [22] and the angle-based texture feature [23] of a particular pixel can be defined to quantify road probability on the basis of shape. Das et al. [24] adopted the spectral and local linear features of multispectral road images. By combining the probabilistic support vector machine (PSVM) method, dominant singular value method, local gradient function, and vertical central axis transformation method to classify the region, the authors detected the road edge, linked the broken road, and eliminated the nonroad area. The advantages of this method were verified by experiments on many road images. In Reference [25], the image was initially segmented by fused multiscale collaborative representation and graph cuts, and the initial contour of the road was then obtained by filtering the road shape. Finally, the road centerline was obtained through tensor voting. In Reference [26], the image was first divided into road and nonroad classes through SVM soft classification, which also yielded the probability of each pixel belonging to the road; the final road was then acquired through the graph cut method. However, these methods work well in multispectral images only and can detect only the main roads in urban areas. Thus, extracting roads from areas with dense buildings or from other areas whose gray levels resemble those of roads is challenging.

The other family of semiautomatic methods regards road extraction as a problem of connecting and tracking road seed points. Hu et al. [27] proposed a segmented parabolic model to delineate road centerline networks. The method first uses seed points to generate parabolic segments and then applies least-squares template matching to calculate parameters for precise parabola extraction. Miao et al. [28] proposed a kernel density estimation method combined with the geodesic method to decrease the number of seed points required for road extraction. Zhou et al. [29] used particle filtering to track road segments between seed points. However, particle filtering cannot deal effectively with road branches. To extend the generalization capability of particle filtering to complex scenarios, Movaghati et al. [30] integrated particle filtering with extended Kalman filtering. Lv et al. [31] proposed a multifeature sparsity-based model that can utilize multifeature complementation to extract roads from high-resolution imagery. Dal Poz et al. [32] proposed a semiautomatic method to extract urban/suburban roads from stereoscopic satellite images. This method uses seed points to construct the road model in the object space. Optimal road segments between seed points are then generated through dynamic programming. Road extraction based on seed points can achieve high precision, but its efficiency is low, mainly because the input of road seed points needs human intervention: the more seed points are required, the lower the efficiency of road extraction.

3. Methodology

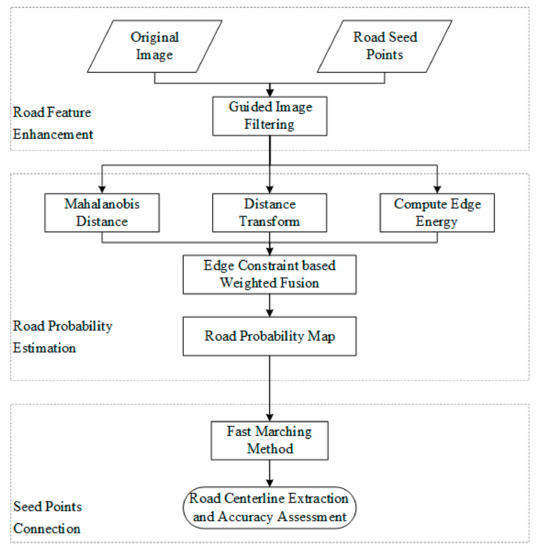

As shown in Figure 1, the proposed approach consists of three main steps: (1) Road feature enhancement: The VHR image can reveal ground objects in great detail and depict the color, shape, size, and structure of objects. However, its spectra may contain considerable noise, which may reduce the reliability of the road extraction result. Thus, the image is first filtered through guided filtering to enhance road features; (2) Road probability estimation: Three road features are extracted, and an edge-constraint-based weighted fusion model is introduced for multifeature fusion and road probability estimation; (3) Seed-point connection: The fast marching method is used to link road seed points on the basis of the potential road map. To test the accuracy and efficiency of the proposed approach, its performance on eight VHR images is compared with that of other road extraction approaches.

Figure 1.

Flowchart of the proposed ECFM method.

We use a real example to illustrate the detailed flow of the presented method. The example is shown in Figure 2. A detailed description of the method is provided in the following subsections.

3.1. Road Feature Enhancement

This step suppresses nonroad pixel signals in advance. In VHR images, roads are assumed to be locally homogeneous and elongated areas. However, some roads in VHR images are contaminated by numerous nonroad pixels, such as cars and traffic lines. Thus, image filtering is necessary to reduce the negative effect of nonroad pixels. Guided filtering performs edge-preserving smoothing on an image while guided by a second image, the so-called guidance image [39]. Similar to other filtering operations, guided image filtering is a neighborhood operation. However, it accounts for the statistics of the neighboring pixels of a central pixel in the guidance image when calculating the output value.

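The commonly used linear translation-variant filtering can be formulated as follows:

$$q_i = \sum_j W_{ij}(I)\, p_j$$

where $i$ and $j$ are pixel indices, and $I$, $p$, and $q$ denote the guidance, input, and output images, respectively. The filter kernel $W_{ij}$ is a function of the guidance image $I$ and is independent of $p$. It is defined as:

$$W_{ij}(I) = \frac{1}{|\omega|^2} \sum_{k:(i,j)\in\omega_k} \left(1 + \frac{(I_i - \mu_k)(I_j - \mu_k)}{\sigma_k^2 + \varepsilon}\right)$$

where $\omega_k$ is an overlapping window centered at pixel $k$; $|\omega|$ is the number of pixels in $\omega_k$; $\mu_k$ and $\sigma_k^2$ denote the mean and variance of $I$ in $\omega_k$, respectively; and $\varepsilon$ is a regularization parameter that controls the degree of smoothing.

The key assumption of guided filtering is a local linear model between $I$ and $q$ [39]. This model is defined as:

$$q_i = a_k I_i + b_k, \quad \forall i \in \omega_k$$

where $(a_k, b_k)$ are linear coefficients assumed to be constant in $\omega_k$. The two parameters are computed with a linear ridge regression model:

$$a_k = \frac{\frac{1}{|\omega|}\sum_{i\in\omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \varepsilon}, \qquad b_k = \bar{p}_k - a_k \mu_k$$

where

$$\bar{p}_k = \frac{1}{|\omega|}\sum_{i\in\omega_k} p_i$$

Here, $\bar{p}_k$ is the mean of $p$ in $\omega_k$. After computing $(a_k, b_k)$ for all windows in the image, the output of guided filtering is expressed as:

$$q_i = \frac{1}{|\omega|}\sum_{k:\, i\in\omega_k} (a_k I_i + b_k) = \bar{a}_i I_i + \bar{b}_i$$

where $\bar{a}_i$ and $\bar{b}_i$ are the averages of $a_k$ and $b_k$ over all windows that contain pixel $i$.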

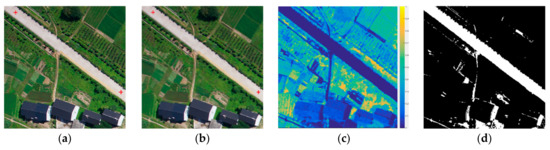

The guided image filtering result is shown in Figure 2b.

Figure 2.

(a) Test image. Seed points are represented by red crosses; (b) Image preprocessed through guided filtering; (c) Mahalanobis distance map; (d) Thresholding result, in which 1 and 0 represent road and nonroad classes, respectively; (e) Distance transform result; (f) Edge-energy information; (g) Road probability map obtained through multifeature fusion; (h) Minimal path extracted from the road probability map through the fast marching method.
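The guided filtering step of Section 3.1 can be sketched in a few lines of plain Python. This is a minimal grayscale illustration under stated assumptions, not the authors' implementation: the function names (`box_mean`, `zip_map`, `guided_filter`), the window radius `r`, the value of `eps`, and the choice of using the image as its own guidance are all hypothetical, since the text does not state them.

```python
def box_mean(img, r):
    """Mean of img over a (2r+1) x (2r+1) window, clipped at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def zip_map(fn, *imgs):
    """Apply fn element-wise to equally sized 2-D lists."""
    return [[fn(*px) for px in zip(*rows)] for rows in zip(*imgs)]


def guided_filter(guide, src, r=2, eps=1e-2):
    """Grayscale guided filter: guide plays the role of I, src of p; returns q."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    corr_ip = box_mean(zip_map(lambda i, p: i * p, guide, src), r)
    corr_ii = box_mean(zip_map(lambda i: i * i, guide), r)
    # a_k = cov(I, p) / (var(I) + eps);  b_k = mean(p) - a_k * mean(I)
    a = zip_map(lambda cip, cii, mi, mp: (cip - mi * mp) / (cii - mi * mi + eps),
                corr_ip, corr_ii, mean_i, mean_p)
    b = zip_map(lambda ak, mi, mp: mp - ak * mi, a, mean_i, mean_p)
    # Average the coefficients of all windows covering each pixel.
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return zip_map(lambda ma, mb, i: ma * i + mb, mean_a, mean_b, guide)


# Self-guided filtering of a step edge: flat areas are smoothed, the edge survives.
step = [[0.0] * 4 + [1.0] * 4 for _ in range(6)]
smoothed = guided_filter(step, step, r=2, eps=1e-4)
```

With a small `eps` the step edge passes through almost unchanged, whereas a larger `eps` pushes the result toward a plain box average; this is the trade-off controlled by the regularization parameter.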

3.2. Road Probability Estimation

This step aims to exploit multiple features of roads to overcome the shortcomings of the traditional fast marching method, which only considers spectral information. Thus, to estimate road probability, road spectral information, centerline probability, and edge-energy features are combined through a weighted fusion approach.

3.2.1. Mahalanobis Distance

The initial road seed point generated by users is taken as the central pixel, and its neighboring pixels (i.e., the window used in this study) are taken as training samples. The Mahalanobis distance [40] is subsequently applied to compute the road probability of pixel x, as follows:

$$d(x) = \sqrt{(I(x) - m)^{T}\, C^{-1}\, (I(x) - m)}$$

where $d(x)$ is the value of Mahalanobis distance at pixel x; I(x) is the spectral vector of pixel x; and m and C indicate the mean vector and the covariance matrix of the training samples, respectively. After computing the Mahalanobis distance values of all pixels, simple thresholding is used to divide the image into the foreground (i.e., road) and background (i.e., nonroad) regions. The thresholding is defined as:

where Label(x) is the class label of the pixel x, and T is the ratio of the road area to the entire image area. In this study, a road area ratio of T = 0.2 was obtained through trial and error, and 1 and 0 represent the road and nonroad classes, respectively. Figure 2c,d show the Mahalanobis distance map and the corresponding thresholding result, respectively.
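The Mahalanobis distance and thresholding steps can be illustrated with the following sketch. It assumes that each pixel is a short list of band values and that thresholding labels as road the fraction T of pixels with the smallest distance; this reading of the area-ratio threshold is an assumption, as are the helper names and the small `ridge` term that keeps the covariance matrix invertible.

```python
import math


def mean_and_cov(samples):
    """Mean vector m and covariance matrix C of a list of spectral vectors."""
    n, d = len(samples), len(samples[0])
    m = [sum(s[k] for s in samples) / n for k in range(d)]
    c = [[sum((s[a] - m[a]) * (s[b] - m[b]) for s in samples) / (n - 1)
          for b in range(d)] for a in range(d)]
    return m, c


def invert(mat, ridge=1e-6):
    """Gauss-Jordan inverse; the ridge on the diagonal is an added safeguard."""
    d = len(mat)
    aug = [[mat[i][j] + (ridge if i == j else 0.0) for j in range(d)]
           + [1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
    for col in range(d):
        piv = max(range(col, d), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(d):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[d:] for row in aug]


def mahalanobis_map(image, m, c_inv):
    """Distance of every pixel vector I(x) to the training mean m."""
    def dist(v):
        diff = [vi - mi for vi, mi in zip(v, m)]
        tmp = [sum(c_inv[a][b] * diff[b] for b in range(len(m)))
               for a in range(len(m))]
        return math.sqrt(max(0.0, sum(da * ta for da, ta in zip(diff, tmp))))
    return [[dist(px) for px in row] for row in image]


def label_by_area_ratio(dmap, t=0.2):
    """Label 1 (road) for the fraction t of pixels with the smallest distance."""
    flat = sorted(v for row in dmap for v in row)
    cut = flat[max(0, int(round(t * len(flat))) - 1)]
    return [[1 if v <= cut else 0 for v in row] for row in dmap]
```

In use, the training samples would be the pixel vectors in the window around a user-selected seed point: `m, c = mean_and_cov(samples)`, then `labels = label_by_area_ratio(mahalanobis_map(image, m, invert(c)), t=0.2)`.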

Then, the road spectral feature can be computed by applying a Gaussian filter, as follows:

where $S_{i,j}$ is the spectral feature value at pixel location (i, j), $\sigma$ is the standard deviation, and k is the sliding-window size.

The obtained road class is processed through distance transformation [41] to produce a distance map Di,j that can be taken as the road centerline probability map. The result is shown in Figure 2e. Although the Mahalanobis distance method misclassifies some nonroad pixels as road pixels, this error negligibly affects the generation of the road centerline probability map because the connection of seed points in this study relies on the fast marching method, which is robust to noise.
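The idea that a distance transform of the road class peaks along the centerline can be illustrated with a short sketch. The two-pass city-block transform below is only one possible choice and is an assumption; the distance metric used in Reference [41] is not stated in the text, and the function name is hypothetical.

```python
def distance_transform(labels):
    """City-block distance from each road pixel (1) to the nearest nonroad pixel (0)."""
    h, w = len(labels), len(labels[0])
    inf = h + w  # larger than any possible city-block distance in the image
    d = [[0 if labels[y][x] == 0 else inf for x in range(w)] for y in range(h)]
    # Forward pass: propagate distances from the top and left neighbors.
    for y in range(h):
        for x in range(w):
            if y > 0:
                d[y][x] = min(d[y][x], d[y - 1][x] + 1)
            if x > 0:
                d[y][x] = min(d[y][x], d[y][x - 1] + 1)
    # Backward pass: propagate distances from the bottom and right neighbors.
    for y in reversed(range(h)):
        for x in reversed(range(w)):
            if y < h - 1:
                d[y][x] = min(d[y][x], d[y + 1][x] + 1)
            if x < w - 1:
                d[y][x] = min(d[y][x], d[y][x + 1] + 1)
    return d


# A three-pixel-wide horizontal road: the middle row receives the largest value.
road = [[0] * 5, [1] * 5, [1] * 5, [1] * 5, [0] * 5]
ridge = distance_transform(road)
```

For this band, the middle row obtains distance 2 and its two neighbors distance 1, so the maximum traces the centerline of the band.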

3.2.2. Edge Energy

The edge information of remote sensing images has been extensively studied and widely used in the extraction and tracking of linear objects, such as roads and rivers, in medium/low-resolution remote sensing images. Thus, edge information can be used as a constraint for accurate road centerline extraction.

Image edge energy can be computed through an edge-filtering operation. The edge-filter operator filters the image on the basis of spectral variance and local similarity by considering the neighborhood of a pixel.

where (i, j) is the spatial coordinate of each pixel in the image, and vi,j is the spectral value of the pixel.

The Laplacian operator is one of the most commonly used operators in edge extraction. To enhance the ability of the Laplacian operator to detect grayscale changes along the diagonals [42], a redesigned template that assigns different weights to the vertical, horizontal, and diagonal directions is defined as follows:

The image is convolved with the above template to obtain the edge detection result, as shown in the following equation:

and

where SA stands for spectral angle and is a measure of similarity between two pixels, and represents the spectral values of two pixels.

The edge filter operator has the following characteristics: (1) Small spectral variation in the homogeneous region. This characteristic leads to low edge operator values in the homogeneous region; (2) Sharp changes in the spectral range of the adjacent boundary area. This characteristic leads to high edge operator value in the boundary area. These two characteristics can be used to obtain the edge energy of an image, as shown in Figure 2f.
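The two characteristics listed above can be reproduced with a simple stand-in for the edge operator. The sketch below measures, for each pixel, a weighted mean of the spectral angles to its eight neighbors; the spectral angle itself is the standard arccosine of the normalized dot product, but the neighbor weights `w_axial` and `w_diag`, the normalization, and the function names are assumptions and do not reproduce the template used in the paper.

```python
import math


def spectral_angle(a, b):
    """Angle (radians) between two spectral vectors; 0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        return 0.0
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def edge_energy(image, w_axial=2.0, w_diag=1.0):
    """Weighted mean spectral angle between each pixel and its 8 neighbors."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = weight_sum = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                        # Hypothetical weights: axial neighbors count more.
                        wt = w_diag if dy and dx else w_axial
                        total += wt * spectral_angle(image[y][x], image[ny][nx])
                        weight_sum += wt
            out[y][x] = total / weight_sum if weight_sum else 0.0
    return out


# Two homogeneous regions with different spectra meeting at a vertical boundary.
a, b = [3.0, 4.0], [4.0, 3.0]
energy = edge_energy([[a, a, a, b, b, b] for _ in range(3)])
```

Pixels inside either homogeneous region receive zero energy, and only the two columns on either side of the boundary respond.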

3.2.3. Road Probability Estimation

The information fusion of road features aims to estimate road candidates, to discard as many false positives as possible, and to improve the consistency of the extracted roads. Most existing fusion methods are feature fusion-based methods that combine multiple features derived from road areas.

Thus, an edge-constraint-based weighted fusion model, which consists of three items, was proposed to integrate road features detected through the approaches presented in Section 3.2.1 and Section 3.2.2:

where is the road probability map; Z is a normalization constant; , and denote the road spectral feature map, centerline probability, and edge energy information, respectively; fk is a metric calculated through the KDE method [43] to evaluate the distance of any given pixel from the boundary with a range of [0, 1]; α, β, and λ are the weights of the three terms in the model; and is the curvature measure of current pixels and depends on the relative direction of neighboring vectors [44]. It is defined as

where , and i and j are the row and column numbers of the current pixel, respectively.

This model is based on the assumption that the pixel with high spectral intensity, low edge intensity, and small curvature has a dominant role in extraction. The constraints used in the computational model can maximize extraction reliability and accuracy. Figure 2g shows an example of road probability estimation.

3.3. Seed-Point Connection

For a given image I and two road seed points p1 and p2, the road potential map P is obtained by the edge-constraint-based weighted fusion model:

The road has a small value on the potential energy map and thus has a large traveling speed term 1/P. Let S = {s1, s2, …, sn} be the set of paths between p1 and p2, and let l be the length parameter. The energy term is formulated as follows:

The shortest path Si between p1 and p2 is denoted as . Thus, the energy term E(s) has a global minimum value. For any given pixel x in image I, the value in the minimal energy map of p1 is defined as:

where U(x) satisfies the Eikonal equation:

The minimal path can be obtained by solving the following difference equation:

Here, the fast marching method [34] is used to connect the seed points. The fast marching method is a particular case of level set methods and is a numerical solution of the Eikonal equation.
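For orientation, the minimal-path formulation described in this subsection is commonly written as below in the minimal-path literature. This is a reference sketch under assumptions, not a transcription of the paper's equations: the notation $S(p_1, x)$ for the set of paths from $p_1$ to $x$ and the exact form of the energy are hypothetical and may differ from the original.

```latex
\begin{aligned}
E(s) &= \int_{s} P\bigl(s(l)\bigr)\,\mathrm{d}l, \\
U(x) &= \min_{s \in S(p_1, x)} E(s), \\
\lVert \nabla U(x) \rVert &= P(x), \qquad U(p_1) = 0, \\
\frac{\mathrm{d}s}{\mathrm{d}l} &= -\nabla U\bigl(s(l)\bigr), \qquad s(0) = p_2 .
\end{aligned}
```

Read this way, the front travels with speed 1/P, so it moves quickly along low-potential road pixels, and following the negative gradient of U from $p_2$ back to $p_1$ recovers the minimal path.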

During fast marching, the pixel with the shortest arrival time is taken as the current front point, and the arrival times of its four neighboring points are updated in accordance with the arrival time of that point. Once the loop terminates, the final minimum arrival time of each point in the image is obtained. Then, the road centerline that connects two seed points is generated. Figure 2h presents an example of seed-point connection through the fast marching method.
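The front propagation and path tracing described above can be sketched as follows. This is a minimal first-order fast marching solver on a 4-neighborhood with a discrete steepest-descent backtrack, assuming a strictly positive potential map given as a 2-D list; the function names and the 8-neighbor backtracking rule are illustrative assumptions rather than the authors' implementation.

```python
import heapq
import math


def fast_marching(potential, start):
    """Arrival-time map U with |grad U| = P and U(start) = 0 (4-neighborhood)."""
    h, w = len(potential), len(potential[0])
    u = [[math.inf] * w for _ in range(h)]
    done = [[False] * w for _ in range(h)]
    u[start[0]][start[1]] = 0.0
    heap = [(0.0, start)]

    def accepted(y, x):
        inside = 0 <= y < h and 0 <= x < w
        return u[y][x] if inside and done[y][x] else math.inf

    while heap:
        _, (y, x) = heapq.heappop(heap)
        if done[y][x]:
            continue                      # stale heap entry
        done[y][x] = True                 # pixel joins the accepted front
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if not (0 <= ny < h and 0 <= nx < w) or done[ny][nx]:
                continue
            a = min(accepted(ny - 1, nx), accepted(ny + 1, nx))
            b = min(accepted(ny, nx - 1), accepted(ny, nx + 1))
            p = potential[ny][nx]
            if abs(a - b) >= p:           # only one axis contributes
                new = min(a, b) + p
            else:                         # two-axis upwind quadratic update
                new = 0.5 * (a + b + math.sqrt(2.0 * p * p - (a - b) ** 2))
            if new < u[ny][nx]:
                u[ny][nx] = new
                heapq.heappush(heap, (new, (ny, nx)))
    return u


def trace_path(u, end):
    """Walk from end to the source by stepping to the neighbor with the smallest U."""
    h, w = len(u), len(u[0])
    path = [end]
    y, x = end
    while u[y][x] > 0.0:
        y, x = min(((yy, xx) for yy in (y - 1, y, y + 1) for xx in (x - 1, x, x + 1)
                    if 0 <= yy < h and 0 <= xx < w),
                   key=lambda q: u[q[0]][q[1]])
        path.append((y, x))
    return path[::-1]


# U-shaped low-potential corridor (0.1) inside a high-potential background (10.0).
grid = [[0.1 if x in (0, 4) or y == 4 else 10.0 for x in range(5)] for y in range(5)]
arrival = fast_marching(grid, (0, 0))
centerline = trace_path(arrival, (0, 4))
```

In this toy example the two seed points sit at the ends of the U, and the traced path follows the low-potential corridor instead of cutting straight across the high-potential interior, which mirrors the two-seed behavior reported for U-shaped roads.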

4. Experimental Study

An experimental study was performed with eight VHR remote sensing images to validate the effectiveness and adaptability of the proposed method in road extraction. This section discusses the experimental study and is divided into three subsections. The first subsection describes the datasets. The second subsection presents the six experimental setups together with the detailed parameter settings applied in them. Finally, the results of the experiments are provided in the last subsection.

4.1. Datasets

To assess the effectiveness and adaptability of the presented method, experiments were conducted with eight VHR remote sensing images. The images are described below.

The first image is shown in the first row of Figure 3. It is an aerial image with a spatial resolution of 0.3 m/pixel and a spatial size of 400 pixels × 400 pixels. It was downloaded from Computer Vision Lab [45].

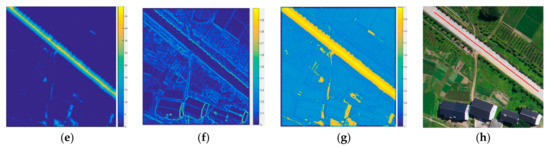

Figure 3.

Comparison of the results of road centerline extraction. (a) Red represents the result obtained with edge constraint; (b) Yellow represents the result obtained without edge constraint; (c) Superimposition of the two results. Seed points are shown as blue crosses.

The second image has a spatial resolution of 0.6 m/pixel and a spatial size of 512 pixels × 512 pixels. It was collected by the QuickBird satellite and was downloaded from VPLab [46]. The image is shown in the second row of Figure 3.

The third and fourth remote sensing images have spatial sizes of 400 pixels × 400 pixels and are shown in Figure 4. The images were downloaded from Computer Vision Lab [45]. They have a spatial resolution of 0.6 m/pixel and show an area that is mainly covered by vegetation, roads, and buildings.

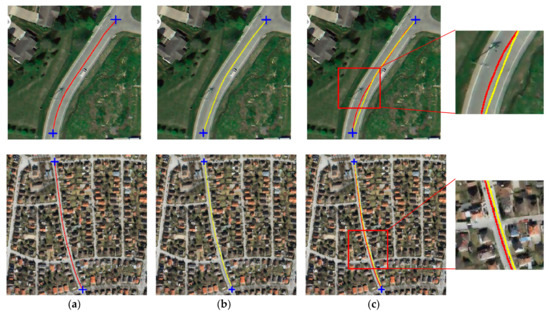

Figure 4.

Two cases of U-shaped road extraction. (a) Case 1; (b) Case 2.

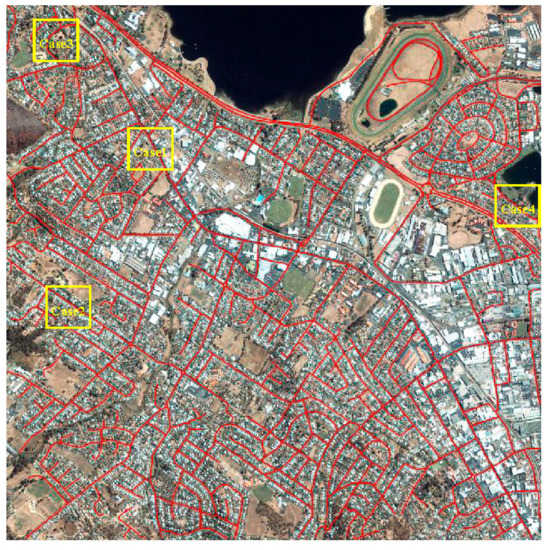

The fifth image is shown in Figure 5 and has a spatial size of 3500 pixels × 3500 pixels and a spatial resolution of 1 m/pixel. It was collected by the IKONOS satellite and shows an area of Hobart, Australia. This image includes different types of interference, such as vehicle occlusion, sharp roadway curves, and building shadows.

Figure 5.

Road extraction result provided by the proposed ECFM method for an IKONOS image.

The sixth image, which is shown in Figure 7, was collected by the QuickBird satellite. The image shows an area in Hong Kong. It has a spatial resolution of 0.6 m/pixel (PAN band) and a size of 1200 pixels × 1600 pixels. It includes various road conditions, such as road material changes, vehicle occlusion, and overhanging trees.

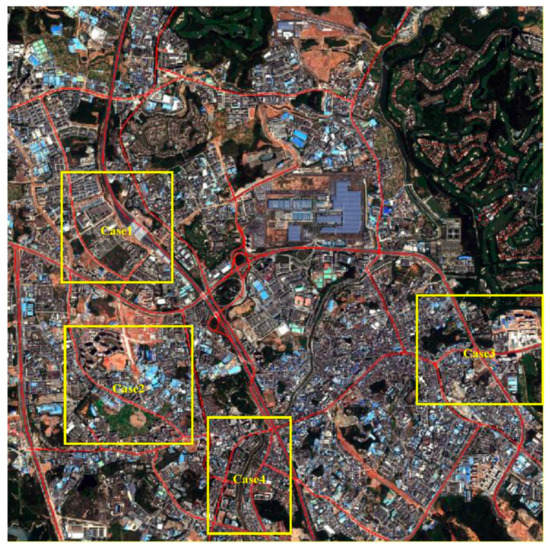

The seventh image has a spatial size of 3000 pixels × 3000 pixels and a spatial resolution of 2 m/pixel, as shown in Figure 8. This image was collected by the WorldView-2 satellite and shows an area of Shenzhen, China, covering a variety of roads with different materials. The image also includes several types of noise, such as zebra crossings, traffic-marking lines, and toll stations.

The eighth image, as shown in Figure 10, is a grayscale image with a spatial size of 725 pixels × 1018 pixels and a spatial resolution of 1 m/pixel. This image was collected by the IKONOS satellite and shows an area of Hobart, Australia, depicting several road conditions, such as overhanging trees, vehicle occlusion, and roads with large curvatures.

Road extraction from these datasets is challenging because of their very high spatial resolution of 2 m/pixel or finer. In addition, as seen from each image, roads, buildings, vehicles, and shadows may be confused with one another. Hence, uncertainties may be encountered during road centerline extraction from these datasets.

4.2. Experimental Setup and Parameter Setting

The accuracy and efficiency of the proposed ECFM road extraction method were investigated through the following six experimental setups with the eight VHR remote sensing images described above.

The first experiment was designed to test the effect of the edge constraint in the proposed approach. Two VHR remote sensing images were used in the experiment, as shown in Figure 3. Two road seed points were marked by the user, and the road centerline was extracted through our proposed method with edge constraint and through a method without edge constraint. The parameters of the proposed method were T = 0.2, α = 0.9, β = 0.7, and λ = 0.5.

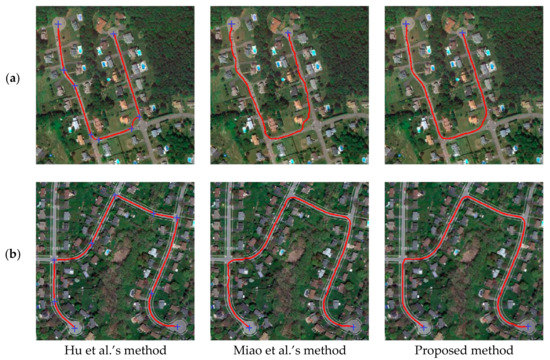

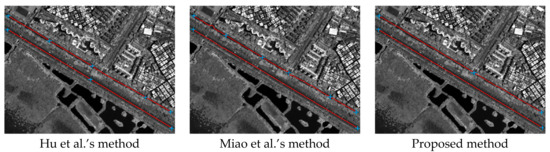

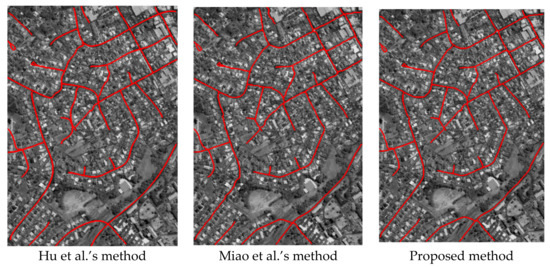

The second experiment aimed to assess the performance of the proposed approach in extracting the centerlines of U-shaped roads. Two VHR remote sensing images showing U-shaped roads were adopted in the experiment, as depicted in Figure 4. The images have a resolution of 0.6 m. To ensure fair comparison, we compared the proposed ECFM method with (1) Hu et al.’s method [27] and (2) Miao et al.’s method [28] because these two methods rely on user-selected seed points. We used the endpoints at both ends of the U-shaped road as the seed points for road extraction. If the two seed points failed to provide the correct road extraction results, we added some intermediate points to ensure the integrity of the road extraction results. The optimal parameters of each experiment were identified through the trial-and-error method. The parameters of these approaches were as follows: (1) In Hu’s method, the window size of the step-edge template was set at h = 5; (2) In Miao’s method, the threshold parameter was set at T = 0.002; (3) In the proposed method, the parameters were set as T = 0.2, α = 0.9, β = 0.9, and λ = 0.4.

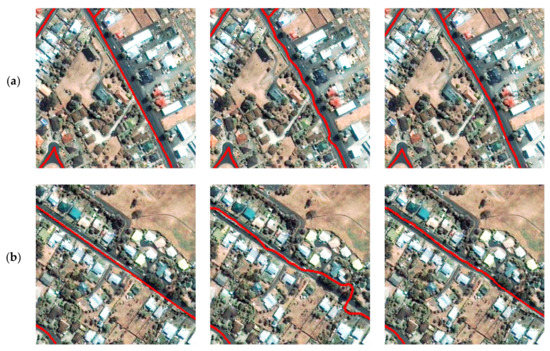

The third and fourth experiments were designed to investigate the accuracy and efficiency of the proposed ECFM method. These experiments employed satellite images with high spatial resolution and had two objectives. First, similar to the first experiment, they aimed to test the efficiency of the proposed method. Second, they aimed to verify the robustness of our proposed method for the centerline extraction of shadowed roads. We compared the proposed ECFM method with (1) Hu et al.’s method [27] and (2) Miao et al.’s method [28]. The parameter details of each approach are as follows: (1) In Hu’s method, the window size of the step-edge template was set at h = 5; (2) In Miao’s method, the threshold parameter was set at T = 0.002; (3) In the proposed method, the parameters were varied in accordance with the shading condition of the road. When the road was not shaded, the parameters were set as T = 0.2, α = 0.9, β = 0.9, and λ = 0.4. By contrast, when the road was shaded, the parameters were set as T = 0.2, α = 0.5, β = 0.5, and λ = 0.05.

The experiments were designed as follows:

- (1) For all methods, as few seed points as possible are selected to improve the efficiency of road extraction while ensuring integrity.

- (2) For an occluded road area, seed points are placed, as far as possible, at locations that are not occluded by shadows or automobiles to ensure the accuracy of road extraction.

The fifth and sixth experiments aimed to test the road extraction efficiency and accuracy of different methods under the same seed points. The fifth experiment used a WorldView-2 color image, and the sixth experiment used an IKONOS grayscale image. This design had two purposes. The first was to verify the efficiency and accuracy of different methods when the same seed points are used, and the other was to verify the robustness of the proposed method on images with different color modes (color images and grayscale images). Seed points for these two groups of experiments were marked manually. To ensure fairness, every method processed the seed points in the order in which they were collected. (1) Hu et al.’s method [27] and (2) Miao et al.’s method [28] were used here for comparison. The parameters used in these experiments were the same as those applied in the third and fourth experiments.

4.3. Results and Quantitative Evaluation

Four accuracy measures [27,47] were used to evaluate the performance of the presented method. These measures included: (1) Completeness = TP/(TP + FN); (2) Correctness = TP/(TP + FP); (3) Quality = TP/(TP + FP + FN), where TP, FN, and FP represent true positive, false negative, and false positive, respectively; (4) Seed-point number. The ground truth was produced through the hand-drawing method, and the buffer width was set to four pixels.
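The three ratio-based measures can be computed as in the sketch below. The formulas follow the definitions given above; the buffer-based counting of TP, FP, and FN from two pixel sets is one common convention and is an assumption here, as are the function names.

```python
import math


def match_counts(extracted, reference, buffer_width=4):
    """Count TP, FP, FN between extracted and reference centerline pixels.

    Assumed convention: an extracted pixel is a true positive if it lies within
    buffer_width of any reference pixel; a reference pixel is a false negative
    if no extracted pixel lies within buffer_width of it.
    """
    def near(p, pts):
        return any(math.hypot(p[0] - q[0], p[1] - q[1]) <= buffer_width for q in pts)

    tp = sum(1 for p in extracted if near(p, reference))
    fp = len(extracted) - tp
    fn = sum(1 for q in reference if not near(q, extracted))
    return tp, fp, fn


def accuracy_measures(tp, fp, fn):
    """Completeness, correctness, and quality; assumes tp > 0 so no ratio is 0/0."""
    completeness = tp / (tp + fn)
    correctness = tp / (tp + fp)
    quality = tp / (tp + fp + fn)
    return completeness, correctness, quality


extracted = [(0, 0), (0, 1), (0, 2), (10, 10)]
reference = [(1, 0), (1, 1), (1, 2), (1, 3), (1, 20)]
scores = accuracy_measures(*match_counts(extracted, reference, buffer_width=4))
```

Because quality penalizes both missed and spurious pixels, it can never exceed either completeness or correctness.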

4.3.1. Test of the Edge Constraint

Two remote sensing images were selected to test the effect of the edge constraint on road centerline extraction. The results are presented in Figure 3. The method using the edge constraint provided better results than the method without it. The results obtained without the edge constraint easily deviated from the true road centerline, whereas those obtained through the proposed method with the edge constraint preserved the road centerline. The proposed method is more accurate than the method without the edge constraint for two reasons. First, edge-energy computation and distance transformation provide the ridgeline of the road segment, as shown in Figure 2g. Second, the fast marching method can trace the road centerline along the ridgeline. The visual comparison of the results, as presented in Figure 3, illustrates the advantages of the proposed method in road centerline extraction.

4.3.2. Experiment on Centerline Extraction from U-Shaped Roads

The results of the three methods are compared in Figure 4. This figure shows that all three methods extracted the expected road centerlines. Compared with Hu’s method, Miao’s method and the proposed ECFM method required fewer road seed points. The proposed ECFM method, however, provided better results for both images than Hu’s and Miao’s methods. Table 1 shows the quantitative evaluation results of the three methods. Among the three tested methods, the presented method achieved the highest quality values for the two cases. These values agree with the extraction results presented in Figure 4. Although Hu’s method accurately extracted centerlines, it consumed more road seed points than the other two methods because it requires intermediate road seed points when extracting centerlines from S- or U-shaped road segments. By contrast, the proposed method extracts centerlines from S- or U-shaped roads with only two road seed points.

Table 1.

Comparison of Three Semiautomatic Road Centerline Extraction Methods.

4.3.3. Experiment on An IKONOS Image

Figure 5 shows that the proposed ECFM method extracted most of the road segments and provided satisfactory results. A visual comparison of the extraction results is shown in Figure 6a–d, in which the proposed method performed better than the other methods. Table 2 shows the quantitative results of the three methods. These results indicate that all three methods extracted a relatively complete road centerline with relatively high extraction quality. Nevertheless, the efficiency of the proposed ECFM method is superior to that of Hu’s and Miao’s methods; for example, the proposed method used the fewest seed points among the three tested methods. Because Hu’s method solves for its parabola parameters from the radiometric features of dual edges, it encounters problems on images with unclear edges and does not provide the desired result when the road boundary is unclear. Miao’s method exploits the geodesic method to connect road seed points, but its performance is affected by road occlusions. The presented method achieved the highest quality values among all tested methods, indicating that it achieves the best balance between road extraction quality and seed-point consumption. Although Hu’s method can extract relatively complete centerlines, its quality values are lower than those of the presented method because its result is offset from the ground truth, whereas the result of the presented method lies considerably closer to the ground truth.

Figure 6.

Comparison of the results provided by different road extraction methods for an IKONOS image. (a) Case 1; (b) Case 2; (c) Case 3; (d) Case 4.

Table 2.

Quantitative Evaluation of Different Centerline Extraction Methods.
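The three indicators reported in the evaluation tables can be computed as in the following sketch. It assumes the widely used definitions of Wiedemann et al. (listed in the references), in which TP is the matched extracted road length, FP the unmatched extracted length, and FN the unmatched reference length. The function name `road_metrics` and the input numbers are illustrative and are not taken from the tables.

```python
def road_metrics(tp, fp, fn):
    """Return (completeness, correctness, quality) from matched/unmatched lengths."""
    if tp + fn == 0 or tp + fp == 0:
        raise ValueError("reference and extraction must both be non-empty")
    completeness = tp / (tp + fn)   # share of the reference that was extracted
    correctness = tp / (tp + fp)    # share of the extraction that is correct
    quality = tp / (tp + fp + fn)   # penalizes both kinds of error at once
    return completeness, correctness, quality

# Illustrative numbers only (for example, lengths in pixels).
completeness, correctness, quality = road_metrics(tp=90, fp=5, fn=10)
print(f"{completeness:.3f} {correctness:.3f} {quality:.3f}")  # 0.900 0.947 0.857
```

Because the quality denominator contains both error terms, quality can never exceed completeness or correctness, which is why it is a convenient single indicator for comparing methods.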

4.3.4. Experiment on A QuickBird Image

Figure 7 shows that Miao’s method cannot efficiently manage abrupt changes in images, such as road junctions and sudden material changes or conflations. This limitation is attributed to the method’s requirement for an intermediate step that measures the initial road centerline probability, computed on the basis of seed-point information, from the binary road image. Miao’s method could not extract the expected road centerline if road segments between seed points were occluded by shadows or by a vehicle. By contrast, the proposed method utilizes edge energy and curvature to reduce the effect of shadows and vehicles on the road. The performance of Hu’s method was comparable with that of the proposed method; however, the proposed method required fewer road seed points than Hu’s method. Table 2 shows the quantitative evaluation results of the three methods. Although the proposed method used fewer seed points than the other two methods, it obtained higher completeness, correctness, and quality values. These values are consistent with the extraction results presented in Figure 7. The experimental results illustrate that the proposed method is robust to noise and has considerable potential for road extraction from VHR remote sensing images.

Figure 7.

Comparison of the results provided by different road extraction methods for a QuickBird image.

4.3.5. Experiment on A WorldView-2 Image

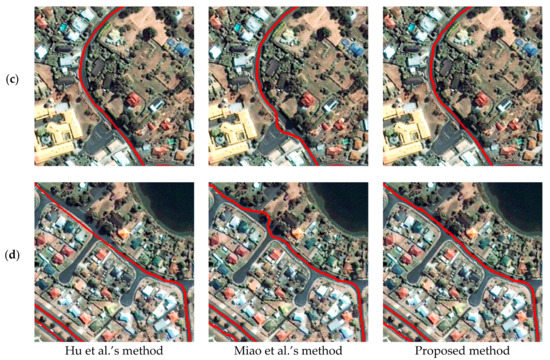

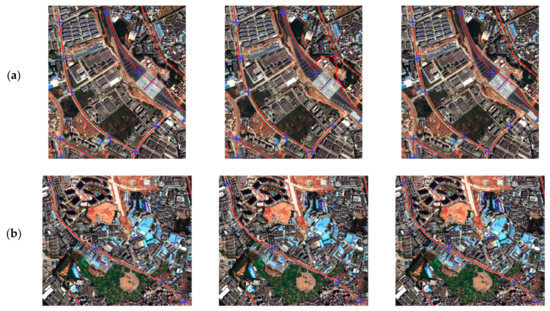

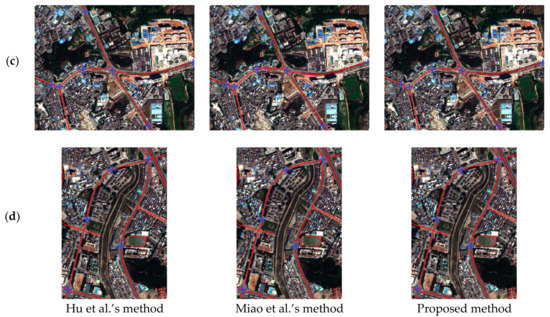

Figure 8 shows that the proposed ECFM method can reliably and accurately extract roads from a wide range of high-resolution remote sensing images. Figure 9 shows a local comparison of the roads extracted by the different methods. Overall, all three methods achieve satisfactory results. The comparison in Figure 9a shows that the ECFM method and Hu’s method are robust to noise around toll stations and that, compared with Miao’s method, the road centerlines they extract are closer to the true center. This difference arises because Miao’s method considers only the spectral features of roads, whereas the proposed method and Hu’s method combine spectral features with edge features. Figure 9b shows that all three methods can accurately extract the road centerline in road sections where the road material changes greatly. Nevertheless, the comparison indicates that the road centerline extracted by the ECFM method is smooth and that the method maintains high accuracy in sections with large road curvature. Figure 9c shows the differences among the three methods near road intersections. According to the figure, the road centerlines extracted by the ECFM method and Hu’s method are relatively smooth. The road centerline extracted by Miao’s method is easily influenced by vehicles on the road, so its results in vehicle-intensive areas are not smooth enough. Figure 9d shows the results of the different methods under shadow occlusion. Hu’s extraction result is relatively smooth because the piecewise parabolic model it adopts produces a relatively smooth curve; however, according to the figure, the road centerline obtained by this method can easily shift. Miao’s method is influenced by shadows and cars, which leads to unsmooth extraction results. The ECFM method proposed in this paper achieves a relatively balanced performance and is better than the compared techniques in terms of road smoothness and accuracy. The statistical results in Table 3 are consistent with those in Figure 9. Table 3 shows that the ECFM method performs well in terms of completeness, correctness, and quality when all methods use the same number and location of road seed points.

Figure 8.

Road extraction result provided by the proposed ECFM method for a WorldView-2 image.

Figure 9.

Comparison of the results provided by different road extraction methods for a WorldView-2 image. Road seed points are marked with blue crosses. (a) Case 1; (b) Case 2; (c) Case 3; (d) Case 4.

Table 3.

Quantitative Evaluation of Different Centerline Extraction Methods.

4.3.6. Experiment on An IKONOS Grayscale Image

Figure 10 shows the results of the three methods for extracting the road centerline from an IKONOS grayscale remote sensing image. As can be seen from the figure, all three methods extract all roads completely. The road centerline extracted by Hu’s method is the smoothest, but the piecewise parabolic model it uses tends to make the result deviate from the road center in areas where the road curvature changes greatly. Miao’s method and the ECFM method avoid this problem. Miao’s technique considers only the spectral features of roads, whereas the ECFM method fuses edge features with spectral features and can therefore, to a certain extent, overcome the influence of spectral changes caused by shadows. As a result, the ECFM method performs better under shadow and vegetation occlusion. As can be seen from the statistical results in Table 3, the extraction completeness of all three methods is high when the same number and location of road seed points are used. However, our method achieves the best correctness and, similarly, the best quality.

Figure 10.

Comparison of the results provided by different road extraction methods for an IKONOS grayscale image.

5. Discussion

In this section, we present our analysis and discussion of the parameter sensitivity and computational costs of Experiments 3 and 4. Then, from Experiments 5 and 6, we discuss the influence of the number and location of seed points on road extraction results. The details are provided in the following subsections.

5.1. Parameter Sensitivity Analysis

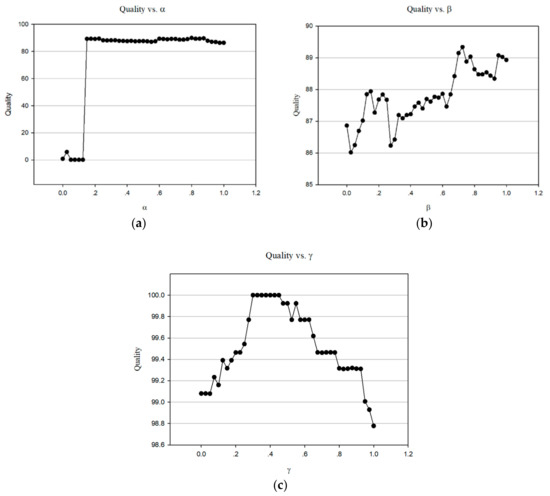

We analyzed the effects of the parameters α, β, and λ used in the edge-constraint-based weighted fusion model. These parameters affect road extraction performance in different ways. The QuickBird satellite image shown in Figure 7 was tested, and the three parameters were set from 0 to 1 with an interval of 0.075. As shown in Figure 11a, road extraction quality was less than 5% when α was small. However, when α exceeded 0.15, quality increased abruptly and remained at approximately 90%. This result indicates that spectral information plays a dominant role in the fusion model. Extraction quality increased with β, as shown in Figure 11b. Thus, increasing the weight of the road centerline probability feature improves extraction accuracy. Figure 11c shows that the effect of the edge constraint on extraction quality is not monotonic: extraction quality decreases if λ is excessively small or large. The proposed method yielded a good extraction result when λ was approximately 0.4.

Figure 11.

Quality of the results provided by the proposed method for the QuickBird image under different α, β, and λ values. (a) Quality vs. α; (b) Quality vs. β; (c) Quality vs. λ.
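A one-at-a-time parameter sweep of this kind can be organized as in the sketch below. Everything in it is an assumption made for illustration: `toy_quality` is a hypothetical stand-in for "run the extraction and score it" and does not reproduce the curves in Figure 11, and the default values held fixed during the sweep are not taken from this study.

```python
def parameter_grid(step=0.075, upper=1.0):
    """Values 0, step, 2*step, ... that do not exceed upper."""
    count = int(upper / step + 1e-9) + 1
    return [round(i * step, 3) for i in range(count)]

def sweep_one(evaluate, name, defaults, grid):
    """Vary one parameter over grid while the others stay at their defaults."""
    results = []
    for value in grid:
        params = dict(defaults, **{name: value})
        results.append((value, evaluate(**params)))
    return results

def toy_quality(alpha, beta, lam):
    # Hypothetical scoring function; NOT the paper's fusion model or its results.
    return max(0.0, 1.0 - abs(lam - 0.4)) * min(1.0, alpha + beta)

grid = parameter_grid()
defaults = {"alpha": 0.5, "beta": 0.5, "lam": 0.4}  # assumed values, not from the paper
curve = sweep_one(toy_quality, "lam", defaults, grid)
best_lam, best_quality = max(curve, key=lambda item: item[1])
print(len(grid), grid[-1], best_lam)  # 14 0.975 0.375
```

Note that a grid starting at 0 with a step of 0.075 has 14 values and ends at 0.975, so the grid point closest to λ = 0.4 is 0.375. In practice, `toy_quality` would be replaced by a function that runs the extraction with the given weights and returns the quality indicator against the ground truth.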

5.2. Computational Cost Analysis

In this section, we discuss the computational cost of the proposed approach. All the experiments were performed on a personal computer with a 3.1-GHz Pentium dual-core CPU and 16-GB memory. Each experiment was repeated five times, and the average running time and seed-point number of the proposed approach with IKONOS and QuickBird satellite images are presented in Table 4. The proposed method ensured correct road extraction and consumed less computational time than the other two methods. According to this analysis, when the number and location of seed points are not constrained, the proposed method has the highest road extraction efficiency among the three methods, which makes it a practical option for extracting road centerlines from remote sensing images. In general, the presented method is moderately efficient.

Table 4.

Computation Cost of Different Centerline Extraction Methods.
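The averaging protocol described above (five repetitions per experiment) can be sketched with a small timing harness. The function `average_runtime` and the placeholder workload `dummy_extraction` are hypothetical; the actual measurement code used in this study is not given.

```python
import time

def average_runtime(func, repeats=5):
    """Run func `repeats` times and return the mean wall-clock time in seconds."""
    elapsed = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        elapsed.append(time.perf_counter() - start)
    return sum(elapsed) / len(elapsed)

def dummy_extraction():
    # Hypothetical placeholder workload; replace with the real extraction call.
    return sum(i * i for i in range(10_000))

mean_seconds = average_runtime(dummy_extraction, repeats=5)
print(f"mean over 5 runs: {mean_seconds:.6f} s")
```

For a semiautomatic method, the time an operator spends placing seed points is not captured by such a harness, which is one reason the seed-point number is reported alongside the running time.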

5.3. Number and Location of Seed Points Analysis

In the third and fourth experiments, we compared the methods by running multiple extractions and keeping the highest extraction quality, regardless of the number and location of seed points. As can be seen from Table 4, for comparable extraction quality, the number of seed points and the time required by the different methods vary remarkably. To obtain its highest quality, our method requires the fewest seed points and the least time. However, the location and number of seed points have a considerable influence on the different methods, and whether they are key factors affecting the experimental results needs to be analyzed.

Therefore, in the fifth and sixth experiments, we used the same number and location of seed points for all methods to verify the influence of these factors on the road extraction results. The seed points were marked manually before the comparative experiments began. The experimental and statistical results show that the ECFM method produces good results in both groups of experiments. The statistical results in Table 5 indicate that when the same number of seed points is used, Miao’s method consumes the shortest time, followed by the ECFM method, and Hu’s method consumes the longest time. This finding is due to the fact that Miao’s method uses the simplest features, whereas Hu’s method uses the piecewise parabolic model and least-squares template matching, which prolong the optimization of the road curve. Our method uses three features (spectral feature, edge feature, and road centerline probability), so it also requires more time than Miao’s method.

Table 5.

Computation Cost of Different Centerline Extraction Methods.

A comparison of the data in Table 4 and Table 5 shows that when a similar number of seed points is applied, the time required by our method to extract roads from different remote sensing images differs remarkably. This difference depends strongly on the resolution of the image used, the size of the area, and the density of the road network. Higher image resolution, a larger area, and a denser road network all lengthen the extraction time.

6. Conclusions

This study presents a semiautomatic approach that uses road seed points to extract road centerlines from VHR remote sensing images. An edge-constraint-based weighted fusion model was introduced to overcome the influence of road occlusion and noise on road extraction. In addition, an edge-constraint fast marching method was proposed to improve the accuracy and quality of the road extraction results.

Six experiments were conducted on eight VHR remote sensing images covering different road conditions, including vehicle occlusion, sharp roadway curves, and building shadows. The advantages of the proposed method are as follows: (1) favorable road extraction accuracy and efficiency and (2) robustness in extracting road centerlines from VHR remote sensing images. Overall, the presented method is a superior and practical solution to road extraction from VHR optical remote sensing images.

In future work, the performance of the proposed method on additional types of remote sensing images, such as unmanned aerial vehicle images with very high spatial resolution, will be extensively investigated. The application of the proposed method to roads constructed from different materials and the automatic selection of road seed points are interesting future research directions.

Author Contributions

L.G. was primarily responsible for the original idea and experimental design. W.S. provided important suggestions for improving the paper’s quality. Z.M. provided ideas to improve the quality of the paper. Z.L. contributed to the experimental analysis and revised the paper. The contribution of Z.L. was equal to that of the first author (L.G.).

Acknowledgments

The authors would like to thank the editor-in-chief, the anonymous associate editor, and the reviewers for their insightful comments and suggestions. This work was supported by the National Natural Science Foundation of China (41331175 and 61701396), the Scientific Research Foundation for Distinguished Scholars, Central South University (502045001), the Natural Science Foundation of Shaan Xi Province (2017JQ4006), and Engineering Research Center of Geospatial Information and Digital Technology, NASG.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shi, W.; Zhu, C.; Wang, Y. Road feature extraction from remotely sensed image: Review and Prospects. Acta Geod. Cartogr. Sin. 2001, 30, 257–262.

- Shi, W.; Miao, Z.; Debayle, J. An integrated method for urban main-road centerline extraction from optical remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3359–3372.

- Mena, J.B. State of the art on automatic road extraction for GIS update: A novel classification. Pattern Recognit. Lett. 2003, 24, 3037–3058.

- Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. Eng. (Engl. Ed.) 2016, 3, 271–282.

- Miao, Z.; Shi, W.; Zhang, H.; Wang, X. Road centerline extraction from high-resolution imagery based on shape features and multivariate adaptive regression splines. IEEE Geosci. Remote Sens. Lett. 2013, 10, 583–587.

- Huang, X.; Zhang, L.; Li, P. Classification and extraction of spatial features in urban areas using high-resolution multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2007, 4, 260–264.

- Li, M.; Stein, A.; Bijker, W.; Zhan, Q. Region-based urban road extraction from VHR satellite images using Binary Partition Tree. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 217–225.

- Li, Z.; Shi, W.; Wang, Q.; Miao, Z. Extracting man-made objects from high spatial resolution remote sensing images via fast level set evolutions. IEEE Trans. Geosci. Remote Sens. 2015, 53, 883–899.

- Miao, Z.; Shi, W.; Gamba, P.; Li, Z. An object-based method for road network extraction in VHR satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 1–10.

- Huang, X.; Zhang, L. Road centreline extraction from high-resolution imagery based on multiscale structural features and support vector machines. Int. J. Remote Sens. 2009, 30, 1977–1987.

- Courtrai, L.; Lefèvre, S. Morphological path filtering at the region scale for efficient and robust road network extraction from satellite imagery. Pattern Recognit. Lett. 2016, 83, 195–204.

- Zang, Y.; Wang, C.; Cao, L.; Yu, Y.; Li, J. Road network extraction via aperiodic directional structure measurement. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3322–3335.

- Butenuth, M.; Heipke, C. Network snakes: Graph-based object delineation with active contour models. Mach. Vis. Appl. 2012, 23, 91–109.

- Gruen, A.; Li, H. Road extraction from aerial and satellite images by dynamic programming. ISPRS-J. Photogramm. Remote Sens. 1995, 50, 11–20.

- Mokhtarzade, M.; Zoej, M.J.V. Road detection from high-resolution satellite images using artificial neural networks. Int. J. Appl. Earth Obs. Geoinf. 2007, 9, 32–40.

- Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Road segmentation of remotely-sensed images using deep convolutional neural networks with landscape metrics and conditional random fields. Remote Sens. 2017, 9, 680.

- Mirnalinee, T.T.; Das, S.; Varghese, K. An integrated multistage framework for automatic road extraction from high resolution satellite imagery. J. Indian Soc. Remote Sens. 2011, 39, 1–25.

- Wegner, J.D.; Montoya-Zegarra, J.A.; Schindler, K. A higher-order CRF model for road network extraction. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; IEEE CSP: Washington, DC, USA, 2013; pp. 1698–1705.

- Zang, Y.; Wang, C.; Yu, Y.; Luo, L.; Yang, K. Joint enhancing filtering for road network extraction. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1511–1524.

- Maboudi, M.; Amini, J.; Hahn, M.; Saati, M. Road network extraction from VHR satellite images using context aware object feature integration and tensor voting. Remote Sens. 2016, 8, 637.

- Miao, Z.; Shi, W.; Samat, A.; Lisini, G.; Gamba, P. Information fusion for urban road extraction from VHR optical satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 1–14.

- Hu, J.; Razdan, A.; Femiani, J.C.; Cui, M.; Wonka, P. Road network extraction and intersection detection from aerial images by tracking road footprints. IEEE Trans. Geosci. Remote Sens. 2007, 45, 4144–4157.

- Lin, X.G.; Zhang, J.X.; Liu, Z.J.; Shen, J. Semi-automatic extraction of ribbon roads form high resolution remotely sensed imagery by cooperation between angular texture signature and template matching. In Proceedings of the ISPRS Congress, Beijing, China, 3–11 July 2008; pp. 539–544.

- Das, S.; Mirnalinee, T.T.; Varghese, K. Use of salient features for the design of a multistage framework to extract roads from high-resolution multispectral satellite images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3906–3931.

- Cheng, G.; Zhu, F.; Xiang, S.; Wang, Y.; Pan, C. Accurate urban road centerline extraction from VHR imagery via multiscale segmentation and tensor voting. Neurocomputing 2016, 205, 407–420.

- Cheng, G.; Wang, Y.; Gong, Y.; Zhu, F.; Pan, C.B.I. Urban road extraction via graph cuts based probability propagation. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; IEEE: New York, NY, USA, 2014; pp. 5072–5076.

- Hu, X.; Zhang, Z.; Tao, C.V. A robust method for semi-automatic extraction of road centerlines using a piecewise parabolic model and least square template matching. Photogramm. Eng. Remote Sens. 2004, 70, 1393–1398.

- Miao, Z.; Wang, B.; Shi, W.; Zhang, H. A semi-automatic method for road centerline extraction from VHR images. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1856–1860.

- Zhou, J.; Bischof, W.F.; Caelli, T. Robust and efficient road tracking in aerial images. In Proceedings of the Joint Workshop of ISPRS and DAGM (CMRT’05) on Object Extraction for 3D City Models, Road Databases and Traffic Monitoring—Concepts, Algorithms and Evaluation, Vienna, Austria, 29–30 August 2005; pp. 35–40.

- Movaghati, S.; Moghaddamjoo, A.; Tavakoli, A. Road extraction from satellite images using particle filtering and extended Kalman filtering. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2807–2817.

- Lv, Z.; Jia, Y.; Zhang, Q.; Chen, Y. An adaptive multifeature sparsity-based model for semiautomatic road extraction from high-resolution satellite images in urban areas. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1238–1242.

- Dal Poz, A.P.; Gallis, R.A.B.; Silva, J.F.C.D.; Martins, E.F.O. Object-space road extraction in rural areas using stereoscopic aerial images. IEEE Geosci. Remote Sens. Lett. 2012, 9, 654–658.

- Yang, K.; Li, M.; Liu, Y.; Jiang, C. Multi-points fast marching: A novel method for road extraction. In Proceedings of the International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; pp. 1–5.

- Osher, S.; Sethian, J.A. Fronts propagating with curvature-dependent speed: Algorithms based on Hamilton-Jacobi formulations. J. Comput. Phys. 1988, 79, 12–49.

- Jbabdi, S.; Bellec, P.; Toro, R.; Daunizeau, J.; Pelegrini-Issac, M.; Benali, H. Accurate anisotropic fast marching for diffusion-based geodesic tractography. Int. J. Biomed. Imaging 2008, 2008, 2.

- Li, H.; Xue, Z.; Cui, K.; Wong, S. Diffusion tensor-based fast marching for modeling human brain connectivity network. Comput. Med. Imaging Graph. 2011, 35, 167–178.

- Barzohar, M.; Cooper, D.B. Automatic finding of main roads in aerial images by using geometric-stochastic models and estimation. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 707–721.

- Hinz, S.; Baumgartner, A. Automatic extraction of urban road networks from multi-view aerial imagery. ISPRS-J. Photogramm. Remote Sens. 2003, 58, 83–98.

- He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Xiang, S.; Nie, F.; Zhang, C. Learning a Mahalanobis distance metric for data clustering and classification. Pattern Recognit. 2008, 41, 3600–3612.

- Rosenfeld, A.; Pfaltz, J.L. Distance functions on digital pictures. Pattern Recognit. 1968, 1, 33–61.

- Bakker, W.H.; Schmidt, K.S. Hyperspectral edge filtering for measuring homogeneity of surface cover types. ISPRS-J. Photogramm. Remote Sens. 2002, 56, 246–256.

- Ahamada, I.; Flachaire, E. Non-Parametric Econometrics; Oxford University Press: Oxford, UK, 2010; ISBN 9780199578009.

- Williams, D.J.; Shah, M. A Fast algorithm for active contours and curvature estimation. CVGIP Image Underst. 1992, 55, 14–26.

- Türetken, E.; Benmansour, F.; Fua, P. Automated reconstruction of tree structures using path classifiers and Mixed Integer Programming. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 566–573.

- VPLab Data. Available online: http://www.cse.iitm.ac.in/~vplab/satellite.html (accessed on 16 April 2017).

- Wiedemann, C.; Heipke, C.; Mayer, H.; Jamet, O. Empirical evaluation of automatically extracted road axes. In Empirical Evaluation Techniques in Computer Vision, 1st ed.; Bowyer, K., Phillips, P.J., Eds.; IEEE CSP: Los Alamitos, CA, USA, 1998; pp. 172–187. ISBN 0818684011.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).