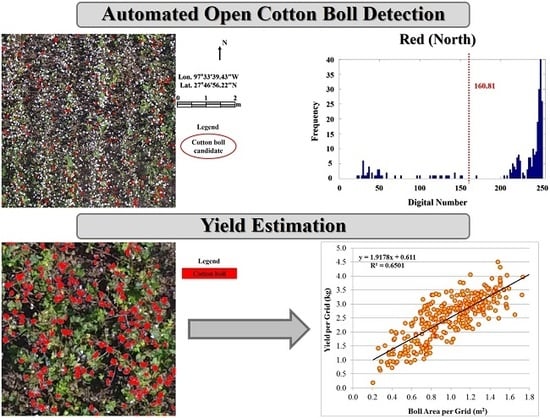

Automated Open Cotton Boll Detection for Yield Estimation Using Unmanned Aircraft Vehicle (UAV) Data

Abstract

1. Introduction

1.1. Advantages of UAV Systems for Agriculture Research

1.2. Literature Review

1.3. Objectives of this Study

2. Methods

2.1. Cotton Boll Candidate Selection

2.2. Cotton Boll Extraction

3. Study Area and Data

3.1. Study Area

3.2. UAV Data

3.3. Evaluation

4. Results

4.1. Cotton Boll Candidate Selection

4.2. Cotton Boll Extraction

4.3. Accuracy Assessment

5. Discussion

5.1. Cotton Boll Candidate Selection and Cotton Boll Extraction

5.2. Accuracy Assessment

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Processing | Parameter | Value |
|---|---|---|
| Hierarchical Random Seed Point Extraction | Number of Iterations | 10 |
| | Amount of Sampling | 0.1% |
| Region Growing | Spectral Similarity | 10% |
| Spatial Feature Analysis | Bare Ground Masking | Size > 9 m² |
| | Cotton Boll Candidates Extraction 1 | 9 cm² < Size < 225 cm² |
| | Cotton Boll Candidates Extraction 2 | Roundness > 0.7 |
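
The parameter table lists thresholds without showing how they combine. The sketch below is a minimal illustration, not the authors' implementation, under several assumptions: a single-band image held as nested lists, region growing that accepts 4-connected neighbors within 10% of the seed pixel's value, objects larger than 9 m² treated as bare ground and masked, and roundness taken as the isoperimetric ratio 4πA/P² (the paper's own roundness definition is not given in this section). All function and constant names are hypothetical, and the hierarchical random seed point extraction step is omitted.

```python
import math
from collections import deque

# Thresholds from the parameter table; the constant names are hypothetical.
SPECTRAL_SIMILARITY = 0.10          # region-growing tolerance (10%)
BARE_GROUND_MIN_CM2 = 9 * 100 ** 2  # 9 m^2 expressed in cm^2
BOLL_MIN_CM2, BOLL_MAX_CM2 = 9.0, 225.0
ROUNDNESS_MIN = 0.7


def grow_region(image, seed, tolerance=SPECTRAL_SIMILARITY):
    """Grow a region from `seed` (row, col) over 4-connected pixels whose value
    differs from the seed value by at most `tolerance` times that value.
    Assumption: similarity is measured against the seed, not the region mean."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    limit = tolerance * abs(seed_value)
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= limit):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def region_area_cm2(region, gsd_cm):
    """Ground area of a pixel region; `gsd_cm` is the assumed pixel size in cm."""
    return len(region) * gsd_cm ** 2


def roundness(area, perimeter):
    """Isoperimetric ratio 4*pi*A / P**2: 1.0 for a circle, lower for elongated shapes."""
    return 4 * math.pi * area / perimeter ** 2


def classify_object(area_cm2, perimeter_cm):
    """Label an object 'bare_ground', 'candidate' or 'rejected' (assumed rule order).
    Area must be in cm^2 and perimeter in cm."""
    if area_cm2 > BARE_GROUND_MIN_CM2:
        return "bare_ground"  # assumed: very large regions are masked as bare ground
    if (BOLL_MIN_CM2 < area_cm2 < BOLL_MAX_CM2
            and roundness(area_cm2, perimeter_cm) > ROUNDNESS_MIN):
        return "candidate"
    return "rejected"


# Usage: a 5 cm radius disc passes both tests; a 2 cm x 20 cm strip does not.
print(classify_object(math.pi * 25, 10 * math.pi))  # candidate
print(classify_object(40.0, 44.0))                   # rejected
```

Because the isoperimetric ratio is 1.0 for a circle and π/4 ≈ 0.785 for a square, a 0.7 threshold under this assumed definition mainly rejects elongated shapes rather than compact ones.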

| Type | | Reference: Cotton Bolls | Reference: Others |
|---|---|---|---|
| Extraction Results | Cotton Bolls | True positive | False positive |
| | Others | False negative | True negative |
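
The confusion matrix defines the pixel counts behind the accuracy measures reported in the results tables. Assuming the standard definitions (the formulas themselves are not reproduced in this section), with TP, FP and FN the numbers of true positive, false positive and false negative pixels:

$$
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{Recall} &= \frac{TP}{TP + FN}, \\
\text{F-measure} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}, \\
\text{Jaccard coefficient} &= \frac{TP}{TP + FP + FN}.
\end{aligned}
$$

True negatives do not enter any of the four measures.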

| Type (Pixels) | | Reference: Cotton Bolls | Reference: Others |
|---|---|---|---|
| Proposed Method | Cotton Bolls | 476 | 41 |
| | Others | 24 | 459 |
| Precision (%) | 92.1 | | |
| Recall (%) | 95.2 | | |
| F-measure (%) | 93.6 | | |
| Jaccard coefficient (%) | 88.0 | | |
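
The accuracy measures in the first results table can be recomputed from its pixel counts. A minimal Python sketch, assuming the standard definitions of precision, recall, F-measure and Jaccard coefficient; the names `ConfusionCounts` and `accuracy_measures` are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # cotton boll pixels correctly extracted
    fp: int  # other pixels wrongly extracted as cotton bolls
    fn: int  # cotton boll pixels missed by the extraction
    tn: int  # other pixels correctly left out (unused by these measures)


def accuracy_measures(c: ConfusionCounts) -> dict:
    """Precision, recall, F-measure and Jaccard coefficient in percent, rounded to 0.1."""
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    f_measure = 2 * precision * recall / (precision + recall)
    jaccard = c.tp / (c.tp + c.fp + c.fn)
    return {
        "precision": round(100 * precision, 1),
        "recall": round(100 * recall, 1),
        "f_measure": round(100 * f_measure, 1),
        "jaccard": round(100 * jaccard, 1),
    }


first = ConfusionCounts(tp=476, fp=41, fn=24, tn=459)
print(accuracy_measures(first))
# {'precision': 92.1, 'recall': 95.2, 'f_measure': 93.6, 'jaccard': 88.0}
```

Passing `ConfusionCounts(tp=478, fp=17, fn=22, tn=483)`, the counts from the second results table, returns 96.6, 95.6, 96.1 and 92.5, so both tables are consistent with these definitions.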

| Type (Pixels) | | Reference: Cotton Bolls | Reference: Others |
|---|---|---|---|
| Proposed Method | Cotton Bolls | 478 | 17 |
| | Others | 22 | 483 |
| Precision (%) | 96.6 | | |
| Recall (%) | 95.6 | | |
| F-measure (%) | 96.1 | | |
| Jaccard coefficient (%) | 92.5 | | |
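
As a worked check of the second results table, assuming the standard definitions and writing the F-measure in the algebraically equivalent form 2TP/(2TP + FP + FN):

$$
\begin{aligned}
\text{Precision} &= \frac{478}{478 + 17} = \frac{478}{495} \approx 96.6\%, \\
\text{Recall} &= \frac{478}{478 + 22} = \frac{478}{500} = 95.6\%, \\
\text{F-measure} &= \frac{2 \cdot 478}{2 \cdot 478 + 17 + 22} = \frac{956}{995} \approx 96.1\%, \\
\text{Jaccard coefficient} &= \frac{478}{478 + 17 + 22} = \frac{478}{517} \approx 92.5\%.
\end{aligned}
$$

Both results tables use 500 reference cotton boll pixels and 500 other pixels; the higher precision here comes from fewer false positives (17 versus 41), while the false negatives change only slightly (22 versus 24).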

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yeom, J.; Jung, J.; Chang, A.; Maeda, M.; Landivar, J. Automated Open Cotton Boll Detection for Yield Estimation Using Unmanned Aircraft Vehicle (UAV) Data. Remote Sens. 2018, 10, 1895. https://doi.org/10.3390/rs10121895
