We employ the ISPRS datasets of Vaihingen and Potsdam [24] for our experiments. We describe the training of the multi-resolution and fusion models and give a quantitative evaluation. In particular, we conducted a series of experiments on the sensitivity of the layers to specific classes and to the different modalities, and we visualize the results. The analysis of the layers' sensitivity indicates that the proposed optimal fusion model improves the performance. We verify this fusion model by comparing it with all possible fusion models.

4.1. Datasets

Experiments are performed on the ISPRS 2D semantic labeling datasets showing the urban areas of Vaihingen and Potsdam. Both datasets provide high-resolution True Orthophotos (TOPs) and corresponding DSMs; for Vaihingen, the Ground Sampling Distance (GSD) is 9 cm. In the experiments, we use color infrared imagery (infrared-red-green) for the Vaihingen dataset and color imagery (red-green-blue) for the Potsdam dataset to test our approach on different data, even though the Potsdam data also contain infrared imagery. The Vaihingen dataset consists of 32 tiles in total, with ground truth released for half of them, designated for training and validation. It provides pixel-wise labels for six semantic categories (impervious surface, building, low vegetation, tree, car, and clutter/background). We used twelve of the 16 labeled tiles for training and four tiles (13, 15, 23, and 28) for validation. For training, we divided the selected tiles into patches of 256 × 256 pixels with an overlap of 128 pixels. For data augmentation, the patches are rotated in steps of 90 degrees and flipped (top-down and left-right), which yields a set of 16,782 patches. The validation tiles are clipped into non-overlapping patches of 256 × 256 pixels.
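The Vaihingen patch generation can be sketched in Python with NumPy. This is a minimal sketch under assumptions: tiles are NumPy arrays of shape (H, W, C), windows that would extend past the tile border are simply skipped, and the augmentation returns the four 90-degree rotations plus the top-down and left-right flips of the original patch, which is one plausible reading of the text rather than the exact procedure that produced the 16,782 patches. The function names `extract_patches` and `augment` are hypothetical.

```python
import numpy as np

def extract_patches(tile, size=256, stride=128):
    """Cut an (H, W, C) tile into size x size patches.

    stride=128 with size=256 gives the 128-pixel overlap used for training;
    stride=size gives the non-overlapping patches used for validation.
    Assumption: windows that would run past the tile border are skipped.
    """
    h, w = tile.shape[:2]
    patches = []
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            patches.append(tile[top:top + size, left:left + size])
    return patches

def augment(patch):
    """Return rotations by 0, 90, 180, 270 degrees plus the two flips.

    Assumption: flips are applied to the unrotated patch only.
    """
    rotations = [np.rot90(patch, k, axes=(0, 1)) for k in range(4)]
    flips = [np.flipud(patch), np.fliplr(patch)]  # top-down, left-right
    return rotations + flips
```

For a 512 × 768 tile, a stride of 128 gives 3 × 5 = 15 overlapping patches, whereas a stride of 256 gives 2 × 3 = 6 non-overlapping ones.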

The Potsdam dataset consists of 38 tiles in total, with ground truth released for 24. Each tile contains the infrared, red, green, and blue channels and the corresponding elevation, with a GSD of 5 cm. In the experiments, the tiles are divided into patches of 256 × 256 pixels; patches smaller than 256 × 256 pixels are discarded. This yields a set of 11,638 patches, of which 9,310 are randomly selected as the training set and the remaining 2,328 as the test set.
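The discard rule and the random split can be sketched as follows. The sketch assumes, hypothetically, that each tile is cut into non-overlapping 256 × 256 windows starting at the top-left corner, so the incomplete windows at the right and bottom borders are the ones discarded, and that the split uses NumPy's random generator with an arbitrary seed; the function names are illustrative only.

```python
import numpy as np

def count_full_patches(height, width, size=256):
    """Number of complete, non-overlapping size x size patches in a tile.

    Incomplete patches at the right and bottom borders are discarded.
    """
    return (height // size) * (width // size)

def random_split(n_patches, n_train, seed=0):
    """Randomly partition patch indices 0..n_patches-1 into train and test.

    The seed is an arbitrary, hypothetical choice.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_patches)
    return order[:n_train], order[n_train:]

train_idx, test_idx = random_split(11638, 9310)
print(len(train_idx), len(test_idx))  # 9310 2328
```

Selecting 9,310 of the 11,638 patches corresponds to a split of roughly 80/20.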

4.3. Evaluation of Experimental Results

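What a layer learns about the different objects can be measured by the recall of each class. The recall is the fraction of the reference pixels of a class that are correctly labeled in the semantic labeling. It is defined together with the precision:

$$\text{recall} = \frac{TP}{TP + FN}, \qquad \text{precision} = \frac{TP}{TP + FP}$$

where $TP$, $FN$, and $FP$ denote the numbers of true positive, false negative, and false positive pixels of the class.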
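Per-class recall and precision are commonly computed from a confusion matrix. The sketch below is an illustration, not the authors' code. It assumes integer label maps with classes 0 to n_classes - 1, builds the matrix with `np.bincount`, and assigns a recall (or precision) of 0 to classes that never occur instead of raising a division-by-zero warning; the function names are hypothetical.

```python
import numpy as np

def confusion_matrix(reference, prediction, n_classes):
    """n[i, j] = number of pixels of reference class i predicted as class j."""
    idx = reference.ravel() * n_classes + prediction.ravel()
    counts = np.bincount(idx, minlength=n_classes ** 2)
    return counts.reshape(n_classes, n_classes)

def recall_precision(n):
    """Per-class recall TP/(TP+FN) and precision TP/(TP+FP)."""
    n = np.asarray(n, dtype=float)
    tp = np.diag(n)
    ref_total = n.sum(axis=1)   # TP + FN: all reference pixels of the class
    pred_total = n.sum(axis=0)  # TP + FP: all pixels predicted as the class
    recall = np.divide(tp, ref_total, out=np.zeros_like(tp), where=ref_total > 0)
    precision = np.divide(tp, pred_total, out=np.zeros_like(tp), where=pred_total > 0)
    return recall, precision
```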

A higher recall of a layer for a specific class indicates a higher sensitivity of that layer to the class. However, the recall of a single layer alone is not a reliable measure of this sensitivity. It is more informative to compare the recall of the model with all layers to the recall of the model with one layer removed, i.e., to use the descent rate of recall, defined as the difference to the previous recall, to determine which layer has the primary influence. We therefore evaluate the contribution of the layers to each class based on the recall and the descent rate of recall (DR), defined as:

$$DR = R_m - R_l$$

where $R_m$ is the recall of the model with all layers and $R_l$ is the recall of the model with the specific layer removed. In our experiments, we only compute the DR of a specific class when the recall of the class is larger than 50% and the DR is positive; otherwise, the DR is set to 0.
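The DR rule can be sketched as a vectorized NumPy function over the classes. Two assumptions are made here: DR is taken as the plain difference R_m - R_l, following the description of DR as the difference to the previous recall, and the 50% threshold is checked on R_m, the recall of the model with all layers, because the text does not say which recall it refers to. The function name `descent_rate` is hypothetical.

```python
import numpy as np

def descent_rate(recall_all, recall_without, min_recall=0.5):
    """Per-class DR = R_m - R_l, set to 0 unless R_m > min_recall and DR > 0.

    recall_all:     recalls R_m of the model with all layers (one per class)
    recall_without: recalls R_l of the model with one layer removed
    Assumption: the threshold is applied to R_m.
    """
    recall_all = np.asarray(recall_all, dtype=float)
    recall_without = np.asarray(recall_without, dtype=float)
    dr = recall_all - recall_without
    keep = (recall_all > min_recall) & (dr > 0)
    return np.where(keep, dr, 0.0)
```

For the elevation data, removing layer 5 lowers the building recall from 0.68 to 0.1, which under this difference definition would correspond to a DR of 0.58.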

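To evaluate the fusion models, we use the F1 score and the overall accuracy (OA). The F1 score is defined in Equation (7):

$$F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} \tag{7}$$

OA is the percentage of correctly classified pixels, as defined in Equation (8):

$$OA = \frac{\sum_{i=1}^{n_{cl}} n_{ii}}{\sum_{i=1}^{n_{cl}} t_i} \tag{8}$$

where $n_{ij}$ is the number of pixels of class $i$ predicted to belong to class $j$, $n_{cl}$ is the number of classes, and $t_i = \sum_j n_{ij}$ is the total number of pixels of class $i$.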
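Equations (7) and (8) can be evaluated directly on a confusion matrix n, where n[i, j] counts the pixels of class i predicted as class j. This is a minimal NumPy sketch, not the evaluation code behind the tables; the function name is hypothetical, and classes without reference or predicted pixels are assigned an F1 score of 0.

```python
import numpy as np

def f1_and_oa(n):
    """Per-class F1 (Equation 7) and overall accuracy (Equation 8)."""
    n = np.asarray(n, dtype=float)
    tp = np.diag(n)                 # n_ii
    t = n.sum(axis=1)               # t_i: all reference pixels of class i
    predicted = n.sum(axis=0)       # all pixels predicted as class i
    recall = np.divide(tp, t, out=np.zeros_like(tp), where=t > 0)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom,
                   out=np.zeros_like(tp), where=denom > 0)
    oa = tp.sum() / t.sum()         # sum_i n_ii / sum_i t_i
    return f1, oa
```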

4.4. Quantitative Results

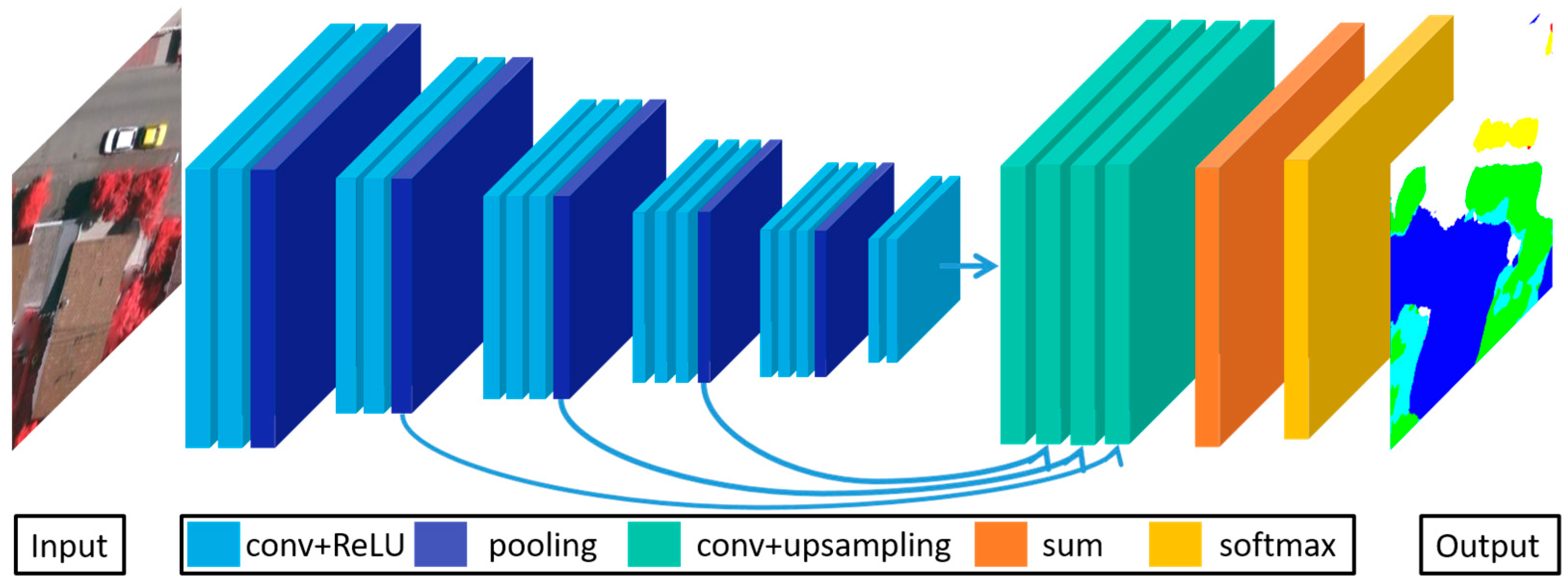

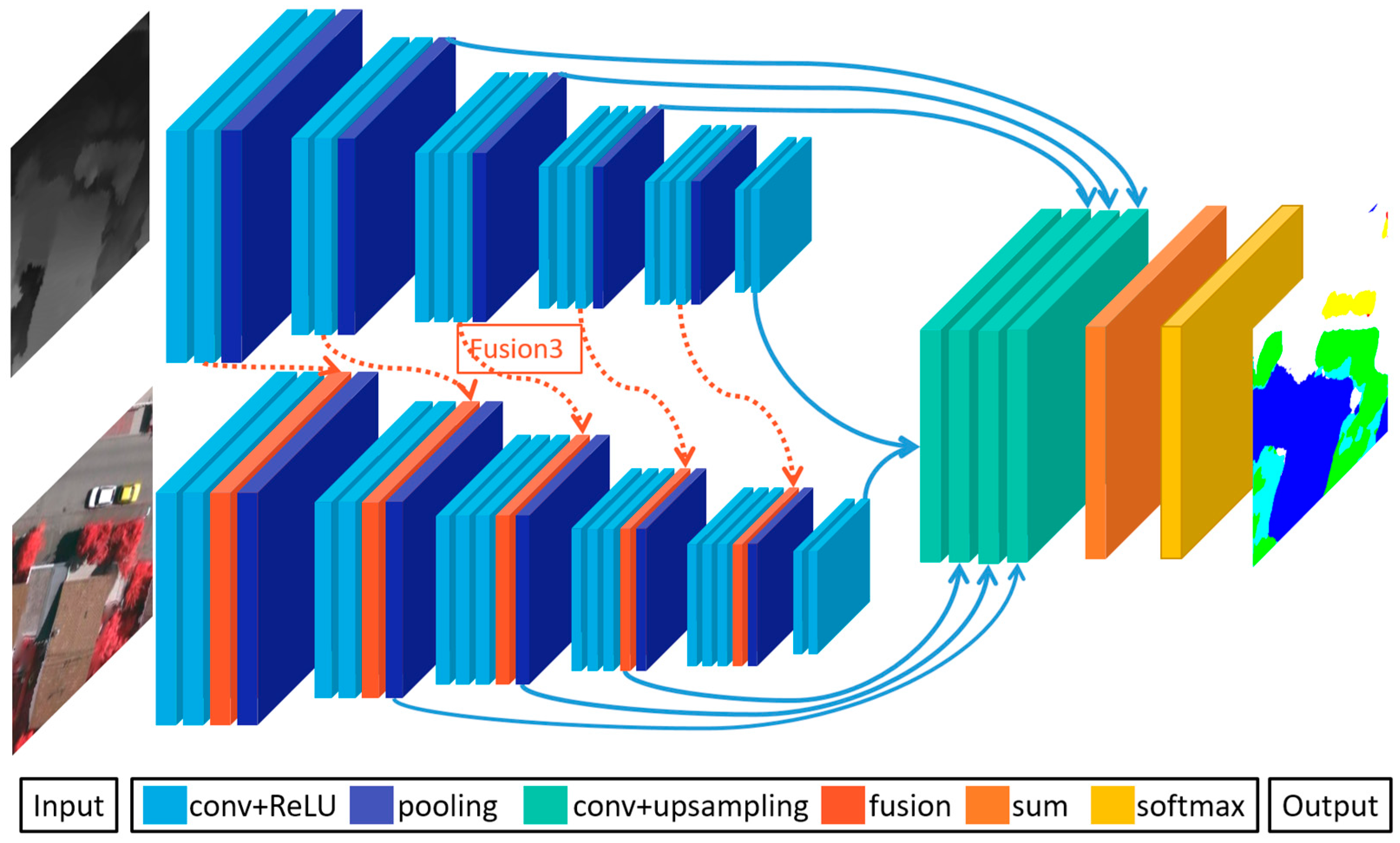

Imagery is included at the beginning and elevation is integrated at different layers.

Table 1 (imagery) and Table 2 (elevation) list the recalls of single and combined layers: ‘layers-all’ includes all layers, ‘Layer-2’ represents the recall of layer 2, ‘Layers-345’ represents the recall of all layers except layer 2, and so on up to ‘Layers-234’.

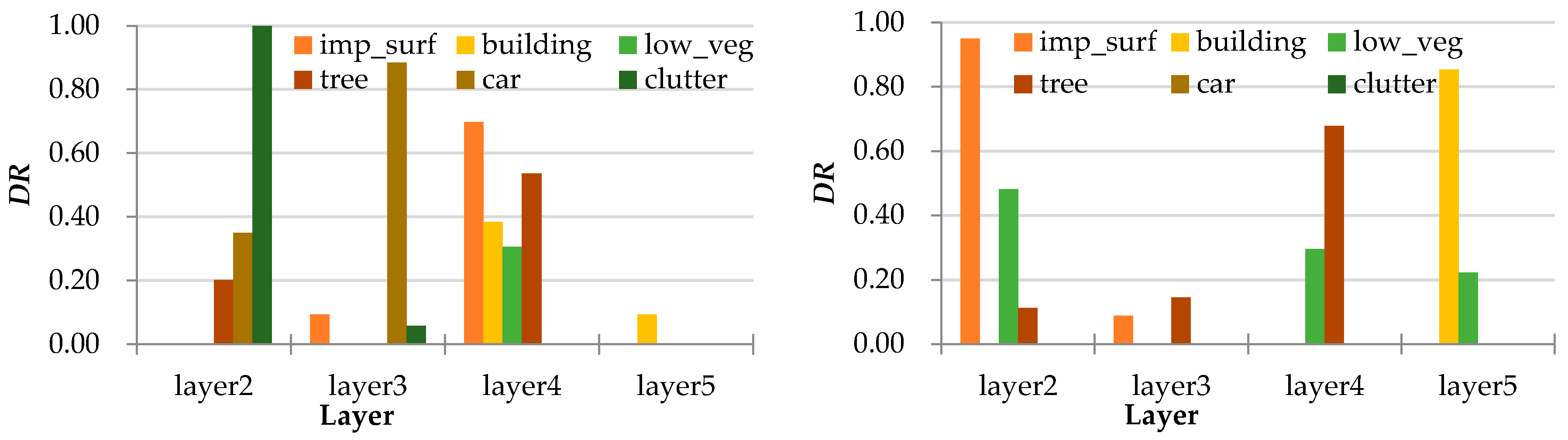

The recalls and DRs for imagery are summarized in Table 1 and Figure 3, respectively. For imagery, layer 2 is most sensitive to the class car, with a DR of 34%, and slightly less sensitive to the class tree, with a DR of 20.2%. Layer 3 is most sensitive to the classes car and impervious surface, with DRs of 88% and 9.3%, respectively. At layer 4, impervious surfaces reach the highest DR, followed by trees, buildings, and low vegetation. Layer 5 is only sensitive to buildings, which have the highest recall, although their DR is not steeper than for layer 4. Low vegetation, on the other hand, has its steepest DR at layer 5.

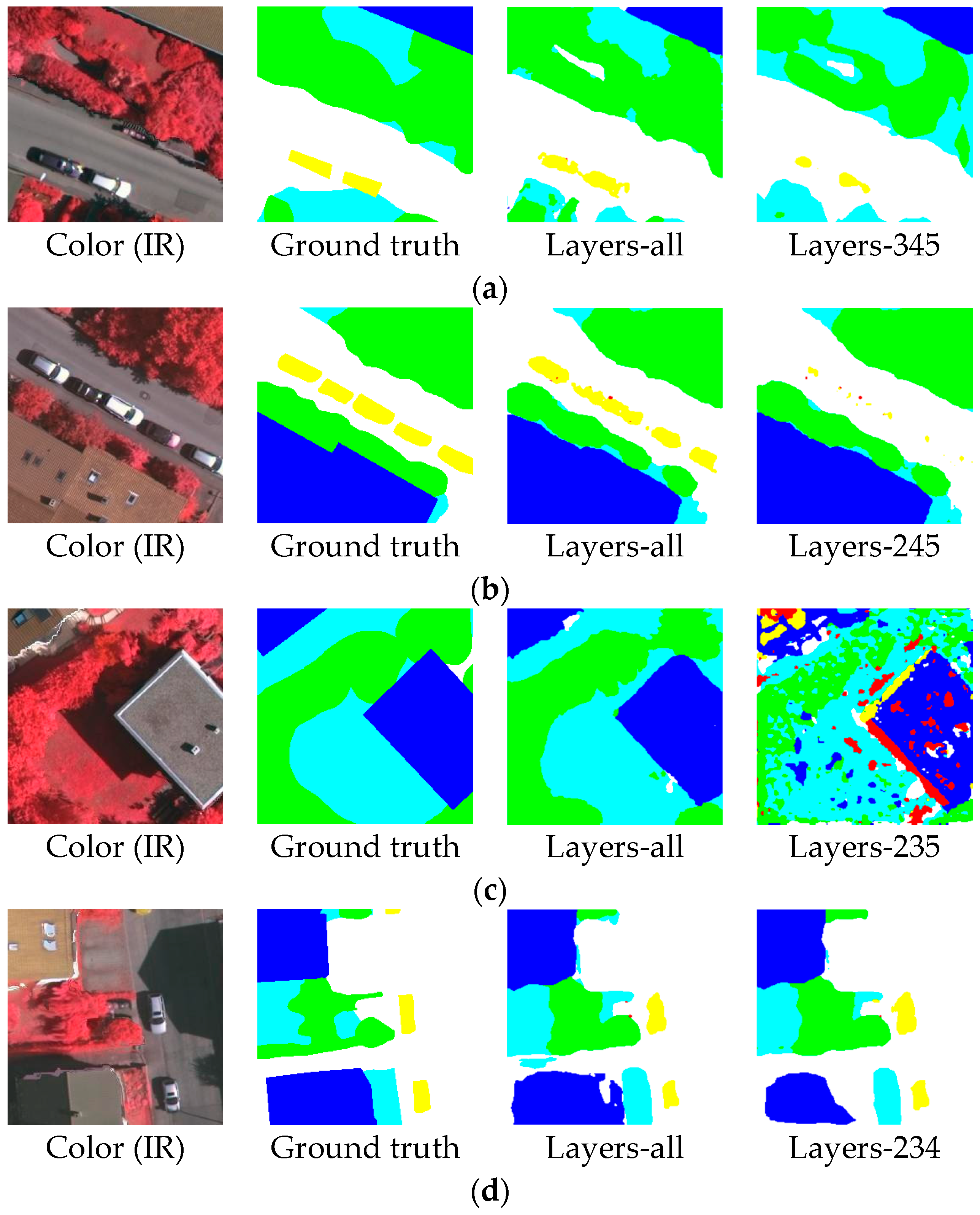

For imagery, we thus conclude that the shallower layers containing the high-resolution information, i.e., layers 2 and 3, are sensitive to small objects such as cars. As demonstrated in Figure 4a,b, when layer 2 or 3 is removed, cars or trees, respectively, can no longer be recognized. Deeper layers, i.e., layers 4 and 5, are sensitive to objects that have a more complex texture and occupy larger parts of the image, i.e., buildings and trees. Deeper layers also learn the discriminative parts of objects and link them together. As shown in Figure 4c, the result is noisy when layer 4 is removed, while adding layer 4 eliminates clutter. Without layer 5, parts of buildings are not detected, as shown in Figure 4d.

For the elevation data, layer 2 is most sensitive to impervious surfaces, with a DR of 94%, compared with 48% for low vegetation and 11% for trees. Layer 3 reacts more strongly to trees and impervious surfaces. The fourth layer is sensitive to trees and low vegetation. Layer 5 is essential for the correct classification of buildings: if the fifth layer is removed, the recall for buildings decreases from 0.68 to 0.1.

For the elevation data, we thus conclude that the shallower layers are relatively more sensitive to impervious surfaces and low vegetation than to other classes. As demonstrated in Figure 5a, after removing layer 2, impervious surfaces and low vegetation are no longer segmented. Layer 3 reacts strongly to trees; adding layer 3 improves the classification of trees, as shown in Figure 5b. Layer 4 is essential for the overall classification accuracy: as shown in Figure 5c, the results are very noisy when it is removed. When layer 5 is removed, most buildings are no longer detected (Figure 5d).

We conclude that the layers of each modality contribute differently to specific classes. Figure 3 shows that the DRs differ between the layers, i.e., the effectiveness of the features and the sensitivity to the classes vary from layer to layer. If the multi-modal data are fused too early, the layers might learn conflicting features, which can degrade the result. This explains why early fusion does not lead to a satisfying result.

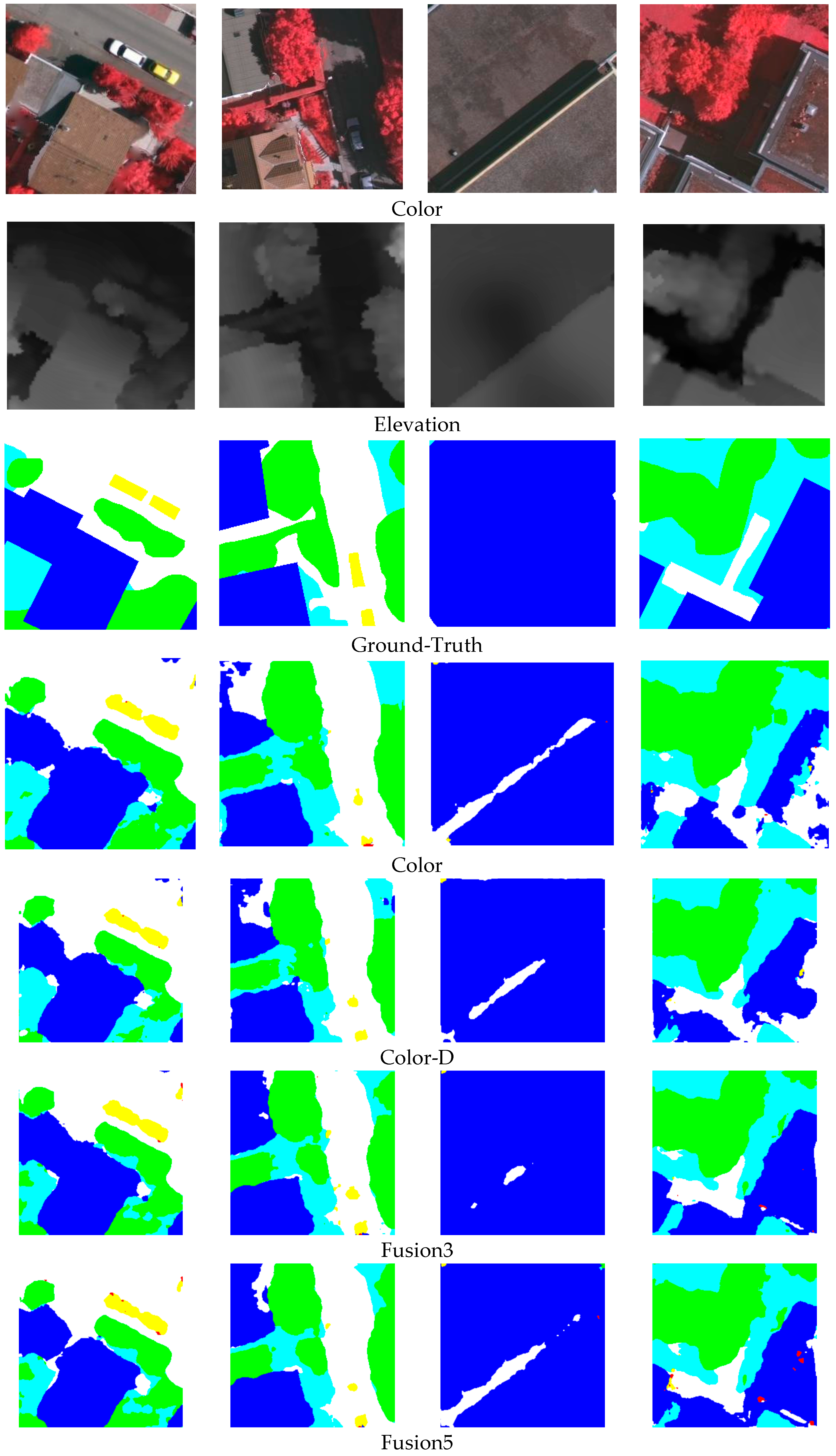

4.5. Fusion Model Results

Table 3 gives a detailed account of the overall accuracy of the fusion models for the Vaihingen data, and Figure 6 shows a visual comparison of the fusion models. Color-D denotes a simple four-channel input, i.e., the early fusion of color and elevation data. We denote each fusion model by “Fusion” followed by the number of the FCN layer at which the fusion takes place. Fusion3 obtains the highest OA of 82.69%, and all fusion models outperform the simple Color-D model. This confirms that the fusion nets improve urban scene classification compared to the early fusion of color and elevation data. We have learned from Section 4.4 that, for imagery, layer 2 is sensitive to trees, cars, and clutter/background, whereas for the elevation data it reacts most strongly to impervious surfaces and trees. Layers 3, 4, and 5 have a similar sensitivity for all classes.
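The naming scheme (Color-D versus “Fusion” followed by the fusion layer) can be illustrated with a toy two-stream encoder in NumPy. The sketch only shows the wiring and rests on assumptions not stated in this section: each "layer" is a stand-in stage (1 × 1 channel mixing, ReLU, 2 × 2 average pooling) rather than an actual FCN block, the elevation stream has its own stages up to the fusion layer, the streams are merged by element-wise addition, and the decoder is omitted. All function names are hypothetical.

```python
import numpy as np

def stage(x, weight):
    """Hypothetical encoder stage: 1x1 channel mixing, ReLU, 2x2 average pooling."""
    y = np.maximum(x @ weight, 0.0)  # (H, W, C_in) @ (C_in, C_out)
    h, w, c = y.shape
    return y.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def make_weights(in_channels, width=8, depth=5, seed=0):
    """Random weights for `depth` stages (illustration only, no training)."""
    rng = np.random.default_rng(seed)
    dims = [in_channels] + [width] * depth
    return [rng.standard_normal((a, b)) * 0.1 for a, b in zip(dims[:-1], dims[1:])]

def color_d(image, dsm, weights):
    """Early fusion: stack color and elevation into one four-channel input."""
    x = np.concatenate([image, dsm], axis=-1)
    for w in weights:
        x = stage(x, w)
    return x

def fusion_k(image, dsm, k, img_weights, dsm_weights):
    """Late fusion at layer k: both streams run separately up to layer k,
    the elevation features are added to the image features (assumed sum
    fusion), and the image stream continues alone afterwards."""
    x, d = image, dsm
    for layer, w in enumerate(img_weights, start=1):
        x = stage(x, w)
        if layer <= k:
            d = stage(d, dsm_weights[layer - 1])
            if layer == k:
                x = x + d
    return x
```

With k = 3 this corresponds to the wiring of a model named Fusion3 under the stated assumptions; Color-D differs only in merging the modalities before the first stage.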

In Table 4, we report the F1 scores for the individual classes. The fusion models achieve very competitive F1 scores, and Fusion3 outperforms all other models for five of the six classes. Comparing the results for the individual modalities with those of the fusion models shows that avoiding a particular conflicting layer (i.e., a layer with a different sensitivity to specific classes) can improve the result for those classes. This investigation thus helps to incorporate multi-modal data effectively into a single model instead of using ensemble models with higher complexity and computational cost.

Results for the overall accuracy of the fusion models on the Potsdam data are presented in Table 5. These results also demonstrate that the fusion models obtain a higher OA than the other models, and that later fusion produces a better OA. This further verifies that avoiding a particular conflicting layer can improve the result. In Table 6, we report the F1 scores for the individual classes. Compared to RGB and RGB-D, our fusion model substantially improves the F1 scores for buildings and low vegetation. This again demonstrates that the fusion model utilizes the multi-modal data efficiently.