Model of Color Parameters Variation and Correction in Relation to “Time-View” Image Acquisition Effects in Wheat Crop

Abstract

1. Introduction

2. Materials and Methods

2.1. Location and Experimental Conditions

2.2. Biological Material and Crop Status

2.3. Taking Photos and Digital Image Analysis

2.4. Experimental Data Analysis

3. Results

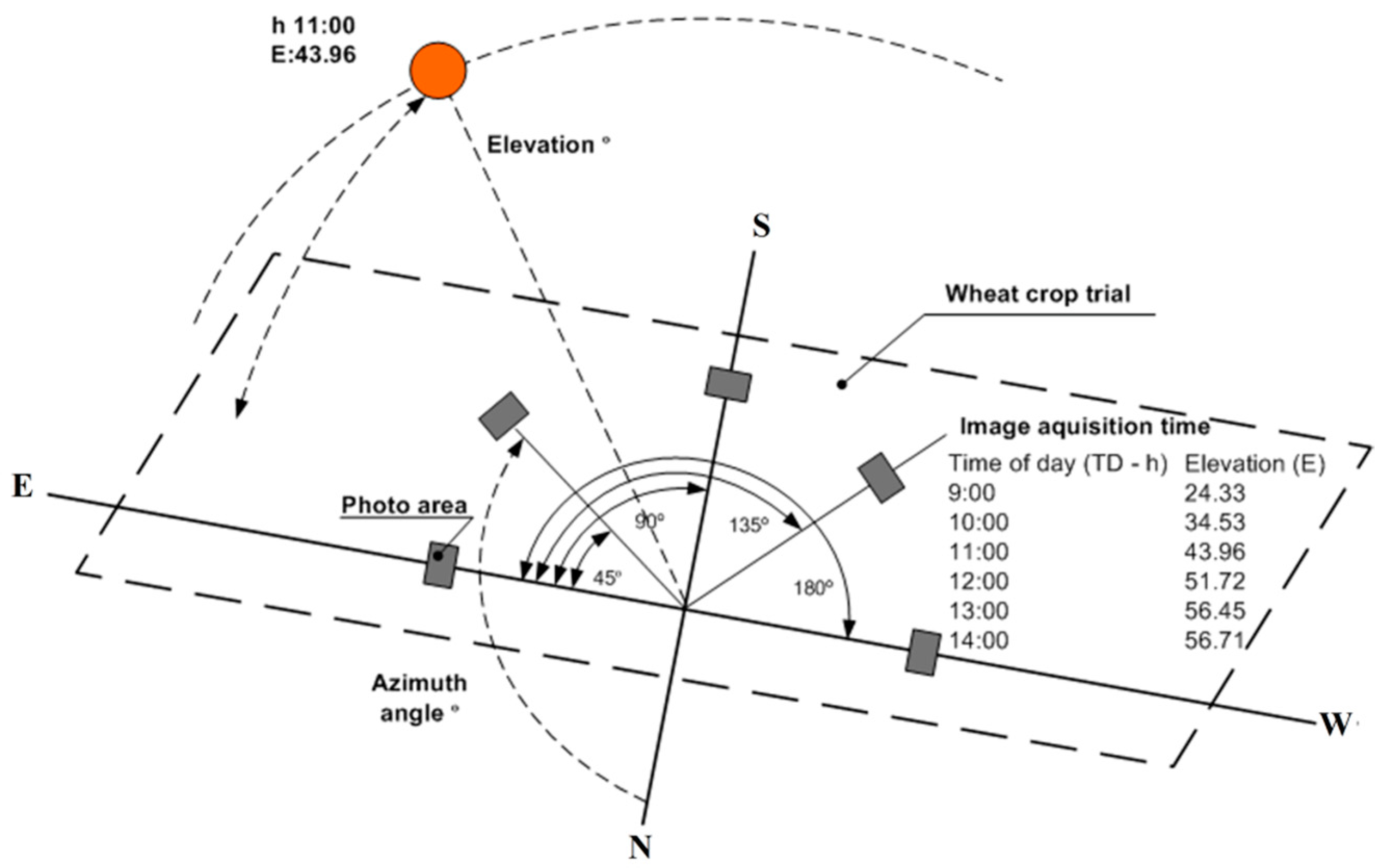

3.1. Image Acquisition

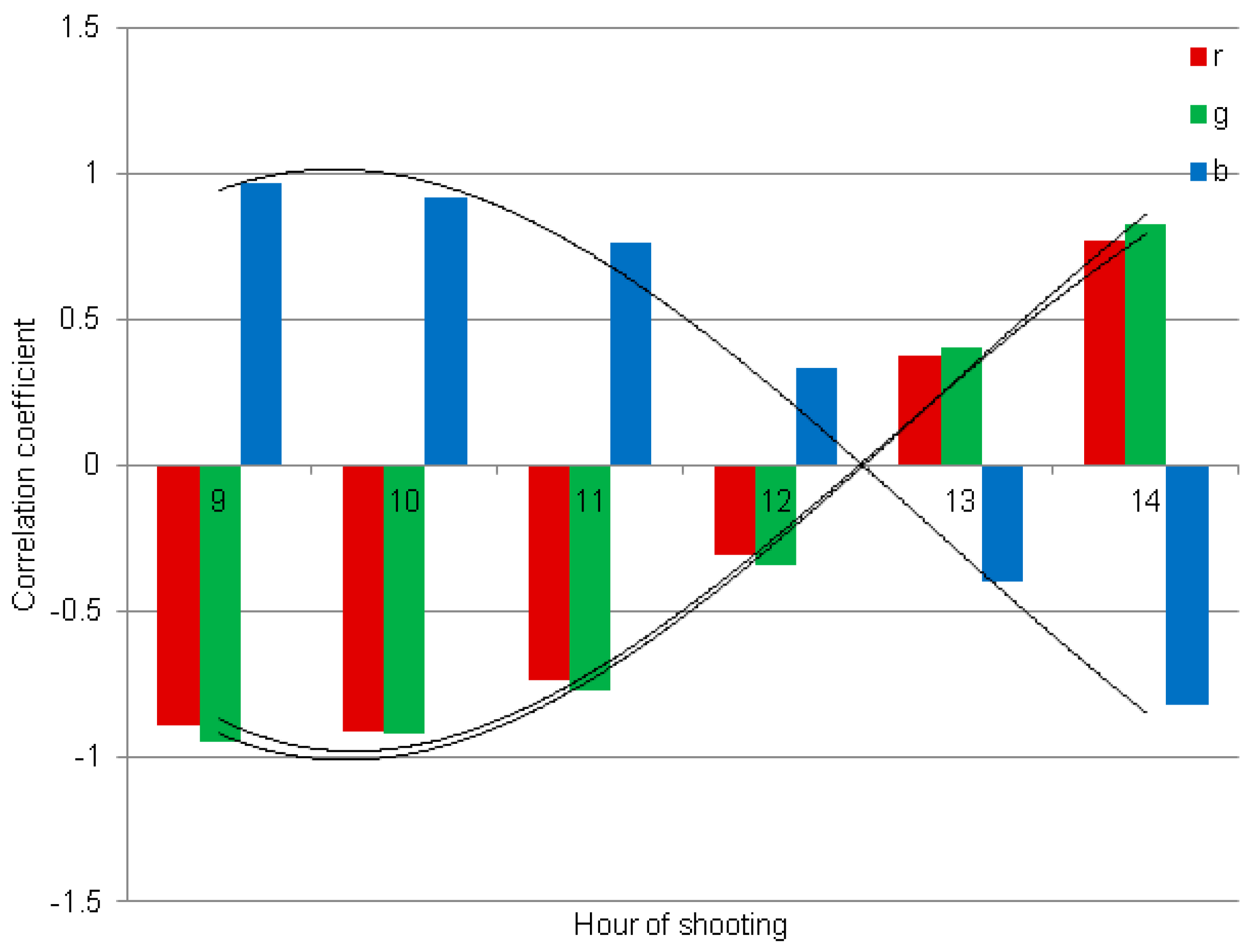

3.2. Normalized r, g, b and Time (t) Correlation at Different Angles (a)

3.3. Normalized r, g, b and Angle (a) Correlation at Different Times (t)

3.4. Corrections Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Time (GMT+3) (t) | Azimuth (°) | Elevation (°) | Angle (a) (°) | r | g | b | INT | NDI | DGCI |
|---|---|---|---|---|---|---|---|---|---|
| 9 | 96.63 | 24.51 | 0 | 0.330 | 0.530 | 0.139 | 73.570 | −0.229 | 0.439 |
| 9 | 96.63 | 24.51 | 45 | 0.341 | 0.517 | 0.143 | 64.567 | −0.203 | 0.452 |
| 9 | 96.63 | 24.51 | 90 | 0.323 | 0.486 | 0.191 | 72.433 | −0.198 | 0.513 |
| 9 | 96.63 | 24.51 | 135 | 0.311 | 0.447 | 0.242 | 82.303 | −0.177 | 0.596 |
| 9 | 96.63 | 24.51 | 180 | 0.293 | 0.455 | 0.253 | 64.137 | −0.214 | 0.676 |
| 10 | 108.47 | 34.53 | 0 | 0.369 | 0.486 | 0.146 | 88.147 | −0.136 | 0.383 |
| 10 | 108.47 | 34.53 | 45 | 0.378 | 0.499 | 0.123 | 93.887 | −0.136 | 0.339 |
| 10 | 108.47 | 34.53 | 90 | 0.360 | 0.474 | 0.165 | 102.493 | −0.135 | 0.382 |
| 10 | 108.47 | 34.53 | 135 | 0.339 | 0.434 | 0.227 | 116.137 | −0.122 | 0.466 |
| 10 | 108.47 | 34.53 | 180 | 0.331 | 0.410 | 0.259 | 124.887 | −0.105 | 0.521 |
| 11 | 122.96 | 43.96 | 0 | 0.350 | 0.471 | 0.179 | 95.430 | −0.146 | 0.422 |
| 11 | 122.96 | 43.96 | 45 | 0.369 | 0.498 | 0.132 | 102.140 | −0.147 | 0.340 |
| 11 | 122.96 | 43.96 | 90 | 0.360 | 0.486 | 0.154 | 103.690 | −0.148 | 0.371 |
| 11 | 122.96 | 43.96 | 135 | 0.337 | 0.450 | 0.212 | 107.593 | −0.142 | 0.459 |
| 11 | 122.96 | 43.96 | 180 | 0.325 | 0.415 | 0.259 | 120.507 | −0.120 | 0.536 |
| 12 | 141.51 | 51.73 | 0 | 0.341 | 0.452 | 0.208 | 103.833 | −0.138 | 0.459 |
| 12 | 141.51 | 51.73 | 45 | 0.358 | 0.487 | 0.155 | 93.317 | −0.152 | 0.391 |
| 12 | 141.51 | 51.73 | 90 | 0.365 | 0.494 | 0.142 | 100.000 | −0.149 | 0.359 |
| 12 | 141.51 | 51.73 | 135 | 0.350 | 0.471 | 0.179 | 107.750 | −0.145 | 0.399 |
| 12 | 141.51 | 51.73 | 180 | 0.332 | 0.432 | 0.237 | 114.517 | −0.130 | 0.490 |
| 13 | 165.24 | 56.46 | 0 | 0.329 | 0.429 | 0.243 | 107.740 | −0.130 | 0.521 |
| 13 | 165.24 | 56.46 | 45 | 0.344 | 0.465 | 0.191 | 100.110 | −0.149 | 0.437 |
| 13 | 165.24 | 56.46 | 90 | 0.358 | 0.486 | 0.156 | 102.200 | −0.149 | 0.374 |
| 13 | 165.24 | 56.46 | 135 | 0.359 | 0.484 | 0.157 | 109.273 | −0.147 | 0.364 |
| 13 | 165.24 | 56.46 | 180 | 0.337 | 0.450 | 0.213 | 109.933 | −0.142 | 0.452 |
| 14 | 191.95 | 56.73 | 0 | 0.333 | 0.415 | 0.252 | 118.900 | −0.109 | 0.510 |
| 14 | 191.95 | 56.73 | 45 | 0.340 | 0.447 | 0.213 | 101.363 | −0.134 | 0.472 |
| 14 | 191.95 | 56.73 | 90 | 0.357 | 0.471 | 0.172 | 101.930 | −0.136 | 0.394 |
| 14 | 191.95 | 56.73 | 135 | 0.372 | 0.492 | 0.137 | 105.383 | −0.137 | 0.334 |
| 14 | 191.95 | 56.73 | 180 | 0.354 | 0.469 | 0.176 | 104.350 | −0.138 | 0.391 |
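
The color parameters in the table above can be related to raw camera channels with a short sketch. The definitions used here are assumptions, since the text available here does not state them: normalized coordinates r = R/(R+G+B) (consistent with r + g + b summing to about 1 in each row), INT = (R+G+B)/3, and NDI = (r − g)/(r + g + 0.01), a form chosen because it reproduces the tabulated NDI values for the 9:00 rows to within about 0.002. The function names are hypothetical. DGCI is left out because it needs hue, saturation, and brightness values that are not listed here.

```python
def normalized_rgb(R, G, B):
    """Return chromatic coordinates (r, g, b) that sum to 1 (assumed definition)."""
    total = R + G + B
    if total == 0:
        raise ValueError("R + G + B must be non-zero")
    return R / total, G / total, B / total


def intensity(R, G, B):
    """Mean of the three raw channels (assumed definition of INT)."""
    return (R + G + B) / 3.0


def ndi(r, g):
    """Normalized difference index, assumed form (r - g) / (r + g + 0.01)."""
    return (r - g) / (r + g + 0.01)


# Normalized r and g from the 9:00 rows of the table (angles 0, 45, 90, 135, 180),
# paired with the tabulated NDI for comparison.
rows_9h = [
    (0.330, 0.530, -0.229),
    (0.341, 0.517, -0.203),
    (0.323, 0.486, -0.198),
    (0.311, 0.447, -0.177),
    (0.293, 0.455, -0.214),
]

for r, g, ndi_table in rows_9h:
    print(f"r={r:.3f} g={g:.3f} NDI computed={ndi(r, g):.3f} table={ndi_table:.3f}")
```

Small differences between computed and tabulated NDI are expected if the published values are means over several images rather than values derived from the rounded r and g shown.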

| Angle (a) (°) | r | g | b |
|---|---|---|---|
| 0 | −0.395 | −0.983 | 0.986 |
| 45 | −0.395 | −0.973 | 0.873 |
| 90 | 0.588 | −0.192 | −0.422 |
| 135 | 0.962 | 0.926 | −0.993 |
| 180 | 0.879 | 0.473 | −0.885 |
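
The coefficients in the table above appear to be Pearson correlations between acquisition time and each normalized channel at a fixed angle. As a check under that assumption, the sketch below recomputes the angle 0 row from the angle 0 values of r, g, and b in the first data table (hours 9 to 14). The helper name `pearson` is illustrative and not taken from the source.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


hours = [9, 10, 11, 12, 13, 14]
# Normalized channel values at angle 0, copied from the first data table.
r0 = [0.330, 0.369, 0.350, 0.341, 0.329, 0.333]
g0 = [0.530, 0.486, 0.471, 0.452, 0.429, 0.415]
b0 = [0.139, 0.146, 0.179, 0.208, 0.243, 0.252]

for name, values in (("r", r0), ("g", g0), ("b", b0)):
    print(name, round(pearson(hours, values), 3))
# Rounded results: r -0.395, g -0.983, b 0.986 (the angle 0 row above)
```

Applying the same function to the five angles at a fixed hour should give the angle correlations listed in the table that follows; for r at 9:00 it yields about −0.892.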

| Time (GMT+3) (t) | r | g | b |
|---|---|---|---|
| 9 | −0.892 | −0.949 | 0.968 |
| 10 | −0.915 | −0.920 | 0.915 |
| 11 | −0.737 | −0.773 | 0.759 |
| 12 | −0.313 | −0.348 | 0.334 |
| 13 | 0.375 | 0.402 | −0.398 |
| 14 | 0.767 | 0.828 | −0.822 |

| Hour/Angle | 0° | 15° | 30° | 45° | 60° | 75° | 90° |
|---|---|---|---|---|---|---|---|
| 9 | 1.059023 | 1.054631 | 1.053435 | 1.055414 | 1.060604 | 1.069099 | 1.081059 |
| 10 | 1.034768 | 1.026829 | 1.021986 | 1.020152 | 1.021295 | 1.025435 | 1.032645 |
| 11 | 1.028831 | 1.017307 | 1.008937 | 1.003572 | 1.001119 | 1.001534 | 1.004825 |
| 12 | 1.040586 | 1.025066 | 1.012925 | 1.003938 | 0.997946 | 0.994843 | 0.994577 |
| 13 | 1.071283 | 1.050918 | 1.03436 | 1.021286 | 1.011452 | 1.00468 | 1.000851 |
| 14 | 1.124396 | 1.097702 | 1.07554 | 1.057439 | 1.043036 | 1.032052 | 1.024285 |

| Hour/Angle | 0° | 15° | 30° | 45° | 60° | 75° | 90° |
|---|---|---|---|---|---|---|---|
| 9 | 1.005337 | 1.001366 | 1.00109 | 1.004503 | 1.011682 | 1.022789 | 1.038083 |
| 10 | 0.997142 | 0.9887 | 0.98394 | 0.982757 | 0.985126 | 0.991097 | 1.000804 |
| 11 | 1.009517 | 0.996261 | 0.986909 | 0.981253 | 0.979167 | 0.980606 | 0.985601 |
| 12 | 1.044049 | 1.025002 | 1.01037 | 0.999804 | 0.993064 | 0.989997 | 0.990537 |
| 13 | 1.105488 | 1.078756 | 1.057373 | 1.040781 | 1.02857 | 1.020449 | 1.016231 |
| 14 | 1.203548 | 1.165623 | 1.13472 | 1.109916 | 1.09052 | 1.076022 | 1.06606 |

| Hour/Angle | 0° | 15° | 30° | 45° | 60° | 75° | 90° |
|---|---|---|---|---|---|---|---|
| 9 | 1.211144 | 1.228411 | 1.219851 | 1.186519 | 1.132312 | 1.06291 | 0.984449 |
| 10 | 1.042227 | 1.083681 | 1.106936 | 1.109476 | 1.091017 | 1.053582 | 1.000974 |
| 11 | 0.907673 | 0.961616 | 1.004588 | 1.032773 | 1.043386 | 1.035318 | 1.009414 |
| 12 | 0.798488 | 0.858024 | 0.912503 | 0.958201 | 0.991399 | 1.009034 | 1.009351 |
| 13 | 0.7085 | 0.769565 | 0.830044 | 0.887002 | 0.936901 | 0.975969 | 1.000788 |
| 14 | 0.633347 | 0.693569 | 0.756407 | 0.819954 | 0.881494 | 0.937547 | 0.984149 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sala, F.; Popescu, C.A.; Herbei, M.V.; Rujescu, C. Model of Color Parameters Variation and Correction in Relation to “Time-View” Image Acquisition Effects in Wheat Crop. Sustainability 2020, 12, 2470. https://doi.org/10.3390/su12062470
