Radiometric Calibration for Multispectral Camera of Different Imaging Conditions Mounted on a UAV Platform

Abstract

1. Introduction

2. Materials and Methods

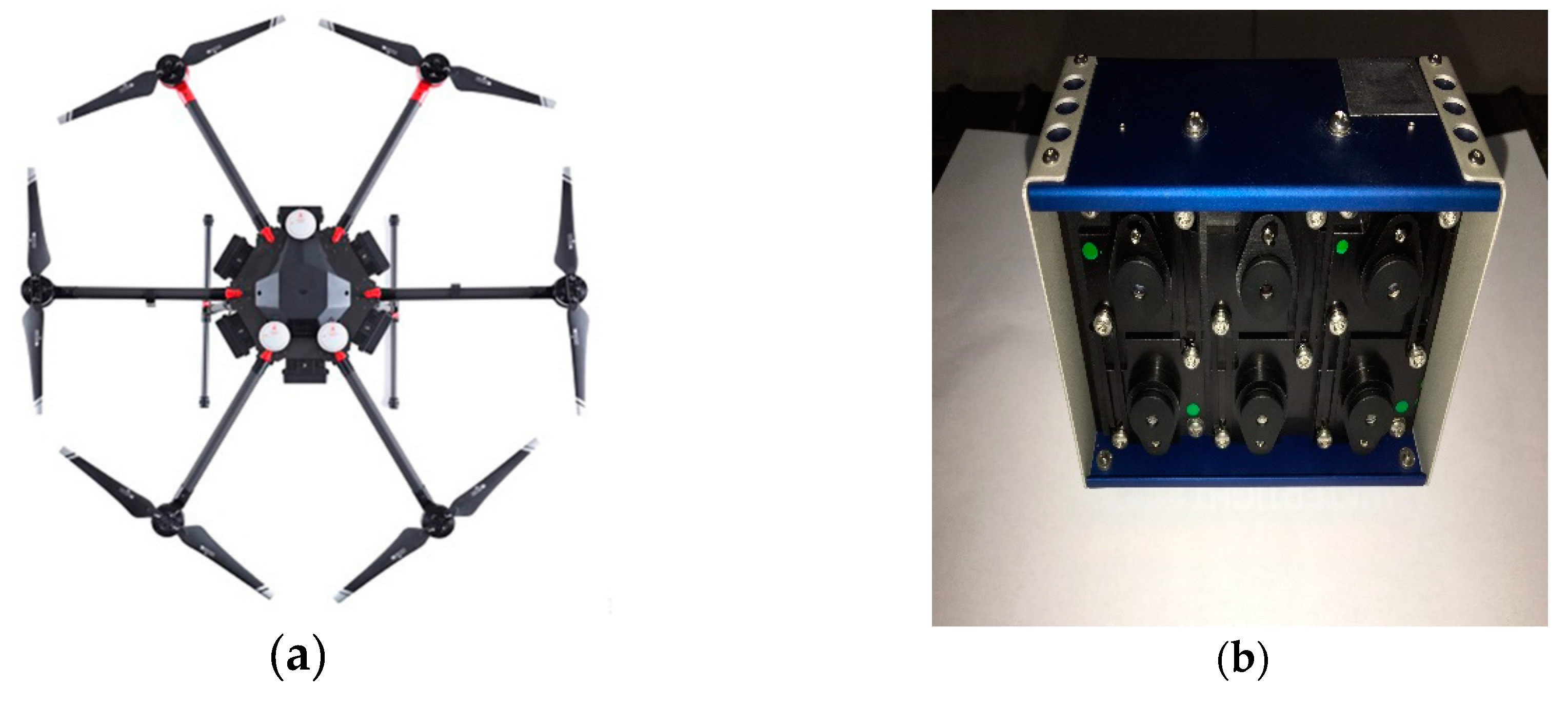

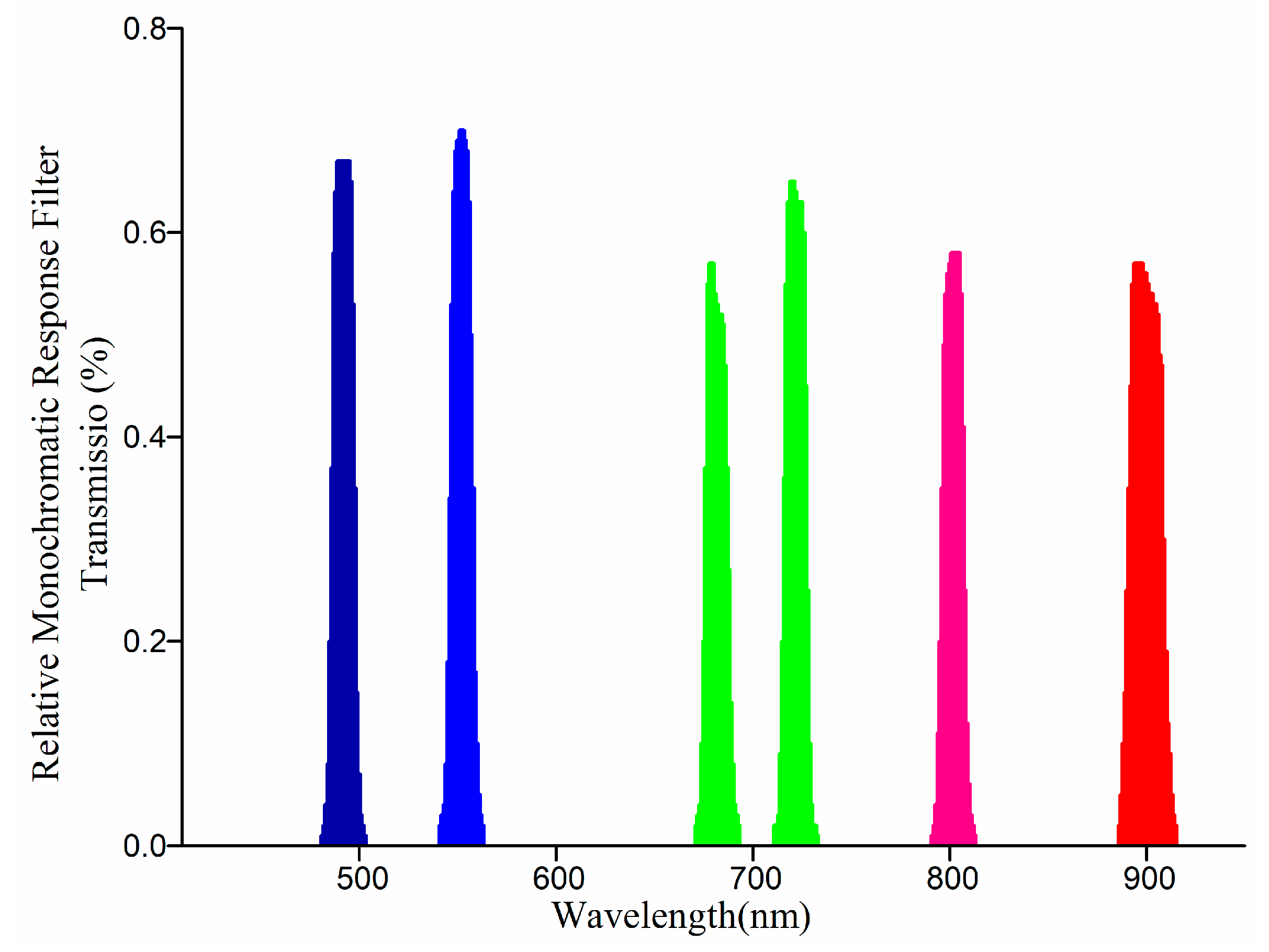

2.1. UAV, Sensors and Image Acquisition

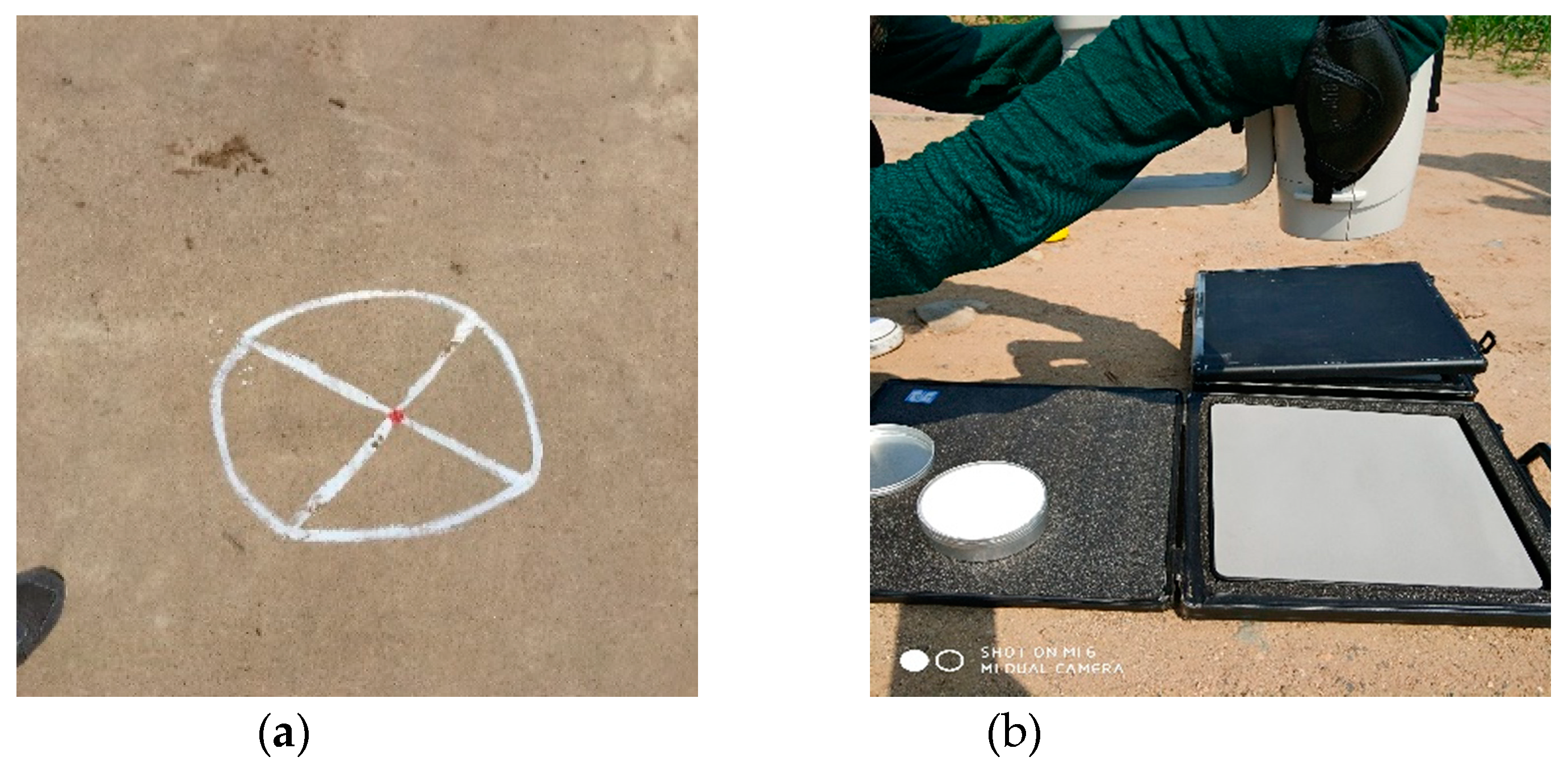

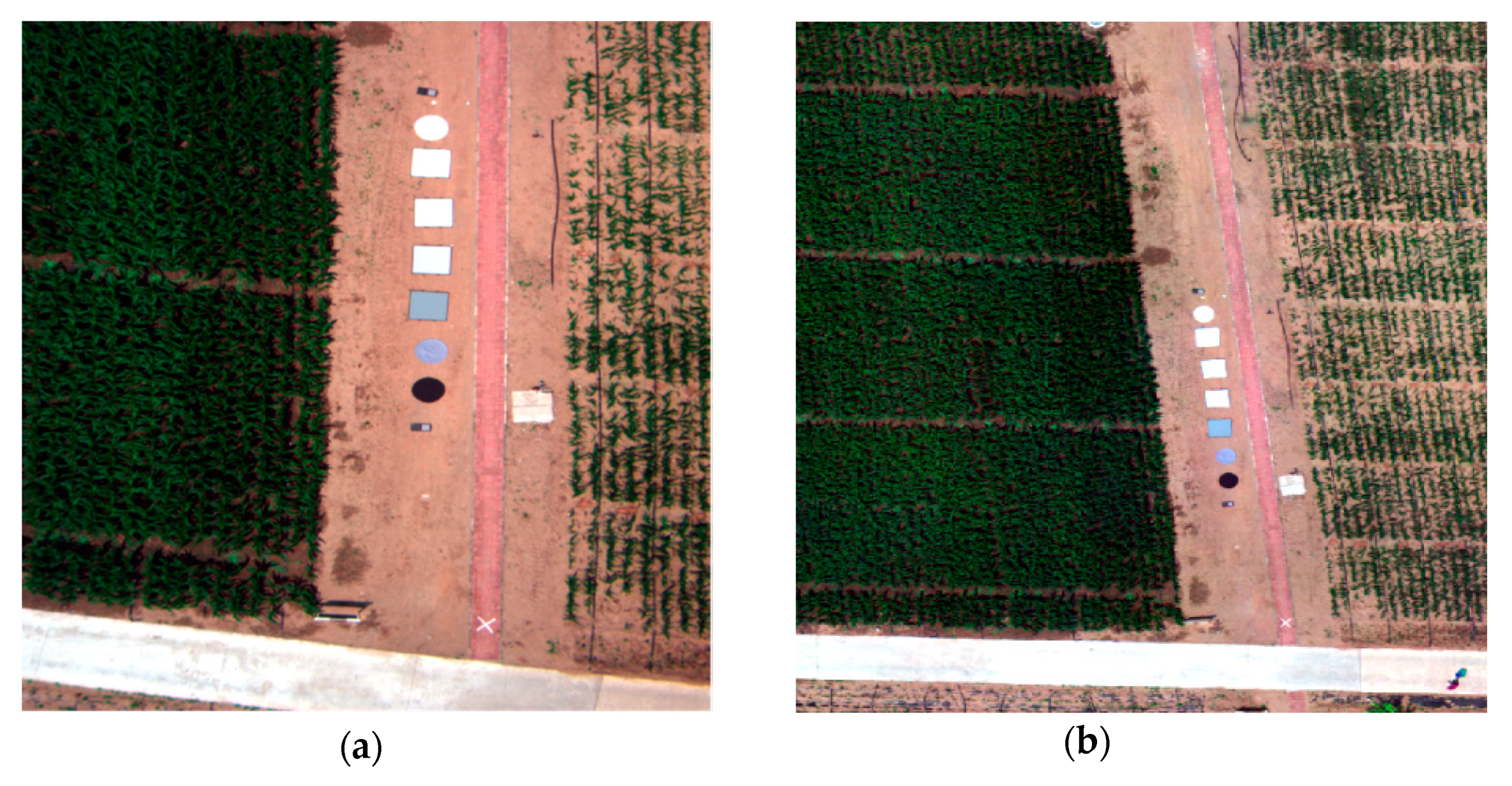

2.2. Ground Data Collection

2.3. Data Processing

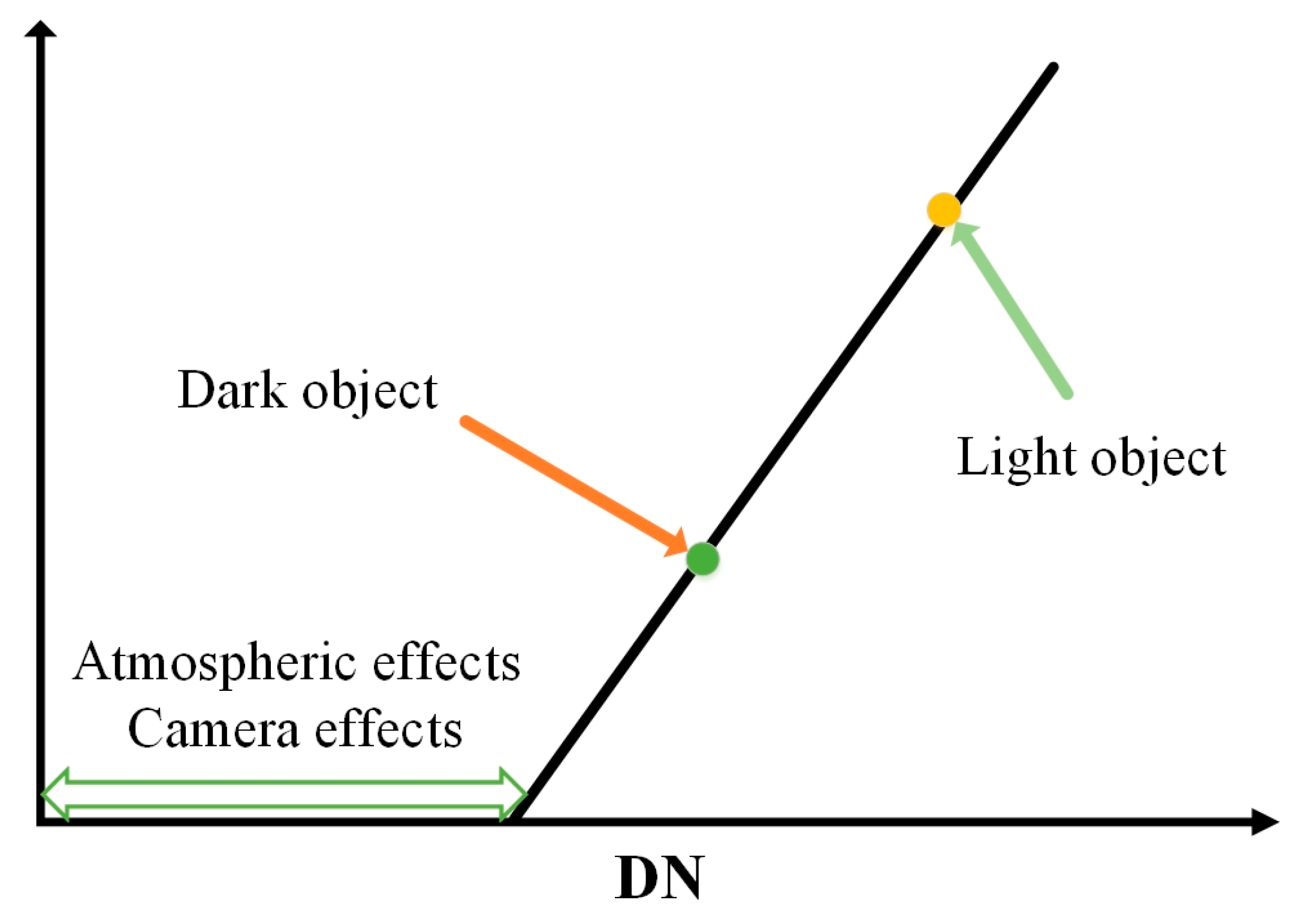

2.4. Methodology

3. Results

3.1. Single Images from Different Altitudes

3.1.1. The Optimal Selection of Calibration Targets

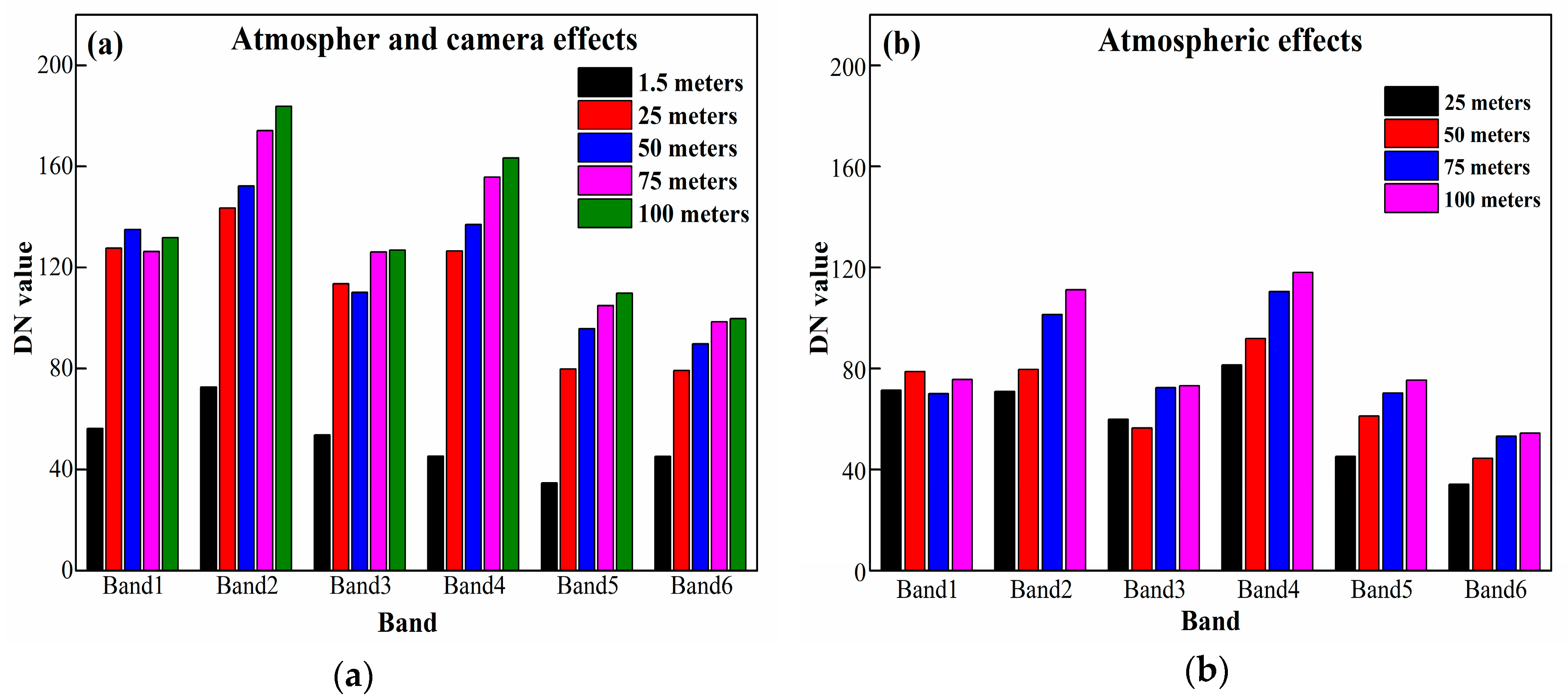

3.1.2. Atmospheric Effects

3.2. Mosaic Images of Different Weather Conditions

3.2.1. Effects of Mosaic to Radiometric Calibration

3.2.2. Test of Linear Regression Method in Different Weather Conditions

3.3. Universal Calibration Equation for Mini MCA 6

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Detail of Flight | 7 June | 7 June | 8 July | 14 August |
|---|---|---|---|---|
| Weather condition | Sunny | Sunny | Little cloud | Much cloud |
| Flight speed (m/s) | 0 | 3 | 3 | 3 |
| Number of images | 4 | 275 | 295 | 195 |
| Forward overlap (%) | 0 | 75 | 76 | 76 |
| Side overlap (%) | 0 | 75 | 80 | 80 |
| Altitude (m) | 1.5, 25, 50, 75, 100 | 50 | 50 | 50 |
| Flight lines | vertical | 9 | 12 | 12 |
| Flight time | 9 | 9.5 | 12.5 | 12.5 |
| Photo step | 60 | 2 | 2 | 2 |
| Image processing | Single | Mosaic | Mosaic | Mosaic |

| GCP | Longitude (°E) | Latitude (°N) | Height (m) |
|---|---|---|---|
| BASE | 115.8490315 | 39.46362014 | 30.920 |
| GCP1 | 115.8496587 | 39.46363159 | 30.819 |
| GCP2 | 115.8503528 | 39.46361153 | 30.651 |
| GCP3 | 115.8505838 | 39.46362654 | 30.634 |
| GCP4 | 115.8505074 | 39.46417053 | 30.794 |
| GCP5 | 115.8489003 | 39.46425329 | 30.894 |

| Combination | Calibration Targets | Validation Targets | V | RMSE |
|---|---|---|---|---|
| 1 | 5%, 60% | Sand, Grass | 0.1582 | 0.1328 |
| 2 | 5%, 20%, 60% | Sand, Grass | 0.1581 | 0.1306 |
| 3 | 5%, 30%, 60% | Sand, Grass | 0.1554 | 0.1311 |
| 4 | 5%, 40%, 60% | Sand, Grass | 0.1575 | 0.1314 |
| 5 | 5%, 20%, 30%, 60% | Sand, Grass | 0.1608 | 0.1372 |
| 6 | 5%, 20%, 40%, 60% | Sand, Grass | 0.1609 | 0.1304 |
| 7 | 5%, 30%, 40%, 60% | Sand, Grass | 0.1594 | 0.1285 |
| 8 | 5%, 20%, 30%, 40%, 60% | Sand, Grass | 0.1623 | 0.1302 |
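The table above compares combinations of calibration targets by the RMSE obtained on the sand and grass validation targets. As a minimal sketch of how such a comparison might be set up, the Python below fits a straight line through calibration targets by ordinary least squares and evaluates RMSE on validation targets. The image values and validation reflectances are hypothetical placeholders, not measurements from this study, and the function names `fit_line` and `rmse` are illustrative. The "V" column is not defined in this section, so it is not computed here.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def rmse(predicted, observed):
    """Root-mean-square error between two equally long sequences."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical image values for the 5%, 30% and 60% targets (combination 3).
target_values = [150.0, 500.0, 950.0]
target_reflectance = [0.05, 0.30, 0.60]
slope, intercept = fit_line(target_values, target_reflectance)

# Hypothetical validation samples standing in for sand and grass.
validation_values = [420.0, 260.0]
validation_reflectance = [0.25, 0.12]
predicted = [slope * v + intercept for v in validation_values]
validation_rmse = rmse(predicted, validation_reflectance)
print(f"slope={slope:.3e}, intercept={intercept:.3e}, RMSE={validation_rmse:.4f}")
```

Repeating the fit for each target combination and comparing the resulting RMSE values would reproduce the structure of the table, though not necessarily its numbers.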

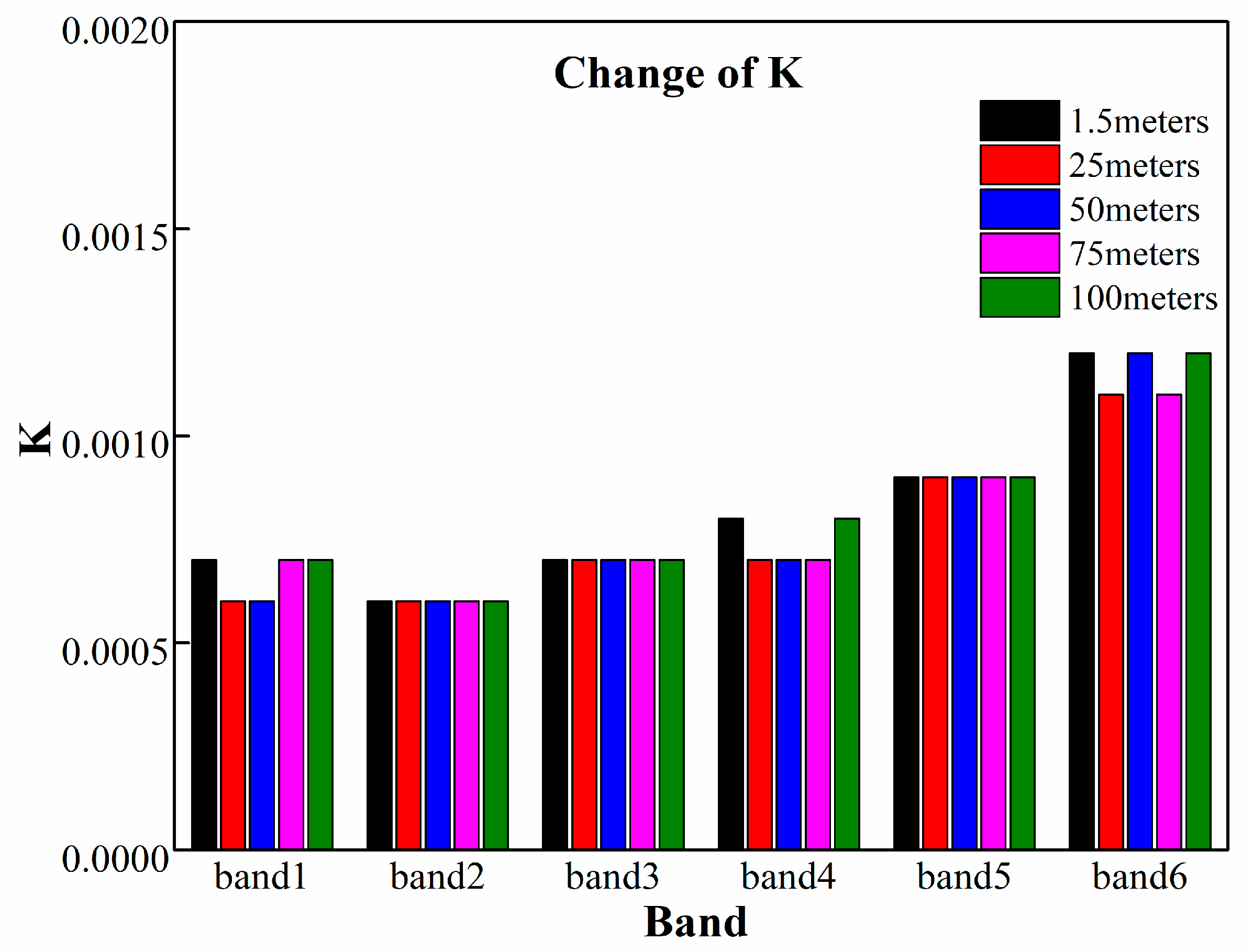

| Band | 1.5 m | 25 m | 50 m | 75 m | 100 m |
|---|---|---|---|---|---|
| band1 | y = 7.00E-4x − 3.90E-2 | y = 6.00E-4x − 7.70E-2 | y = 6.00E-4x − 8.10E-2 | y = 7.00E-4x − 8.84E-2 | y = 7.00E-4x − 9.23E-2 |
| band2 | y = 6.00E-4x − 4.30E-2 | y = 6.00E-4x − 8.60E-2 | y = 6.00E-4x − 9.10E-2 | y = 6.00E-4x − 1.04E-2 | y = 6.00E-4x − 1.10E-2 |
| band3 | y = 7.00E-4x − 3.70E-2 | y = 7.00E-4x − 7.90E-2 | y = 7.00E-4x − 7.71E-2 | y = 7.00E-4x − 8.83E-2 | y = 7.00E-4x − 8.88E-2 |
| band4 | y = 8.00E-4x − 3.60E-2 | y = 7.00E-4x − 8.80E-2 | y = 7.00E-4x − 9.69E-2 | y = 7.00E-4x − 1.09E-2 | y = 8.00E-4x − 1.14E-2 |
| band5 | y = 9.00E-4x − 3.10E-2 | y = 9.00E-4x − 7.10E-2 | y = 9.00E-4x − 8.61E-2 | y = 9.00E-4x − 9.43E-2 | y = 9.00E-4x − 9.89E-2 |
| band6 | y = 1.20E-3x − 5.40E-2 | y = 1.10E-3x − 8.70E-2 | y = 1.20E-3x − 1.08E-2 | y = 1.10E-3x − 1.08E-2 | y = 1.20E-3x − 1.20E-2 |
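Each cell in the table above is a linear equation of the form y = ax + b. As a rough illustration of how such an equation could be applied, the sketch below converts image values to calibrated values using the 50 m column, with coefficients copied as printed. It assumes that x is the raw image value (digital number) and y the surface reflectance, which this section does not state explicitly. The name `dn_to_reflectance` and the clipping to [0, 1] are illustrative choices, not part of the published method.

```python
# (slope, intercept) pairs copied from the 50 m column of the table above.
COEFFS_50M = {
    "band1": (6.00e-4, -8.10e-2),
    "band2": (6.00e-4, -9.10e-2),
    "band3": (7.00e-4, -7.71e-2),
    "band4": (7.00e-4, -9.69e-2),
    "band5": (9.00e-4, -8.61e-2),
    "band6": (1.20e-3, -1.08e-2),
}


def dn_to_reflectance(dn, band, coeffs=COEFFS_50M, clip=True):
    """Apply y = slope * dn + intercept for one band.

    Assumption: dn is the raw image value and the result is reflectance.
    Clipping to [0, 1] is an illustrative safeguard, not from the source.
    """
    slope, intercept = coeffs[band]
    value = slope * dn + intercept
    if clip:
        value = min(1.0, max(0.0, value))
    return value


# Hypothetical image values for three pixels in band 1.
pixel_row = [200.0, 600.0, 1000.0]
band1_reflectance = [dn_to_reflectance(dn, "band1") for dn in pixel_row]
print(band1_reflectance)
```

Swapping in another altitude's column only requires a different coefficient dictionary, which keeps the per-band equations separate from the code that applies them.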

| Atmospheric Effects (%) | Band1 | Band2 | Band3 | Band4 | Band5 | Band6 |
|---|---|---|---|---|---|---|
| 25 m | 0.0494 | 0.0616 | 0.0625 | 0.0582 | 0.0591 | 0.0762 |
| 50 m | 0.0614 | 0.0806 | 0.0885 | 0.0782 | 0.0881 | 0.1042 |
| 75 m | 0.0774 | 0.1006 | 0.1175 | 0.1012 | 0.1211 | 0.1302 |
| 100 m | 0.0934 | 0.1216 | 0.1475 | 0.1292 | 0.1551 | 0.1592 |

| Band | Single Image: Equation | Single Image: R² | Mosaic Image: Equation | Mosaic Image: R² |
|---|---|---|---|---|
| band1 | y = 2.60E-3x − 6.07E-2 | 0.9425 | y = 2.50E-3x − 6.10E-2 | 0.9291 |
| band2 | y = 2.40E-3x − 7.17E-2 | 0.9199 | y = 2.40E-3x − 6.49E-2 | 0.8899 |
| band3 | y = 2.70E-3x − 6.23E-2 | 0.9706 | y = 2.70E-3x − 6.05E-2 | 0.9470 |
| band4 | y = 2.90E-3x − 7.43E-2 | 0.9808 | y = 2.90E-3x − 7.70E-2 | 0.9753 |
| band5 | y = 3.80E-3x − 7.68E-2 | 0.9994 | y = 3.70E-3x − 7.32E-2 | 0.9999 |
| band6 | y = 4.90E-3x − 9.86E-2 | 0.9993 | y = 4.60E-3x − 8.64E-2 | 0.9998 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guo, Y.; Senthilnath, J.; Wu, W.; Zhang, X.; Zeng, Z.; Huang, H. Radiometric Calibration for Multispectral Camera of Different Imaging Conditions Mounted on a UAV Platform. Sustainability 2019, 11, 978. https://doi.org/10.3390/su11040978
