Abstract

This paper develops a bias compensation-based parameter and state estimation algorithm for the observability canonical state-space system corrupted by colored noise. The state-space system is transformed into a linear regressive model by eliminating the state variables. Based on the determination of the noise variance and noise model, a bias correction term is added to the least squares estimate, and the system parameters and states are computed interactively. The proposed algorithm generates unbiased parameter estimates. Two illustrative examples are given to show the effectiveness of the proposed algorithm.

1. Introduction

Mathematical models play an important role in adaptive control, online prediction and signal modeling [1,2,3,4]. The system models of physical plants can be constructed from first principles, but this approach does not work well when the physical mechanism of the true plant is not well understood [5,6]. In such cases, data-driven black-box or gray-box state-space modeling can be used to approximate the law of motion [7,8,9]. The advantages of state-space modeling are that the model maps the relationship from inputs to outputs, and the states reflect the internal dynamic behavior of the plant. The estimation of state-space models may involve both the unknown system parameters and the unmeasurable states [10,11]. It is well known that the Kalman filtering method gives the optimal state estimator based on the measurement data [12,13,14]. However, the Kalman filter generally assumes that the model parameters are known in advance and that the statistical characteristics of the measurement noise and process noise are specified, assumptions that are hard to satisfy in practice [15,16,17].

For the identification of state-space models, the classical methods are the prediction-error methods, which require adequate knowledge of the system structures and parameters [18,19], and the subspace identification methods, which ignore the consistency of system parameter estimates [20,21,22]. Since both the system states and the parameters are involved in the state-space model, their simultaneous estimation is a feasible choice [23,24]. In this respect, Pavelková and Kárný investigated the state and parameter estimation problem of the state-space model with bounded noise, providing a maximum estimator based on Bayesian theory [25]. By transforming the single-input multiple-output Hammerstein state-space model into a multivariable one, Ma et al. used the Kalman smoother to estimate the system states and the expectation maximization algorithm to compute parameter estimates [26]. Li et al. presented a maximum likelihood-based identification algorithm for the Wiener nonlinear system with a linear state-space subsystem [27]. Wang and Liu applied the recursive least squares algorithm to non-uniformly sampled state-space systems with available states, and combined the singular value decomposition with the hierarchical identification principle to identify the system when the states are unavailable [28]. These studies mainly focused on state-space systems with white noise.

In industrial processes, the observation data are often corrupted by colored noise [29,30,31]. It is well known that the parameter estimates generated by the recursive least squares algorithm are biased for systems with colored noise. To obtain unbiased estimates, the bias compensation method was proposed [32]. The basic idea is to compensate the biased least squares estimate by adding a correction term [33,34,35]. Zhang investigated the parameter estimation of the multiple-input single-output linear system with colored noise and introduced a stable prefilter to preprocess the input data for the purpose of obtaining the unbiased estimate [36]. Zheng extended the bias compensation method to the errors-in-variables output-error system, where the input was contaminated by white noise and the output errors were modeled by colored noise [37]. On the identification of state-space models with colored noise, Wang et al. applied the Kalman filter algorithm to estimate the system states and developed a filtering-based recursive least squares algorithm for observer canonical state-space systems with colored noise [38]. On the basis of the work in [38], this paper discusses the state and parameter identification of the state-space model with colored noise and presents a bias compensation-based identification algorithm for jointly estimating the system parameters and states. The main contributions of the paper are as follows.

- By using the bias compensation, this paper derives the identification model and achieves the unbiased parameter estimation for observability canonical state-space models with colored noise.

- By employing the interactive identification, this paper explores the relationship between the noise parameters and variance and the bias correction term and realizes the simultaneous estimation of the system parameters, noise parameters and system states.

The rest of this paper is organized as follows. Section 2 formulates the problem for the observability canonical state-space system and derives the identification model. Section 3 develops a bias compensation-based parameter and state estimation algorithm. Section 4 provides two illustrative examples to show that the proposed algorithm is effective. Finally, Section 5 offers some concluding remarks.

2. Problem Description and Identification Model

For narrative convenience, let us introduce some notation. The nomenclature is displayed in Table 1.

Table 1. The nomenclature for symbols.

Consider the following state-space system with colored noise,

where is the state vector, is the system input, is the system output, is a random noise and , and are the system parameter matrix and vectors, defined as:

The external disturbance can be fitted by a moving average process, an autoregressive process or an autoregressive moving average process. Without loss of generality, we consider as a moving average noise process:

where is the white noise with zero mean and variance . Assume that , and for . The objective is to estimate the parameters , and and the system state from the available input-output data .
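The moving average noise model has a simple autocorrelation structure: the autocorrelation vanishes for lags beyond the order of the moving average. The following Python sketch illustrates this numerically. The function names, the coefficient values in `d` and the variance `sigma2` are hypothetical choices made for illustration and are not taken from the paper.

```python
import numpy as np

def ma_autocorrelation(d, sigma2):
    """Theoretical autocorrelation r(0), ..., r(n_d) of the MA noise
    v(k) = e(k) + d_1 e(k-1) + ... + d_nd e(k-nd), with var(e) = sigma2."""
    c = np.concatenate(([1.0], np.asarray(d, dtype=float)))
    return np.array([sigma2 * np.dot(c[:len(c) - i], c[i:])
                     for i in range(len(c))])

def sample_autocorrelation(v, max_lag):
    """Sample autocorrelation of a zero-mean sequence for lags 0..max_lag."""
    n = len(v)
    return np.array([np.dot(v[:n - i], v[i:]) / n for i in range(max_lag + 1)])

# Hypothetical MA(2) noise model (values chosen only for illustration).
d = [0.5, -0.3]
sigma2 = 0.25

rng = np.random.default_rng(0)
e = np.sqrt(sigma2) * rng.standard_normal(200_000)       # white noise
v = np.convolve(e, np.concatenate(([1.0], d)))[:len(e)]  # colored (MA) noise

r_theory = ma_autocorrelation(d, sigma2)
r_sample = sample_autocorrelation(v, max_lag=4)

print("theoretical r(0..2):", r_theory)
print("sample      r(0..4):", r_sample)  # lags 3 and 4 should be close to zero
```

The sample values at lags above the moving average order should be close to zero, which is why only a finite number of autocorrelation terms enter the bias of the least squares estimate.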

Note that the system in Equations (1) and (2) is in observability canonical form, and the observability matrix T is an identity matrix, i.e.,
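The identity property of the observability matrix can be checked numerically. The sketch below assumes the usual observability canonical structure (ones on the superdiagonal of the system matrix, the negated coefficients in its last row, and an output vector equal to the first unit row vector). This layout, the function names and the numerical values are assumptions made for illustration, since the exact definitions are given by the paper's own equations.

```python
import numpy as np

def observability_canonical(a, b):
    """Build (A, b, c) in an assumed observability canonical layout:
    A has ones on the superdiagonal and last row [-a_n, ..., -a_1];
    c = [1, 0, ..., 0]."""
    a = np.asarray(a, dtype=float)
    n = len(a)
    A = np.zeros((n, n))
    A[:-1, 1:] = np.eye(n - 1)   # shift structure
    A[-1, :] = -a[::-1]          # last row holds the negated coefficients
    b_vec = np.asarray(b, dtype=float).reshape(n, 1)
    c = np.zeros((1, n))
    c[0, 0] = 1.0
    return A, b_vec, c

def observability_matrix(A, c):
    """Stack c, cA, ..., cA^(n-1) row by row."""
    rows = [c]
    for _ in range(A.shape[0] - 1):
        rows.append(rows[-1] @ A)
    return np.vstack(rows)

# Hypothetical third-order example.
A, b_vec, c = observability_canonical(a=[0.4, -0.2, 0.1], b=[1.0, 0.5, -0.3])
T = observability_matrix(A, c)
print(T)  # the 3 x 3 identity matrix under the assumed layout
```

Because T is the identity under this layout, no similarity transformation is needed to relate the states to shifted input-output data, which is what allows the states to be eliminated from the model.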

Define the parameter matrix M and the information vectors , and as:

Equation (10) can be rewritten as:

Define the parameter vectors , and and the information vectors and as:

Define the intermediate variable:

Equation (14) or (15) is the system identification model of the state-space system in Equation (1), where the information vector is composed of the observed data. Define the parameter vector and the information vector as:

From Equation (3), we have:

3. The Bias Compensation-Based Parameter and State Estimation Algorithm

The algorithm consists of two parts: the parameter estimation algorithm and the state estimation algorithm. The two parts are implemented in an interactive way.

3.1. The Parameter Estimation Algorithm

According to the identification model in Equation (15), define the cost function:

Using the least squares search and minimizing give the least squares estimate of the parameter vector :

Obviously, the least squares estimate in Equation (18) is a biased estimate since is correlated noise. Equation (18) can be rewritten as:

Dividing by k and taking limits on both sides give:

Note that in Equation (19) is the moving average noise, and is white noise with zero mean and variance and is independent of the inputs. From Equation (3), we have:

where is the autocorrelation function of the noise and when . Define the autocorrelation function vectors r and and the autocorrelation function matrices R, and Q as:

In fact, is a Toeplitz matrix consisting of n autocorrelation functions. Equation (19) can be rewritten as:

or:

It can be seen from Equation (25) that the bias of the least squares estimate can be eliminated by adding a compensation term . That is, the estimate is an unbiased estimate of the true parameter vector . Thus, the unbiased estimate can be computed by the following recursive expressions,

Equation (26) shows that the unbiased estimate is related to the estimates of R and r (i.e., the noise variance and the noise parameter vector ). The following derives their estimates based on the interactive identification.
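To make the compensation idea concrete, the following Python sketch compares the ordinary least squares estimate with a bias-compensated one on a simplified problem. It is a batch illustration under stated assumptions, not the recursive algorithm of this paper: the plant is a hypothetical second-order output-error model with first-order moving average output noise, and the noise autocorrelation is assumed to be known exactly, whereas the paper estimates the noise variance and noise parameters interactively. All names and numerical values are illustrative.

```python
import numpy as np

def simulate(theta, d, sigma, n_samples, rng):
    """Noise-free output x(k) = -a1 x(k-1) - a2 x(k-2) + b1 u(k-1) + b2 u(k-2),
    measured as y(k) = x(k) + v(k) with MA(1) noise v(k) = e(k) + d e(k-1)."""
    a1, a2, b1, b2 = theta
    u = rng.standard_normal(n_samples)
    e = sigma * rng.standard_normal(n_samples)
    v = e.copy()
    v[1:] += d * e[:-1]
    x = np.zeros(n_samples)
    for k in range(2, n_samples):
        x[k] = -a1 * x[k - 1] - a2 * x[k - 2] + b1 * u[k - 1] + b2 * u[k - 2]
    return u, x + v

def relative_error(est, true):
    return np.linalg.norm(est - true) / np.linalg.norm(true)

# Hypothetical true parameters [a1, a2, b1, b2] and noise model.
theta = np.array([-0.5, 0.3, 1.0, 0.5])
d, sigma = -0.8, 0.8

rng = np.random.default_rng(0)
u, y = simulate(theta, d, sigma, 20_000, rng)

# Regression y(k) = phi(k)^T theta + colored noise,
# with phi(k) = [-y(k-1), -y(k-2), u(k-1), u(k-2)].
Phi = np.column_stack([-y[1:-1], -y[:-2], u[1:-1], u[:-2]])
target = y[2:]
m = len(target)

# Ordinary least squares: biased, since phi(k) is correlated with the noise.
theta_ls = np.linalg.lstsq(Phi, target, rcond=None)[0]

# Noise autocorrelation, ASSUMED KNOWN here: r(0), r(1), and r(2) = 0.
r0, r1, r2 = sigma**2 * (1 + d**2), sigma**2 * d, 0.0
Lam = np.zeros((4, 4))
Lam[:2, :2] = [[r0, r1], [r1, r0]]    # Toeplitz block acting on [a1, a2]
r_vec = np.array([r1, r2, 0.0, 0.0])

# Bias-compensated estimate: remove the noise contribution from the
# normal equations, (Phi^T Phi / m - Lam) theta = Phi^T y / m + r_vec.
C = Phi.T @ Phi / m
g = Phi.T @ target / m
theta_bc = np.linalg.solve(C - Lam, g + r_vec)

err_ls = relative_error(theta_ls, theta)
err_bc = relative_error(theta_bc, theta)
print("LS estimate:", theta_ls, "relative error:", err_ls)
print("BC estimate:", theta_bc, "relative error:", err_bc)
```

In this sketch the correction depends only on the noise autocorrelation values, which mirrors the remark above that the unbiased estimate is tied to the estimates of R and r.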

Let the least squares residual . Using Equation (14) and the relation gives:

Thus, we have:

Let be the cost function at time k, and be the estimates of Q and at instant k, respectively. Then, the estimate of the noise variance can be computed by:

Note that Equations (30)–(34) involve the estimate of the noise parameter vector , which can be computed by the noise model in Equation (16). Let and be the estimates of and , respectively. Define the noise information vectors:

Thus, Equations (26)–(40) form the bias compensation-based parameter estimation (BC-PE) algorithm for identifying the parameter vector . The BC-PE algorithm is an interactive estimation process: we can compute the estimates and ; with these obtained variable estimates, we derive the estimate of R and r and then update the unbiased estimate using Equations (26)–(29).

3.2. The State Estimation Algorithm

Once the system parameter estimates and the noise estimates are obtained from the BC-PE algorithm, we can estimate the system state using Equation (11).

Then, the parameter matrix M can be expressed as:

According to the definitions of and , we have:

Once the estimate of the parameter vector is computed by the BC-PE algorithm in Equations (26)–(40), we can extract the estimates and of the parameter vectors and . Then, the estimates and can be computed by:

From Equation (11), we have:

Replacing M and with their corresponding estimates and , we have:

Equations (41)–(47) form the state estimation algorithm for the state-space system in Equation (1). Let , . By interactively implementing the parameter estimation algorithm in Equations (26)–(40) and the state estimation algorithm in Equations (41)–(47), the estimation of the system parameter vector and the state can be obtained.

The steps for implementing the bias compensation-based parameter and state estimation (BC-PSE) algorithm in Equations (26)–(47) for state-space systems with colored noise are listed as follows.

- Let , and set the initial values , , , , .

- Compute the state estimate using Equation (43).

- Let . If , increase k by one, and go to Step 2; otherwise, stop, and obtain the parameter estimation vector .

4. Examples

Example 1.

Consider the following state-space system with moving average noise,

The parameter vector to be estimated is:

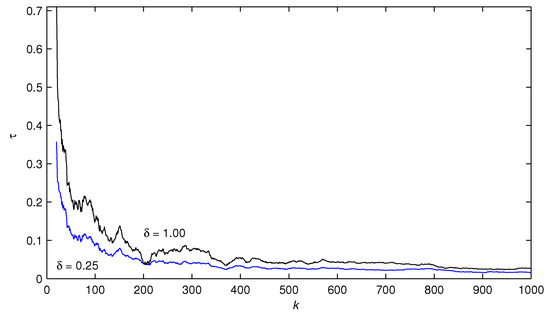

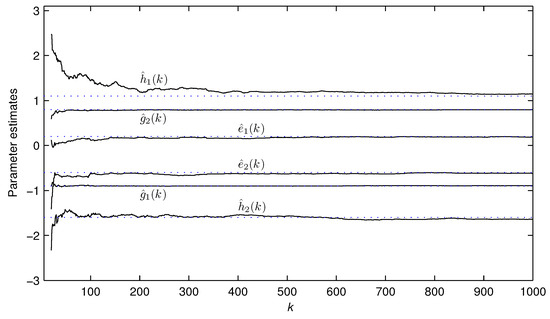

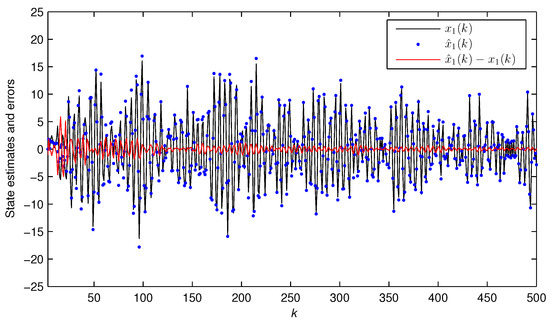

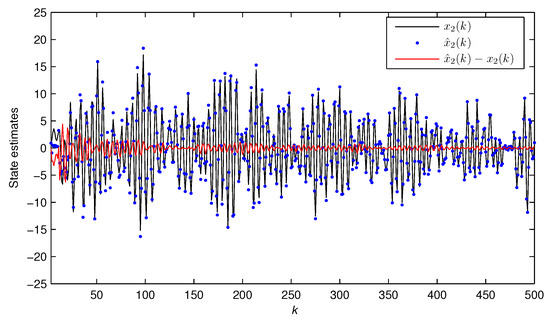

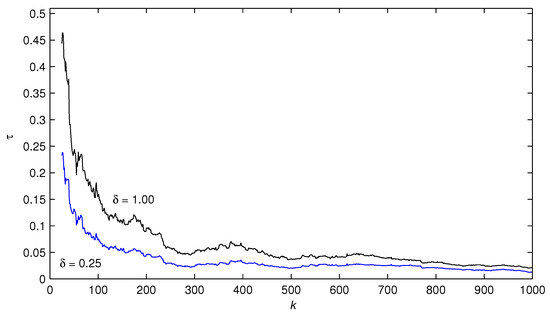

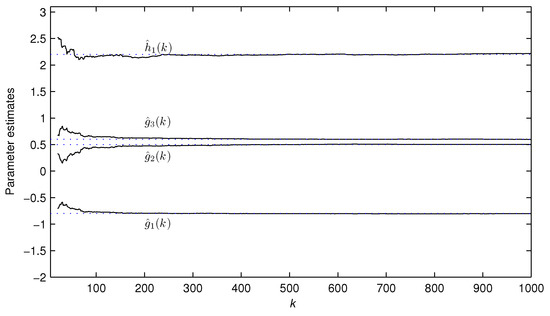

In simulation, the input is set as a persistent excitation sequence and as a zero-mean noise sequence with variance . Take the data length . Use the first 1000 data points and apply the BC-PSE algorithm to get the estimates of the parameter vector and the system state . The parameter estimates and their errors under different noise variances are displayed in Table 2 and Figure 1, where the estimation error . The parameter estimate versus k is plotted in Figure 2, and the state estimate computed by the BC-PSE algorithm is illustrated in Figure 3 and Figure 4.

Table 2. The bias compensation-based parameter estimates and errors for Example 1.

Figure 1. The estimation errors versus k with different noise variances for Example 1.

Figure 2. The parameter estimates versus k for Example 1.

Figure 3. The state and the estimated state versus k for Example 1 ().

Figure 4. The state and the estimated state versus k for Example 1 ().

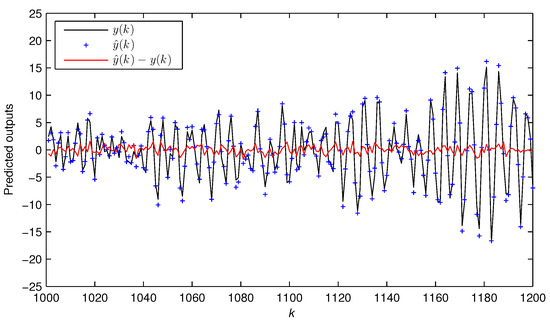

The remaining 200 data points from –1200 are taken to test the effectiveness of the estimated model. As a comparison, the curves of the estimated output and the predicted output are depicted in Figure 5.

Figure 5. The actual output and the estimated output versus k for Example 1.

Example 2.

Consider a third-order observability canonical state-space system,

The parameter vector to be estimated is:

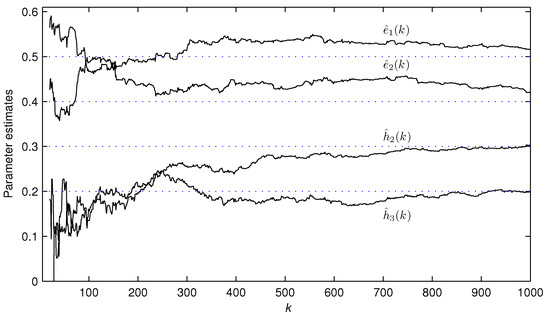

The simulation conditions are the same as those in Example 1. Table 3 and Figure 6 compare the bias compensation-based parameter estimates and errors under noise variances and . Figure 7 and Figure 8 depict the curves of the parameter estimates versus the instant k.

Table 3. The bias compensation-based parameter estimates and errors for Example 2.

Figure 6. The estimation errors versus k with different noise variances for Example 2.

Figure 7. The parameter estimates , , and versus k for Example 2.

Figure 8. The parameter estimates , , and versus k for Example 2.

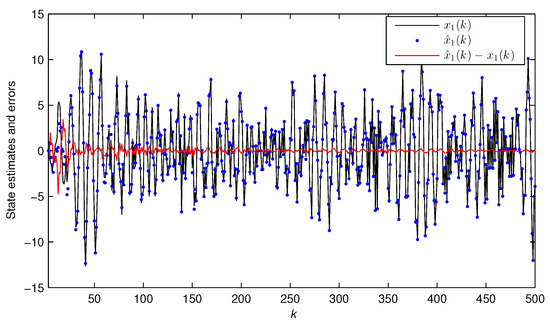

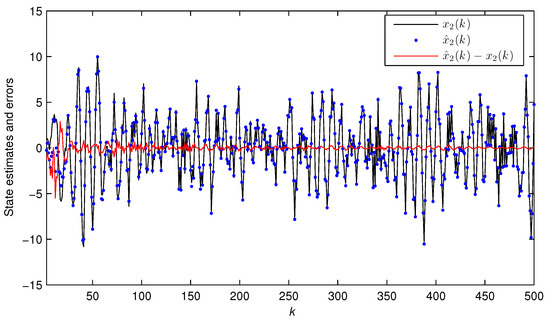

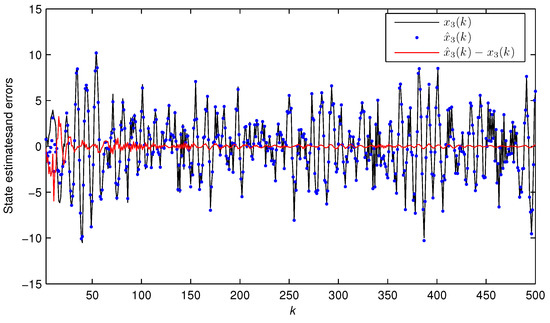

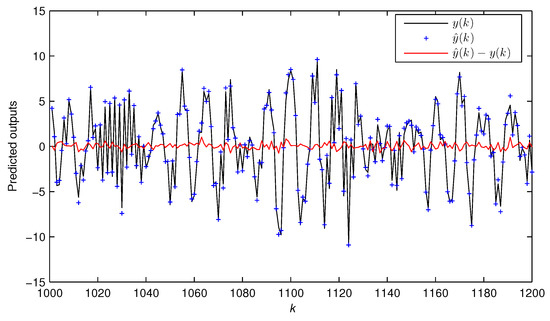

Figure 9, Figure 10 and Figure 11 plot the dynamics of three state estimates . Figure 12 describes the output comparison of with .

Figure 9. The true state and the estimated state versus k for Example 2 ().

Figure 10. The true state and the estimated state versus k for Example 2 ().

Figure 11. The true state and the estimated state versus k for Example 2 ().

Figure 12. The actual output and the estimated output versus k for Example 2.

From Tables 2 and 3 and Figures 1–12, we can draw some conclusions as follows.

5. Conclusions

This paper discusses the identification of the observability canonical state-space system with colored noise via the proposed bias compensation-based parameter and state estimation algorithm. The numerical results indicate that the algorithm can effectively estimate the system states and parameters. The advantage of this algorithm is that the parameter estimates are unbiased. The algorithm can be combined with other recursive algorithms, such as the multi-innovation algorithm, to study the identification of nonlinear state-space systems [39], dual-rate systems [40], signal modeling [41] and time series analysis [42,43].

Author Contributions

Joint work.

Funding

This work was supported by the Science and Technology Project of Henan Province (China, 182102210536, 182102210538), the Key Research Project of Henan Higher Education Institutions (China, 18A120003, 18A130001, 18B520036), the National Science Foundation of China (China, 61503122) and the Nanhu Scholars Program for Young Scholars of XYNU (China).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Na, J.; Yang, J.; Wu, X.; Guo, Y. Robust adaptive parameter estimation of sinusoidal signals. Automatica 2015, 53, 376–384.

- Kalafatis, A.D.; Wang, L.; Cluett, W.R. Identification of time-varying pH processes using sinusoidal signals. Automatica 2005, 41, 685–691.

- Na, J.; Yang, J.; Ren, X.M.; Guo, Y. Robust adaptive estimation of nonlinear system with time-varying parameters. Int. J. Adapt. Control Signal Process. 2015, 29, 1055–1072.

- Liu, S.Y.; Xu, L.; Ding, F. Iterative parameter estimation algorithms for dual-frequency signal models. Algorithms 2017, 10, 118.

- Na, J.; Mahyuddin, M.N.; Herrmann, G.; Ren, X.; Barber, P. Robust adaptive finite-time parameter estimation and control for robotic systems. Int. J. Robust Nonlinear Control 2015, 25, 3045–3071.

- Huang, W.; Ding, F. Coupled least squares identification algorithms for multivariate output-error systems. Algorithms 2017, 10, 12.

- Goos, J.; Pintelon, R. Continuous-time identification of periodically parameter-varying state space models. Automatica 2016, 71, 254–263.

- AlMutawa, J. Identification of errors-in-variables state space models with observation outliers based on minimum covariance determinant. J. Process Control 2009, 19, 879–887.

- Yuan, Y.; Zhang, H.; Wu, Y.; Zhu, T.; Ding, H. Bayesian learning-based model predictive vibration control for thin-walled workpiece machining processes. IEEE/ASME Trans. Mechatron. 2017, 22, 509–520.

- Ding, F. Combined state and least squares parameter estimation algorithms for dynamic systems. Appl. Math. Model. 2014, 38, 403–412.

- Ma, J.X.; Xiong, W.L.; Chen, J.; Ding, F. Hierarchical identification for multivariate Hammerstein systems by using the modified Kalman filter. IET Control Theory Appl. 2017, 11, 857–869.

- Fatehi, A.; Huang, B. Kalman filtering approach to multi-rate information fusion in the presence of irregular sampling rate and variable measurement delay. J. Process Control 2017, 53, 15–25.

- Zhao, S.; Shmaliy, Y.S.; Liu, F. Fast Kalman-like optimal unbiased FIR filtering with applications. IEEE Trans. Signal Process. 2016, 64, 2284–2297.

- Zhou, Z.P.; Liu, X.F. State and fault estimation of sandwich systems with hysteresis. Int. J. Robust Nonlinear Control 2018, 28, 3974–3986.

- Zhao, S.; Huang, B.; Liu, F. Linear optimal unbiased filter for time-variant systems without apriori information on initial condition. IEEE Trans. Autom. Control 2017, 62, 882–887.

- Zhao, S.; Shmaliy, Y.S.; Liu, F. On the iterative computation of error matrix in unbiased FIR filtering. IEEE Signal Process. Lett. 2017, 24, 555–558.

- Erazo, K.; Nagarajaiah, S. An offline approach for output-only Bayesian identification of stochastic nonlinear systems using unscented Kalman filtering. J. Sound Vib. 2017, 397, 222–240.

- Verhaegen, M.; Verdult, V. Filtering and System Identification: A Least Squares Approach; Cambridge University Press: Cambridge, UK, 2007.

- Ljung, L. System Identification: Theory for the User, 2nd ed.; Prentice Hall: Englewood Cliffs, NJ, USA, 1999.

- Yu, C.P.; Ljung, L.; Verhaegen, M. Identification of structured state-space models. Automatica 2018, 90, 54–61.

- Naitali, A.; Giri, F. Persistent excitation by deterministic signals for subspace parametric identification of MISO Hammerstein systems. IEEE Trans. Autom. Control 2016, 61, 258–263.

- Ase, H.; Katayama, T. A subspace-based identification of Wiener-Hammerstein benchmark model. Control Eng. Pract. 2015, 44, 126–137.

- Xu, L.; Ding, F.; Gu, Y.; Alsaedi, A.; Hayat, T. A multi-innovation state and parameter estimation algorithm for a state space system with d-step state-delay. Signal Process. 2017, 140, 97–103.

- Zhang, X.; Ding, F.; Xu, L.; Yang, E.F. State filtering-based least squares parameter estimation for bilinear systems using the hierarchical identification principle. IET Control Theory Appl. 2018, 12, 1704–1713.

- Pavelkova, L.; Karny, M. State and parameter estimation of state-space model with entry-wise correlated uniform noise. Int. J. Adapt. Control Signal Process. 2014, 28, 1189–1205.

- Ma, J.X.; Wu, O.Y.; Huang, B.; Ding, F. Expectation maximization estimation for a class of input nonlinear state space systems by using the Kalman smoother. Signal Process. 2018, 145, 295–303.

- Li, J.H.; Zheng, W.X.; Gu, J.P.; Hua, L. A recursive identification algorithm for Wiener nonlinear systems with linear state-space subsystem. Circuits Syst. Signal Process. 2018, 37, 2374–2393.

- Wang, H.W.; Liu, T. Recursive state-space model identification of non-uniformly sampled systems using singular value decomposition. Chin. J. Chem. Eng. 2014, 22, 1268–1273.

- Ding, J.L. Data filtering based recursive and iterative least squares algorithms for parameter estimation of multi-input output systems. Algorithms 2016, 9, 49.

- Yu, C.P.; You, K.Y.; Xie, L.H. Quantized identification of ARMA systems with colored measurement noise. Automatica 2016, 66, 101–108.

- Jafari, M.; Salimifard, M.; Dehghani, M. Identification of multivariable nonlinear systems in the presence of colored noises using iterative hierarchical least squares algorithm. ISA Trans. 2014, 53, 1243–1252.

- Sagara, S.; Wada, K. On-line modified least-squares parameter estimation of linear discrete dynamic systems. Int. J. Control 1977, 25, 329–343.

- Mejari, M.; Piga, D.; Bemporad, A. A bias-correction method for closed-loop identification of linear parameter-varying systems. Automatica 2018, 87, 128–141.

- Ding, J.; Ding, F. Bias compensation based parameter estimation for output error moving average systems. Int. J. Adapt. Control Signal Process. 2011, 25, 1100–1111.

- Diversi, R. Bias-eliminating least-squares identification of errors-in-variables models with mutually correlated noises. Int. J. Adapt. Control Signal Process. 2013, 27, 915–924.

- Zhang, Y. Unbiased identification of a class of multi-input single-output systems with correlated disturbances using bias compensation methods. Math. Comput. Model. 2011, 53, 1810–1819.

- Zheng, W.X. A bias correction method for identification of linear dynamic errors-in-variables models. IEEE Trans. Autom. Control 2002, 47, 1142–1147.

- Wang, X.H.; Ding, F.; Alsaedi, A.; Hayat, T. Filtering based parameter estimation for observer canonical state space systems with colored noise. J. Frankl. Inst. 2017, 354, 593–609.

- Pan, W.; Yuan, Y.; Goncalves, J.; Stan, G.B. A sparse Bayesian approach to the identification of nonlinear state-space systems. IEEE Trans. Autom. Control 2016, 61, 182–187.

- Yang, X.Q.; Yang, X.B. Local identification of LPV dual-rate system with random measurement delays. IEEE Trans. Ind. Electron. 2018, 65, 1499–1507.

- Xu, L.; Xiong, W.L.; Alsaedi, A.; Hayat, T. Hierarchical parameter estimation for the frequency response based on the dynamical window data. Int. J. Control Autom. Syst. 2018, 16, 1756–1764.

- Gan, M.; Chen, C.L.P.; Chen, G.Y.; Chen, L. On some separated algorithms for separable nonlinear squares problems. IEEE Trans. Cybern. 2018, 48, 2866–2874.

- Chen, G.Y.; Gan, M.; Chen, G.L. Generalized exponential autoregressive models for nonlinear time series: Stationarity, estimation and applications. Inf. Sci. 2018, 438, 46–57.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).