A Review of Classification Problems and Algorithms in Renewable Energy Applications

Abstract

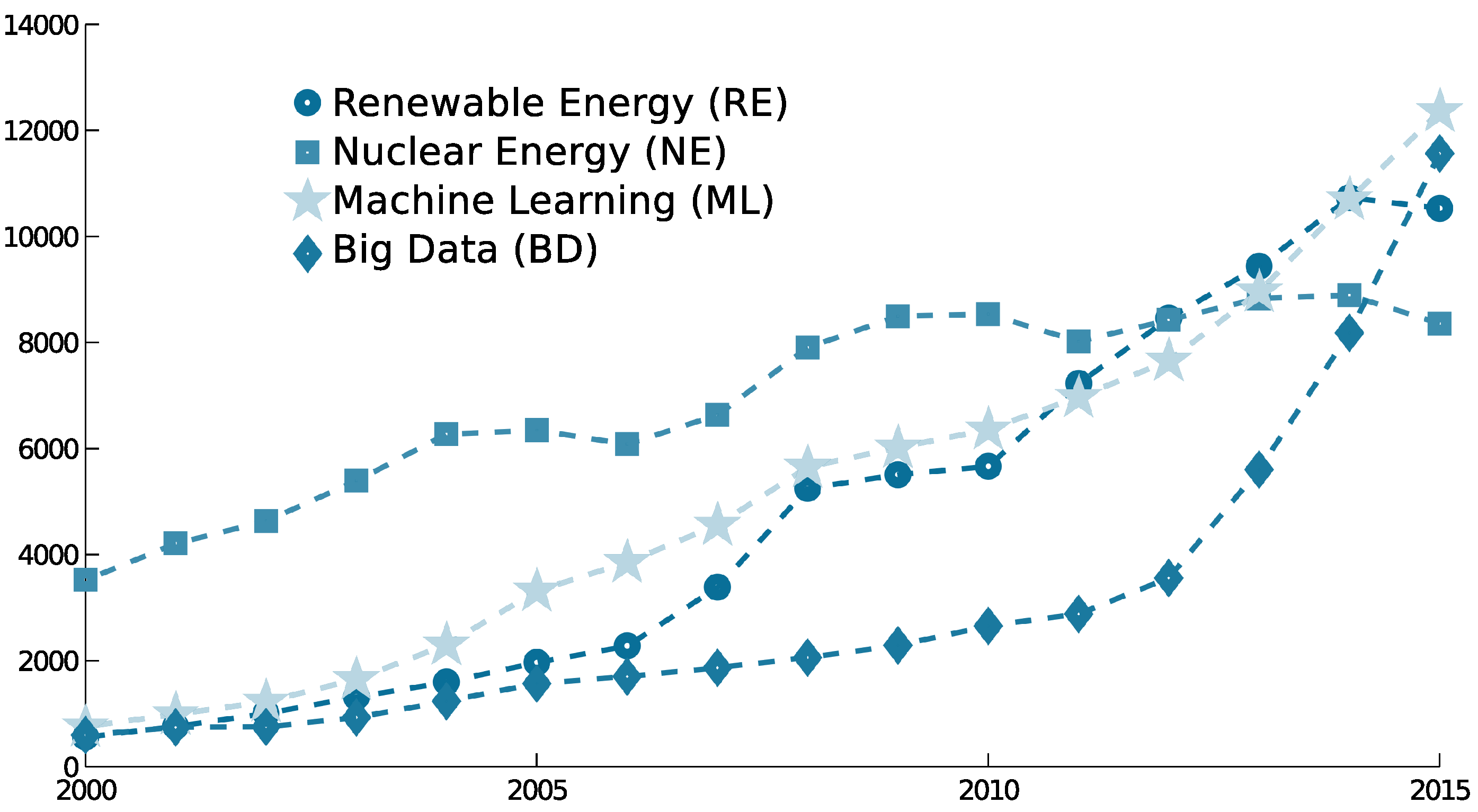

:1. Introduction

2. Classification Problems: An Important Part of Machine Learning (ML)

Performance Evaluation

- The accuracy () is the percentage of correctly-classified patterns, and it can be defined using the confusion matrix:where values range from zero to one, are the elements of the diagonal of the confusion matrix and is a Boolean test, which is one if the inner condition is true and zero otherwise.

- The Receiver Operating Characteristic (ROC) curve [22], which measures the misclassification rate of one class and the accuracy of the other. The standard ROC perspective is limited to classification problems with two classes, with the ROC curve and the area under the ROC curve being used to enhance the quality of binary classifiers [23].

- The Minimum Sensitivity () [24], which corresponds to the lowest percentage of patterns correctly predicted as belonging to each class, with respect to the total number of examples in the corresponding class:where is the sensitivity of the q-th class and values range from zero to one.

- The Mean Absolute Error () is the average absolute deviation of the predicted class from the true class (measuring the distance as the number of categories of the ordinal scale) [25]:where is the distance between the true () and the predicted () ranks and is the position of a label in the ordinal rank. values range from zero to .

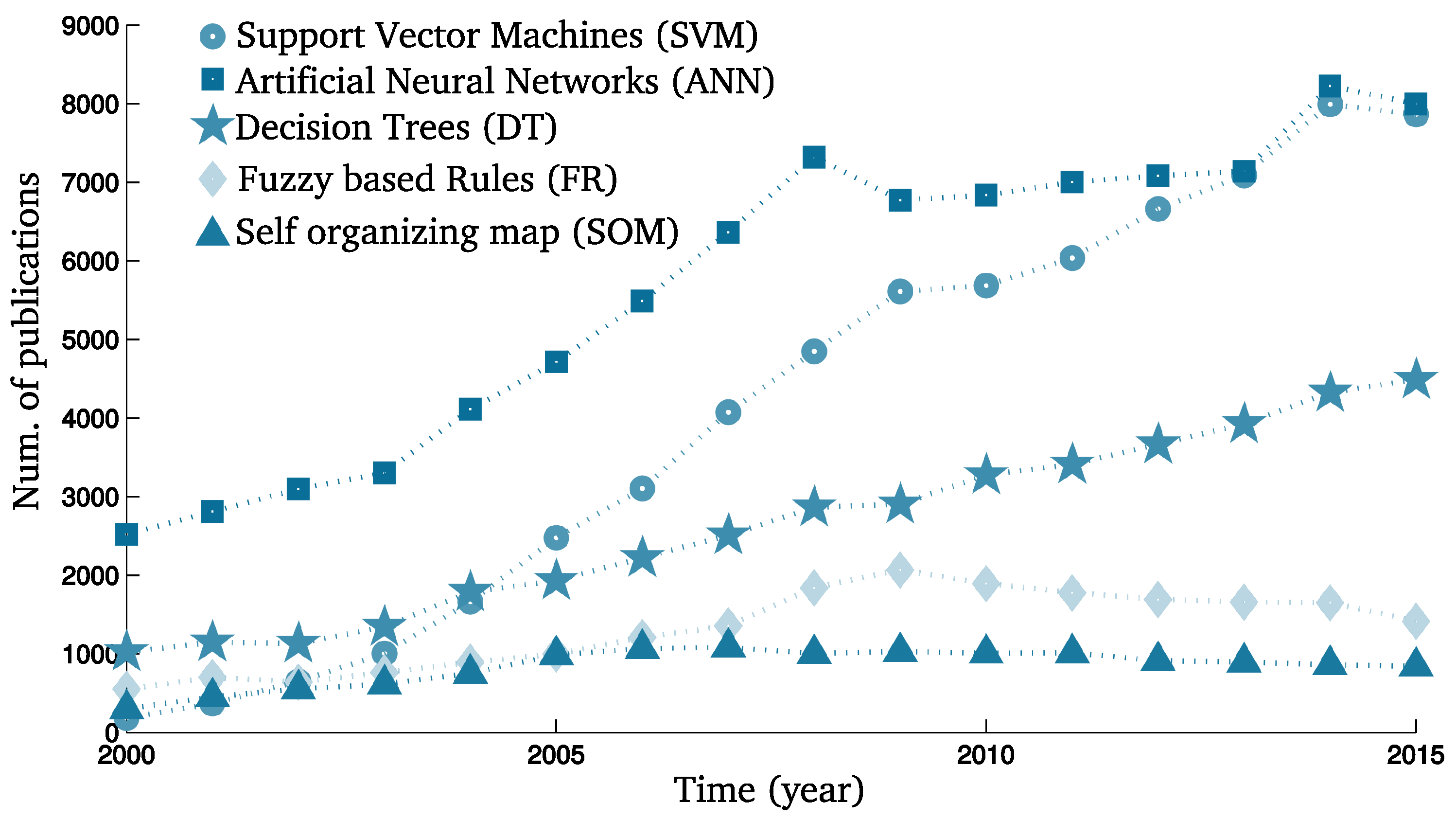

3. Main Algorithms Solving Classification Problems

3.1. Logistic Regression

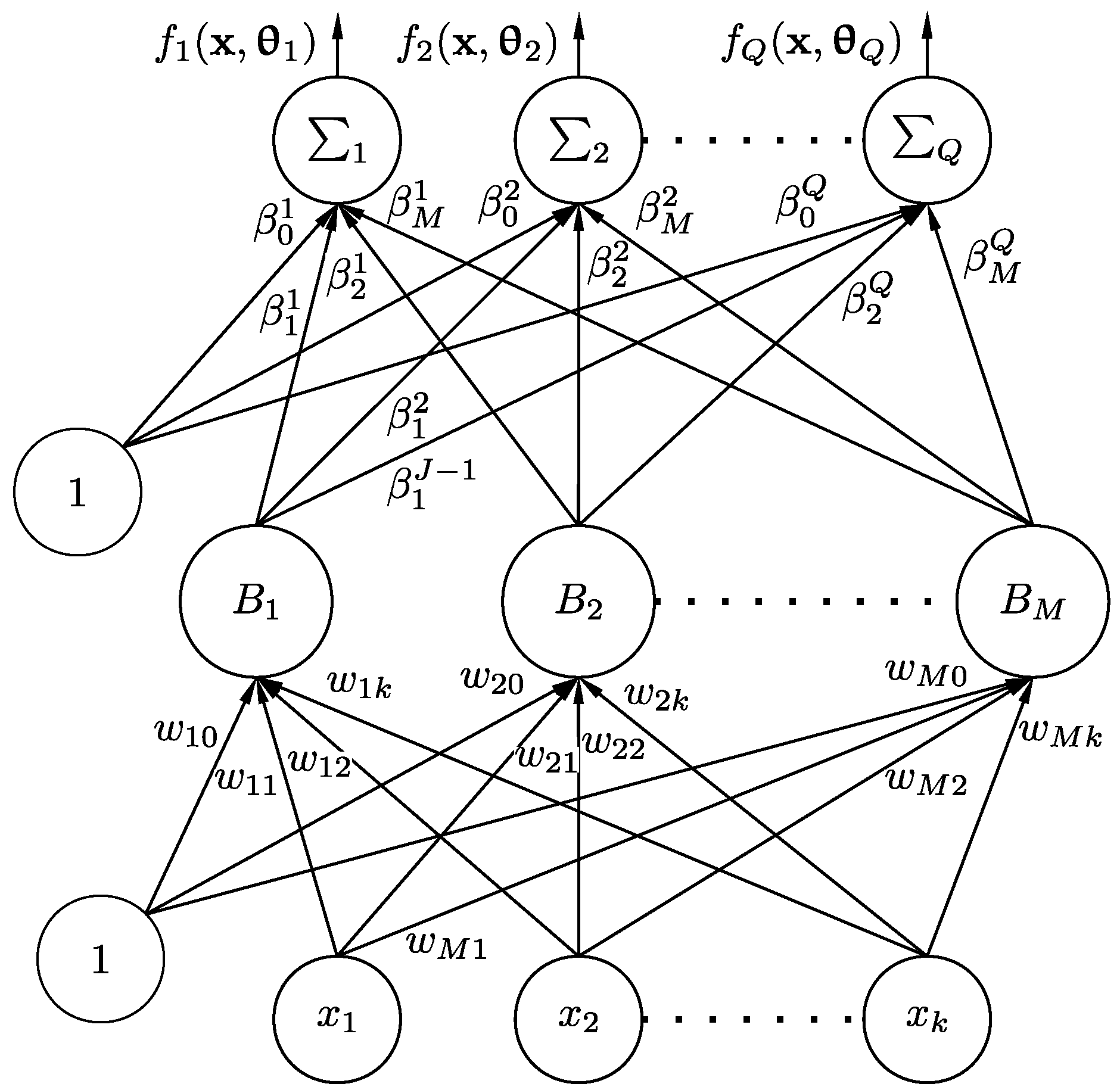

3.2. Artificial Neural Networks

3.3. Support Vector Machines

3.4. Decision Trees

3.5. Fuzzy Rule-Based Classifiers

3.6. Miscellaneous Classifiers

3.7. Discussion and Recommendations

- Data preprocessing: as stated before, the preprocessing step is considered as one of the most important phases in ML [109]. Preprocessing algorithms are usually used for: data cleaning, outlier detection, data imputation and transformation of features (e.g., from nominal to binary, given that many ML methods require all features to be real-valued).

- Dimensionality of the data: low dimensional data could result in a set of features that are not relevant (or sufficient) for solving the problem at hand; hence, the importance of the process of data acquisition. High dimensional data, on the other hand, could contain irrelevant and/or correlated features, forming a space where distances between data points might not be useful (thus harming the classification). There is not a standard of what is usually considered high or low dimensional, since this usually depends on the number of patterns (it is not the same having 10 patterns in a 100-dimensional space as 10,000 patterns). Note that different methods could be emphasized for high-dimensional data, although the most common approach is to perform a feature selection analysis [110,111] or dimensionality reduction, to obtain the set of most representative features for the classification problem.

- Number of patterns: the authors would also like to highlight the importance of BD and large-scale methods, as well as the use of distributed algorithms. BD algorithms are arising in ML given the immense amount of data collected daily, which makes its processing very difficult by using standard methods. Its usage is not only necessary in some cases, but also beneficial (e.g., in the case of distributed computing, different models could be created using spatial local information, and a more general model could be considered to be mixing the local models, as done in [112]). BD approaches usually involve a data partitioning step. The partitions are used to compute different learning models, which are then joined in the last step. Pattern or prototype selection algorithms are also a widely-used option for BD.

- Data imbalance: apart from the above-mentioned learning strategies, prediction models for RE could largely benefit from the use of alternative classification-related techniques [30,111,113]. Imbalanced data are one of the current challenges of ML researchers for classification problems [114], as this poses a serious hindrance for the classification method. The issue in this case is that there is a class (or a set of classes) that is significantly unrepresented in the dataset (i.e., this class presents a much lower prior probability than the rest). A common consequence is that this class is ignored by the prediction model, which is unacceptable as this class is usually the one with the greatest importance (e.g., in anomaly detection or fault monitoring). The solutions in this case are multiple, and they are still being researched. However, two commonly-used ideas are to consider a cost-sensitive classifier [115] (to set a higher loss for minority patterns) or to use an over-sampling approach [116] (to create synthetic patterns from the available ones).

- Interpretability: some applications require the extraction of tangible knowledge and emphasize less the model performance. In this case, decision trees or rule-based systems are preferred, where the user has to define the maximum number of rules or the size of the tree (two factors that are difficult to interpret). Linear models, such as LR, are also more easily interpretable and scale better with large data than nonlinear ones, although they result in some cases in a decrease of accuracy.

- The final purpose of the algorithm: the way the model is going to be used in production can impose constraints about the kind of classification method to consider, e.g., training the model in real time (where light methods should be used), model refinement when a new datum arrives (online strategies), storage of the learned model (where the size of the model is the most important factor) or the use of an evaluation metric specified by the application (where different strategies can be used to further optimize classification models according to a predefined fitness function, such as bioinspired approaches [117]).

- Experimental design and model selection: it is also crucial to perform a correct validation of the classifier learned, as well as to correctly optimize the different parameters of the learning process. Depending on the availability of data, different strategies can be considered to evaluate the performance of the classifier over unseen data [29] (e.g., a hold-out procedure, where a percentage of patterns is used as the test data, or a k-fold method, where the dataset is divided into k folds and k classifiers are learned, each one considering a different fold as the test set). When performing these data partitions, we emphasize the necessity of using stratified partitions, where the proportion of patterns of each class is maintained for all classes. Moreover, it is very important to consider a proper model selection process to ensure a fair comparison [76]. In this sense, when the classifier learning process involves different parameters (commonly known as hyper-parameters), the adjustment of these parameters should not be based on the test performance, given that this would result in over-fitting the test set. A proper way of performing model selection is by using a nested k-fold cross-validation over the training set. Once the lowest cross-validation error alternative is obtained, it is applied to the complete training set, and test results can be extracted.

4. A Comprehensive Review of Classification Problems and Algorithms in RE Applications

4.1. Classification Problems and Algorithms in Wind Speed/Power Prediction

4.2. Classification Problems and Algorithms in Fault Diagnosis in RE-Related Systems

4.3. Classification Problems and Algorithms in Power Quality Disturbance Detection and Analysis

4.4. Classification Problems and Algorithms in Appliance Load Monitoring Applications

4.5. Classification Problems and Algorithms in Alternative RE Applications

4.6. A Final Note on Classification Problems in RE

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANN | Artificial Neural Network |

| AuDyC | Auto-adaptive Dynamical Clustering algorithm |

| BN | Bayesian Networks |

| CART | Classification and Regression Trees |

| DT | Decision Tree |

| ELM | Extreme Learning Machine |

| EMD | Empirical Mode Decomposition |

| FR | Fuzzy Rule |

| GA | Genetic Algorithm |

| GFS | Genetic Fuzzy System |

| HMM | Hidden Markov Model |

| k-NN | k Nearest Neighbors |

| LR | Logistic Regression |

| MAE | Mean Absolute Error |

| ML | Machine Learning |

| MLP | Multilayer Perceptron |

| MODIS | Moderate Resolution Imaging Spectroradiometer |

| MS | Minimum Sensitivity |

| PCA | Principal Component Analysis |

| PNN | Probabilistic Neural Network |

| PQ | Power Quality |

| RBF | Radial Basis Function |

| RE | Renewable Energy |

| RF | Random Forest |

| ROC | Receiver Operating Characteristic |

| SCADA | Supervisory Control and Data Acquisition |

| SNR | Signal to Noise Ratio |

| SOM | Self-Organizing Map |

| SVM | Support Vector Machine |

| WPPT | Wind Power Prediction Tool |

References

- Suganthi, L.; Samuel, A.A. Energy models for demand forecasting—A review. Renew. Sustain. Energy Rev. 2012, 16, 1223–1240. [Google Scholar] [CrossRef]

- Rowland, C.S.; Mjelde, J.W. Politics and petroleum: Unintended implications of global oil demand reduction policies. Energy Res. Soc. Sci. 2016, 11, 209–224. [Google Scholar] [CrossRef]

- Fernández, J.C.; Salcedo-Sanz, S.; Gutiérrez, P.A.; Alexandre, E.; Hervás-Martínez, C. Significant wave height and energy flux range forecast with machine learning classifiers. Eng. Appl. Artif. Intell. 2015, 43, 44–53. [Google Scholar] [CrossRef]

- Wang, F.; Zhen, Z.; Mi, Z.; Sun, H.; Su, S.; Yang, G. Solar irradiance feature extraction and support vector machines based weather status pattern recognition model for short-term photovoltaic power forecasting. Energy Build. 2015, 86, 427–438. [Google Scholar] [CrossRef]

- Panigrahi, B.K.; Dash, P.K.; Reddy, J.B.V. Hybrid signal processing and machine intelligence techniques for detection, quantification and classification of power quality disturbances. Eng. Appl. Artif. Intell. 2009, 22, 442–454. [Google Scholar] [CrossRef]

- National Science Board. Available online: http://www.nsf.gov/statistics/seind10/ (accessed on 29 July 2016).

- Cheng, M.; Zhu, Y. The state of the art of wind energy conversion systems and technologies: A review. Energy Convers. Manag. 2014, 88, 332–347. [Google Scholar] [CrossRef]

- Al-Mostafa, Z.A.; Maghrabi, A.H.; Al-Shehri, S.M. Sunshine-based global radiation models: A review and case study. Energy Convers. Manag. 2014, 84, 209–216. [Google Scholar] [CrossRef]

- Laghari, J.A.; Mokhlis, H.; Karimi, M.; Bakar, A.H.A. Hasmaini Mohamad, Computational Intelligence based techniques for islanding detection of distributed generation in distribution network: A review. Energy Convers. Manag. 2014, 88, 139–152. [Google Scholar] [CrossRef]

- Behera, S.; Sahoo, S.; Pati, B.B. A review on optimization algorithms and application to wind energy integration to grid. Renew. Sustain. Energy Rev. 2015, 48, 214–227. [Google Scholar] [CrossRef]

- Gieseke, F.; Airola, A.; Pahikkala, T.; Kramer, O. Fast and Simple Gradient-Based Optimization for Semi-Supervised Support Vector Machines. Neurocomputing 2014, 123, 23–32. [Google Scholar] [CrossRef]

- Lee, J.-S.; Du, L.-J. Unsupervised classification using polarimetric decomposition and the complex Wishart classifier. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2249–2258. [Google Scholar]

- Pahikkala, T.; Airola, A.; Gieseke, F.; Kramer, O. Unsupervised Multi-Class Regularized Least-Squares Classification. In Proceedings of the 12th IEEE International Conference on Data Mining (ICDM), Brussels, Belgium, 10–13 December 2012; pp. 585–594.

- Hang, J.; Zhang, J.; Cheng, M. Application of multi-class fuzzy support vector machine classifier for fault diagnosis of wind turbine. Fuzzy Sets Syst. 2016, 297, 128–140. [Google Scholar] [CrossRef]

- Kim, K.I.; Jin, C.H.; Lee, Y.K.; Kim, K.D.; Ryu, K.H. Forecasting wind power generation patterns based on SOM clustering. In Proceedings of the 3rd International Conference on Awareness Science and Technology (iCAST), Dalian, China, 27–30 September 2011; pp. 508–511.

- Lee, H.G.; Piao, M.; Shin, Y.H. Wind Power Pattern Forecasting Based on Projected Clustering and Classification Methods. ETRI J. 2015, 37, 283–294. [Google Scholar] [CrossRef]

- Gomez-Chova, L.; Camps-Valls, G.; Bruzzone, L.; Calpe-Maravilla, J. Mean Map Kernel Methods for Semisupervised Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 207–220. [Google Scholar] [CrossRef]

- Naganathan, H.; Chong, W.K.; Chen, X.W. Semi-supervised Energy Modeling (SSEM) for Building Clusters Using Machine Learning Techniques. Procedia Eng. 2015, 118, 1189–1194. [Google Scholar] [CrossRef]

- Gutiérrez, P.A.; Pérez-Ortiz, M.; Sánchez-Monedero, J.; Fernández-Navarro, F.; Hervás-Martínez, C. Ordinal regression methods: Survey and experimental study. IEEE Trans. Knowl. Data Eng. 2015, 28, 127–146. [Google Scholar] [CrossRef]

- Gutiérrez, P.A.; Salcedo-Sanz, S.; Hervás-Martínez, C.; Carro-Calvo, L.; Sánchez-Monedero, J.; Prieto, L. Ordinal and nominal classification of wind speed from synoptic pressure patterns. Eng. Appl. Artif. Intell. 2013, 26, 1008–1015. [Google Scholar] [CrossRef]

- Sánchez-Monedero, J.; Salcedo-Sanz, S.; Gutiérrez, P.A.; Casanova-Mateo, C.; Hervás-Martínez, C. Simultaneous modeling of rainfall occurrence and amount using a hierarchical nominal–ordinal support vector classifier. Eng. Appl. Artif. Intell. 2014, 34, 199–207. [Google Scholar] [CrossRef]

- Provost, F.J.; Fawcett, T. Analysis and visualization of classifier performance: Comparison under imprecise class and cost distributions. In Proceedings of the Third International Conference on Knowledge Discovery and Data Mining, Newport Beach, CA, USA, 14–17 August 1997; Volume 97, pp. 43–48.

- Zolghadri, M.J.; Mansoori, E.G. Weighting fuzzy classification rules using receiver operating characteristics (ROC) analysis. Inf. Sci. 2007, 177, 2296–2307. [Google Scholar] [CrossRef]

- Caballero, J.F.; Martinez, F.; Hervas, C.; Gutierrez, P. Sensitivity Versus Accuracy in Multiclass Problems Using Memetic Pareto Evolutionary Neural Networks. IEEE Trans. Neural Netw. 2010, 21, 750–770. [Google Scholar] [CrossRef] [PubMed]

- Baccianella, S.; Esuli, A.; Sebastiani, F. Evaluation Measures for Ordinal Regression. In Proceedings of the Ninth International Conference on Intelligent Systems Design and Applications (ISDA ’09), Pisa, Italy, 30 November–2 December 2009; pp. 283–287.

- Cruz-Ramírez, M.; Hervás-Martínez, C.; Sánchez-Monedero, J.; Gutiérrez, P.A. Metrics to guide a multi-objective evolutionary algorithm for ordinal classification. Neurocomputing 2014, 135, 21–31. [Google Scholar] [CrossRef]

- Hosmer, D.W., Jr.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression; John Wiley & Sons: Malden, MA, USA, 2004. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer Series in Statistics; Springer: New York, NY, USA, 2009. [Google Scholar]

- Witten, I.H.; Frank, E. Data Mining: Practical Machine Learning Tools and Techniques, 2nd ed.; Morgan Kaufmann: Burlington, MA, USA, 2005. [Google Scholar]

- Trafalis, T.B.; Adrianto, I.; Richman, M.B.; Lakshmivarahan, S. Machine-Learning classifiers for imbalanced tornado data. Comput. Manag. Sci. 2013, 11, 403–418. [Google Scholar] [CrossRef]

- Sallis, P.J.; Claster, W.; Hernández, S. A machine-learning algorithm for wind gust prediction. Comput. Geosci. 2011, 37, 1337–1344. [Google Scholar] [CrossRef]

- Zontul, M.; Aydın, F.; Doğan, G.; Şener, S.; Kaynar, O. Wind Speed Forecasting Using REPTree and Bagging Methods in Kirklareli-Turkey. J. Theor. Appl. Inf. Technol. 2013, 56, 17–29. [Google Scholar]

- Carta, J.A.; Velázquez, S.; Matías, J.M. Use of Bayesian networks classifiers for long-term mean wind turbine energy output estimation at a potential wind energy conversion site. Energy Convers. Manag. 2011, 52, 1137–1149. [Google Scholar] [CrossRef]

- Croonenbroeck, C.; Dahl, C.M. Accurate medium-term wind power forecasting in a censored classification framework. Energy 2014, 73, 221–232. [Google Scholar] [CrossRef]

- Kusiak, A.; Verma, A. Prediction of Status Patterns of Wind Turbines: A Data-Mining Approach. J. Sol. Energy Eng. 2011, 133, 011008. [Google Scholar] [CrossRef]

- Schlechtingen, M.; Santos, I.; Achiche, S. Using Data-Mining Approaches for Wind Turbine Power Curve Monitoring: A Comparative Study. IEEE Trans. Sustain. Energy 2013, 4, 671–679. [Google Scholar] [CrossRef]

- Liu, W.; Wang, Z.; Han, J.; Wang, G. Wind turbine fault diagnosis method based on diagonal spectrum and clustering binary tree SVM. Renew. Energy 2013, 50, 1–6. [Google Scholar]

- Santos, P.; Villa, L.F.; Reñones, A.; Bustillo, A.; Maudes, J. An SVM-Based Solution for Fault Detection in Wind Turbines. Sensors 2015, 15, 5627–5648. [Google Scholar] [CrossRef] [PubMed]

- Malik, H.; Mishra, S. Application of Probabilistic Neural Network in Fault Diagnosis of Wind Turbine Using FAST, TurbSim and Simulink. Procedia Comput. Sci. 2015, 58, 186–193. [Google Scholar] [CrossRef]

- Pattison, D.; Garcia, M.S.; Xie, W.; Quail, F.; Revie, M.; Whitfield, R.I.; Irvine, I. Intelligent integrated maintenance for wind power generation. Wind Energy 2016, 19, 547–562. [Google Scholar] [CrossRef]

- Toubakh, H.; Sayed-Mouchaweh, M. Hybrid dynamic data-driven approach for drift-like fault detection in wind turbines. Evol. Syst. 2015, 6, 115–129. [Google Scholar] [CrossRef]

- Toubakh, H.; Sayed-Mouchaweh, M. Hybrid dynamic classifier for drift-like fault diagnosis in a class of hybrid dynamic systems: Application to wind turbine converters. Neurocomputing 2016, 171, 1496–1516. [Google Scholar] [CrossRef]

- Jiang, H.; Zhang, J.; Gao, W.; Wu, Z. Fault Detection, Identification, and Location in Smart Grid Based on Data-Driven Computational Methods. IEEE Trans. Smart Grid 2014, 5, 2947–2956. [Google Scholar] [CrossRef]

- Mahela, O.P.; Shaik, A.G.; Gupta, N. A critical review of detection and classification of power quality events. Renew. Sustain. Energy Rev. 2015, 41, 495–505. [Google Scholar] [CrossRef]

- Khokhar, S.; Zin, A.A.B.M.; Mokhtar, A.S.B.; Pesaran, M. A comprehensive overview on signal processing and artificial intelligence techniques applications in classification of power quality disturbances. Renew. Sustain. Energy Rev. 2015, 51, 1650–1663. [Google Scholar] [CrossRef]

- Chung, J.; Powers, E.; Grady, W.; Bhatt, S. Power disturbance classifier using a rule-based method and wavelet packet-based hidden Markov model. IEEE Trans. Power Deliv. 2002, 17, 233–241. [Google Scholar] [CrossRef]

- Gaing, Z.-L. Wavelet-based neural network for power disturbance recognition and classification. IEEE Trans. Power Deliv. 2004, 19, 1560–1568. [Google Scholar] [CrossRef]

- Srinivasan, D.; Ng, W.; Liew, A. Neural-Network-Based signature recognition for harmonic source identification. IEEE Trans. Power Deliv. 2006, 21, 398–405. [Google Scholar] [CrossRef]

- Monedero, I.; Leon, C.; Ropero, J.; Garcia, A.; Elena, J.; Montano, J. Classification of Electrical Disturbances in Real Time Using Neural Networks. IEEE Trans. Power Deliv. 2007, 22, 1288–1296. [Google Scholar] [CrossRef]

- Huang, N.; Xu, D.; Liu, X.; Lin, L. Power quality disturbances classification based on S-transform and probabilistic neural network. Neurocomputing 2012, 98, 12–23. [Google Scholar] [CrossRef]

- Valtierra-Rodriguez, M.; Romero-Troncoso, R.D.; Osornio-Rios, R.; Garcia-Perez, A. Detection and Classification of Single and Combined Power Quality Disturbances Using Neural Networks. IEEE Trans. Ind. Electron. 2014, 61, 2473–2482. [Google Scholar] [CrossRef]

- De Yong, D.; Bhowmik, S.; Magnago, F. An effective Power Quality classifier using Wavelet Transform and Support Vector Machines. Expert Syst. Appl. 2015, 42, 6075–6081. [Google Scholar] [CrossRef]

- Biswal, M.; Dash, P.K. Detection and characterization of multiple power quality disturbances with a fast S-transform and decision tree based classifier. Digit. Signal Process. 2013, 23, 1071–1083. [Google Scholar] [CrossRef]

- Ray, P.K.; Mohanty, S.R.; Kishor, N.; Catalao, J.P.S. Optimal Feature and Decision Tree-Based Classification of Power Quality Disturbances in Distributed Generation Systems. IEEE Trans. Sustain. Energy 2014, 5, 200–208. [Google Scholar] [CrossRef]

- Biswal, B.; Biswal, M.; Mishra, S.; Jalaja, R. Automatic Classification of Power Quality Events Using Balanced Neural Tree. IEEE Trans. Ind. Electron. 2014, 61, 521–530. [Google Scholar] [CrossRef]

- Biswal, B.; Behera, H.S.; Bisoi, R.; Dash, P.K. Classification of power quality data using decision tree and chemotactic differential evolution based fuzzy clustering. Swarm Evolut. Comput. 2012, 4, 12–24. [Google Scholar] [CrossRef]

- Liao, Y.; Lee, J.-B. A fuzzy-expert system for classifying power quality disturbances. Int. J. Electr. Power Energy Syst. 2004, 26, 199–205. [Google Scholar] [CrossRef]

- Meher, S.K.; Pradhan, A.K. Fuzzy classifiers for power quality events analysis. Electr. Power Syst. Res. 2010, 80, 71–76. [Google Scholar] [CrossRef]

- Hooshmand, R.; Enshaee, A. Detection and classification of single and combined power quality disturbances using fuzzy systems oriented by particle swarm optimization algorithm. Electr. Power Syst. Res. 2010, 80, 1552–1561. [Google Scholar] [CrossRef]

- Berges, M.; Goldman, E.; Matthews, H.S.; Soibelman, L. Learning systems for electric consumption of buildings. In Proceedings of the ASCE International Workshop on Computing in Civil Engineering, Austin, TX, USA, 24–27 July 2009.

- Berges, M.; Goldman, E.; Matthews, H.S.; Soibelman, L. Enhancing electricity audits in residential buildings with nonintrusive load monitoring. J. Ind. Ecol. 2010, 14, 844–858. [Google Scholar] [CrossRef]

- Liang, J.; Ng, S.K.K.; Kendall, G.; Cheng, J.W.M. Load signature study—Part I: Basic concept, structure and methodology. IEEE Trans. Power Deliv. 2010, 25, 551–560. [Google Scholar] [CrossRef]

- Liang, J.; Ng, S.K.K.; Kendall, G.; Cheng, J.W.M. Load signature study—Part II: Disaggregation farmework, simulation and applications. IEEE Trans. Power Deliv. 2010, 25, 561–569. [Google Scholar] [CrossRef]

- Jiang, L.; Luo, S.; Li, J. An approach of household power appliance monitoring based on machine learning. In Proceedings of the Fifth International Conference OnIntelligent Computation Technology and Automation (ICICTA), Zhangjiajie, China, 12–14 January 2012; pp. 577–580.

- Tapakis, R.; Charalambides, A.G. Equipment and methodologies for cloud detection and classification: A review. Sol. Energy 2013, 95, 392–430. [Google Scholar] [CrossRef]

- Mellit, A.; Kalogirou, S.A. Artificial intelligence techniques for photovoltaic applications: A review. Prog. Energy Combust. Sci. 2008, 34, 574–632. [Google Scholar] [CrossRef]

- Wang, J.; Azimi-Sadjadi, M.R.; Reinke, D. A temporally adaptive classifier for multispectral imagery. IEEE Trans. Neural Netw. 2004, 15, 159–165. [Google Scholar] [CrossRef] [PubMed]

- Saitwal, K.; Azimi-Sadjadi, M.; Reinke, D. A multichannel temporally adaptive system for continuous cloud classification from satellite imagery. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1098–1104. [Google Scholar] [CrossRef]

- Liu, Y.; Xia, J.; Shi, C.-X.; Hong, Y. An Improved Cloud Classification Algorithm for China’s FY-2c Multi-Channel Images Using Artificial Neural Network. Sensors 2009, 9, 5558–5579. [Google Scholar] [CrossRef] [PubMed]

- Lee, Y.; Wahba, G.; Ackerman, S.A. Cloud Classification of Satellite Radiance Data by Multicategory Support Vector Machines. J. Atmos. Ocean. Technol. 2004, 21, 159–169. [Google Scholar] [CrossRef]

- Chang, H.-C.; Lin, S.-C.; Kuo, C.-C.; Yu, H.-P.; Chang, H.-C.; Lin, S.-C.; Kuo, C.-C.; Yu, H.-P. Cloud Monitoring for Solar Plants with Support Vector Machine Based Fault Detection System, Cloud Monitoring for Solar Plants with Support Vector Machine Based Fault Detection System. Math. Probl. Eng. 2014, 2014, e564517. [Google Scholar] [CrossRef]

- Cheng, H.-Y.; Yu, C.-C. Multi-Model solar irradiance prediction based on automatic cloud classification. Energy 2015, 91, 579–587. [Google Scholar] [CrossRef]

- Ghosh, A.; Pal, N.R.; Das, J. A fuzzy rule based approach to cloud cover estimation. Remote Sens. Environ. 2006, 100, 531–549. [Google Scholar] [CrossRef]

- D’Andrea, E.; Lazzerini, B. A hierarchical approach to multi-class fuzzy classifiers. Expert Syst. Appl. 2013, 40, 3828–3840. [Google Scholar] [CrossRef]

- Pringle, J.; Stretch, D.D.; Bárdossy, A. Automated classification of the atmospheric circulation patterns that drive regional wave climates. Nat. Hazards Earth Syst. Sci. 2014, 14, 2145–2155. [Google Scholar] [CrossRef]

- Bishop, C. Pattern Recognition and Machine Learning, 1st ed.; Springer: New York, NY, USA, 2010. [Google Scholar]

- Bishop, C. Improving the Generalization Properties of Radial Basis Function Neural Networks. Neural Comput. 1991, 3, 579–588. [Google Scholar] [CrossRef]

- Lee, S.-J.; Hou, C.-L. An ART-based construction of RBF networks. IEEE Trans. Neural Netw. 2002, 13, 1308–1321. [Google Scholar] [PubMed]

- Cybenko, G. Approximation by superpositions of a sigmoidal function, Mathematics of control. Signals Syst. 1989, 2, 303–314. [Google Scholar] [CrossRef]

- Durbin, R.; Rumelhart, D.E. Product Units: A Computationally Powerful and Biologically Plausible Extension to Backpropagation Networks. Neural Comput. 1989, 1, 133–142. [Google Scholar] [CrossRef]

- Buchtala, O.; Klimek, M.; Sick, B. Evolutionary optimization of radial basis function classifiers for data mining applications. IEEE Trans. Syst. Man Cybern. 2005, 35, 928–947. [Google Scholar] [CrossRef]

- Yao, X. Evolving artificial neural networks. Proc. IEEE 1999, 87, 1423–1447. [Google Scholar]

- Gutiérrez, P.A.; López-Granados, F.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Gómez-Casero, M.T.; Hervás-Martínez, C. Mapping sunflower yield as affected by Ridolfia segetum patches and elevation by applying evolutionary product unit neural networks to remote sensed data. Comput. Electron. Agric. 2008, 60, 122–132. [Google Scholar] [CrossRef]

- Hervás-Martínez, C.; Salcedo-Sanz, S.; Gutiérrez, P.A.; Ortiz-García, E.G.; Prieto, L. Evolutionary product unit neural networks for short-term wind speed forecasting in wind farms. Neural Comput. Appl. 2012, 21, 993–1005. [Google Scholar] [CrossRef]

- Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. 2012, 42, 513–529. [Google Scholar] [CrossRef] [PubMed]

- Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A Training Algorithm for Optimal Margin Classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory (COLT ’92), Pittsburgh, PA, USA, 27–29 July 1992; Association for Computing Machinery: New York, NY, USA, 1992; pp. 144–152. [Google Scholar]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Salcedo-Sanz, S.; Rojo-Álvarez, J.L.; Martínez-Ramón, M.; Camps-Valls, G. Support vector machines in engineering: An overview. In Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery; John Wiley & Sons: Hoboken, NJ, USA, 2014; Volume 4, pp. 234–267. [Google Scholar]

- Hsu, C.-W.; Lin, C.-J. A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 2002, 13, 415–425. [Google Scholar] [PubMed]

- Rokach, L.; Maimon, O. Top-Down Induction of Decision Trees Classifiers—A Survey. IEEE Trans. Syst. Man Cybern. 2005, 35, 476–487. [Google Scholar] [CrossRef]

- Quinlan, J.R. C4.5: Programs for Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Breiman, L.; Friedman, J.; Stone, C.J.; Olshen, R.A. Classification and Regression Trees; Taylor & Francis: London, UK, 1984. [Google Scholar]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22. [Google Scholar]

- Angelov, P.; Zhou, X. Evolving Fuzzy-Rule-Based Classifiers from Data Streams. IEEE Trans. Fuzzy Syst. 2008, 16, 1462–1475. [Google Scholar] [CrossRef]

- Hoppner, F.; Klawonn, F. Obtaining interpretable fuzzy models from fuzzy clustering and fuzzy regression. In Proceedings of the Fourth International Conference on Knowledge-Based Intelligent Information Engineering Systems & Allied Technologies, Brighton, UK, 30 August–1 September 2000; Volume 1, pp. 162–165.

- Kuncheva, L. How good are fuzzy If-Then classifiers? IEEE Trans. Syst. Man Cybern. 2000, 30, 501–509. [Google Scholar] [CrossRef] [PubMed]

- Nauck, D.; Kruse, R. Obtaining interpretable fuzzy classification rules from medical data. Artif. Intell. Med. 1999, 16, 149–169. [Google Scholar] [CrossRef]

- Mitra, S.; Hayashi, Y. Neuro-Fuzzy rule generation: Survey in soft computing framework. IEEE Trans. Neural Netw. 2000, 11, 748–768. [Google Scholar] [CrossRef] [PubMed]

- Setnes, M.; Babuška, R. Fuzzy relational classifier trained by fuzzy clustering. IEEE Transa. Syst. Man Cybern. 1999, 29, 619–625. [Google Scholar] [CrossRef] [PubMed]

- Setnes, M.; Roubos, H. GA-fuzzy modeling and classification: Complexity and performance. IEEE Trans. Fuzzy Syst. 2000, 8, 509–522. [Google Scholar] [CrossRef]

- John, G.H.; Langley, P. Estimating Continuous Distributions in Bayesian Classifiers. In Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence (UAI ’95), Montreal, QC, Canada, 18–20 August 1995; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1995; pp. 338–345. [Google Scholar]

- Ben-Gal, I. Bayesian Networks. In Encyclopedia of Statistics in Quality and Reliability; John Wiley & Sons, Ltd.: Malden, MA, USA, 2008. [Google Scholar]

- Anderson, T. The Theory and Practice of Online Learning; Athabasca University Press: Athabasca, AB, Canada, 2008. [Google Scholar]

- Manevitz, L.M.; Yousef, M. One-Class svms for document classification. J. Mach. Learn. Res. 2012, 2, 139–154. [Google Scholar]

- Lecun, Y.; Bengio, Y.; Hinton, G.E. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Tao, Y.; Hongkun, C.; Chuang, Q. Wind power prediction and pattern feature based on deep learning method. In Proceedings of the IEEE PES Asia-Pacific Power and Energy Engineering Conference (APPEEC), Hong Kong, China, 7–10 December 2014; IEEE: New York, NY, USA, 2014. [Google Scholar]

- Dalto, M.; Matusko, J.; Vasak, M. Deep neural networks for ultra-short-term wind forecasting. In Proceedings of the IEEE International Conference onIndustrial Technology (ICIT), Seville, Spain, 17–19 March 2015; IEEE: New York, NY, USA, 2015; pp. 1657–1663. [Google Scholar]

- Pyle, D. Data Preparation for Data Mining, 1st ed.; Morgan Kaufmann Publishers Inc.: New York, NY, USA, 1999. [Google Scholar]

- Guyon, I.; Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182. [Google Scholar]

- Neugebauer, J.; Kramer, O.; Sonnenschein, M. Classification Cascades of Overlapping Feature Ensembles for Energy Time Series Data. In Third Workshop on Data Analytics for Renewable Energy Integration; Springer: Berlin, Germany, 2015; pp. 76–93. [Google Scholar]

- Guarrancino, M.R.; Irpino, A.; Radziukyniene, N.; Verde, R. Supervised classification of distributed data streams for smart grids. Energy Syst. 2012, 3, 95–108. [Google Scholar] [CrossRef]

- Neugebauer, J.; Kramer, O.; Sonnenschein, M. Improving Cascade Classifier Precision by Instance Selection and Outlier Generation. In Proceedings of the 8th International Conference on Agents and Artificial Intelligence, Rome, Italy, 24–26 February 2016; Volume 2, pp. 96–104.

- Sun, Y.; Wong, A.K.C.; Kamel, M.S. Classification of imbalanced data: A review. Int. J. Pattern Recognit. Artif. Intell. 2009, 23, 687–719. [Google Scholar] [CrossRef]

- Sun, Y.; Kamel, M.S.; Wong, A.K.C.; Wang, Y. Cost-Sensitive boosting for classification of imbalanced data. Pattern Recognit. 2007, 40, 3358–3378. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-Sampling Technique. J. Artif. Int. Res. 2002, 16, 321–357. [Google Scholar]

- Binitha, S.; Sathya, S.S. A survey of bio inspired optimization algorithms. Int. J. Soft Comput. Eng. 2012, 2, 137–151. [Google Scholar]

- Janik, P.; Lobos, T. Automated classification of power-quality disturbances using SVM and RBF networks. IEEE Trans. Power Deliv. 2006, 21, 1663–1669. [Google Scholar] [CrossRef]

- Cerqueira, A.S.; Ferreira, D.D.; Ribeiro, M.V.; Duque, C.A. Power quality events recognition using a SVM-based method. Electr. Power Syst. Res. 2008, 78, 1546–1552. [Google Scholar] [CrossRef]

- Erişti, H.; Demir, Y. A new algorithm for automatic classification of power quality events based on wavelet transform and SVM. Expert Syst. Appl. 2010, 37, 4094–4102. [Google Scholar]

- Biswal, B.; Biswal, M.K.; Dash, P.K.; Mishra, S. Power quality event characterization using support vector machine and optimization using advanced immune algorithm. Neurocomputing 2013, 103, 75–86. [Google Scholar] [CrossRef]

- Shah, A.M.; Bhalja, B.R. Discrimination between Internal Faults and Other Disturbances in Transformer Using the Support Vector Machine-Based Protection Scheme. IEEE Trans. Power Deliv. 2013, 28, 1508–1515. [Google Scholar] [CrossRef]

- Arikan, C.; Ozdemir, M. Classification of Power Quality Disturbances at Power System Frequency and out of Power System Frequency Using Support Vector Machines. Prz. Elektrotech. 2013, 89, 284–291. [Google Scholar]

- Hart, G.W. Nonintrusive appliance load monitoring. Proc. IEEE 1992, 80, 1870–1891. [Google Scholar] [CrossRef]

- Zeifman, M.; Roth, K. Nonintrusive appliance load monitoring: Review and outlook. IEEE Trans. Consum. Electron. 2011, 57, 76–84. [Google Scholar] [CrossRef]

- Yu, L.; Li, H.; Feng, X.; Duan, J. Nonintrusive appliance load monitoring for smart homes: Recent advances and future issues. IEEE Instrum. Meas. Mag. 2016, 19, 56–62. [Google Scholar] [CrossRef]

| Predicted Class | |||||||

|---|---|---|---|---|---|---|---|

| 1 | ⋯ | l | ⋯ | Q | |||

| 1 | ⋯ | ⋯ | |||||

| ⋮ | ⋮ | ⋱ | ⋮ | ⋱ | ⋮ | ⋮ | |

| Real Class | q | ⋯ | ⋯ | ||||

| ⋮ | ⋮ | ⋱ | ⋮ | ⋱ | ⋮ | ⋮ | |

| Q | ⋯ | ⋯ | |||||

| ⋯ | ⋯ | N | |||||

| Reference | Year | Application Field | Problem | Specific Methodology Used |

|---|---|---|---|---|

| [3] | 2015 | Sea wave | Ordinal classification | SVM, ANN, LR |

| [4] | 2015 | Solar | Classification | SVM |

| [5] | 2009 | Power disturbance | Classification | SVM, wavelets |

| [10] | 2015 | Wind | Optimization | Bio-inspired, meta-heuristics |

| [14] | 2015 | Wind | Classification | Fuzzy SVM |

| [15] | 2011 | Wind | Classification | DT, SOM |

| [16] | 2015 | Wind | Classification | SVM, k-NN, fuzzy, ANN |

| [17] | 2010 | Solar | Classification | Semi-supervised SVM |

| [20] | 2013 | Wind | Ordinal classification | SVM, DT, LR, HMM |

| [30] | 2014 | Wind | Classification | SVM, LR, RF, rotation forest |

| [31] | 2011 | Wind | Classification | ANN, LR, DT, RF |

| [32] | 2013 | Wind | Classification | k-NN, RBF, DT |

| [33] | 2011 | Wind | Classification, regression | BN |

| [34] | 2014 | Wind | Classification, regression | Heuristic methodology: WPPT |

| [35] | 2011 | Wind | Classification | Bagging, ripper, rotation forest, RF, k-NN |

| [36] | 2013 | Wind | Classification | ANFIS, ANN |

| [37] | 2012 | Wind | Classification | SVM |

| [38] | 2015 | Wind | Classification | ANN, SVM |

| [39] | 2015 | Wind | Classification | PNN |

| [40] | 2015 | Wind | Classification | DT, BN, RF |

| [41] | 2015 | Wind | Classification, clustering | AuDyC |

| [42] | 2016 | Wind | Classification, clustering | AuDyC |

| [43] | 2010 | Power disturbance | Classification | HMM, SVM, ANN |

| [44] | 2015 | Power disturbance | Classification | SVM, NN, fuzzy, neuro-fuzzy, wavelets, GA |

| [45] | 2015 | Power disturbance | Classification | SVM, k-NN, ANN, fuzzy, wavelets |

| [46] | 2002 | Power disturbance | Classification | Rule-based classifiers, wavelets, HMM |

| [47] | 2004 | Power disturbance | Classification | PNN |

| [48] | 2006 | Power disturbance | Classification | ANN, RBF, SVM |

| [49] | 2007 | Power disturbance | Classification | ANN, wavelets |

| [50] | 2012 | Power disturbance | Classification | PNN |

| [51] | 2014 | Power disturbance | Classification | ANN |

| Ref. | Year | Application Field | Problem | Specific Methodology Used |

|---|---|---|---|---|

| [52] | 2015 | Power disturbance | Classification | SVM |

| [53] | 2013 | Power disturbance | Classification | DT, ANN, neuro-fuzzy, SVM |

| [54] | 2014 | Power disturbance | Classification | DT, SVM |

| [55] | 2014 | Power disturbance | Classification | DT |

| [56] | 2012 | Power disturbance | Classification | DT, DE |

| [57] | 2004 | Power disturbance | Classification | Fuzzy expert, ANN |

| [58] | 2010 | Power disturbance | Classification | Fuzzy classifiers |

| [59] | 2010 | Power disturbance | Classification | GFS |

| [48] | 2006 | Appliance load monitoring | Classification | ANN |

| [60] | 2009 | Appliance load monitoring | Classification | k-NN, DTs, naive Bayes |

| [61] | 2010 | Appliance load monitoring | Classification | k-NN, DTs, naive Bayes |

| [62,63] | 2010 | Appliance load monitoring | Classification | LR, ANN |

| [64] | 2012 | Appliance load monitoring | Classification | SVM |

| [65] | 2013 | Solar | Classification, regression | SVM, ANN, ANFIS, wavelet, GA |

| [66] | 2008 | Solar | Classification, regression | ANN, fuzzy systems, meta-heuristics |

| [67] | 2004 | Solar | Classification | PNN |

| [68] | 2006 | Solar | Classification | PNN |

| [69] | 2009 | Solar | Classification | PNN, SOM, SVM |

| [70] | 2004 | Solar | Classification | SVM |

| [71] | 2014 | Solar | Classification | SVM |

| [72] | 2015 | Solar | Classification | SVM |

| [73] | 2006 | Solar | Classification | Fuzzy rules |

| [74] | 2013 | Solar | Classification | Fuzzy classifiers |

| [75] | 2014 | Solar | Classification | Fuzzy rules |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pérez-Ortiz, M.; Jiménez-Fernández, S.; Gutiérrez, P.A.; Alexandre, E.; Hervás-Martínez, C.; Salcedo-Sanz, S. A Review of Classification Problems and Algorithms in Renewable Energy Applications. Energies 2016, 9, 607. https://doi.org/10.3390/en9080607

Pérez-Ortiz M, Jiménez-Fernández S, Gutiérrez PA, Alexandre E, Hervás-Martínez C, Salcedo-Sanz S. A Review of Classification Problems and Algorithms in Renewable Energy Applications. Energies. 2016; 9(8):607. https://doi.org/10.3390/en9080607

Chicago/Turabian StylePérez-Ortiz, María, Silvia Jiménez-Fernández, Pedro A. Gutiérrez, Enrique Alexandre, César Hervás-Martínez, and Sancho Salcedo-Sanz. 2016. "A Review of Classification Problems and Algorithms in Renewable Energy Applications" Energies 9, no. 8: 607. https://doi.org/10.3390/en9080607